Switching the container runtime from Docker to containerd on k8s 1.20.6

I. Environment

Official documentation: https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/#containerd

    [root@master ~]# kubectl get node -o wide
    NAME     STATUS   ROLES                  AGE     VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    master   Ready    control-plane,master   4m24s   v1.20.6   192.168.11.67   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7
    node1    Ready    <none>                 4m      v1.20.6   192.168.11.68   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7
    node2    Ready    <none>                 3m57s   v1.20.6   192.168.11.69   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7

II. Steps on the master

1. Mark the node to be switched as unschedulable

kubectl cordon node1

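2. Evict the pods running on that node (as of kubectl 1.20, --delete-local-data is deprecated in favor of --delete-emptydir-data; the old flag still works in this version)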

kubectl drain node1 --delete-local-data --force --ignore-daemonsets

3. Check the node status

    [root@master ~]# kubectl get node
    NAME     STATUS                     ROLES                  AGE   VERSION
    master   Ready                      control-plane,master   15m   v1.20.6
    node1    Ready,SchedulingDisabled   <none>                 14m   v1.20.6
    node2    Ready                      <none>                 14m   v1.20.6
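The check above can also be scripted. The following is a minimal sketch, not part of the original procedure: `cordoned_nodes` is a hypothetical helper name, and it assumes the default column layout of `kubectl get node --no-headers` (name first, status second).

```shell
# Print the names of nodes whose STATUS column contains SchedulingDisabled.
# Input: the output of `kubectl get node --no-headers` on stdin.
# cordoned_nodes is a hypothetical helper name, not a kubectl feature.
cordoned_nodes() {
  awk '$2 ~ /SchedulingDisabled/ { print $1 }'
}

# Usage (needs access to the cluster):
#   kubectl get node --no-headers | cordoned_nodes
```

If node1 was cordoned and drained successfully, its name should be the only line printed.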

III. Steps on the node whose runtime is being switched

1. Configure the prerequisites

    cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
    overlay
    br_netfilter
    EOF

    sudo modprobe overlay
    sudo modprobe br_netfilter

    # Set the required sysctl parameters; these persist across reboots.
    cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    EOF

    # Apply the sysctl parameters without rebooting
    sudo sysctl --system
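To confirm that the prerequisites took effect, the three sysctl keys can be read back and filtered. This is a small sketch under the assumption that `sysctl` prints each key as `key = value`; `sysctl_not_enabled` is a hypothetical helper name.

```shell
# Read "key = value" lines (the format printed by `sysctl key...`) from stdin
# and print every key whose value is not 1.
# sysctl_not_enabled is a hypothetical helper name.
sysctl_not_enabled() {
  awk -F' = ' '$2 != "1" { print $1 }'
}

# Usage on the node:
#   sysctl net.bridge.bridge-nf-call-iptables \
#          net.bridge.bridge-nf-call-ip6tables \
#          net.ipv4.ip_forward | sysctl_not_enabled
# The kernel modules can be checked with:
#   lsmod | grep -E '^(overlay|br_netfilter) '
```

Empty output from the filter means all three parameters are set to 1.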

2. Install containerd (I was previously using the Docker engine, so containerd was already installed and this step can be skipped)

3. Generate containerd's config.toml (this overwrites the existing config.toml)

containerd config default | sudo tee /etc/containerd/config.toml

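4. Edit config.toml

sandbox_image: replace the image address with the Alibaba Cloud mirror in China

SystemdCgroup: use systemd as the cgroup driver (this line is added directly below the runc options line)

endpoint: change it to the registry mirror (accelerator) address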

    [root@node1 ~]# cat -n /etc/containerd/config.toml |egrep "sandbox_image|SystemdCgroup |endpoint "
        57  sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2"
        97  SystemdCgroup = true
       106  endpoint = ["https://1nj0zren.mirror.aliyuncs.com"]
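The three edits can also be applied non-interactively. The sketch below is one possible way to do it, not the method used in this post: `patch_containerd_config` is a hypothetical function, it assumes GNU sed, and it assumes the file was just generated by `containerd config default` on containerd 1.4.x, where the `runc.options` table is empty. If the file already contains a `SystemdCgroup` line, or the function is run twice, a duplicate key would be added.

```shell
# Apply the sandbox_image, SystemdCgroup and mirror endpoint edits to a
# containerd config file.
# Arguments: config path, pause image reference, mirror URL.
# Assumptions: GNU sed; layout of `containerd config default` on 1.4.x.
patch_containerd_config() {
  local cfg="$1" pause_image="$2" mirror="$3"
  sed -i \
    -e "s#sandbox_image = \".*\"#sandbox_image = \"${pause_image}\"#" \
    -e '/containerd\.runtimes\.runc\.options/a\            SystemdCgroup = true' \
    -e "/registry\.mirrors\.\"docker\.io\"/,/endpoint/ s#endpoint = .*#endpoint = [\"${mirror}\"]#" \
    "$cfg"
}

# Usage (as root, after backing up the file):
#   patch_containerd_config /etc/containerd/config.toml \
#     registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 \
#     https://1nj0zren.mirror.aliyuncs.com
```

Running the `cat -n ... | egrep` command from this step afterwards is a quick way to confirm that all three values changed.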

5. Restart containerd and enable it at boot

    systemctl restart containerd.service
    systemctl enable containerd.service

6. Configure kubelet to use containerd

    [root@node1 ~]# cat /etc/sysconfig/kubelet
    KUBELET_EXTRA_ARGS="--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd"

7. Restart kubelet

systemctl restart kubelet

IV. Verify that the container runtime was switched to containerd

1. Check the CONTAINER-RUNTIME column for node1

    [root@master ~]# kubectl get node -o wide
    NAME     STATUS                     ROLES                  AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    master   Ready                      control-plane,master   22m   v1.20.6   192.168.11.67   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7
    node1    Ready,SchedulingDisabled   <none>                 22m   v1.20.6   192.168.11.68   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   containerd://1.4.6
    node2    Ready                      <none>                 21m   v1.20.6   192.168.11.69   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7

2. Remove the unschedulable mark from the node

    [root@master ~]# kubectl uncordon node1
    node/node1 uncordoned
    [root@master ~]# kubectl get node -o wide
    NAME     STATUS   ROLES                  AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    master   Ready    control-plane,master   23m   v1.20.6   192.168.11.67   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7
    node1    Ready    <none>                 23m   v1.20.6   192.168.11.68   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   containerd://1.4.6
    node2    Ready    <none>                 23m   v1.20.6   192.168.11.69   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   docker://20.10.7
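When the same procedure is repeated for the remaining nodes, it can help to list which nodes still report a non-containerd runtime. The following is a minimal sketch: `nodes_not_on_containerd` is a hypothetical helper, and it expects two whitespace-separated columns (node name, runtime version) such as those produced by the `custom-columns` query shown in the usage comment.

```shell
# Print the names of nodes whose runtime does not start with "containerd://".
# Input on stdin: one "NAME RUNTIME" pair per line.
# nodes_not_on_containerd is a hypothetical helper name.
nodes_not_on_containerd() {
  awk '$2 !~ /^containerd:\/\// { print $1 }'
}

# Usage (needs access to the cluster):
#   kubectl get nodes --no-headers \
#     -o custom-columns=NAME:.metadata.name,RUNTIME:.status.nodeInfo.containerRuntimeVersion \
#     | nodes_not_on_containerd
```

With the cluster in the state shown above, this would list master and node2, the two nodes that still run Docker.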

V. Check the cluster after every node has been switched

    [root@master ~]# kubectl get node -o wide
    NAME     STATUS   ROLES                  AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    master   Ready    control-plane,master   46m   v1.20.6   192.168.11.67   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   containerd://1.4.6
    node1    Ready    <none>                 46m   v1.20.6   192.168.11.68   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   containerd://1.4.6
    node2    Ready    <none>                 46m   v1.20.6   192.168.11.69   <none>        CentOS Linux 7 (Core)   3.10.0-1160.25.1.el7.x86_64   containerd://1.4.6
    [root@master ~]# kubectl get pod -n kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-7f4f5bf95d-zs84c   1/1     Running   0          45m
    calico-node-4kxmh                          0/1     Running   1          66s
    calico-node-jt2m5                          1/1     Running   7          45m
    calico-node-pjl62                          1/1     Running   1          45m
    coredns-54d67798b7-m77pp                   1/1     Running   0          46m
    coredns-54d67798b7-ptsgl                   1/1     Running   0          46m
    etcd-master                                1/1     Running   7          3m27s
    kube-apiserver-master                      1/1     Running   7          3m27s
    kube-controller-manager-master             1/1     Running   7          3m27s
    kube-proxy-4tv7s                           1/1     Running   0          46m
    kube-proxy-5qbw4                           1/1     Running   0          46m
    kube-proxy-hqtlm                           1/1     Running   0          46m
    kube-scheduler-master                      1/1     Running   7          3m27s
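In the pod listing, calico-node-4kxmh is Running but shows 0/1 in the READY column. A small filter makes such pods easier to spot. This is a sketch only: `pods_not_ready` is a hypothetical helper that assumes the default column layout of `kubectl get pod --no-headers`, and it will also list pods that have completed normally.

```shell
# Print the names of pods whose READY column (ready/total) is not full.
# Input: the output of `kubectl get pod --no-headers` on stdin.
# pods_not_ready is a hypothetical helper name.
pods_not_ready() {
  awk '{ split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage (needs access to the cluster):
#   kubectl get pod -n kube-system --no-headers | pods_not_ready
```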

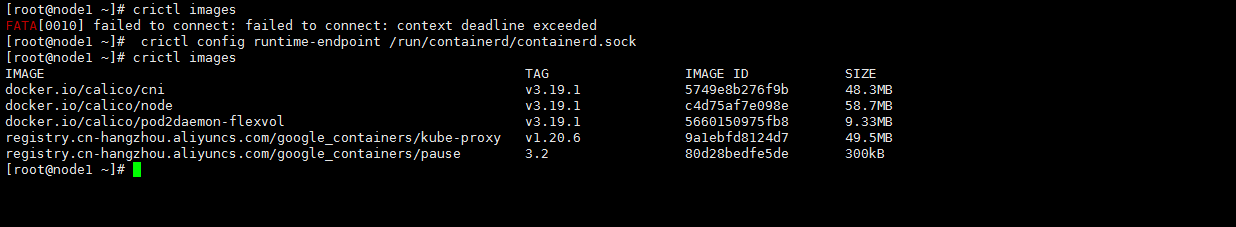

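VI. Fixing the error "FATA[0010] failed to connect: failed to connect: context deadline exceeded"

1. Solution

Run the following command on the server so that crictl connects to the containerd socket:

crictl config runtime-endpoint unix:///run/containerd/containerd.sock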
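`crictl config` stores its settings in `/etc/crictl.yaml`, so the same result can be reached by writing that file directly. The sketch below assumes the standard crictl keys `runtime-endpoint`, `image-endpoint`, `timeout` and `debug`; `write_crictl_config` is a hypothetical helper, and the timeout value of 10 seconds is an illustrative choice, not a value taken from this post.

```shell
# Write a crictl configuration file that points at a given runtime socket.
# Arguments: target file path, absolute path of the socket.
# write_crictl_config is a hypothetical helper name; timeout is illustrative.
write_crictl_config() {
  local path="$1" sock="$2"
  cat > "$path" <<EOF
runtime-endpoint: unix://${sock}
image-endpoint: unix://${sock}
timeout: 10
debug: false
EOF
}

# Usage (as root):
#   write_crictl_config /etc/crictl.yaml /run/containerd/containerd.sock
#   crictl ps
```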

VII. After switching to containerd, images from a private registry cannot be pulled on the server

1. Solution (add the registry credentials to config.toml)

Reference: https://www.orchome.com/10011

    [root@test-node1 ~]# cat -n /etc/containerd/config.toml|grep cn-shanghai.aliyuncs.com -C 4
       103      [plugins."io.containerd.grpc.v1.cri".registry]
       104        [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
       105          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
       106            endpoint = ["https://ixxxxx.mirror.aliyuncs.com"]
       107          [plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry-vpc.cn-shanghai.aliyuncs.com"]
       108            endpoint = ["https://registry-vpc.cn-shanghai.aliyuncs.com"]
       109        [plugins."io.containerd.grpc.v1.cri".registry.configs]
       110          [plugins."io.containerd.grpc.v1.cri".registry.configs."registry-vpc.cn-shanghai.aliyuncs.com"]
       111            [plugins."io.containerd.grpc.v1.cri".registry.configs."registry-vpc.cn-shanghai.aliyuncs.com".auth]
       112              username = "xxxxxxxx"
       113              password = "xxxxxxxxxxxxx"
       114      [plugins."io.containerd.grpc.v1.cri".image_decryption]
       115        key_model = ""
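For another registry, the auth table has the same shape with a different host name. The sketch below prints such a fragment so it can be pasted under `registry.configs`. `registry_auth_snippet` is a hypothetical helper, the host and credentials in the usage line are placeholders, and the function assumes the values contain no double quotes or backslashes.

```shell
# Print a containerd CRI auth table for one registry host.
# Arguments: registry host, username, password.
# registry_auth_snippet is a hypothetical helper; values must not contain
# double quotes or backslashes, since no TOML escaping is done here.
registry_auth_snippet() {
  local host="$1" user="$2" pass="$3"
  cat <<EOF
[plugins."io.containerd.grpc.v1.cri".registry.configs."${host}".auth]
  username = "${user}"
  password = "${pass}"
EOF
}

# Usage (placeholder values):
#   registry_auth_snippet registry.example.com myuser mypassword
```

As with the other config.toml edits in this post, containerd has to be restarted before the new credentials are used.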