Deploying Kubernetes 1.16.4 on CentOS, initialized with kubeadm

Virtual machine test setup

1. Machine configuration:

192.168.253.11 2c/2G/40G
192.168.253.12 2c/2G/40G
192.168.253.13 2c/2G/40G

2. Machine initialization:

$ yum -y install vim zip gzip unzip lrzsz iptables iptables-services make gcc gcc-c++ openssl-devel curl-devel sudo lsof telnet wget elinks iotop nmap net-tools git fuse-ssh ntpdate
$ systemctl stop firewalld && systemctl disable firewalld
$ setenforce 0
$ cat >> /etc/hosts << EOF
192.168.253.11 k8s-master
192.168.253.12 k8s-node1
192.168.253.13 k8s-node2
EOF
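Running `cat >> /etc/hosts` a second time appends the same entries again. As a minimal sketch, the helper below adds an entry only when the hostname is not yet mapped. The function name `add_host_entry` and its optional third argument (a file path, for trying it on a copy) are illustrative assumptions, not part of the original steps.

```shell
# Append "IP NAME" to a hosts file only when NAME is not already mapped there.
# The third argument is a hypothetical override; it defaults to /etc/hosts.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Look for NAME as a whole field on any non-comment line.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry 192.168.253.12 k8s-node1` can be repeated on every node without producing duplicate lines.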

3. Verify that the MAC address and product_uuid are unique on every node

$ cat /sys/class/net/ens33/address
00:0c:29:ad:ed:23

$ cat /sys/class/dmi/id/product_uuid
4C6F4D56-3973-EFB6-AC31-597692ADED23
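Comparing these values by eye across three nodes is error-prone. The sketch below assumes the values have been collected into lines of the form `node value` (how they are gathered is left open) and reports any value shared by more than one node. The function name `find_duplicate_ids` is hypothetical.

```shell
# Read "node value" pairs on stdin and print every value that more than one
# node reports, followed by the nodes that share it.
# Exit status: 1 when at least one duplicate exists, 0 otherwise.
find_duplicate_ids() {
    awk '
        { count[$2]++; nodes[$2] = nodes[$2] " " $1 }
        END {
            dup = 0
            for (v in count)
                if (count[v] > 1) { print v ":" nodes[v]; dup = 1 }
            exit dup
        }'
}
```

The same function works for MAC addresses and for product_uuid values, since it only compares the second field.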

3. Disable swap (temporary; swap is re-enabled after a reboot)

$ swapoff -a

3.1 Disable swap permanently

$ sed -i.bak '/swap/s/^/#/' /etc/fstab
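The sed expression above prefixes `#` to every line containing "swap", including lines that are already commented, so each rerun adds another `#`. A more defensive variant, sketched here under the assumption that swap entries carry `swap` as a whitespace-delimited field, skips commented lines. The function name `comment_swap_entries` and its optional file argument are illustrative.

```shell
# Comment out active swap entries in an fstab-style file, keeping a .bak copy.
# Lines that already start with "#" are skipped, so reruns change nothing.
comment_swap_entries() {
    file=${1:-/etc/fstab}
    sed -i.bak '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$file"
}
```

Calling it with a copy of the file first (for example `comment_swap_entries /tmp/fstab.copy`) shows the result before touching `/etc/fstab`.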

4. Load the br_netfilter module

4.1 Check whether it is loaded

$ lsmod |grep br_netfilter

4.2 Load it temporarily

$ modprobe br_netfilter
$ lsmod |grep br_netfilter
br_netfilter 22256 0
bridge 151336 1 br_netfilter

4.3 Load it permanently

$ cat > /etc/rc.sysinit << 'EOF'
#!/bin/bash
for file in /etc/sysconfig/modules/*.modules ; do
[ -x $file ] && $file
done
EOF
$ cat > /etc/sysconfig/modules/br_netfilter.modules << EOF
modprobe br_netfilter
EOF
$ chmod 755 /etc/sysconfig/modules/br_netfilter.modules
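As an alternative to the rc.sysinit approach, systemd-based systems such as CentOS 7 load every module listed in `/etc/modules-load.d/*.conf` at boot through `systemd-modules-load.service`. The sketch below writes such a file. This is a different technique from the one in the original steps, and the function name `persist_module` and its optional directory argument are assumptions for illustration.

```shell
# Register a kernel module for loading at boot via systemd-modules-load.
# The second argument is a hypothetical override of the target directory;
# it defaults to /etc/modules-load.d.
persist_module() {
    module=$1
    conf_dir=${2:-/etc/modules-load.d}
    mkdir -p "$conf_dir"
    printf '%s\n' "$module" > "$conf_dir/$module.conf"
}
```

On a node this would be called as `persist_module br_netfilter`, after which `lsmod |grep br_netfilter` can confirm the result following a reboot.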

5. Set the kernel parameters and apply them

$ cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
$ sysctl -p /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

6. Configure the Kubernetes yum repository

$ cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

6.1 Refresh the yum cache so the new repository is picked up

$ yum clean all

$ yum -y makecache

7. Set up passwordless SSH login between all nodes

$ ssh-keygen -t rsa

7.1 Distribute the public key to the other nodes

$ ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8s-node1
$ ssh-copy-id -i /root/.ssh/id_rsa.pub root@k8s-node2

8. Install Docker

8.1 Install the Docker dependencies

$ yum install -y yum-utils device-mapper-persistent-data lvm2

8.2 Add the Docker yum repository

$ yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

8.3 Install Docker (a version that matches this Kubernetes release)

$ yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

8.4 Start Docker and enable it at boot

$ systemctl start docker && systemctl enable docker

8.5 Install bash-completion and load it

$ yum -y install bash-completion

$ source /etc/profile.d/bash_completion.sh

8.6 Configure a Docker registry mirror and restart Docker

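Docker Hub's servers are located outside China, so pulling images can be slow; a registry mirror speeds this up. The main options are the official Docker registry mirror for China, the Alibaba Cloud (Aliyun) mirror, and the DaoCloud mirror. This article uses the Aliyun mirror as the example.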

$ mkdir -p /etc/docker
$ tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://il4p1lgk.mirror.aliyuncs.com"]
}
EOF
$ systemctl daemon-reload && systemctl restart docker

9. Change the cgroup driver to systemd and restart Docker

$ more /etc/docker/daemon.json
{
  "registry-mirrors": ["https://v16stybc.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
$ systemctl daemon-reload && systemctl restart docker
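After the restart it is worth confirming that Docker actually picked up the new driver, because kubelet and Docker should use the same cgroup driver. A minimal sketch, assuming the `Cgroup Driver:` line that `docker info` prints; the function name `cgroup_driver` is hypothetical.

```shell
# Extract the cgroup driver name from `docker info` text supplied on stdin.
# Leading whitespace is tolerated because some Docker versions indent the line.
cgroup_driver() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Typical use on a node (not executed here):
#   docker info 2>/dev/null | cgroup_driver
```

If the command prints `cgroupfs` instead of `systemd`, the `exec-opts` entry in `/etc/docker/daemon.json` was probably not applied.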

10. Install Kubernetes

10.1 List the available Kubernetes versions

$ yum list kubelet --showduplicates | sort -r

10.2 Install kubelet, kubeadm, and kubectl, then enable and start kubelet

$ yum install -y kubelet-1.16.4 kubeadm-1.16.4 kubectl-1.16.4
$ systemctl enable kubelet && systemctl start kubelet

Notes on the components:

kubelet runs on every node in the cluster and is responsible for starting Pods, containers, and related objects.

kubeadm is the command-line tool that initializes and bootstraps the cluster.

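kubectl is the command-line client for talking to the cluster. It is used to deploy and manage applications, inspect resources, and create, delete, and update components.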

10.2 Enable kubectl command completion

$ echo "source <(kubectl completion bash)" >> ~/.bash_profile
$ source ~/.bash_profile

10.3 Create a working directory, write the image-pull script, and run it

$ mkdir /home/kubernetes
$ cd /home/kubernetes
$ cat > image.sh << 'EOF'
#!/bin/bash
url=registry.cn-hangzhou.aliyuncs.com/loong576
version=v1.16.4
images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}'`)
for imagename in ${images[@]} ; do
  docker pull $url/$imagename
  docker tag $url/$imagename k8s.gcr.io/$imagename
  docker rmi -f $url/$imagename
done
EOF
$ bash image.sh

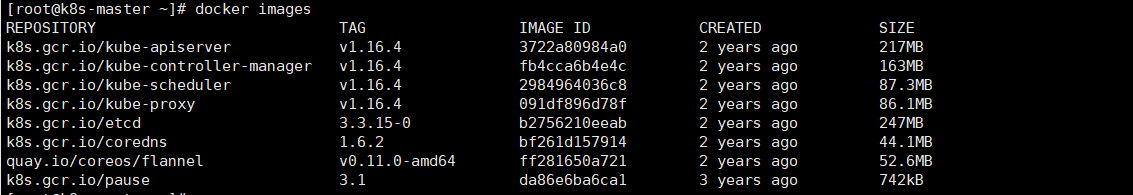

# Verify the images

$ docker images
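The script relies on one string transformation: the registry prefix of each upstream image is dropped and the mirror prefix is put in its place. The sketch below isolates that step so it can be checked without pulling anything. It assumes image references of the form `registry/name:tag` with a single path level, and the function name `mirror_image` is hypothetical.

```shell
# Map an upstream image reference to its name on a mirror registry.
# Uses the same awk split as image.sh: cut on "/" and keep the second field.
mirror_image() {
    mirror=$1
    upstream=$2
    name=$(printf '%s\n' "$upstream" | awk -F '/' '{print $2}')
    printf '%s/%s\n' "$mirror" "$name"
}
```

For instance, `mirror_image registry.cn-hangzhou.aliyuncs.com/loong576 k8s.gcr.io/kube-apiserver:v1.16.4` shows which image the script would pull before it is retagged as `k8s.gcr.io/kube-apiserver:v1.16.4`.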

11. Initialize the master with kubeadm

$ cat kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.4
apiServer:
  certSANs:    # list the hostname, IP, and VIP of every kube-apiserver node
  - k8s-master
  - k8s-node1
  - k8s-node2
  - 192.168.253.11
  - 192.168.253.12
  - 192.168.253.13
controlPlaneEndpoint: "192.168.253.11:6443"
networking:
  podSubnet: "10.244.0.0/16"

11.1 Run the initialization

$ kubeadm init --config=kubeadm-config.yaml

# Save this output once initialization finishes; the token and hash in it are needed later to join the worker nodes to the master

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join 192.168.253.11:6443 --token wbml9k.dls3apjjgzlhnlek \
    --discovery-token-ca-cert-hash sha256:04c3e185821000de36522aea2beaf7c6e402c090025b9ce704854452ea9cdc2e \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.253.11:6443 --token wbml9k.dls3apjjgzlhnlek \
    --discovery-token-ca-cert-hash sha256:04c3e185821000de36522aea2beaf7c6e402c090025b9ce704854452ea9cdc2e
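If the output above was saved to a file, the worker join command can be recovered from it rather than retyped. The sketch below assumes the saved text contains a `kubeadm join` block in the format shown. The function name `worker_join_command` and the file name in the usage line are illustrative.

```shell
# Rebuild the worker join command from saved `kubeadm init` output on stdin.
# Takes the endpoint, token and CA hash from the first occurrence of each.
worker_join_command() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i == "kubeadm" && $(i + 1) == "join" && endpoint == "")
                    endpoint = $(i + 2)
                if ($i == "--token" && token == "")
                    token = $(i + 1)
                if ($i == "--discovery-token-ca-cert-hash" && hash == "")
                    hash = $(i + 1)
            }
        }
        END {
            if (endpoint == "" || token == "" || hash == "") exit 1
            printf "kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n", endpoint, token, hash
        }'
}

# Hypothetical use, with the output saved as kubeadm-init.log:
#   worker_join_command < kubeadm-init.log
```

Bootstrap tokens expire (after 24 hours by default), so a saved command eventually stops working; running `kubeadm token create --print-join-command` on the master prints a fresh one.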

11.2 Set the KUBECONFIG environment variable

$ echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
$ source ~/.bash_profile

# Every step in this article is run as root. For a non-root user, run the following instead:

$ mkdir -p $HOME/.kube
$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

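12. Install the flannel network (the install may fail because of network issues; in that case, download the kube-flannel.yml file linked at the end of the article and then run apply on the local copy)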

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

13. Join node1 to the master

$ kubeadm join 192.168.253.11:6443 --token wbml9k.dls3apjjgzlhnlek \
    --discovery-token-ca-cert-hash sha256:04c3e185821000de36522aea2beaf7c6e402c090025b9ce704854452ea9cdc2e

14. Join node2 to the master

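$ kubeadm join 192.168.253.11:6443 --token wbml9k.dls3apjjgzlhnlek \
    --discovery-token-ca-cert-hash sha256:04c3e185821000de36522aea2beaf7c6e402c090025b9ce704854452ea9cdc2e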

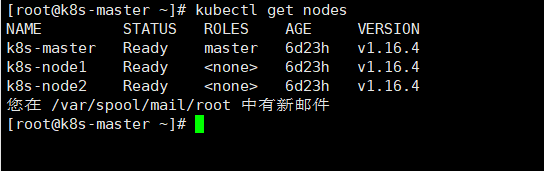

15. Check the cluster status

$ kubectl get nodes

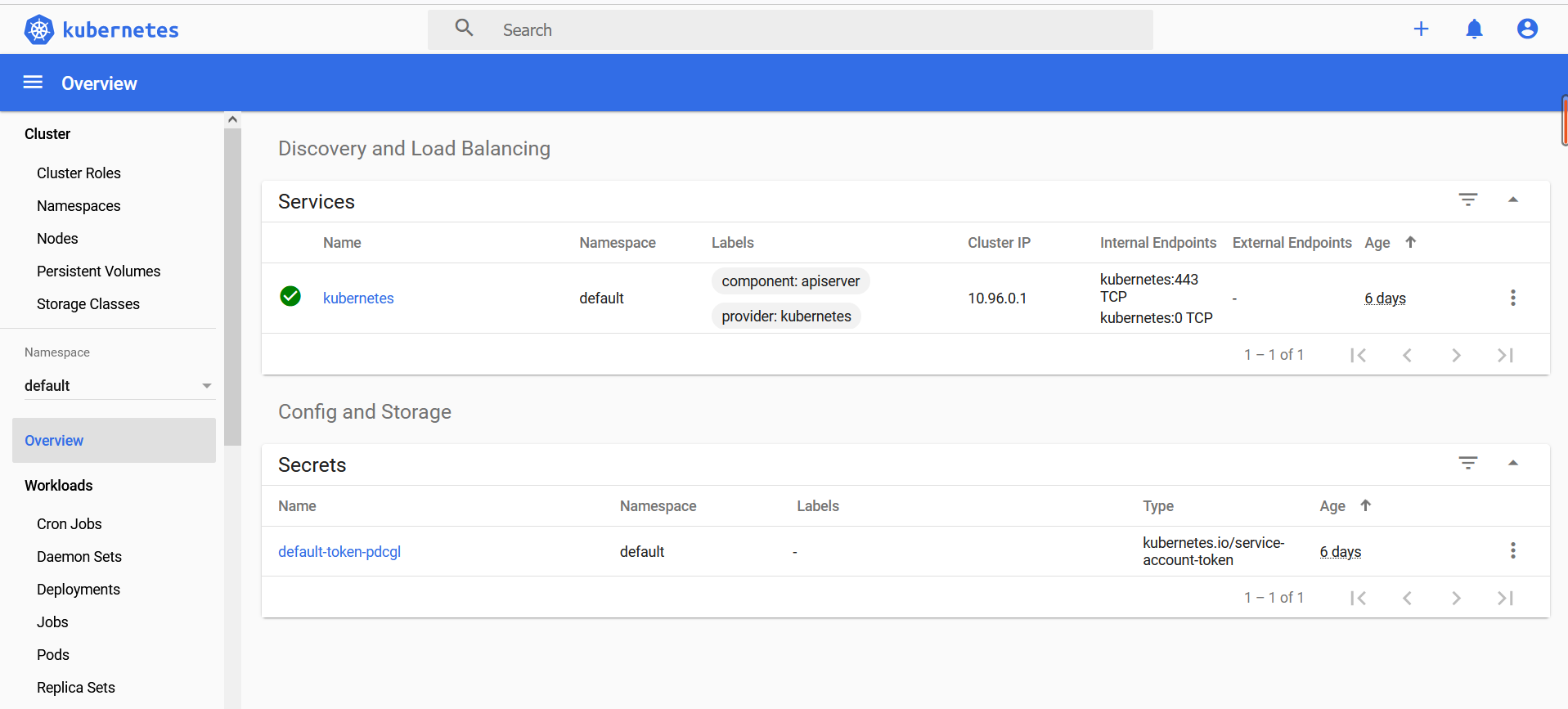
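Nodes usually report `NotReady` for a short while after joining, until the network plugin is running on them. As a sketch, the filter below lists nodes that are not yet ready, assuming the column layout of `kubectl get nodes --no-headers` (name first, status second). The function name `not_ready_nodes` is hypothetical.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Input: `kubectl get nodes --no-headers` text on stdin.
# Note: a cordoned node ("Ready,SchedulingDisabled") is listed as well.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Typical use on the master (not executed here):
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means all three nodes are ready and the Dashboard deployment can proceed.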

16. Set up the Dashboard

$ cd /home/kubernetes

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

# Point the images at the mirror registry

$ sed -i 's/kubernetesui/registry.cn-hangzhou.aliyuncs.com\/loong576/g' recommended.yaml

# Expose the Dashboard outside the cluster through a NodePort

$ sed -i '/targetPort: 8443/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' recommended.yaml

# Add an account: create a super-administrator account for logging in to the Dashboard

$ cat >> recommended.yaml << EOF
---
# ------------------- dashboard-admin ------------------- #
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kubernetes-dashboard

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
EOF

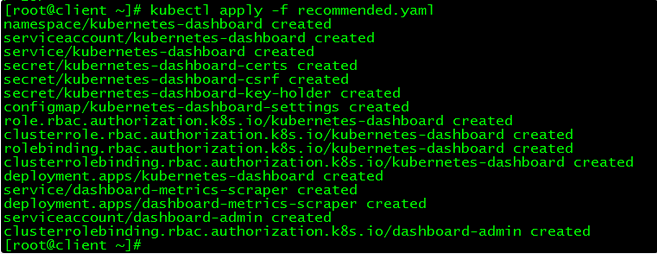

# Deploy the Dashboard

$ kubectl apply -f recommended.yaml

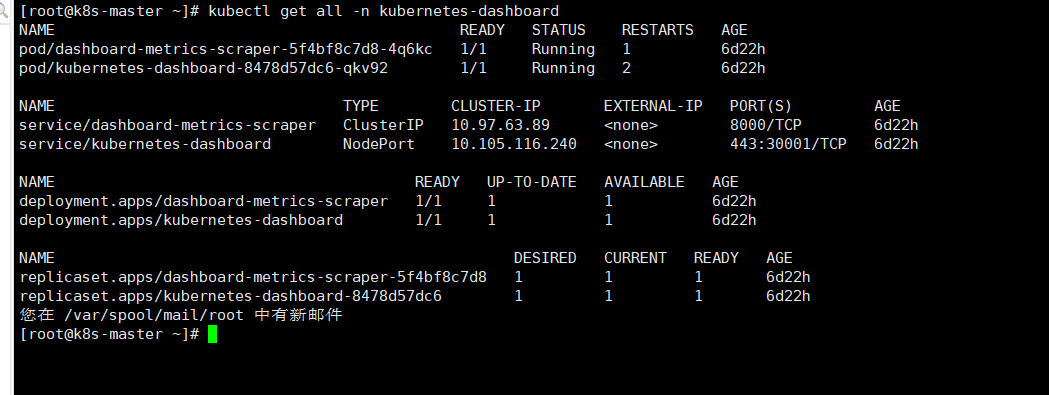

# Check the status

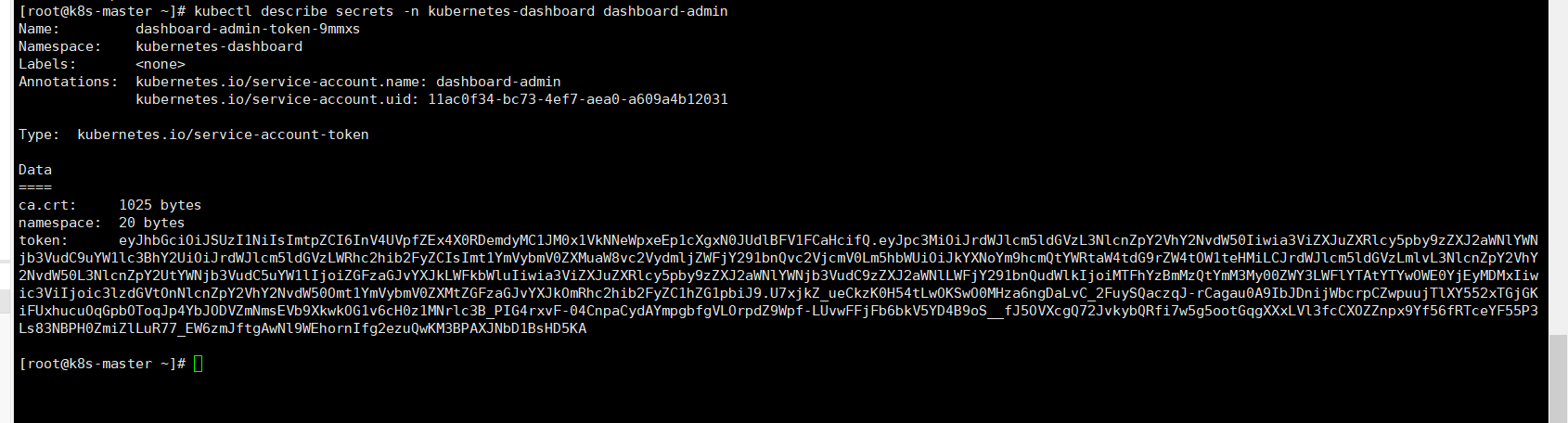

# View the token

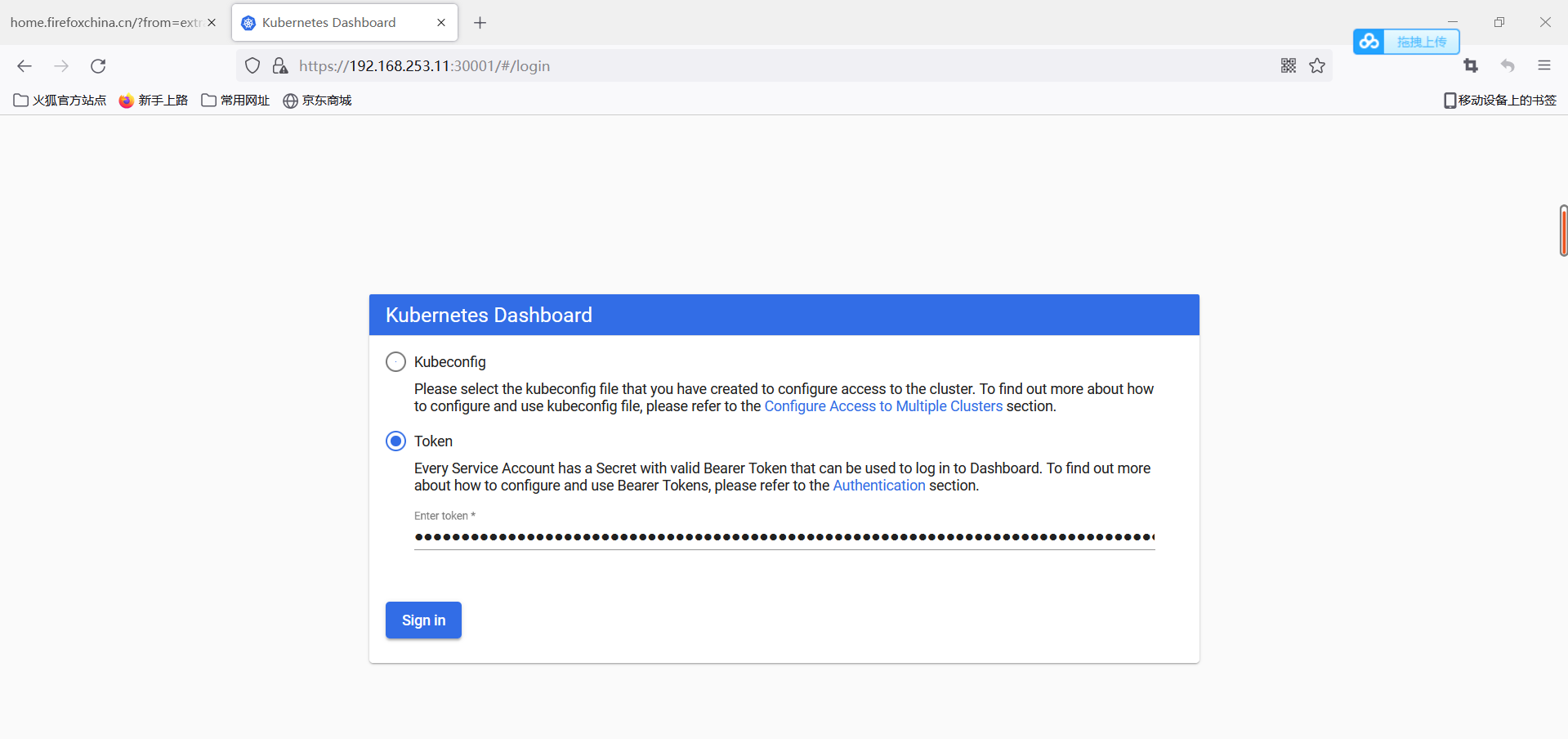
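The token is shown only in the screenshot, so here is a hedged sketch of one way to pull it out of `kubectl describe secret` output. It assumes the `token:` line that this command prints for a service-account token secret. The function name `secret_token` and the `SECRET` variable are hypothetical, and the actual secret name has to be looked up first.

```shell
# Print the bearer token from `kubectl describe secret` text supplied on stdin.
secret_token() {
    awk '$1 == "token:" { print $2 }'
}

# Hypothetical use on the master, where SECRET holds the name of the
# dashboard-admin token secret in the kubernetes-dashboard namespace:
#   kubectl -n kubernetes-dashboard describe secret "$SECRET" | secret_token
```

The printed value is what gets pasted into the token field on the Dashboard login page.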

# Log in with the token

Open https://<IP of the deployment machine>:30001 in a browser.