Scraping website data with Java

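I've been at my new company for two months now. I work overtime every day and average 11-hour days, and I keep thinking back to the warm, comfortable, easygoing life at my old employer. It's been a long time since I had time to read or write blog posts. I feel like I've slipped, and yes, I'm not happy about it.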

Today I'll write down a few notes on scraping data.

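Data scraping is very common these days, and many people use Python for it. I'm still not very good at this, so Java is the only thing I can do it with. Now for the serious part.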

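Things to keep in mind:

1. First, have a target: the page you want to scrape.

2. The structure of the data on the target page.

3. Whether the target site has an anti-crawler mechanism (that is, whether it will ban your IP).

4. Parsing the data and saving it to the database.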
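Point 3 usually comes down to request frequency: a site that limits requests per unit of time will ban an IP that goes over the limit. One common countermeasure, which the code in this post does not include, is to keep consecutive requests at least a fixed interval apart. A minimal sketch follows; `RequestThrottle` is a hypothetical name, and the interval you pass in is an assumption you would have to tune per site.

```java
import java.util.concurrent.TimeUnit;

/** Hypothetical helper that keeps consecutive requests at least one interval apart. */
final class RequestThrottle {

    private final long intervalNanos;
    private long nextAllowed;        // earliest start time for the next request (nanoTime scale)
    private boolean started = false; // false until the first slot has been reserved

    RequestThrottle(long interval, TimeUnit unit) {
        this.intervalNanos = unit.toNanos(interval);
    }

    /**
     * Reserves the next request slot at time {@code now} (nanoseconds) and returns how long the
     * caller must wait before sending. Pure bookkeeping, so it can be tested without sleeping.
     */
    synchronized long reserve(long now) {
        // Compare via subtraction so the check stays correct even if nanoTime values overflow
        if (!started || now - nextAllowed >= 0) {
            started = true;
            nextAllowed = now + intervalNanos;
            return 0L;
        }
        long wait = nextAllowed - now;
        nextAllowed += intervalNanos;
        return wait;
    }

    /** Blocks the calling thread until it may send its next request. */
    void acquire() throws InterruptedException {
        long wait = reserve(System.nanoTime());
        if (wait > 0) {
            TimeUnit.NANOSECONDS.sleep(wait);
        }
    }
}
```

Calling `throttle.acquire()` before every page request caps the crawler at one request per interval across all threads sharing the throttle; splitting `reserve` from `acquire` keeps the timing logic testable with fake timestamps.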

Getting the HttpClient (backed by a pooled connection manager)

```java
package com.zyt.creenshot.util;

import org.apache.http.config.Registry;
import org.apache.http.config.RegistryBuilder;
import org.apache.http.conn.socket.ConnectionSocketFactory;
import org.apache.http.conn.socket.LayeredConnectionSocketFactory;
import org.apache.http.conn.socket.PlainConnectionSocketFactory;
import org.apache.http.conn.ssl.SSLConnectionSocketFactory;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
import org.springframework.stereotype.Component;

import javax.annotation.PostConstruct;
import javax.net.ssl.SSLContext;
import java.security.NoSuchAlgorithmException;

@Component
public class HttpConnectionManager {

    private PoolingHttpClientConnectionManager cm;

    @PostConstruct
    public void init() {
        LayeredConnectionSocketFactory sslsf;
        try {
            sslsf = new SSLConnectionSocketFactory(SSLContext.getDefault());
        } catch (NoSuchAlgorithmException e) {
            // Fail fast with a clear message instead of registering a null "https" factory
            throw new IllegalStateException("Default SSLContext is not available", e);
        }
        Registry<ConnectionSocketFactory> socketFactoryRegistry = RegistryBuilder.<ConnectionSocketFactory>create()
                .register("https", sslsf)
                .register("http", new PlainConnectionSocketFactory())
                .build();
        cm = new PoolingHttpClientConnectionManager(socketFactoryRegistry);
        // At most 200 pooled connections in total, and 20 per route (roughly, per target host)
        cm.setMaxTotal(200);
        cm.setDefaultMaxPerRoute(20);
    }

    public CloseableHttpClient getHttpClient() {
        // All clients share one pool; marking it shared means closing a client does not shut the pool down
        return HttpClients.custom()
                .setConnectionManager(cm)
                .setConnectionManagerShared(true)
                .build();
    }
}
```
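If Spring and Apache HttpClient are more than a project needs, JDK 11+ ships `java.net.http.HttpClient`, which handles connection reuse internally. The sketch below swaps it in as an alternative (it is not what this post uses): a client built once, with the same 4-second connect timeout the page-fetching code uses, plus an optional proxy. `JdkClientFactory` is a hypothetical name, and the JDK builder has no direct equivalent of `setMaxTotal`/`setDefaultMaxPerRoute`.

```java
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;
import java.time.Duration;

/** Hypothetical factory for a JDK HttpClient that is built once and then reused. */
final class JdkClientFactory {

    private JdkClientFactory() {
    }

    /** Builds a client; pass a null or empty proxyHost to connect directly. */
    static HttpClient create(String proxyHost, int proxyPort) {
        HttpClient.Builder builder = HttpClient.newBuilder()
                .connectTimeout(Duration.ofMillis(4000))
                // Follow redirects, except HTTPS -> HTTP downgrades
                .followRedirects(HttpClient.Redirect.NORMAL);
        if (proxyHost != null && !proxyHost.isEmpty()) {
            // Send every request of this client through the given HTTP proxy
            builder.proxy(ProxySelector.of(new InetSocketAddress(proxyHost, proxyPort)));
        }
        return builder.build();
    }
}
```

The read timeout that `setSocketTimeout(8000)` covers in the Apache version would go on each request instead, via `HttpRequest.newBuilder(uri).timeout(Duration.ofMillis(8000))`.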

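Page-fetching utility class. Many websites have anti-crawler mechanisms that limit how many requests you can make per unit of time. If the data you are crawling keeps changing and has to be up to date, you will need to use proxy IPs.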
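The post does not show where the proxy IPs come from. Assuming you already have a list of `host:port` proxies (for example from a proxy provider), a minimal sketch is a round-robin pool that skips proxies marked as failed; `ProxyPool`, `Endpoint`, `next` and `markBad` are all hypothetical names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical round-robin proxy pool that skips proxies marked as bad. */
final class ProxyPool {

    /** One proxy endpoint. */
    static final class Endpoint {
        final String host;
        final int port;

        Endpoint(String host, int port) {
            this.host = host;
            this.port = port;
        }

        @Override
        public String toString() {
            return host + ":" + port;
        }
    }

    private final List<Endpoint> proxies;
    private final Set<String> bad = ConcurrentHashMap.newKeySet();
    private final AtomicInteger cursor = new AtomicInteger();

    ProxyPool(List<Endpoint> proxies) {
        this.proxies = Collections.unmodifiableList(new ArrayList<>(proxies));
    }

    /** Returns the next healthy proxy in round-robin order, or null if every proxy is marked bad. */
    Endpoint next() {
        for (int i = 0; i < proxies.size(); i++) {
            // floorMod keeps the index valid even after the counter wraps around
            int idx = Math.floorMod(cursor.getAndIncrement(), proxies.size());
            Endpoint p = proxies.get(idx);
            if (!bad.contains(p.toString())) {
                return p;
            }
        }
        return null;
    }

    /** Call when a request through this proxy times out or gets blocked. */
    void markBad(Endpoint p) {
        bad.add(p.toString());
    }
}
```

Each `Endpoint` returned by `next()` supplies the `address` and `port` arguments of `getProxyHttp`; an empty result from that method would be the cue to call `markBad` and retry with the next proxy.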

```java
package com.zyt.creenshot.util;

import org.apache.commons.collections4.MapUtils;
import org.apache.commons.lang3.StringUtils;
import org.apache.http.HttpEntity;
import org.apache.http.HttpHost;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.util.EntityUtils;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

import java.nio.charset.Charset;
import java.util.HashMap;
import java.util.Map;

/**
 * @ClassName:DocumentHelper
 * @Description:<Page-fetching utility class>
 * @Author:zhaiyutao
 * @Date:2019/7/1 11:15
 * @Version: v1.0
 */
@Component
public class DocumentHelper {

    @Autowired
    HttpConnectionManager connManager;

    /**
     * Fetches a page with a GET request, optionally through a proxy.
     * @param address proxy host; null or empty means connect directly
     * @param charset charset used to decode the body (e.g. "GBK"); null or empty means
     *                use the charset declared in the response's Content-Type
     * @return the page HTML, or "" on any failure
     */
    public String getProxyHttp(String url, String address, int port, String charset) {
        CloseableHttpClient httpClient = connManager.getHttpClient();
        // Send a GET request
        HttpGet httpGet = new HttpGet(url);
        // Configure the proxy IP and timeouts
        httpGet = buildProxy(httpGet, address, port);
        Map<String, String> headerMap = new HashMap<>();
        headerMap.put("Referer", "http://*********.com/");
        headerMap.put("User-Agent", UserAgentUtil.getRandomUserAgent());
        headerMap.put("Accept-Language", "zh-CN,zh;q=0.9");
        headerMap.put("Accept-Encoding", "gzip, deflate");
        // Build the request headers
        httpGet = buildRequestHeader(headerMap, httpGet);
        // try-with-resources closes the response, which returns the connection to the pool
        try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
            HttpEntity entity = response.getEntity();
            if (entity == null) {
                return "";
            }
            if (StringUtils.isNotEmpty(charset)) {
                // Decode the raw bytes once with the requested charset
                return new String(EntityUtils.toByteArray(entity), Charset.forName(charset));
            }
            return EntityUtils.toString(entity);
        } catch (Exception e) {
            // Don't handle the exception here: request again, and handle failures in one place at the end
            //log.error("Proxy request failed, url {} address {} port {}", url, address, port);
            return "";
        }
    }

    private static HttpGet buildProxy(HttpGet httpGet, String address, int port) {
        RequestConfig.Builder builder = RequestConfig.custom()
                .setConnectTimeout(4000)
                .setSocketTimeout(8000)
                .setConnectionRequestTimeout(4000);
        if (StringUtils.isNotEmpty(address)) {
            // Route the request through the proxy IP
            builder.setProxy(new HttpHost(address, port));
        }
        httpGet.setConfig(builder.build());
        return httpGet;
    }

    /**
     * Builds the request headers
     * @param headerMap
     * @param httpGet
     * @return
     */
    private static HttpGet buildRequestHeader(Map<String, String> headerMap, HttpGet httpGet) {
        if (MapUtils.isNotEmpty(headerMap)) {
            for (Map.Entry<String, String> kv : headerMap.entrySet()) {
                httpGet.setHeader(kv.getKey(), kv.getValue());
            }
        }
        return httpGet;
    }
}
```
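Charset handling is the subtle part of fetching Chinese pages. Decoding the body to a `String` first and then re-encoding it through ISO-8859-1 to apply another charset only works when the response declared no charset; decoding the raw bytes exactly once is safer. A minimal JDK-only sketch of that order (forced charset, else the one declared in `Content-Type`, else a fallback) is below; `CharsetPicker` and the UTF-8 fallback are assumptions.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

/** Hypothetical helper: choose a charset, then decode a response body exactly once. */
final class CharsetPicker {

    private CharsetPicker() {
    }

    /** Extracts the charset parameter from a Content-Type value such as "text/html; charset=GBK". */
    static String charsetFromContentType(String contentType) {
        if (contentType == null) {
            return null;
        }
        for (String part : contentType.split(";")) {
            String p = part.trim();
            if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                // Strip optional quotes, as in charset="utf-8"
                return p.substring("charset=".length()).replace("\"", "").trim();
            }
        }
        return null;
    }

    /** Returns the charset for a name, or null if the name is empty, illegal or unsupported. */
    private static Charset lookup(String name) {
        if (name == null || name.isEmpty()) {
            return null;
        }
        try {
            return Charset.forName(name);
        } catch (IllegalArgumentException e) {
            // IllegalCharsetNameException and UnsupportedCharsetException both extend this
            return null;
        }
    }

    /** Uses the first usable of: forced charset, declared charset, UTF-8. */
    static String decode(byte[] body, String contentType, String forcedCharset) {
        Charset cs = lookup(forcedCharset);
        if (cs == null) {
            cs = lookup(charsetFromContentType(contentType));
        }
        if (cs == null) {
            cs = StandardCharsets.UTF_8;
        }
        return new String(body, cs);
    }
}
```

With the response body as bytes, `decode(bytes, contentTypeHeader, "GBK")` plays the role of the `charset` argument in `getProxyHttp`.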

Request-header spoofing utility class (picks a random browser User-Agent)

```java
package com.zyt.creenshot.util;

import java.util.concurrent.ThreadLocalRandom;

public class UserAgentUtil {

    private static final String[] USER_AGENT = {
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36", // Google Chrome
            "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36 OPR/60.0.3255.109", // Opera
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE", // 360 browser
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.79 Safari/537.36 Maxthon/5.2.7.3000", // Maxthon
            "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:67.0) Gecko/20100101 Firefox/67.0", // Firefox
            "Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50" // Safari
            //"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/18.17763" // Edge
    };

    /**
     * Returns a randomly chosen User-Agent string
     * @return
     */
    public static String getRandomUserAgent() {
        // ThreadLocalRandom avoids creating a new Random on every call and is safe across threads
        return USER_AGENT[ThreadLocalRandom.current().nextInt(USER_AGENT.length)];
    }
}
```

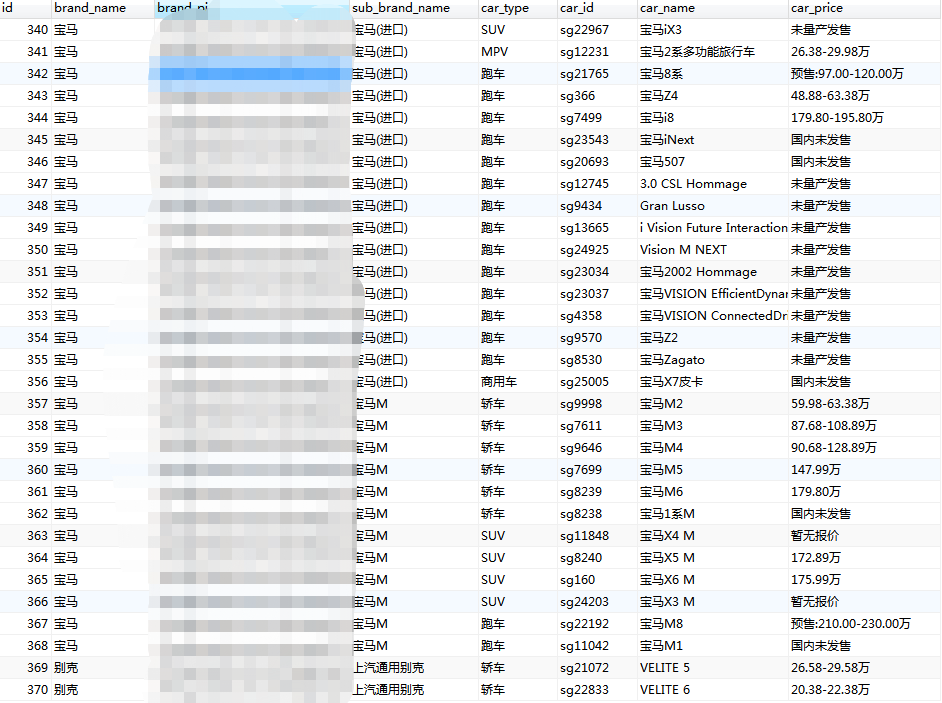

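Below is an example that crawls data from a car website. You need to design the database table yourself to suit your needs, so I won't post the table structure here.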
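For orientation only: the setters the crawler calls imply an entity with seven fields. A hypothetical `CarBaseData` might look like the sketch below, assuming every column is a string (the price, for instance, is stored as the raw text from the page). Field names come from those setters; the types and comments are assumptions.

```java
/** Hypothetical entity inferred from the setters used by the crawler; all fields assumed to be String. */
class CarBaseData {
    private String carId;        // taken from the model link's href
    private String carName;      // model name, from the link's title attribute
    private String brandName;    // top-level brand
    private String brandPic;     // brand logo URL
    private String subBrandName; // sub-brand
    private String carType;      // model group heading (p.stit) without its bracketed suffix
    private String carPrice;     // price text exactly as shown on the page

    public String getCarId() { return carId; }
    public void setCarId(String carId) { this.carId = carId; }
    public String getCarName() { return carName; }
    public void setCarName(String carName) { this.carName = carName; }
    public String getBrandName() { return brandName; }
    public void setBrandName(String brandName) { this.brandName = brandName; }
    public String getBrandPic() { return brandPic; }
    public void setBrandPic(String brandPic) { this.brandPic = brandPic; }
    public String getSubBrandName() { return subBrandName; }
    public void setSubBrandName(String subBrandName) { this.subBrandName = subBrandName; }
    public String getCarType() { return carType; }
    public void setCarType(String carType) { this.carType = carType; }
    public String getCarPrice() { return carPrice; }
    public void setCarPrice(String carPrice) { this.carPrice = carPrice; }
}
```

A matching table would need one text column per field, with `carId` as a natural candidate for a unique key so re-running the crawler does not duplicate rows.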

```java
package com.zyt.creenshot.service.crawlerData.impl;

import com.zyt.creenshot.entity.CarBaseData;
import com.zyt.creenshot.mapper.CarBaseDataMapper;
import com.zyt.creenshot.service.crawlerData.ICrawlerData;
import com.zyt.creenshot.util.DocumentHelper;
import lombok.extern.slf4j.Slf4j;
import org.apache.commons.collections4.CollectionUtils;
import org.apache.commons.lang3.StringUtils;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

import java.util.ArrayList;
import java.util.List;

/**
 * @ClassName:CrawlerDataImpl
 * @Description:<TODO>
 * @Author:zhaiyutao
 * @Date:2019/7/8 17:48
 * @Version: v1.0
 */
@Component
@Slf4j
public class CrawlerDataImpl implements ICrawlerData {

    /** Write to the database every BATCH_SIZE records */
    private static final int BATCH_SIZE = 500;

    @Autowired
    private DocumentHelper documentHelper;

    @Autowired(required = false)
    private CarBaseDataMapper carBaseDataMapper;

    @Override
    public void crawlerCarBaseData() {
        String url = "***********URL to crawl*************";
        // Direct request (no proxy); the page is decoded as GBK
        String resultHtml = documentHelper.getProxyHttp(url, null, 0, "GBK");
        if (StringUtils.isEmpty(resultHtml)) {
            log.error("No data was crawled from the website");
            return;
        }
        Document html = Jsoup.parse(resultHtml);
        // Parse the brands
        Elements brandList = html.select("div[class=braRow]");
        if (brandList.isEmpty()) {
            return;
        }
        List<CarBaseData> listCar = new ArrayList<>();
        // Top-level car brands
        for (Element brand : brandList) {
            Elements brandBig = brand.select("div[class=braRow-icon]");
            // Brand name (with "?" replaced by the middle dot "·") and brand logo
            String brandName = brandBig.select("p").text().replace("?", "·");
            String brandPic = brandBig.select("img[src]").attr("#src");
            Elements smallBrandList = brand.select("div[class=modA noBorder]");
            for (Element sb : smallBrandList) {
                Elements brandItem = sb.select("div[class=thA]");
                // Sub-brand
                String brandSmallName = brandItem.select("a[href]").text();
                // Model groups carry either class "tbA" or "tbA mt10 noBorder"
                for (Element in : sb.select("div[class=tbA]")) {
                    dealCarData(listCar, brandName, brandPic, brandSmallName, in);
                }
                for (Element inner : sb.select("div[class=tbA mt10 noBorder]")) {
                    dealCarData(listCar, brandName, brandPic, brandSmallName, inner);
                }
            }
        }
        // Insert the last partial batch
        if (CollectionUtils.isNotEmpty(listCar)) {
            carBaseDataMapper.insertBatch(listCar);
        }
    }

    private void dealCarData(List<CarBaseData> listCar, String brandName, String brandPic,
                             String brandSmallName, Element in) {
        // Model group name without its bracketed suffix. String.split takes a regex, so a bare "("
        // would throw PatternSyntaxException; this character class matches ASCII "(" or full-width "（"
        String carTypeName = in.select("p[class=stit]").text().split("[(（]")[0];
        for (Element element : in.select("li")) {
            Element tit = element.select("p[class=tit]").first();
            Element price = element.select("p[class=price]").first();
            // Skip list items that lack a title or a price
            if (tit == null || price == null) {
                continue;
            }
            Elements carHref = tit.select("a[href]");
            String href = carHref.attr("href");
            if (StringUtils.isEmpty(href)) {
                continue;
            }
            CarBaseData carBaseData = new CarBaseData();
            // Drop the first and last character of the href (e.g. surrounding slashes) to get the car id
            carBaseData.setCarId(StringUtils.substring(href, 1, href.length() - 1));
            carBaseData.setCarName(carHref.attr("title"));
            carBaseData.setBrandName(brandName);
            carBaseData.setBrandPic(brandPic);
            carBaseData.setSubBrandName(brandSmallName);
            carBaseData.setCarType(carTypeName);
            carBaseData.setCarPrice(price.text());
            listCar.add(carBaseData);
            // Flush in batches so one large page does not turn into a single huge insert
            if (listCar.size() >= BATCH_SIZE) {
                carBaseDataMapper.insertBatch(listCar);
                listCar.clear();
            }
        }
    }
}
```
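The crawler writes `listCar` to the database every 500 records and once more after the loop. That pattern can be factored into a small helper. A minimal sketch follows, where `BatchBuffer` is a hypothetical name and the flush target is any `Consumer<List<T>>` (for example a method reference to the mapper's `insertBatch`).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical helper: collects items and hands them to a sink in fixed-size batches. */
final class BatchBuffer<T> implements AutoCloseable {

    private final int batchSize;
    private final Consumer<List<T>> sink;
    private final List<T> buffer = new ArrayList<>();

    BatchBuffer(int batchSize, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    /** Adds one item and flushes automatically once a full batch has accumulated. */
    void add(T item) {
        buffer.add(item);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Sends whatever is buffered (if anything) and starts a new batch. */
    void flush() {
        if (!buffer.isEmpty()) {
            // Hand over a copy so the sink may keep the list after the buffer is cleared
            sink.accept(new ArrayList<>(buffer));
            buffer.clear();
        }
    }

    /** Flushes the final partial batch. */
    @Override
    public void close() {
        flush();
    }
}
```

Opening one buffer in a try-with-resources block around the parsing loop would replace both the size check in `dealCarData` and the final `CollectionUtils.isNotEmpty` insert, so the last partial batch can no longer be forgotten.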

The crawled data: