Hadoop High-Availability (HA) Cluster

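1. A fully distributed cluster was already set up earlier, so only the configuration files need to change here. (If you are using new machines, configure passwordless SSH between them first.)

core-site.xml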

    # vim core-site.xml
    <configuration>
        <!-- Default file system. With HA this is the logical nameservice (mycluster), not the address of a single NameNode -->
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://mycluster</value>
        </property>
        <!-- Directory for files Hadoop creates at runtime, including metadata and the actual data -->
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/opt/module/ha</value>
        </property>
        <property>
            <name>hadoop.http.staticuser.user</name>
            <value>hdfs</value>
        </property>
        <!-- HDFS trash retention time in minutes (1440 = 1 day) -->
        <property>
            <name>fs.trash.interval</name>
            <value>1440</value>
        </property>
    </configuration>
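The two core-site.xml settings that matter for HA can be sanity-checked offline. Below is a minimal Python sketch, not a Hadoop tool: the helper names (`load_props`, `check_core_site`, `trash_days`) are made up for illustration, and it assumes only the standard `<property>`/`<name>`/`<value>` layout of Hadoop site files. It checks that fs.defaultFS names the logical nameservice with no host or port, and converts fs.trash.interval from minutes to days.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# The two core-site.xml properties checked here, copied from the file above.
CORE_SITE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://mycluster</value></property>
  <property><name>fs.trash.interval</name><value>1440</value></property>
</configuration>"""


def load_props(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name", "").strip(): prop.findtext("value", "").strip()
        for prop in root.findall("property")
    }


def check_core_site(props, nameservice):
    """Return a list of problems (an empty list means the checks passed)."""
    problems = []
    default_fs = props.get("fs.defaultFS", "")
    uri = urlparse(default_fs)
    # In HA mode the authority must be the bare nameservice ID: no host, no port.
    if uri.scheme != "hdfs" or uri.netloc != nameservice:
        problems.append(f"fs.defaultFS should be hdfs://{nameservice}, got {default_fs!r}")
    return problems


def trash_days(props):
    """fs.trash.interval is in minutes; 0 (Hadoop's default) disables the trash."""
    return int(props.get("fs.trash.interval", "0")) / (60 * 24)


if __name__ == "__main__":
    core = load_props(CORE_SITE)
    print(check_core_site(core, "mycluster"))  # []
    print(trash_days(core))                    # 1.0
```

To check the real file, pass the text of /opt/module/hadoop-2.6.0/etc/hadoop/core-site.xml to `load_props`. A value such as `hdfs://node1:8020` would be reported, because clients would then bypass the failover proxy provider.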

hdfs-site.xml

    # cat hdfs-site.xml
    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at
        http://www.apache.org/licenses/LICENSE-2.0
      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->
    <!-- Put site-specific property overrides in this file. -->
    <configuration>
        <!-- Number of replicas per HDFS block -->
        <property>
            <name>dfs.replication</name>
            <value>3</value>
        </property>
        <!-- SecondaryNameNode disabled: in HA mode the standby NameNode performs checkpoints -->
        <!--property>
            <name>dfs.namenode.secondary.http-address</name>
            <value>node3:50090</value>
        </property -->
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>/opt/module/ha/dfs/name1,/opt/module/ha/dfs/name2</value>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>/opt/module/ha/dfs/data1,/opt/module/ha/dfs/data2</value>
        </property>
        <!-- Logical name of the nameservice -->
        <property>
            <name>dfs.nameservices</name>
            <value>mycluster</value>
        </property>
        <!-- IDs of the NameNodes in this nameservice -->
        <property>
            <name>dfs.ha.namenodes.mycluster</name>
            <value>nn1,nn2</value>
        </property>
        <!-- RPC address of each NameNode -->
        <property>
            <name>dfs.namenode.rpc-address.mycluster.nn1</name>
            <value>node1:8020</value>
        </property>
        <property>
            <name>dfs.namenode.rpc-address.mycluster.nn2</name>
            <value>node3:8020</value>
        </property>
        <!-- HTTP (web UI) address of each NameNode -->
        <property>
            <name>dfs.namenode.http-address.mycluster.nn1</name>
            <value>node1:50070</value>
        </property>
        <property>
            <name>dfs.namenode.http-address.mycluster.nn2</name>
            <value>node3:50070</value>
        </property>
        <!-- JournalNodes that hold the shared edit log -->
        <property>
            <name>dfs.namenode.shared.edits.dir</name>
            <value>qjournal://node1:8485;node2:8485;node3:8485/mycluster</value>
        </property>
        <!-- Class HDFS clients use to find the active NameNode -->
        <property>
            <name>dfs.client.failover.proxy.provider.mycluster</name>
            <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
        </property>
        <!-- Fencing: during a failover, make sure the previously active NameNode can no longer serve (one method per line) -->
        <property>
            <name>dfs.ha.fencing.methods</name>
            <value>
                sshfence
                shell(/bin/true)
            </value>
        </property>
        <property>
            <name>dfs.ha.fencing.ssh.private-key-files</name>
            <value>/home/hdfs/.ssh/id_rsa</value>
        </property>
    </configuration>

Note: `sshfence` logs in to the other NameNode host as the user running the failover (root in this walkthrough, see `fsOwner = root` in the format log below) unless another user is given as `sshfence([[username][:port]])`, so the private key above must be authorized for that login.
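All HA keys are built from the nameservice ID and the NameNode IDs, so a typo in one of them is easy to miss. The Python sketch below cross-checks them without contacting Hadoop; the helper names are hypothetical. It verifies that every ID in dfs.ha.namenodes.<nameservice> has an rpc-address and http-address, that the client failover proxy provider is set for the nameservice, that the qjournal URI lists an odd number of at least three JournalNodes (as the Apache HA guide recommends), and it reads dfs.ha.fencing.methods as one method per line, which is how Hadoop separates that value.

```python
import xml.etree.ElementTree as ET

# HA-related properties copied from the hdfs-site.xml above.
HDFS_SITE = """<configuration>
  <property><name>dfs.nameservices</name><value>mycluster</value></property>
  <property><name>dfs.ha.namenodes.mycluster</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn1</name><value>node1:8020</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.nn2</name><value>node3:8020</value></property>
  <property><name>dfs.namenode.http-address.mycluster.nn1</name><value>node1:50070</value></property>
  <property><name>dfs.namenode.http-address.mycluster.nn2</name><value>node3:50070</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://node1:8485;node2:8485;node3:8485/mycluster</value></property>
  <property><name>dfs.client.failover.proxy.provider.mycluster</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <property><name>dfs.ha.fencing.methods</name><value>
    sshfence
    shell(/bin/true)
  </value></property>
</configuration>"""


def load_props(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name", "").strip(): prop.findtext("value", "").strip()
        for prop in root.findall("property")
    }


def split_list(value):
    """Split a comma-separated Hadoop list value, dropping blanks."""
    return [item.strip() for item in value.split(",") if item.strip()]


def parse_qjournal(uri):
    """Split qjournal://host1:port;host2:port/journalId into (hosts, journal_id)."""
    prefix = "qjournal://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a qjournal URI: {uri!r}")
    hosts, _, journal_id = uri[len(prefix):].partition("/")
    return hosts.split(";"), journal_id


def fencing_methods(value):
    """Fencing methods are listed one per line; blank lines are ignored."""
    return [line.strip() for line in value.splitlines() if line.strip()]


def check_hdfs_ha(props):
    """Return a list of problems (an empty list means the checks passed)."""
    problems = []
    for ns in split_list(props.get("dfs.nameservices", "")):
        nn_ids = split_list(props.get(f"dfs.ha.namenodes.{ns}", ""))
        if len(nn_ids) < 2:
            problems.append(f"{ns}: expected at least two NameNode IDs, got {nn_ids}")
        for nn in nn_ids:
            for kind in ("rpc-address", "http-address"):
                key = f"dfs.namenode.{kind}.{ns}.{nn}"
                if key not in props:
                    problems.append(f"missing {key}")
        if f"dfs.client.failover.proxy.provider.{ns}" not in props:
            problems.append(f"missing dfs.client.failover.proxy.provider.{ns}")
    shared = props.get("dfs.namenode.shared.edits.dir")
    if shared is None:
        problems.append("missing dfs.namenode.shared.edits.dir")
    else:
        hosts, _ = parse_qjournal(shared)
        if len(hosts) < 3 or len(hosts) % 2 == 0:
            problems.append(f"expected an odd number (>= 3) of JournalNodes, got {len(hosts)}")
    if not fencing_methods(props.get("dfs.ha.fencing.methods", "")):
        problems.append("dfs.ha.fencing.methods is empty")
    return problems


if __name__ == "__main__":
    hdfs = load_props(HDFS_SITE)
    print(check_hdfs_ha(hdfs))  # []
    print(parse_qjournal(hdfs["dfs.namenode.shared.edits.dir"]))
    print(fencing_methods(hdfs["dfs.ha.fencing.methods"]))  # ['sshfence', 'shell(/bin/true)']
```

Deleting, say, the nn2 http-address from the sample makes `check_hdfs_ha` report exactly that key, which is the kind of slip this check is meant to catch before the configs are copied to the other nodes.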

Sync the configuration files to the other two nodes (node2 and node3)

    # for i in node{2..3};do scp core-site.xml hdfs-site.xml $i:/opt/module/hadoop-2.6.0/etc/hadoop/;done
    core-site.xml 100% 1367 1.8MB/s 00:00
    hdfs-site.xml 100% 2536 1.7MB/s 00:00

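Format the NameNode on node1. The JournalNodes must already be running at this point (`hadoop-daemon.sh start journalnode` on node1, node2 and node3): with a qjournal shared edits directory, formatting also initializes the edit log on the JournalNodes.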

    # hdfs namenode -format
    20/03/06 13:42:25 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG: host = node1/10.0.0.31
    STARTUP_MSG: args = [-format]
    STARTUP_MSG: version = 2.6.0
    STARTUP_MSG: classpath = /opt/module/hadoop-2.6.0/etc/hadoop:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-collections-3.2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jersey-json-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/hadoop-auth-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/hadoop-annotations-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-codec-1.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/httpcore-4.2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-digester-1.8.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jettison-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jetty-util-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/gson-2.2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/curator-client-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-lang-2.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/curator-framework-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/guava-11.0.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/junit-4.11.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/httpclient-4.2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jetty-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-httpclient-3.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/stax-api-1.0-2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/htrace-core-3.0.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-compress-1.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-logging-1.1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/hamcrest-core-1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-net-3.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/servlet-api-2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/xmlenc-0.52.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-cli-1.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jsr305-1.3.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-math3-3.1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/paranamer-2.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-el-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jsch-0.1.42.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/xz-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jsp-api-2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-configuration-1.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/zookeeper-3.4.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/avro-1.7.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/jets3t-0.9.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/lib/activation-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0-tests.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/common/hadoop-nfs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/guava-11.0.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-el-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-2.6.0-tests.jar:/opt/module/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-json-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-codec-1.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jettison-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-lang-2.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/guava-11.0.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jetty-6.1.26.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/guice-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-client-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/servlet-api-2.5.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/commons-cli-1.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/xz-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/lib/activation-1.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-api-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-client-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-registry-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/asm-3.2.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/javax.inject-1.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/junit-4.11.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/guice-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/xz-1.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0-tests.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar:/opt/module/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.0.jar:/opt/module/hadoop-2.6.0/contrib/capacity-scheduler/*.jar
    STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z
    STARTUP_MSG: java = 1.8.0_191
    ************************************************************/
    20/03/06 13:42:25 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
    20/03/06 13:42:25 INFO namenode.NameNode: createNameNode [-format]
    20/03/06 13:42:26 WARN common.Util: Path /opt/module/ha/dfs/name1 should be specified as a URI in configuration files. Please update hdfs configuration.
    20/03/06 13:42:26 WARN common.Util: Path /opt/module/ha/dfs/name2 should be specified as a URI in configuration files. Please update hdfs configuration.
    20/03/06 13:42:26 WARN common.Util: Path /opt/module/ha/dfs/name1 should be specified as a URI in configuration files. Please update hdfs configuration.
    20/03/06 13:42:26 WARN common.Util: Path /opt/module/ha/dfs/name2 should be specified as a URI in configuration files. Please update hdfs configuration.
    Formatting using clusterid: CID-0d3fcbd7-d8e0-469e-8ddf-6a2a06baed14
    20/03/06 13:42:26 INFO namenode.FSNamesystem: No KeyProvider found.
    20/03/06 13:42:26 INFO namenode.FSNamesystem: fsLock is fair:true
    20/03/06 13:42:26 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
    20/03/06 13:42:26 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: The block deletion will start around 2020 Mar 06 13:42:26
    20/03/06 13:42:26 INFO util.GSet: Computing capacity for map BlocksMap
    20/03/06 13:42:26 INFO util.GSet: VM type = 64-bit
    20/03/06 13:42:26 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
    20/03/06 13:42:26 INFO util.GSet: capacity = 2^21 = 2097152 entries
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: defaultReplication = 3
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: maxReplication = 512
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: minReplication = 1
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: encryptDataTransfer = false
    20/03/06 13:42:26 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
    20/03/06 13:42:26 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
    20/03/06 13:42:26 INFO namenode.FSNamesystem: supergroup = supergroup
    20/03/06 13:42:26 INFO namenode.FSNamesystem: isPermissionEnabled = true
    20/03/06 13:42:26 INFO namenode.FSNamesystem: Determined nameservice ID: mycluster
    20/03/06 13:42:26 INFO namenode.FSNamesystem: HA Enabled: true
    20/03/06 13:42:26 INFO namenode.FSNamesystem: Append Enabled: true
    20/03/06 13:42:27 INFO util.GSet: Computing capacity for map INodeMap
    20/03/06 13:42:27 INFO util.GSet: VM type = 64-bit
    20/03/06 13:42:27 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
    20/03/06 13:42:27 INFO util.GSet: capacity = 2^20 = 1048576 entries
    20/03/06 13:42:27 INFO namenode.NameNode: Caching file names occuring more than 10 times
    20/03/06 13:42:27 INFO util.GSet: Computing capacity for map cachedBlocks
    20/03/06 13:42:27 INFO util.GSet: VM type = 64-bit
    20/03/06 13:42:27 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
    20/03/06 13:42:27 INFO util.GSet: capacity = 2^18 = 262144 entries
    20/03/06 13:42:27 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
    20/03/06 13:42:27 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
    20/03/06 13:42:27 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
    20/03/06 13:42:27 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
    20/03/06 13:42:27 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
    20/03/06 13:42:27 INFO util.GSet: Computing capacity for map NameNodeRetryCache
    20/03/06 13:42:27 INFO util.GSet: VM type = 64-bit
    20/03/06 13:42:27 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
    20/03/06 13:42:27 INFO util.GSet: capacity = 2^15 = 32768 entries
    20/03/06 13:42:27 INFO namenode.NNConf: ACLs enabled? false
    20/03/06 13:42:27 INFO namenode.NNConf: XAttrs enabled? true
    20/03/06 13:42:27 INFO namenode.NNConf: Maximum size of an xattr: 16384
    20/03/06 13:42:28 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1693321058-10.0.0.31-1583473348048
    20/03/06 13:42:28 INFO common.Storage: Storage directory /opt/module/ha/dfs/name1 has been successfully formatted.
    20/03/06 13:42:28 INFO common.Storage: Storage directory /opt/module/ha/dfs/name2 has been successfully formatted.
    20/03/06 13:42:28 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    20/03/06 13:42:28 INFO util.ExitUtil: Exiting with status 0
    20/03/06 13:42:28 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at node1/10.0.0.31
    ************************************************************/
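The four `should be specified as a URI` warnings in this log do not stop the format, but they go away if dfs.namenode.name.dir is written with `file://` URIs, which is also the form Hadoop's own default uses (`file://${hadoop.tmp.dir}/dfs/name`); dfs.datanode.data.dir accepts the same form. A small Python sketch of the conversion, with a made-up helper name `to_file_uris`:

```python
from pathlib import PurePosixPath


def to_file_uris(dirs):
    """Rewrite a comma-separated list of absolute local paths as file:// URIs.

    Entries that already contain a scheme ("://") are kept unchanged;
    PurePosixPath.as_uri() raises ValueError for relative paths.
    """
    uris = []
    for entry in dirs.split(","):
        entry = entry.strip()
        if not entry:
            continue
        uris.append(entry if "://" in entry else PurePosixPath(entry).as_uri())
    return ",".join(uris)


if __name__ == "__main__":
    print(to_file_uris("/opt/module/ha/dfs/name1,/opt/module/ha/dfs/name2"))
    # file:///opt/module/ha/dfs/name1,file:///opt/module/ha/dfs/name2
```

The printed string can be pasted as the new `<value>` of dfs.namenode.name.dir; the directories themselves stay the same, so no re-format is needed just for this change.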

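Sync the metadata and block information to node3, which hosts the second NameNode (nn2). The command below copies all of /opt/module/ha, including the DataNode directories data1 and data2; copying only dfs/name1 and dfs/name2 is safer, because each DataNode's VERSION file stores per-node identifiers (datanodeUuid, storageID) that should not be duplicated on node3. Hadoop's own tool for this step is `hdfs namenode -bootstrapStandby`, run on node3 while nn1 is running.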

    # scp -r /opt/module/ha node3:/opt/module/
    VERSION 100% 200 275.2KB/s 00:00
    seen_txid 100% 2 2.1KB/s 00:00
    fsimage_0000000000000000000.md5 100% 62 67.1KB/s 00:00
    fsimage_0000000000000000000 100% 350 244.2KB/s 00:00
    VERSION 100% 200 136.6KB/s 00:00
    seen_txid 100% 2 2.3KB/s 00:00
    fsimage_0000000000000000000.md5 100% 62 76.9KB/s 00:00
    fsimage_0000000000000000000 100% 350 474.6KB/s 00:00
    VERSION 100% 229 379.6KB/s 00:00
    VERSION 100% 128 240.9KB/s 00:00
    dfsUsed 100% 18 41.3KB/s 00:00
    dncp_block_verification.log.curr 100% 0 0.0KB/s 00:00
    VERSION 100% 229 397.8KB/s 00:00
    VERSION 100% 128 253.7KB/s 00:00
    dfsUsed 100% 18 48.0KB/s 00:00
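After the copy, node3's metadata must belong to the same namespace as node1's. Each name directory holds a `current/VERSION` file whose namespaceID, clusterID and blockpoolID should be identical on both NameNodes (the clusterID and block pool ID also appear in the format log above). A minimal Python sketch for comparing two such files; the helper names and the sample namespaceID are illustrative, and the parser ignores Java-properties escaping:

```python
from pathlib import Path

# Fields that identify the namespace; both NameNodes must agree on them.
IDENTITY_KEYS = ("namespaceID", "clusterID", "blockpoolID")

# Illustrative VERSION contents: clusterID and blockpoolID come from the
# format log above; the namespaceID value is made up for this example.
SAMPLE_VERSION = """#(Hadoop writes a timestamp comment here)
namespaceID=123456789
clusterID=CID-0d3fcbd7-d8e0-469e-8ddf-6a2a06baed14
cTime=0
storageType=NAME_NODE
blockpoolID=BP-1693321058-10.0.0.31-1583473348048
"""


def parse_version(text):
    """Parse a VERSION file: key=value lines, '#' comment lines skipped."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def read_version(name_dir):
    """Read <name_dir>/current/VERSION from the local file system."""
    return (Path(name_dir) / "current" / "VERSION").read_text()


def identity_mismatches(text_a, text_b):
    """Return {key: (value_a, value_b)} for identity fields that differ."""
    a, b = parse_version(text_a), parse_version(text_b)
    return {k: (a.get(k), b.get(k)) for k in IDENTITY_KEYS if a.get(k) != b.get(k)}


if __name__ == "__main__":
    print(identity_mismatches(SAMPLE_VERSION, SAMPLE_VERSION))  # {}
```

On node1, `read_version("/opt/module/ha/dfs/name1")` returns the local file; node3's copy can be read the same way there (or with `ssh node3 cat /opt/module/ha/dfs/name1/current/VERSION`) and passed as the second argument. An empty dict means the two NameNodes agree.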

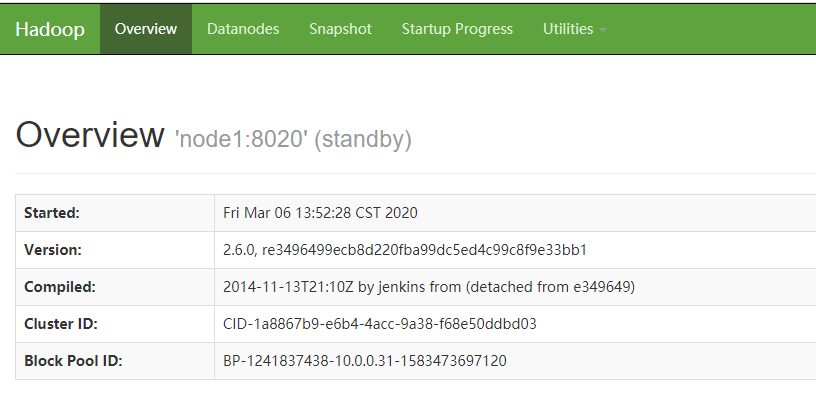

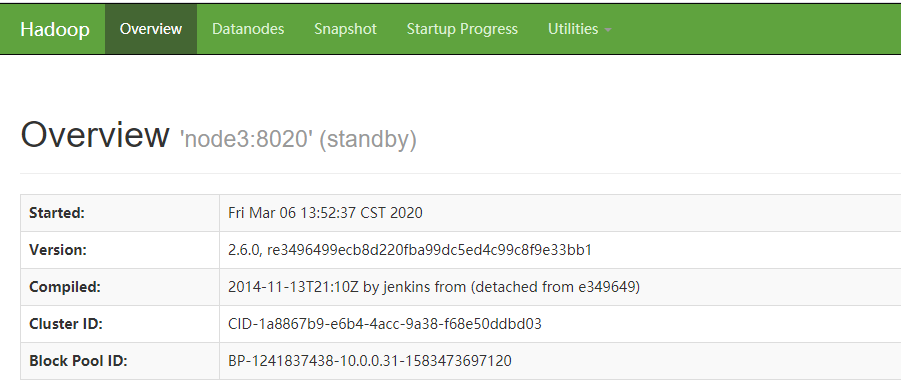

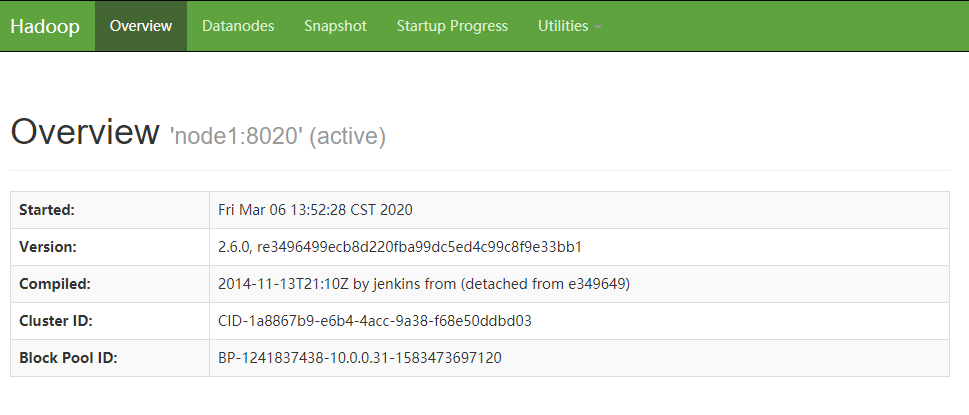

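Restart HDFS. Because automatic failover is not configured, both NameNodes come up in the standby state and cannot serve clients, so one of them has to be activated manually.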

    # stop-dfs.sh
    Stopping namenodes on [node1 node3]
    node3: no namenode to stop
    node1: no namenode to stop
    node2: stopping datanode
    node3: stopping datanode
    node1: stopping datanode
    Stopping journal nodes [node1 node2 node3]
    node3: stopping journalnode
    node1: stopping journalnode
    node2: stopping journalnode
    # start-dfs.sh
    Starting namenodes on [node1 node3]
    node3: starting namenode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-namenode-node3.out
    node1: starting namenode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-namenode-node1.out
    node3: starting datanode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-datanode-node3.out
    node2: starting datanode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-datanode-node2.out
    node1: starting datanode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-datanode-node1.out
    Starting journal nodes [node1 node2 node3]
    node2: starting journalnode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-journalnode-node2.out
    node3: starting journalnode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-journalnode-node3.out
    node1: starting journalnode, logging to /opt/module/hadoop-2.6.0/logs/hadoop-root-journalnode-node1.out

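Activate a NameNode (nn1 on node1). Note that `-transitionToActive` performs no fencing; for later switches between the NameNodes the Apache HA guide recommends `hdfs haadmin -failover` instead.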

    # hdfs haadmin -transitionToActive nn1
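After the transition, each NameNode's state can be queried with `hdfs haadmin -getServiceState nn1` (and `nn2`); nn1 should report `active` and nn2 `standby`. The Python sketch below wraps that command to assert that exactly one NameNode is active. The function names are hypothetical, and `get_state` is injectable so the check can be tried without a cluster:

```python
import shutil
import subprocess


def haadmin_state(nn_id):
    """Return 'active' or 'standby' as reported by `hdfs haadmin -getServiceState`."""
    result = subprocess.run(
        ["hdfs", "haadmin", "-getServiceState", nn_id],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def single_active(nn_ids, get_state=haadmin_state):
    """Return the ID of the only active NameNode; raise if zero or several are active."""
    states = {nn: get_state(nn) for nn in nn_ids}
    active = [nn for nn, state in states.items() if state == "active"]
    if len(active) != 1:
        raise RuntimeError(f"expected exactly one active NameNode, got {states}")
    return active[0]


if __name__ == "__main__":
    # Only query the real cluster when the hdfs CLI is available on PATH.
    if shutil.which("hdfs"):
        print(single_active(["nn1", "nn2"]))
    else:
        print("hdfs CLI not found; run this on a cluster node")
```

With the cluster in the state reached above this should return `nn1`; a `RuntimeError` listing both states means neither NameNode (or both) is active.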

posted on 2020-03-07 11:11 hopeless-dream