Adding the Simplest All-Pass Layer, AllPassLayer, to Caffe

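Following Zhao Yongke's blog, we implement a new Layer here named AllPassLayer. As the name suggests, it is an all-pass Layer. The term is borrowed from the all-pass filter in signal processing, which passes every frequency with unchanged amplitude; this layer goes one step further and copies its input to its output unchanged.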

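This Layer is practically useless on its own, but adding your own processing on top of it is very easy. It is also handy for experiments: its Forward/Backward functions are so simple that you need no background in advanced mathematics or differentiation to follow them. You can insert it anywhere in an existing network without affecting training or prediction accuracy.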

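First, just like any ordinary Layer class, split your implementation into a declaration part and an implementation part, placed in a .hpp file and in .cpp/.cu files respectively. Give the Layer a new name that distinguishes it from the stock layers. Put the .hpp file in $CAFFE_ROOT/include/caffe/layers/ and the .cpp and .cu files in $CAFFE_ROOT/src/caffe/layers/. Then, when you run make in $CAFFE_ROOT, these files are added to the build automatically, sparing you the tedium of setting compile options by hand.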

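Second, add a new LayerParameter field in $CAFFE_ROOT/src/caffe/proto/caffe.proto. This lets you describe the new Layer in train.prototxt, test.prototxt or deploy.prototxt, which makes it easy to change the network structure or swap in the new Layer for another Layer with the same function.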

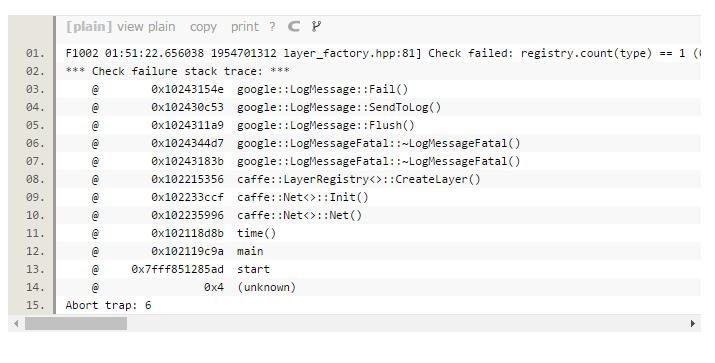

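Finally, and most easily overlooked: register the new Layer's creator function with the Layer factory (in this example, the REGISTER_LAYER_CLASS(AllPass) line in the .cpp file). Otherwise you may get the following error at runtime: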

First, the header file (all_pass_layer.hpp):

```cpp
#ifndef CAFFE_ALL_PASS_LAYER_HPP_
#define CAFFE_ALL_PASS_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"
#include "caffe/layer.hpp"
#include "caffe/proto/caffe.pb.h"

#include "caffe/layers/neuron_layer.hpp"

namespace caffe {

// All-pass layer: copies bottom[0] to top[0] unchanged. Deriving from
// NeuronLayer gives one bottom, one top, and a top reshaped like the bottom.
template <typename Dtype>
class AllPassLayer : public NeuronLayer<Dtype> {
 public:
  explicit AllPassLayer(const LayerParameter& param)
      : NeuronLayer<Dtype>(param) {}

  // By convention this matches the name registered with REGISTER_LAYER_CLASS
  // and written in the prototxt `type:` field.
  virtual inline const char* type() const { return "AllPass"; }

 protected:
  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);
  virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);
};

}  // namespace caffe

#endif  // CAFFE_ALL_PASS_LAYER_HPP_
```

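Next, the source files (all_pass_layer.cpp and all_pass_layer.cu). At the time I didn't know the difference between the two, so I simply copy-pasted and both files had identical content. For reference: in Caffe the .cpp file holds the CPU implementation, while the .cu file holds the CUDA implementations of Forward_gpu/Backward_gpu and is only compiled when CPU_ONLY is off. In a CPU_ONLY build the .cu file is therefore ignored; in a GPU build an identical copy would duplicate the definitions and the layer registration, so it should not be reused as-is.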

```cpp
#include <algorithm>
#include <iostream>
#include <vector>

#include "caffe/layers/all_pass_layer.hpp"

// Debug helper: print one value per line to stdout.
#define DEBUG_AP(str) std::cout << str << std::endl

namespace caffe {

template <typename Dtype>
void AllPassLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,
    const vector<Blob<Dtype>*>& top) {
  const Dtype* bottom_data = bottom[0]->cpu_data();  // cpu_data(): read-only access to CPU data
  Dtype* top_data = top[0]->mutable_cpu_data();      // mutable_cpu_data(): read-write access to CPU data
  const int count = bottom[0]->count();              // total number of elements in the Blob
  for (int i = 0; i < count; ++i) {
    top_data[i] = bottom_data[i];  // plain pass-through: all-pass
  }
  DEBUG_AP("Here is All Pass Layer, forwarding.");
  DEBUG_AP(this->layer_param_.all_pass_param().key());  // read the preset value from the prototxt
}

template <typename Dtype>
void AllPassLayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,
    const vector<bool>& propagate_down,
    const vector<Blob<Dtype>*>& bottom) {
  // propagate_down[0] is true when the gradient must be propagated to
  // bottom[0]. This layer has no weights or biases of its own.
  if (propagate_down[0]) {
    const Dtype* top_diff = top[0]->cpu_diff();
    Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();
    const int count = bottom[0]->count();
    for (int i = 0; i < count; ++i) {
      bottom_diff[i] = top_diff[i];  // d(top)/d(bottom) = 1: the gradient passes through unchanged
    }
  }
  DEBUG_AP("Here is All Pass Layer, backwarding.");
  DEBUG_AP(this->layer_param_.all_pass_param().key());
}

#ifdef CPU_ONLY
// CPU_ONLY build: define Forward_gpu/Backward_gpu as stubs that report NO_GPU.
STUB_GPU(AllPassLayer);
#endif

INSTANTIATE_CLASS(AllPassLayer);
REGISTER_LAYER_CLASS(AllPass);

}  // namespace caffe
```
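The Forward_cpu/Backward_cpu logic above can be tried without building Caffe. In this sketch, std::vector<float> stands in for a Blob's data and diff arrays (an assumption for illustration, not Caffe's API), and std::copy replaces the element-wise loops; inside Caffe, caffe_copy(count, src, dst) from caffe/util/math_functions.hpp should do the same job.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Forward: top = bottom, element by element.
void AllPassForward(const std::vector<float>& bottom_data,
                    std::vector<float>& top_data) {
  // Give top the bottom's size, as NeuronLayer's Reshape does for the real Blob.
  top_data.resize(bottom_data.size());
  std::copy(bottom_data.begin(), bottom_data.end(), top_data.begin());
}

// Backward: d(loss)/d(bottom) = d(loss)/d(top), since d(top)/d(bottom) = 1.
// The copy happens only when the bottom gradient is requested, mirroring
// the propagate_down[0] check in Backward_cpu.
void AllPassBackward(const std::vector<float>& top_diff,
                     bool propagate_down,
                     std::vector<float>& bottom_diff) {
  if (!propagate_down) return;
  assert(bottom_diff.size() == top_diff.size());
  std::copy(top_diff.begin(), top_diff.end(), bottom_diff.begin());
}
```

With propagate_down set to false, bottom_diff is left untouched, which is exactly what happens in the benchmark below where the layer needs no backward computation.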

For lack of time, I did not implement the GPU-mode forward and backward, so this example only supports CPU_ONLY mode.
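Caffe's Layer base class (include/caffe/layer.hpp) gives Forward_gpu and Backward_gpu default implementations that simply call the CPU versions. So, instead of declaring the GPU methods and leaving them unimplemented, one option is to not declare them at all; the layer should then also run in a GPU build, just executing on the CPU. The mock below shows that dispatch pattern with hypothetical class names; it is not Caffe code.

```cpp
#include <cassert>
#include <string>

// Hypothetical base class mimicking the fallback pattern in Caffe's Layer.
class MockLayer {
 public:
  virtual ~MockLayer() = default;
  // Picks the CPU or GPU hook, like Layer::Forward does based on Caffe::mode().
  std::string Forward(bool use_gpu) {
    return use_gpu ? Forward_gpu() : Forward_cpu();
  }

 protected:
  virtual std::string Forward_cpu() = 0;
  // Default GPU hook: fall back to the CPU implementation.
  virtual std::string Forward_gpu() { return Forward_cpu(); }
};

// Overrides only the CPU method; the GPU path uses MockLayer's fallback.
class MockAllPassLayer : public MockLayer {
 protected:
  std::string Forward_cpu() override { return "cpu forward"; }
};
```

Calling Forward(true) on a MockAllPassLayer still returns "cpu forward", because the inherited GPU hook forwards to the CPU override.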

Edit caffe.proto, find the LayerParameter message, and add one field:

```proto
message LayerParameter {
  optional string name = 1; // the layer name
  optional string type = 2; // the layer type
  repeated string bottom = 3; // the name of each bottom blob
  repeated string top = 4; // the name of each top blob

  // The train / test phase for computation.
  optional Phase phase = 10;

  // The amount of weight to assign each top blob in the objective.
  // Each layer assigns a default value, usually of either 0 or 1,
  // to each top blob.
  repeated float loss_weight = 5;

  // Specifies training parameters (multipliers on global learning constants,
  // and the name and other settings used for weight sharing).
  repeated ParamSpec param = 6;

  // The blobs containing the numeric parameters of the layer.
  repeated BlobProto blobs = 7;

  // Specifies on which bottoms the backpropagation should be skipped.
  // The size must be either 0 or equal to the number of bottoms.
  repeated bool propagate_down = 11;

  // Rules controlling whether and when a layer is included in the network,
  // based on the current NetState. You may specify a non-zero number of rules
  // to include OR exclude, but not both. If no include or exclude rules are
  // specified, the layer is always included. If the current NetState meets
  // ANY (i.e., one or more) of the specified rules, the layer is
  // included/excluded.
  repeated NetStateRule include = 8;
  repeated NetStateRule exclude = 9;

  // Parameters for data pre-processing.
  optional TransformationParameter transform_param = 100;

  // Parameters shared by loss layers.
  optional LossParameter loss_param = 101;

  // Layer type-specific parameters.
  //
  // Note: certain layers may have more than one computational engine
  // for their implementation. These layers include an Engine type and
  // engine parameter for selecting the implementation.
  // The default for the engine is set by the ENGINE switch at compile-time.
  optional AccuracyParameter accuracy_param = 102;
  optional ArgMaxParameter argmax_param = 103;
  optional BatchNormParameter batch_norm_param = 139;
  optional BiasParameter bias_param = 141;
  optional ConcatParameter concat_param = 104;
  optional ContrastiveLossParameter contrastive_loss_param = 105;
  optional ConvolutionParameter convolution_param = 106;
  optional CropParameter crop_param = 144;
  optional DataParameter data_param = 107;
  optional DropoutParameter dropout_param = 108;
  optional DummyDataParameter dummy_data_param = 109;
  optional EltwiseParameter eltwise_param = 110;
  optional ELUParameter elu_param = 140;
  optional EmbedParameter embed_param = 137;
  optional ExpParameter exp_param = 111;
  optional FlattenParameter flatten_param = 135;
  optional HDF5DataParameter hdf5_data_param = 112;
  optional HDF5OutputParameter hdf5_output_param = 113;
  optional HingeLossParameter hinge_loss_param = 114;
  optional ImageDataParameter image_data_param = 115;
  optional InfogainLossParameter infogain_loss_param = 116;
  optional InnerProductParameter inner_product_param = 117;
  optional InputParameter input_param = 143;
  optional LogParameter log_param = 134;
  optional LRNParameter lrn_param = 118;
  optional MemoryDataParameter memory_data_param = 119;
  optional MVNParameter mvn_param = 120;
  optional PoolingParameter pooling_param = 121;
  optional PowerParameter power_param = 122;
  optional PReLUParameter prelu_param = 131;
  optional PythonParameter python_param = 130;
  optional ReductionParameter reduction_param = 136;
  optional ReLUParameter relu_param = 123;
  optional ReshapeParameter reshape_param = 133;
  optional ScaleParameter scale_param = 142;
  optional SigmoidParameter sigmoid_param = 124;
  optional SoftmaxParameter softmax_param = 125;
  optional SPPParameter spp_param = 132;
  optional SliceParameter slice_param = 126;
  optional TanHParameter tanh_param = 127;
  optional ThresholdParameter threshold_param = 128;
  optional TileParameter tile_param = 138;
  optional WindowDataParameter window_data_param = 129;
  // New: parameters for AllPassLayer.
  optional AllPassParameter all_pass_param = 155;
}
```

Note that the new field number must not duplicate the number of any existing field.

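Still in caffe.proto, add the AllPassParameter message declaration; its position does not matter. I defined a single parameter, which can be used to read a preset value from the prototxt.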

```proto
message AllPassParameter {
  optional float key = 1 [default = 0];
}
```

In the .cpp file, `this->layer_param_.all_pass_param().key()` reads this preset value from the prototxt.
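For a proto2 `optional` field, protoc generates C++ accessors such as `key()`, `has_key()` and `set_key()`, and `key()` returns the declared default when the field is absent. The hand-written classes below are hypothetical stand-ins for the generated code, not protoc output; they illustrate that a prototxt without an `all_pass_param` block would make the layer print 0.

```cpp
#include <cassert>

// Stand-in for the accessors generated for:
//   optional float key = 1 [default = 0];
class AllPassParameterSketch {
 public:
  float key() const { return has_key_ ? key_ : 0.0f; }  // falls back to [default = 0]
  bool has_key() const { return has_key_; }
  void set_key(float value) {
    key_ = value;
    has_key_ = true;
  }

 private:
  float key_ = 0.0f;
  bool has_key_ = false;
};

// Stand-in for the all_pass_param accessors on LayerParameter.
class LayerParameterSketch {
 public:
  const AllPassParameterSketch& all_pass_param() const { return all_pass_param_; }
  AllPassParameterSketch* mutable_all_pass_param() { return &all_pass_param_; }

 private:
  AllPassParameterSketch all_pass_param_;
};
```

Parsing `all_pass_param { key: 12.88 }` from the prototxt corresponds to calling `mutable_all_pass_param()->set_key(12.88f)` here.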

Run make clean in $CAFFE_ROOT, then run make all again. To get a clean build on the first try, keep your code tidy and stay alert to common mistakes.

Everything is in place except the prototxt.

That's easy. Let's write the simplest possible deploy.prototxt, with no Data layer and no softmax layer, just for fun.

```
name: "AllPassTest"
layer {
  name: "data"
  type: "Input"
  top: "data"
  input_param { shape: { dim: 10 dim: 3 dim: 227 dim: 227 } }
}
layer {
  name: "ap"
  type: "AllPass"
  bottom: "data"
  top: "conv1"
  all_pass_param {
    key: 12.88
  }
}
```

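Note that the value after `type:` must be the class name declared in the .hpp with the trailing `Layer` removed, i.e. the name passed to REGISTER_LAYER_CLASS (here `AllPass`).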
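Why `AllPass` and not `AllPassLayer`? REGISTER_LAYER_CLASS(AllPass) stringizes its argument to get the registry key "AllPass" and pastes `Layer` onto it to name the class to construct. The simplified registry below uses hypothetical names (REGISTER_DEMO_LAYER, Registry, CreateLayer) and only illustrates that mechanism; Caffe's layer_factory.hpp differs in detail.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

struct LayerBase {
  virtual ~LayerBase() = default;
  virtual const char* type() const = 0;
};

using Creator = std::function<std::unique_ptr<LayerBase>()>;

// Function-local static avoids static-initialization-order problems.
std::map<std::string, Creator>& Registry() {
  static std::map<std::string, Creator> registry;
  return registry;
}

struct Registerer {
  Registerer(const std::string& type, Creator creator) {
    Registry()[type] = std::move(creator);
  }
};

// #type turns AllPass into "AllPass"; type##Layer pastes it into AllPassLayer.
#define REGISTER_DEMO_LAYER(type)                           \
  static Registerer g_registerer_##type(#type, [] {         \
    return std::unique_ptr<LayerBase>(new type##Layer());   \
  })

// Stand-in for the real AllPassLayer.
struct AllPassLayer : LayerBase {
  const char* type() const override { return "AllPass"; }
};
REGISTER_DEMO_LAYER(AllPass);

// Looks up the `type:` string from the prototxt; nullptr if unknown
// (the real factory stops with an error instead).
std::unique_ptr<LayerBase> CreateLayer(const std::string& type) {
  auto it = Registry().find(type);
  if (it == Registry().end()) return nullptr;
  return it->second();
}
```

`CreateLayer("AllPass")` finds the creator, while `CreateLayer("AllPassLayer")` finds nothing, which is why writing the full class name in the prototxt fails.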

The prototxt above sets key to 12.88. Yes, you thought of Liu Xiang (his 12.88 s 110 m hurdles world record), didn't you?

To check that the Layer can be created and can run forward and backward, we run the caffe time command on the prototxt we just wrote:

```
$ ./build/tools/caffe.bin time -model deploy.prototxt
I1002 02:03:41.667682 1954701312 caffe.cpp:312] Use CPU.
I1002 02:03:41.671360 1954701312 net.cpp:49] Initializing net from parameters:
name: "AllPassTest"
state {
  phase: TRAIN
}
layer {
  name: "data"
  type: "Input"
  top: "data"
  input_param {
    shape {
      dim: 10
      dim: 3
      dim: 227
      dim: 227
    }
  }
}
layer {
  name: "ap"
  type: "AllPass"
  bottom: "data"
  top: "conv1"
  all_pass_param {
    key: 12.88
  }
}
I1002 02:03:41.671463 1954701312 layer_factory.hpp:77] Creating layer data
I1002 02:03:41.671484 1954701312 net.cpp:91] Creating Layer data
I1002 02:03:41.671499 1954701312 net.cpp:399] data -> data
I1002 02:03:41.671555 1954701312 net.cpp:141] Setting up data
I1002 02:03:41.671566 1954701312 net.cpp:148] Top shape: 10 3 227 227 (1545870)
I1002 02:03:41.671592 1954701312 net.cpp:156] Memory required for data: 6183480
I1002 02:03:41.671605 1954701312 layer_factory.hpp:77] Creating layer ap
I1002 02:03:41.671620 1954701312 net.cpp:91] Creating Layer ap
I1002 02:03:41.671630 1954701312 net.cpp:425] ap <- data
I1002 02:03:41.671644 1954701312 net.cpp:399] ap -> conv1
I1002 02:03:41.671663 1954701312 net.cpp:141] Setting up ap
I1002 02:03:41.671674 1954701312 net.cpp:148] Top shape: 10 3 227 227 (1545870)
I1002 02:03:41.671685 1954701312 net.cpp:156] Memory required for data: 12366960
I1002 02:03:41.671695 1954701312 net.cpp:219] ap does not need backward computation.
I1002 02:03:41.671705 1954701312 net.cpp:219] data does not need backward computation.
I1002 02:03:41.671710 1954701312 net.cpp:261] This network produces output conv1
I1002 02:03:41.671720 1954701312 net.cpp:274] Network initialization done.
I1002 02:03:41.671746 1954701312 caffe.cpp:320] Performing Forward
Here is All Pass Layer, forwarding.
12.88
I1002 02:03:41.679689 1954701312 caffe.cpp:325] Initial loss: 0
I1002 02:03:41.679714 1954701312 caffe.cpp:326] Performing Backward
I1002 02:03:41.679738 1954701312 caffe.cpp:334] *** Benchmark begins ***
I1002 02:03:41.679746 1954701312 caffe.cpp:335] Testing for 50 iterations.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.681139 1954701312 caffe.cpp:363] Iteration: 1 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.682394 1954701312 caffe.cpp:363] Iteration: 2 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.683653 1954701312 caffe.cpp:363] Iteration: 3 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.685096 1954701312 caffe.cpp:363] Iteration: 4 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.686326 1954701312 caffe.cpp:363] Iteration: 5 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.687713 1954701312 caffe.cpp:363] Iteration: 6 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.689038 1954701312 caffe.cpp:363] Iteration: 7 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.690251 1954701312 caffe.cpp:363] Iteration: 8 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.691548 1954701312 caffe.cpp:363] Iteration: 9 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.692805 1954701312 caffe.cpp:363] Iteration: 10 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.694056 1954701312 caffe.cpp:363] Iteration: 11 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.695264 1954701312 caffe.cpp:363] Iteration: 12 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.696761 1954701312 caffe.cpp:363] Iteration: 13 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.698225 1954701312 caffe.cpp:363] Iteration: 14 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.699653 1954701312 caffe.cpp:363] Iteration: 15 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.700945 1954701312 caffe.cpp:363] Iteration: 16 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.702761 1954701312 caffe.cpp:363] Iteration: 17 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.704056 1954701312 caffe.cpp:363] Iteration: 18 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.706471 1954701312 caffe.cpp:363] Iteration: 19 forward-backward time: 2 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.708784 1954701312 caffe.cpp:363] Iteration: 20 forward-backward time: 2 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.710043 1954701312 caffe.cpp:363] Iteration: 21 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.711272 1954701312 caffe.cpp:363] Iteration: 22 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.712528 1954701312 caffe.cpp:363] Iteration: 23 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.713964 1954701312 caffe.cpp:363] Iteration: 24 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.715248 1954701312 caffe.cpp:363] Iteration: 25 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.716487 1954701312 caffe.cpp:363] Iteration: 26 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.717725 1954701312 caffe.cpp:363] Iteration: 27 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.718962 1954701312 caffe.cpp:363] Iteration: 28 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.720289 1954701312 caffe.cpp:363] Iteration: 29 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.721837 1954701312 caffe.cpp:363] Iteration: 30 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.723042 1954701312 caffe.cpp:363] Iteration: 31 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.724261 1954701312 caffe.cpp:363] Iteration: 32 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.725587 1954701312 caffe.cpp:363] Iteration: 33 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.726771 1954701312 caffe.cpp:363] Iteration: 34 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.728013 1954701312 caffe.cpp:363] Iteration: 35 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.729249 1954701312 caffe.cpp:363] Iteration: 36 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.730716 1954701312 caffe.cpp:363] Iteration: 37 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.732275 1954701312 caffe.cpp:363] Iteration: 38 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.733809 1954701312 caffe.cpp:363] Iteration: 39 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.735049 1954701312 caffe.cpp:363] Iteration: 40 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.737144 1954701312 caffe.cpp:363] Iteration: 41 forward-backward time: 2 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.739090 1954701312 caffe.cpp:363] Iteration: 42 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.741575 1954701312 caffe.cpp:363] Iteration: 43 forward-backward time: 2 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.743450 1954701312 caffe.cpp:363] Iteration: 44 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.744732 1954701312 caffe.cpp:363] Iteration: 45 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.745970 1954701312 caffe.cpp:363] Iteration: 46 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.747185 1954701312 caffe.cpp:363] Iteration: 47 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.748430 1954701312 caffe.cpp:363] Iteration: 48 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.749826 1954701312 caffe.cpp:363] Iteration: 49 forward-backward time: 1 ms.
Here is All Pass Layer, forwarding.
12.88
Here is All Pass Layer, backwarding.
12.88
I1002 02:03:41.751124 1954701312 caffe.cpp:363] Iteration: 50 forward-backward time: 1 ms.
I1002 02:03:41.751147 1954701312 caffe.cpp:366] Average time per layer:
I1002 02:03:41.751157 1954701312 caffe.cpp:369] data forward: 0.00108 ms.
I1002 02:03:41.751183 1954701312 caffe.cpp:372] data backward: 0.001 ms.
I1002 02:03:41.751194 1954701312 caffe.cpp:369] ap forward: 1.37884 ms.
I1002 02:03:41.751205 1954701312 caffe.cpp:372] ap backward: 0.01156 ms.
I1002 02:03:41.751220 1954701312 caffe.cpp:377] Average Forward pass: 1.38646 ms.
I1002 02:03:41.751231 1954701312 caffe.cpp:379] Average Backward pass: 0.0144 ms.
I1002 02:03:41.751240 1954701312 caffe.cpp:381] Average Forward-Backward: 1.42 ms.
I1002 02:03:41.751250 1954701312 caffe.cpp:383] Total Time: 71 ms.
I1002 02:03:41.751260 1954701312 caffe.cpp:384] *** Benchmark ends ***
```

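This shows that the Layer can be created, loads its preset parameter, and runs its forward and backward functions. Because the log reports "ap does not need backward computation", propagate_down[0] is false during the benchmark, so Backward_cpu only prints its debug messages and skips the gradient copy.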
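The sizes in the log follow directly from the input shape: 10 × 3 × 227 × 227 = 1,545,870 elements, and at 4 bytes per float that is 6,183,480 bytes. Because ap writes to a separate top blob (conv1) instead of working in place, the running total doubles to 12,366,960. The helpers below (hypothetical names, assuming float blobs and that the reported figure is the sum of all top-blob counts times sizeof(float)) reproduce those numbers.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Number of elements in a blob of the given shape, like Blob::count().
std::int64_t BlobCount(const std::vector<int>& shape) {
  std::int64_t count = 1;
  for (int dim : shape) {
    assert(dim >= 0);
    count *= dim;
  }
  return count;
}

// Running total of top-blob data in bytes, assuming 4-byte float elements.
std::int64_t DataBytes(const std::vector<std::vector<int>>& top_shapes) {
  std::int64_t bytes = 0;
  for (const auto& shape : top_shapes) {
    bytes += BlobCount(shape) * static_cast<std::int64_t>(sizeof(float));
  }
  return bytes;
}
```

Calling `DataBytes({{10, 3, 227, 227}})` gives the figure printed after the data layer, and adding a second shape for conv1 gives the figure printed after ap.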

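In practice, an algorithmic Layer also needs test cases to verify that it works correctly. Since we chose an extremely simple all-pass Layer, this step can be skipped. I'm being a little lazy here, and you save some reading time.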
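For reference, a Layer test usually compares the analytic gradient from Backward with finite differences of Forward; Caffe's own layer tests do this with the GradientChecker helper (include/caffe/test/test_gradient_check_util.hpp). The standalone sketch below applies the same idea to an all-pass layer with a hypothetical weighted-sum loss; it is not Caffe's test code, and the function names are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// All-pass forward: top = bottom.
std::vector<double> Forward(const std::vector<double>& bottom) { return bottom; }

// All-pass backward: bottom_diff = top_diff.
std::vector<double> Backward(const std::vector<double>& top_diff) { return top_diff; }

// Assumed objective: loss = sum_i w[i] * top[i], so d(loss)/d(top[i]) = w[i].
double Loss(const std::vector<double>& bottom, const std::vector<double>& w) {
  std::vector<double> top = Forward(bottom);
  double loss = 0.0;
  for (std::size_t i = 0; i < top.size(); ++i) loss += w[i] * top[i];
  return loss;
}

// True if the analytic gradient matches central differences within tol.
bool CheckGradient(const std::vector<double>& bottom, const std::vector<double>& w,
                   double step = 1e-3, double tol = 1e-6) {
  assert(bottom.size() == w.size());
  std::vector<double> analytic = Backward(w);  // top_diff = w
  for (std::size_t i = 0; i < bottom.size(); ++i) {
    std::vector<double> plus = bottom, minus = bottom;
    plus[i] += step;
    minus[i] -= step;
    double numeric = (Loss(plus, w) - Loss(minus, w)) / (2.0 * step);
    if (std::fabs(numeric - analytic[i]) > tol) return false;
  }
  return true;
}
```

A buggy Backward (for example one that returned zeros) would make CheckGradient return false, which is the kind of mistake such a test is meant to catch.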

Addendum: after make all, you also need to rebuild the Python interface with make pycaffe; then proceed as before.

Reference: http://blog.csdn.net/kkk584520/article/details/52721838
