A Deep Dive into the TCP Three-Way Handshake and Its Source Code

The Three-Way Handshake

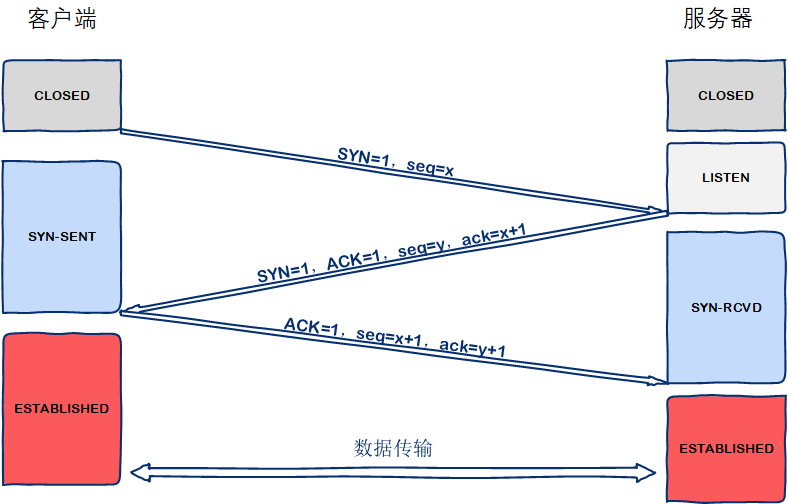

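Most readers probably already know how the TCP three-way handshake works. The figure above shows the sequence of segments and the resulting client and server states.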

For an explanation of each client and server state, see my earlier post "A Detailed Explanation of the netstat Command on Linux".

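From the application's point of view, the three-way handshake is the work the kernel does when the client's connect() and the server's accept() establish a connection. In the kernel's socket interface layer, these two socket API calls are handled by sys_connect and sys_accept (__sys_connect and __sys_accept4 in the kernel version quoted below). Both dispatch through the function pointers sock->ops->connect and sock->ops->accept. For an AF_INET stream socket these point to inet_stream_connect and inet_accept, which in turn call the transport protocol's sk->sk_prot->connect and sk->sk_prot->accept.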

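In the source, the struct proto tcp_prot variable in net/ipv4/tcp_ipv4.c lists the interface functions of the TCP stack and shows the actual call targets. For TCP, sk_prot->connect and sk_prot->accept are tcp_v4_connect and inet_csk_accept: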

```c
struct proto tcp_prot = {
    .name = "TCP",
    .owner = THIS_MODULE,
    .close = tcp_close,
    .pre_connect = tcp_v4_pre_connect,
    .connect = tcp_v4_connect,
    .disconnect = tcp_disconnect,
    .accept = inet_csk_accept,
    .ioctl = tcp_ioctl,
    .init = tcp_v4_init_sock,
    .destroy = tcp_v4_destroy_sock,
    .shutdown = tcp_shutdown,
    .setsockopt = tcp_setsockopt,
    .getsockopt = tcp_getsockopt,
    .keepalive = tcp_set_keepalive,
    .recvmsg = tcp_recvmsg,
    .sendmsg = tcp_sendmsg,
    .sendpage = tcp_sendpage,
    .backlog_rcv = tcp_v4_do_rcv,
    .release_cb = tcp_release_cb,
    .hash = inet_hash,
    .unhash = inet_unhash,
    .get_port = inet_csk_get_port,
    .enter_memory_pressure = tcp_enter_memory_pressure,
    .leave_memory_pressure = tcp_leave_memory_pressure,
    .stream_memory_free = tcp_stream_memory_free,
    .sockets_allocated = &tcp_sockets_allocated,
    .orphan_count = &tcp_orphan_count,
    .memory_allocated = &tcp_memory_allocated,
    .memory_pressure = &tcp_memory_pressure,
    .sysctl_mem = sysctl_tcp_mem,
    .sysctl_wmem_offset = offsetof(struct net, ipv4.sysctl_tcp_wmem),
    .sysctl_rmem_offset = offsetof(struct net, ipv4.sysctl_tcp_rmem),
    .max_header = MAX_TCP_HEADER,
    .obj_size = sizeof(struct tcp_sock),
    .slab_flags = SLAB_TYPESAFE_BY_RCU,
    .twsk_prot = &tcp_timewait_sock_ops,
    .rsk_prot = &tcp_request_sock_ops,
    .h.hashinfo = &tcp_hashinfo,
    .no_autobind = true,
#ifdef CONFIG_COMPAT
    .compat_setsockopt = compat_tcp_setsockopt,
    .compat_getsockopt = compat_tcp_getsockopt,
#endif
    .diag_destroy = tcp_abort,
};
```
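The two levels of function pointers can be hard to picture from the struct alone. The sketch below models them with hypothetical, heavily simplified types (`toy_proto_ops`, `toy_proto`, `toy_sock` and the `toy_*` functions are made up for illustration and are not kernel APIs):

- a socket-layer ops table, which plays the role of `inet_stream_ops`, forwards `connect` to a transport-layer table;
- the transport-layer table plays the role of `tcp_prot`.

```c
#include <assert.h>
#include <string.h>

struct toy_sock;

/* Hypothetical stand-in for struct proto (transport layer, e.g. tcp_prot). */
struct toy_proto {
    const char *name;
    int (*connect)(struct toy_sock *sk);
};

/* Hypothetical stand-in for struct proto_ops (socket layer, e.g. inet_stream_ops). */
struct toy_proto_ops {
    int (*connect)(struct toy_sock *sk);
};

struct toy_sock {
    const struct toy_proto_ops *ops;   /* like socket->ops */
    const struct toy_proto *sk_prot;   /* like sk->sk_prot */
    char trace[64];                    /* records the call path for inspection */
};

/* Plays the role of tcp_v4_connect(). */
static int toy_tcp_v4_connect(struct toy_sock *sk)
{
    strcat(sk->trace, "->tcp_v4_connect");
    return 0;
}

/* Plays the role of inet_stream_connect(): generic, just forwards to the protocol. */
static int toy_inet_stream_connect(struct toy_sock *sk)
{
    strcat(sk->trace, "inet_stream_connect");
    return sk->sk_prot->connect(sk);
}

static const struct toy_proto toy_tcp_prot = { "TCP", toy_tcp_v4_connect };
static const struct toy_proto_ops toy_inet_stream_ops = { toy_inet_stream_connect };

/* Plays the role of __sys_connect() after the socket has been looked up. */
static int toy_sys_connect(struct toy_sock *sk)
{
    sk->trace[0] = '\0';
    return sk->ops->connect(sk);
}
```

Swapping `sk_prot` for a different table would reroute the call without touching the socket layer, which is the point of this design.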

Walking Through the Handshake in the Source

The earlier gdb tracing showed that the socket system calls are implemented in the file net/socket.c, where each socket interface function is defined through a SYSCALL_DEFINE macro. The main definitions are:

```c
SYSCALL_DEFINE3(socket, int, family, int, type, int, protocol)
{
    return __sys_socket(family, type, protocol);
}

SYSCALL_DEFINE3(bind, int, fd, struct sockaddr __user *, umyaddr, int, addrlen)
{
    return __sys_bind(fd, umyaddr, addrlen);
}

SYSCALL_DEFINE2(listen, int, fd, int, backlog)
{
    return __sys_listen(fd, backlog);
}

SYSCALL_DEFINE3(accept, int, fd, struct sockaddr __user *, upeer_sockaddr,
                int __user *, upeer_addrlen)
{
    return __sys_accept4(fd, upeer_sockaddr, upeer_addrlen, 0);
}

SYSCALL_DEFINE3(connect, int, fd, struct sockaddr __user *, uservaddr,
                int, addrlen)
{
    return __sys_connect(fd, uservaddr, addrlen);
}

SYSCALL_DEFINE3(getsockname, int, fd, struct sockaddr __user *, usockaddr,
                int __user *, usockaddr_len)
{
    return __sys_getsockname(fd, usockaddr, usockaddr_len);
}

SYSCALL_DEFINE3(getpeername, int, fd, struct sockaddr __user *, usockaddr,
                int __user *, usockaddr_len)
{
    return __sys_getpeername(fd, usockaddr, usockaddr_len);
}

SYSCALL_DEFINE4(send, int, fd, void __user *, buff, size_t, len,
                unsigned int, flags)
{
    return __sys_sendto(fd, buff, len, flags, NULL, 0);
}

SYSCALL_DEFINE4(recv, int, fd, void __user *, ubuf, size_t, size,
                unsigned int, flags)
{
    return __sys_recvfrom(fd, ubuf, size, flags, NULL, NULL);
}
```
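These syscalls are what the familiar user-space calls end up in. The function below is a sketch added for illustration; its name `loopback_handshake` is made up. It runs socket(), bind(), listen(), connect() and accept() in a single process over the loopback interface, and relies on something that follows from the kernel code walked through below: a blocking connect() returns once the kernel has completed the handshake, before the server has called accept().

```c
#include <assert.h>
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Returns 0 if a connection was established and accepted, -1 on any failure. */
static int loopback_handshake(void)
{
    int lfd = -1, cfd = -1, afd = -1, rc = -1;
    struct sockaddr_in addr;
    socklen_t len = sizeof(addr);

    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0;                        /* let the kernel choose a free port */

    lfd = socket(AF_INET, SOCK_STREAM, 0);    /* enters __sys_socket */
    if (lfd < 0)
        goto out;
    if (bind(lfd, (struct sockaddr *)&addr, sizeof(addr)) < 0)   /* __sys_bind */
        goto out;
    if (listen(lfd, 1) < 0)                   /* __sys_listen: socket becomes TCP_LISTEN */
        goto out;
    if (getsockname(lfd, (struct sockaddr *)&addr, &len) < 0)    /* learn the chosen port */
        goto out;

    cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd < 0)
        goto out;
    /* Blocking connect: SYN, SYN+ACK and ACK are all exchanged inside the kernel. */
    if (connect(cfd, (struct sockaddr *)&addr, sizeof(addr)) < 0)
        goto out;

    /* The established connection is already waiting on the accept queue. */
    afd = accept(lfd, NULL, NULL);
    if (afd < 0)
        goto out;
    rc = 0;
out:
    if (afd >= 0)
        close(afd);
    if (cfd >= 0)
        close(cfd);
    if (lfd >= 0)
        close(lfd);
    return rc;
}
```

Call it from main() and check for 0. It only needs a working loopback interface.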

First Handshake

The client sends its SYN by calling connect(), which enters the kernel in __sys_connect:

```c
int __sys_connect(int fd, struct sockaddr __user *uservaddr, int addrlen)
{
    struct socket *sock;
    struct sockaddr_storage address;
    int err, fput_needed;

    /* Look up the socket from the file descriptor */
    sock = sockfd_lookup_light(fd, &err, &fput_needed);
    if (!sock)
        goto out;

    /* Copy the address from user space into the kernel */
    err = move_addr_to_kernel(uservaddr, addrlen, &address);
    if (err < 0)
        goto out_put;

    err =
        security_socket_connect(sock, (struct sockaddr *)&address, addrlen);
    if (err)
        goto out_put;

    /* For stream sockets, sock->ops is inet_stream_ops -> inet_stream_connect;
     * for datagram sockets, sock->ops is inet_dgram_ops -> inet_dgram_connect */
    err = sock->ops->connect(sock, (struct sockaddr *)&address, addrlen,
                             sock->file->f_flags);
out_put:
    fput_light(sock->file, fput_needed);
out:
    return err;
}
```

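This function:

- looks up the socket object for the given file descriptor;
- copies the address from user space into kernel space;
- calls the connect function that matches the socket type: the stream-socket version for TCP, the datagram-socket version for UDP.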
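The second step is mostly a length check followed by a copy. Below is a user-space mirror of the checks move_addr_to_kernel() performs before it copies. The function name `copy_addr_checked` is hypothetical. memcpy stands in for copy_from_user(), and the kernel's final audit_sockaddr() step is left out.

```c
#include <assert.h>
#include <errno.h>
#include <string.h>
#include <sys/socket.h>

/* Hypothetical user-space mirror of move_addr_to_kernel()'s validation. */
static int copy_addr_checked(const void *uaddr, int ulen,
                             struct sockaddr_storage *kaddr)
{
    /* Negative, or larger than the biggest address any family can use */
    if (ulen < 0 || (size_t)ulen > sizeof(struct sockaddr_storage))
        return -EINVAL;
    if (ulen == 0)
        return 0;                           /* nothing to copy */
    memcpy(kaddr, uaddr, (size_t)ulen);     /* stands in for copy_from_user() */
    return 0;
}
```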

TCP uses a stream socket, whose connect function is inet_stream_connect:

```c
int inet_stream_connect(struct socket *sock, struct sockaddr *uaddr,
                        int addr_len, int flags)
{
    int err;

    lock_sock(sock->sk);
    /* is_sendmsg = 0: called from connect(), not from sendmsg() (Fast Open) */
    err = __inet_stream_connect(sock, uaddr, addr_len, flags, 0);
    release_sock(sock->sk);
    return err;
}
```

```c
/*
 * Connect to a remote host. There is regrettably still a little
 * TCP 'magic' in here.
 */
int __inet_stream_connect(struct socket *sock, struct sockaddr *uaddr,
                          int addr_len, int flags, int is_sendmsg)
{
    struct sock *sk = sock->sk;
    int err;
    long timeo;

    /*
     * uaddr can be NULL and addr_len can be 0 if:
     * sk is a TCP fastopen active socket and
     * TCP_FASTOPEN_CONNECT sockopt is set and
     * we already have a valid cookie for this socket.
     * In this case, user can call write() after connect().
     * write() will invoke tcp_sendmsg_fastopen() which calls
     * __inet_stream_connect().
     */
    /* An address was supplied */
    if (uaddr) {
        /* Check the address length */
        if (addr_len < sizeof(uaddr->sa_family))
            return -EINVAL;

        /* AF_UNSPEC is special: it disconnects the socket */
        if (uaddr->sa_family == AF_UNSPEC) {
            err = sk->sk_prot->disconnect(sk, flags);
            sock->state = err ? SS_DISCONNECTING : SS_UNCONNECTED;
            goto out;
        }
    }

    /* Act on the socket-layer state */
    switch (sock->state) {
    default:
        err = -EINVAL;
        goto out;
    /* Already connected */
    case SS_CONNECTED:
        err = -EISCONN;
        goto out;
    /* Connection in progress */
    case SS_CONNECTING:
        /* TCP Fast Open: the result depends on whether we came from sendmsg() */
        if (inet_sk(sk)->defer_connect)
            err = is_sendmsg ? -EINPROGRESS : -EISCONN;
        else
            err = -EALREADY;
        /* Fall out of switch with err, set for this state */
        break;
    /* Not connected */
    case SS_UNCONNECTED:
        err = -EISCONN;
        /* The transport state must be TCP_CLOSE */
        if (sk->sk_state != TCP_CLOSE)
            goto out;

        /* Transport-protocol connect: tcp_v4_connect() for TCP */
        err = sk->sk_prot->connect(sk, uaddr, addr_len);
        if (err < 0)
            goto out;

        /* Mark the socket as connecting */
        sock->state = SS_CONNECTING;

        /* TCP Fast Open: the SYN is deferred until the first write() */
        if (!err && inet_sk(sk)->defer_connect)
            goto out;

        /* Just entered SS_CONNECTING state; the only
         * difference is that return value in non-blocking
         * case is EINPROGRESS, rather than EALREADY.
         */
        err = -EINPROGRESS;
        break;
    }

    /* Blocking sockets use the send timeout; non-blocking sockets get 0 */
    timeo = sock_sndtimeo(sk, flags & O_NONBLOCK);

    /* SYN sent, or SYN received (simultaneous open) */
    if ((1 << sk->sk_state) & (TCPF_SYN_SENT | TCPF_SYN_RECV)) {
        int writebias = (sk->sk_protocol == IPPROTO_TCP) &&
                        tcp_sk(sk)->fastopen_req &&
                        tcp_sk(sk)->fastopen_req->data ? 1 : 0;

        /* Error code is set above */
        /* Non-blocking: return now. Blocking: wait for the handshake,
         * and return if the remaining wait time reaches 0. */
        if (!timeo || !inet_wait_for_connect(sk, timeo, writebias))
            goto out;

        /* The wait was interrupted by a signal */
        err = sock_intr_errno(timeo);
        if (signal_pending(current))
            goto out;
    }

    /* Connection was closed by RST, timeout, ICMP error
     * or another process disconnected us.
     */
    if (sk->sk_state == TCP_CLOSE)
        goto sock_error;

    /* sk->sk_err may be not zero now, if RECVERR was ordered by user
     * and error was received after socket entered established state.
     * Hence, it is handled normally after connect() return successfully.
     */

    /* Handshake complete: mark the socket as connected */
    sock->state = SS_CONNECTED;
    err = 0;
out:
    return err;

sock_error:
    err = sock_error(sk) ? : -ECONNABORTED;
    /* Back to the unconnected state */
    sock->state = SS_UNCONNECTED;
    if (sk->sk_prot->disconnect(sk, flags))
        sock->state = SS_DISCONNECTING;
    goto out;
}
```

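This function:

- checks the address length and the address family;
- checks the socket state: only an SS_UNCONNECTED socket whose transport state is TCP_CLOSE starts a new connection, while SS_CONNECTED and SS_CONNECTING return an error code;
- calls sk->sk_prot->connect, that is tcp_v4_connect(), which sends the SYN;
- for a blocking socket, waits for the rest of the handshake to complete.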
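The state checks in the list above can be captured in a small model. This is a sketch only: the `toy_*` enums and function are hypothetical. It covers just the `switch (sock->state)` part for a non-blocking caller. It ignores TCP Fast Open, and it ignores the case where the handshake finishes between two connect() calls.

```c
#include <assert.h>
#include <errno.h>

/* Hypothetical, reduced versions of socket_state and the TCP states. */
enum toy_sock_state { TOY_SS_UNCONNECTED, TOY_SS_CONNECTING, TOY_SS_CONNECTED };
enum toy_tcp_state  { TOY_TCP_CLOSE, TOY_TCP_SYN_SENT, TOY_TCP_ESTABLISHED };

/* Models the state switch of __inet_stream_connect() for O_NONBLOCK sockets. */
static int toy_stream_connect(enum toy_sock_state *ss, enum toy_tcp_state *ts)
{
    switch (*ss) {
    case TOY_SS_CONNECTED:
        return -EISCONN;             /* already connected */
    case TOY_SS_CONNECTING:
        return -EALREADY;            /* an earlier connect() is still in progress */
    case TOY_SS_UNCONNECTED:
        if (*ts != TOY_TCP_CLOSE)
            return -EISCONN;         /* transport state must be TCP_CLOSE */
        *ts = TOY_TCP_SYN_SENT;      /* what tcp_v4_connect() does before sending the SYN */
        *ss = TOY_SS_CONNECTING;
        return -EINPROGRESS;         /* handshake continues without the caller */
    }
    return -EINVAL;
}
```

The first call on a fresh socket yields -EINPROGRESS. A second call while the SYN is outstanding yields -EALREADY, which matches the kernel comment about EINPROGRESS versus EALREADY.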

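The SYN is sent along the path tcp_v4_connect() -> tcp_connect() -> tcp_transmit_skb(). Just before that, tcp_v4_connect() sets the socket state to TCP_SYN_SENT. The source of tcp_v4_connect() and tcp_connect() follows: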

```c
int tcp_v4_connect(struct sock *sk, struct sockaddr *uaddr, int addr_len)
{
    struct sockaddr_in *usin = (struct sockaddr_in *)uaddr;
    struct inet_sock *inet = inet_sk(sk);
    struct tcp_sock *tp = tcp_sk(sk);
    __be16 orig_sport, orig_dport;
    __be32 daddr, nexthop;
    struct flowi4 *fl4;
    struct rtable *rt;
    int err;
    struct ip_options_rcu *inet_opt;
    struct inet_timewait_death_row *tcp_death_row = &sock_net(sk)->ipv4.tcp_death_row;

    if (addr_len < sizeof(struct sockaddr_in))
        return -EINVAL;

    if (usin->sin_family != AF_INET)
        return -EAFNOSUPPORT;

    nexthop = daddr = usin->sin_addr.s_addr;
    inet_opt = rcu_dereference_protected(inet->inet_opt,
                                         lockdep_sock_is_held(sk));
    if (inet_opt && inet_opt->opt.srr) {
        if (!daddr)
            return -EINVAL;
        nexthop = inet_opt->opt.faddr;
    }

    orig_sport = inet->inet_sport;
    orig_dport = usin->sin_port;
    fl4 = &inet->cork.fl.u.ip4;
    rt = ip_route_connect(fl4, nexthop, inet->inet_saddr,
                          RT_CONN_FLAGS(sk), sk->sk_bound_dev_if,
                          IPPROTO_TCP,
                          orig_sport, orig_dport, sk);
    if (IS_ERR(rt)) {
        err = PTR_ERR(rt);
        if (err == -ENETUNREACH)
            IP_INC_STATS(sock_net(sk), IPSTATS_MIB_OUTNOROUTES);
        return err;
    }

    if (rt->rt_flags & (RTCF_MULTICAST | RTCF_BROADCAST)) {
        ip_rt_put(rt);
        return -ENETUNREACH;
    }

    if (!inet_opt || !inet_opt->opt.srr)
        daddr = fl4->daddr;

    if (!inet->inet_saddr)
        inet->inet_saddr = fl4->saddr;
    sk_rcv_saddr_set(sk, inet->inet_saddr);

    if (tp->rx_opt.ts_recent_stamp && inet->inet_daddr != daddr) {
        /* Reset inherited state */
        tp->rx_opt.ts_recent = 0;
        tp->rx_opt.ts_recent_stamp = 0;
        if (likely(!tp->repair))
            tp->write_seq = 0;
    }

    inet->inet_dport = usin->sin_port;
    sk_daddr_set(sk, daddr);

    inet_csk(sk)->icsk_ext_hdr_len = 0;
    if (inet_opt)
        inet_csk(sk)->icsk_ext_hdr_len = inet_opt->opt.optlen;

    tp->rx_opt.mss_clamp = TCP_MSS_DEFAULT;

    /* Socket identity is still unknown (sport may be zero).
     * However we set state to SYN-SENT and not releasing socket
     * lock select source port, enter ourselves into the hash tables and
     * complete initialization after this.
     */
    tcp_set_state(sk, TCP_SYN_SENT);
    err = inet_hash_connect(tcp_death_row, sk);
    if (err)
        goto failure;

    sk_set_txhash(sk);

    rt = ip_route_newports(fl4, rt, orig_sport, orig_dport,
                           inet->inet_sport, inet->inet_dport, sk);
    if (IS_ERR(rt)) {
        err = PTR_ERR(rt);
        rt = NULL;
        goto failure;
    }
    /* OK, now commit destination to socket. */
    sk->sk_gso_type = SKB_GSO_TCPV4;
    sk_setup_caps(sk, &rt->dst);
    rt = NULL;

    if (likely(!tp->repair)) {
        if (!tp->write_seq)
            tp->write_seq = secure_tcp_seq(inet->inet_saddr,
                                           inet->inet_daddr,
                                           inet->inet_sport,
                                           usin->sin_port);
        tp->tsoffset = secure_tcp_ts_off(sock_net(sk),
                                         inet->inet_saddr,
                                         inet->inet_daddr);
    }

    inet->inet_id = tp->write_seq ^ jiffies;

    if (tcp_fastopen_defer_connect(sk, &err))
        return err;
    if (err)
        goto failure;

    err = tcp_connect(sk);
    if (err)
        goto failure;

    return 0;

failure:
    /*
     * This unhashes the socket and releases the local port,
     * if necessary.
     */
    tcp_set_state(sk, TCP_CLOSE);
    ip_rt_put(rt);
    sk->sk_route_caps = 0;
    inet->inet_dport = 0;
    return err;
}
```

```c
int tcp_connect(struct sock *sk)
{
    struct tcp_sock *tp = tcp_sk(sk);
    struct sk_buff *buff;
    int err;

    /* Initialize the connection-related fields of the control block */
    tcp_connect_init(sk);

    if (unlikely(tp->repair)) {
        tcp_finish_connect(sk, NULL);
        return 0;
    }

    /* Allocate and initialize an sk_buff for the SYN segment */
    buff = sk_stream_alloc_skb(sk, 0, sk->sk_allocation, true);
    if (unlikely(!buff))
        return -ENOBUFS;

    /* write_seq was already set to a random ISN in tcp_v4_connect();
     * the SYN itself consumes one sequence number */
    tcp_init_nondata_skb(buff, tp->write_seq++, TCPHDR_SYN);
    tp->retrans_stamp = tcp_time_stamp;
    /* Put the segment on the send queue */
    tcp_connect_queue_skb(sk, buff);
    /* ECN (Explicit Congestion Notification) negotiation on the SYN */
    tcp_ecn_send_syn(sk, buff);

    /* Build the TCP and IP headers and send the segment
     * (with TCP Fast Open, send the SYN together with data) */
    err = tp->fastopen_req ? tcp_send_syn_data(sk, buff) :
          tcp_transmit_skb(sk, buff, 1, sk->sk_allocation);
    if (err == -ECONNREFUSED)
        return err;

    tp->snd_nxt = tp->write_seq;
    tp->pushed_seq = tp->write_seq;
    TCP_INC_STATS(sock_net(sk), TCP_MIB_ACTIVEOPENS);

    /* Start the retransmission timer */
    inet_csk_reset_xmit_timer(sk, ICSK_TIME_RETRANS,
                              inet_csk(sk)->icsk_rto, TCP_RTO_MAX);
    return 0;
}
```

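This function:

- initializes the socket's connection-related fields;
- allocates an sk_buff;
- initializes the sk_buff as a SYN segment; this really fills in tcp_skb_cb, which is used later when the TCP header is built;
- calls tcp_connect_queue_skb() to add the sk_buff to the send queue sk->sk_write_queue;
- calls tcp_transmit_skb() to build the TCP header and hand the segment to the network layer;
- starts the retransmission timer.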
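The retransmission timer armed at the end of tcp_connect() decides how long an unanswered connect() keeps trying. Below is a simplified model; the function name `syn_give_up_time` is hypothetical. It assumes:

- an initial RTO of 1 s (TCP_TIMEOUT_INIT in recent kernels);
- the RTO doubles on every timeout, capped at 120 s (TCP_RTO_MAX);
- the attempt is abandoned after the configured number of SYN retransmissions (the `net.ipv4.tcp_syn_retries` sysctl, default 6).

The real timer code in tcp_timer.c computes its deadline differently in detail, so treat the result as an approximation.

```c
#include <assert.h>

/* Seconds from the first SYN until the connection attempt is abandoned,
 * under the simplified exponential-backoff model described above. */
static unsigned syn_give_up_time(unsigned retries)
{
    unsigned rto = 1, total = 0;

    for (unsigned i = 0; i <= retries; i++) {     /* original SYN + each retransmission */
        total += rto;                             /* wait for this timeout to expire */
        rto = rto * 2 > 120 ? 120 : rto * 2;      /* exponential backoff, capped */
    }
    return total;
}
```

With the default of 6 retries this gives 1 + 2 + 4 + 8 + 16 + 32 + 64 = 127 seconds, the commonly observed time before connect() fails with ETIMEDOUT against a host that never answers.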

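tcp_connect_queue_skb() itself only puts the segment on the send queue and updates the sequence and packet counters. The header is built in tcp_transmit_skb(). It moves the sk_buff's data pointer back (skb_push) to make room for the TCP header, fills the header in, and then hands the segment to the network layer (icsk->icsk_af_ops->queue_xmit, which is ip_queue_xmit for IPv4) to be sent.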
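The header-filling step can be illustrated with a hypothetical, minimal version. `build_tcp_header` and the `TOY_TCPHDR_*` constants are made up for this sketch. It writes the fixed 20-byte TCP header in network byte order into the space that skb_push() would have reserved. A real SYN also carries options such as MSS, window scale and timestamps, so its data offset is larger than 5; the checksum is left at 0 here.

```c
#include <assert.h>
#include <arpa/inet.h>
#include <stdint.h>
#include <string.h>

#define TOY_TCPHDR_SYN 0x02
#define TOY_TCPHDR_ACK 0x10

/* Fill a 20-byte TCP header (no options) in network byte order. */
static void build_tcp_header(uint8_t hdr[20], uint16_t sport, uint16_t dport,
                             uint32_t seq, uint32_t ack, uint8_t flags,
                             uint16_t window)
{
    uint16_t v16;
    uint32_t v32;

    memset(hdr, 0, 20);
    v16 = htons(sport);  memcpy(hdr + 0, &v16, 2);   /* source port */
    v16 = htons(dport);  memcpy(hdr + 2, &v16, 2);   /* destination port */
    v32 = htonl(seq);    memcpy(hdr + 4, &v32, 4);   /* sequence number */
    v32 = htonl(ack);    memcpy(hdr + 8, &v32, 4);   /* acknowledgment number */
    hdr[12] = 5 << 4;                                /* data offset: 5 x 32-bit words */
    hdr[13] = flags;                                 /* CWR ECE URG ACK PSH RST SYN FIN */
    v16 = htons(window); memcpy(hdr + 14, &v16, 2);  /* receive window */
    /* bytes 16-17 (checksum) and 18-19 (urgent pointer) stay 0 */
}
```

For example, build_tcp_header(h, 40000, 80, 1000, 0, TOY_TCPHDR_SYN, 64240) yields a header whose byte 13 is 0x02, the SYN flag.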

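This completes the client's side of the first handshake. The client socket has been in TCP_SYN_SENT since tcp_v4_connect(), and the SYN now travels to the server, which processes it as described next.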

Second Handshake

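When the server receives the client's SYN, it handles it in tcp_v4_rcv() and then answers with the second handshake. The important parts of the code are: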

```c
int tcp_v4_rcv(struct sk_buff *skb)
{
    ...
    /* Drop packets that are not addressed to this host */
    if (skb->pkt_type != PACKET_HOST)
        goto discard_it;

    /* Count it even if it's bad */
    __TCP_INC_STATS(net, TCP_MIB_INSEGS);

    /* The packet must be at least as long as a TCP header */
    if (!pskb_may_pull(skb, sizeof(struct tcphdr)))
        goto discard_it;
    ...
    /* Look up the sock by addresses, ports and receiving interface.
     * For a new SYN, the match comes from the listening hash table. */
    sk = __inet_lookup_skb(&tcp_hashinfo, skb, __tcp_hdrlen(th), th->source,
                           th->dest, &refcounted);
    /* No socket to handle the segment: drop it (normally answered with a RST) */
    if (!sk)
        goto no_tcp_socket;
    ...
    /* The socket is listening */
    if (sk->sk_state == TCP_LISTEN) {
        ret = tcp_v4_do_rcv(sk, skb);
        goto put_and_return;
    }

    sk_incoming_cpu_update(sk);
    bh_lock_sock_nested(sk);
    tcp_segs_in(tcp_sk(sk), skb);
    ret = 0;
    /* If a user process currently owns (has locked) the socket, its state
     * must not be changed here, so the segment goes to the backlog instead */
    if (!sock_owned_by_user(sk)) {
        if (!tcp_prequeue(sk, skb))
            ret = tcp_v4_do_rcv(sk, skb);
    } else if (tcp_add_backlog(sk, skb)) {
        goto discard_and_relse;
    }
    bh_unlock_sock(sk);

put_and_return:
    if (refcounted)
        sock_put(sk);

    return ret;
    ...
}
```

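This function:

- uses the TCP header to look up the socket the segment belongs to;
- branches on the socket state. Here the state is TCP_LISTEN, so it calls tcp_v4_do_rcv() directly, which in turn calls tcp_rcv_state_process().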
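The lookup in the first step is two-staged. A segment first tries an exact 4-tuple match among established connections. In kernels that have TCP_NEW_SYN_RECV, that table also holds pending request socks, which is how the final handshake ACK is matched later. Only if that fails does the segment fall back to a listener bound to the destination port.

The sketch below models that order with linear arrays instead of hash tables. `toy_conn` and `toy_lookup` are hypothetical names. The real lookup also scores on bound address, device and more.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical socket record: local/remote address and port plus a label. */
struct toy_conn {
    uint32_t laddr, raddr;
    uint16_t lport, rport;
    const char *name;
};

/* Exact 4-tuple match first, then a listener on the destination port. */
static const char *toy_lookup(const struct toy_conn *est, size_t n_est,
                              const struct toy_conn *lst, size_t n_lst,
                              uint32_t saddr, uint16_t sport,
                              uint32_t daddr, uint16_t dport)
{
    for (size_t i = 0; i < n_est; i++)          /* stage 1: established table */
        if (est[i].laddr == daddr && est[i].lport == dport &&
            est[i].raddr == saddr && est[i].rport == sport)
            return est[i].name;
    for (size_t i = 0; i < n_lst; i++)          /* stage 2: listening table */
        if (lst[i].lport == dport &&
            (lst[i].laddr == 0 || lst[i].laddr == daddr))   /* 0 means INADDR_ANY */
            return lst[i].name;
    return NULL;                                /* tcp_v4_rcv() goes to no_tcp_socket */
}
```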

The important parts of tcp_rcv_state_process() are:

```c
int tcp_rcv_state_process(struct sock *sk, struct sk_buff *skb)
{
    ...
    switch (sk->sk_state) {
    /* A closed socket: drop the segment */
    case TCP_CLOSE:
        goto discard;

    /* Server side: handle the first handshake (an incoming SYN) */
    case TCP_LISTEN:
        if (th->ack)
            return 1;

        if (th->rst)
            goto discard;

        if (th->syn) {
            if (th->fin)
                goto discard;
            if (icsk->icsk_af_ops->conn_request(sk, skb) < 0)
                return 1;

            consume_skb(skb);
            return 0;
        }
        goto discard;

    /* Client side: handle the second handshake (the SYN+ACK) */
    case TCP_SYN_SENT:
        tp->rx_opt.saw_tstamp = 0;
        /* Process a segment received in the SYN_SENT state */
        queued = tcp_rcv_synsent_state_process(sk, skb, th);
        if (queued >= 0)
            return queued;

        /* After the second handshake, process any urgent (out-of-band) data */
        tcp_urg(sk, skb, th);
        __kfree_skb(skb);
        /* Check whether there is data waiting to be sent */
        tcp_data_snd_check(sk);
        return 0;
    }
    ...
    switch (sk->sk_state) {
    case TCP_SYN_RECV:
        if (!acceptable)
            return 1;

        if (!tp->srtt_us)
            tcp_synack_rtt_meas(sk, req);

        if (req) {
            inet_csk(sk)->icsk_retransmits = 0;
            reqsk_fastopen_remove(sk, req, false);
        } else {
            /* Rebuild the route and initialize congestion control */
            icsk->icsk_af_ops->rebuild_header(sk);
            tcp_init_congestion_control(sk);
            tcp_mtup_init(sk);
            tp->copied_seq = tp->rcv_nxt;
            tcp_init_buffer_space(sk);
        }
        smp_mb();
        /* Normal third handshake: move the connection to TCP_ESTABLISHED */
        tcp_set_state(sk, TCP_ESTABLISHED);
        sk->sk_state_change(sk);

        /* The connection is usable: wake up any waiting threads */
        if (sk->sk_socket)
            sk_wake_async(sk, SOCK_WAKE_IO, POLL_OUT);
        ...
        /* Record the time the most recent packet was sent */
        tp->lsndtime = tcp_time_stamp;
        tcp_initialize_rcv_mss(sk);
        /* Compute the TCP header-prediction flags (enables the fast path) */
        tcp_fast_path_on(tp);
        break;
    ...
}
```
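The TCP_LISTEN branch above is a small decision table on the header flags. It can be restated as a stand-alone function. The enum and function names are hypothetical labels for this sketch, not kernel return codes.

A return value of 1 from tcp_rcv_state_process() makes the caller, tcp_v4_do_rcv(), answer with a RST. That is why a bare ACK sent to a listener gets reset.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical labels for what the TCP_LISTEN branch does with a segment. */
enum toy_listen_action {
    TOY_SEND_RESET,     /* kernel returns 1; tcp_v4_do_rcv() then sends a RST */
    TOY_DISCARD,        /* goto discard */
    TOY_CONN_REQUEST    /* icsk->icsk_af_ops->conn_request(): answer with SYN+ACK */
};

struct toy_flags { bool syn, ack, rst, fin; };

static enum toy_listen_action toy_listen_rcv(struct toy_flags th)
{
    if (th.ack)
        return TOY_SEND_RESET;   /* an ACK to a listener matches no connection */
    if (th.rst)
        return TOY_DISCARD;      /* never answer a RST */
    if (th.syn) {
        if (th.fin)
            return TOY_DISCARD;  /* SYN together with FIN is bogus */
        return TOY_CONN_REQUEST;
    }
    return TOY_DISCARD;
}
```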

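This function is the heart of TCP connection setup. Segments for sockets in almost every state are processed here; the main exception is TCP_ESTABLISHED, which tcp_v4_do_rcv() sends to tcp_rcv_established() instead. On the server, the socket that receives the first handshake is in TCP_LISTEN, so the SYN goes to tcp_v4_conn_request(), which actually calls tcp_conn_request():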

```c
int tcp_conn_request(struct request_sock_ops *rsk_ops,
                     const struct tcp_request_sock_ops *af_ops,
                     struct sock *sk, struct sk_buff *skb)
{
    ...
    /* SYN flood protection: if syncookies are forced (sysctl = 2) or the
     * SYN queue is full, decide whether to answer with a syncookie */
    if ((net->ipv4.sysctl_tcp_syncookies == 2 ||
         inet_csk_reqsk_queue_is_full(sk)) && !isn) {
        want_cookie = tcp_syn_flood_action(sk, skb, rsk_ops->slab_name);
        if (!want_cookie)
            goto drop;
    }

    /* Accept queue overflow */
    if (sk_acceptq_is_full(sk) && inet_csk_reqsk_queue_young(sk) > 1) {
        NET_INC_STATS(sock_net(sk), LINUX_MIB_LISTENOVERFLOWS);
        goto drop;
    }

    req = inet_reqsk_alloc(rsk_ops, sk, !want_cookie);
    if (!req)
        goto drop;

    /* Protocol-specific operations for this request_sock */
    tcp_rsk(req)->af_specific = af_ops;

    tcp_clear_options(&tmp_opt);
    tmp_opt.mss_clamp = af_ops->mss_clamp;
    tmp_opt.user_mss = tp->rx_opt.user_mss;
    tcp_parse_options(skb, &tmp_opt, 0, want_cookie ? NULL : &foc);

    if (want_cookie && !tmp_opt.saw_tstamp)
        tcp_clear_options(&tmp_opt);

    tmp_opt.tstamp_ok = tmp_opt.saw_tstamp;
    /* Initialize the request: request_sock, inet_request_sock and tcp_request_sock */
    tcp_openreq_init(req, &tmp_opt, skb, sk);
    inet_rsk(req)->no_srccheck = inet_sk(sk)->transparent;

    inet_rsk(req)->ir_iif = inet_request_bound_dev_if(sk, skb);

    af_ops->init_req(req, sk, skb);
    ...
    /* Initialize the receive window */
    tcp_openreq_init_rwin(req, sk, dst);
    if (!want_cookie) {
        tcp_reqsk_record_syn(sk, req, skb);
        fastopen_sk = tcp_try_fastopen(sk, skb, req, &foc, dst);
    }
    ...
drop_and_release:
    dst_release(dst);
drop_and_free:
    reqsk_free(req);
drop:
    tcp_listendrop(sk);
    return 0;
}
```
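The two admission checks at the top of tcp_conn_request() decide whether a SYN is queued, answered with a syncookie, or dropped. Below is a hedged model of them. `toy_admit_syn` and its parameters are hypothetical stand-ins for the kernel values named in the comments. The `!isn` condition is omitted, and the sketch assumes CONFIG_SYN_COOKIES, under which tcp_syn_flood_action() wants a cookie whenever the tcp_syncookies sysctl is non-zero. The accept-queue test follows sk_acceptq_is_full(), which compares with `>`.

```c
#include <assert.h>
#include <stdbool.h>

enum toy_verdict { TOY_DROP, TOY_QUEUE_REQUEST, TOY_SEND_COOKIE };

static enum toy_verdict toy_admit_syn(int sysctl_tcp_syncookies,
                                      bool syn_queue_full,
                                      unsigned ack_backlog,      /* sk->sk_ack_backlog */
                                      unsigned max_ack_backlog,  /* sk->sk_max_ack_backlog */
                                      unsigned young_requests)   /* inet_csk_reqsk_queue_young() */
{
    bool want_cookie = false;

    /* syncookies == 2 forces cookies; otherwise only when the SYN queue is full */
    if (sysctl_tcp_syncookies == 2 || syn_queue_full) {
        want_cookie = sysctl_tcp_syncookies != 0;   /* tcp_syn_flood_action() */
        if (!want_cookie)
            return TOY_DROP;
    }
    /* Accept queue over its limit and young requests pending: drop (LISTENOVERFLOWS) */
    if (ack_backlog > max_ack_backlog && young_requests > 1)
        return TOY_DROP;
    return want_cookie ? TOY_SEND_COOKIE : TOY_QUEUE_REQUEST;
}
```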

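tcp_conn_request() allocates a request_sock to represent this connection request (its state is TCP_NEW_SYN_RECV). It then replies to the client with a SYN+ACK through tcp_v4_send_synack(), which is the second handshake: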

```c
static int tcp_v4_send_synack(const struct sock *sk, struct dst_entry *dst,
                              struct flowi *fl,
                              struct request_sock *req,
                              struct tcp_fastopen_cookie *foc,
                              enum tcp_synack_type synack_type)
{
    const struct inet_request_sock *ireq = inet_rsk(req);
    struct flowi4 fl4;
    int err = -1;
    struct sk_buff *skb;

    /* First, grab a route. */
    /* Find the route back to the client */
    if (!dst && (dst = inet_csk_route_req(sk, &fl4, req)) == NULL)
        return -1;

    /* Build the SYN+ACK from the route, the listener and the request sock */
    skb = tcp_make_synack(sk, dst, req, foc, synack_type);

    /* SYN+ACK built successfully */
    if (skb) {
        /* Compute the TCP checksum */
        __tcp_v4_send_check(skb, ireq->ir_loc_addr, ireq->ir_rmt_addr);

        /* Build the IP datagram and send it */
        err = ip_build_and_send_pkt(skb, sk, ireq->ir_loc_addr,
                                    ireq->ir_rmt_addr,
                                    ireq->opt);
        err = net_xmit_eval(err);
    }

    return err;
}
```

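The function finds the route back to the client and builds the SYN+ACK segment. It then calls ip_build_and_send_pkt(), which sends the datagram out through the network layer.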
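The checksum step uses the standard Internet checksum (RFC 1071). __tcp_v4_send_check() covers a pseudo-header (source address, destination address, protocol, TCP length) plus the TCP segment; with checksum offload it stores only the pseudo-header part. The sketch below implements just the RFC 1071 sum over a byte buffer and leaves building the pseudo-header to the caller. `inet_checksum` is a name chosen for this sketch, not the kernel's csum API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* RFC 1071 one's-complement checksum over big-endian 16-bit words. */
static uint16_t inet_checksum(const uint8_t *data, size_t len)
{
    uint32_t sum = 0;

    for (size_t i = 0; i + 1 < len; i += 2)
        sum += (uint32_t)(data[i] << 8 | data[i + 1]);   /* add 16-bit words */
    if (len & 1)
        sum += (uint32_t)data[len - 1] << 8;             /* pad an odd byte with zero */
    while (sum >> 16)
        sum = (sum & 0xffff) + (sum >> 16);              /* fold carries back in */
    return (uint16_t)~sum;                               /* one's complement */
}
```

The receiver recomputes the sum over the data including the transmitted checksum field. A result of 0 means the segment arrived intact. This property is used in the test, alongside the worked example from RFC 1071 itself (sum 0xddf2, checksum 0x220d).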

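This completes the second handshake. When the client receives the SYN+ACK, its socket moves to TCP_ESTABLISHED. On the server, the connection is still represented by the request_sock in the TCP_NEW_SYN_RECV state, and the listening socket itself stays in TCP_LISTEN.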
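Once the two initial sequence numbers (ISNs) are chosen, the sequence and acknowledgment numbers of all three segments follow. Each SYN consumes one sequence number, which is why tcp_connect() does `tp->write_seq++` after building the SYN. `toy_seg` and `handshake_numbers` are hypothetical names for this worked example. uint32_t arithmetic wraps modulo 2^32 exactly like TCP sequence space.

```c
#include <assert.h>
#include <stdint.h>

struct toy_seg { uint32_t seq, ack; };

/* Sequence/acknowledgment numbers of SYN, SYN+ACK and ACK. */
static void handshake_numbers(uint32_t client_isn, uint32_t server_isn,
                              struct toy_seg out[3])
{
    out[0].seq = client_isn;     out[0].ack = 0;              /* SYN: ACK flag not set */
    out[1].seq = server_isn;     out[1].ack = client_isn + 1; /* SYN+ACK */
    out[2].seq = client_isn + 1; out[2].ack = server_isn + 1; /* final ACK */
}
```

With a client ISN of 0xffffffff the acknowledgment in the SYN+ACK wraps around to 0.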

Third Handshake

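1. The client receives the SYN+ACK and replies. This happens in the TCP_SYN_SENT branch of tcp_rcv_state_process(). There, tcp_rcv_synsent_state_process() validates the SYN+ACK, moves the socket to TCP_ESTABLISHED via tcp_finish_connect(), and sends the final ACK (possibly delayed).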

```c
    case TCP_SYN_SENT:
        tp->rx_opt.saw_tstamp = 0;
        /* Process a segment received in the SYN_SENT state */
        queued = tcp_rcv_synsent_state_process(sk, skb, th);
        if (queued >= 0)
            return queued;

        /* Do step6 onward by hand. */
        /* After the second handshake, process any urgent (out-of-band) data */
        tcp_urg(sk, skb, th);
        __kfree_skb(skb);
        /* Check whether there is data waiting to be sent */
        tcp_data_snd_check(sk);
        return 0;
    }
```
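One of the first checks tcp_rcv_synsent_state_process() applies to the SYN+ACK is not shown in the excerpt above. The RFC 793 rule is that the acknowledgment number must satisfy snd_una < ack_seq <= snd_nxt; otherwise the client resets. Right after the SYN, snd_una is the ISN and snd_nxt is ISN + 1, so only ack_seq == ISN + 1 passes.

The helpers below are written the same way as the kernel's wrap-safe before()/after() in include/net/tcp.h. `seq_before`, `seq_after` and `synsent_ack_ok` are names chosen for this sketch.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Wrap-safe sequence comparisons: a is before b if (a - b) is negative as int32. */
static bool seq_before(uint32_t a, uint32_t b) { return (int32_t)(a - b) < 0; }
static bool seq_after(uint32_t a, uint32_t b)  { return seq_before(b, a); }

/* SYN_SENT acceptability of an ACK: snd_una < ack_seq <= snd_nxt. */
static bool synsent_ack_ok(uint32_t ack_seq, uint32_t snd_una, uint32_t snd_nxt)
{
    return seq_after(ack_seq, snd_una) && !seq_after(ack_seq, snd_nxt);
}
```

Because the comparison is done on the signed difference, it keeps working when the ISN sits right at the 2^32 boundary.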

2. The server receives the ACK

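Finally, the server must receive the ACK and enter ESTABLISHED before the three-way handshake is complete. The ACK is handled in several steps:

- tcp_v4_rcv() finds the TCP_NEW_SYN_RECV request_sock for the connection.
- tcp_check_req() creates the child socket in TCP_SYN_RECV and adds it to the listener's accept queue.
- tcp_child_process() runs tcp_rcv_state_process() on the child. Its TCP_SYN_RECV branch, below, moves the child to TCP_ESTABLISHED.

accept() (inet_csk_accept()) later takes the child off the accept queue.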

```c
    case TCP_SYN_RECV:
        if (!acceptable)
            return 1;

        if (!tp->srtt_us)
            tcp_synack_rtt_meas(sk, req);

        /* Once we leave TCP_SYN_RECV, we no longer need req
         * so release it.
         */
        if (req) {
            inet_csk(sk)->icsk_retransmits = 0;
            reqsk_fastopen_remove(sk, req, false);
        } else {
            /* Make sure socket is routed, for correct metrics. */
            /* Rebuild the route and initialize congestion control */
            icsk->icsk_af_ops->rebuild_header(sk);
            tcp_init_congestion_control(sk);

            tcp_mtup_init(sk);
            tp->copied_seq = tp->rcv_nxt;
            tcp_init_buffer_space(sk);
        }
        smp_mb();
        /* Normal third handshake: move the connection to TCP_ESTABLISHED */
        tcp_set_state(sk, TCP_ESTABLISHED);
        sk->sk_state_change(sk);

        /* Note, that this wakeup is only for marginal crossed SYN case.
         * Passively open sockets are not waked up, because
         * sk->sk_sleep == NULL and sk->sk_socket == NULL.
         */
        /* The connection is usable: wake up any waiting threads */
        if (sk->sk_socket)
            sk_wake_async(sk, SOCK_WAKE_IO, POLL_OUT);

        tp->snd_una = TCP_SKB_CB(skb)->ack_seq;
        tp->snd_wnd = ntohs(th->window) << tp->rx_opt.snd_wscale;
        tcp_init_wl(tp, TCP_SKB_CB(skb)->seq);

        if (tp->rx_opt.tstamp_ok)
            tp->advmss -= TCPOLEN_TSTAMP_ALIGNED;

        if (req) {
            /* Re-arm the timer because data may have been sent out.
             * This is similar to the regular data transmission case
             * when new data has just been ack'ed.
             *
             * (TFO) - we could try to be more aggressive and
             * retransmitting any data sooner based on when they
             * are sent out.
             */
            tcp_rearm_rto(sk);
        } else
            tcp_init_metrics(sk);

        if (!inet_csk(sk)->icsk_ca_ops->cong_control)
            tcp_update_pacing_rate(sk);

        /* Prevent spurious tcp_cwnd_restart() on first data packet */
        /* Record the time the most recent packet was sent */
        tp->lsndtime = tcp_time_stamp;

        tcp_initialize_rcv_mss(sk);
        /* Compute the TCP header-prediction flags (enables the fast path) */
        tcp_fast_path_on(tp);
        break;
```
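Two of the assignments in this branch are plain arithmetic and are easy to check with numbers:

- `tp->snd_wnd = ntohs(th->window) << tp->rx_opt.snd_wscale;` scales the 16-bit window field by the shift negotiated in the SYN options.
- `tp->advmss -= TCPOLEN_TSTAMP_ALIGNED;` reserves 12 bytes per segment for the timestamp option (10 bytes plus 2 NOPs for alignment).

The helper names below are hypothetical. The window argument is assumed to be already in host byte order, i.e. after ntohs().

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_TCPOLEN_TSTAMP_ALIGNED 12   /* same value as the kernel's TCPOLEN_TSTAMP_ALIGNED */

/* Peer's advertised window in bytes after applying the window-scale shift. */
static uint32_t scaled_window(uint16_t window, uint8_t snd_wscale)
{
    return (uint32_t)window << snd_wscale;
}

/* MSS we advertise, minus room for the timestamp option when it is in use. */
static uint16_t effective_advmss(uint16_t advmss, bool tstamp_ok)
{
    return tstamp_ok ? (uint16_t)(advmss - TOY_TCPOLEN_TSTAMP_ALIGNED) : advmss;
}
```

For instance, a window field of 502 with a scale of 7 means 502 x 128 = 64256 bytes. An Ethernet MSS of 1460 drops to 1448 once timestamps are negotiated.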

浙公网安备 33010602011771号
