ELK 7.5 Setup Walkthrough

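- I. Technology selection

1. Minimal pipeline: logstash -> elasticsearch -> kibana

2. Full pipeline: filebeat -> kafka -> logstash -> elasticsearch -> kibana

3. Elasticsearch can be inspected and debugged with the elasticsearch-head component.

4. Kafka can be monitored with the kafka-manager component.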

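Reasons for choosing the full pipeline:

1. Filebeat collects and ships logs. It is lighter and easier to deploy than Logstash and uses fewer system resources, so in a log pipeline it belongs at the collection edge. Logstash is more capable than Filebeat, so it parses the logs further after Filebeat has collected them.

2. Routing logs through Kafka gives more flexible control: for example, other services can subscribe to the same Kafka data and consume it independently.

3. A direct filebeat -> elasticsearch link also works. Logstash is included because it offers richer processing, such as filtering.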

- II. Docker deployment

The docker-compose.yml file is as follows:

version: '2.2'
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.5.2
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      # Each node needs its own data and config directories so that shard data is not mixed between nodes.
      - $HOME/docker/elk/es/data1:/usr/share/elasticsearch/data
      - $HOME/docker/elk/es/config1:/usr/share/elasticsearch/config
    ports:
      - 9200:9200
    networks:
      - elastic

  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.5.2
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - $HOME/docker/elk/es/data2:/usr/share/elasticsearch/data
      - $HOME/docker/elk/es/config2:/usr/share/elasticsearch/config
    networks:
      - elastic

  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.5.2
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - $HOME/docker/elk/es/data3:/usr/share/elasticsearch/data
      - $HOME/docker/elk/es/config3:/usr/share/elasticsearch/config
    networks:
      - elastic

  esh:
    image: mobz/elasticsearch-head:5
    container_name: es-head
    ports:
      - 9100:9100
    volumes:
      - $HOME/docker/elk/esh/vendor.js:/usr/src/app/_site/vendor.js

  kibana:
    image: kibana:7.5.2
    container_name: kbn
    volumes:
      - $HOME/docker/elk/kibana:/usr/share/kibana/config
    ports:
      - 5601:5601
    networks:
      - elastic

  logstash:
    image: logstash:7.5.2
    container_name: logstash
    volumes:
      - $HOME/docker/elk/logstash/config:/usr/share/logstash/config
      - $HOME/docker/elk/logstash/pipeline:/usr/share/logstash/pipeline
    ports:
      - 5044:5044
    networks:
      - elastic

  filebeat:
    image: elastic/filebeat:7.5.2
    container_name: filebeat
    volumes:
      - $HOME/docker/elk/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml
      - $HOME/docker/elk/filebeat/log:/usr/share/filebeat/log
    networks:
      - elastic

  zk1:
    image: zookeeper:3.4
    container_name: zk1
    networks:
      - elastic
    ports:
      - "21811:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zk1:2888:3888 server.2=zk2:2888:3888 server.3=zk3:2888:3888

  zk2:
    image: zookeeper:3.4
    container_name: zk2
    networks:
      - elastic
    ports:
      - "21812:2181"
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zk1:2888:3888 server.2=zk2:2888:3888 server.3=zk3:2888:3888

  zk3:
    image: zookeeper:3.4
    container_name: zk3
    networks:
      - elastic
    ports:
      - "21813:2181"
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zk1:2888:3888 server.2=zk2:2888:3888 server.3=zk3:2888:3888

  kafka1:
    image: wurstmeister/kafka
    restart: always
    container_name: kafka1
    ports:
      - 9092:9092
    environment:
      KAFKA_BROKER_ID: 1 # broker ID, unique per broker
      KAFKA_LISTENERS: PLAINTEXT://kafka1:9092 # listener used inside the Docker network
      #KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.102.137:9092 # listener advertised to external clients; replaces KAFKA_ADVERTISED_HOST_NAME and KAFKA_ADVERTISED_PORT; the IP is the host machine's IP
      KAFKA_ZOOKEEPER_CONNECT: zk1:2181,zk2:2181,zk3:2181
      KAFKA_DEFAULT_REPLICATION_FACTOR: 3 # default replica count per partition; usually matched to the number of brokers
      KAFKA_NUM_PARTITIONS: 3 # default partition count for new topics; usually matched to the number of brokers
      JMX_PORT: 9988 # enable JMX so Kafka can be monitored
    networks:
      - elastic

  kafka2:
    image: wurstmeister/kafka
    restart: always
    container_name: kafka2
    ports:
      - 9093:9092
    environment:
      KAFKA_BROKER_ID: 2 # broker ID, unique per broker
      KAFKA_LISTENERS: PLAINTEXT://kafka2:9092 # listener used inside the Docker network
      #KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.102.137:9093 # listener advertised to external clients; replaces KAFKA_ADVERTISED_HOST_NAME and KAFKA_ADVERTISED_PORT; the IP is the host machine's IP
      KAFKA_ZOOKEEPER_CONNECT: zk1:2181,zk2:2181,zk3:2181
      KAFKA_DEFAULT_REPLICATION_FACTOR: 3 # default replica count per partition; usually matched to the number of brokers
      KAFKA_NUM_PARTITIONS: 3 # default partition count for new topics; usually matched to the number of brokers
      JMX_PORT: 9988 # enable JMX so Kafka can be monitored
    networks:
      - elastic

  kafka3:
    image: wurstmeister/kafka
    restart: always
    container_name: kafka3
    ports:
      - 9094:9092
    environment:
      KAFKA_BROKER_ID: 3 # broker ID, unique per broker
      KAFKA_LISTENERS: PLAINTEXT://kafka3:9092 # listener used inside the Docker network
      #KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.102.137:9094 # listener advertised to external clients; replaces KAFKA_ADVERTISED_HOST_NAME and KAFKA_ADVERTISED_PORT; the IP is the host machine's IP
      KAFKA_ZOOKEEPER_CONNECT: zk1:2181,zk2:2181,zk3:2181
      KAFKA_DEFAULT_REPLICATION_FACTOR: 3 # default replica count per partition; usually matched to the number of brokers
      KAFKA_NUM_PARTITIONS: 3 # default partition count for new topics; usually matched to the number of brokers
      JMX_PORT: 9988 # enable JMX so Kafka can be monitored
    networks:
      - elastic

  kafka-manager:
    image: sheepkiller/kafka-manager:latest
    restart: always
    container_name: kafka-manager
    hostname: kafka-manager
    ports:
      - "9090:9000"
    environment:
      ZK_HOSTS: zk1:2181,zk2:2181,zk3:2181
      KAFKA_BROKERS: kafka1:9092,kafka2:9092,kafka3:9092
      APPLICATION_SECRET: letmein
      KM_ARGS: -Djava.net.preferIPv4Stack=true
    networks:
      - elastic

networks:
  elastic:
    driver: bridge
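
Before the first start it helps to create the host side of the bind mounts. Docker creates a missing bind-mount source as an empty directory, which is harmless for data folders but breaks single-file mounts such as filebeat.yml and vendor.js, and an empty config folder hides the defaults shipped in the image. Below is a minimal sketch that prepares only the folders that may start empty, assuming the `$HOME/docker/elk` layout used in the compose file. `prepare_elk_dirs` is a hypothetical helper name, and the config files still have to be copied in by hand.

```shell
# Create the host folders that are allowed to start empty.
# prepare_elk_dirs is a hypothetical helper; the root defaults to the
# $HOME/docker/elk layout assumed from the compose file.
prepare_elk_dirs() {
  root="${1:-$HOME/docker/elk}"
  for d in es/data1 es/data2 es/data3 filebeat/log; do
    mkdir -p "$root/$d"
  done
  # filebeat watches this file; create it so the input has a target.
  touch "$root/filebeat/log/a.log"
}
```

Calling `prepare_elk_dirs` with no argument uses `$HOME/docker/elk`; passing another root is handy for a dry run in a scratch folder.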

- III. Service configuration files

Filebeat

filebeat.yml

# Collect system logs
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      # Watched log file. Do not edit it with vim: vim rewrites the whole file, so every record is reloaded each time. For testing, append with: echo "xxx" >> a.log
      - /usr/share/filebeat/log/a.log
filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    reload.enabled: false
processors:
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
# output.elasticsearch:
#   hosts: 'es01:9200'
#   username: '${ELASTICSEARCH_USERNAME:}'
#   password: '${ELASTICSEARCH_PASSWORD:}'
#   indices:
#     - index: "filebeat"
output.kafka:
  hosts: ["kafka1:9092","kafka2:9092","kafka3:9092"]
  topic: logdata1 # Kafka topic to publish to
  required_acks: 1

Kibana

kibana.yml

#
# ** THIS IS AN AUTO-GENERATED FILE **
#
# Default Kibana configuration for docker target
server.name: kibana
server.host: "0"
elasticsearch.hosts: [ "http://es01:9200" ]
xpack.monitoring.ui.container.elasticsearch.enabled: true

Logstash

config/logstash.yml

http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

pipeline/logstash.conf

input {
  #beats {
  #  port => 5044
  #}
  #file {
  #  path => [ "/usr/share/logstash/log/a.log" ]
  #}
  #http {
  #  ssl => false
  #}
  kafka {
    bootstrap_servers => "kafka1:9092,kafka2:9092,kafka3:9092"
    group_id => "log"
    client_id => "logstash1"
    auto_offset_reset => "latest"
    topics => ["logdata1"]
    add_field => {"logs_type" => "vpn"}
    codec => json { charset => "UTF-8" }
  }
}
filter {
  mutate {
    # Remove these fields; otherwise indexing fails with:
    # failed to parse field [host] of type [text] in document with id 'U-RnbXgBYed8Q5MJhFRV'. Preview of field's value: '{name=fd688b2e3aff}'", "caused_by"=>{"type"=>"illegal_state_exception", "reason"=>"Can't get text on a START_OBJECT
    remove_field => ["@version","host","type"]
    #replace => {"message" => "%{[formatlog]}"} # rewrite message, keeping only the formatlog field of the JSON
  }
}
output {
  #stdout {
  #  codec => rubydebug
  #}
  elasticsearch {
    hosts => ["http://es01:9200"]
    index => "abc"
  }
  stdout {
    codec => json_lines
  }
}

Elasticsearch

elasticsearch.yml

cluster.name: "docker-cluster"
network.host: 0.0.0.0
# The settings below allow cross-origin (CORS) requests from any origin
http.cors.enabled: true
http.cors.allow-origin: "*"

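- IV. Usage and debugging

1. Start the containers

Run this in the directory that contains docker-compose.yml:

docker-compose up -d

Confirm that the services came up without errors:

docker ps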
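
`docker ps` only shows that the containers are running, not that the three Elasticsearch nodes formed a cluster. One way to check is the `_cluster/health` endpoint on the published port 9200. The sketch below pulls the `status` field out of the response with `sed`; the helper names `cluster_status` and `cluster_is_green` are hypothetical, and it assumes the response is the usual single-line JSON body.

```shell
# Print the "status" value (green, yellow or red) from a _cluster/health
# JSON body read on stdin. cluster_status is a hypothetical helper name.
cluster_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Succeed only when the body on stdin reports a green cluster.
cluster_is_green() {
  [ "$(cluster_status)" = "green" ]
}
```

Typical use on the host: `curl -s http://localhost:9200/_cluster/health | cluster_status`.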

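2. Append data to the log file /usr/share/filebeat/log/a.log (the path inside the filebeat container). To make debugging easier, its directory is mounted from the host, so the line can be appended on the host side:

echo "xxx" >> $HOME/docker/elk/filebeat/log/a.log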
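
For repeated tests it is convenient to wrap the append in a small function that also stamps each line, so individual events are easy to tell apart later in Kibana. This is only a sketch: `append_test_line` is a hypothetical helper name and the timestamp format is an arbitrary choice. Appending (rather than rewriting the file) leaves the existing content in place, so filebeat ships only the new line.

```shell
# Append one timestamped test line to a log file.
# append_test_line is a hypothetical helper name.
append_test_line() {
  file="$1"
  msg="$2"
  printf '%s %s\n' "$(date '+%Y-%m-%dT%H:%M:%S')" "$msg" >> "$file"
}
```

For example: `append_test_line "$HOME/docker/elk/filebeat/log/a.log" "hello elk"`.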

3. Check that the container logs look normal (replace xxx with a container name, for example logstash):

docker logs xxx

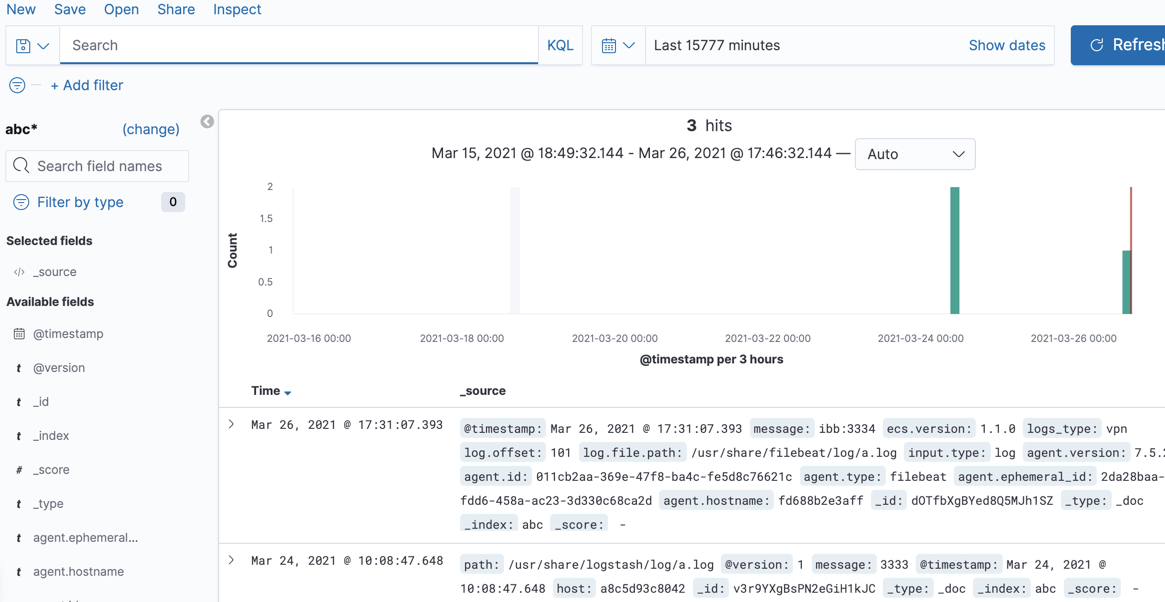

4. In Kibana, add an index pattern and view the data.
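
If Kibana finds no matching index, it is worth confirming that documents actually reached Elasticsearch. The pipeline above writes to the index `abc`, and the `_count` endpoint reports how many documents it holds. A rough sketch for reading that number from the response; `doc_count` is a hypothetical helper name, and it assumes the single-line JSON body that `_count` normally returns.

```shell
# Print the "count" value from a _count JSON body read on stdin.
# doc_count is a hypothetical helper name.
doc_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Typical use on the host: `curl -s http://localhost:9200/abc/_count | doc_count`.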

- V. Problems you may run into during deployment:

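Issue 1: after the three ES nodes start, one of them exits on its own, and docker ps occasionally hangs.

Fix:

1. Raise the memory available to Docker Desktop to 4 GB or more.

2. Raise vm.max_map_count on the host (Elasticsearch expects at least 262144).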
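
On a Linux host the second fix can be scripted. The sketch below compares the current value with 262144, the minimum given in the Elasticsearch documentation, and raises it only when needed. The function names are hypothetical, `sudo` is assumed to be available, and on Docker Desktop the setting has to be changed inside the Docker VM rather than on the host.

```shell
# Minimum vm.max_map_count documented for Elasticsearch.
REQUIRED_MAP_COUNT=262144

# Succeed when the given value is below the required minimum.
# map_count_too_low is a hypothetical helper name.
map_count_too_low() {
  [ "$1" -lt "$REQUIRED_MAP_COUNT" ]
}

# Raise the limit on a Linux host if it is too low (needs root).
# The change lasts until reboot unless it is also written to /etc/sysctl.conf.
raise_map_count_if_needed() {
  current="$(sysctl -n vm.max_map_count)"
  if map_count_too_low "$current"; then
    sudo sysctl -w "vm.max_map_count=$REQUIRED_MAP_COUNT"
  fi
}
```

Nothing runs until `raise_map_count_if_needed` is called, so the block can be sourced safely to inspect it first.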

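Issue 2: elasticsearch-head cross-origin (CORS) errors

Add the following to elasticsearch.yml:

# The settings below allow cross-origin (CORS) requests from any origin
http.cors.enabled: true
http.cors.allow-origin: "*"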

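Issue 3: Kibana version mismatch

After startup, opening Kibana shows the error "Kibana server is not ready yet". Fix: use a Kibana image with the same version number as Elasticsearch.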

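Issue 4: Logstash configuration

1. Disable the xpack settings in logstash.yml; that plugin is not used for now.

2. Put the pipeline definitions in the pipeline folder. Any config files found there are loaded, and there can be several. To run one specific .conf file instead, pass it with -f when starting Logstash.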

Issue 5: es-head reports 406 errors in the browser console

While debugging es-head, the browser console showed 406 errors. For the fix, see https://blog.csdn.net/catoop/article/details/103737698

Issue 6: configuring Kibana to view the data

Set a default index pattern in Kibana; the data for that index then shows up in Discover.