HDFS Java API Operations

Code: https://github.com/zengfa1988/study/blob/master/src/main/java/com/study/hadoop/hdfs/HdfsTest.java

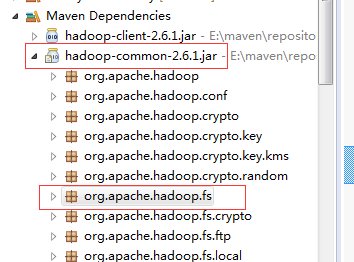

1. Import the JARs: build the project with Maven and add the following dependency to the pom file:

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.6.1</version>
</dependency>
```

For testing, JUnit can be added as well:

```xml
<dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>4.9</version>
</dependency>
```

2. Getting the file system

Hadoop's file system classes are mostly in the `org.apache.hadoop.fs` package.

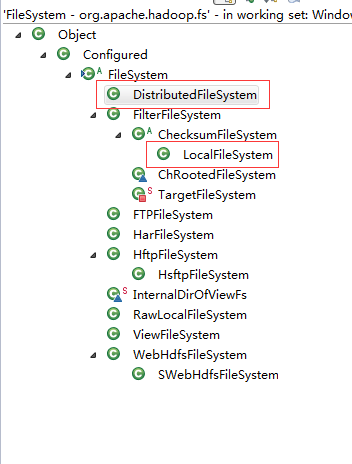

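All operations go through the abstract class `FileSystem`, but the actual work is done by a concrete implementation. The figure shows the implementations of `FileSystem`. The commonly used ones are `DistributedFileSystem` (the distributed file system, HDFS) and `LocalFileSystem` (the local file system).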

`FileSystem` has two static factory methods that return a concrete implementation:

```java
public static FileSystem get(Configuration conf) throws IOException

public static FileSystem get(URI uri, Configuration conf) throws IOException
```

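The first returns the default file system named by the `fs.defaultFS` setting. With no `core-site.xml` on the classpath that setting is `file:///`, so you get the local file system. The second picks the implementation from the URI's scheme, so an `hdfs://` URI returns the distributed file system.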

Example code:

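```java
// Imports for the whole test class (the methods in the following sections belong to it)
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

import org.apache.commons.io.IOUtils; // IOUtils.copy(InputStream, OutputStream) is from commons-io
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.junit.Before;
import org.junit.Test;

private static final String hdfsUrl = "hdfs://192.168.103.137:9000";
private FileSystem fs;

@Before
public void getFileSystem() throws Exception {
    Configuration conf = new Configuration();
    // With the hdfs:// URL: distributed file system, fs is a DistributedFileSystem
    // fs = FileSystem.get(new URI(hdfsUrl), conf);
    // Without a URL: the default file system; with no cluster config on the classpath, fs is a LocalFileSystem
    fs = FileSystem.get(conf);
}

@Test
public void pFileSystem() {
    System.out.println(fs);
    // Distributed file system prints: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1468464288_1, ugi=hadoop (auth:SIMPLE)]]
    // Local file system prints: org.apache.hadoop.fs.LocalFileSystem@675c2785
}
```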
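The following plain-Java model (no Hadoop dependency) makes the difference between the two factory methods concrete. It is deliberately simplified and is not Hadoop's implementation: the real lookup consults `fs.<scheme>.impl` settings and registered `FileSystem` providers. `FsSelectionSketch` and `selectImpl` are hypothetical names. The model encodes only three facts:

- `get(conf)` falls back to `fs.defaultFS`, whose built-in value is `file:///`.
- The `file` scheme maps to `LocalFileSystem`.
- The `hdfs` scheme maps to `DistributedFileSystem`.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified model of which class FileSystem.get returns.
// NOT Hadoop's code: the real lookup reads fs.<scheme>.impl and
// service-loader registrations; only the two default schemes are modeled.
final class FsSelectionSketch {

    // Built-in value of fs.defaultFS when no core-site.xml overrides it
    static final String DEFAULT_FS = "file:///";

    private static final Map<String, String> IMPL_BY_SCHEME = new HashMap<>();
    static {
        IMPL_BY_SCHEME.put("file", "org.apache.hadoop.fs.LocalFileSystem");
        IMPL_BY_SCHEME.put("hdfs", "org.apache.hadoop.hdfs.DistributedFileSystem");
    }

    // uri == null models FileSystem.get(conf); a non-null uri models
    // FileSystem.get(uri, conf). defaultFs stands for conf's fs.defaultFS.
    static String selectImpl(URI uri, String defaultFs) {
        URI effective = (uri != null) ? uri : URI.create(defaultFs);
        String impl = IMPL_BY_SCHEME.get(effective.getScheme());
        if (impl == null) {
            throw new IllegalArgumentException(
                    "scheme not modeled in this sketch: " + effective.getScheme());
        }
        return impl;
    }

    public static void main(String[] args) {
        URI cluster = URI.create("hdfs://192.168.103.137:9000");
        // get(conf) with no cluster configuration on the classpath
        System.out.println(selectImpl(null, DEFAULT_FS));         // org.apache.hadoop.fs.LocalFileSystem
        // get(new URI(hdfsUrl), conf)
        System.out.println(selectImpl(cluster, DEFAULT_FS));      // org.apache.hadoop.hdfs.DistributedFileSystem
        // get(conf) when fs.defaultFS points at the cluster
        System.out.println(selectImpl(null, cluster.toString())); // org.apache.hadoop.hdfs.DistributedFileSystem
    }
}
```

The third call shows why `FileSystem.get(conf)` can also return a `DistributedFileSystem`. The result depends only on what `fs.defaultFS` is set to, for example through a `core-site.xml` on the classpath or `conf.set("fs.defaultFS", ...)`.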

3. Creating a directory

Create directories with `public boolean mkdirs(Path f) throws IOException`.

```java
@Test
public void testMkdir() throws Exception {
    String path = "/user/test";
    fs.mkdirs(new Path(path));
}
```

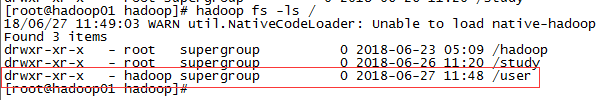

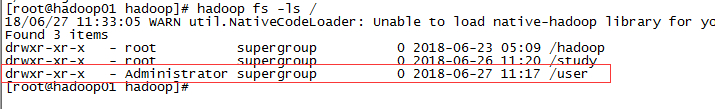

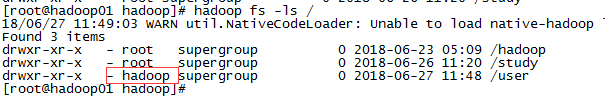

After running it, check the new directory on the server with the command `hadoop fs -ls /`.
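Hadoop documents `mkdirs` as having the semantics of Unix `mkdir -p`. It creates any missing parent directories, so `/user/test` works even when `/user` does not exist yet. The JDK's `java.nio.file.Files.createDirectories` behaves the same way on local disk. The sketch below is a local analogue rather than Hadoop code, useful for trying the semantics without a cluster. `MkdirsAnalogue` is a hypothetical helper name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Local-disk analogue of FileSystem.mkdirs using java.nio (not Hadoop):
// missing parent directories are created too, like `mkdir -p`.
final class MkdirsAnalogue {

    // Creates base/relative plus any missing intermediate directories.
    // Succeeds without error if the directory already exists.
    static Path mkdirs(Path base, String relative) throws IOException {
        return Files.createDirectories(base.resolve(relative));
    }

    public static void main(String[] args) throws IOException {
        Path base = Files.createTempDirectory("mkdirs-demo");
        Path created = mkdirs(base, "user/test");
        System.out.println(Files.isDirectory(created));             // true
        System.out.println(Files.isDirectory(created.getParent())); // true: "user" was created as well
    }
}
```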

4. Uploading files

1) Uploading with copyFromLocalFile

```java
@Test
public void testUpFile() throws Exception {
    String localPathStr = "E:\\study\\hadoop\\test.txt";
    String remotePathStr = "/user/test/";
    fs.copyFromLocalFile(new Path(localPathStr), new Path(remotePathStr));
}
```

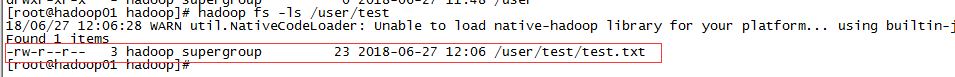

After uploading, check the file with `hadoop fs -ls /user/test`.

2) Uploading with input/output streams

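```java
@Test
public void testUpFile2() throws Exception {
    String localPathStr = "E:\\study\\hadoop\\test.txt";
    String remotePathStr = "/user/test/test2.txt";
    // try-with-resources closes both streams even if the copy fails; data written
    // through fs.create(...) may not be complete in HDFS until the stream is closed.
    // The local file is opened first so a missing file does not leave an empty remote file.
    try (InputStream input = new FileInputStream(new File(localPathStr));
         OutputStream out = fs.create(new Path(remotePathStr))) {
        int length = IOUtils.copy(input, out); // commons-io; returns the number of bytes copied
        System.out.println(length);
    }
}
```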
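The streaming upload above, and the streaming download in the next section, hand the transfer to commons-io `IOUtils.copy`. That method reads into a buffer and writes it out until end of stream. It does not close either stream, so closing them is the caller's job. The sketch below shows that loop in plain Java. The class name `StreamCopySketch` and the 4 KB buffer size are arbitrary choices for illustration. Unlike commons-io's `int` result, this version returns a `long` so large files cannot overflow the count.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Sketch of a buffered stream copy, the kind of loop IOUtils.copy performs.
// Not the commons-io source; it never closes the streams it is given.
final class StreamCopySketch {

    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[4096];
        long total = 0;
        int n;
        // read() returns -1 once the end of the stream is reached
        while ((n = in.read(buffer)) != -1) {
            out.write(buffer, 0, n);
            total += n;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        // In the tests, in/out would be a FileInputStream and fs.create(...),
        // or fs.open(...) and a FileOutputStream
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            System.out.println(copy(in, out));                                          // 10
            System.out.println(new String(out.toByteArray(), StandardCharsets.UTF_8)); // hello hdfs
        }
    }
}
```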

5. Downloading files

1) Downloading with copyToLocalFile

```java
@Test
public void testDownFile() throws Exception {
    String localPathStr = "E:\\study\\hadoop";
    String remotePathStr = "/user/test/test.txt";
    fs.copyToLocalFile(new Path(remotePathStr), new Path(localPathStr));
}
```

Delete the local copy before running the test. After it runs, the file is back in the local directory.

2) Downloading with input/output streams

```java
@Test
public void testDownFile2() throws Exception {
    String localPathStr = "E:\\study\\hadoop\\test.txt";
    String remotePathStr = "/user/test/test2.txt";
    // try-with-resources closes both streams even if the copy fails
    try (InputStream input = fs.open(new Path(remotePathStr));
         OutputStream out = new FileOutputStream(new File(localPathStr))) {
        int length = IOUtils.copy(input, out); // commons-io; returns the number of bytes copied
        System.out.println(length);
    }
}
```

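6. Listing files and directories

listFiles(final Path f, final boolean recursive): lists files only; directories are not returned. With recursive set to false it returns the files directly under f. With true it also returns the files in all subdirectories.

listStatus(Path f): lists the files and directories directly under f (non-recursive).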

```java
/**
 * Lists files (directories themselves are not returned).
 * @throws Exception
 */
@Test
public void testListFile() throws Exception {
    String remotePathStr = "/";
    Path path = new Path(remotePathStr);
    // recursive = false: only files directly under path; true: also files in all subdirectories
    RemoteIterator<LocatedFileStatus> it = fs.listFiles(path, true);
    while (it.hasNext()) {
        LocatedFileStatus locatedFileStatus = it.next();
        System.out.println("path:" + locatedFileStatus.getPath().toString());
        System.out.println("fileName:" + locatedFileStatus.getPath().getName());
        System.out.println("replication:" + locatedFileStatus.getReplication());
        // Always false here, because listFiles only returns files
        System.out.println("isDirectory:" + locatedFileStatus.isDirectory());
        System.out.println("--------------------------------------------");
    }
}
```

```java
/**
 * Lists files and directories directly under the given path.
 * @throws Exception
 */
@Test
public void testListPath() throws Exception {
    String remotePathStr = "/";
    Path path = new Path(remotePathStr);
    FileStatus[] list = fs.listStatus(path);
    for (FileStatus fileStatus : list) {
        System.out.println("path:" + fileStatus.getPath().toString());
        System.out.println("fileName:" + fileStatus.getPath().getName());
        System.out.println("isDirectory:" + fileStatus.isDirectory());
        System.out.println("--------------------------------------------");
    }
}
```
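The difference between the two calls can be reproduced on local disk with `java.nio.file`:

- `Files.list` plays the role of `listStatus`: one level, files and directories.
- `Files.walk` filtered to regular files plays the role of `listFiles`: files only, with the walk depth standing in for `recursive`.

This is an analogue for experimenting without a cluster, not Hadoop code. `ListingAnalogue` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local-disk analogue of the two HDFS listing calls (java.nio, not Hadoop).
final class ListingAnalogue {

    // Path of p relative to dir, with '/' separators on every OS
    private static String rel(Path dir, Path p) {
        return dir.relativize(p).toString().replace('\\', '/');
    }

    // Like listStatus(dir): files AND directories directly under dir
    static List<String> listStatus(Path dir) throws IOException {
        try (Stream<Path> s = Files.list(dir)) {
            return s.map(p -> rel(dir, p)).sorted().collect(Collectors.toList());
        }
    }

    // Like listFiles(dir, recursive): regular files only; depth 1 = direct children
    static List<String> listFiles(Path dir, boolean recursive) throws IOException {
        int depth = recursive ? Integer.MAX_VALUE : 1;
        try (Stream<Path> s = Files.walk(dir, depth)) {
            return s.filter(Files::isRegularFile)
                    .map(p -> rel(dir, p))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // Tree: root/a.txt and root/sub/b.txt
        Path root = Files.createTempDirectory("listing-demo");
        Files.createFile(root.resolve("a.txt"));
        Files.createDirectories(root.resolve("sub"));
        Files.createFile(root.resolve("sub").resolve("b.txt"));

        System.out.println(listStatus(root));       // [a.txt, sub]
        System.out.println(listFiles(root, false)); // [a.txt]
        System.out.println(listFiles(root, true));  // [a.txt, sub/b.txt]
    }
}
```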

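7. Deleting files or directories

delete(Path f, boolean recursive): to delete a non-empty directory, recursive must be true, and then everything under it is deleted as well. With recursive set to false, deleting a non-empty directory fails with an exception.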

```java
@Test
public void testDelDir() throws Exception {
    String remotePathStr = "/user";
    boolean result = fs.delete(new Path(remotePathStr), true);
    System.out.println(result);
}
```

After it runs, `/user` itself and everything under it (subdirectories and files) are deleted.
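Recursive deletion has to remove children before their parent directory. The sketch below shows this on local disk with `java.nio.file`. It is an analogue, not Hadoop code, and `DeleteAnalogue` is a hypothetical name. The non-recursive branch refuses a non-empty directory, and the recursive branch deletes the subtree deepest-first. With HDFS the client does not walk the tree itself: `fs.delete(path, true)` is a single request, and the NameNode removes the subtree.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local-disk analogue of delete(path, recursive) using java.nio (not Hadoop).
final class DeleteAnalogue {

    static void delete(Path path, boolean recursive) throws IOException {
        if (!recursive) {
            // Works for a file or an empty directory; a non-empty directory makes
            // Files.delete throw an IOException (typically DirectoryNotEmptyException)
            Files.delete(path);
            return;
        }
        List<Path> all;
        try (Stream<Path> s = Files.walk(path)) {
            // A descendant's path always sorts after its ancestor's, so reverse
            // order deletes every child before the directory that contains it
            all = s.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : all) {
            Files.delete(p);
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Files.createTempDirectory("delete-demo");
        Path user = root.resolve("user");
        Files.createDirectories(user.resolve("test"));
        Files.createFile(user.resolve("test").resolve("test.txt"));

        try {
            delete(user, false);
        } catch (IOException e) {
            System.out.println("non-recursive delete failed: " + e);
        }
        delete(user, true);
        System.out.println(Files.exists(user)); // false
    }
}
```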

8. Checking whether a file or directory exists

```java
@Test
public void testExist() throws Exception {
    String remotePathStr = "/study";
    boolean result = fs.exists(new Path(remotePathStr));
    System.out.println(result);
}
```

9. Problems

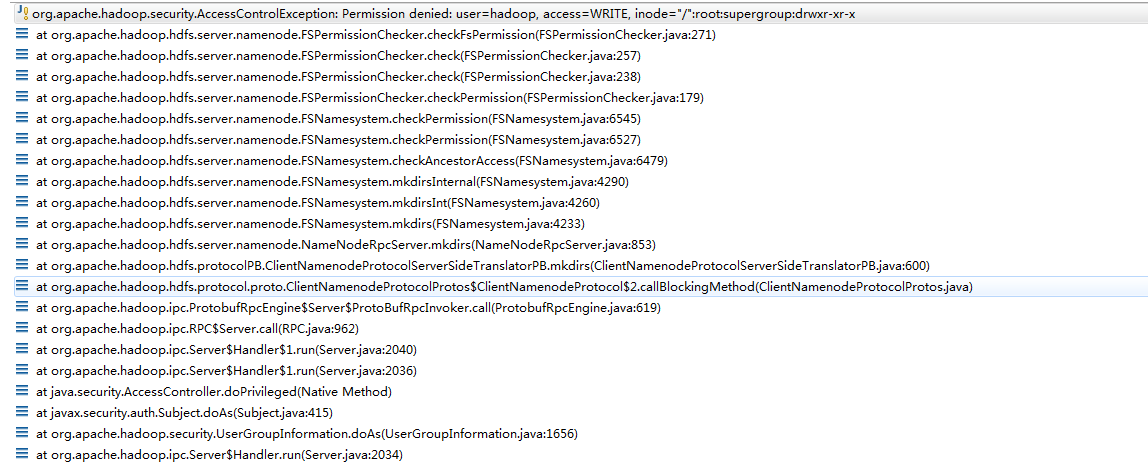

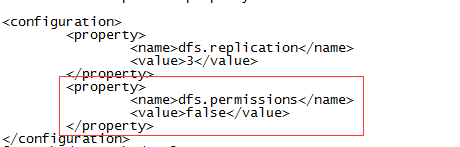

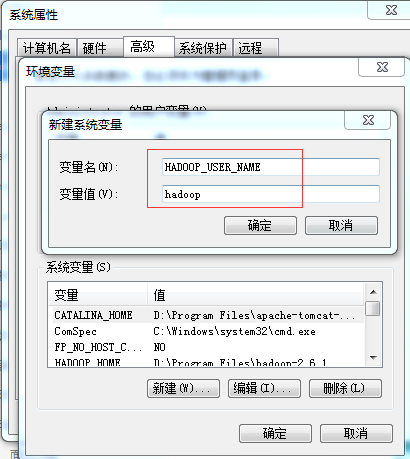

1) Operations through FileSystem may fail with the following error:

org.apache.hadoop.security.AccessControlException: Permission denied: user=hadoop, access=WRITE, inode="/":root:supergroup:drwxr-xr-x
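The error says that user `hadoop` requested WRITE access on `/`, which is owned by `root` (group `supergroup`) with mode `drwxr-xr-x`. HDFS checks permissions in a POSIX-like way, testing exactly one set of bits:

- If the caller is the owner, the owner bits are tested.
- Otherwise, if the caller belongs to the file's group, the group bits are tested.
- Otherwise, the "other" bits are tested.

The sketch below models that rule. It is simplified: the superuser bypass and ACLs are left out, and `PermissionSketch.isAllowed` is a hypothetical helper. It shows why the request is refused: `hadoop` falls into "other", whose bits are `r-x`.

```java
import java.util.Collections;
import java.util.Set;

// Simplified model of the HDFS permission check: exactly one class of bits
// is tested (owner, else group, else other). Superuser bypass and ACLs omitted.
final class PermissionSketch {

    // mode is the 10-character form from the error, e.g. "drwxr-xr-x";
    // access is 'r', 'w' or 'x'.
    static boolean isAllowed(String user, Set<String> userGroups,
                             String owner, String group,
                             String mode, char access) {
        int bit = "rwx".indexOf(access);
        if (bit < 0 || mode.length() != 10) {
            throw new IllegalArgumentException("bad access or mode");
        }
        int offset;
        if (user.equals(owner)) {
            offset = 1;                 // characters 1-3: owner bits
        } else if (userGroups.contains(group)) {
            offset = 4;                 // characters 4-6: group bits
        } else {
            offset = 7;                 // characters 7-9: other bits
        }
        return mode.charAt(offset + bit) == access;
    }

    public static void main(String[] args) {
        // The failing request: user=hadoop, access=WRITE, inode="/":root:supergroup:drwxr-xr-x
        // (hadoop's group list is assumed to be just "hadoop")
        System.out.println(isAllowed("hadoop", Collections.singleton("hadoop"),
                "root", "supergroup", "drwxr-xr-x", 'w')); // false: "other" bits are r-x
        // The same request made by the owner
        System.out.println(isAllowed("root", Collections.singleton("root"),
                "root", "supergroup", "drwxr-xr-x", 'w')); // true
    }
}
```

There are two common ways around the error. The client can act as the owning user, for example through the `FileSystem.get(URI uri, Configuration conf, String user)` overload. Alternatively, write access can be granted on the server with `hadoop fs -chmod`.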

