#ifndef PCH_H

#define PCH_H

extern "C"

{

#include "libavutil/opt.h"

#include "libavutil/channel_layout.h"

#include "libavutil/common.h"

#include "libavutil/imgutils.h"

#include "libavutil/mathematics.h"

#include "libavutil/samplefmt.h"

#include "libavutil/time.h"

#include "libavutil/fifo.h"

#include "libavcodec/avcodec.h"

#include "libavcodec/qsv.h"

#include "libavformat/avformat.h"

// #include "libavformat/url.h"

#include "libavformat/avio.h"

// #include "libavfilter/avcodec.h"

//#include "libavfilter/avfiltergraph.h"

#include "libavfilter/avfilter.h"

#include "libavfilter/buffersink.h"

#include "libavfilter/buffersrc.h"

#include "libswscale/swscale.h"

#include "libswresample/swresample.h"

#include "libavdevice/avdevice.h"

}

#endif

pch.h

// Transcode.cpp : Defines the entry point for the console application.

//

/* Copyright [c] 2016-2026 By www.chungen90.com All rights reserved.

This file shows how to overlay an image on a video. If you have any

questions, you can join the QQ group for help. QQ group number:

127903734 or 766718184.

*/

//#include "stdafx.h"

#include "pch.h"

#include <string>

#include <iostream>

#include <thread>

#include <memory>

#include <fstream>

#include <Winsock2.h>

#include <condition_variable>

#include <concurrent_queue.h>

#include <chrono>

using namespace std;

AVFormatContext * context[2];// nullptr;

AVFormatContext* outputContext = nullptr;

int64_t lastPts = 0;

int64_t lastDts = 0;

int64_t lastFrameRealtime = 0;

int64_t firstPts = AV_NOPTS_VALUE;

int64_t startTime = 0;

AVCodecContext* outPutEncContext = NULL;

AVCodecContext *decoderContext[2];

#define SrcWidth 1920

#define SrcHeight 1080

#define DstWidth 640

#define DstHeight 480

struct SwsContext* pSwsContext;

const char *filter_descr = "overlay=100:100";

AVFilterInOut *inputs;

AVFilterInOut *outputs;

AVFilterGraph * filter_graph = nullptr;

AVFilterContext *inputFilterContext[2];

AVFilterContext *outputFilterContext = nullptr;

int interrupt_cb(void *ctx)

{

return 0;

}

string GetLocalIpAddress()

{

WORD wVersionRequested = MAKEWORD(2, 2);

WSADATA wsaData;

if (WSAStartup(wVersionRequested, &wsaData) != 0)

return "";

char local[255] = {0};

gethostname(local, sizeof(local));

hostent* ph = gethostbyname(local);

if (ph == NULL)

{

WSACleanup(); // balance the successful WSAStartup before returning

return "";

}

in_addr addr;

memcpy(&addr, ph->h_addr_list[0], sizeof(in_addr)); // only the first IP address is used here

string localIP;

localIP.assign(inet_ntoa(addr));

WSACleanup();

return localIP;

}

void Init()

{

av_register_all();

avfilter_register_all();

avformat_network_init();

//avdevice_register_all();

av_log_set_level(AV_LOG_ERROR);

}

int OpenInput(char *fileName,int inputIndex)

{

context[inputIndex] = avformat_alloc_context();

context[inputIndex]->interrupt_callback.callback = interrupt_cb;

AVDictionary *format_opts = nullptr;

int ret = avformat_open_input(&context[inputIndex], fileName, nullptr, &format_opts);

if(ret < 0)

{

return ret;

}

ret = avformat_find_stream_info(context[inputIndex],nullptr);

av_dump_format(context[inputIndex], 0, fileName, 0);

if(ret >= 0)

{

std::cout <<"open input stream successfully" << endl;

}

return ret;

}

shared_ptr<AVPacket> ReadPacketFromSource(int inputIndex)

{

std::shared_ptr<AVPacket> packet(static_cast<AVPacket*>(av_malloc(sizeof(AVPacket))), [&](AVPacket *p) { av_packet_free(&p); av_freep(&p); });

av_init_packet(packet.get());

int ret = av_read_frame(context[inputIndex], packet.get());

if(ret >= 0)

{

return packet;

}

else

{

return nullptr;

}

}

int OpenOutput(char *fileName,int inputIndex)

{

int ret = 0;

ret = avformat_alloc_output_context2(&outputContext, nullptr, "mpegts", fileName);

if(ret < 0)

{

goto Error;

}

ret = avio_open2(&outputContext->pb, fileName, AVIO_FLAG_READ_WRITE,nullptr, nullptr);

if(ret < 0)

{

goto Error;

}

for(int i = 0; i < context[inputIndex]->nb_streams; i++)

{

AVStream * stream = avformat_new_stream(outputContext, outPutEncContext->codec);

if(!stream)

{

ret = AVERROR(ENOMEM);

goto Error;

}

stream->codec = outPutEncContext;

}

av_dump_format(outputContext, 0, fileName, 1);

ret = avformat_write_header(outputContext, nullptr);

if(ret < 0)

{

goto Error;

}

if(ret >= 0)

cout <<"open output stream successfully" << endl;

return ret ;

Error:

if(outputContext)

{

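// An output context is released with avformat_free_context, not avformat_close_input.

if(outputContext->pb) avio_closep(&outputContext->pb);

avformat_free_context(outputContext);

outputContext = nullptr;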

}

return ret ;

}

void CloseInput(int inputIndex)

{

if(context[inputIndex] != nullptr)

{

avformat_close_input(&context[inputIndex]);

}

}

void CloseOutput()

{

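if(outputContext != nullptr)

{

// Flush buffered packets and finalize the output before closing the encoder.

av_write_trailer(outputContext);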

for(int i = 0 ; i < outputContext->nb_streams; i++)

{

AVCodecContext *codecContext = outputContext->streams[i]->codec;

avcodec_close(codecContext);

}

//avformat_close_input(&outputContext);

}

}

int InitEncoderCodec( int iWidth, int iHeight,int inputIndex)

{

AVCodec * pH264Codec = avcodec_find_encoder(AV_CODEC_ID_H264);

if(NULL == pH264Codec)

{

printf("%s", "avcodec_find_encoder failed");

return -1;

}

outPutEncContext = avcodec_alloc_context3(pH264Codec);

outPutEncContext->gop_size = 30;

outPutEncContext->has_b_frames = 0;

outPutEncContext->max_b_frames = 0;

outPutEncContext->codec_id = pH264Codec->id;

outPutEncContext->time_base.num =context[inputIndex]->streams[0]->codec->time_base.num;

outPutEncContext->time_base.den = context[inputIndex]->streams[0]->codec->time_base.den;

outPutEncContext->pix_fmt = *pH264Codec->pix_fmts;

outPutEncContext->width = iWidth;

outPutEncContext->height = iHeight;

outPutEncContext->me_subpel_quality = 0;

outPutEncContext->refs = 1;

outPutEncContext->scenechange_threshold = 0;

outPutEncContext->trellis = 0;

AVDictionary *options = nullptr;

outPutEncContext->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

int ret = avcodec_open2(outPutEncContext, pH264Codec, &options);

if (ret < 0)

{

printf("%s", "open codec failed");

return ret;

}

return 1;

}

int InitDecodeCodec(AVCodecID codecId,int inputIndex)

{

auto codec = avcodec_find_decoder(codecId);

if(!codec)

{

return -1;

}

decoderContext[inputIndex] = context[inputIndex]->streams[0]->codec;

if (!decoderContext[inputIndex]) {

fprintf(stderr, "Could not allocate video codec context\n");

exit(1);

}

int ret = avcodec_open2(decoderContext[inputIndex], codec, NULL);

return ret;

}

bool DecodeVideo(AVPacket* packet, AVFrame* frame,int inputIndex)

{

int gotFrame = 0;

auto hr = avcodec_decode_video2(decoderContext[inputIndex], frame, &gotFrame, packet);

if(hr >= 0 && gotFrame != 0)

{

frame->pts = packet->pts;

return true;

}

return false;

}

int InitInputFilter(AVFilterInOut *input,const char *filterName, int inputIndex)

{

char args[512];

memset(args,0, sizeof(args));

AVFilterContext *padFilterContext = input->filter_ctx;

auto filter = avfilter_get_by_name("buffer");

auto codecContext = context[inputIndex]->streams[0]->codec;

sprintf_s(args, sizeof(args),

"video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",

codecContext->width, codecContext->height, codecContext->pix_fmt,

codecContext->time_base.num, codecContext->time_base.den /codecContext->ticks_per_frame,

codecContext->sample_aspect_ratio.num, codecContext->sample_aspect_ratio.den);

int ret = avfilter_graph_create_filter(&inputFilterContext[inputIndex],filter,filterName, args,

NULL, filter_graph);

if(ret < 0) return ret;

ret = avfilter_link(inputFilterContext[inputIndex],0,padFilterContext,input->pad_idx);

return ret;

}

int InitOutputFilter(AVFilterInOut *output,const char *filterName)

{

AVFilterContext *padFilterContext = output->filter_ctx;

auto filter = avfilter_get_by_name("buffersink");

int ret = avfilter_graph_create_filter(&outputFilterContext,filter,filterName, NULL,

NULL, filter_graph);

if(ret < 0) return ret;

ret = avfilter_link(padFilterContext,output->pad_idx,outputFilterContext,0);

return ret;

}

void FreeInout()

{

avfilter_inout_free(&inputs->next);

avfilter_inout_free(&inputs);

avfilter_inout_free(&outputs);

}

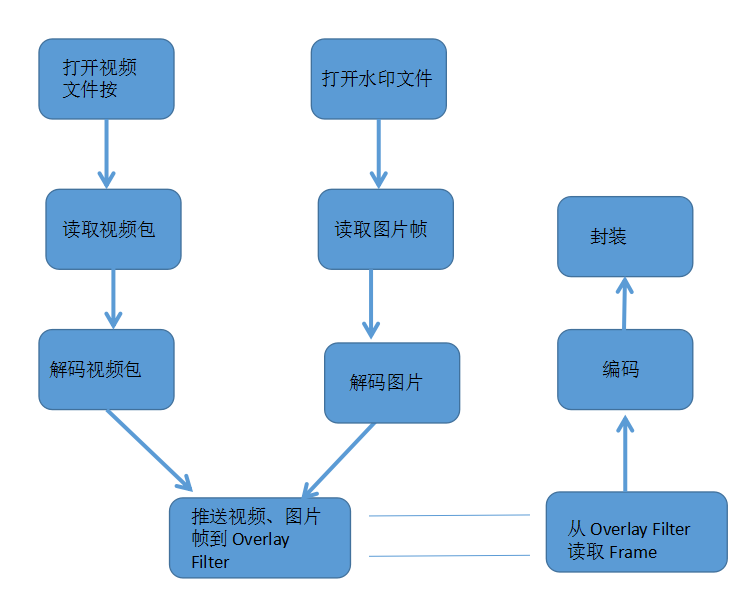

int main(int argc, char* argv[])

{

//string fileInput = "D:\\test11.ts";

string fileInput[2];

fileInput[0]= "D:\\test11.ts";

fileInput[1]= "D:\\liuchanghai.jpg";

string fileOutput = "D:\\draw.ts";

std::thread decodeTask;

Init();

int ret = 0;

for(int i = 0; i < 2;i++)

{

if(OpenInput((char *)fileInput[i].c_str(),i) < 0)

{

cout << "Open file Input " << i << " failed!" << endl;

this_thread::sleep_for(chrono::seconds(10));

return 0;

}

ret = InitDecodeCodec(context[i]->streams[0]->codec->codec_id,i);

if(ret <0)

{

cout << "InitDecodeCodec failed!" << endl;

this_thread::sleep_for(chrono::seconds(10));

return 0;

}

}

ret = InitEncoderCodec(decoderContext[0]->width,decoderContext[0]->height,0);

if(ret < 0)

{

cout << "open encoder failed ret is " << ret<<endl;

cout << "InitEncoderCodec failed!" << endl;

this_thread::sleep_for(chrono::seconds(10));

return 0;

}

//ret = InitFilter(outPutEncContext);

if(OpenOutput((char *)fileOutput.c_str(),0) < 0)

{

cout << "Open file Output failed!" << endl;

this_thread::sleep_for(chrono::seconds(10));

return 0;

}

AVFrame *pSrcFrame[2];

pSrcFrame[0] = av_frame_alloc();

pSrcFrame[1] = av_frame_alloc();

AVFrame *inputFrame[2];

inputFrame[0] = av_frame_alloc();

inputFrame[1] = av_frame_alloc();

auto filterFrame = av_frame_alloc();

int got_output = 0;

int64_t timeRecord = 0;

int64_t firstPacketTime = 0;

int64_t outLastTime = av_gettime();

int64_t inLastTime = av_gettime();

int64_t videoCount = 0;

filter_graph = avfilter_graph_alloc();

if(!filter_graph)

{

cout <<"graph alloc failed"<<endl;

goto End;

}

ret = avfilter_graph_parse2(filter_graph, filter_descr, &inputs, &outputs);

if(ret < 0)

{

cout <<"graph parse failed"<<endl;

goto End;

}

InitInputFilter(inputs,"MainFrame",0);

InitInputFilter(inputs->next,"OverlayFrame",1);

InitOutputFilter(outputs,"output");

FreeInout();

ret = avfilter_graph_config(filter_graph, NULL);

if(ret < 0)

{

goto End;

}

decodeTask = thread([&]{

// Read from the image input until one frame is decoded or the input ends.

while(true)

{

auto packet = ReadPacketFromSource(1);

if(!packet) break;

if(DecodeVideo(packet.get(),pSrcFrame[1],1)) break;

}

});

decodeTask.join();

while(true)

{

outLastTime = av_gettime();

auto packet = ReadPacketFromSource(0);

if(packet)

{

if(DecodeVideo(packet.get(),pSrcFrame[0],0))

{

av_frame_ref( inputFrame[0],pSrcFrame[0]);

if (av_buffersrc_add_frame_flags(inputFilterContext[0], inputFrame[0], AV_BUFFERSRC_FLAG_PUSH) >= 0)

{

pSrcFrame[1]->pts = pSrcFrame[0]->pts;

//av_frame_ref( inputFrame[1],pSrcFrame[1]);

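// KEEP_REF: without it the buffer source takes ownership of pSrcFrame[1] and resets it after the first push.

if(av_buffersrc_add_frame_flags(inputFilterContext[1],pSrcFrame[1], AV_BUFFERSRC_FLAG_PUSH | AV_BUFFERSRC_FLAG_KEEP_REF) >= 0)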

{

ret = av_buffersink_get_frame_flags(outputFilterContext, filterFrame,AV_BUFFERSINK_FLAG_NO_REQUEST);

this_thread::sleep_for(chrono::milliseconds(10));

if ( ret >= 0)

{

std::shared_ptr<AVPacket> pTmpPkt(static_cast<AVPacket*>(av_malloc(sizeof(AVPacket))), [&](AVPacket *p) { av_packet_free(&p); av_freep(&p); });

av_init_packet(pTmpPkt.get());

pTmpPkt->data = NULL;

pTmpPkt->size = 0;

ret = avcodec_encode_video2(outPutEncContext, pTmpPkt.get(), filterFrame, &got_output);

if (ret >= 0 && got_output)

{

int ret = av_write_frame(outputContext, pTmpPkt.get());

}

//this_thread::sleep_for(chrono::milliseconds(10));

}

av_frame_unref(filterFrame);

}

}

}

}

else break;

}

CloseInput(0);

CloseInput(1);

CloseOutput();

std::cout <<"Transcode file end!" << endl;

End:

this_thread::sleep_for(chrono::hours(10));

return 0;

}
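
The listing above hands two strings to libavfilter: the graph description `overlay=100:100`, and the `buffer` source arguments that `InitInputFilter` formats with `sprintf_s`. In `overlay=x:y`, `x` and `y` are the position of the overlay's top-left corner on the main video. The sketch below only illustrates how those two strings are put together. `BuildOverlayDescr` and `BuildBufferArgs` are hypothetical helper names that are not part of the original program, and `std::snprintf` is used here as a portable stand-in for the MSVC-specific `sprintf_s`.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper (not in the original listing): builds the graph
// description for an overlay placed at pixel position (x, y).
std::string BuildOverlayDescr(int x, int y)
{
    char buf[64];
    std::snprintf(buf, sizeof(buf), "overlay=%d:%d", x, y);
    return std::string(buf);
}

// Hypothetical helper (not in the original listing): builds the argument
// string for a "buffer" source, using the same format string as
// InitInputFilter. pixFmt is the numeric pixel format value.
std::string BuildBufferArgs(int width, int height, int pixFmt,
                            int tbNum, int tbDen, int sarNum, int sarDen)
{
    // A buffer source needs a positive frame size and a valid time base.
    assert(width > 0 && height > 0 && tbDen > 0);

    char buf[256];
    std::snprintf(buf, sizeof(buf),
                  "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                  width, height, pixFmt, tbNum, tbDen, sarNum, sarDen);
    return std::string(buf);
}
```

Under these assumptions, the result of `BuildOverlayDescr(100, 100)` could be kept in a `std::string` and passed to `avfilter_graph_parse2` through `c_str()`, which would let the overlay position be chosen at run time instead of being fixed in `filter_descr`.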
