10-K8S: Pod network plugins Flannel and Canal (network policy)

Configuring the Flannel network plugin

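Docker's four network models:

- bridge: each container gets its own network namespace, attached to a bridge.
- joined: the container shares the network namespace of another container.
- host (open): the container shares the host's network namespace.
- none (closed): the container gets a network namespace with no network configured (loopback only).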

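Communication types in a Kubernetes cluster:

1. Container to container within the same pod: over lo (loopback).
2. Pod to pod: Pod IP <--> Pod IP.
3. Pod to Service: Pod IP <--> Cluster IP.
4. Service to clients outside the cluster.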

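CNI (Container Network Interface) plugins:

- flannel: address allocation and network management.
- calico: address allocation, network management and network policy.
- canal (flannel for networking plus Calico for network policy).
- kube-router
- ...

Implementation approaches:

- virtual bridge
- multiplexing: MacVLAN
- hardware switching: SR-IOV

kubelet reads the CNI configuration from /etc/cni/net.d/.

flannel supports several backends:

- VxLAN, in two modes:
  1. vxlan: pod traffic between nodes is encapsulated in VXLAN packets (an overlay on top of the underlay network).
  2. DirectRouting: when two nodes are on the same subnet, pod-to-pod traffic between them is sent over direct routes instead of being encapsulated in the overlay; VXLAN is still used for nodes on different subnets.
- host-gw: Host Gateway
- UDP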
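To make the DirectRouting decision concrete, here is a simplified Python model (not flannel's code). flannel's documentation describes DirectRouting, enabled with `"DirectRouting": true` in the vxlan `Backend` section of net-conf.json, as using direct routes (like host-gw) when hosts are on the same subnet and VXLAN only for hosts on different subnets. The function `choose_path`, its `node_subnet` parameter and the 192.168.x.x node addresses are hypothetical.

```python
import ipaddress


def choose_path(local_node_ip: str, peer_node_ip: str, node_subnet: str,
                direct_routing: bool = True) -> str:
    """Simplified model of how flannel's vxlan backend reaches a peer node.

    Hypothetical helper: node_subnet stands in for the subnet the nodes sit on.
    """
    subnet = ipaddress.ip_network(node_subnet)
    same_subnet = (ipaddress.ip_address(local_node_ip) in subnet
                   and ipaddress.ip_address(peer_node_ip) in subnet)
    if direct_routing and same_subnet:
        # DirectRouting: plain route to the peer's pod subnet via the peer node IP (host-gw style)
        return "direct-route"
    # Otherwise the pod packet is wrapped in VXLAN (UDP) and sent across the underlay
    return "vxlan"


print(choose_path("192.168.1.10", "192.168.1.11", "192.168.1.0/24"))  # direct-route
print(choose_path("192.168.1.10", "192.168.2.20", "192.168.1.0/24"))  # vxlan
print(choose_path("192.168.1.10", "192.168.1.11", "192.168.1.0/24",
                  direct_routing=False))                             # vxlan
```

The trade-off this illustrates: direct routes avoid encapsulation overhead but only work when nodes can reach each other without a router in between, so the overlay remains the fallback.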

Viewing the flannel configuration file

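A configuration file for the container network plugin is generated automatically under /etc/cni/net.d, and kubelet uses it when invoking the plugin. For example, with flannel: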

    cd /etc/cni/net.d/
    cat 10-flannel.conflist
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
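A .conflist file describes a chain of CNI plugins that are invoked in list order when a pod's network is set up: here flannel first, then portmap (which implements hostPort mappings). The short Python sketch below only parses the 10-flannel.conflist content shown above and returns that chain; the helper name `plugin_chain` is hypothetical.

```python
import json

# Content of /etc/cni/net.d/10-flannel.conflist as printed above
CONFLIST = """
{ "name": "cbr0", "cniVersion": "0.3.1",
  "plugins": [
    { "type": "flannel",
      "delegate": { "hairpinMode": true, "isDefaultGateway": true } },
    { "type": "portmap", "capabilities": { "portMappings": true } } ] }
"""


def plugin_chain(conflist_text: str) -> list:
    """Return the plugin types of a CNI .conflist in the order they are chained."""
    conf = json.loads(conflist_text)
    return [plugin["type"] for plugin in conf["plugins"]]


print(plugin_chain(CONFLIST))  # ['flannel', 'portmap']
```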

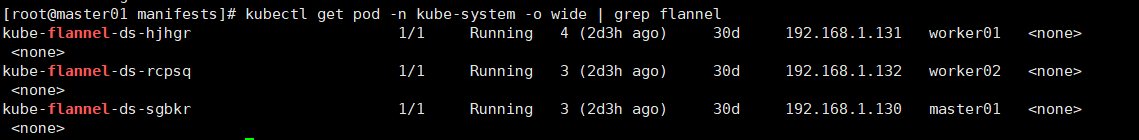

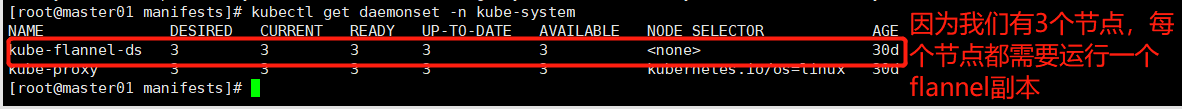

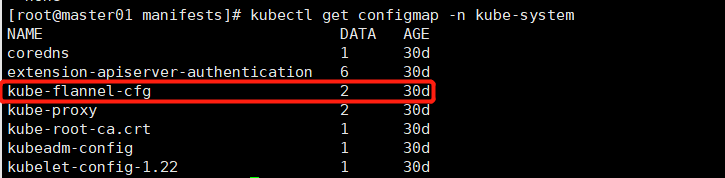

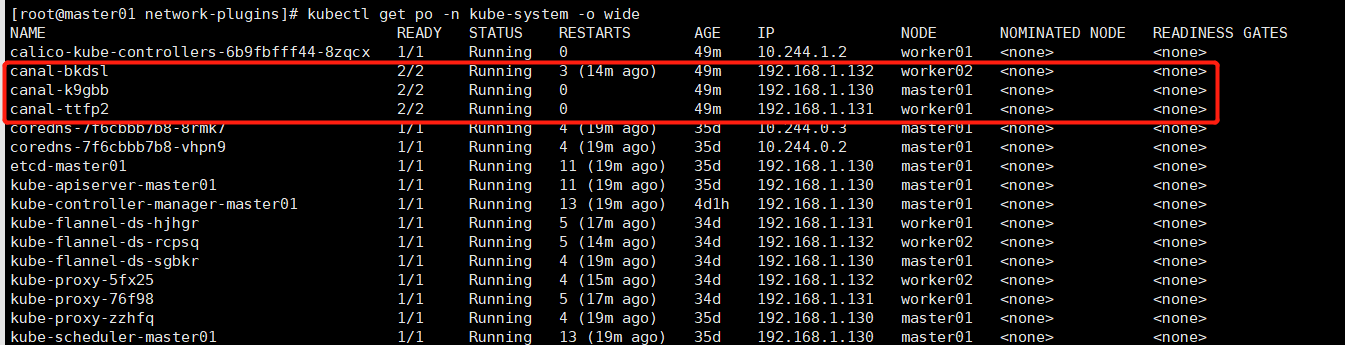

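kubelet runs the pods on every node, drives docker to start each pod's containers, and every pod must be given an IP address. The flannel plugin therefore has to run on every node so that kubelet can call it. Traditionally it runs as a daemon; with kubeadm it runs as pods managed by a DaemonSet in the kube-system namespace.

    kubectl get pod -n kube-system          # Figure 1
    kubectl get daemonset -n kube-system    # Figure 2
    kubectl get configmap -n kube-system    # Figure 3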

Figure 1:

Figure 2:

Figure 3:

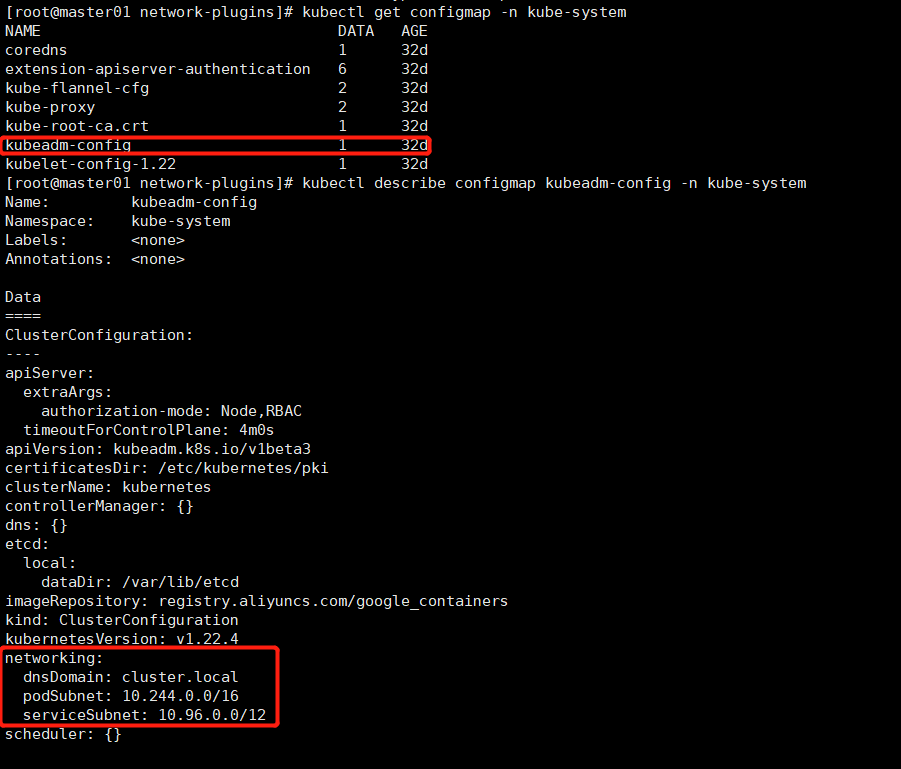

    kubectl get configmap kube-flannel-cfg -o json -n kube-system
    {
        "apiVersion": "v1",
        "data": {
            "cni-conf.json": "{\n \"name\": \"cbr0\",\n \"cniVersion\": \"0.3.1\",\n \"plugins\": [\n {\n \"type\": \"flannel\",\n \"delegate\": {\n \"hairpinMode\": true,\n \"isDefaultGateway\": true\n }\n },\n {\n \"type\": \"portmap\",\n \"capabilities\": {\n \"portMappings\": true\n }\n }\n ]\n}\n",
            "net-conf.json": "{\n \"Network\": \"10.244.0.0/16\",\n \"Backend\": {\n \"Type\": \"vxlan\"\n }\n}\n"
        },
        "kind": "ConfigMap",
        "metadata": {
            "annotations": {
                "kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"data\":{\"cni-conf.json\":\"{\\n \\\"name\\\": \\\"cbr0\\\",\\n \\\"cniVersion\\\": \\\"0.3.1\\\",\\n \\\"plugins\\\": [\\n {\\n \\\"type\\\": \\\"flannel\\\",\\n \\\"delegate\\\": {\\n \\\"hairpinMode\\\": true,\\n \\\"isDefaultGateway\\\": true\\n }\\n },\\n {\\n \\\"type\\\": \\\"portmap\\\",\\n \\\"capabilities\\\": {\\n \\\"portMappings\\\": true\\n }\\n }\\n ]\\n}\\n\",\"net-conf.json\":\"{\\n \\\"Network\\\": \\\"10.244.0.0/16\\\",\\n \\\"Backend\\\": {\\n \\\"Type\\\": \\\"vxlan\\\"\\n }\\n}\\n\"},\"kind\":\"ConfigMap\",\"metadata\":{\"annotations\":{},\"labels\":{\"app\":\"flannel\",\"tier\":\"node\"},\"name\":\"kube-flannel-cfg\",\"namespace\":\"kube-system\"}}\n"
            },
            "creationTimestamp": "2021-11-24T11:43:24Z",
            "labels": {
                "app": "flannel",
                "tier": "node"
            },
            "name": "kube-flannel-cfg",
            "namespace": "kube-system",
            "resourceVersion": "8934",
            "uid": "82f29971-a7eb-4f2f-80bd-44b24b75e388"
        }
    }

flannel configuration parameters:

- Network: 10.244.0.0/16. The network, in CIDR notation, used to provide pod networking; each node is given one subnet of it, e.g. 10.244.0.0/16 -> master: 10.244.0.0/24, worker01: 10.244.1.0/24, ..., worker255: 10.244.255.0/24. A /16 network split into /24 subnets yields only 256 subnets, i.e. at most 256 nodes. If more nodes are needed, a shorter prefix such as /8 can be used (10.0.0.0/8 gives 10.0.0.0/24 ... 10.255.255.0/24), but the pod network must not overlap the Service CIDR (10.96.0.0/12 by default). Note that 10.0.0.0/8 itself contains 10.96.0.0/12, so choosing it also means moving the Service CIDR outside 10.0.0.0/8. With a cluster installed by kubeadm, both ranges (serviceSubnet and podSubnet) can be set when the cluster is initialized; the current values can be viewed with `kubectl describe configmap kubeadm-config -n kube-system` (see figure).
- SubnetLen: the prefix length used when splitting Network into per-node subnets; defaults to 24.
- SubnetMin: the lowest subnet that may be allocated to a node, e.g. 10.244.10.0/24.
- SubnetMax: the highest subnet that may be allocated to a node, e.g. 10.244.100.0/24.
- Backend: how pods on different nodes communicate: VxLAN (vxlan, or vxlan with DirectRouting), host-gw, or udp.
  - Type: vxlan, the backend used in this ConfigMap (and in the standard kube-flannel manifest).
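The subnet arithmetic behind Network, SubnetLen, SubnetMin and SubnetMax can be checked with Python's standard `ipaddress` module. This is a sketch of the splitting rule described above, not flannel's own allocator; the helper `node_subnets` is hypothetical, and in it SubnetMin/SubnetMax are given as subnet start addresses (e.g. "10.244.10.0").

```python
import ipaddress
import json
from typing import List, Optional

# net-conf.json exactly as stored (as a string) in the kube-flannel-cfg ConfigMap
NET_CONF = '{\n "Network": "10.244.0.0/16",\n "Backend": {\n "Type": "vxlan"\n }\n}\n'


def node_subnets(network: str, subnet_len: int = 24,
                 subnet_min: Optional[str] = None,
                 subnet_max: Optional[str] = None) -> List[ipaddress.IPv4Network]:
    """Split `network` into /subnet_len pieces, keeping only those whose start
    address lies between subnet_min and subnet_max (inclusive) when given."""
    pieces = list(ipaddress.ip_network(network).subnets(new_prefix=subnet_len))
    low = ipaddress.ip_address(subnet_min) if subnet_min else pieces[0].network_address
    high = ipaddress.ip_address(subnet_max) if subnet_max else pieces[-1].network_address
    return [p for p in pieces if low <= p.network_address <= high]


pod_network = json.loads(NET_CONF)["Network"]
all_subnets = node_subnets(pod_network)
print(len(all_subnets), all_subnets[0], all_subnets[1], all_subnets[-1])
# 256 10.244.0.0/24 10.244.1.0/24 10.244.255.0/24

limited = node_subnets(pod_network, 24, "10.244.10.0", "10.244.100.0")
print(len(limited), limited[0], limited[-1])
# 91 10.244.10.0/24 10.244.100.0/24

# Capacity of a /8 pod network split into /24s, computed without listing them
print(2 ** (24 - 8))  # 65536

# The pod network must not overlap the Service CIDR (kubeadm default 10.96.0.0/12)
service_cidr = ipaddress.ip_network("10.96.0.0/12")
print(ipaddress.ip_network("10.244.0.0/16").overlaps(service_cidr))  # False
print(ipaddress.ip_network("10.0.0.0/8").overlaps(service_cidr))     # True
```

The last line is the reason a /8 pod network forces a different Service CIDR.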

Calico network policy

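See the official documentation for a detailed introduction to Calico. Here Calico's policy engine is deployed through the Canal manifest: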

    curl https://docs.projectcalico.org/manifests/canal.yaml -O
    kubectl apply -f canal.yaml

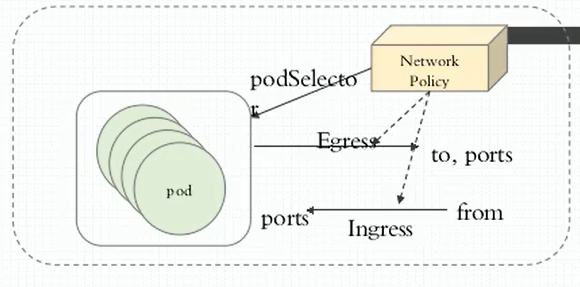

    kubectl explain networkpolicy
    KIND:     NetworkPolicy
    VERSION:  networking.k8s.io/v1

    apiVersion
    kind
    metadata
    spec
      egress        <[]Object>            # outbound rules
        ports       <[]Object>            # destination ports
          endPort   <integer>             # end of a port range starting at port (inclusive); if set, port must be a numeric port no greater than endPort
          port      <string>              # port name or number
          protocol  <string>              # protocol
        to          <[]Object>            # destinations; each entry uses ipBlock, or namespaceSelector and/or podSelector
          ipBlock   <Object>              # an address range: any endpoint inside it (pod IP or host IP)
            cidr    <string> -required-   # the CIDR range to allow
            except  <[]string>            # CIDR ranges inside cidr to exclude
          namespaceSelector <Object>      # pods in the namespaces matching the selector; present but empty ({}) means all namespaces
            matchExpressions <[]Object>
            matchLabels      <map[string]string>
          podSelector <Object>            # pods matching the labels; combined with namespaceSelector, those pods in the selected namespaces, otherwise pods in the policy's own namespace
            matchExpressions <[]Object>
            matchLabels      <map[string]string>
      ingress       <[]Object>            # inbound rules
        from        <[]Object>            # sources: which peers may reach the selected pods (same fields as to)
        ports       <[]Object>
      podSelector   <Object> -required-   # pods the policy applies to; {} selects every pod in the namespace
        matchExpressions <[]Object>
        matchLabels      <map[string]string>
      policyTypes   <[]string>            # ["Ingress"], ["Egress"], or ["Ingress", "Egress"]; if omitted, Ingress is always set and Egress only if the policy has egress rules
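The `policyTypes` defaulting rule is easy to get wrong, so here it is as a small Python function. It mirrors the rule documented for the NetworkPolicy API; representing the spec as a plain dict and the name `effective_policy_types` are assumptions for illustration.

```python
def effective_policy_types(spec: dict) -> list:
    """Policy types Kubernetes assumes for a NetworkPolicy spec (modelled as a dict).

    An explicit, non-empty policyTypes is used as-is. Otherwise Ingress is always
    included, and Egress is added only when the spec has at least one egress rule.
    """
    if spec.get("policyTypes"):
        return list(spec["policyTypes"])
    types = ["Ingress"]
    if spec.get("egress"):  # a non-empty egress list
        types.append("Egress")
    return types


print(effective_policy_types({"podSelector": {}, "egress": [{}]}))
# ['Ingress', 'Egress']
print(effective_policy_types({"podSelector": {}, "policyTypes": ["Egress"]}))
# ['Egress']
```

The practical consequence: an egress-only policy written without `policyTypes` also isolates the selected pods for ingress, which is why the policies in this section list `policyTypes` explicitly.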

1. Restricting inbound (ingress) traffic

Create the namespaces and an ingress NetworkPolicy

    [root@master01 networkPolicy]# vim ingress-def.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: dev
    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: prod
    ---
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-ingress
      # namespace: dev
    spec:
      podSelector: {}      # empty selector: selects every pod in the namespace
      policyTypes:
      - Ingress            # Ingress with no ingress rules: deny all inbound traffic to pods in dev

    kubectl apply -f ingress-def.yaml -n dev

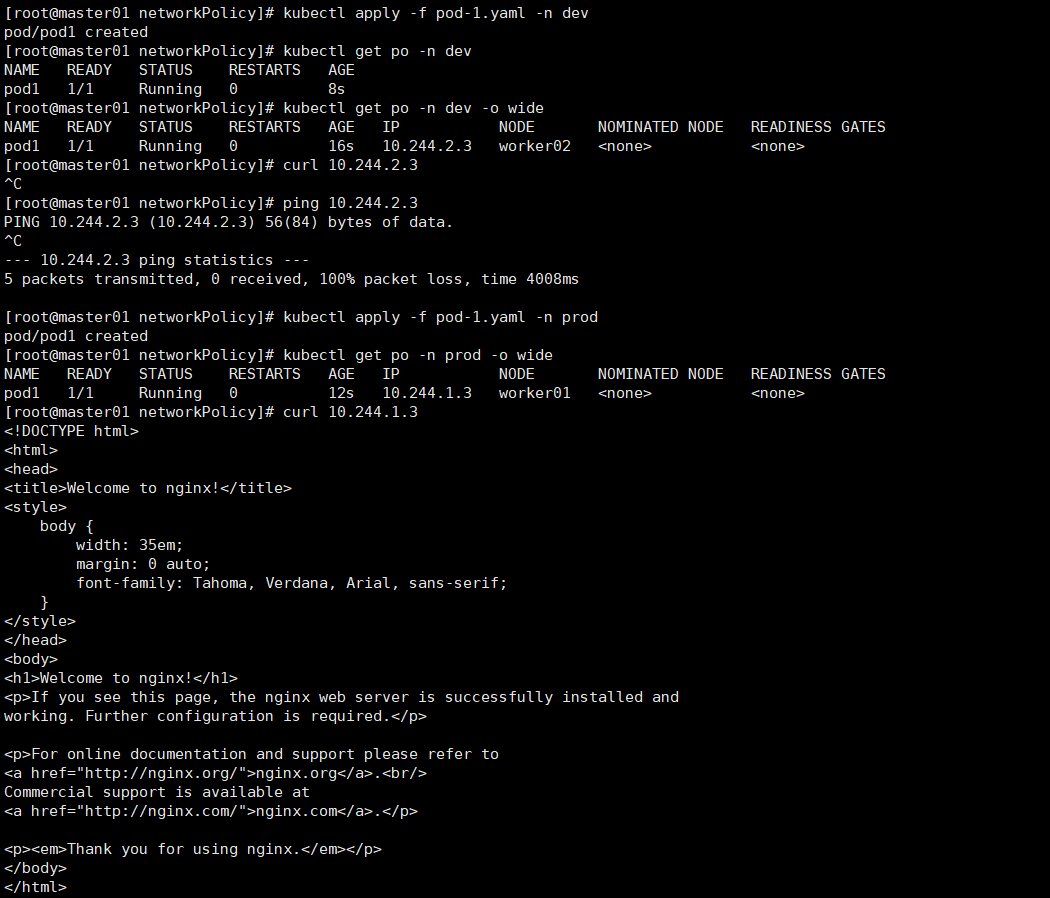

Create a pod in the dev namespace

    [root@master01 networkPolicy]# vim pod-1.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod1
    spec:
      containers:
      - name: myapp
        image: nginx:1.20

    # Create the pod in the dev namespace
    [root@master01 networkPolicy]# kubectl apply -f pod-1.yaml -n dev
    [root@master01 networkPolicy]# kubectl get po -n dev -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP           NODE       NOMINATED NODE   READINESS GATES
    pod1   1/1     Running   0          16s   10.244.2.3   worker02   <none>           <none>

    # A request to nginx on port 80 of pod 10.244.2.3 hangs with no response
    curl 10.244.2.3
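In these tests the kind of failure matters: a request dropped by a NetworkPolicy hangs until it times out, while a reachable pod with nothing listening on the port answers at once with "connection refused". A minimal Python probe that makes this distinction explicit could look like the sketch below; the name `probe` and the 3-second default timeout are assumptions, and other network errors (e.g. host unreachable) are simply raised.

```python
import socket


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify a TCP connection attempt: open, refused, or timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"      # handshake completed: reachable and listening
    except ConnectionRefusedError:
        return "refused"       # reset received: reachable, but nothing listens on the port
    except (socket.timeout, TimeoutError):
        return "timeout"       # no answer at all: packets silently dropped, e.g. by a policy
```

Run from a node, `probe("10.244.2.3", 80)` would be expected to return "timeout" while deny-all-ingress is the only policy selecting pod1, matching the hanging curl above.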

Create a pod in the prod namespace

    [root@master01 networkPolicy]# kubectl apply -f pod-1.yaml -n prod
    [root@master01 networkPolicy]# kubectl get po -n prod -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP           NODE       NOMINATED NODE   READINESS GATES
    pod1   1/1     Running   0          12s   10.244.1.3   worker01   <none>           <none>

    # Port 80 on pod 10.244.1.3 returns the nginx home page: the deny policy was only applied in dev
    curl 10.244.1.3

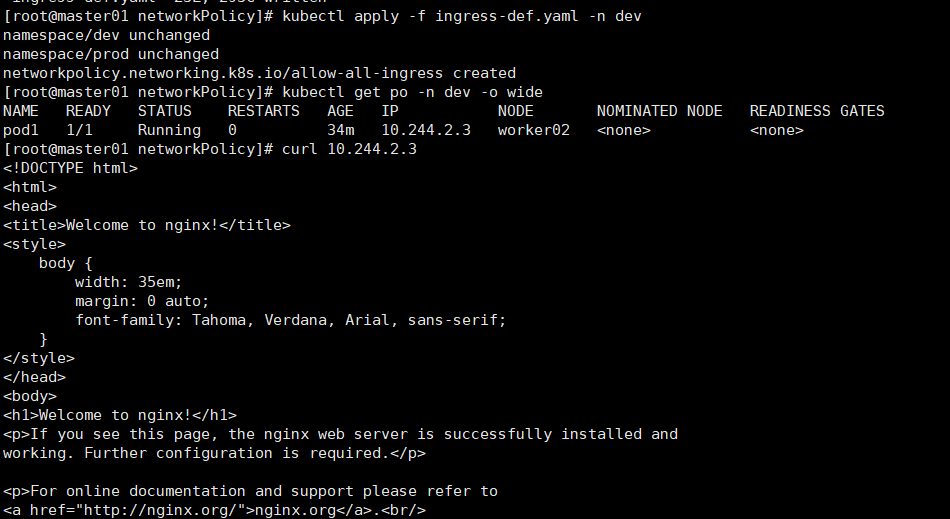

In the dev namespace, allow inbound traffic again so that pod1 can be reached

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-all-ingress
      # namespace: dev
    spec:
      podSelector: {}
      ingress:
      - {}                 # one empty rule: allow all inbound traffic to every pod in dev, including pod1
      policyTypes:
      - Ingress

    kubectl apply -f ingress-def.yaml -n dev
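NetworkPolicies are additive: a pod is isolated for ingress as soon as any Ingress policy selects it, and its allowed traffic is then the union of what all those policies allow. That is why allow-all-ingress re-opens pod1 even though deny-all-ingress still exists. The Python sketch below models only that rule; it ignores namespaces and treats every ingress rule as the empty rule `{}` (any source, any port), and all names in it are hypothetical.

```python
def selects(selector: dict, labels: dict) -> bool:
    """matchLabels-only label selector; an empty selector {} matches every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policies: list, pod_labels: dict) -> bool:
    """Is inbound traffic to a pod with pod_labels allowed?

    Simplification: `from`/`ports` are not modelled; any rule counts as allow-all.
    """
    applicable = [p for p in policies
                  if "Ingress" in (p.get("policyTypes") or ["Ingress"])
                  and selects(p["podSelector"], pod_labels)]
    if not applicable:
        return True  # no policy selects the pod: not isolated
    # Isolated: allowed only if at least one selecting policy has a rule
    return any(bool(p.get("ingress")) for p in applicable)


deny_all = {"podSelector": {}, "policyTypes": ["Ingress"]}
allow_all = {"podSelector": {}, "ingress": [{}], "policyTypes": ["Ingress"]}
print(ingress_allowed([], {}))                     # True
print(ingress_allowed([deny_all], {}))             # False
print(ingress_allowed([deny_all, allow_all], {}))  # True
```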

    kubectl get po -n dev -o wide
    NAME   READY   STATUS    RESTARTS   AGE   IP           NODE       NOMINATED NODE   READINESS GATES
    pod1   1/1     Running   0          34m   10.244.2.3   worker02   <none>           <none>

    # Port 80 on pod 10.244.2.3 now returns the nginx home page
    curl 10.244.2.3

In the dev namespace, open access to pods labelled app=myapp. First give pod1 that label:

    kubectl label pod pod1 app=myapp -n dev

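Then define a NetworkPolicy that allows only specific inbound traffic. This assumes the allow-all-ingress policy from the previous step has been deleted first (for example with `kubectl delete networkpolicy allow-all-ingress -n dev`): policies are additive, so while it exists all traffic to pod1 stays allowed and the port 443 test below would not hang.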

    vim allow-netpol-demo.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-myapp-ingress
    spec:
      podSelector:
        matchLabels:
          app: myapp
      ingress:
      - from:
        - ipBlock:
            cidr: 10.244.0.0/16
            except:
            - 10.244.2.2/32
        ports:
        - protocol: TCP
          port: 80

    kubectl apply -f allow-netpol-demo.yaml -n dev
    curl 10.244.2.3        # returns the home page
    curl 10.244.2.3:443    # port 443 is not allowed, so the request hangs

To allow port 443 as well, add these two lines under `ports`:

        - protocol: TCP
          port: 443

Running `curl 10.244.2.3:443` again now fails with:

    curl: (7) Failed connect to 10.244.2.3:443; Connection refused

This error is expected: nginx is not listening on port 443. What matters is that the request is no longer blocked (it fails immediately instead of hanging), because port 443 is now allowed by the ingress rule.
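Within a single ingress rule, traffic must match both `from` and `ports`. The Python sketch below evaluates the allow-myapp-ingress rule for a few source IPs and ports, before and after port 443 is added. It is an illustrative model, not the Calico implementation: named ports are not handled, protocol defaults to TCP as in the API, the function names are hypothetical, and 10.244.1.3 is just an example source address inside 10.244.0.0/16.

```python
import ipaddress


def ip_block_matches(ip: str, cidr: str, excluded: list) -> bool:
    """ipBlock: the peer IP must fall inside cidr and outside every except range."""
    addr = ipaddress.ip_address(ip)
    if addr not in ipaddress.ip_network(cidr):
        return False
    return not any(addr in ipaddress.ip_network(e) for e in excluded)


def port_matches(port: int, protocol: str, ports: list) -> bool:
    """ports: an empty or missing list matches every port; otherwise protocol
    (default TCP) must match and port must lie in [port, endPort]."""
    if not ports:
        return True
    for entry in ports:
        start = entry["port"]
        end = entry.get("endPort", start)
        if entry.get("protocol", "TCP") == protocol and start <= port <= end:
            return True
    return False


def rule_allows(src_ip: str, port: int, ports: list) -> bool:
    """The single ingress rule of allow-myapp-ingress: from AND ports must match."""
    return (ip_block_matches(src_ip, "10.244.0.0/16", ["10.244.2.2/32"])
            and port_matches(port, "TCP", ports))


ports = [{"protocol": "TCP", "port": 80}]
print(rule_allows("10.244.1.3", 80, ports))   # True
print(rule_allows("10.244.2.2", 80, ports))   # False: excluded by except
print(rule_allows("10.244.1.3", 443, ports))  # False: 443 not listed
ports.append({"protocol": "TCP", "port": 443})
print(rule_allows("10.244.1.3", 443, ports))  # True
```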

2. Restricting outbound (egress) traffic

Deny all outbound traffic

    kubectl create namespace prod    # skip if prod already exists (ingress-def.yaml above created it)
    vim egress-def.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-egress
    spec:
      podSelector: {}
      policyTypes:
      - Egress             # Egress with no egress rules: deny all outbound traffic

    [root@master01 networkPolicy]# kubectl apply -f egress-def.yaml -n prod

Run a busybox pod in the prod namespace, so that traffic can be sent from inside its container

    [root@master01 networkPolicy]# vim pod-busybox-2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-busybox
    spec:
      containers:
      - name: myapp
        image: busybox:latest
        command:
        - /bin/sh
        - -c
        - sleep 7200
    [root@master01 networkPolicy]# kubectl apply -f pod-busybox-2.yaml -n prod
    [root@master01 networkPolicy]# kubectl get po -n prod -o wide
    NAME          READY   STATUS    RESTARTS   AGE     IP           NODE       NOMINATED NODE   READINESS GATES
    pod-busybox   1/1     Running   0          8m12s   10.244.1.5   worker01   <none>           <none>

Pick a pod in the kube-system namespace for the busybox pod to reach:

    kubectl get po -n kube-system -o wide
    coredns-7f6cbbb7b8-8rmk7   1/1   Running   4 (3h39m ago)   35d   10.244.0.3   master01   <none>   <none>

Exec into the container of pod-busybox in the prod namespace and ping the coredns pod:

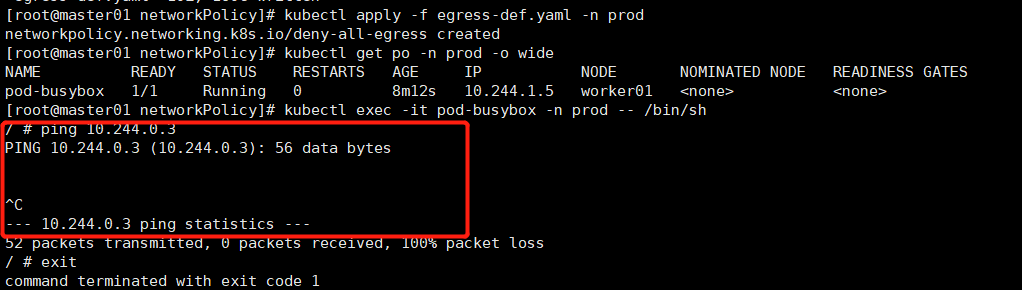

    [root@master01 networkPolicy]# kubectl exec -it pod-busybox -n prod -- /bin/sh
    / # ping 10.244.0.3    # no replies, as shown in the figure below

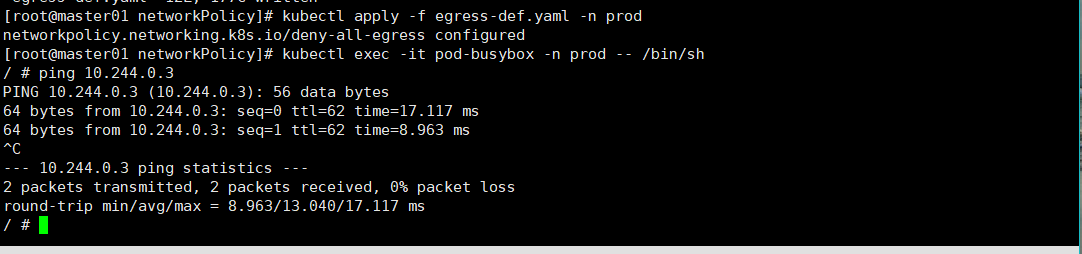

Allow all outbound traffic from every pod in the prod namespace

    [root@master01 networkPolicy]# vim egress-def.yaml
    ---
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-egress    # same name as before, so apply updates the existing policy in place
      namespace: prod
    spec:
      podSelector: {}
      egress:
      - {}                     # one empty rule: allow all outbound traffic
      policyTypes:
      - Egress

    kubectl apply -f egress-def.yaml -n prod
    [root@master01 networkPolicy]# kubectl exec -it pod-busybox -n prod -- /bin/sh
    / # ping 10.244.0.3    # the ping now succeeds
    PING 10.244.0.3 (10.244.0.3): 56 data bytes
    64 bytes from 10.244.0.3: seq=0 ttl=62 time=17.117 ms
    64 bytes from 10.244.0.3: seq=1 ttl=62 time=8.963 ms
