09-K8S: kubernetes-dashboard (addons) authentication and tiered authorization


kubernetes-dashboard authentication and tiered authorization

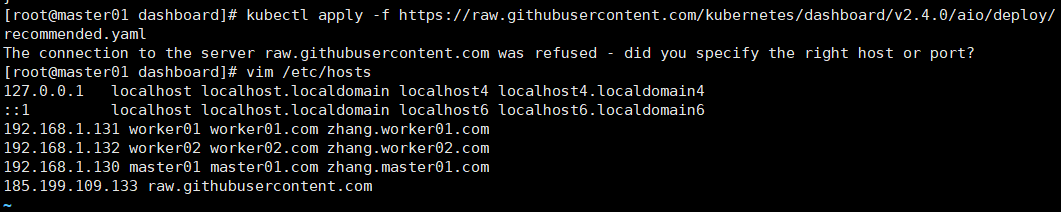

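Deploy the Dashboard from the official v2.4.0 manifest:

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml
```

If the download fails with a name-resolution or connection error, look up the IP address of raw.githubusercontent.com, add it to your own /etc/hosts file, and send the request again.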

Contents of `kubernetes-dashboard.yaml` (`cat kubernetes-dashboard.yaml`), based on recommended.yaml with the Service exposed as a NodePort; the changed lines are marked `# newly added`:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort          # newly added
  ports:
    - port: 443
      nodePort: 32001     # newly added
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
```

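Generate a certificate for the Dashboard, signed by the cluster CA, and store it in a Secret:

```bash
cd /etc/kubernetes/pki/
# Private key, readable only by its owner
(umask 077; openssl genrsa -out dashboard.key 2048)
# Certificate signing request with O=xiaochao, CN=dashboard
openssl req -new -key dashboard.key -out dashboard.csr -subj "/O=xiaochao/CN=dashboard"
# Sign the request with the cluster CA, valid for 10 years
openssl x509 -req -in dashboard.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out dashboard.crt -days 3650
# Store the certificate and key in a Secret in the kubernetes-dashboard namespace
kubectl create secret generic dashboard-cert --from-file=dashboard.crt=./dashboard.crt --from-file=dashboard.key -n kubernetes-dashboard
```

Note that the manifest above mounts the Secret `kubernetes-dashboard-certs` and starts the Dashboard with `--auto-generate-certificates`, so the Secret `dashboard-cert` is only used if the Deployment is changed to reference it.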
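Before creating the Secret, it can be worth checking that the signed certificate really chains to the CA. The script below is a minimal sketch that rehearses the same three openssl steps in a scratch directory; the throwaway CA named `demo-ca` is an assumption that stands in for /etc/kubernetes/pki/ca.crt and ca.key, so nothing on the master is touched.

```bash
#!/usr/bin/env bash
# Sketch: rehearse the dashboard certificate steps in a scratch directory.
# Assumption: a throwaway CA (demo-ca) stands in for /etc/kubernetes/pki/ca.{crt,key}.
set -euo pipefail

workdir=$(mktemp -d)
cd "$workdir"

# Throwaway self-signed CA, for this rehearsal only
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -key ca.key -subj "/CN=demo-ca" -days 30 -out ca.crt

# The same three steps as in the post
(umask 077; openssl genrsa -out dashboard.key 2048 2>/dev/null)
openssl req -new -key dashboard.key -out dashboard.csr -subj "/O=xiaochao/CN=dashboard"
openssl x509 -req -in dashboard.csr -CA ca.crt -CAkey ca.key -CAcreateserial \
  -out dashboard.crt -days 3650 2>/dev/null

# Confirm the certificate chains to the CA, then show its subject
openssl verify -CAfile ca.crt dashboard.crt
openssl x509 -in dashboard.crt -noout -subject
```

On the master node itself, the equivalent check is `openssl verify -CAfile ca.crt dashboard.crt` run in /etc/kubernetes/pki, which should print `dashboard.crt: OK`.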

Create an admin token for the Dashboard that can operate on the whole cluster

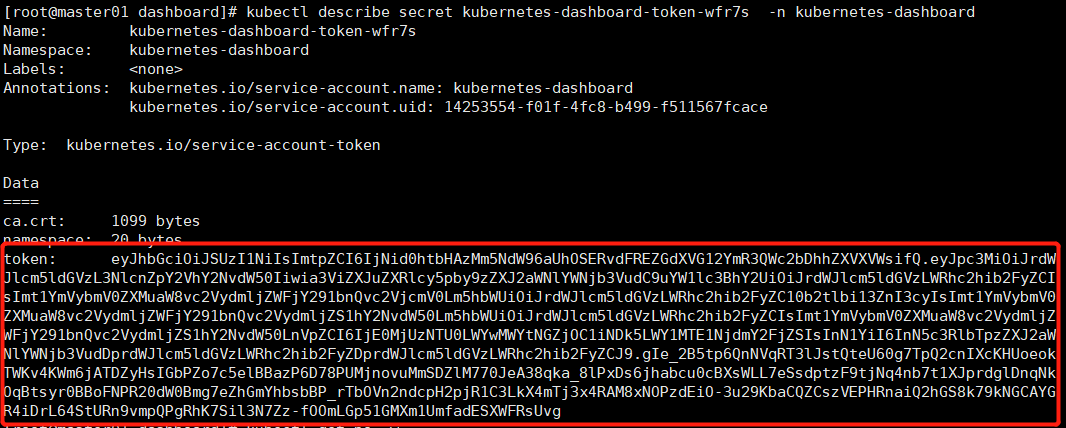

```bash
# Create the admin binding: the account can view resources in every namespace
kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard
```

- `dashboard-cluster-admin`: the name of the ClusterRoleBinding.
- `--clusterrole=cluster-admin`: binds the account given by `--serviceaccount` to the ClusterRole `cluster-admin`, so every Pod that runs as this ServiceAccount can access the whole cluster. The ServiceAccount here is `kubernetes-dashboard`, which the Dashboard Deployment references in `spec.template.spec.serviceAccountName`, so the Dashboard Pod gains access to the whole cluster.
- `--serviceaccount=kubernetes-dashboard:kubernetes-dashboard`: the format is `NAMESPACE:SERVICEACCOUNT_NAME`.

Copy the token from the Secret below and use it to log in; resources in all namespaces are then visible. The `-ngcmg` suffix is generated randomly, so look up the actual name with `kubectl get secret -n kubernetes-dashboard`.

```bash
kubectl describe secret kubernetes-dashboard-token-ngcmg -n kubernetes-dashboard
```
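Because the Secret name ends in a random suffix, it can be handier to look it up from the ServiceAccount. A minimal sketch, assuming a cluster older than 1.24, where the token controller creates the Secret automatically and lists it under the ServiceAccount's `.secrets`; `sa_token` is a hypothetical helper name, while the `kubectl` and `jsonpath` calls are standard.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the bearer token of a ServiceAccount.
# Assumes Kubernetes < 1.24, where a token Secret is created automatically
# and referenced from the ServiceAccount's .secrets list.
sa_token() {
  local ns=$1 sa=$2 secret
  # Look up the generated Secret name (e.g. kubernetes-dashboard-token-ngcmg)
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}')
  # .data.token is base64-encoded; decode it to get the token for the login page
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

For example, `sa_token kubernetes-dashboard kubernetes-dashboard` prints the token that `kubectl describe secret` shows above.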

Create a token for the Dashboard that can operate on a single namespace

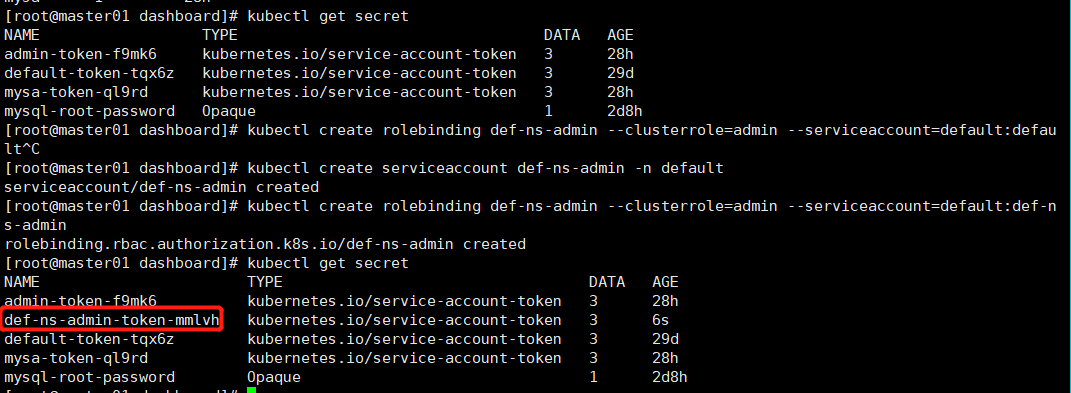

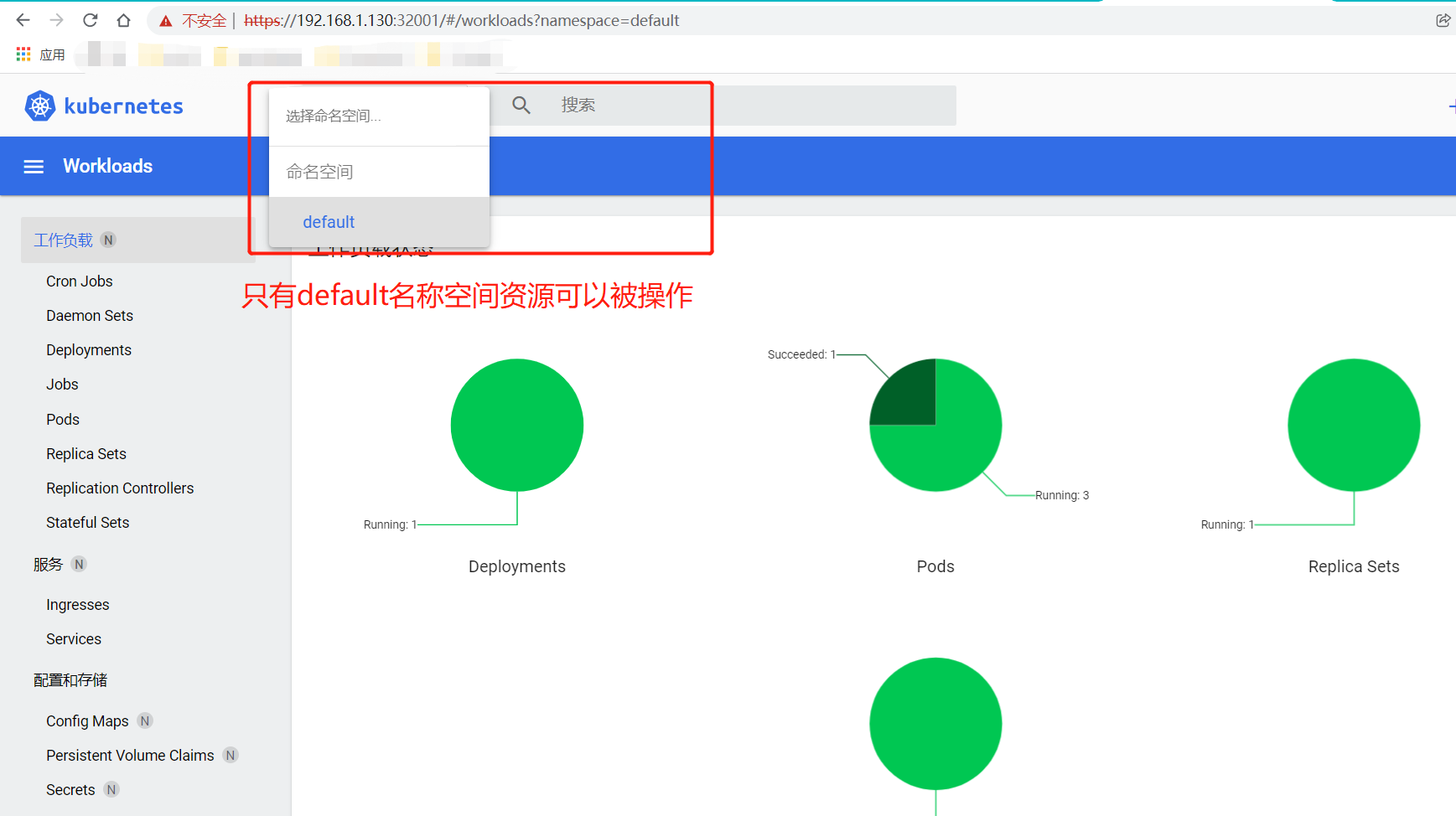

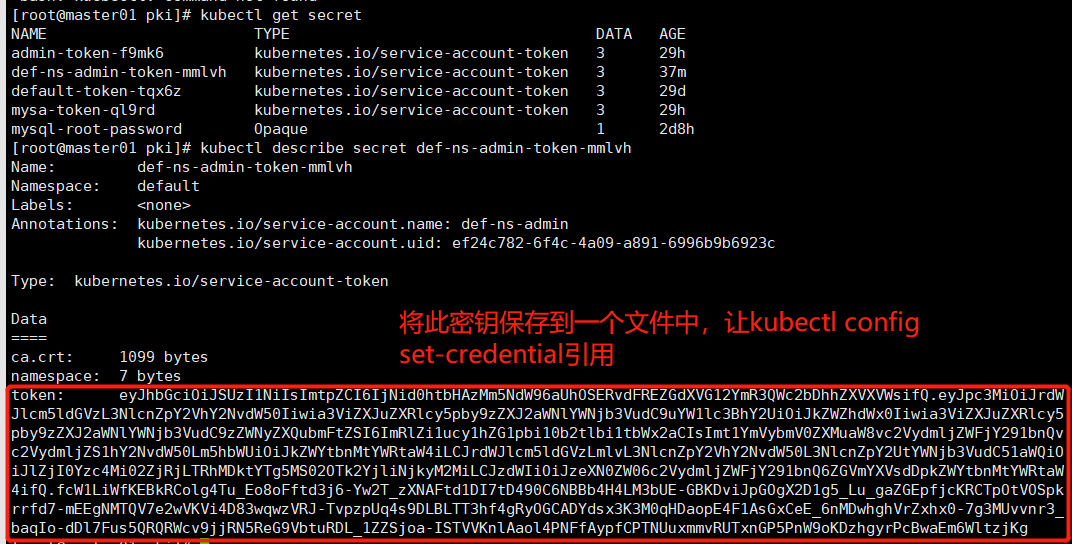

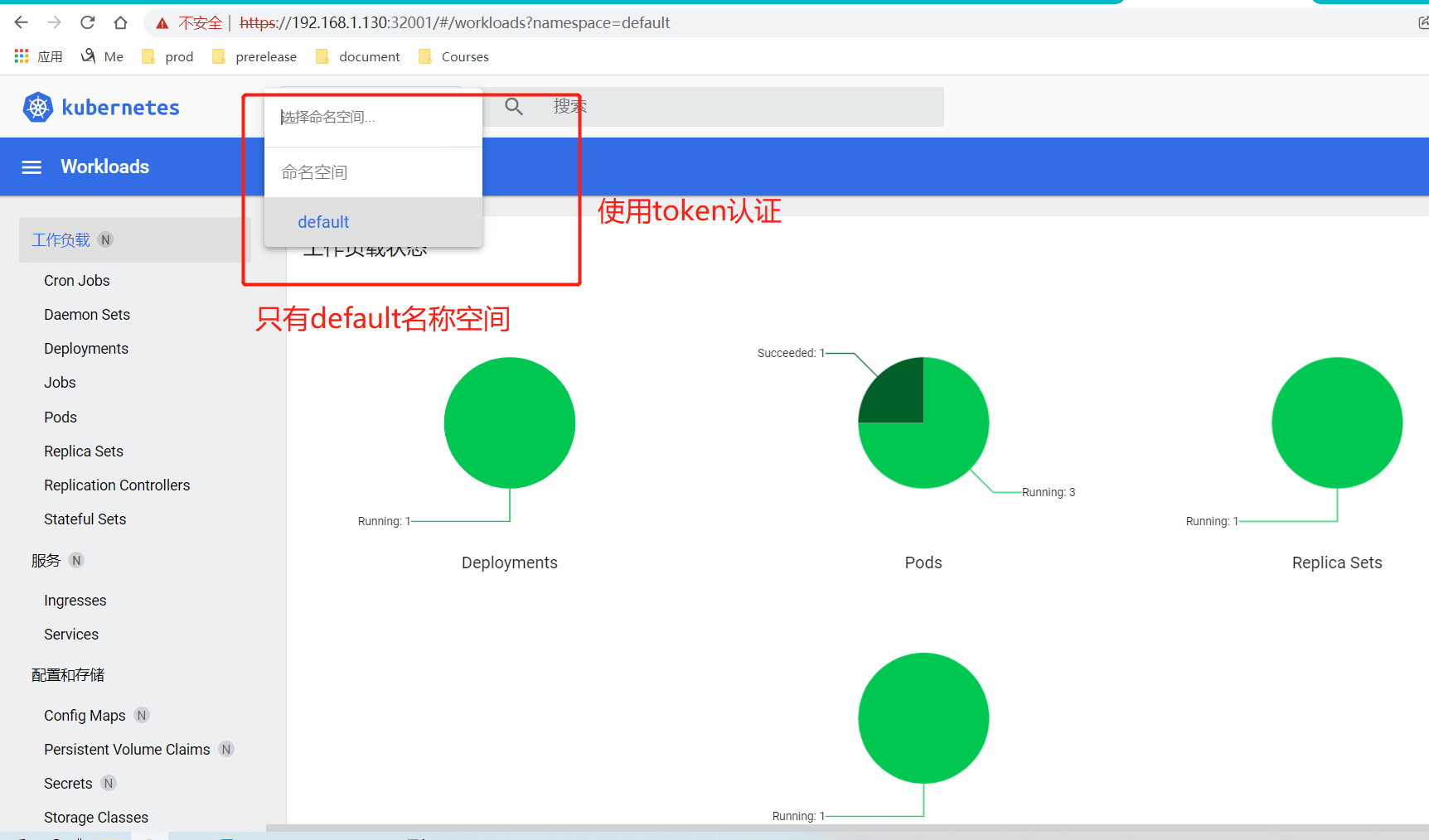

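```bash
kubectl create serviceaccount def-ns-admin -n default
# Create a RoleBinding that binds the ServiceAccount def-ns-admin to the ClusterRole admin.
# Because a RoleBinding is namespaced, the account can only operate on resources in default.
kubectl create rolebinding def-ns-admin --clusterrole=admin --serviceaccount=default:def-ns-admin -n default
kubectl get secret
```

As shown in the screenshot below, the token in the Secret `def-ns-admin-token-mmlvh` is all that is needed to log in to the Dashboard, and that login can only access the default namespace.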
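The `kubectl create` commands above are imperative. Below is a minimal sketch of a declarative equivalent; `render_ns_admin` is a hypothetical helper that only prints manifests, meant to be piped into `kubectl apply -f -`. The third document is an assumption aimed at newer clusters: starting with Kubernetes 1.24 no `def-ns-admin-token-xxxxx` Secret is generated automatically, and a Secret of type `kubernetes.io/service-account-token` annotated with the ServiceAccount name asks the control plane to fill in a token.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a declarative equivalent of
#   kubectl create serviceaccount def-ns-admin -n default
#   kubectl create rolebinding def-ns-admin --clusterrole=admin --serviceaccount=default:def-ns-admin
# plus a token Secret, which Kubernetes 1.24+ no longer creates on its own.
render_ns_admin() {
  local ns=$1 sa=$2
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${sa}
  namespace: ${ns}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ${sa}
  namespace: ${ns}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: admin
subjects:
- kind: ServiceAccount
  name: ${sa}
  namespace: ${ns}
---
apiVersion: v1
kind: Secret
metadata:
  name: ${sa}-token
  namespace: ${ns}
  annotations:
    kubernetes.io/service-account.name: ${sa}
type: kubernetes.io/service-account-token
EOF
}

render_ns_admin default def-ns-admin
```

Usage: `render_ns_admin default def-ns-admin | kubectl apply -f -`. On clusters older than 1.24 the extra Secret just adds a second token for the same account; on 1.24+, `kubectl create token def-ns-admin -n default` is another way to obtain a short-lived token.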

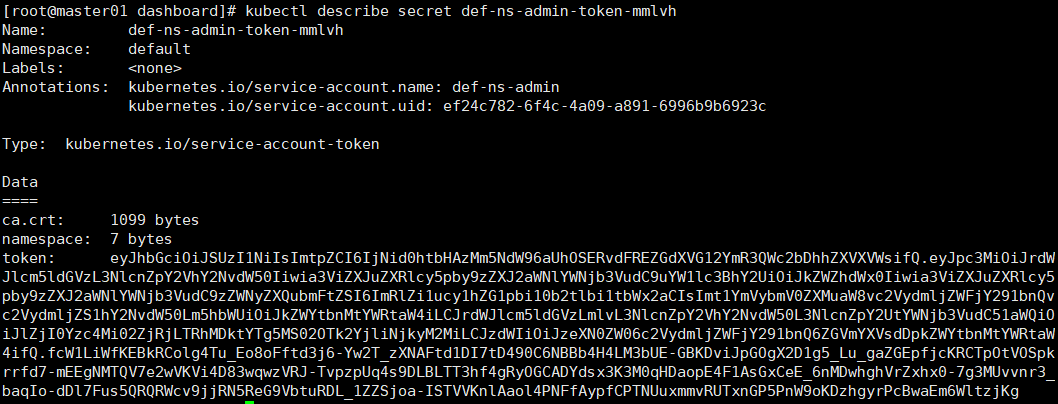

```bash
# Get the token of the namespace-scoped account
kubectl describe secret def-ns-admin-token-mmlvh
```
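To confirm that the binding does what the text says (full access in default, nothing elsewhere), the API server can be asked directly while impersonating the ServiceAccount. A minimal sketch, assuming it is run with cluster-admin credentials, since impersonation needs that permission; `can_sa` is a hypothetical wrapper around the standard `kubectl auth can-i ... --as=...`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ask the API server whether a ServiceAccount may perform
# an action, by impersonating it. Requires impersonation rights (e.g. cluster-admin).
can_sa() {
  local ns=$1 sa=$2
  shift 2
  # A ServiceAccount authenticates as system:serviceaccount:<namespace>:<name>
  kubectl auth can-i "$@" --as="system:serviceaccount:${ns}:${sa}"
}
```

For example, `can_sa default def-ns-admin list pods -n default` should print `yes`, while `can_sa default def-ns-admin list pods -n kube-system` should print `no`.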

Open https://192.168.1.131:32001 in a browser (32001 is the nodePort set in the Service above), enter the token, and the Dashboard opens, as shown below:

Logging in with a kubeconfig file for tiered-authorization access to resources

```bash
cd /etc/kubernetes/pki/
kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://192.168.1.130:6443" --embed-certs=true --kubeconfig=/root/def-ns-admin.conf
# Similar to cat /root/def-ns-admin.conf, except that view hides the embedded certificate data while cat prints it in full
kubectl config view --kubeconfig=/root/def-ns-admin.conf
```

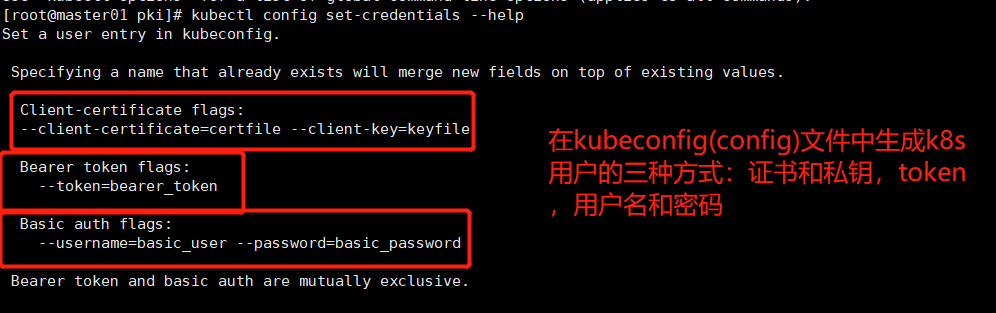

```bash
# set-credentials writes the user entry of a kubeconfig
kubectl config set-credentials --help
```

Generate the kubeconfig user for authentication from a token:

```bash
kubectl get secret
# The Secret generated automatically for the ServiceAccount def-ns-admin created above
kubectl describe secret def-ns-admin-token-mmlvh
```

```bash
# The token stored in the Secret is base64-encoded, so decode it first
# Print the Secret as JSON (Figure 1 below)
kubectl get secret def-ns-admin-token-mmlvh -o json
# Store the token in a variable for later use
DEF_NS_ADMIN_TOKEN=ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklqTmlkMGh0YkhBek1tNU5kVzk2YVVoT1NFUnZkRlJFWkdkWFZHMTJZbVIzUVdjMmJEaGhaWFZYVldzaWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUprWldaaGRXeDBJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5elpXTnlaWFF1Ym1GdFpTSTZJbVJsWmkxdWN5MWhaRzFwYmkxMGIydGxiaTF0Yld4MmFDSXNJbXQxWW1WeWJtVjBaWE11YVc4dmMyVnlkbWxqWldGalkyOTFiblF2YzJWeWRtbGpaUzFoWTJOdmRXNTBMbTVoYldVaU9pSmtaV1l0Ym5NdFlXUnRhVzRpTENKcmRXSmxjbTVsZEdWekxtbHZMM05sY25acFkyVmhZMk52ZFc1MEwzTmxjblpwWTJVdFlXTmpiM1Z1ZEM1MWFXUWlPaUpsWmpJMFl6YzRNaTAyWmpSakxUUmhNRGt0WVRnNU1TMDJPVGsyWWpsaU5qa3lNMk1pTENKemRXSWlPaUp6ZVhOMFpXMDZjMlZ5ZG1salpXRmpZMjkxYm5RNlpHVm1ZWFZzZERwa1pXWXRibk10WVdSdGFXNGlmUS5mY1cxTGlXZktFQmtSQ29sZzRUdV9FbzhvRmZ0ZDNqNi1ZdzJUX3pYTkFGdGQxREk3dEQ0OTBDNk5CQmI0SDRMTTNiVUUtR0JLRHZpSnBHT2dYMkQxZzVfTHVfZ2FaR0VwZmpjS1JDVHBPdFZPU3BrcnJmZDctbUVFZ05NVFFWN2Uyd1ZLVmk0RDgzd3F3elZSSi1UdnB6cFVxNHM5RExCTFRUM2hmNGdSeU9HQ0FEWWRzeDNLM00wcUhEYW9wRTRGMUFzR3hDZUVfNm5NRHdoZ2hWclp4aHgwLTdnM01VdnZucjNfYmFxSW8tZERsN0Z1czVRUlFSV2N2OWpqUk41UmVHOVZidHVSRExfMVpaU2pvYS1JU1RWVktubEFhb2w0UE5GZkF5cGZDUFROVXV4bW12UlVUeG5HUDVQblc5b0tEemhneXJQY0J3YUVtNldsdHpqS2c=
# Decode it (Figure 2 below)
echo $DEF_NS_ADMIN_TOKEN | base64 -d
```
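After `base64 -d`, the value is a JSON Web Token: three base64url segments separated by dots (header, payload, signature), and the payload states which account the token belongs to. A minimal sketch for reading it; `jwt_payload` is a hypothetical helper, and it does not verify the signature, so it is only for inspection.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the JSON claims (payload) of a JWT such as a
# ServiceAccount token. No signature check; for inspection only.
jwt_payload() {
  local p
  # The payload is the second dot-separated segment
  p=$(printf '%s' "$1" | cut -d. -f2)
  # base64url -> base64: map the URL-safe characters back
  p=$(printf '%s' "$p" | tr '_-' '/+')
  # Restore the '=' padding that base64url strips
  while [ $(( ${#p} % 4 )) -ne 0 ]; do p="$p="; done
  printf '%s' "$p" | base64 -d
}
```

For the token above, `jwt_payload "$(echo $DEF_NS_ADMIN_TOKEN | base64 -d)"` prints the claims, which include `"sub":"system:serviceaccount:default:def-ns-admin"`.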

Figure 1:

Figure 2:

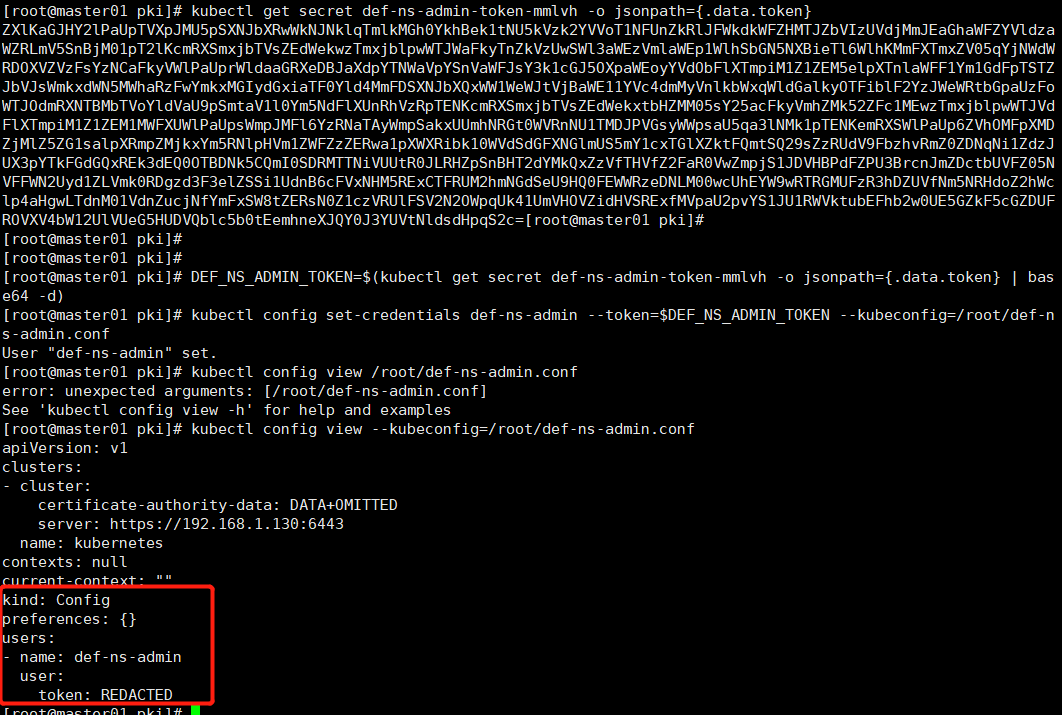

```bash
# Read and decode the token in one step
DEF_NS_ADMIN_TOKEN=$(kubectl get secret def-ns-admin-token-mmlvh -o jsonpath='{.data.token}' | base64 -d)
# Add the user def-ns-admin, authenticated by that token
kubectl config set-credentials def-ns-admin --token="$DEF_NS_ADMIN_TOKEN" --kubeconfig=/root/def-ns-admin.conf
kubectl config view --kubeconfig=/root/def-ns-admin.conf
```

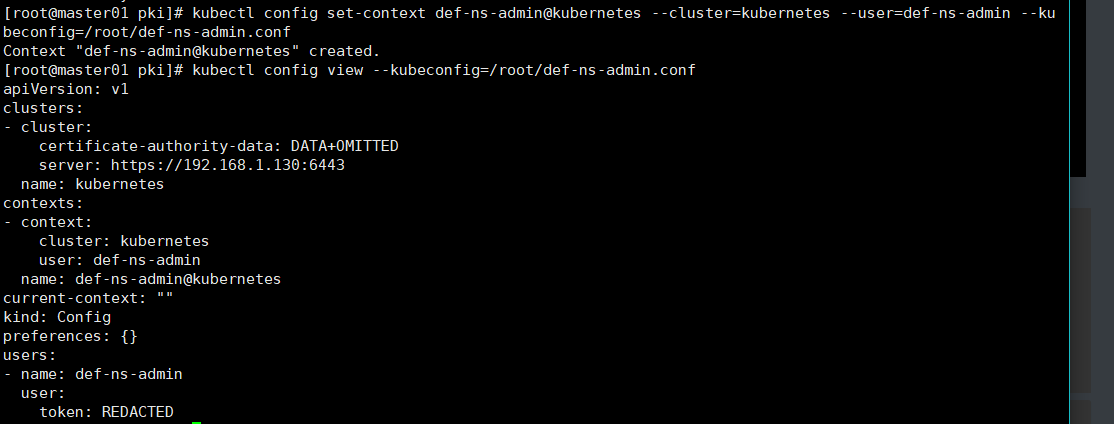

```bash
# Set the context: which cluster and which user
kubectl config set-context def-ns-admin@kubernetes --cluster=kubernetes --user=def-ns-admin --kubeconfig=/root/def-ns-admin.conf
kubectl config view --kubeconfig=/root/def-ns-admin.conf
```

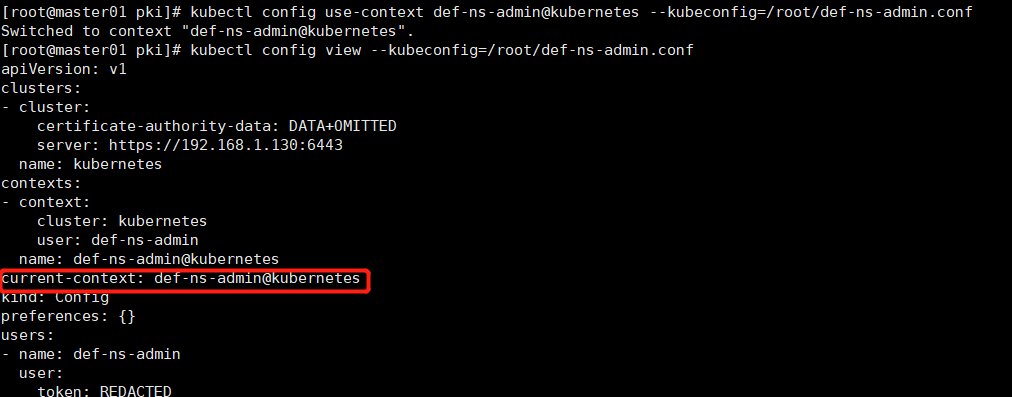

```bash
# Switch to the new context
kubectl config use-context def-ns-admin@kubernetes --kubeconfig=/root/def-ns-admin.conf
```
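The four `kubectl config` commands (set-cluster, set-credentials, set-context, use-context) only edit a YAML file. A minimal sketch that writes the same structure directly, assuming the CA file and the decoded token are already at hand; `write_kubeconfig` is a hypothetical helper, and the cluster name `kubernetes` and context name `USER@kubernetes` are taken from the commands above. Seeing the file laid out this way makes it easier to check what the Dashboard reads when the file is uploaded.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a kubeconfig equivalent to what
# set-cluster / set-credentials / set-context / use-context produce above.
# Args: output file, API server URL, CA cert file, user name, token.
write_kubeconfig() {
  local out=$1 server=$2 ca_file=$3 user=$4 token=$5
  local ca_data
  local ctx="${user}@kubernetes"
  # --embed-certs=true stores the CA as base64 in certificate-authority-data
  ca_data=$(base64 < "$ca_file" | tr -d '\n')
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: ${server}
    certificate-authority-data: ${ca_data}
users:
- name: ${user}
  user:
    token: ${token}
contexts:
- name: ${ctx}
  context:
    cluster: kubernetes
    user: ${user}
current-context: ${ctx}
EOF
  # The file contains a bearer token, so keep it private
  chmod 600 "$out"
}
```

Usage: `write_kubeconfig /root/def-ns-admin.conf https://192.168.1.130:6443 /etc/kubernetes/pki/ca.crt def-ns-admin "$DEF_NS_ADMIN_TOKEN"`.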

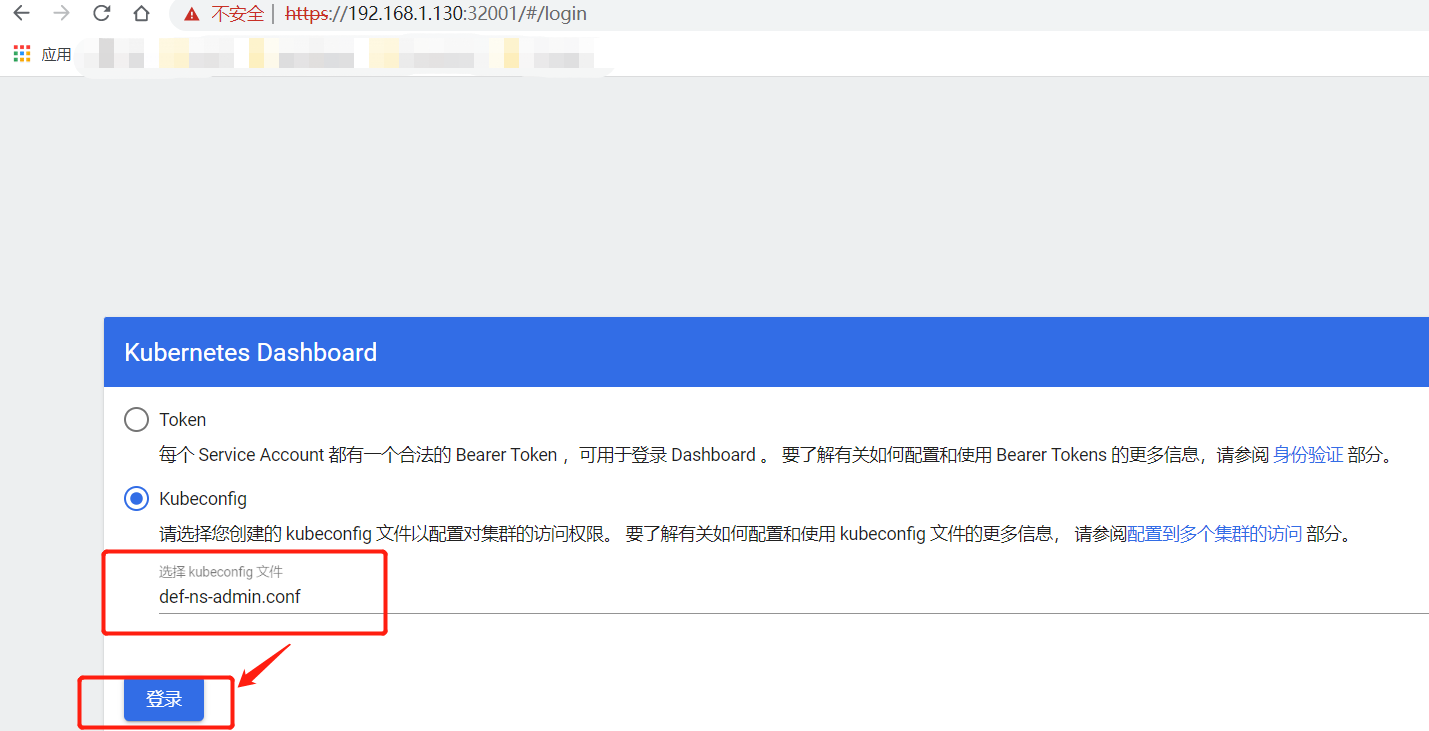

Copy `def-ns-admin.conf` (with the context already switched) to the physical machine (Windows), then upload it on the kubernetes-dashboard login page to sign in:

```powershell
scp root@192.168.1.130:~/def-ns-admin.conf C:\Users\zhangchao\Desktop\
```

Summary of the Dashboard deployment process

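1. Deploy:

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml
```

2. Change the Service to NodePort (v2.4.0 installs into the `kubernetes-dashboard` namespace, not `kube-system`):

```bash
kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kubernetes-dashboard
```

3. Authentication: the account used to log in must be a ServiceAccount; the Dashboard Pod presents it to Kubernetes, which performs the authentication.

token:
(1) Create a ServiceAccount and, depending on what it should manage, bind it to a suitable Role or ClusterRole with a RoleBinding or ClusterRoleBinding;
(2) Find this ServiceAccount's Secret; its details (`kubectl describe secret`) contain the token.

kubeconfig: wrap the ServiceAccount's token in a kubeconfig file.
(1) Create a ServiceAccount and, depending on what it should manage, bind it to a suitable Role or ClusterRole with a RoleBinding or ClusterRoleBinding;
(2) Find the token Secret (named SERVICEACCOUNT_NAME-token-xxxxx) and decode the token:

```bash
kubectl get secret | awk '/^SERVICEACCOUNT_NAME/{print $1}'
KUBE_TOKEN=$(kubectl get secret SERVICEACCOUNT_SECRET_NAME -o jsonpath='{.data.token}' | base64 -d)
```

(3) Generate the kubeconfig file:

```bash
kubectl config set-cluster CLUSTER_NAME --server=https://APISERVER:6443 --certificate-authority=ca.crt --embed-certs=true --kubeconfig=/PATH/TO/SOMEFILE
kubectl config set-credentials NAME --token="$KUBE_TOKEN" --kubeconfig=/PATH/TO/SOMEFILE
kubectl config set-context NAME@CLUSTER_NAME --cluster=CLUSTER_NAME --user=NAME --kubeconfig=/PATH/TO/SOMEFILE
kubectl config use-context NAME@CLUSTER_NAME --kubeconfig=/PATH/TO/SOMEFILE
```

Ways to manage a Kubernetes cluster:
1. Imperative commands: create, run, expose, delete, edit, ...
2. Imperative object configuration files: create -f /PATH/TO/RESOURCE_CONFIGURATION_FILE, delete -f, replace -f
3. Declarative object configuration files: apply -f, patch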
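Steps (1) to (3) of the kubeconfig method can be wrapped into one function. A minimal sketch, assuming a cluster older than 1.24 (so the token Secret is created automatically) and that the ServiceAccount and its binding already exist; `make_sa_kubeconfig` is a hypothetical name. Unlike the walkthrough, it also sets the context's default namespace with `--namespace`, so plain `kubectl get pods` with this file targets the namespace the account is allowed to use.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wrap a ServiceAccount token into a kubeconfig file,
# following steps (2) and (3) of the summary. Assumes Kubernetes < 1.24
# (token Secret auto-created) and an existing ServiceAccount plus binding.
# Args: namespace, ServiceAccount, API server URL, CA cert file, output file.
make_sa_kubeconfig() {
  local ns=$1 sa=$2 server=$3 ca=$4 out=$5
  local secret token
  # Step (2): find the token Secret and decode its token
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}')
  token=$(kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d)

  # Step (3): cluster, user, context, then switch to the context
  kubectl config set-cluster kubernetes --server="$server" \
    --certificate-authority="$ca" --embed-certs=true --kubeconfig="$out"
  kubectl config set-credentials "$sa" --token="$token" --kubeconfig="$out"
  # --namespace is an addition to the walkthrough: default namespace of the context
  kubectl config set-context "${sa}@kubernetes" --cluster=kubernetes \
    --user="$sa" --namespace="$ns" --kubeconfig="$out"
  kubectl config use-context "${sa}@kubernetes" --kubeconfig="$out"
}
```

Usage: `make_sa_kubeconfig default def-ns-admin https://192.168.1.130:6443 /etc/kubernetes/pki/ca.crt /root/def-ns-admin.conf`.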
