Deploying a k8s cluster with kubeadm + Dashboard

kubeadm is a tool from the official Kubernetes community for quickly deploying a Kubernetes cluster.

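This tool can deploy a Kubernetes cluster with just two commands: kubeadm init creates the master node, and kubeadm join adds a node to the cluster.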

1. Installation requirements

Before you begin, the machines used for the Kubernetes cluster must meet the following requirements:

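One or more machines running CentOS 7.x x86_64, with at least the following hardware:

2 GB of RAM or more, 2 CPUs or more, 30 GB of disk or more

Network connectivity between all machines in the cluster, plus Internet access for pulling images

Swap disabled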

2. Learning goals

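1. Install Docker and kubeadm on all nodes

2. Deploy the Kubernetes master

3. Deploy the container network plugin

4. Deploy the Kubernetes nodes and join them to the cluster

5. Deploy the Dashboard web UI to view Kubernetes resources visually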

3. Prepare the environment

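Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

iptables -F

Disable SELinux:

$ sed -i 's/enforcing/disabled/' /etc/selinux/config    # permanent, takes effect after reboot

$ setenforce 0    # temporary, current boot only

Disable swap:

$ swapoff -a    # temporary

$ vim /etc/fstab    # permanent: comment out the swap line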
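Instead of editing /etc/fstab by hand in vim, the permanent swap change can be scripted. The sketch below is a hypothetical helper (`comment_out_swap` is not part of any tool); it assumes GNU sed, as shipped with CentOS 7, and puts a single "#" in front of every line that contains a whitespace-delimited `swap` field.

```shell
# Hypothetical helper: comment out swap entries in an fstab-style file so swap
# stays disabled after a reboot (the permanent counterpart of "swapoff -a").
# The path is a parameter (default /etc/fstab) so it can be tried on a copy first.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # On lines containing " swap " (whitespace on both sides), replace an optional
  # leading "#" with "#", so each swap line ends up commented exactly once
  # and rerunning the function changes nothing.
  sed -ri '/\sswap\s/s/^#?/#/' "$fstab"
}
```

For example, run `comment_out_swap /etc/fstab` right after `swapoff -a`, then `grep swap /etc/fstab` to confirm the entry now starts with "#".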

Add the hostname-to-IP mappings (and remember to set each machine's hostname):

$ cat /etc/hosts

192.168.30.21 k8s-master

192.168.30.22 k8s-node1

192.168.30.23 k8s-node2
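The same three mappings have to exist on every machine, so appending them with a small idempotent loop avoids duplicate lines when the step is repeated. `add_cluster_hosts` is a hypothetical helper; the IPs and names are the ones used in this article and must be changed for your own machines.

```shell
# Hypothetical helper: append the cluster's "IP hostname" pairs to a hosts file,
# skipping any pair whose exact line is already present (safe to rerun).
add_cluster_hosts() {
  local hosts="${1:-/etc/hosts}" entry
  for entry in "192.168.30.21 k8s-master" \
               "192.168.30.22 k8s-node1" \
               "192.168.30.23 k8s-node2"; do
    # -x: match the whole line, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts" || echo "$entry" >> "$hosts"
  done
}
```

On each machine, set its own name first, e.g. `hostnamectl set-hostname k8s-master` on the master, then run `add_cluster_hosts`.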

Pass bridged IPv4/IPv6 traffic to the iptables chains:

[root@k8s-node1 ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@k8s-node1 ~]# sysctl --system
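The k8s.conf file above can also be written non-interactively. The hypothetical `write_k8s_sysctl` helper below writes exactly the two lines shown; the directory argument exists only so the function can be tried outside /etc.

```shell
# Hypothetical helper: write the two bridge-netfilter settings to k8s.conf.
# The directory defaults to /etc/sysctl.d, which "sysctl --system" reads.
write_k8s_sysctl() {
  local dir="${1:-/etc/sysctl.d}"
  mkdir -p "$dir"
  # Quoted 'EOF' keeps the heredoc literal (no variable expansion).
  cat > "$dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

The net.bridge.* keys only exist while the br_netfilter kernel module is loaded, so on a fresh machine you would typically run `modprobe br_netfilter`, then `write_k8s_sysctl && sysctl --system`.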

4. Install Docker/kubeadm/kubelet on all nodes

4.1 Install Docker

In Kubernetes v1.15, the version used here, the default CRI (container runtime) is Docker, so install Docker first.

[root@k8s-node1 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@k8s-node1 ~]# yum -y install docker-ce

[root@k8s-node1 ~]# systemctl enable docker && systemctl start docker

[root@k8s-node1 ~]# docker --version

4.2 Add the Alibaba Cloud YUM repository

[root@k8s-node2 ~]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
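yum reads repository definitions from /etc/yum.repos.d, so the repo file can be created with a heredoc instead of vim. `write_k8s_repo` is a hypothetical helper; the mirror URLs are copied from the article and may have changed since it was written.

```shell
# Hypothetical helper: write the Alibaba Cloud Kubernetes repo definition.
# The directory defaults to /etc/yum.repos.d, where yum looks for *.repo files.
write_k8s_repo() {
  local dir="${1:-/etc/yum.repos.d}"
  mkdir -p "$dir"
  cat > "$dir/kubernetes.repo" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

Run `write_k8s_repo`, then `yum makecache` to check that the repository metadata and GPG keys can be fetched.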

4.3 Install kubeadm, kubelet and kubectl

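[root@k8s-node1 ~]# yum -y install kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0

(Pin the packages to the Kubernetes version passed to kubeadm init, v1.15.0 here; an unpinned install pulls the newest release, which will not match.)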

[root@k8s-node1 ~]# systemctl enable kubelet.service

The kubeadm init command in the next section runs on the master only; replace --apiserver-advertise-address and --kubernetes-version with your own master IP and Kubernetes version.

5. Deploy the Kubernetes master

Run on the master host:

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.30.21 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.15.0 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

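When initialization completes, output like the following is shown. It matters: the cluster-specific parts (the token and hash in the kubeadm join command) differ on every cluster, so copy your own values rather than the ones shown here.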

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.30.21:6443 --token x8gdiq.sbcj8g4fmoocd5tl \

--discovery-token-ca-cert-hash sha256:0b48e70fa8a268f8b88cd69b02cf87d8a2bf2efe519bb88dfa558de20d4a9993

Set up kubectl (on the master):

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

The node is not ready yet, because no Pod network plugin has been installed:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 10m v1.15.0

6. Install the Pod network plugin (CNI)

Run on the master:

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml
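The --pod-network-cidr=10.244.0.0/16 given to kubeadm init matches the default pod network in flannel's manifest (the Network key inside net-conf.json); if you pick a different CIDR, the manifest has to be edited to agree. The hypothetical helpers below read that value from a downloaded copy of the manifest and compare it; they assume the key is written as "Network": "<cidr>" on a single line, as in the upstream file.

```shell
# Hypothetical helper: print the first "Network": "<cidr>" value in the manifest.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: succeed only if flannel's network equals the pod CIDR
# given to kubeadm init (default: 10.244.0.0/16, as in this article).
check_flannel_cidr() {
  local manifest="$1" pod_cidr="${2:-10.244.0.0/16}" net
  net="$(flannel_network "$manifest")"
  if [ "$net" != "$pod_cidr" ]; then
    echo "flannel Network is '$net' but --pod-network-cidr is '$pod_cidr'" >&2
    return 1
  fi
}
```

For example, download the manifest with wget from the URL above, run `check_flannel_cidr kube-flannel.yml 10.244.0.0/16`, and only then `kubectl apply -f kube-flannel.yml`.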

Check node readiness; the master node is now Ready:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 32m v1.15.0
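The node only turns Ready once the network plugin is running, which can take a minute. Instead of rerunning kubectl get nodes by hand, a polling loop can wait for it. `nodes_all_ready` is a hypothetical helper that parses the plain-text output of `kubectl get nodes --no-headers`; it treats any STATUS other than exactly Ready (including Ready,SchedulingDisabled) as not ready.

```shell
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin.
# Succeeds only if at least one node is listed and every STATUS ($2) is "Ready".
nodes_all_ready() {
  awk '{ n++; if ($2 != "Ready") bad++ } END { exit (n == 0 || bad > 0) }'
}
```

Usage: `until kubectl get nodes --no-headers | nodes_all_ready; do sleep 5; done`. kubectl also has a built-in equivalent: `kubectl wait --for=condition=Ready node --all --timeout=300s`.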

Make sure the pods in the kube-system namespace are running:

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-5j5fd 1/1 Running 0 33m

coredns-bccdc95cf-6plrt 1/1 Running 0 33m

etcd-k8s-master 1/1 Running 0 32m

kube-apiserver-k8s-master 1/1 Running 0 33m

kube-controller-manager-k8s-master 1/1 Running 0 32m

kube-flannel-ds-amd64-l8dg8 1/1 Running 0 8m1s

kube-proxy-lxn4w 1/1 Running 0 33m

kube-scheduler-k8s-master 1/1 Running 0 33m
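The same kind of check can be applied to the kube-system pods listed above. `pods_all_running` is a hypothetical helper that reads `kubectl get pods -n kube-system --no-headers` and requires STATUS Running plus a READY column whose two numbers match (e.g. 1/1); pods in any other state, such as Completed, are reported as not ready.

```shell
# Hypothetical helper: read "kubectl get pods --no-headers" output on stdin
# (columns: NAME READY STATUS RESTARTS AGE), print each pod that is not ready,
# and succeed only if at least one pod is listed and all are Running and ready.
pods_all_running() {
  awk '{
    n++
    split($2, ready, "/")   # READY column, e.g. "1/1" -> ready[1]=1, ready[2]=1
    if ($3 != "Running" || ready[1] != ready[2]) { print "not ready: " $1; bad++ }
  } END { exit (n == 0 || bad > 0) }'
}
```

Usage: `kubectl get pods -n kube-system --no-headers | pods_all_running && echo "control plane is up"`.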

7. Join the Kubernetes nodes

To add a new node to the cluster, run on that node the kubeadm join command printed by kubeadm init:

[root@k8s-node2 ~]# kubeadm join 192.168.30.21:6443 --token x8gdiq.sbcj8g4fmoocd5tl --discovery-token-ca-cert-hash sha256:0b48e70fa8a268f8b88cd69b02cf87d8a2bf2efe519bb88dfa558de20d4a9993
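The token in the join command expires (the default lifetime is 24 hours), so nodes added later need a fresh one: on the master, `kubeadm token create --print-join-command` prints a complete new join command. The sha256 value is not a secret; it is a hash of the cluster CA's public key, which lets the joining node verify it is talking to the right API server. The sketch below recomputes it with openssl; `ca_cert_hash` is a hypothetical name, /etc/kubernetes/pki/ca.crt is where kubeadm stores the CA certificate, and `openssl pkey` is used in place of the `openssl rsa` pipeline shown in the kubeadm docs so it also works if the CA key is not RSA.

```shell
# Hypothetical helper: recompute the value after "sha256:" in
# --discovery-token-ca-cert-hash, i.e. the SHA-256 digest of the CA
# certificate's DER-encoded public key (SubjectPublicKeyInfo).
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl pkey -pubin -outform der \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after "(stdin)= "
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` should print the same value as the kubeadm init output.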

Check the nodes:

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 81m v1.15.0

k8s-node1 Ready <none> 23m v1.15.0

k8s-node2 Ready <none> 26m v1.15.0

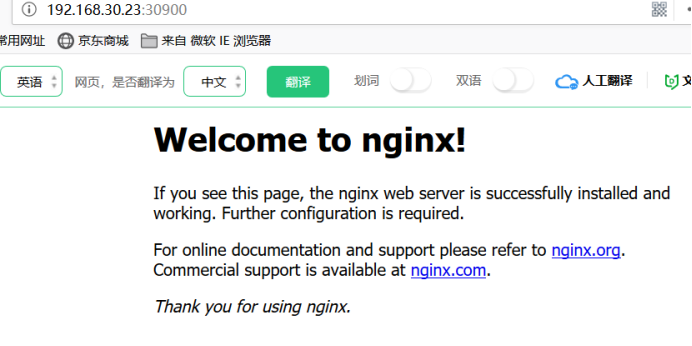

8. Test the Kubernetes cluster

Create a pod in the Kubernetes cluster and verify that it runs properly:

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

View the pod details:

Here I brought up only one node; the other had a swap problem and was not started, but this does not affect the deployment.

[root@k8s-master ~]# kubectl get pods,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-554b9c67f9-sfxh2 1/1 Running 0 5m7s 10.244.1.2 k8s-node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 96m <none>

service/nginx NodePort 10.1.66.144 <none> 80:30900/TCP 3m44s app=nginx

Access the application through a node:

Open http://NodeIP:NodePort in a browser, i.e. port 30900 here.
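The NodePort (30900 here) is assigned from the 30000-32767 range by default, so it differs per cluster. `node_port` is a hypothetical helper that pulls the first node port out of the PORT(S) column of `kubectl get svc`; `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly.

```shell
# Hypothetical helper: read "kubectl get svc" line(s) on stdin and print the
# node port of the first "port:nodePort/proto" field, e.g. "80:30900/TCP" -> 30900.
# ClusterIP services (e.g. "443/TCP", no colon) produce no output.
node_port() {
  awk '{
    for (i = 1; i <= NF; i++)
      if ($i ~ /^[0-9]+:[0-9]+\//) { split($i, p, "[:/]"); print p[2]; exit }
  }'
}
```

Usage: `curl http://192.168.30.23:$(kubectl get svc nginx --no-headers | node_port)` should return the nginx welcome page; any node's IP works, not only the node running the pod.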

9. Deploy the Dashboard

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

[root@k8s-master ~]# vim kubernetes-dashboard.yaml

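Line 112: set image: lizhenliang/kubernetes-dashboard-amd64:v1.10.1. This points to an image mirror reachable from mainland China; the default image is hosted on Google's registry.

Line 158: add type: NodePort to the Service spec, just above its ports section:

158 type: NodePort

159 ports:

160 - port: 443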
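The two manual edits to kubernetes-dashboard.yaml can be scripted instead of relying on line numbers, which shift if the file changes. Both helpers are hypothetical and assume the layout of the v1.10.1 recommended manifest: top-level keys at column 0, a document with kind: Service followed by spec:, and YAML documents separated by ---. Check the result with grep -n before applying. Alternatively, after applying the unmodified manifest, `kubectl -n kube-system patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'` changes the Service type in place.

```shell
# Hypothetical helper: point the dashboard image at a mirror, whatever registry
# prefix the manifest uses (assumes the tag is kubernetes-dashboard-amd64:v1.10.1).
use_mirror_image() {
  local f="$1" mirror="${2:-lizhenliang/kubernetes-dashboard-amd64:v1.10.1}"
  sed -i -E "s#(image:[[:space:]]*)[^[:space:]]*kubernetes-dashboard-amd64:v1\.10\.1#\1${mirror}#" "$f"
}

# Hypothetical helper: add "type: NodePort" right after the top-level "spec:"
# of the Service document, i.e. just above its "ports:" block. Runs only once.
add_nodeport_type() {
  local f="$1"
  grep -q '^  type: NodePort$' "$f" && return 0
  awk '
    /^---/            { in_svc = 0 }   # a new YAML document starts
    /^kind: Service$/ { in_svc = 1 }
    { print }
    in_svc && /^spec:$/ { print "  type: NodePort"; in_svc = 0 }
  ' "$f" > "$f.tmp" && mv "$f.tmp" "$f"
}
```

Usage: `use_mirror_image kubernetes-dashboard.yaml && add_nodeport_type kubernetes-dashboard.yaml`.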

Apply the Dashboard manifest:

[root@k8s-master ~]# kubectl apply -f kubernetes-dashboard.yaml

Check the pod status:

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-5j5fd 1/1 Running 0 121m

coredns-bccdc95cf-6plrt 1/1 Running 0 121m

etcd-k8s-master 1/1 Running 0 120m

kube-apiserver-k8s-master 1/1 Running 0 121m

kube-controller-manager-k8s-master 1/1 Running 0 120m

kube-flannel-ds-amd64-6m7ct 1/1 Running 0 64m

kube-flannel-ds-amd64-l8dg8 1/1 Running 0 95m

kube-proxy-lxn4w 1/1 Running 0 121m

kube-proxy-xdcgv 1/1 Running 0 64m

kube-scheduler-k8s-master 1/1 Running 0 121m

kubernetes-dashboard-79ddd5-t7q57 1/1 Running 0 82s

Look up the Dashboard's NodePort (31510 here) and open it over HTTPS, e.g. https://192.168.30.23:31510; plain HTTP does not work:

[root@k8s-master ~]# kubectl get pods,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-bccdc95cf-5j5fd 1/1 Running 0 127m

pod/coredns-bccdc95cf-6plrt 1/1 Running 0 127m

pod/etcd-k8s-master 1/1 Running 0 126m

pod/kube-apiserver-k8s-master 1/1 Running 0 126m

pod/kube-controller-manager-k8s-master 1/1 Running 0 126m

pod/kube-flannel-ds-amd64-6m7ct 1/1 Running 0 69m

pod/kube-flannel-ds-amd64-l8dg8 1/1 Running 0 101m

pod/kube-proxy-lxn4w 1/1 Running 0 127m

pod/kube-proxy-xdcgv 1/1 Running 0 69m

pod/kube-scheduler-k8s-master 1/1 Running 0 126m

pod/kubernetes-dashboard-79ddd5-t7q57 1/1 Running 0 6m53s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.1.0.10 <none> 53/UDP,53/TCP,9153/TCP 127m

service/kubernetes-dashboard NodePort 10.1.45.160 <none> 443:31510/TCP 6m53s

The login page offers two methods, Kubeconfig and Token; we log in with a token.

First create a service account and bind it to the built-in cluster-admin cluster role:

[root@k8s-master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

[root@k8s-master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

Find the dashboard-admin token secret:

[root@k8s-master ~]# kubectl get secret -n kube-system

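Copy the secret name, dashboard-admin-token-sx5gl here (the random suffix will differ on your cluster).

Show its details, then copy the token and paste it into the Token field on the web page: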

[root@k8s-master ~]# kubectl describe secret dashboard-admin-token-sx5gl -n kube-system

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tc3g1Z2wiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMTBmNTk4YWUtMWFlNS00YzNjLTgzZjUtOWRmNDg3MzJhNDVjIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.QYPP7SDQnPp062yb_4XrdOE8xkmTergaTTTPulDADvXzyG2udAEU5AKfHNDH1ZXu5pw9RN9OgX5xUwE_OzQXpPoE5Qt0x2M3VOdpscW2pOw_KOUfnYtf-Aq6Z8c9KgsNdAtUkBHwFbMucL3tDH-Uxb9AdBMX6q5W9jbGlfMa0M6tp2o4zIcoqpli1qAMI_FjvNfkmWX0x4akIzsVeoocewdjzB8Ca-VyqEFZXCMULQv5L8z1RszCXZ4VgOnkHQB6AiVUGmJ9B8iwtCZu-SW2iwWaT-4iQeQvtM3HQTl5aZycaI26qUlsuUtBj5eqyJqugSGlidXJs5TPdn_xmF-FZg
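The secret name suffix (sx5gl above) is random, so copying it by hand is error-prone. On Kubernetes v1.15 each service account automatically gets a token secret whose name is recorded in the account's secrets field; the hypothetical helpers below follow that reference and print only the token. On v1.24 and later, token secrets are no longer created automatically, and `kubectl create token dashboard-admin -n kube-system` is the usual replacement.

```shell
# Hypothetical helper: print the "token:" value from "kubectl describe secret"
# output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Hypothetical helper: look up the service account's token secret by reference
# instead of copying its random name, then print the token.
dashboard_admin_token() {
  local ns="${1:-kube-system}" sa="${2:-dashboard-admin}" secret
  secret="$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}')"
  kubectl -n "$ns" describe secret "$secret" | extract_token
}
```

`dashboard_admin_token` prints the token to paste into the login page. Treat it like a password: it grants cluster-admin.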

Zhejiang Public Security Network Filing No. 33010602011771
