# Differences between Kafka and RocketMQ

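RocketMQ brokers split a topic into queues, while Kafka brokers split a topic into partitions. RocketMQ manages metadata with NameServer, whereas Kafka (the 2.7.2 release deployed below) manages it with ZooKeeper. Otherwise the two work on similar principles.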

# Handling consumer backlog (lag)

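Within a consumer group, each partition is assigned to only one consumer (partition to consumer is one-to-one).

A consumer in the group can be assigned several partitions (consumer to partition is one-to-many).

1. If there are fewer partitions than consumers, the extra consumers sit idle. Increase the partition count so that it matches the number of consumers.

2. If there are more partitions than consumers, add consumers (up to the partition count).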
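To make the one-consumer-per-partition rule concrete, here is a minimal Python sketch of a hypothetical round-robin assignor. `assign_round_robin` and `idle_consumers` are illustrative names, not Kafka APIs, and Kafka's real assignors (RangeAssignor, RoundRobinAssignor, StickyAssignor) differ in detail. The point is only that each partition goes to a single consumer, so consumers beyond the partition count receive nothing.

```python
def assign_round_robin(partitions, consumers):
    """Hypothetical, simplified assignor (not Kafka's implementation).

    Each partition number 0..partitions-1 is given to exactly one consumer,
    dealt out in turn; a consumer may end up with several partitions or none.
    """
    assignment = {consumer: [] for consumer in consumers}
    for partition in range(partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


def idle_consumers(assignment):
    """Consumers that received no partition and therefore consume nothing."""
    return [consumer for consumer, parts in assignment.items() if not parts]


if __name__ == "__main__":
    # 2 partitions, 3 consumers: one consumer is idle (case 1)
    small = assign_round_robin(2, ["c1", "c2", "c3"])
    print(small, idle_consumers(small))
    # 6 partitions, 2 consumers: each consumer carries 3 partitions (case 2)
    print(assign_round_robin(6, ["c1", "c2"]))
```

With 2 partitions and 3 consumers, `c3` is idle, so only more partitions help; with 6 partitions and 2 consumers, adding consumers spreads the partitions and the backlog across more processes.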

#### Deploy the ZooKeeper cluster (odd number of nodes: 3, 5, 7, ...)
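The odd node count follows from majority voting: an ensemble of n servers stays available only while a majority of them, floor(n/2) + 1, are up. This short Python sketch (function names are illustrative) shows that a 4th or 6th server raises the quorum size without increasing the number of failures the ensemble tolerates.

```python
def quorum(n):
    """Number of servers that must agree: a strict majority of n."""
    return n // 2 + 1


def tolerated_failures(n):
    """Servers that can be down while a majority is still reachable."""
    return n - quorum(n)


if __name__ == "__main__":
    for n in range(3, 8):
        print(f"{n} servers: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

3 and 4 servers both tolerate one failure, and 5 and 6 both tolerate two, so the even sizes only add hardware.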

1. Deploy the ZooKeeper cluster first, usually on 3 nodes. ZooKeeper depends on Java, so install a JDK first:

yum install java-1.8.0-openjdk-devel -y

java -version # check the JDK version

2. Create the working directory (run on all 3 nodes)

mkdir -p /usr/local/zookeeper

Create the data directory for snapshots and transaction logs (run on all 3 nodes)

mkdir -p /usr/local/zookeeper/dataDir

3. Download and extract the package (run on all 3 nodes)

cd /usr/local/zookeeper

wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.8.3/apache-zookeeper-3.8.3-bin.tar.gz

Alternatively, download it in a browser: https://dlcdn.apache.org/zookeeper/zookeeper-3.8.3/apache-zookeeper-3.8.3-bin.tar.gz

tar -xvf apache-zookeeper-3.8.3-bin.tar.gz

mv apache-zookeeper-3.8.3-bin apache-zookeeper

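4. Create the myid file (on all 3 nodes, with a different value on each). The value must match N in the server.N entries of zoo.cfg in the next step.

echo "1" > /usr/local/zookeeper/dataDir/myid # on node 1 (10.x.x.2)

echo "2" > /usr/local/zookeeper/dataDir/myid # on node 2 (10.x.x.3)

echo "3" > /usr/local/zookeeper/dataDir/myid # on node 3 (10.x.x.4)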

5. Edit the configuration on one node, then scp zoo.cfg to the same directory on the other 2 nodes

cd /usr/local/zookeeper/apache-zookeeper/conf

mv zoo_sample.cfg zoo.cfg

vim zoo.cfg

grep -Ev "^#|^$" zoo.cfg # show only the effective (non-comment, non-empty) settings

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/usr/local/zookeeper/dataDir

server.1=10.x.x.2:2888:3888

server.2=10.x.x.3:2888:3888

server.3=10.x.x.4:2888:3888

clientPort=2181

maxClientCnxns=60
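Because each server.N line must correspond to the myid on that host, both can be generated from a single host list so they cannot drift apart. This is a minimal Python sketch; `ensemble_config` is a hypothetical helper, and the host strings are the placeholders used in this document.

```python
def ensemble_config(hosts, peer_port=2888, election_port=3888):
    """Return the server.N lines for zoo.cfg and the myid value for each host.

    IDs start at 1 and follow the order of `hosts`, so server.N and the
    myid file written on that host always agree.
    """
    server_lines = []
    myids = {}
    for server_id, host in enumerate(hosts, start=1):
        server_lines.append(f"server.{server_id}={host}:{peer_port}:{election_port}")
        myids[host] = str(server_id)
    return server_lines, myids


if __name__ == "__main__":
    lines, myids = ensemble_config(["10.x.x.2", "10.x.x.3", "10.x.x.4"])
    print("\n".join(lines))
    for host, myid in myids.items():
        print(f'{host}: echo "{myid}" > /usr/local/zookeeper/dataDir/myid')
```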

6. Disable the firewall (alternatively, keep it running and open ports 2181, 2888 and 3888)

systemctl stop firewalld && systemctl disable firewalld

7. Configure a systemd service

cd /usr/lib/systemd/system/

Create the ZooKeeper service file:

cat zookeeper.service

[Unit]

Description=Zookeeper Server

After=network-online.target remote-fs.target nss-lookup.target

Wants=network-online.target

[Service]

Type=forking

ExecStart=/usr/local/zookeeper/apache-zookeeper/bin/zkServer.sh start

ExecStop=/usr/local/zookeeper/apache-zookeeper/bin/zkServer.sh stop

User=root

Group=root

[Install]

WantedBy=multi-user.target

Reload systemd, then enable and start the service

systemctl daemon-reload

systemctl enable --now zookeeper

8. Check the cluster status (one node should report Mode: leader and the others Mode: follower)

/usr/local/zookeeper/apache-zookeeper/bin/zkServer.sh status
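When the status is collected from all three nodes, exactly one should be the leader and the rest followers. A minimal Python sketch of that check, assuming the status output contains a line of the form `Mode: leader` or `Mode: follower`; `parse_mode` and `ensemble_healthy` are hypothetical helpers, and the sample strings are illustrative rather than captured output.

```python
import re


def parse_mode(status_output):
    """Extract the value of the 'Mode:' line, or None if the line is absent."""
    match = re.search(r"^Mode:\s*(\w+)", status_output, re.MULTILINE)
    return match.group(1) if match else None


def ensemble_healthy(status_outputs):
    """True when exactly one node is leader and every other node is a follower."""
    modes = [parse_mode(output) for output in status_outputs]
    return modes.count("leader") == 1 and all(m in ("leader", "follower") for m in modes)


if __name__ == "__main__":
    samples = [
        "Client port found: 2181.\nMode: follower\n",
        "Client port found: 2181.\nMode: leader\n",
        "Client port found: 2181.\nMode: follower\n",
    ]
    print(ensemble_healthy(samples))
```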

#### Deploy the Kafka cluster

1. Create the working directory (run on all 3 nodes)

mkdir -p /usr/local/kafka

2. Download and extract the package (run on all 3 nodes)

cd /usr/local/kafka

wget https://archive.apache.org/dist/kafka/2.7.2/kafka_2.12-2.7.2.tgz # version 2.7.2 (Scala 2.12 build)

tar zxf kafka_2.12-2.7.2.tgz

mv kafka_2.12-2.7.2 kafka

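3. cd /usr/local/kafka/kafka/config and edit server.properties on all 3 nodes. Only broker.id, listeners, zookeeper.connect and log.dirs need attention; broker.id and listeners must be different on each node.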

#Node 1#

vim server.properties

broker.id=0

listeners=PLAINTEXT://10.x.x.2:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/var/log/kafka-logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0

#Node 2#

broker.id=1

listeners=PLAINTEXT://10.x.x.3:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/var/log/kafka-logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0

#Node 3#

broker.id=2

listeners=PLAINTEXT://10.x.x.4:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/var/log/kafka-logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0
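The three files differ only in broker.id and listeners, so the per-node settings can be rendered from one template. A minimal Python sketch; `broker_overrides` is a hypothetical helper, the 0, 1, 2 numbering is an assumption (any unique integers work), and only the four keys called out in step 3 are rendered, with the remaining settings left as in the files above.

```python
ZK_CONNECT = "10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181"


def broker_overrides(hosts, port=9092, log_dir="/var/log/kafka-logs"):
    """Return {host: properties text} for the settings edited per node."""
    rendered = {}
    for broker_id, host in enumerate(hosts):
        props = {
            "broker.id": str(broker_id),  # must be unique per broker
            "listeners": f"PLAINTEXT://{host}:{port}",  # this node's own address
            "log.dirs": log_dir,
            "zookeeper.connect": ZK_CONNECT,  # identical on every broker
        }
        rendered[host] = "\n".join(f"{key}={value}" for key, value in props.items())
    return rendered


if __name__ == "__main__":
    for host, text in broker_overrides(["10.x.x.2", "10.x.x.3", "10.x.x.4"]).items():
        print(f"# {host}\n{text}\n")
```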

4. Configure Kafka as a systemd service by creating the service file (run on all 3 nodes)

cd /lib/systemd/system/

vim kafka.service

[Unit]

Description=Kafka Server

After=network-online.target remote-fs.target nss-lookup.target

Wants=network-online.target

[Service]

Type=forking

ExecStart=/usr/local/kafka/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/kafka/config/server.properties

ExecStop=/usr/local/kafka/kafka/bin/kafka-server-stop.sh

User=root

Group=root

[Install]

WantedBy=multi-user.target

5. Reload systemd

systemctl daemon-reload

Enable Kafka at boot and start it now

systemctl enable --now kafka

# Kafka username/password authentication with SASL/PLAIN

1. Modify server.properties on nodes 1, 2 and 3 (node 1 is shown; use each node's own broker.id and IP address)

cat /usr/local/kafka/kafka/config/server.properties

broker.id=0

listeners=SASL_PLAINTEXT://10.x.x.2:9092

advertised.listeners=SASL_PLAINTEXT://10.x.x.2:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.enabled.mechanisms=PLAIN

sasl.mechanism.inter.broker.protocol=PLAIN

authorizer.class.name=kafka.security.authorizer.AclAuthorizer

super.users=User:admin

allow.everyone.if.no.acl.found=true

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/var/log/kafka-logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0

delete.topic.enable=true

auto.create.topics.enable=true

2. Add the SASL (JAAS) configuration file

cat /usr/local/kafka/kafka/config/kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="Aa123456"

user_admin="Aa123456"

user_producer="Aa123456"

user_consumer="Aa123456";

};

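Note: this file selects the PLAIN mechanism through org.apache.kafka.common.security.plain.PlainLoginModule and defines the users. username and password are the credentials this broker uses to open connections to the other brokers in the cluster. Each user_<name>="<password>" entry defines a user that clients can connect as; for example, user_producer="Aa123456" defines the user producer with the password Aa123456. The password of username="admin" must be the same as the password of user_admin, otherwise authentication fails.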
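The consistency rule in the note (the broker's own username and password must also appear as a matching user_ entry) can be enforced by generating the file instead of editing it by hand. A minimal Python sketch; `kafka_server_jaas` is a hypothetical helper, not part of Kafka.

```python
PLAIN_MODULE = "org.apache.kafka.common.security.plain.PlainLoginModule"


def kafka_server_jaas(username, password, users):
    """Render a KafkaServer section for SASL/PLAIN.

    `users` maps user names to passwords; each becomes a user_<name> entry.
    The broker's own username must be one of them with the same password.
    """
    if users.get(username) != password:
        raise ValueError(
            f'user_{username} must exist and match the password of username="{username}"'
        )
    lines = [
        "KafkaServer {",
        f"  {PLAIN_MODULE} required",
        f'  username="{username}"',
        f'  password="{password}"',
    ]
    lines += [f'  user_{name}="{pw}"' for name, pw in users.items()]
    lines[-1] += ";"  # the login module's option list ends with a semicolon
    lines.append("};")
    return "\n".join(lines)


if __name__ == "__main__":
    print(kafka_server_jaas(
        "admin", "Aa123456",
        {"admin": "Aa123456", "producer": "Aa123456", "consumer": "Aa123456"},
    ))
```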

3. Modify the start script to add -Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_server_jaas.conf (here it is appended to the KAFKA_HEAP_OPTS export in the script)

cat /usr/local/kafka/kafka/bin/kafka-server-start.sh

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G -Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_server_jaas.conf"

4. Restart the Kafka service (systemctl restart kafka)

5. Create the client authentication (JAAS) file

cat /usr/local/kafka/kafka/config/kafka_client_jaas.conf

KafkaClient {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="Aa123456";

};

6. Add the client JAAS file to kafka-console-producer.sh and kafka-console-consumer.sh

cat /usr/local/kafka/kafka/bin/kafka-console-producer.sh

export KAFKA_HEAP_OPTS="-Xmx512M -Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_client_jaas.conf"

cat /usr/local/kafka/kafka/bin/kafka-console-consumer.sh

export KAFKA_HEAP_OPTS="-Xmx512M -Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_client_jaas.conf"

7. Verify from a client

Create a file sasl.conf with the client security settings (placed at /usr/local/kafka/sasl.conf here):

cat /usr/local/kafka/sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

Set an environment variable that points to the client JAAS file:

export KAFKA_OPTS="-Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_client_jaas.conf"

#Persist the variable for new login shells

echo 'export KAFKA_OPTS="-Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_client_jaas.conf"' >> /etc/profile

source /etc/profile

/usr/local/kafka/kafka/bin/kafka-topics.sh --list --bootstrap-server 10.x.x.2:9092 --command-config /usr/local/kafka/sasl.conf

/usr/local/kafka/kafka/bin/kafka-console-producer.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --topic myj-kafka-topic --producer.config /usr/local/kafka/kafka/config/producer.properties --producer-property security.protocol=SASL_PLAINTEXT --producer-property sasl.mechanism=PLAIN # produce messages

/usr/local/kafka/kafka/bin/kafka-console-consumer.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --topic myj-kafka-topic --from-beginning --consumer-property security.protocol=SASL_PLAINTEXT --consumer-property sasl.mechanism=PLAIN # consume from the beginning with the new consumer client
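Instead of exporting KAFKA_OPTS, a client can carry its credentials in the properties file itself through the standard `sasl.jaas.config` client property, which is what the `--command-config` and `--producer-property` examples in the SCRAM section do. A minimal Python sketch that renders such a file for either mechanism; `client_properties` is a hypothetical helper and the credentials are placeholders.

```python
LOGIN_MODULES = {
    "PLAIN": "org.apache.kafka.common.security.plain.PlainLoginModule",
    "SCRAM-SHA-256": "org.apache.kafka.common.security.scram.ScramLoginModule",
    "SCRAM-SHA-512": "org.apache.kafka.common.security.scram.ScramLoginModule",
}


def client_properties(mechanism, username, password):
    """Render client properties for a SASL_PLAINTEXT listener.

    Raises KeyError for a mechanism not listed in LOGIN_MODULES.
    """
    module = LOGIN_MODULES[mechanism]
    return "\n".join([
        "security.protocol=SASL_PLAINTEXT",
        f"sasl.mechanism={mechanism}",
        f'sasl.jaas.config={module} required username="{username}" password="{password}";',
    ]) + "\n"


if __name__ == "__main__":
    # Save this output to a file and pass it with --command-config,
    # --producer.config or --consumer.config.
    print(client_properties("PLAIN", "admin", "Aa123456"), end="")
```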

# Kafka username/password authentication with SASL/SCRAM-SHA-256

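1. Create SCRAM credentials

Create the inter-broker user admin. It must exist before SCRAM is enabled on the brokers, otherwise the brokers fail to start. Because no broker can authenticate a SCRAM client yet, write this first credential directly to ZooKeeper:

/usr/local/kafka/kafka/bin/kafka-configs.sh --zookeeper 10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181 --alter --add-config 'SCRAM-SHA-256=[password=Aa123456],SCRAM-SHA-512=[password=Aa123456]' --entity-type users --entity-name admin

Once the brokers are running with SCRAM enabled, credentials can also be managed through the brokers, authenticating as an existing SCRAM user:

/usr/local/kafka/kafka/bin/kafka-configs.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --alter --add-config 'SCRAM-SHA-256=[password=Aa123456],SCRAM-SHA-512=[password=Aa123456]' --entity-type users --entity-name admin --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";')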

#List all user credentials

/usr/local/kafka/kafka/bin/kafka-configs.sh --zookeeper 10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181 --describe --entity-type users

#Delete credentials

/usr/local/kafka/kafka/bin/kafka-configs.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --alter --delete-config 'SCRAM-SHA-256,SCRAM-SHA-512' --entity-type users --entity-name admin --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";')

#Update a password

/usr/local/kafka/kafka/bin/kafka-configs.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --entity-type users --entity-name admin --alter --add-config 'SCRAM-SHA-256=[password=LtSwpjau2VHHLmpZxZ],SCRAM-SHA-512=[password=LtSwpjau2VHHLmpZxZ]' --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";')
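For background on what these commands store: a SCRAM credential is not the password itself but values derived from it as defined in RFC 5802 (salt, iteration count, StoredKey, ServerKey). The Python sketch below follows that derivation with the standard library. `scram_credential` is a hypothetical helper, the 4096 iteration count and 16-byte salt are assumptions, password normalization (SASLprep) is skipped, which only matters for non-ASCII passwords, and it does not claim to reproduce Kafka's exact storage format.

```python
import base64
import hashlib
import hmac
import os


def scram_credential(password, iterations=4096, salt=None, digest="sha256"):
    """Derive SCRAM values (RFC 5802) for one password.

    SaltedPassword = PBKDF2-HMAC(password, salt, iterations)
    StoredKey      = H(HMAC(SaltedPassword, "Client Key"))
    ServerKey      = HMAC(SaltedPassword, "Server Key")
    """
    if salt is None:
        salt = os.urandom(16)  # illustrative salt length (assumption)
    salted = hashlib.pbkdf2_hmac(digest, password.encode("utf-8"), salt, iterations)
    client_key = hmac.new(salted, b"Client Key", digest).digest()
    stored_key = hashlib.new(digest, client_key).digest()
    server_key = hmac.new(salted, b"Server Key", digest).digest()

    def b64(raw):
        return base64.b64encode(raw).decode("ascii")

    return {
        "salt": b64(salt),
        "stored_key": b64(stored_key),
        "server_key": b64(server_key),
        "iterations": iterations,
    }


if __name__ == "__main__":
    print(scram_credential("Aa123456"))
```

The server only needs StoredKey and ServerKey to verify a client proof, which is why the broker-side store never holds the plain password, unlike the user_ entries of the PLAIN JAAS file.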

#Client configuration

#cat /usr/local/kafka/sasl.conf

#security.protocol=SASL_PLAINTEXT

#sasl.mechanism=SCRAM-SHA-256

2. Modify server.properties on nodes 1, 2 and 3 (node 1 is shown; use each node's own broker.id and IP address)

cat /usr/local/kafka/kafka/config/server.properties

broker.id=0

listeners=SASL_PLAINTEXT://10.x.x.2:9092

advertised.listeners=SASL_PLAINTEXT://10.x.x.2:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.enabled.mechanisms=SCRAM-SHA-256

sasl.mechanism.inter.broker.protocol=SCRAM-SHA-256

authorizer.class.name=kafka.security.authorizer.AclAuthorizer

allow.everyone.if.no.acl.found=true

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/var/log/kafka-logs

num.partitions=10

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.x.x.2:2181,10.x.x.3:2181,10.x.x.4:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0

delete.topic.enable=true

auto.create.topics.enable=true

3. Add the SASL (JAAS) configuration file

cat /usr/local/kafka/kafka/config/kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.scram.ScramLoginModule required

username="admin"

password="Aa123456";

};

4. Modify the start script

vim /usr/local/kafka/kafka/bin/kafka-server-start.sh

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G -Djava.security.auth.login.config=/usr/local/kafka/kafka/config/kafka_server_jaas.conf"

5. Restart Kafka

systemctl daemon-reload

systemctl restart kafka

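#6. Create the client JAAS file (optional: not needed when sasl.jaas.config is passed on the command line, as in step 7)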

#cat /usr/local/kafka/kafka/sasl/kafka_client_jaas.conf

#KafkaClient {

# org.apache.kafka.common.security.scram.ScramLoginModule required

# username="admin"

# password="Aa123456";

#};

7. Verify from a client

#Producer

/usr/local/kafka/kafka/bin/kafka-console-producer.sh --broker-list 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --topic myj-topic --producer-property security.protocol=SASL_PLAINTEXT --producer-property sasl.mechanism=SCRAM-SHA-256 --producer-property sasl.jaas.config='org.apache.kafka.common.security.scram.ScramLoginModule required username="xxx" password="xxxxxx";'

#Consumer

/usr/local/kafka/kafka/bin/kafka-console-consumer.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --topic myj-topic --group myj-group --from-beginning --consumer-property security.protocol=SASL_PLAINTEXT --consumer-property sasl.mechanism=SCRAM-SHA-256 --consumer-property sasl.jaas.config='org.apache.kafka.common.security.scram.ScramLoginModule required username="xxx" password="xxxxxx";' # joins consumer group myj-group, creating it if it does not exist

#List consumer groups

/usr/local/kafka/kafka/bin/kafka-consumer-groups.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --list --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";')

#Check whether the consumer group has a backlog (see the LAG column)

/usr/local/kafka/kafka/bin/kafka-consumer-groups.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --describe --group myj-group --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";')
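With many partitions, the LAG column is easier to read as a per-topic total (LAG is LOG-END-OFFSET minus CURRENT-OFFSET). A minimal Python sketch that parses the text printed by `--describe`; `lag_by_topic` is a hypothetical helper, and it assumes the header row starts with GROUP and contains whitespace-separated TOPIC and LAG columns, as in the illustrative sample used to test it. A LAG of `-` (no committed offset yet) is counted separately rather than treated as zero.

```python
def lag_by_topic(describe_output):
    """Return ({topic: total_lag}, number_of_rows_without_a_lag_value)."""
    lines = [line for line in describe_output.splitlines() if line.strip()]
    header_index = next(
        (i for i, line in enumerate(lines) if line.split()[0] == "GROUP"), None
    )
    if header_index is None:
        return {}, 0  # e.g. "Consumer group ... does not exist."
    header = lines[header_index].split()
    topic_col, lag_col = header.index("TOPIC"), header.index("LAG")
    totals, unknown = {}, 0
    for row in lines[header_index + 1:]:
        cols = row.split()
        if len(cols) <= lag_col:
            continue  # not a data row
        if cols[lag_col] == "-":
            unknown += 1  # partition without a committed offset
            continue
        topic = cols[topic_col]
        totals[topic] = totals.get(topic, 0) + int(cols[lag_col])
    return totals, unknown
```

Feeding it the saved output of the command above gives one number per topic, which makes it easy to tell whether adding consumers (or partitions) is actually reducing the backlog.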

#Delete a consumer group

/usr/local/kafka/kafka/bin/kafka-consumer-groups.sh --delete --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --group myj-group --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";')

### Common commands

1. /usr/local/kafka/kafka/bin/kafka-topics.sh --create --topic kafka-myj-topic --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --partitions 2 --replication-factor 2 --config retention.ms=604800000 --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";') # create topic kafka-myj-topic with 2 partitions, 2 replicas and 7-day retention

2. /usr/local/kafka/kafka/bin/kafka-topics.sh --list --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --exclude-internal --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";') # list topics

3. /usr/local/kafka/kafka/bin/kafka-topics.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --delete --topic kafka-myj-topic --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";') # delete a topic

4. /usr/local/kafka/kafka/bin/kafka-topics.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --describe --topic myj-kafka-topic --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";') # describe a topic

5. /usr/local/kafka/kafka/bin/kafka-configs.sh --version # show the Kafka version

6. /usr/local/kafka/kafka/bin/kafka-topics.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --alter --topic myj-kafka-topic --partitions 3 --command-config <(echo -e 'security.protocol=SASL_PLAINTEXT\nsasl.mechanism=SCRAM-SHA-256\nsasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="xxxx" password="xxxx";') # increase the topic's partition count to 3 (partitions can only be added, never removed)

7. /usr/local/kafka/kafka/bin/kafka-console-producer.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --topic myj-kafka-topic --producer.config /usr/local/kafka/kafka/config/producer.properties --producer-property security.protocol=SASL_PLAINTEXT --producer-property sasl.mechanism=PLAIN # produce messages (PLAIN setup)

8. /usr/local/kafka/kafka/bin/kafka-console-consumer.sh --bootstrap-server 10.x.x.2:9092,10.x.x.3:9092,10.x.x.4:9092 --topic myj-kafka-topic --from-beginning --consumer-property security.protocol=SASL_PLAINTEXT --consumer-property sasl.mechanism=PLAIN # consume from the beginning with the new consumer client (PLAIN setup)
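Two details of the commands above can be checked in a minimal Python sketch: retention.ms=604800000 is 7 days expressed in milliseconds, and `--alter --partitions` can only increase a topic's partition count. `retention_ms` and `check_partition_increase` are hypothetical helpers for illustration, not Kafka tooling.

```python
from datetime import timedelta


def retention_ms(days):
    """Convert a retention period in days to the value expected by retention.ms."""
    return int(timedelta(days=days).total_seconds() * 1000)


def check_partition_increase(current, requested):
    """Hypothetical pre-check: Kafka only ever adds partitions to a topic."""
    if requested <= current:
        raise ValueError(
            f"partition count can only be increased: {current} -> {requested}"
        )


if __name__ == "__main__":
    print(retention_ms(7))  # value used with --config retention.ms above
    check_partition_increase(2, 3)  # accepted: 2 -> 3 is an increase
```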

#### Deploy Kafka Manager

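Introduction: to simplify the work of developers and service engineers who maintain Kafka clusters, Yahoo built a web-based management tool called Kafka Manager. It makes it easy to spot topics that are unevenly distributed across the cluster, or partitions that are unevenly distributed across brokers. It supports managing multiple clusters, preferred replica election, replica reassignment and topic creation, and it is also a convenient way to get a quick overview of a cluster.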

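1. Manage multiple Kafka clusters

2. Conveniently inspect cluster state (topics, brokers, replica distribution, partition distribution)

3. Run preferred replica election

4. Reassign partitions based on the current partition layout

5. Create topics with selected topic configs (0.8.1.1 and 0.8.2 have different configs)

6. Delete topics (only supported on 0.8.2 and later, and delete.topic.enable=true must be set in the broker config)

7. The topic list indicates which topics are marked for deletion (0.8.2 and later)

8. Add partitions to an existing topic

9. Update the config of an existing topic

10. Batch reassign partitions for multiple topics

11. Batch reassign partitions for multiple topics (optionally choosing which brokers the partitions go to)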

1. Download and extract the package

https://pan.baidu.com/s/1qYifoa4 (extraction code: el4o)

2. unzip kafka-manager-1.3.3.7.zip (in /home, matching the /home/kafka-manager paths used below)

mv kafka-manager-1.3.3.7 kafka-manager

cd kafka-manager/

vim /home/kafka-manager/conf/application.conf

kafka-manager.zkhosts="10.103.x.2:2181,10.103.x.3:2181,10.103.x.4:2181"

3. Start the service

Kafka Manager listens on port 9000 by default. Use -Dhttp.port to choose another port and -Dconfig.file=conf/application.conf to specify the configuration file:

nohup /home/kafka-manager/bin/kafka-manager -Dconfig.file=/home/kafka-manager/conf/application.conf -Dhttp.port=8888 &

3.1 Start script

start-kafkaManager.sh

#!/bin/bash

# Kafka Manager installation directory

dir_home=/home/kafka-manager

# Serve the web UI on port 8888

nohup $dir_home/bin/kafka-manager -Dconfig.file=$dir_home/conf/application.conf -Dhttp.port=8888 >> $dir_home/start-kafkaManager.log 2>&1 &

4. Open the web UI

http://10.103.x.2:8888

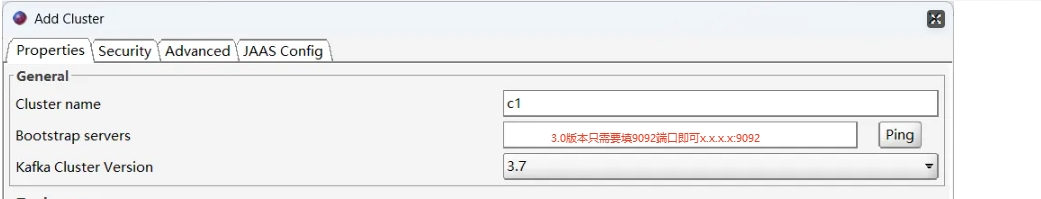

### Kafka GUI tool: Offset Explorer

### Offset Explorer 3.0

Download: https://www.kafkatool.com/download2/offsetexplorer_64bit.exe # version 2

https://www.kafkatool.com/download3/offsetexplorer_64bit.exe # version 3

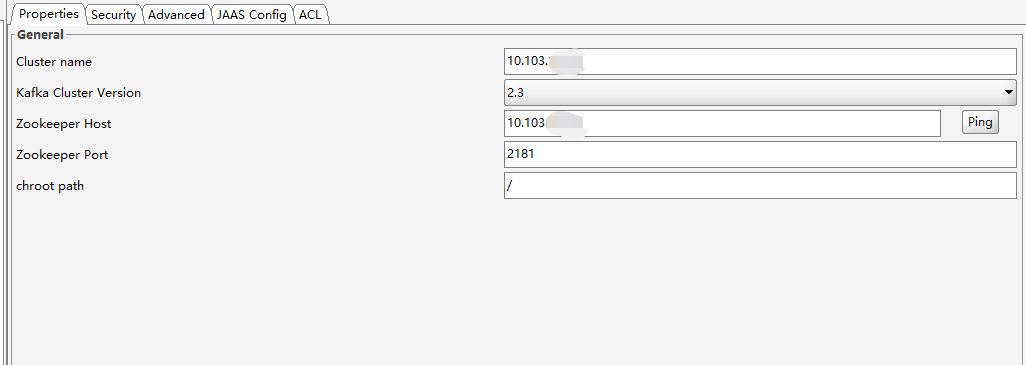

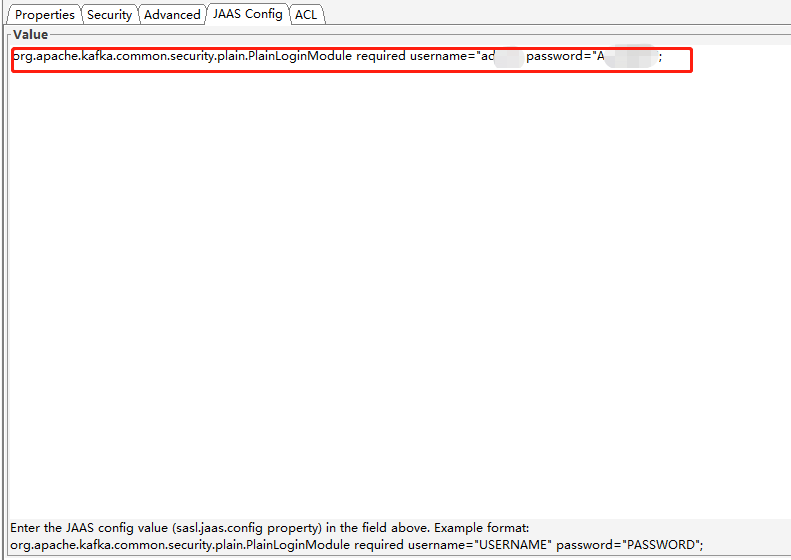

# Configuring an authenticated connection (Offset Explorer 2.0)

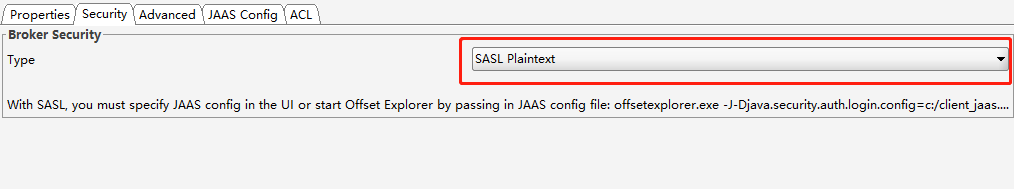

1. Enter the host IP and port

2. Set Type to SASL Plaintext

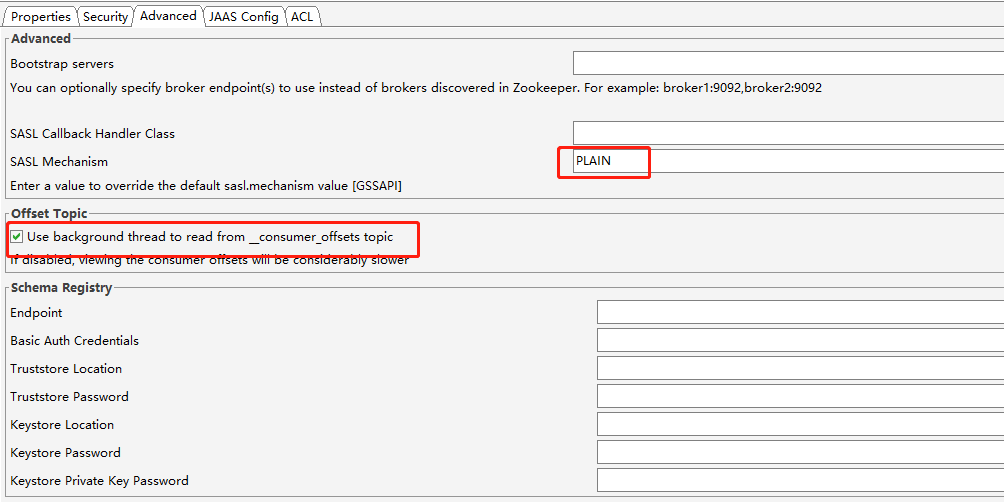

3. Set SASL Mechanism to the mechanism configured on the brokers: PLAIN or SCRAM-SHA-256

4. Enter the JAAS config with the account and password, then click Connect

#PLAIN authentication

org.apache.kafka.common.security.plain.PlainLoginModule required username="USERNAME" password="PASSWORD";

#SCRAM-SHA-256 authentication

org.apache.kafka.common.security.scram.ScramLoginModule required username="USERNAME" password="PASSWORD";

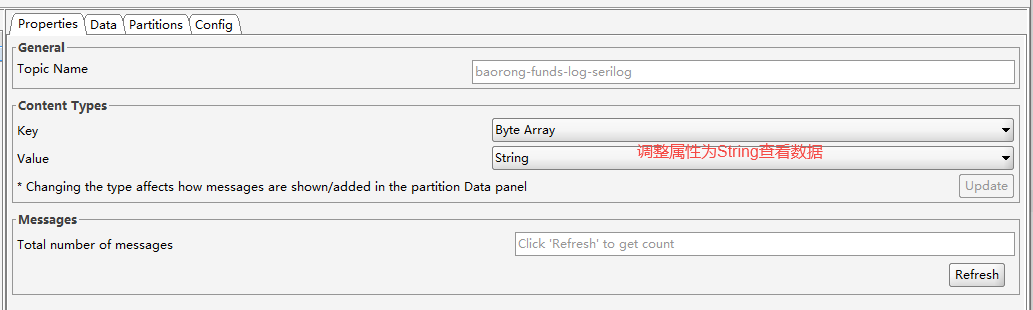

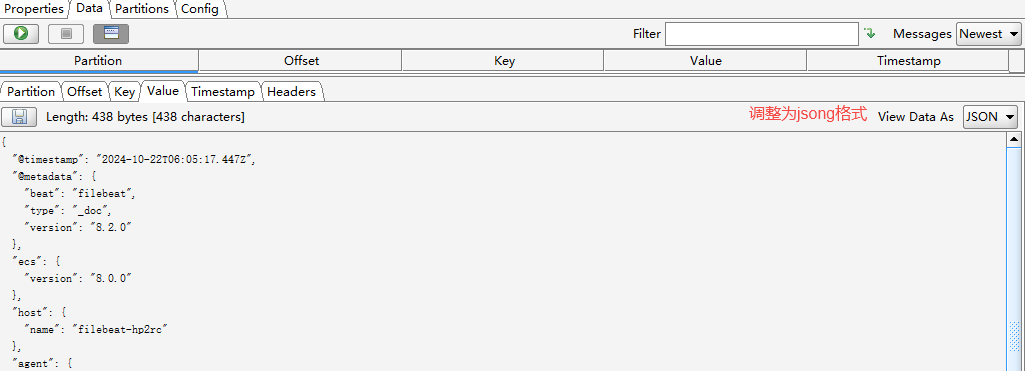

5. Browse topic data

浙公网安备 33010602011771号
