作业一

要求:

▪

熟练掌握 Selenium 查找 HTML 元素、爬取 Ajax 网页数据、等待 HTML 元素等内

容。

▪

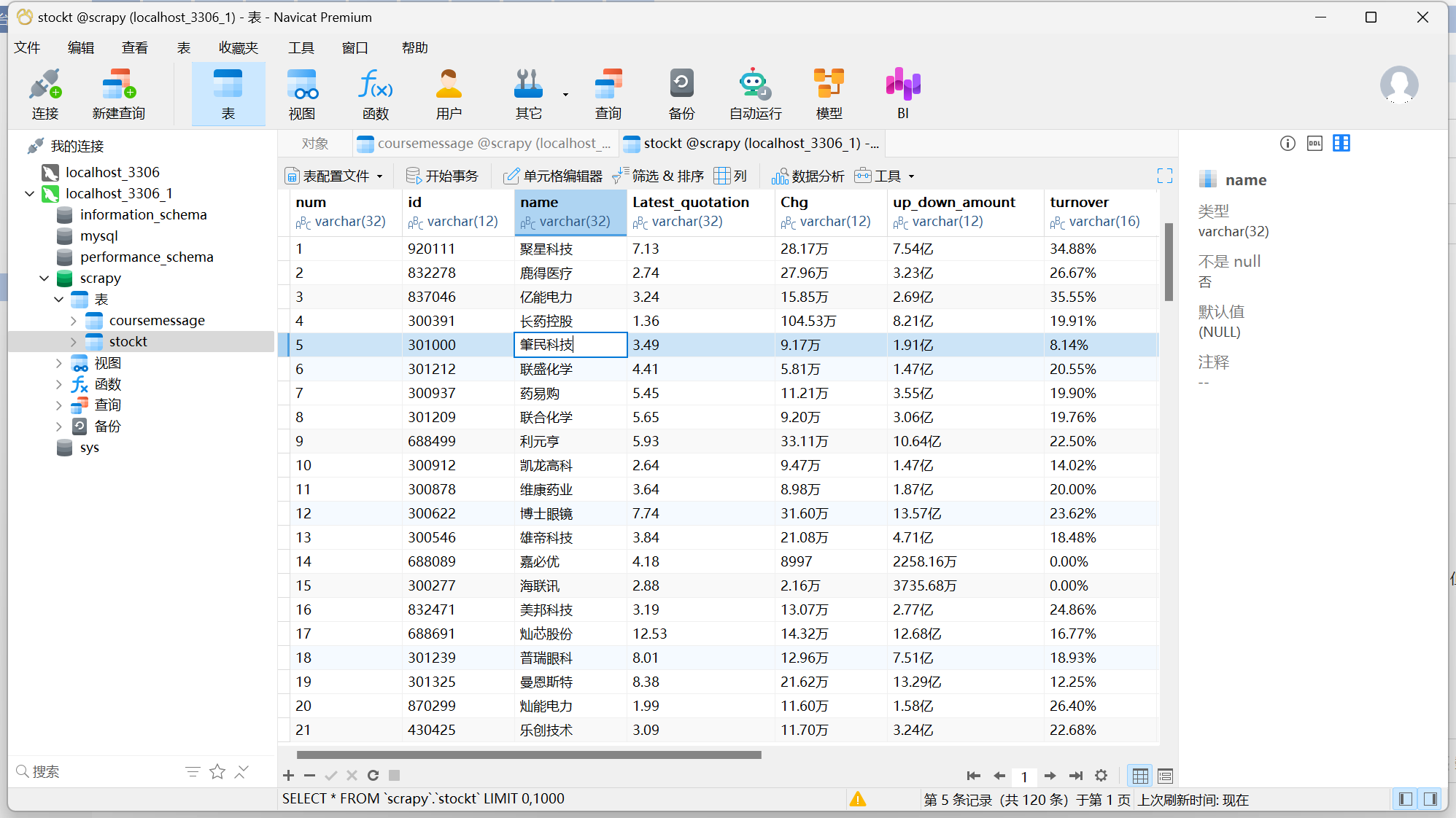

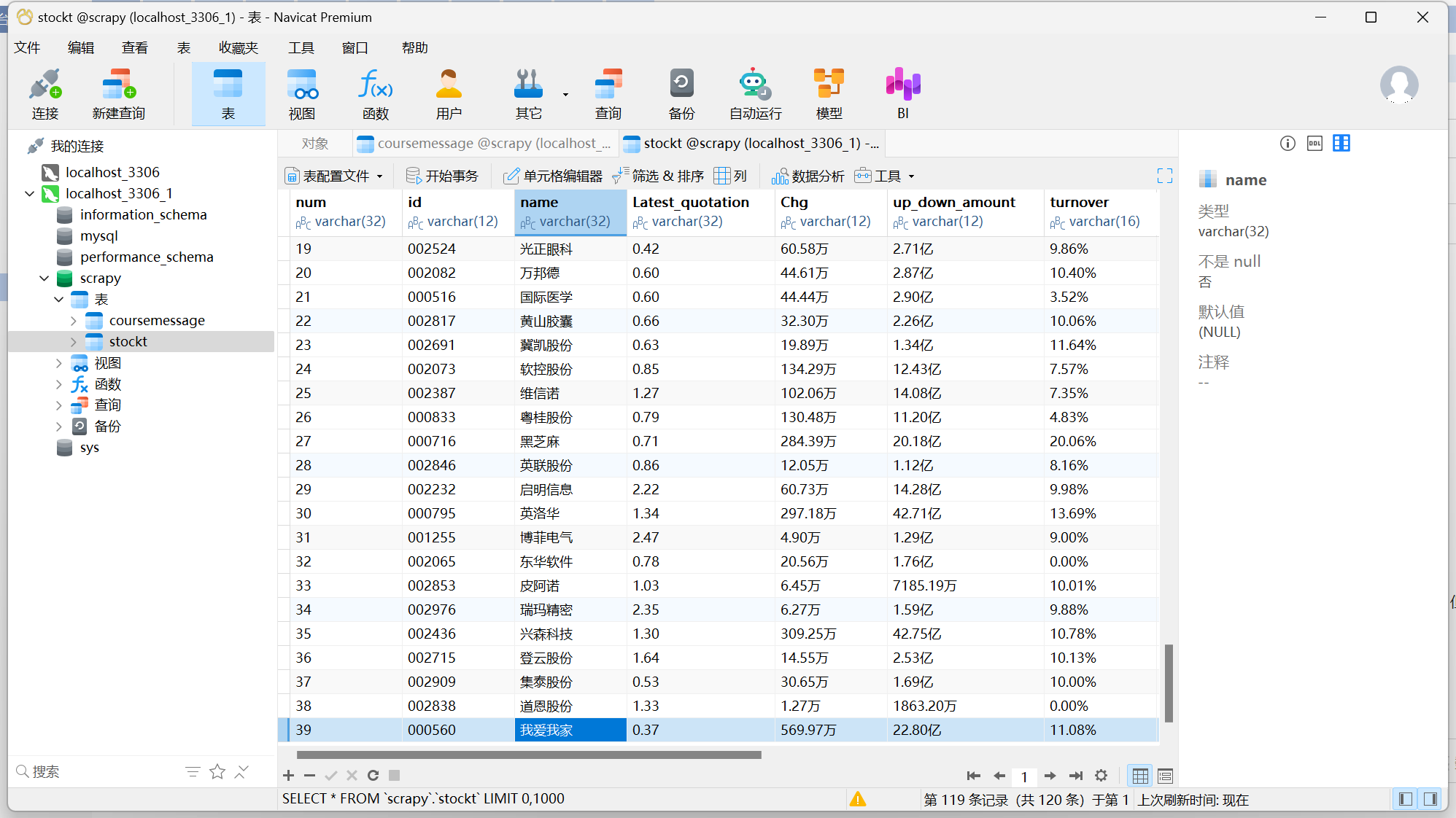

使用 Selenium 框架+ MySQL 数据库存储技术路线爬取“沪深 A 股”、“上证 A 股”、

“深证 A 股”3 个板块的股票数据信息。

1)代码

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver import ChromeService

from selenium.webdriver import ChromeOptions

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

import time

import pymysql

chrome_options = ChromeOptions()

chrome_options.add_argument('--disable-gpu')

chrome_options.binary_location = r"C:\Program Files\Google\Chrome\Application\chrome.exe"

chrome_options.add_argument('--disable-blink-features=AutomationControlled')

service = ChromeService(executable_path=r"C:\Users\ASUS\Desktop\chromedriver.exe")

driver = webdriver.Chrome(service=service,options=chrome_options)

driver.maximize_window()

# 连接scrapy数据库,创建表

try:

db = pymysql.connect(host='127.0.0.1',user='root',password='+ODKJmiQV6ye',port=3306,database='scrapy')

cursor = db.cursor()

cursor.execute('DROP TABLE IF EXISTS stockT')

sql = '''CREATE TABLE stockT(num varchar(32),id varchar(12),name varchar(32),Latest_quotation varchar(32),Chg varchar(12),up_down_amount varchar(12),

turnover varchar(16),transaction_volume varchar(16),amplitude varchar(16),highest varchar(32), lowest varchar(32),today varchar(32),yesterday varchar(32))'''

cursor.execute(sql)

except Exception as e:

print(e)

def spider(page_num):

cnt = 0

while cnt < page_num:

spiderOnePage()

driver.find_element(By.XPATH,'//a[@class="next paginate_button"]').click()

cnt +=1

time.sleep(2)

# 爬取一个页面的数据

def spiderOnePage():

time.sleep(3)

trs = driver.find_elements(By.XPATH,'//table[@id="table_wrapper-table"]//tr[@class]')

for tr in trs:

tds = tr.find_elements(By.XPATH,'.//td')

num = tds[0].text

id = tds[1].text

name = tds[2].text

Latest_quotation = tds[6].text

Chg = tds[7].text

up_down_amount = tds[8].text

turnover = tds[9].text

transaction_volume = tds[10].text

amplitude = tds[11].text

highest = tds[12].text

lowest = tds[13].text

today = tds[14].text

yesterday = tds[15].text

cursor.execute('INSERT INTO stockT VALUES ("%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s")' % (num,id,name,Latest_quotation,

Chg,up_down_amount,turnover,transaction_volume,amplitude,highest,lowest,today,yesterday))

db.commit()

# 访问东方财富网

driver.get('https://www.eastmoney.com/')

# 访问行情中心

driver.get(WebDriverWait(driver,15,0.48).until(EC.presence_of_element_located((By.XPATH,'/html/body/div[6]/div/div[2]/div[1]/div[1]/a'))).get_attribute('href'))

# 访问沪深京A股

driver.get(WebDriverWait(driver,15,0.48).until(EC.presence_of_element_located((By.ID,'menu_hs_a_board'))).get_attribute('href'))

# 爬取两页的数据

spider(2)

driver.back()

# 访问上证A股

driver.get(WebDriverWait(driver,15,0.48).until(EC.presence_of_element_located((By.ID,'menu_sh_a_board'))).get_attribute('href'))

spider(2)

driver.back()

# 访问深证A股

driver.get(WebDriverWait(driver,15,0.48).until(EC.presence_of_element_located((By.ID,'menu_sz_a_board'))).get_attribute('href'))

spider(2)

try:

cursor.close()

db.close()

except:

pass

time.sleep(3)

driver.quit()

2)心得

在这次作业中,我深入学习了 Selenium。掌握查找 HTML 元素、爬取 Ajax 数据和等待元素的方法。利用 Selenium 和 MySQL,成功爬取沪深、上证、深证 A 股股票数据。过程中虽有困难,但解决问题后,提升了技能,也感受到技术融合的强大。

作业二

要求:

▪

熟练掌握 Selenium 查找 HTML 元素、实现用户模拟登录、爬取 Ajax 网页数据、

等待 HTML 元素等内容。

▪

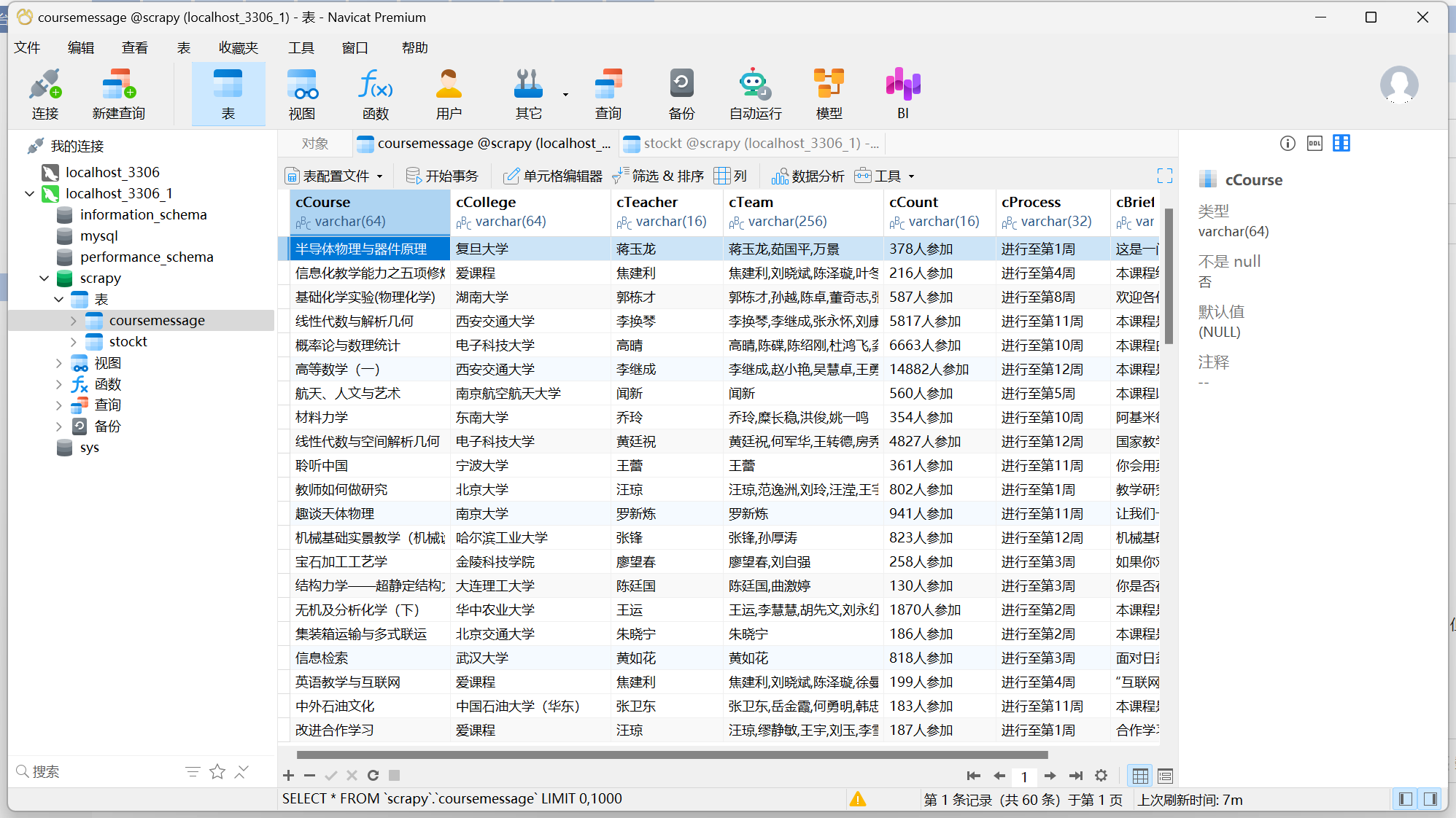

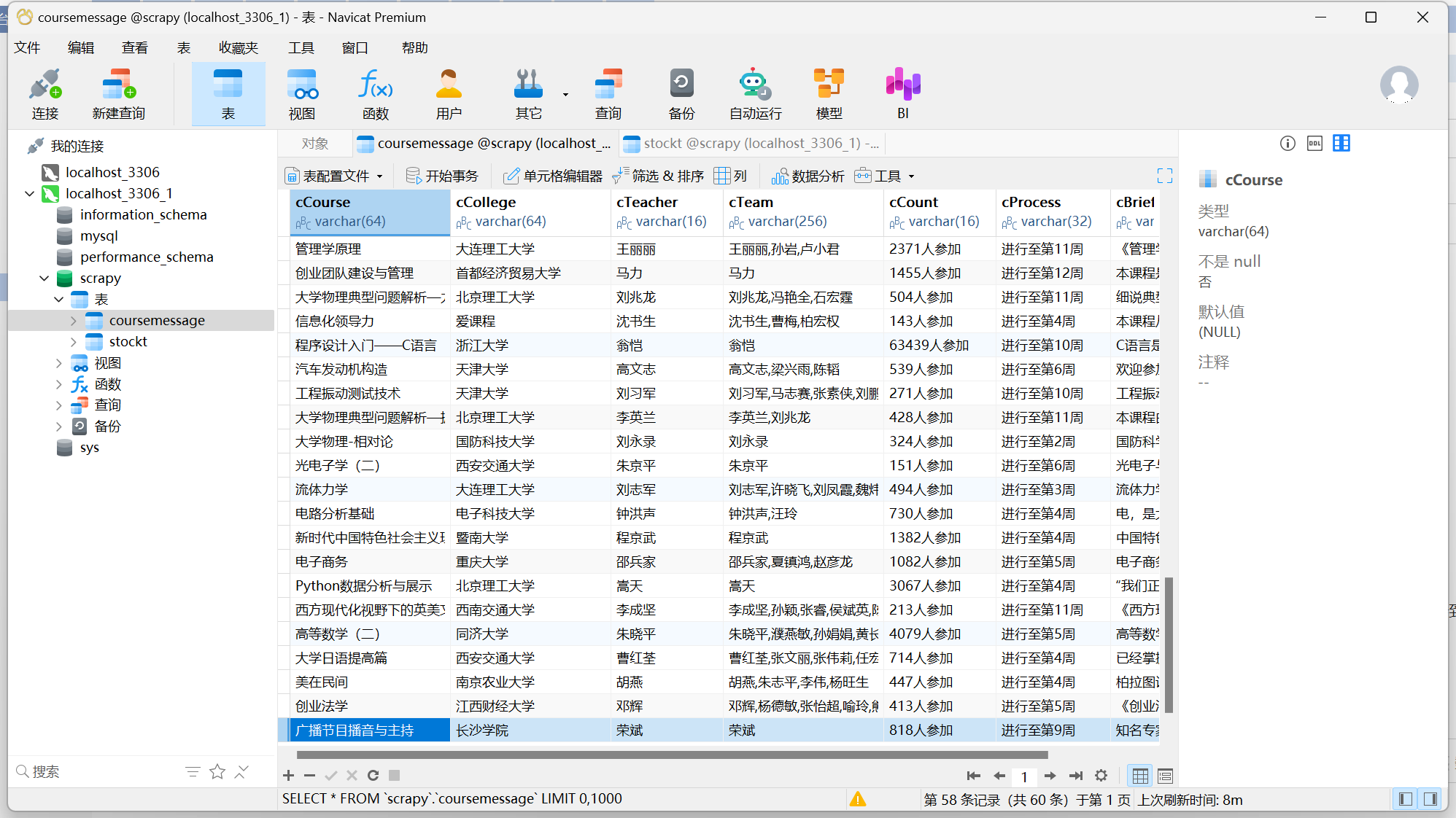

使用 Selenium 框架+MySQL 爬取中国 mooc 网课程资源信息(课程号、课程名

称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

1)代码

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver import ChromeService

from selenium.webdriver import ChromeOptions

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

import time

import pymysql

chrome_options = ChromeOptions()

chrome_options.add_argument('--disable-gpu')

chrome_options.binary_location = r"C:\Program Files\Google\Chrome\Application\chrome.exe"

chrome_options.add_argument('--disable-blink-features=AutomationControlled')

# chrome_options.add_argument('--headless') # 无头模式

service = ChromeService(executable_path=r"C:\Users\ASUS\Desktop\chromedriver.exe")

driver = webdriver.Chrome(service=service, options=chrome_options)

driver.maximize_window() # 使浏览器窗口最大化

# 连接MySql

try:

db = pymysql.connect(host='127.0.0.1', user='root', password='+ODKJmiQV6ye', port=3306, database='scrapy')

cursor = db.cursor()

cursor.execute('DROP TABLE IF EXISTS courseMessage')

sql = '''CREATE TABLE courseMessage(cCourse varchar(64),cCollege varchar(64),cTeacher varchar(16),cTeam varchar(256),cCount varchar(16),

cProcess varchar(32),cBrief varchar(2048))'''

cursor.execute(sql)

except Exception as e:

print(e)

# 爬取一个页面的数据

def spiderOnePage():

time.sleep(3) # 等待页面加载完成

courses = driver.find_elements(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[1]/div')

current_window_handle = driver.current_window_handle

for course in courses:

cCourse = course.find_element(By.XPATH, './/h3').text # 课程名

cCollege = course.find_element(By.XPATH, './/p[@class="_2lZi3"]').text # 大学名称

cTeacher = course.find_element(By.XPATH, './/div[@class="_1Zkj9"]').text # 主讲老师

cCount = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/span').text # 参与该课程的人数

cProcess = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/div').text # 课程进展

course.click() # 点击进入课程详情页,在新标签页中打开

Handles = driver.window_handles # 获取当前浏览器的所有页面的句柄

driver.switch_to.window(Handles[1]) # 跳转到新标签页

time.sleep(3) # 等待页面加载完成

# 爬取课程详情数据

# cBrief = WebDriverWait(driver,10,0.48).until(EC.presence_of_element_located((By.ID,'j-rectxt2'))).text

cBrief = driver.find_element(By.XPATH, '//*[@id="j-rectxt2"]').text

if len(cBrief) == 0:

cBriefs = driver.find_elements(By.XPATH, '//*[@id="content-section"]/div[4]/div//*')

cBrief = ""

for c in cBriefs:

cBrief += c.text

# 将文本中的引号进行转义处理,防止插入表格时报错

cBrief = cBrief.replace('"', r'\"').replace("'", r"\'")

cBrief = cBrief.strip()

# 爬取老师团队信息

nameList = []

cTeachers = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_con_item"]')

for Teacher in cTeachers:

name = Teacher.find_element(By.XPATH, './/h3[@class="f-fc3"]').text.strip()

nameList.append(name)

# 如果有下一页的标签,就点击它,然后继续爬取

nextButton = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_next f-pa"]')

while len(nextButton)!= 0:

nextButton[0].click()

time.sleep(3)

cTeachers = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_con_item"]')

for Teacher in cTeachers:

name = Teacher.find_element(By.XPATH, './/h3[@class="f-fc3"]').text.strip()

nameList.append(name)

nextButton = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_next f-pa"]')

cTeam = ','.join(nameList)

driver.close() # 关闭新标签页

driver.switch_to.window(current_window_handle) # 跳转回原始页面

try:

cursor.execute('INSERT INTO courseMessage VALUES ("%s","%s","%s","%s","%s","%s","%s")' % (

cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief))

db.commit()

except Exception as e:

print(e)

# 访问中国大学慕课

driver.get('https://www.icourse163.org/')

# 访问国家精品课程

driver.get(WebDriverWait(driver, 10, 0.48).until(

EC.presence_of_element_located((By.XPATH, '//*[@id="app"]/div/div/div[1]/div[1]/div[1]/span[1]/a'))).get_attribute(

'href'))

spiderOnePage() # 爬取第一页的内容

count = 1

'''翻页操作'''

# 下一页的按钮

next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')

# 如果还有下一页,那么该标签的class属性为_3YiUU

while next_page.get_attribute('class') == '_3YiUU ':

if count == 3:

break

count += 1

next_page.click() # 点击按钮实现翻页

spiderOnePage() # 爬取一页的内容

next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')

try:

cursor.close()

db.close()

except:

pass

time.sleep(3)

driver.quit()

2)心得

熟练掌握 Selenium 各项技能,从查找元素、模拟登录,到应对 Ajax 数据。利用 Selenium 与 MySQL,成功爬取中国 mooc 网课程信息。过程中克服不少难题,不仅提升了技术水平,更体会到网络数据挖掘的价值。

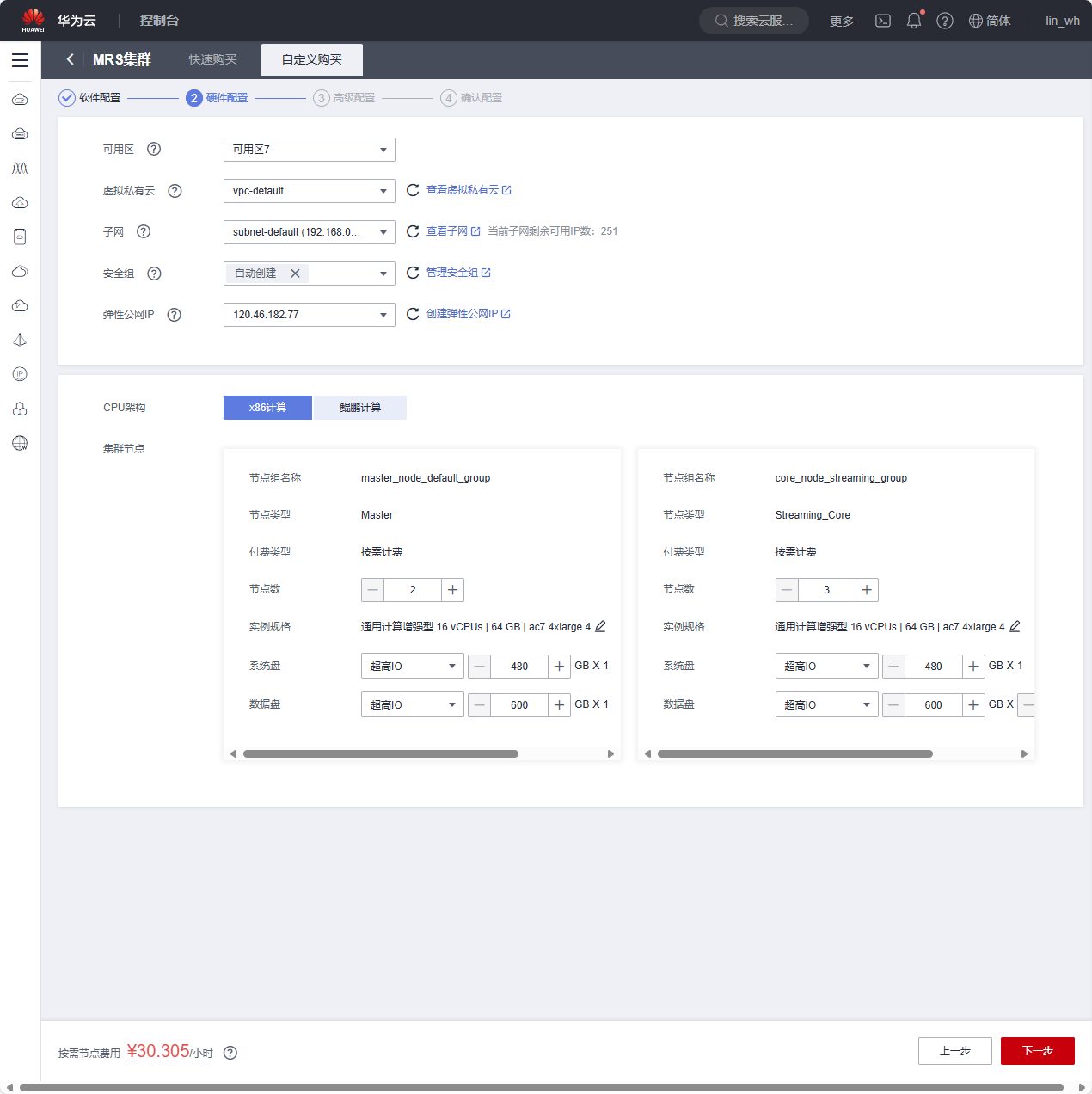

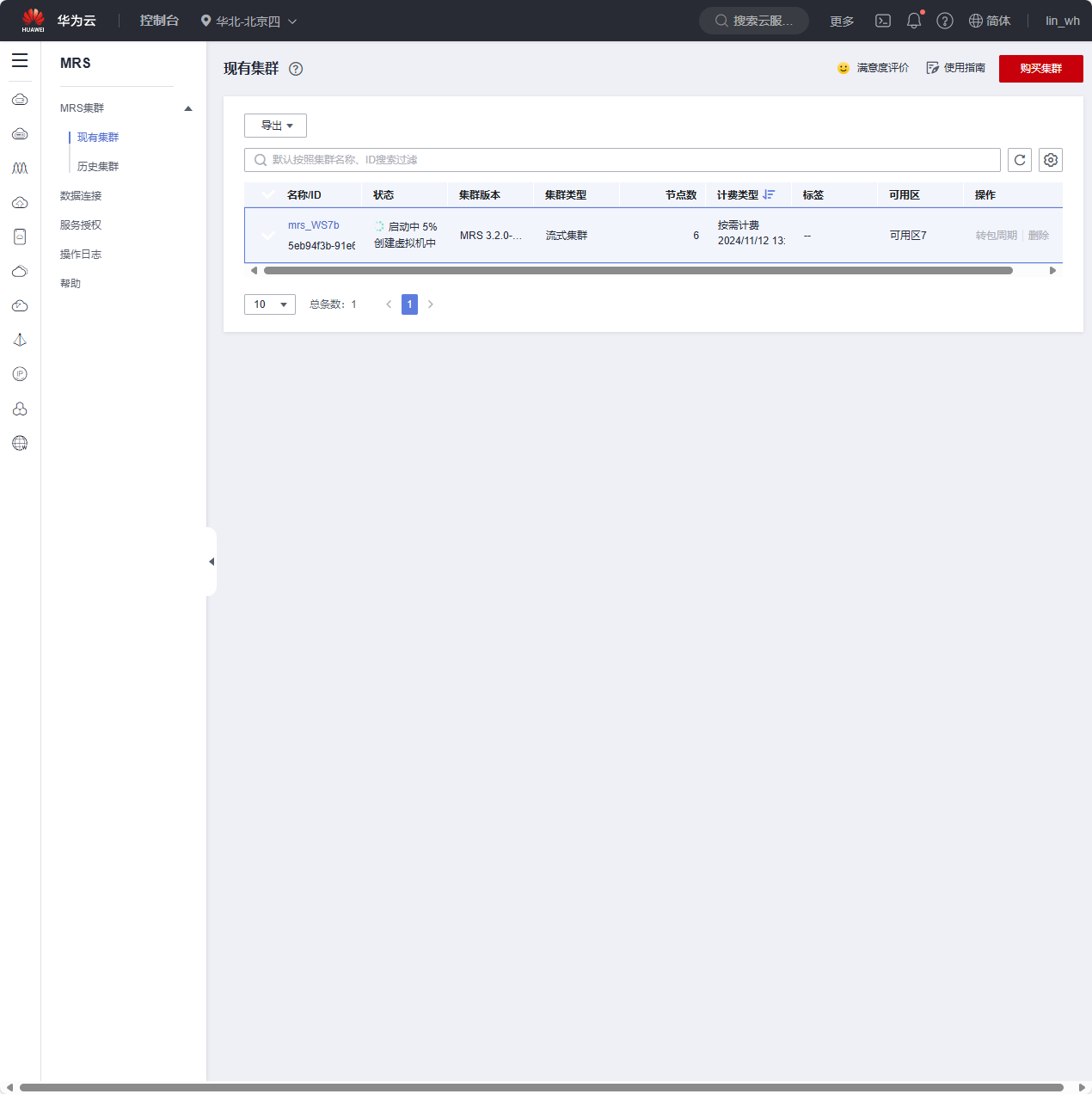

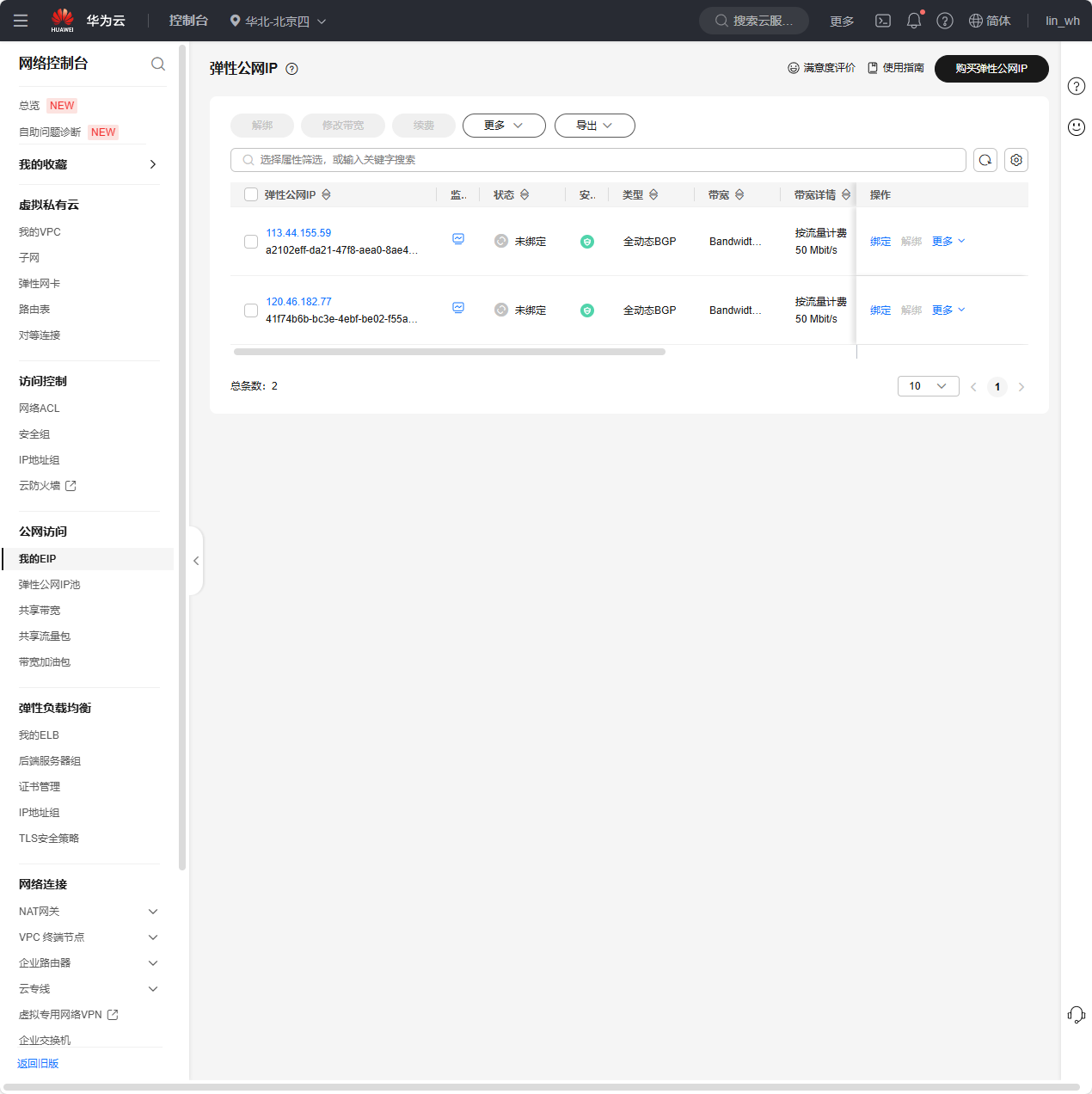

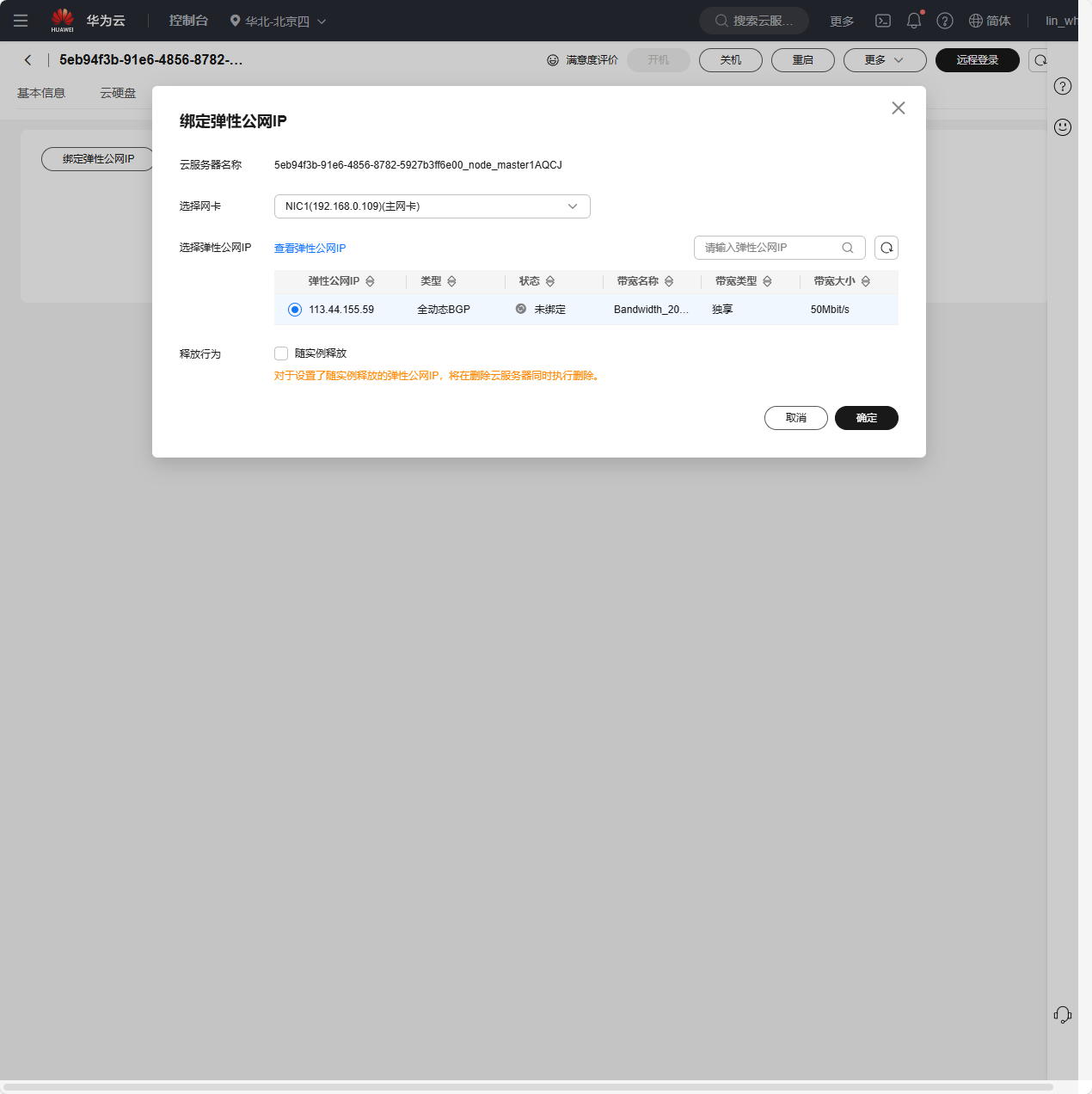

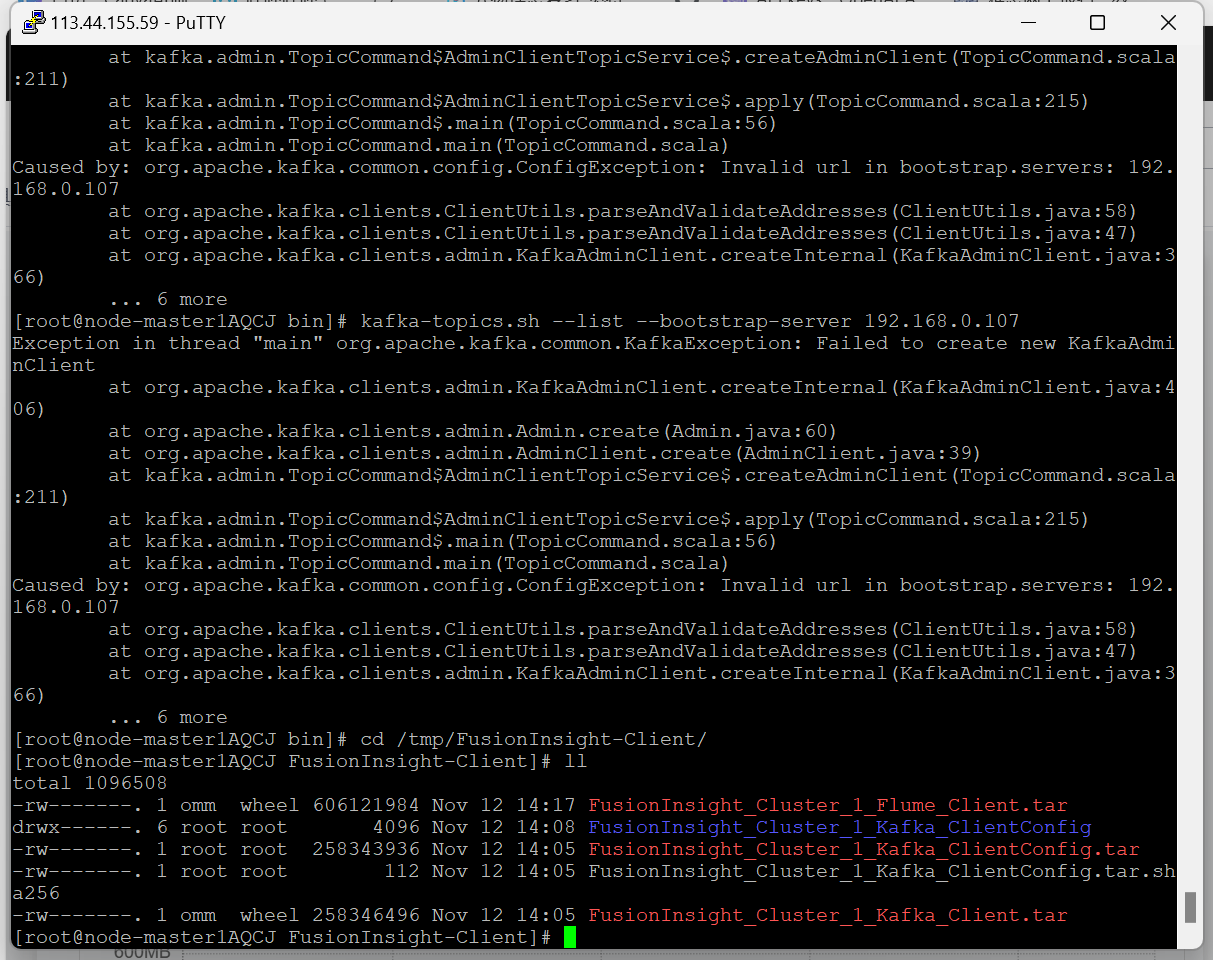

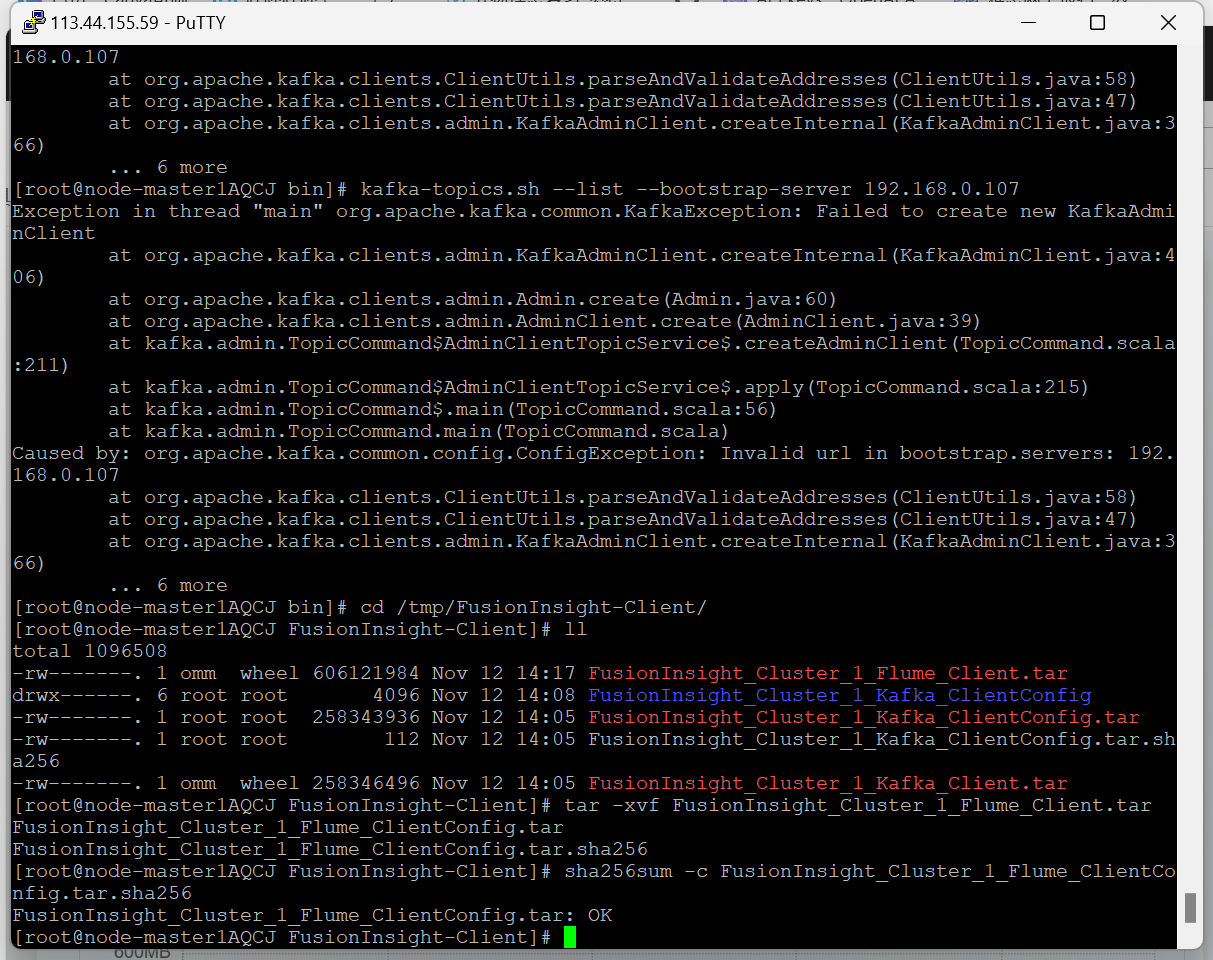

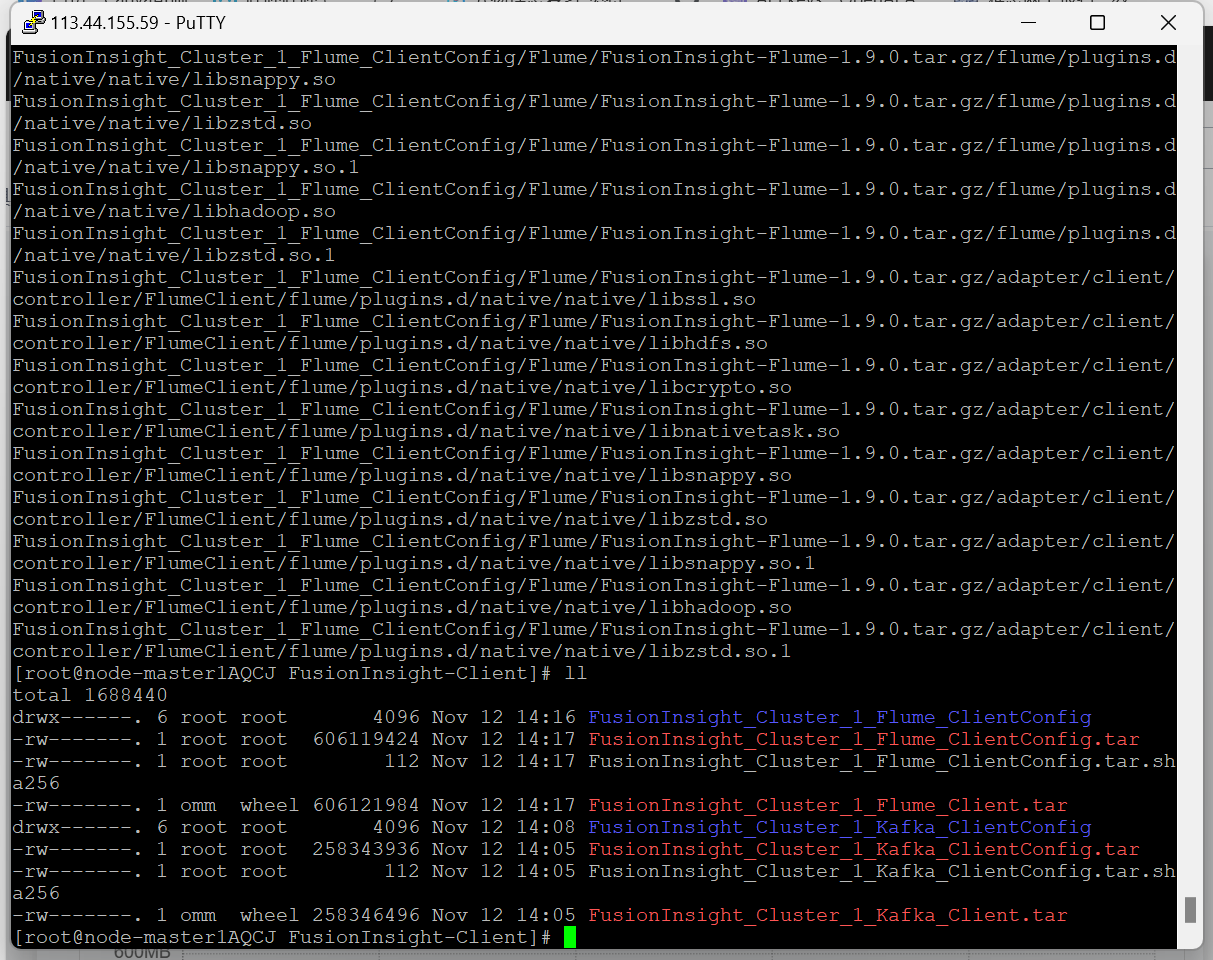

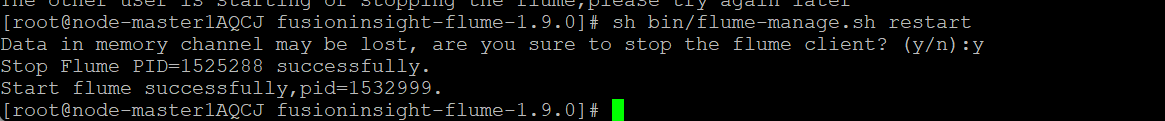

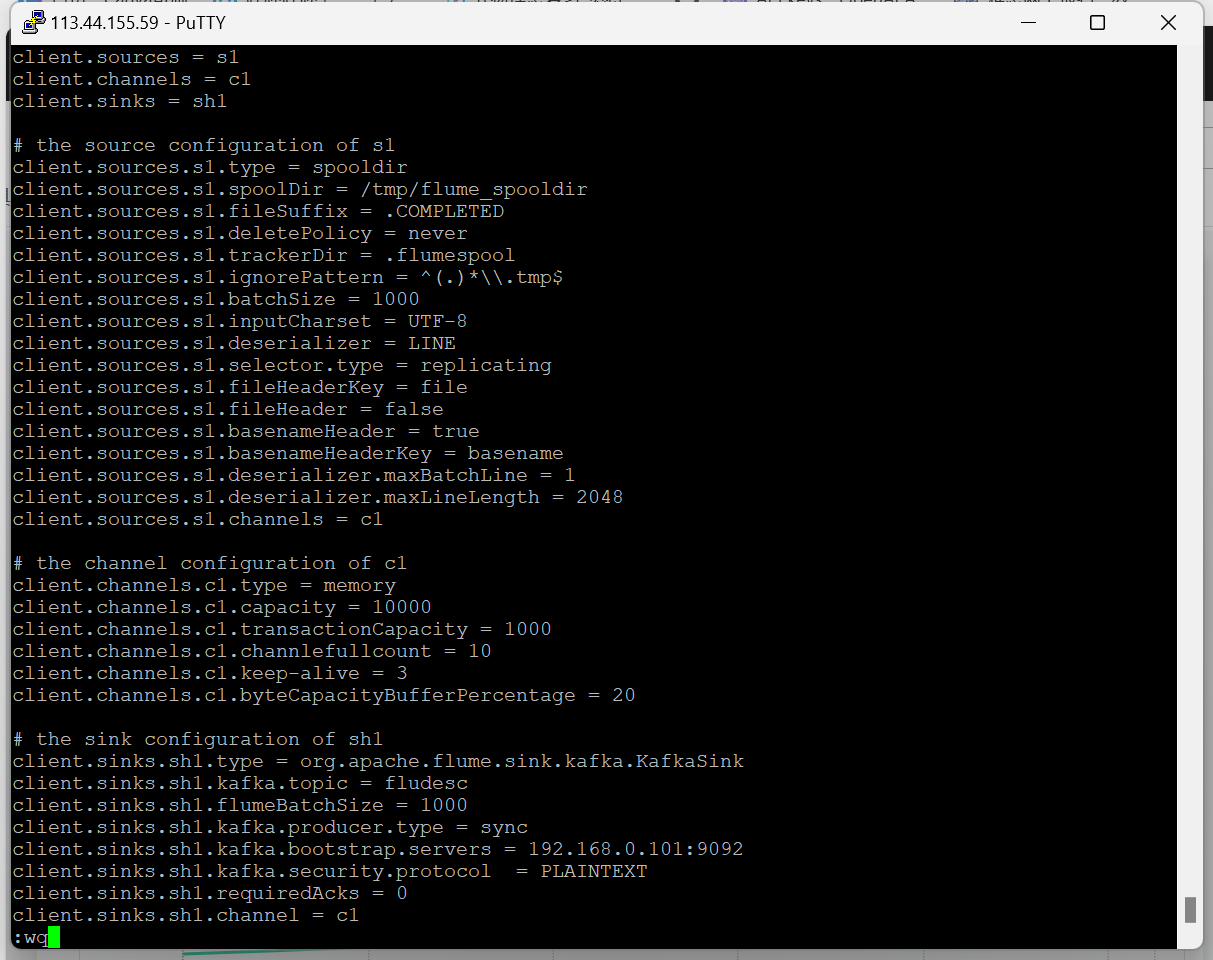

作业三

要求:

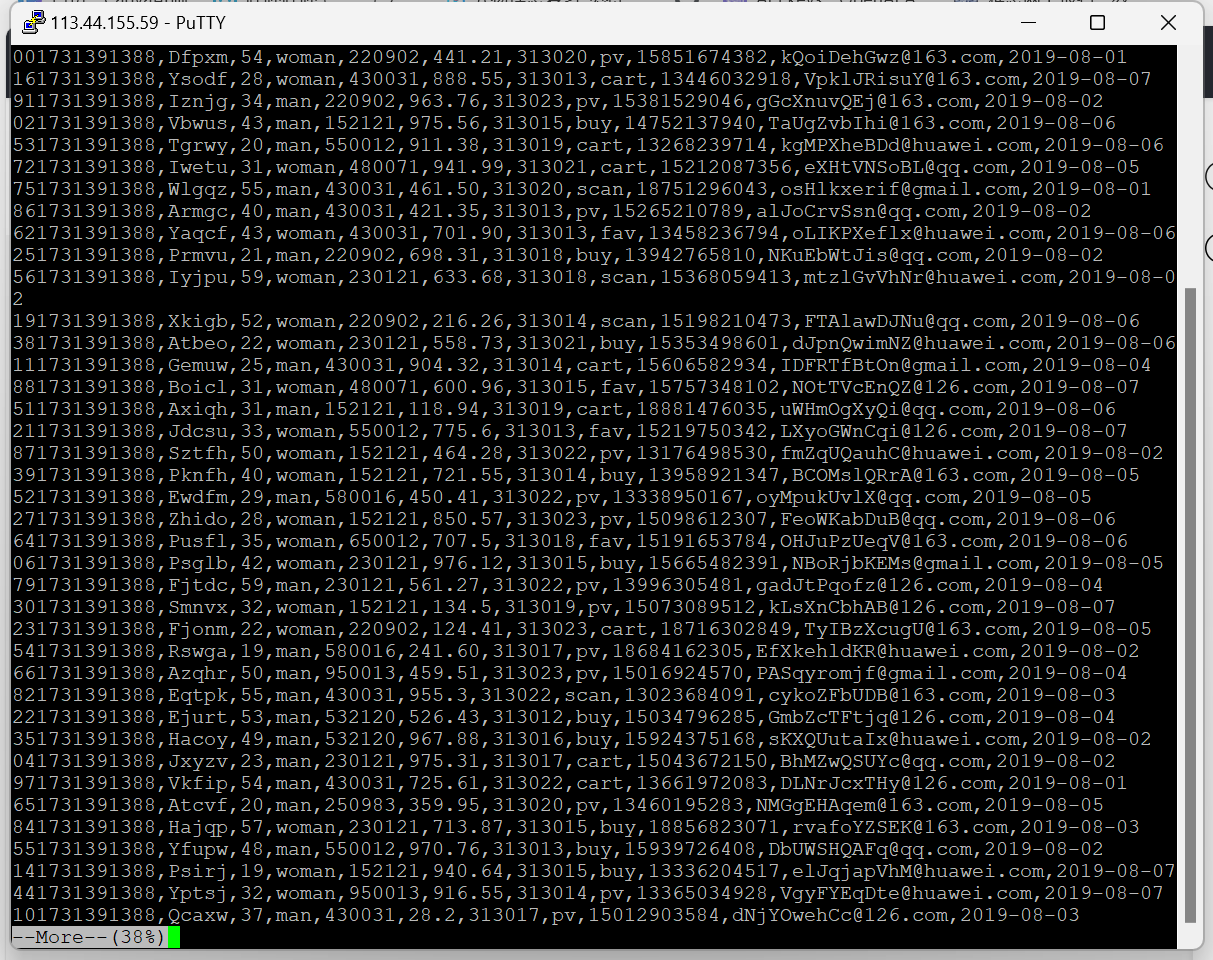

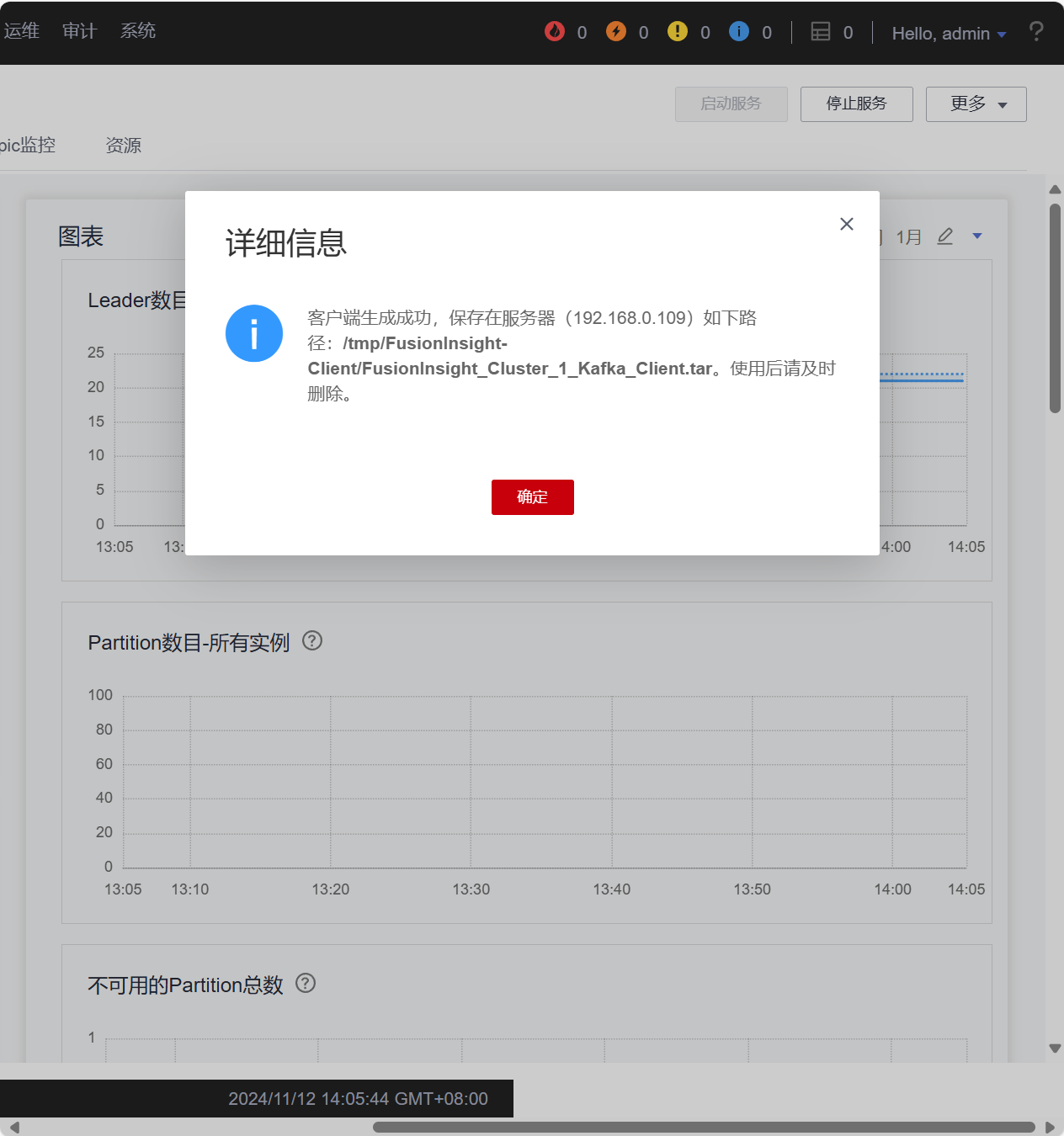

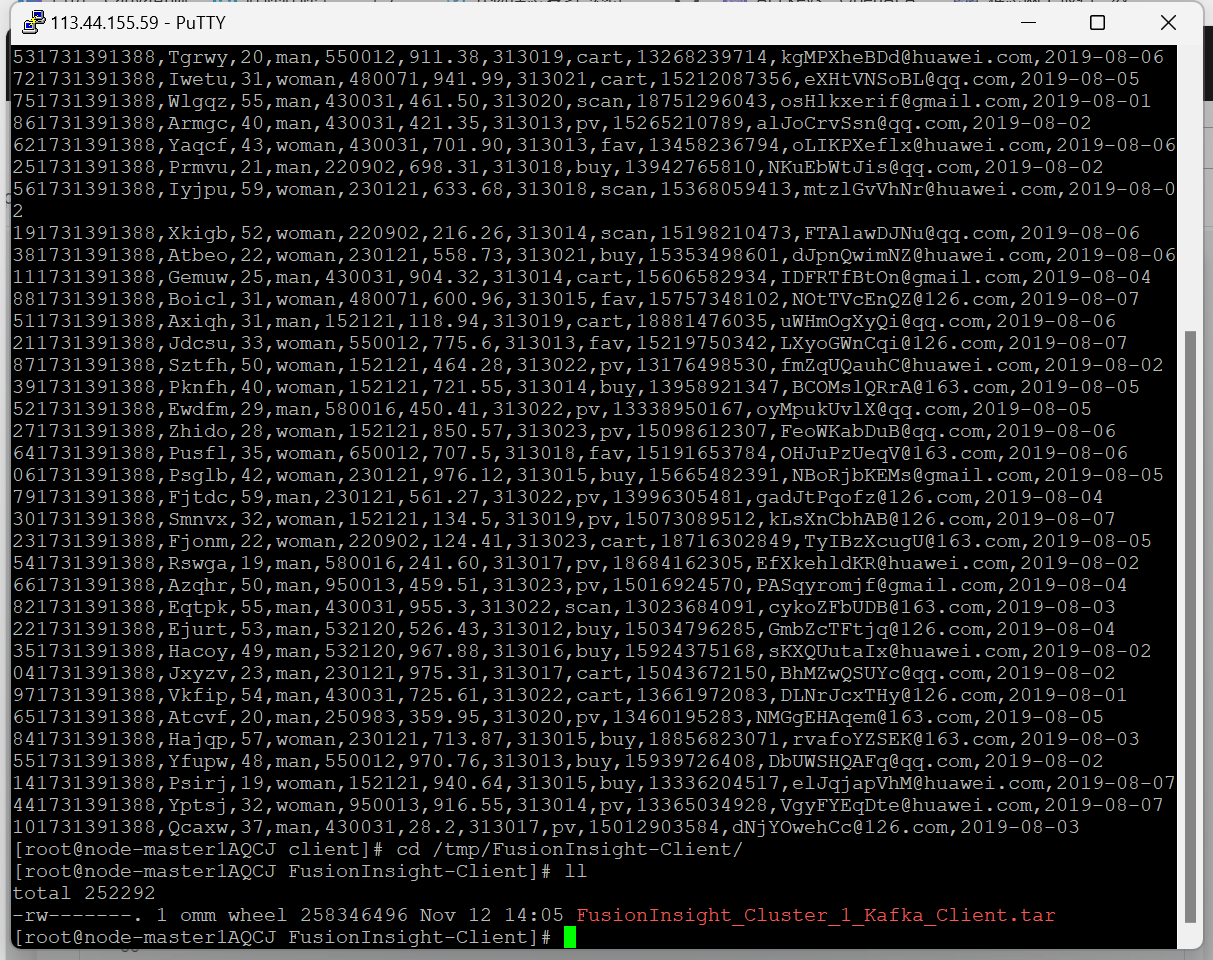

• 掌握大数据相关服务,熟悉 Xshell 的使用

• 完成文档 华为云_大数据实时分析处理实验手册-Flume 日志采集实验(部

分)v2.docx 中的任务,即为下面 5 个任务,具体操作见文档

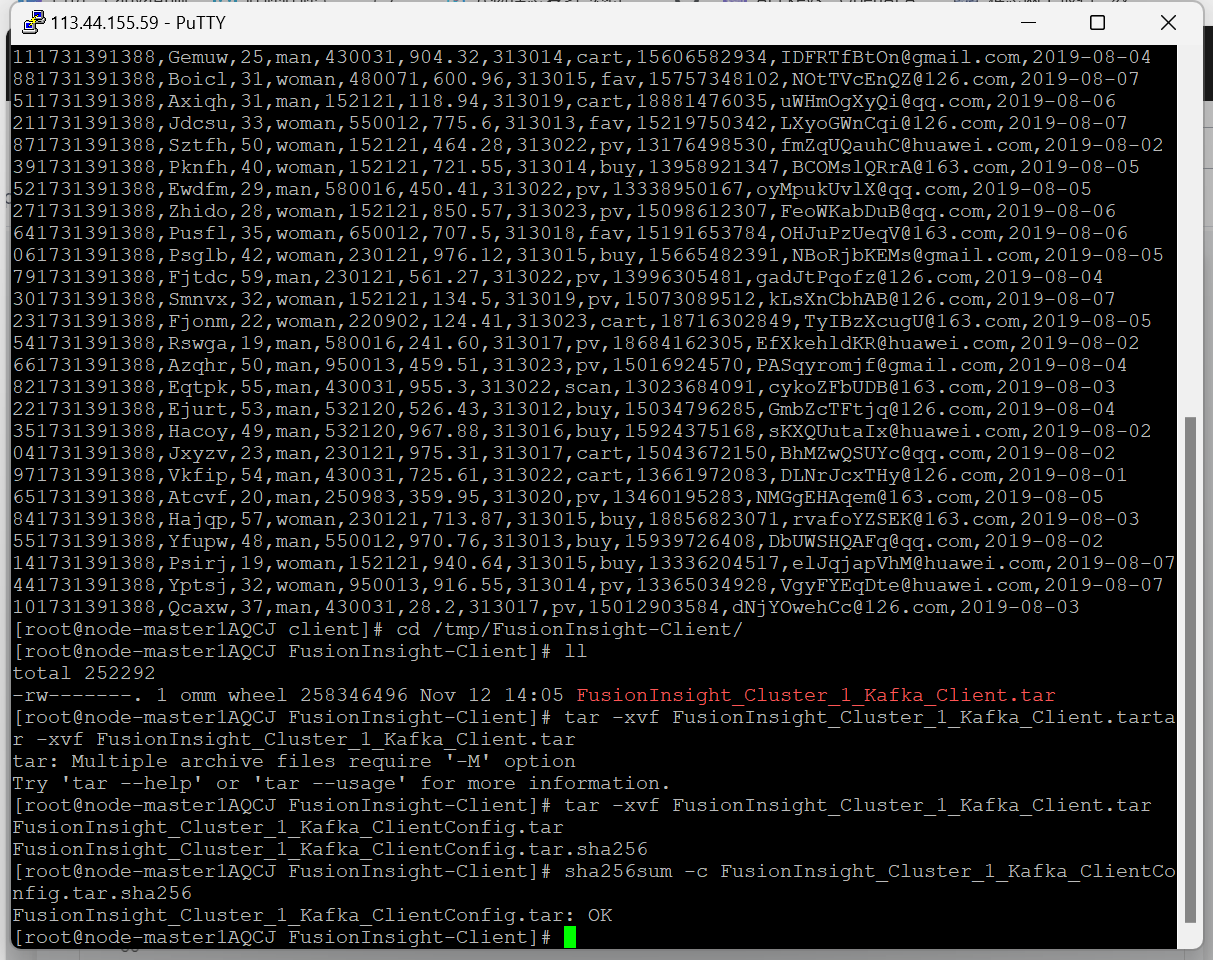

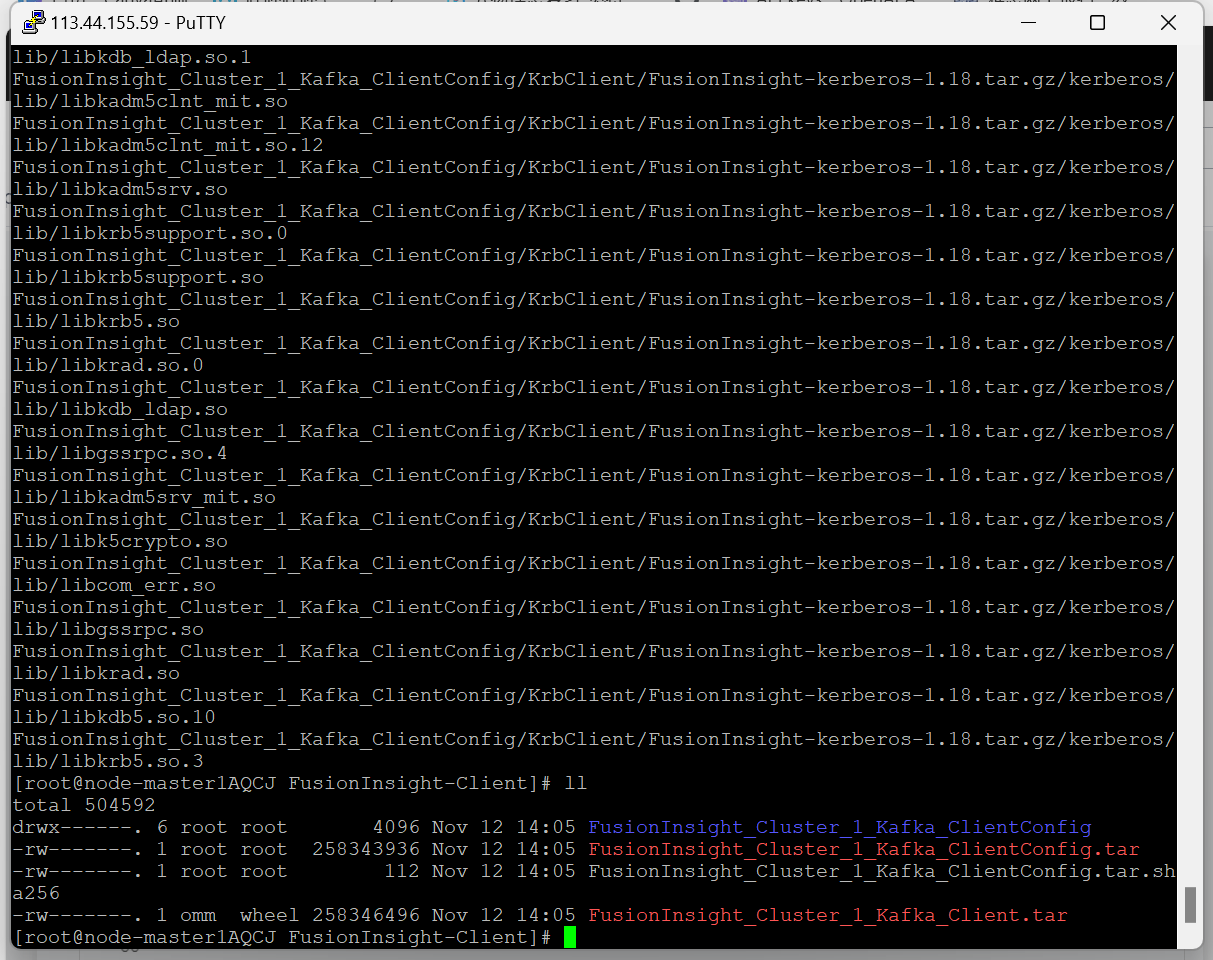

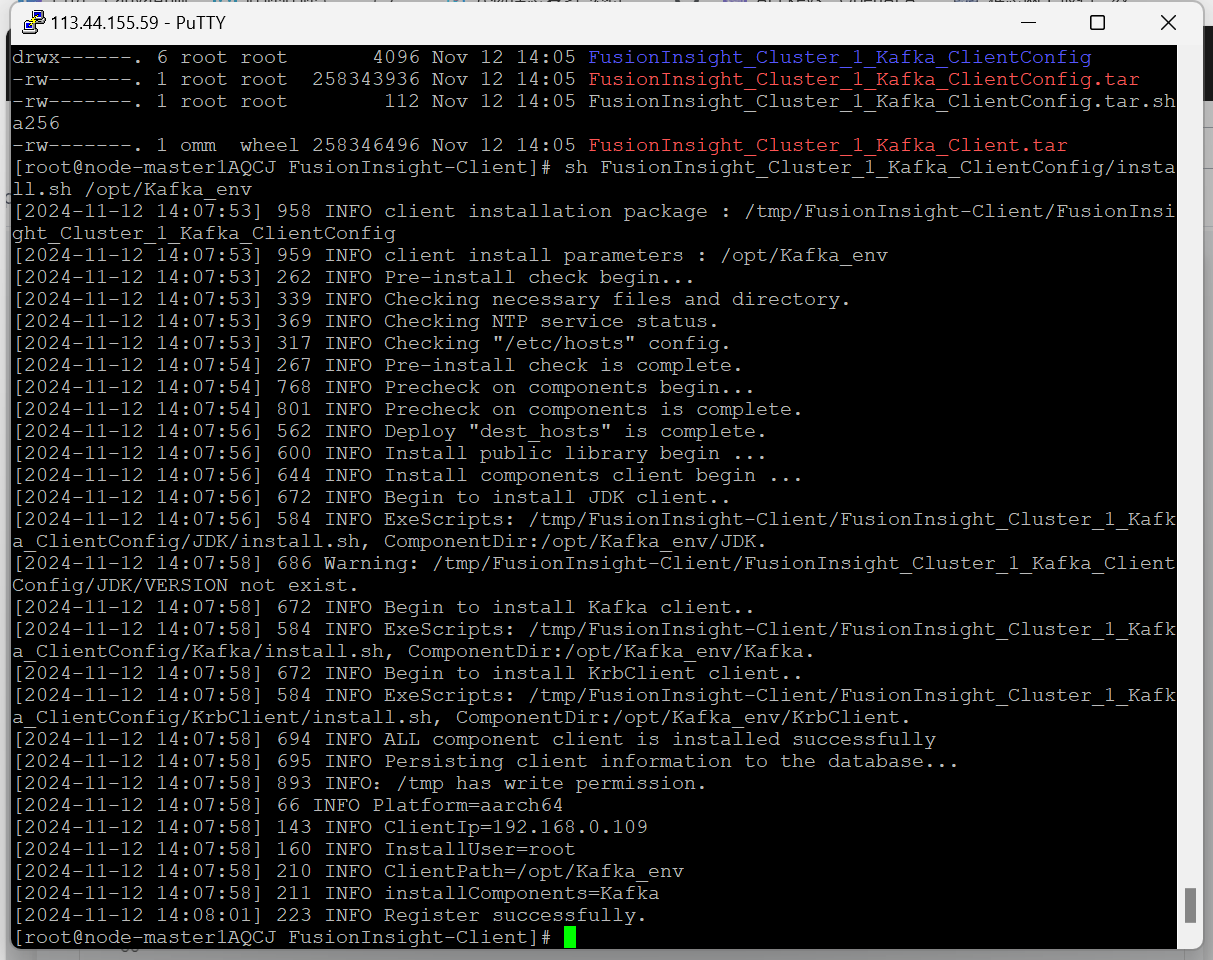

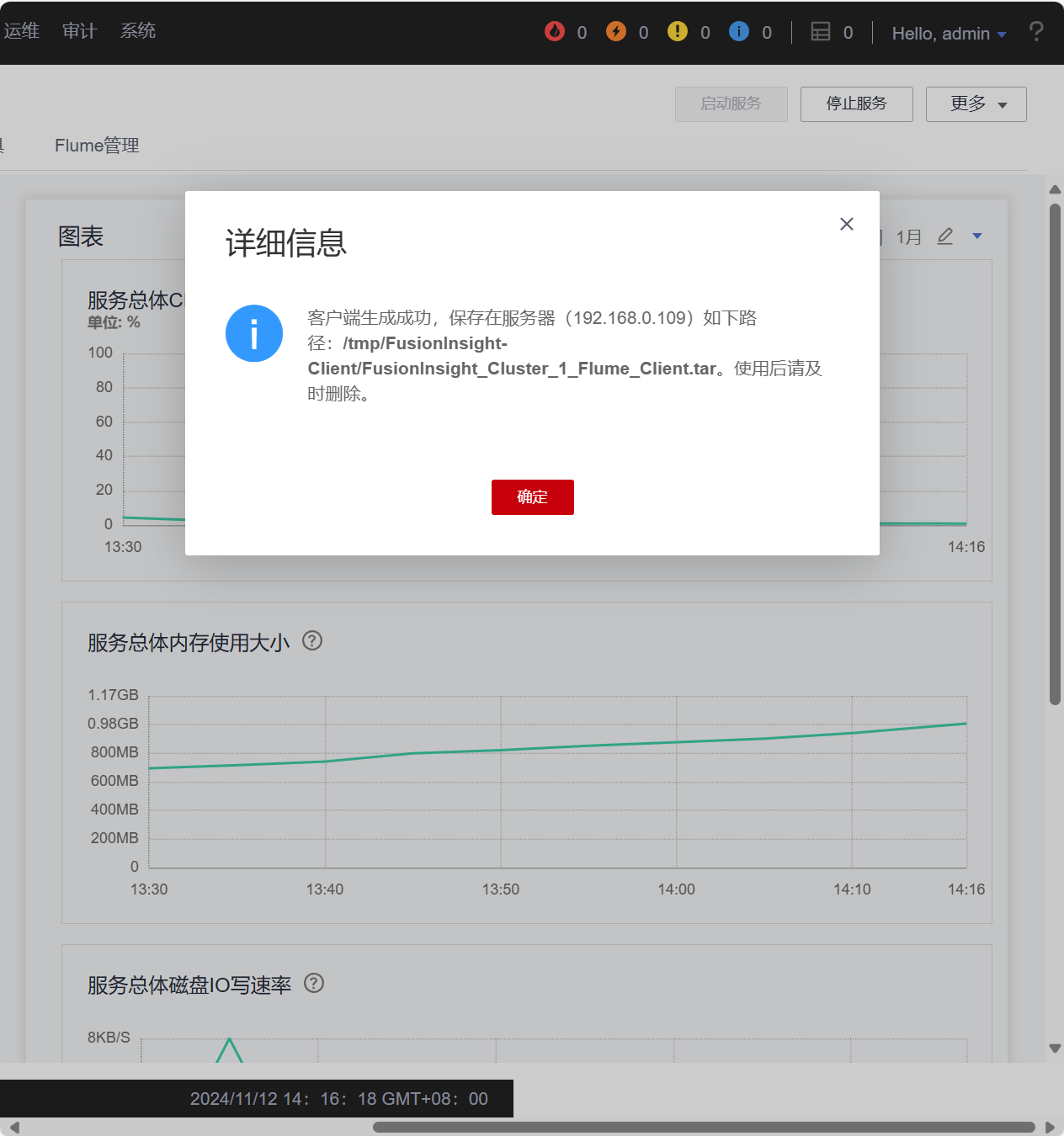

1)截图

2)心得

通过这次实验,我掌握了大数据相关服务,对 Xshell 的使用更加熟悉。在完成华为云 Flume 日志采集实验部分任务时,深入理解了流程,提升了实践能力,也感受到大数据处理的魅力与挑战。