作业1

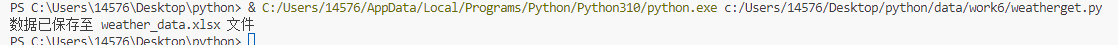

1在中国气象网(http://www.weather.com.cn)给定城市集的 7

日天气预报,并保存在数据库。

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import pandas as pd

url = "https://www.weather.com.cn/weather/101230101.shtml"

try:

headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

req = urllib.request.Request(url, headers=headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

date_list = []

weather_list = []

temp_list = []

for li in lis:

try:

date = li.select('h1')[0].text

weather = li.select('p[class="wea"]')[0].text

temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text

date_list.append(date)

weather_list.append(weather)

temp_list.append(temp)

except Exception as err:

print(err)

df = pd.DataFrame({

"日期": date_list,

"天气": weather_list,

"温度": temp_list

})

df.to_excel("weather_data.xlsx", index=False)

print("数据已保存至 weather_data.xlsx 文件")

except Exception as err:

print(err)

2心得体会

初步掌握用Beautifulsoup 学会分析网页html结构,进一步了解re库

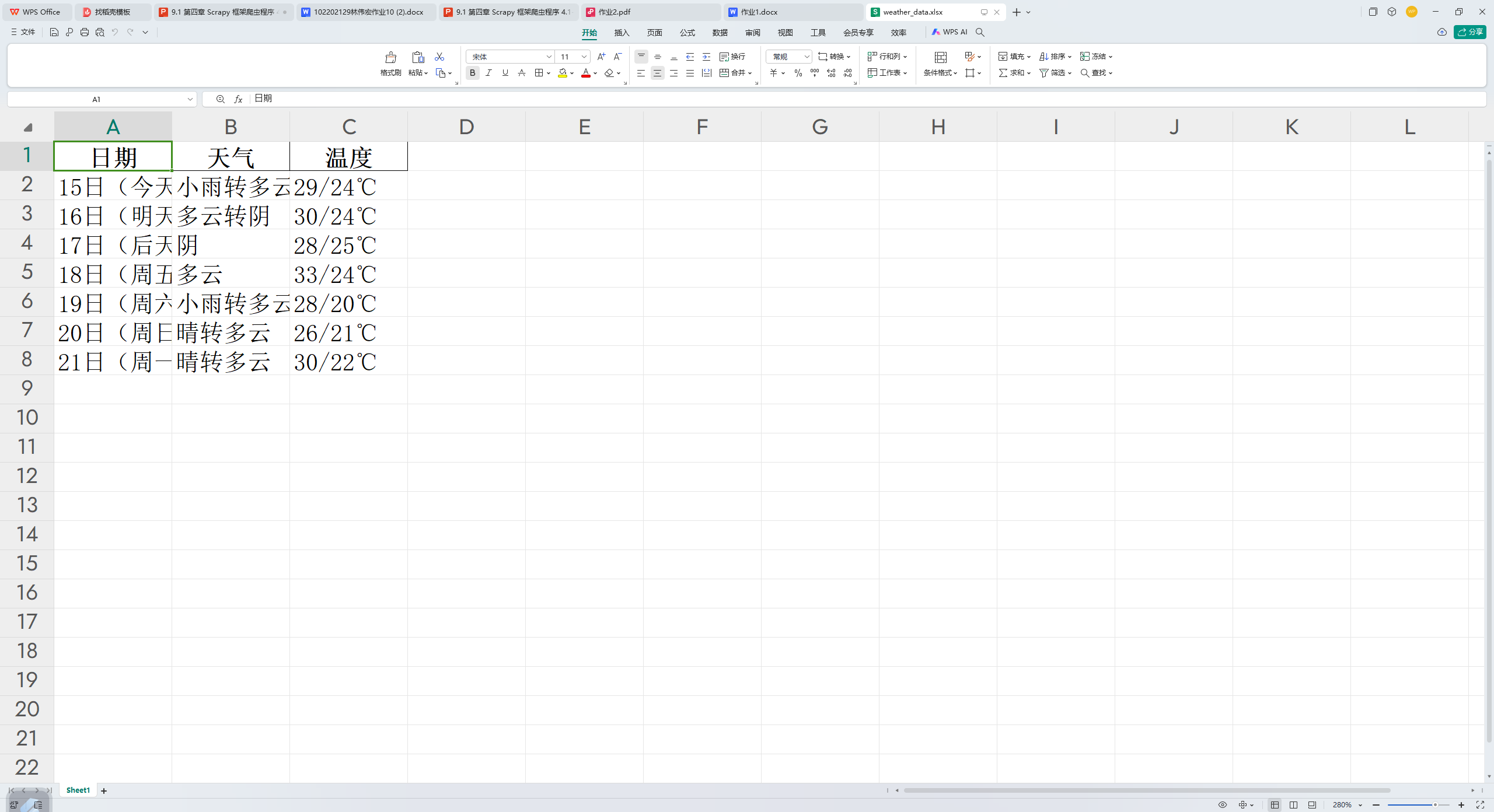

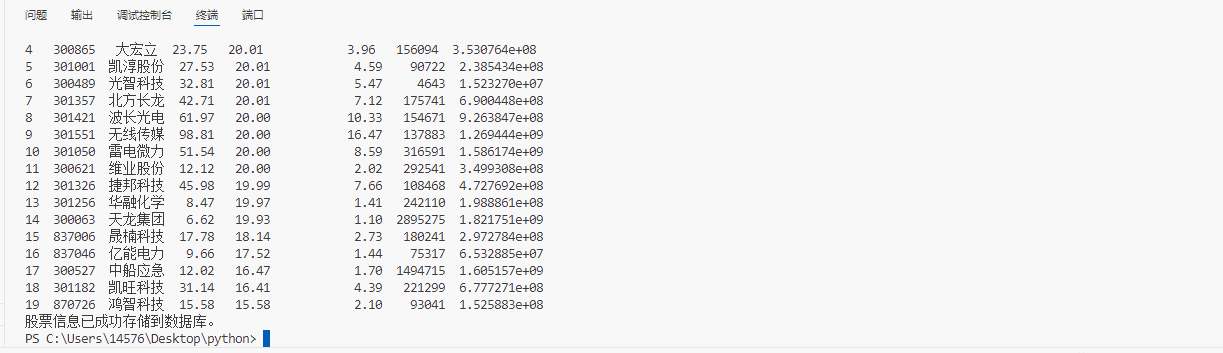

作业2

1用 requests 和 BeautifulSoup 库方法定向爬取股票相关信息,并

存储在数据库中。

import requests

import pandas as pd

import sqlite3

import json

def getHtml(page):

url = f"https://78.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112408723133727080641_1728978540544&pn={page}&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&dect=1&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1728978540545"

try:

r = requests.get(url)

r.raise_for_status()

json_data = r.text[r.text.find("{"):r.text.rfind("}")+1]

data = json.loads(json_data)

return data

except requests.RequestException as e:

print(f"Error fetching data: {e}")

return None

def getOnePageStock(page):

data = getHtml(page)

if not data or 'data' not in data or 'diff' not in data['data']:

return []

return data['data']['diff']

def saveToDatabase(stock_list):

conn = sqlite3.connect('stocks.db')

c = conn.cursor()

try:

c.execute('''CREATE TABLE IF NOT EXISTS stocks

(code TEXT PRIMARY KEY, name TEXT, price REAL, change REAL, percent_change REAL, volume INTEGER, amount REAL)''')

for stock in stock_list:

c.execute("INSERT OR IGNORE INTO stocks VALUES (?, ?, ?, ?, ?, ?, ?)",

(stock.get('f12'), stock.get('f14'), stock.get('f2'), stock.get('f3'), stock.get('f4'), stock.get('f5'), stock.get('f6')))

conn.commit()

except sqlite3.Error as e:

print(f"Database error: {e}")

finally:

c.execute("SELECT * FROM stocks")

rows = c.fetchall()

df = pd.DataFrame(rows, columns=['Code', 'Name', 'Price', 'Change', 'Percent Change', 'Volume', 'Amount'])

print(df)

conn.close()

def main():

page = 1

stock_list = getOnePageStock(page)

if stock_list:

print("爬取到的股票数据:")

for stock in stock_list:

print(stock)

saveToDatabase(stock_list)

print("存储到数据库。")

else:

print("未能获取")

if __name__ == "__main__":

main()

2心得体会

学会如何抓包分析网页 爬取相应数据 了解json

作业3

1爬取中国大学 2021 主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器 F12 调试分析的过程录制 Gif 加入至博客中。

import requests

import re

if __name__=="__main__":

url = 'https://www.shanghairanking.cn/_nuxt/static/1728872418/rankings/bcur/2024/payload.js'

headers={

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/69.0.3947.100 Safari/537.36'

}

response=requests.get(url=url,headers=headers).text

x='univNameCn:"(.*?)"'

y='score:(.*?),'

name=re.findall(x,response,re.S)

score=re.findall(y,response,re.S)

print("排名 学校 总分")

fp = open("./大学排名.txt", "w", encoding='utf-8')

fp.write("排名 学校 总分\n")

for i in range(0,len(name)):

print(str(i+1)+' '+name[i]+' '+score[i])

fp.write(str(i+1)+' '+name[i]+' '+score[i]+'\n')

fp.close()

print("任务完成")

2心得体会

数据进一步处理 综合以上内容的做法