Apache Kafka (7) - Kafka ElasticSearch Consumer

Kafka ElasticSearch Consumer

In this part we write a Kafka Consumer example that consumes data from Kafka and sends it to ElasticSearch.

1. Building the basic ElasticSearch code

We use the following code to build an ElasticSearch client and index one JSON document into the `kafkademo` index:

```java
import org.apache.http.HttpHost;
import org.apache.http.impl.nio.client.HttpAsyncClientBuilder;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestClientBuilder;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.xcontent.XContentType;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.io.IOException;

public class ElasticSearchConsumer {

    public static void main(String[] args) throws IOException {
        Logger logger = LoggerFactory.getLogger(ElasticSearchConsumer.class.getName());
        RestHighLevelClient client = createClient();

        String jsonString = "{\"foo\": \"bar\"}";

        // index one JSON document into the "kafkademo" index
        IndexRequest indexRequest = new IndexRequest("kafkademo")
                .source(jsonString, XContentType.JSON);
        IndexResponse indexResponse = client.index(indexRequest, RequestOptions.DEFAULT);

        // the _id ElasticSearch assigned to the new document
        String id = indexResponse.getId();
        logger.info(id);

        // close the client
        client.close();
    }

    public static RestHighLevelClient createClient() {
        String hostname = "xxxxx";

        RestClientBuilder builder = RestClient.builder(
                new HttpHost(hostname, 443, "https"))
                .setHttpClientConfigCallback(new RestClientBuilder.HttpClientConfigCallback() {
                    @Override
                    public HttpAsyncClientBuilder customizeHttpClient(HttpAsyncClientBuilder httpAsyncClientBuilder) {
                        // hook for HTTP-level settings (e.g. credentials); returned unchanged here
                        return httpAsyncClientBuilder;
                    }
                });

        return new RestHighLevelClient(builder);
    }
}
```

On the ES side, check the index list and the new index's details:

```
> curl https://xxx/_cat/indices?v
health status index     uuid                   pri rep docs.count docs.deleted store.size pri.store.size
green  open   .kibana_1 tQuukokDTbWg9OyQI8Bh4A   1   1          0            0       566b           283b
green  open   .kibana_2 025DtfBLR3CUexrUkX9x9Q   1   1          0            0       566b           283b
green  open   kafkademo elXjncvwQPam7dqMd5gedg   5   1          1            0      9.3kb          4.6kb
green  open   .kibana   ZvzR21YqSOi-8nbjffSuTA   5   1          1            0     10.4kb          5.2kb
```

```
> curl https://xxx/kafkademo/
{
  "kafkademo": {
    "aliases": {},
    "mappings": {
      "properties": {
        "foo": {
          "type": "text",
          "fields": {
            "keyword": { "type": "keyword", "ignore_above": 256 }
          }
        }
      }
    },
    "settings": {
      "index": {
        "creation_date": "1566985949656",
        "number_of_shards": "5",
        "number_of_replicas": "1",
        "uuid": "elXjncvwQPam7dqMd5gedg",
        "version": { "created": "7010199" },
        "provided_name": "kafkademo"
      }
    }
  }
}
```
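You may want to run the `_cat/indices?v` check from code instead of reading the table by eye. With `?v`, the first line is a header naming each column, and cells are separated by whitespace. The following is a minimal JDK-only sketch that looks up `docs.count` for one index. The class name `CatIndices` is hypothetical. The sketch assumes every cell in a row is non-empty, because a blank cell would shift the columns.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: parses the plain-text table returned by GET _cat/indices?v.
// Assumes whitespace-separated, non-empty cells and a header row (added by ?v).
final class CatIndices {

    // Returns docs.count for the given index, or -1 if the index is not listed.
    static long docsCount(String catOutput, String index) {
        // \R+ splits on one or more line breaks, so blank lines are skipped
        String[] lines = catOutput.strip().split("\\R+");
        List<String> header = Arrays.asList(lines[0].trim().split("\\s+"));
        int indexCol = header.indexOf("index");
        int countCol = header.indexOf("docs.count");
        if (indexCol < 0 || countCol < 0) {
            throw new IllegalArgumentException("missing index or docs.count column");
        }
        for (int i = 1; i < lines.length; i++) {
            String[] cols = lines[i].trim().split("\\s+");
            if (cols.length > Math.max(indexCol, countCol) && cols[indexCol].equals(index)) {
                return Long.parseLong(cols[countCol]);
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
            "health status index uuid pri rep docs.count docs.deleted store.size pri.store.size",
            "green open .kibana_1 tQuukokDTbWg9OyQI8Bh4A 1 1 0 0 566b 283b",
            "green open kafkademo elXjncvwQPam7dqMd5gedg 5 1 1 0 9.3kb 4.6kb");
        System.out.println(docsCount(sample, "kafkademo"));
    }
}
```

For the sample rows taken from the output above, `docsCount(sample, "kafkademo")` returns 1, matching the single `{"foo": "bar"}` document indexed in step 1.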

2. Producing messages to Kafka

To simulate messages flowing into Kafka, we use the open-source json-data-generator. Its GitHub repository is:

https://github.com/everwatchsolutions/json-data-generator

This tool makes it easy to produce random JSON data to Kafka.

After downloading the tool, configure the Kafka broker list address. Then start producing messages to Kafka:

```
> java -jar json-data-generator-1.4.0.jar jackieChanSimConfig.json
```
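The events json-data-generator emits are defined by its config file (`jackieChanSimConfig.json`), whose format is not covered here. If you only need some JSON test input, a minimal JDK-only sketch like the one below produces comparable single-line JSON strings. The class name `RandomJsonEvents` and the fields `timestamp`, `user`, `action` and `value` are hypothetical and are not what the tool emits. Sending these strings to Kafka would still require a `KafkaProducer`, which is not shown.

```java
import java.util.Locale;
import java.util.Random;

// Hypothetical stand-in for json-data-generator: one flat JSON event per call.
// Field names are illustrative assumptions, not the tool's actual output.
final class RandomJsonEvents {
    private static final String[] USERS = {"alice", "bob", "carol"};
    private static final String[] ACTIONS = {"login", "view", "logout"};

    private final Random random;

    RandomJsonEvents(long seed) {
        this.random = new Random(seed); // fixed seed gives a reproducible sequence
    }

    // Returns a single-line JSON object, usable as a Kafka record value.
    // The string values come from fixed arrays, so no JSON escaping is needed.
    String next(long timestampMillis) {
        String user = USERS[random.nextInt(USERS.length)];
        String action = ACTIONS[random.nextInt(ACTIONS.length)];
        int value = random.nextInt(1000);
        // Locale.ROOT keeps %d as plain ASCII digits regardless of the default locale
        return String.format(Locale.ROOT,
            "{\"timestamp\":%d,\"user\":\"%s\",\"action\":\"%s\",\"value\":%d}",
            timestampMillis, user, action, value);
    }

    public static void main(String[] args) {
        RandomJsonEvents events = new RandomJsonEvents(42L);
        for (int i = 0; i < 3; i++) {
            System.out.println(events.next(System.currentTimeMillis()));
        }
    }
}
```

Because each value is a complete JSON object on one line, it can be passed straight to `IndexRequest.source(value, XContentType.JSON)` on the consumer side.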

3. Sending messages to ElasticSearch

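Building on the existing Kafka Consumer, we add the code below. Here `consumer` is the `KafkaConsumer<String, String>` from the earlier consumer example, and `client` is the `RestHighLevelClient` returned by `createClient()`: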

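```java
// requires: java.time.Duration,
// org.apache.kafka.clients.consumer.ConsumerRecord / ConsumerRecords,
// plus the ElasticSearch imports from step 1

// poll for new data
while (true) {
    // wait at most 100 ms for new records
    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));

    // typed as <String, String> so record.value() is a String and the
    // source(String, XContentType) overload is selected
    for (ConsumerRecord<String, String> record : records) {
        // where we insert data into ElasticSearch
        IndexRequest indexRequest = new IndexRequest("kafkademo")
                .source(record.value(), XContentType.JSON);
        IndexResponse indexResponse = client.index(indexRequest, RequestOptions.DEFAULT);

        // log the _id ElasticSearch generated for this document
        String id = indexResponse.getId();
        logger.info(id);

        try {
            Thread.sleep(1000); // introduce a small delay
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }
}
```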

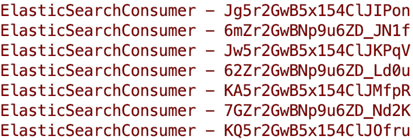

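As shown above, the messages are sent to ElasticSearch successfully. Each random string in the log is the `_id` that ES assigned to the inserted document.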
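The loop above sends one HTTP request per record. The one-second sleep exists only to slow the demo down. For higher volumes, ElasticSearch's Bulk API accepts many documents in one request. With the high-level client, you would add each `IndexRequest` to a `BulkRequest` and call `client.bulk(bulkRequest, RequestOptions.DEFAULT)` once per `poll()` batch. The JDK-only sketch below shows just the NDJSON body format that the `_bulk` endpoint expects. The class `BulkBody` is hypothetical. The sketch assumes each document is already a valid JSON object (as `record.value()` is here) and that the index name needs no JSON escaping.

```java
import java.util.List;

// Hypothetical helper (not an Elasticsearch library class): builds an NDJSON body for POST /_bulk.
final class BulkBody {

    // One "index" action line followed by one source line per document.
    static String build(String index, List<String> jsonDocs) {
        StringBuilder body = new StringBuilder();
        for (String doc : jsonDocs) {
            // No _id in the action line, so ElasticSearch generates one,
            // as it does for the single IndexRequest calls above.
            body.append("{\"index\":{\"_index\":\"").append(index).append("\"}}\n");
            // NDJSON needs each document on a single line. In valid JSON a raw line break
            // can only be insignificant whitespace (inside strings it must be escaped),
            // so replacing it with a space does not change the document.
            body.append(doc.replace('\r', ' ').replace('\n', ' ')).append('\n');
        }
        return body.toString(); // ends with '\n', which _bulk requires
    }

    public static void main(String[] args) {
        String body = build("kafkademo", List.of("{\"foo\": \"bar\"}", "{\"foo\": \"baz\"}"));
        System.out.print(body);
    }
}
```

Running `main` prints four lines: an action line `{"index":{"_index":"kafkademo"}}` before each of the two documents. Such a body is sent with `Content-Type: application/x-ndjson`.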
