Linux LNMP architecture: high availability with keepalived

keepalived high availability

keepalived

Note: keepalived is not limited to nginx; it can make almost any service highly available.

How keepalived achieves high availability

VRRP: Virtual Router Redundancy Protocol

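For example, suppose the company reaches the Internet through a gateway router. If that router fails and can no longer forward packets, nobody can get online. What then?

The usual approach is to add a standby (backup) router. The problem is that when the master gateway fails, users have to repoint their gateway to the backup by hand, which is very tedious when there are many users.

Problem 1: suppose every user has been repointed to the backup router. What happens once the master router is repaired?

Problem 2: if the master gateway fails, could we simply give the backup gateway the master gateway's IP address?

That does not work. When a PC first locates the master gateway through an ARP broadcast, it stores the gateway's IP-to-MAC mapping in its ARP cache, and all later packets are forwarded using that cached entry. Moving the IP to the backup does not help, because the cached MAC address belongs to the master's NIC, so the PC keeps sending packets to the master. (Only when the PC's ARP cache entry expires and it sends a new ARP broadcast will it learn the backup's MAC address for that IP.)

VIP: virtual IP address

VMAC: virtual MAC address

So how can failover happen automatically? This is what VRRP is for. VRRP, implemented in software or hardware, puts a virtual MAC address (VMAC) and a virtual IP address (VIP) in front of the Master and the Backup. When a PC sends requests to the VIP, its ARP cache only records the VMAC-VIP pair, regardless of whether the Master or the Backup is currently handling the traffic.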
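For reference, the VMAC is not arbitrary: RFC 5798 defines the IPv4 VRRP virtual MAC as 00-00-5E-00-01-{VRID}, where the last byte is the virtual router ID (virtual_router_id in the keepalived configs below). The sketch below derives it from a VRID; the function name vrrp_vmac is made up for illustration. Keep in mind that keepalived does not use this VMAC by default: it keeps each node's real MAC and sends gratuitous ARP on failover so that neighbours refresh their ARP caches, and it only switches to a VMAC interface when use_vmac is configured.

```shell
#!/bin/sh
# Sketch: derive the standard IPv4 VRRP virtual MAC from a virtual_router_id,
# following the RFC 5798 format 00-00-5E-00-01-{VRID}.
# vrrp_vmac is a hypothetical helper name.
vrrp_vmac() {
    vrid=$1
    # the VRID must be an integer between 1 and 255
    case $vrid in
        ''|*[!0-9]*) echo "invalid VRID: $vrid" >&2; return 1 ;;
    esac
    if [ "$vrid" -lt 1 ] || [ "$vrid" -gt 255 ]; then
        echo "VRID out of range (1-255): $vrid" >&2
        return 1
    fi
    # last byte is the VRID in two-digit hex
    printf '00:00:5e:00:01:%02x\n' "$vrid"
}
```

With virtual_router_id 50, as used later in this article, `vrrp_vmac 50` prints 00:00:5e:00:01:32.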

Exercise:

1. Two load balancers (lb): nginx, keepalived

2. Three web servers: nginx, php

3. One database server (db): mariadb

4. One NFS server: nfs-utils, sersync

5. One backup server: rsync, nfs-utils

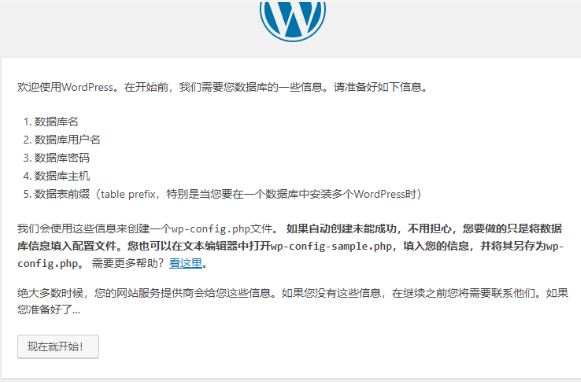

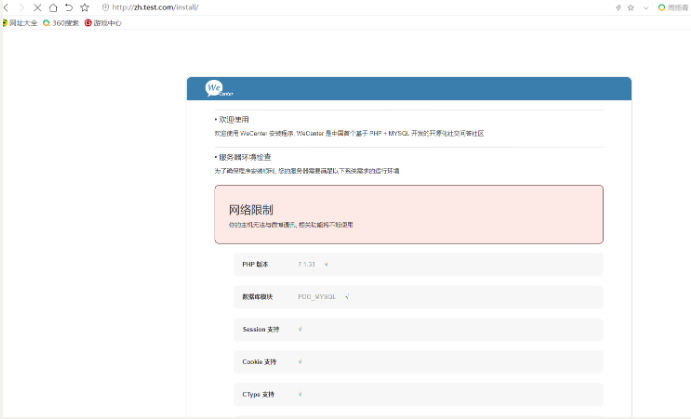

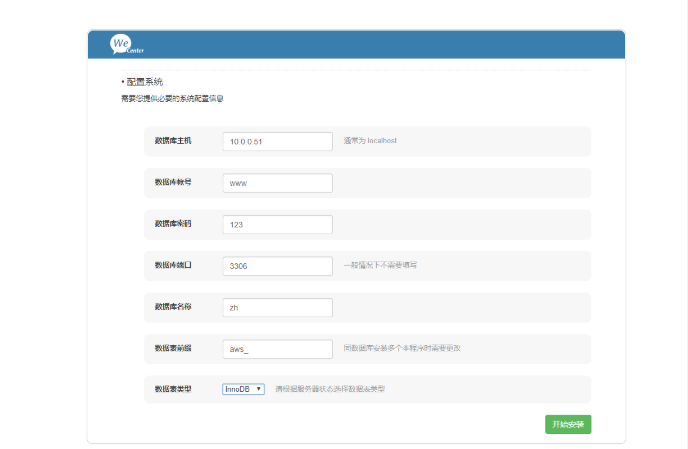

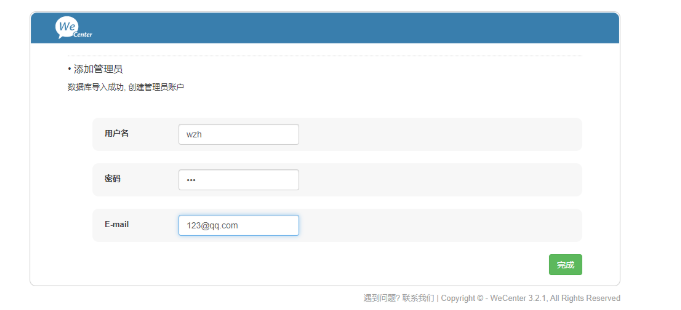

6. Deploy WordPress and WeCenter

Environment preparation

| Server | Public IP | Internal IP | Installed services | Role |
|---|---|---|---|---|
| web01 | 10.0.0.7 | 172.16.1.7 | nginx, php-fpm | Web server |
| web02 | 10.0.0.8 | 172.16.1.8 | nginx, php-fpm | Web server |
| web03 | 10.0.0.9 | 172.16.1.9 | nginx, php-fpm | Web server |
| db01 | 10.0.0.51 | 172.16.1.51 | mariadb-server | Database server |
| nfs | 10.0.0.31 | 172.16.1.31 | nfs-utils, sersync | Shared storage server |
| backup | 10.0.0.41 | 172.16.1.41 | rsync, nfs-utils | Backup server |
| lb01 | 10.0.0.5 | 172.16.1.5 | nginx, keepalived | Load balancer |
| lb02 | 10.0.0.6 | 172.16.1.6 | nginx, keepalived | Load balancer (high-availability standby) |

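Getting started

Don't rush: light a cigarette and take it one step at a time...

First get the three web servers ready with the required services installed. Finish one of them completely; once it can connect to the database, scp its configuration files and site code to the other web servers. Since that step needs the database, you might as well create the database before doing the three web servers.

Next come the NFS shared storage and the backup server. They depend on each other, so set up the backup server alongside NFS.

When that is done, move on to the load-balancing proxies: of lb01 and lb02, one serves as the load balancer and the other as its high-availability standby.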

db01 database deployment

# Install MariaDB, MySQL's little sibling

[root@db01 ~]# yum install -y mariadb-server

# Start it and enable it at boot

[root@db01 ~]# systemctl start mariadb.service

[root@db01 ~]# systemctl enable mariadb.service

# Set a password for the root user

[root@db01 ~]# mysqladmin -uroot password '123'

# Log in

[root@db01 ~]# mysql -uroot -p123

# Create the databases

MariaDB [(none)]> create database wordpress;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> create database zh;

Query OK, 1 row affected (0.00 sec)

# Create a user (with password) for managing the databases

MariaDB [(none)]> grant all on *.* to www@'%' identified by '123';

Query OK, 0 rows affected (0.01 sec)
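`grant all on *.* to www@'%'` gives the application user every privilege on every database, from any host. A narrower alternative, sketched below on the assumption that WordPress and WeCenter only need their own databases, is one grant per database, restricted to the network the web servers connect from. gen_grants is a hypothetical helper that only prints SQL. The host pattern must match the source address MariaDB sees: the PHP test later connects to 10.0.0.51, so connections from the web servers would arrive from '10.0.0.%', while connections to 172.16.1.51 would need '172.16.1.%'.

```shell
#!/bin/sh
# Sketch: print one GRANT per application database instead of "*.*".
# Usage: gen_grants USER PASSWORD HOST_PATTERN DB...
# It only prints SQL; pipe the output into mysql to apply it.
gen_grants() {
    user=$1 pass=$2 hostpat=$3
    shift 3
    for db in "$@"; do
        printf "GRANT ALL ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$hostpat" "$pass"
    done
}
```

For example: `gen_grants www 123 '10.0.0.%' wordpress zh | mysql -uroot -p123`. The GRANT ... IDENTIFIED BY form is the same syntax the original statement uses on this MariaDB version.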

# Check that the user was created

MariaDB [(none)]> select user,host from mysql.user;

+------+-----------+
| user | host      |
+------+-----------+
| www  | %         |
| root | 127.0.0.1 |
| root | ::1       |
|      | db01      |
| root | db01      |
|      | localhost |
| root | localhost |
+------+-----------+

# List the databases

MariaDB [(none)]> show databases;

+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| test               |
| wordpress          |
| zh                 |
+--------------------+
6 rows in set (0.00 sec)

# How to install nginx and php-fpm from yum repositories

# 1. Switch to the official nginx repository

vim /etc/yum.repos.d/nginx.repo

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

# 2. Install nginx

[root@web01 nginx_php]# yum install -y nginx

# 3. Add a PHP repository

[root@web01 ~]# vim /etc/yum.repos.d/php.repo

[php-webtatic]

name = PHP Repository

baseurl = http://us-east.repo.webtatic.com/yum/el7/x86_64/

gpgcheck = 0

# 4. Install PHP

[root@web01 ~]# yum -y install php71w php71w-cli php71w-common php71w-devel php71w-embedded php71w-gd php71w-mcrypt php71w-mbstring php71w-pdo php71w-xml php71w-fpm php71w-mysqlnd php71w-opcache php71w-pecl-memcached php71w-pecl-redis php71w-pecl-mongodb

Web server deployment

web01

# Install nginx and php-fpm; here I use a prepackaged bundle of nginx and php-fpm RPMs

# Upload the RPM bundle from Windows

[root@web01 ~]# rz

# List the files

[root@web01 ~]# ll

total 19984

-rw-------. 1 root root 1444 Apr 30 20:50 anaconda-ks.cfg

-rw-r--r--. 1 root root 287 May 3 20:07 host_ip.sh

-rw-r--r-- 1 root root 20453237 May 22 15:20 php_nginx.tgz

# Extract

[root@web01 ~]# tar xf php_nginx.tgz

# Enter the extracted directory and install nginx and php-fpm

[root@web01 ~]# cd /root/root/nginx_php

[root@web01 nginx_php]# rpm -Uvh *rpm

# Create the www group and user (gid 666, uid 666), with no login shell and no home directory

[root@web01 ~]# groupadd www -g 666

[root@web01 ~]# useradd www -u 666 -g 666 -s /sbin/nologin -M

# Set the user nginx runs as

[root@web01 ~]# vim /etc/nginx/nginx.conf

user www;

worker_processes 1;

# Set the user php-fpm runs as

[root@web01 ~]# cat /etc/php-fpm.d/www.conf

; Start a new pool named 'www'.

[www]

user = www

; RPM: Keep a group allowed to write in log dir.

group = www

# Edit the nginx site configuration

[root@web01 ~]# cat /etc/nginx/conf.d/www.test.com.conf

server {

listen 80;

server_name blog.test.com;

root /code/wordpress;

index index.php index.html;

location ~ \.php$ {

root /code/wordpress;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include /etc/nginx/fastcgi_params;

}

}

server {

listen 80;

server_name zh.test.com;

root /code/zh;

index index.php index.html;

location ~ \.php$ {

root /code/zh;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include /etc/nginx/fastcgi_params;

}

}
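The two server blocks above differ only in server_name and root. If more sites are added, a small generator avoids copy-paste mistakes. The sketch below (gen_vhost is a hypothetical name) prints one block per site with the same directives as above, minus the repeated root inside location, which inherits the server-level root anyway.

```shell
#!/bin/sh
# Sketch: print an nginx server block for a PHP site served by php-fpm
# on 127.0.0.1:9000, mirroring the hand-written config above.
gen_vhost() {
    name=$1   # e.g. blog.test.com
    root=$2   # e.g. /code/wordpress
    # unquoted heredoc: $name/$root expand, \$ keeps nginx variables literal
    cat <<EOF
server {
    listen 80;
    server_name $name;
    root $root;
    index index.php index.html;

    location ~ \.php\$ {
        fastcgi_pass 127.0.0.1:9000;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME \$document_root\$fastcgi_script_name;
        include /etc/nginx/fastcgi_params;
    }
}
EOF
}
```

Usage on web01: `{ gen_vhost blog.test.com /code/wordpress; gen_vhost zh.test.com /code/zh; } > /etc/nginx/conf.d/www.test.com.conf && nginx -t`.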

# Check the syntax

[root@web01 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

# Start nginx and php-fpm and enable them at boot

[root@web01 ~]# systemctl start nginx php-fpm && systemctl enable nginx php-fpm

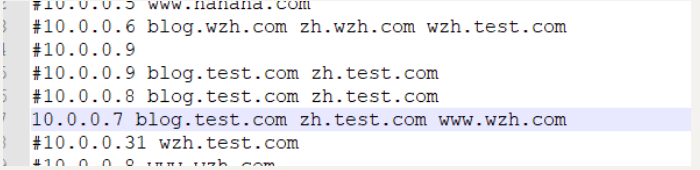

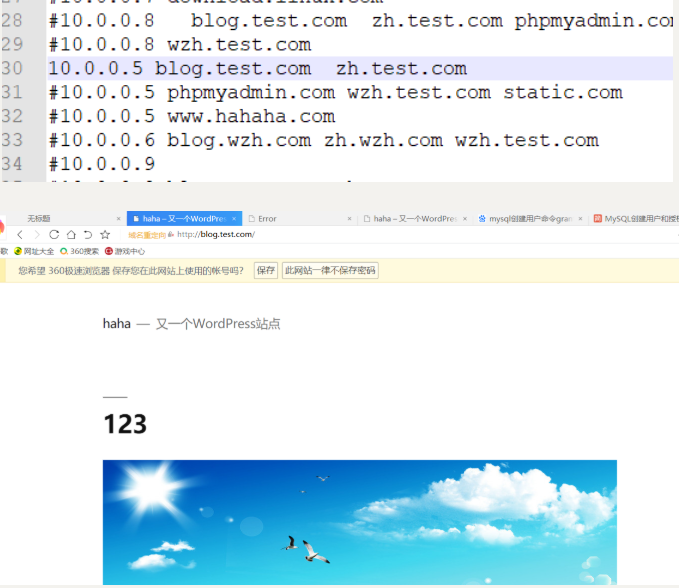

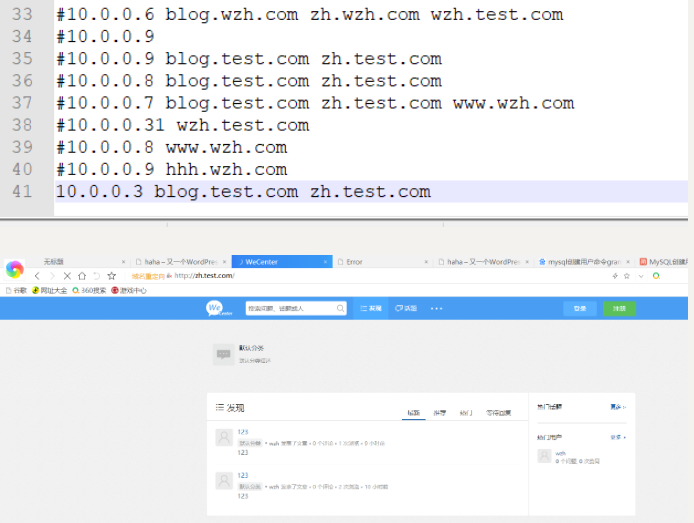

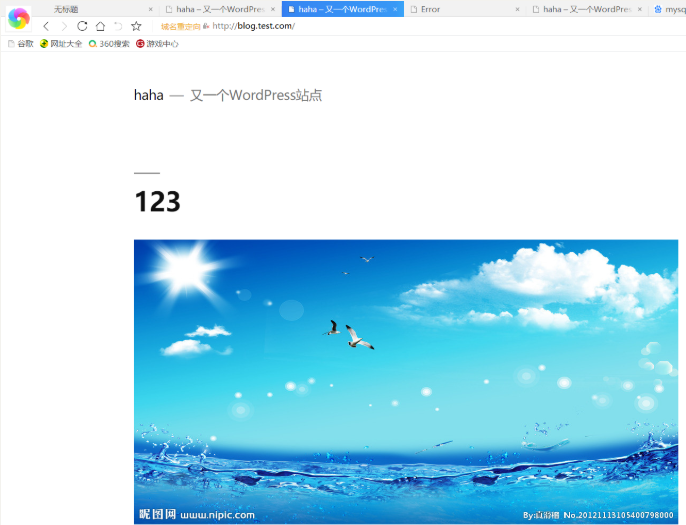

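# Domain resolution: map blog.test.com and zh.test.com to 10.0.0.7 in the hosts file of the machine you test from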
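On a Linux client, the hosts entry can be added idempotently, so that repeating this step does not stack duplicate lines. A minimal sketch; the function name and the HOSTS_FILE override are assumptions (on a Windows client the file is C:\Windows\System32\drivers\etc\hosts and is edited by hand).

```shell
#!/bin/sh
# Sketch: append "IP name..." to a hosts file unless that exact line exists.
# HOSTS_FILE is a hypothetical override; it defaults to /etc/hosts.
add_hosts_entry() {
    file=${HOSTS_FILE:-/etc/hosts}
    line="$*"
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if grep -qxF "$line" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s\n' "$line" >> "$file"
}
```

For example: `add_hosts_entry 10.0.0.7 blog.test.com zh.test.com`.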

Connecting web01 to the database

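# Fill in the relevant settings according to the configuration file

# 1. Optionally, create a PHP script in the site directory to test the database connection

[root@web01 ~]# vim /code/wordpress/mysql.php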

<?php

$servername = "10.0.0.51";

$username = "www";

$password = "123";

// Create the connection

$conn = mysqli_connect($servername, $username, $password);

// Check the connection

if (!$conn) {

die("Connection failed: " . mysqli_connect_error());

}

echo "Buddy, PHP can connect to MySQL...";

?>

<img style='width:100%;height:100%;' src=https://blog.driverzeng.com/zenglaoshi/php_mysql.png>

web02

# Upload the nginx and php-fpm RPM bundle

[root@web02 ~]# rz

# List the files

[root@web02 ~]# ll

total 19984

-rw-------. 1 root root 1444 Apr 30 20:50 anaconda-ks.cfg

-rw-r--r--. 1 root root 287 May 3 20:07 host_ip.sh

-rw-r--r-- 1 root root 20453237 May 22 15:20 php_nginx.tgz

# Extract

[root@web02 ~]# tar xf php_nginx.tgz

# Enter the extracted directory and install nginx and php-fpm

[root@web02 ~]# cd /root/root/nginx_php

[root@web02 nginx_php]# rpm -Uvh *rpm

# Create the www group and user (gid 666, uid 666), with no login shell and no home directory

[root@web02 ~]# groupadd www -g 666

[root@web02 ~]# useradd www -u 666 -g 666 -s /sbin/nologin -M

# Copy the site code and configuration files from web01 to web02

[root@web01 ~]# scp -r /code 10.0.0.8:/

The authenticity of host '10.0.0.8 (10.0.0.8)' can't be established.

ECDSA key fingerprint is SHA256:0LmJJQAFxWMarCtpHr+bkYdqoSpp3j7O+TDbK1chOqI.

ECDSA key fingerprint is MD5:a1:a9:30:13:5f:44:ad:da:fb:a1:65:34:b5:dd:a3:d3.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.8' (ECDSA) to the list of known hosts.

root@10.0.0.8's password:

[root@web01 ~]# scp -r /etc/nginx/* 10.0.0.8:/etc/nginx

[root@web01 ~]# scp -r /etc/php-fpm.d 10.0.0.8:/etc/

root@10.0.0.8's password:

www.conf

# Start nginx and php-fpm and enable them at boot

[root@web02 ~]# systemctl start nginx php-fpm && systemctl enable nginx php-fpm

# Domain resolution (point the domains at 10.0.0.8 to test web02)

web03

# Upload the nginx and php-fpm RPM bundle

[root@web03 ~]# rz

# List the files

[root@web03 ~]# ll

total 19984

-rw-------. 1 root root 1444 Apr 30 20:50 anaconda-ks.cfg

-rw-r--r--. 1 root root 287 May 3 20:07 host_ip.sh

-rw-r--r-- 1 root root 20453237 May 22 15:20 php_nginx.tgz

# Extract

[root@web03 ~]# tar xf php_nginx.tgz

# Enter the extracted directory and install nginx and php-fpm

[root@web03 ~]# cd /root/root/nginx_php

[root@web03 nginx_php]# rpm -Uvh *rpm

# Create the www group and user (gid 666, uid 666), with no login shell and no home directory

[root@web03 ~]# groupadd www -g 666

[root@web03 ~]# useradd www -u 666 -g 666 -s /sbin/nologin -M

# Copy the site code and configuration files from web01 to web03

[root@web01 ~]# scp -r /code 10.0.0.9:/

The authenticity of host '10.0.0.9 (10.0.0.9)' can't be established.

ECDSA key fingerprint is SHA256:0LmJJQAFxWMarCtpHr+bkYdqoSpp3j7O+TDbK1chOqI.

ECDSA key fingerprint is MD5:a1:a9:30:13:5f:44:ad:da:fb:a1:65:34:b5:dd:a3:d3.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.9' (ECDSA) to the list of known hosts.

root@10.0.0.9's password:

[root@web01 ~]# scp -r /etc/nginx/* 10.0.0.9:/etc/nginx

[root@web01 ~]# scp -r /etc/php-fpm.d 10.0.0.9:/etc/

root@10.0.0.9's password:

www.conf
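Since web02 and web03 receive exactly the same files, the copy step can be a loop over the targets. In the sketch below, sync_web_files and the SCP override (for example SCP=echo for a dry run) are hypothetical. Unlike the commands above, it copies only nginx.conf and conf.d, on the assumption that the rest of /etc/nginx is already identical because it comes from the same RPMs.

```shell
#!/bin/sh
# Sketch: push site code and nginx/php-fpm configs from web01 to each host.
# SCP can be overridden (e.g. SCP=echo) to print the commands instead.
SCP=${SCP:-scp}

sync_web_files() {
    for host in "$@"; do
        $SCP -r /code "$host":/ || return 1
        $SCP -r /etc/nginx/nginx.conf /etc/nginx/conf.d "$host":/etc/nginx/ || return 1
        $SCP -r /etc/php-fpm.d "$host":/etc/ || return 1
    done
}
```

Run on web01 as `sync_web_files 10.0.0.8 10.0.0.9`. Without SSH keys this still prompts for a password on every copy.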

# Start nginx and php-fpm and enable them at boot

[root@web03 ~]# systemctl start nginx php-fpm && systemctl enable nginx php-fpm

# Domain resolution (point the domains at 10.0.0.9 to test web03)

NFS server deployment

## Install nfs-utils

[root@nfs ~]# yum install -y nfs-utils

# Edit the exports configuration

[root@nfs ~]# vim /etc/exports

/tset 172.16.1.0/24(sync,rw,all_squash,anonuid=666,anongid=666)

# Create the user

[root@nfs ~]# groupadd www -g 666

[root@nfs ~]# useradd www -u 666 -g 666 -s /sbin/nologin -M

# Create the directories to be exported

[root@nfs ~]# mkdir /tset/zh_data -p

[root@nfs ~]# mkdir /tset/wp_data

# Give www ownership of the directory tree

[root@nfs ~]# chown www.www /tset -R

# Start it and enable it at boot

[root@nfs ~]# systemctl start nfs-server

[root@nfs ~]# systemctl enable nfs-server

# Check the export list

[root@nfs ~]# showmount -e

Export list for nfs:

/tset 172.16.1.0/24

Mounting the shares on web01, web02 and web03

# Mount on web01

[root@web01 ~]# mount -t nfs 172.16.1.31:/tset/zh_data /code/zh/uploads

[root@web01 ~]# mkdir /code/wordpress/wp-content/uploads

[root@web01 ~]# mount -t nfs 172.16.1.31:/tset/wp_data /code/wordpress/wp-content/uploads

[root@web01 ~]# df -h

172.16.1.31:/tset/zh_data 19G 1.4G 18G 8% /code/zh/uploads

172.16.1.31:/tset/wp_data 19G 1.4G 18G 8% /code/wordpress/wp-content/uploads

# Mount on web02

[root@web02 ~]# mkdir /code/wordpress/wp-content/uploads

[root@web02 ~]# mount -t nfs 172.16.1.31:/tset/wp_data /code/wordpress/wp-content/uploads

[root@web02 ~]# mount -t nfs 172.16.1.31:/tset/zh_data /code/zh/uploads

# Mount on web03

[root@web03 ~]# mkdir /code/wordpress/wp-content/uploads

[root@web03 ~]# mount -t nfs 172.16.1.31:/tset/wp_data /code/wordpress/wp-content/uploads

[root@web03 ~]# mount -t nfs 172.16.1.31:/tset/zh_data /code/zh/uploads
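Mounts made with mount -t nfs are lost on reboot. One common approach is an /etc/fstab entry per share, with the _netdev option so the mount waits for the network. The helper below is a sketch (add_nfs_fstab and the FSTAB override are hypothetical) that appends an entry only if the mount point is not listed yet.

```shell
#!/bin/sh
# Sketch: persist an NFS mount in fstab, skipping mount points already listed.
add_nfs_fstab() {
    src=$1    # e.g. 172.16.1.31:/tset/wp_data
    dest=$2   # e.g. /code/wordpress/wp-content/uploads
    fstab=${FSTAB:-/etc/fstab}
    # field 2 of an fstab line is the mount point
    if awk -v d="$dest" '$2 == d { found = 1 } END { exit !found }' "$fstab" 2>/dev/null; then
        return 0
    fi
    printf '%s %s nfs defaults,_netdev 0 0\n' "$src" "$dest" >> "$fstab"
}
```

On each web server, call it once per share (for example `add_nfs_fstab 172.16.1.31:/tset/zh_data /code/zh/uploads`), then run `mount -a` to confirm the entries mount cleanly.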

Backup server deployment

## Server side

# Install rsync

[root@backup ~]# yum install -y rsync

# Edit the configuration file

[root@backup ~]# vim /etc/rsyncd.conf

[root@backup ~]# cat /etc/rsyncd.conf

uid = www

gid = www

port = 873

fake super = yes

use chroot = no

max connections = 200

timeout = 600

ignore errors

read only = false

list = false

auth users = nfs_bak

secrets file = /etc/rsync.passwd

log file = /var/log/rsyncd.log

#####################################

[nfs]

comment = welcome to oldboyedu backup!

path = /backup

# Create the group and user

[root@backup ~]# groupadd www -g 666

[root@backup ~]# useradd www -g 666 -u 666 -s /sbin/nologin -M

# Create the password file and write the username:password into it

[root@backup ~]# vim /etc/rsync.passwd

[root@backup ~]# cat /etc/rsync.passwd

nfs_bak:123

# Set the password file's permissions to 600

[root@backup ~]# chmod 600 /etc/rsync.passwd
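Why 600 matters: with rsyncd's default strict modes = yes, the daemon rejects a secrets file that other users can access, and the rsync client likewise rejects an other-accessible --password-file (such as the /etc/rsync.pass created on the NFS server later). A small check, sketched with a hypothetical function name and GNU stat as shipped on CentOS 7:

```shell
#!/bin/sh
# Sketch: succeed only if the file's "other" permission bits are all clear,
# which is what rsync's strict-modes check requires.
secret_file_ok() {
    mode=$(stat -c %a "$1") || return 1
    # the last octal digit is the permission set for "other"
    case $mode in
        *0) return 0 ;;
        *)  echo "$1 has mode $mode; run: chmod 600 $1" >&2; return 1 ;;
    esac
}
```

For example, `secret_file_ok /etc/rsync.passwd` on the backup server and `secret_file_ok /etc/rsync.pass` on the NFS server.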

# Create the backup directory

[root@backup ~]# mkdir /backup/

# Make www the owner and group of the backup directory

[root@backup ~]# chown www.www /backup/

[root@backup ~]# ll /backup/ -d

drwxr-xr-x 2 www www 20 May 10 08:21 /backup/

# Start the service and enable it at boot

[root@backup ~]# systemctl start rsyncd

[root@backup ~]# systemctl enable rsyncd

Created symlink from /etc/systemd/system/multi-user.target.wants/rsyncd.service to /usr/lib/systemd/system/rsyncd.service.

# Check the port and the process

[root@backup ~]# netstat -lntup|grep 873

tcp 0 0 0.0.0.0:873 0.0.0.0:* LISTEN 7201/rsync

tcp6 0 0 :::873 :::* LISTEN 7201/rsync

[root@backup ~]# ps -ef|grep rsync

root 7201 1 0 00:13 ? 00:00:00 /usr/bin/rsync --daemon --no-detach

root 7224 6982 0 00:14 pts/0 00:00:00 grep --color=auto rsync

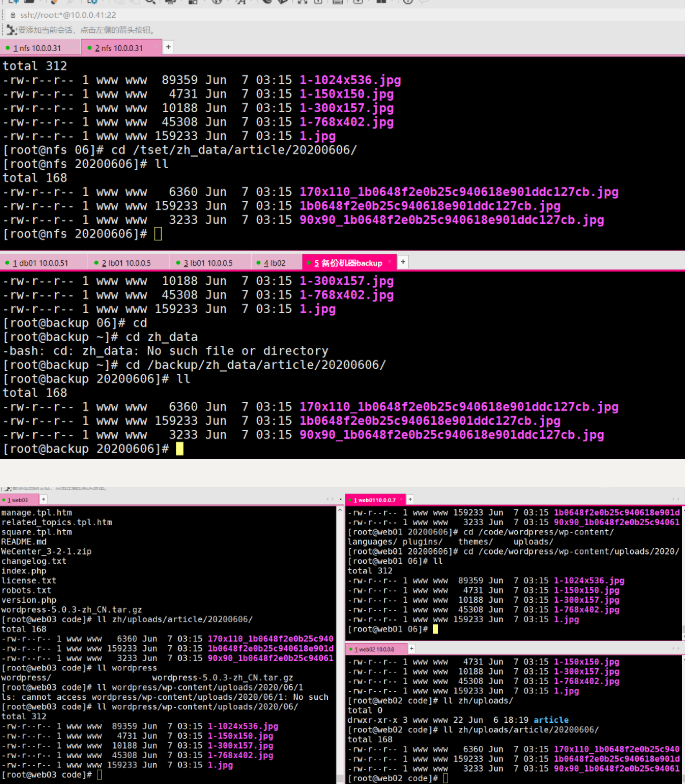

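Real-time sync from the NFS server to the backup server

## sersync is deployed on the client side (the NFS server)

# Install sersync's dependencies

[root@nfs ~]# yum install -y rsync inotify-tools

# Download the sersync package

[root@nfs ~]# wget https://raw.githubusercontent.com/wsgzao/sersync/master/sersync2.5.4_64bit_binary_stable_final.tar.gz

# Extract the package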

[root@nfs ~]# tar xf sersync2.5.4_64bit_binary_stable_final.tar.gz

# Move it into place and rename it

[root@nfs ~]# mv GNU-Linux-x86 /usr/local/sersync

# Edit the configuration file

[root@nfs ~]# vim /usr/local/sersync/confxml.xml

<inotify>

<delete start="true"/>

<createFolder start="true"/>

<createFile start="true"/>

<closeWrite start="true"/>

<moveFrom start="true"/>

<moveTo start="true"/>

<attrib start="true"/>

<modify start="true"/>

</inotify>

<sersync>

<localpath watch="/tset">

<remote ip="172.16.1.41" name="nfs"/>

<!--<remote ip="192.168.8.39" name="tongbu"/>-->

<!--<remote ip="192.168.8.40" name="tongbu"/>-->

</localpath>

<rsync>

<commonParams params="-az"/>

<auth start="true" users="nfs_bak" passwordfile="/etc/rsync.pass"/>

<userDefinedPort start="false" port="874"/><!-- port=874 -->

<timeout start="false" time="100"/><!-- timeout=100 -->

<ssh start="false"/>

</rsync>

<failLog path="/tmp/rsync_fail_log.sh" timeToExecute="60"/><!--default every 60mins execute once-->

<crontab start="false" schedule="600"><!--600mins-->

<crontabfilter start="false">

<exclude expression="*.php"></exclude>

# Write the password into the password file

[root@nfs ~]# echo 123 >/etc/rsync.pass

# Set the password file's permissions to 600

[root@nfs ~]# chmod 600 /etc/rsync.pass

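# Start sersync (-r: push a full sync once before watching, -d: run as a daemon, -o: path to the config file)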

[root@nfs ~]# /usr/local/sersync/sersync2 -rdo /usr/local/sersync/confxml.xml

# Once it is running, go to /tset on the client, create a file 1.txt, and check on the server whether it was synced in real time

[root@nfs tset]# ll

total 0

drwxr-xr-x 3 www www 18 Jun 6 18:17 wp_data

drwxr-xr-x 3 www www 21 Jun 6 18:19 zh_data

[root@nfs tset]# touch 1.txt

[root@backup backup]# ll /backup

total 0

-rw-r--r-- 1 www www 0 Jun 7 03:11 1.txt

drwxr-xr-x 3 www www 18 Jun 6 18:17 wp_data

drwxr-xr-x 3 www www 21 Jun 6 18:19 zh_data

# Then, on the client, write 123 into that file with echo and check the server again

[root@nfs tset]# echo 123 >1.txt

[root@backup backup]# cat 1.txt

123

Load balancer (lb) deployment

# Install nginx: upload the RPM bundle

[root@lb01 ~]# rz

# Extract

[root@lb01 ~]# tar xf php_nginx.tgz

[root@lb01 ~]# cd root/nginx_php/

# Install

[root@lb01 nginx_php]# yum localinstall -y nginx-1.18.0-1.el7.ngx.x86_64.rpm

# Create the group and user

[root@lb01 nginx_php]# groupadd www -g 666

[root@lb01 nginx_php]# useradd www -u 666 -g 666 -s /sbin/nologin -M

# Set the user nginx runs as

[root@lb01 nginx_php]# vim /etc/nginx/nginx.conf

user www;

worker_processes 1;

# Put the proxy tuning parameters in a separate file so later configs can simply include them

[root@lb01 ~]# cat /etc/nginx/proxy_params

proxy_set_header HOST $host;

proxy_set_header X-Forwarded-for $proxy_add_x_forwarded_for;

proxy_connect_timeout 60s;

proxy_read_timeout 60s;

proxy_send_timeout 60s;

proxy_buffering on;

proxy_buffers 8 4k;

proxy_buffer_size 4k;

proxy_next_upstream error timeout http_500 http_502 http_503 http_504;

# Edit the nginx proxy configuration

# This relies on the ngx_http_upstream_module module

[root@lb01 ~]# vim /etc/nginx/conf.d/blog.test.com.conf

upstream blog {

server 172.16.1.7;

server 172.16.1.8;

server 172.16.1.9;

}

server {

listen 80;

server_name blog.test.com;

location / {

proxy_pass http://blog;

include proxy_params;

}

}

[root@lb01 ~]# vim /etc/nginx/conf.d/zh.test.com.conf

upstream zh {

server 172.16.1.7;

server 172.16.1.8;

server 172.16.1.9;

}

server {

listen 80;

server_name zh.test.com;

location / {

proxy_pass http://zh;

include proxy_params;

}

}
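Neither upstream block sets a balancing method, so nginx uses its default, weighted round-robin, and every server here has the default weight 1. The toy function below only simulates the resulting request order for illustration; it is not nginx code, the name round_robin is made up, and real traffic also shifts when proxy_next_upstream skips a failing server.

```shell
#!/bin/sh
# Sketch: simulate equal-weight round-robin over the given backends.
# Usage: round_robin REQUEST_COUNT BACKEND...
round_robin() {
    n=$1; shift
    i=1
    while [ "$i" -le "$n" ]; do
        echo "request $i -> $1"
        set -- "$@" "$1"   # append the current backend to the end...
        shift              # ...and drop it from the front (rotation)
        i=$((i + 1))
    done
}
```

`round_robin 4 172.16.1.7 172.16.1.8 172.16.1.9` shows the fourth request wrapping back to 172.16.1.7.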

# Check the syntax

[root@lb01 ~]# nginx -t

# Start nginx and enable it at boot

[root@lb01 ~]# systemctl start nginx

[root@lb01 ~]# systemctl enable nginx

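# Domain resolution (point the domains at 10.0.0.5 to test through lb01)

Load balancer high availability deployment

# Install keepalived on both lb01 and lb02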

[root@lb01 conf.d]# yum install -y keepalived

[root@lb02 conf.d]# yum install -y keepalived

# Edit the configuration file

[root@lb01 conf.d]# vim /etc/keepalived/keepalived.conf

global_defs { # global settings

router_id lb01 # identity of this node (its name)

}

# ----- nginx check script hooked into keepalived (commented out for now) -----

#vrrp_script check_ssh {

# script "/root/nginx_keep.sh"

# interval 5

#}

# ----- end of the commented-out section -----

vrrp_instance VI_1 {

state MASTER # role of this node

interface eth0 # NIC the VIP is bound to

virtual_router_id 50 # virtual router ID (must be the same on both nodes)

priority 150 # priority

nopreempt

advert_int 1 # advertisement interval in seconds

authentication { # authentication

auth_type PASS # authentication type

auth_pass 1111 # authentication password

}

virtual_ipaddress {

10.0.0.3 # the virtual IP (VIP)

}

# ----- reference the check script defined above -----

#track_script {

# check_ssh

# }

}

[root@lb02 conf.d]# vim /etc/keepalived/keepalived.conf

global_defs {

router_id lb02

}

#vrrp_script check_ssh {

# script "/root/nginx_keep.sh"

# interval 5

#}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 50

priority 100

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

#track_script {

# check_ssh

# }

}
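The lb01 and lb02 files above differ only in router_id, state and priority (plus the check-script lines). Below is a sketch of a generator with the hypothetical name gen_keepalived. All other values (eth0, VRID 50, auth 1111, VIP 10.0.0.3) are copied from the configs above, and the commented-out check-script hooks are left out.

```shell
#!/bin/sh
# Sketch: print a keepalived.conf for one lb node.
# Usage: gen_keepalived ROUTER_ID STATE PRIORITY
gen_keepalived() {
    router_id=$1   # lb01 | lb02
    state=$2       # MASTER | BACKUP
    priority=$3    # e.g. 150 | 100
    cat <<EOF
global_defs {
    router_id $router_id
}

vrrp_instance VI_1 {
    state $state
    interface eth0
    virtual_router_id 50
    priority $priority
    nopreempt
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.3
    }
}
EOF
}
```

On lb02: `gen_keepalived lb02 BACKUP 100 > /etc/keepalived/keepalived.conf`. For non-preemptive mode, pass BACKUP on both nodes and keep only the priorities different.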

# Script that checks whether nginx is alive (in preemptive mode it is only needed on the master node; in non-preemptive mode both nodes need it)

[root@lb01 ~]# cat nginx_keep.sh

#!/bin/sh

nginx_status=$(ps -C nginx --no-header|wc -l)

#1. Check whether nginx is alive; if not, try to start it

if [ $nginx_status -eq 0 ];then

systemctl start nginx

sleep 3

#2. Wait 3 seconds, then get nginx's status again

nginx_status=$(ps -C nginx --no-header|wc -l)

#3. Check again; if nginx is still not alive, stop keepalived so the VIP fails over, and exit the script

if [ $nginx_status -eq 0 ];then

systemctl stop keepalived

fi

fi

# If you do not want the script to try restarting nginx, use this version instead

#!/bin/sh

nginx_status=$(ps -C nginx --no-header|wc -l)

if [ $nginx_status -eq 0 ];then

systemctl stop keepalived

fi

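**In other words: as soon as nginx is found dead, stop keepalived right away so the VIP moves to the backup node.**

# Once the script is written, make it executable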

[root@lb01 ~]# chmod +x nginx_keep.sh
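To test the failover logic without actually killing nginx, the same decisions can be wrapped in a function whose commands are overridable. In this sketch COUNT_NGINX, START_NGINX, STOP_KEEPALIVED and RETRY_WAIT are hypothetical variables whose defaults match the first script above; returning non-zero when nginx stays down is my addition, and it also lets keepalived mark the vrrp_script check as failed.

```shell
#!/bin/sh
# Sketch: nginx_keep.sh decision logic with overridable commands.
COUNT_NGINX=${COUNT_NGINX:-"ps -C nginx --no-header"}
START_NGINX=${START_NGINX:-"systemctl start nginx"}
STOP_KEEPALIVED=${STOP_KEEPALIVED:-"systemctl stop keepalived"}
RETRY_WAIT=${RETRY_WAIT:-3}

# succeeds if at least one nginx process is listed
nginx_running() {
    [ -n "$($COUNT_NGINX)" ]
}

check_nginx() {
    nginx_running && return 0
    $START_NGINX              # 1. nginx is down: try to start it
    sleep "$RETRY_WAIT"       # 2. give it a moment, then look again
    nginx_running && return 0
    $STOP_KEEPALIVED          # 3. still down: stop keepalived so the VIP moves
    return 1
}
```

To use it as the real check, put these definitions in the file that vrrp_script points to and end that file with `check_nginx`.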

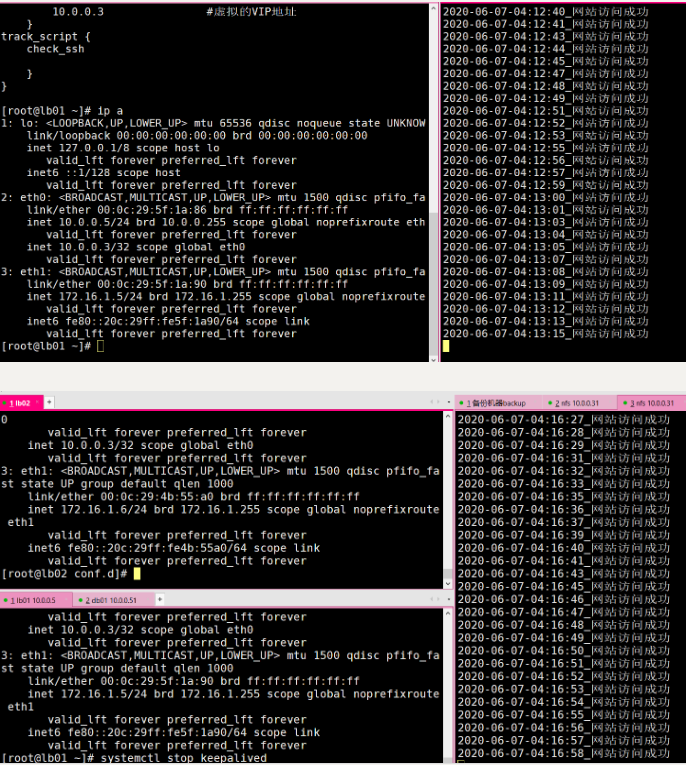

# Once everything is deployed, start keepalived on both machines, then check which one holds 10.0.0.3

[root@lb01 ~]# systemctl start keepalived

[root@lb02 ~]# systemctl start keepalived

[root@lb01 ~]# ip a|grep 10.0.0.3

[root@lb02 conf.d]# ip a|grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

# Stop keepalived on lb02 and check whether the VIP moves

[root@lb02 conf.d]# systemctl stop keepalived

[root@lb02 conf.d]# ip a|grep 10.0.0.3

[root@lb01 ~]# ip a|grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

# The VIP has moved. Next, write a script on another server to monitor whether the site is reachable

[root@nfs ~]# cat wzfwtest.sh

#!/bin/bash

while true;do

code_status=$(curl -I -m 10 -o /dev/null -s -w %{http_code} blog.test.com)

if [ $code_status -eq 200 ];then

echo "$(date +%F-%T)_site reachable" >> /tmp/web.log

else

echo "$(date +%F-%T)_site unreachable, status code: $code_status" >> /tmp/web.log

fi

sleep 1

done
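After the monitor has run through a failover, its log shows how long the site was down. The loop checks roughly once per second (longer whenever curl waits up to its 10-second timeout), so the failure count is only a rough estimate of the outage. A sketch with a hypothetical function name; it assumes the line format written above, where failure lines end with ": <status code>".

```shell
#!/bin/sh
# Sketch: count failed checks in the monitor log and report the first and
# last failure timestamps. Lines look like "<date +%F-%T>_<message>".
summarize_outage() {
    awk -F_ '
        /: [0-9]+$/ {                 # a failure line ends with ": <code>"
            if (fails == 0) first = $1
            last = $1
            fails++
        }
        END {
            if (fails == 0) { print "no failures"; exit }
            printf "failures=%d first=%s last=%s\n", fails, first, last
        }
    ' "${1:-/tmp/web.log}"
}
```

Run `summarize_outage /tmp/web.log` on the nfs server after stopping keepalived or nginx on lb01.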

# On the lb01 master, enable (uncomment) the keepalived settings that tie it to nginx, then resolve the domains to 10.0.0.3

[root@lb01 ~]# cat /etc/keepalived/keepalived.conf

global_defs { # global settings

router_id lb01 # identity of this node (its name)

}

vrrp_script check_ssh {

script "/root/nginx_keep.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER # role of this node

interface eth0 # NIC the VIP is bound to

virtual_router_id 50 # virtual router ID

priority 150 # priority

nopreempt

advert_int 1 # advertisement interval in seconds

authentication { # authentication

auth_type PASS # authentication type

auth_pass 1111 # authentication password

}

virtual_ipaddress {

10.0.0.3 # the virtual IP (VIP)

}

track_script {

check_ssh

}

}

[root@nfs ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.3 blog.test.com zh.test.com

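# The domains now resolve to 10.0.0.3

# Preemptive vs. non-preemptive configuration. Non-preemptive mode requires:

1. state must be set to BACKUP on both nodes

2. Both nodes must include nopreempt

3. One node's priority must be higher than the other's.

Once nopreempt is enabled on both servers, both must have state BACKUP; the only thing that distinguishes them is the priority.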

Master configuration

vrrp_instance VI_1 {

state BACKUP

priority 150

nopreempt

}

Backup configuration

vrrp_instance VI_1 {

state BACKUP

priority 100

nopreempt

}

# In short: both nodes must be BACKUP and both must include nopreempt
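The difference between the two modes comes down to one decision, made when the higher-priority node comes back. The function below is a deliberately simplified model with a hypothetical name, not keepalived's state machine: it ignores equal priorities (which VRRP breaks in favour of the higher IP address), FAULT states and track scripts.

```shell
#!/bin/sh
# Sketch: who holds the VIP after a node recovers?
#   $1 = preempt | nopreempt
#   $2 = node currently holding the VIP,  $5 = its priority
#   $3 = node that just recovered,        $4 = its priority
vip_owner_after_recovery() {
    mode=$1 holder=$2 recovered=$3 rec_prio=$4 holder_prio=$5
    if [ "$mode" = preempt ] && [ "$rec_prio" -gt "$holder_prio" ]; then
        echo "$recovered"   # preemptive: higher priority takes the VIP back
    else
        echo "$holder"      # non-preemptive: the current holder keeps it
    fi
}
```

With lb01 (priority 150) recovering while lb02 (priority 100) holds the VIP, `vip_owner_after_recovery preempt lb02 lb01 150 100` prints lb01, while the nopreempt variant prints lb02.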

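Common questions about high availability:

1. How is it decided which node is the master and which is the backup?

- MASTER (master node)

- BACKUP (backup node)

- priority (the master's priority must be higher than the backup's)

2. If the Master fails and the Backup takes over automatically, will the Master take the VIP back once it recovers?

- In preemptive mode, the MASTER takes the VIP back.

- In non-preemptive mode, both nodes are BACKUP with the extra parameter nopreempt, and the recovered node does not take the VIP back.

3. What happens if both servers believe they are the Master?

This is split brain: both machines hold the VIP and both consider themselves the master, which can make the website unreachable. Typical causes:

1. Network faults, such as a loose network cable on one server

2. A hardware fault that crashes a server

3. firewalld running on both master and backup, blocking the VRRP advertisements so neither node hears the other

Note:

Load balancers: LVS, HAProxy, nginx

1. If the company runs physical servers in its own data center, keepalived can be used for high availability.

2. If the company uses cloud servers (Alibaba Cloud, ...), keepalived cannot be used this way; use the cloud provider's load balancer (e.g. SLB) instead.

Enable the firewall and restart keepalived on the master, and both machines end up holding 10.0.0.3: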

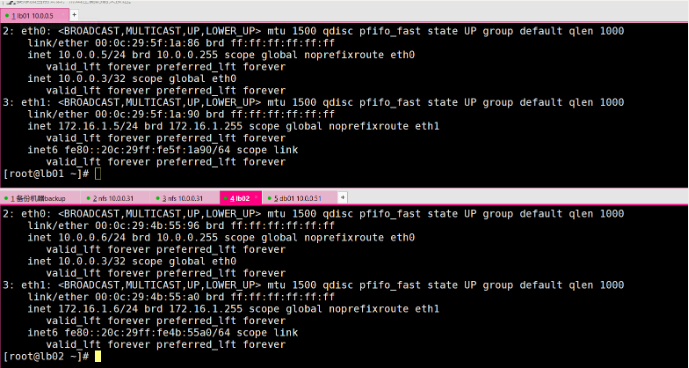
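Rather than disabling firewalld, the usual fix for cause 3 is to let VRRP through. VRRP is IP protocol 112 (listed as vrrp in /etc/protocols), and keepalived sends its advertisements to the multicast address 224.0.0.18 by default. The sketch below adds a firewalld rich rule for it and should be run on both lb01 and lb02; allow_vrrp and DRY_RUN are hypothetical names, and the rule is worth checking against your firewalld version.

```shell
#!/bin/sh
# Sketch: allow VRRP advertisements through firewalld.
# DRY_RUN=1 prints the commands instead of running them.
allow_vrrp() {
    run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then
        run="echo"
    fi
    $run firewall-cmd --permanent --add-rich-rule='rule protocol value="vrrp" accept' &&
        $run firewall-cmd --reload
}
```

After running `allow_vrrp` on both nodes, restarting keepalived on the master should leave 10.0.0.3 on only one machine.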

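# Fix: run a script on the backup node; if it finds 10.0.0.3 on the master and on itself at the same time, it stops keepalived on the backup

# Generate an SSH key pair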

[root@lb02 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:1JwxWufRc2FSIYQcyiEbZ3l1CjlTDbzkLISkKJo5ITs root@lb02

The key's randomart image is:

+---[RSA 2048]----+

| o.=Bo@O+*o|

| . OBo#.+O..|

|.. . . ooo* B..o |

|..= . . . + |

|E= S . |

| .. |

| |

| |

| |

+----[SHA256]-----+

# Copy the public key to 10.0.0.5 (lb01)

[root@lb02 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@10.0.0.5

# Write the check script on the backup node

[root@lb02 ~]# cat jcip.sh

#!/bin/sh

vip=10.0.0.3

lb01_ip=10.0.0.5

#while true;do

ping -c 2 $lb01_ip &>/dev/null

if [ $? -eq 0 ];then

lb01_vip_status=$(ssh $lb01_ip "ip add|grep $vip|wc -l")

lb02_vip_status=$(ip add|grep $vip|wc -l)

if [ $lb01_vip_status -eq 1 -a $lb02_vip_status -eq 1 ];then

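echo 'Both the master and the backup hold the VIP; stopping keepalived on the backup...'

systemctl stop keepalived

fi

else

echo 'Cannot reach the master node'

fi

#sleep 5

#done

# Run the script

[root@lb02 ~]# ip a|grep 10.0.0.3 && sh jcip.sh && ip a|grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

Both the master and the backup hold the VIP; stopping keepalived on the backup...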

[root@lb02 ~]#
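The commented-out while/sleep lines in jcip.sh hint at running the check continuously. Below is a sketch of a looping variant. The changes are my own choices, not the author's: the VIP is matched as "inet 10.0.0.3/" so that an address such as 10.0.0.30 cannot cause a false match, a failed ssh (BatchMode, 3-second timeout) replaces the separate ping as the "master unreachable" signal, and REMOTE_ADDRS/LOCAL_ADDRS are hypothetical overrides for dry runs.

```shell
#!/bin/sh
# Sketch: split-brain watchdog for the backup node (lb02).
VIP=${VIP:-10.0.0.3}
LB01_IP=${LB01_IP:-10.0.0.5}
REMOTE_ADDRS=${REMOTE_ADDRS:-"ssh -o BatchMode=yes -o ConnectTimeout=3 $LB01_IP ip addr"}
LOCAL_ADDRS=${LOCAL_ADDRS:-"ip addr"}

# reads "ip addr" output on stdin; succeeds if the VIP is configured
has_vip() {
    grep -qF "inet $VIP/"
}

# prints what the backup should do: "stop", "ok" or "unreachable"
split_brain_action() {
    remote=$($REMOTE_ADDRS 2>/dev/null) || { echo unreachable; return; }
    local_out=$($LOCAL_ADDRS)
    if printf '%s\n' "$remote" | has_vip && printf '%s\n' "$local_out" | has_vip; then
        echo stop
    else
        echo ok
    fi
}

watch_split_brain() {
    while true; do
        case $(split_brain_action) in
            stop)
                echo "both nodes hold $VIP; stopping keepalived on this backup"
                systemctl stop keepalived ;;
            unreachable)
                echo "cannot reach lb01 ($LB01_IP)" ;;
        esac
        sleep 5
    done
}
```

Start `watch_split_brain` on lb02 (for example under nohup) once the SSH key has been copied to lb01.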

浙公网安备 33010602011771号
