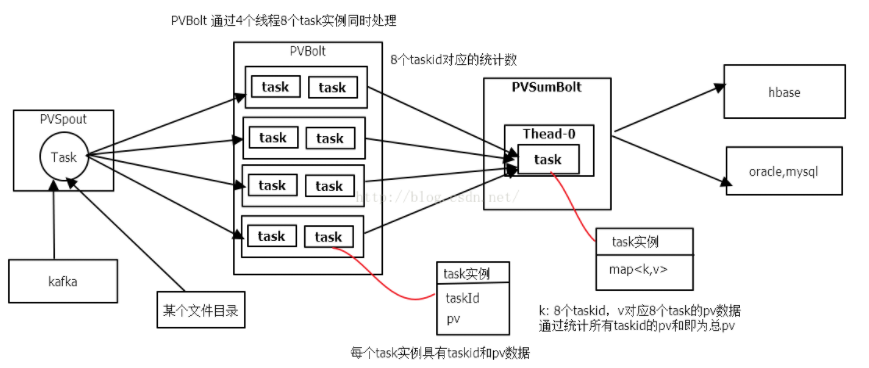

Counting website PV (page views) with Storm

1. Flow chart for counting PV
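The flow has three stages: one spout emits log lines, several PVBolt tasks each keep a local count, and a single PVSumBolt combines the latest count from every task. It can be simulated in plain Java to check that this local-then-global scheme gives the right total. This is a sketch without Storm. `PvFlowSketch`, `LocalCounter` and `run` are hypothetical names, and a seeded `Random` stands in for shuffle grouping.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Plain-Java simulation of the PV flow (hypothetical names, no Storm dependency).
class PvFlowSketch {

    /** Stands in for one PVBolt task: keeps its own local (partial) PV count. */
    static final class LocalCounter {
        final int taskId;
        long pv = 0;

        LocalCounter(int taskId) {
            this.taskId = taskId;
        }
    }

    /**
     * Sends each line to a randomly chosen local counter (mimicking shuffle grouping),
     * then totals the way PVSumBolt does: keep the latest count per task and sum them.
     */
    static long run(List<String> lines, int numTasks, long seed) {
        Random random = new Random(seed);
        List<LocalCounter> tasks = new ArrayList<>();
        for (int i = 0; i < numTasks; i++) {
            tasks.add(new LocalCounter(i));
        }
        Map<Integer, Long> latestByTask = new HashMap<>(); // state of the single summing task
        for (String line : lines) {
            LocalCounter task = tasks.get(random.nextInt(numTasks));
            // Roughly StringUtils.isNotBlank: skip null and whitespace-only lines
            if (line != null && !line.trim().isEmpty()) {
                task.pv++;
            }
            // Every processed line sends (taskId, cumulative pv) downstream
            latestByTask.put(task.taskId, task.pv);
        }
        long sum = 0;
        for (long pv : latestByTask.values()) {
            sum += pv;
        }
        return sum;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("GET /a", "", "GET /b", "GET /c", "   ");
        System.out.println(run(lines, 4, 42L)); // prints 3: three non-blank lines
    }
}
```

Whatever task each line lands on, every task's last report is its final local count, so the sum of the latest values equals the total number of non-blank lines.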

2. Storm code

Simulated data source (spout):

```java
import java.io.File;
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

import org.apache.commons.io.FileUtils;

import com.yun.redis.PropertyReader;

import backtype.storm.spout.SpoutOutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichSpout;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Values;
import backtype.storm.utils.Utils;

/**
 * Reads PV user-behavior log data in real time.
 * The data could come from a database, from Kafka, or from a file system; this demo reads log files.
 */
public class PVSpout extends BaseRichSpout {

    private static final long serialVersionUID = 1L;

    private SpoutOutputCollector collector;
    private Map stormConf;
    // Files already emitted, so nextTuple() does not re-read them on every call
    private Set<String> processedFiles;

    /**
     * Called once when the PVSpout is initialized.
     *
     * @param stormConf the Storm configuration for this spout
     * @param context   can be used to get the task ID of each task
     * @param collector used to emit tuples from this spout
     */
    @Override
    public void open(Map stormConf, TopologyContext context, SpoutOutputCollector collector) {
        this.collector = collector;
        this.stormConf = stormConf;
        this.processedFiles = new HashSet<String>();
    }

    /**
     * Called by Storm over and over in a loop, so it must not re-emit old data or block for long.
     */
    @Override
    public void nextTuple() {
        boolean emitted = false;
        try {
            // Location of the data source
            String dataDir = PropertyReader.getProperty("pv.properties", "data.dir");
            // All *.log files under dataDir, searched recursively
            Collection<File> listFiles = FileUtils.listFiles(new File(dataDir), new String[]{"log"}, true);
            for (File f : listFiles) {
                if (!processedFiles.add(f.getAbsolutePath())) {
                    continue; // already emitted in an earlier call
                }
                // Assumes UTF-8 log files
                List<String> readLines = FileUtils.readLines(f, "UTF-8");
                for (String line : readLines) {
                    this.collector.emit(new Values(line));
                    emitted = true;
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        if (!emitted) {
            Utils.sleep(100); // nothing new: back off briefly instead of busy-spinning
        }
    }

    /**
     * Declares the output schema: a single field, "line".
     */
    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declare(new Fields("line"));
    }
}
```
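Because Storm calls `nextTuple()` repeatedly, a spout that rescans the data directory and emits every file on each call sends the same lines again and again, inflating the PV. One way to read each file only once is to remember which files have been processed. The sketch below shows that idea using only `java.nio.file`, so it runs without Storm or commons-io; `LogDirReader` and `pollNewLines` are hypothetical names, and UTF-8 encoding is an assumption.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: scans a directory tree for *.log files and returns
// only the lines of files it has not read before.
class LogDirReader {
    private final Path dataDir;
    private final Set<Path> processed = new HashSet<>();

    LogDirReader(Path dataDir) {
        this.dataDir = dataDir;
    }

    /** Returns the lines of every *.log file not seen in an earlier call (recursive scan). */
    List<String> pollNewLines() throws IOException {
        List<Path> logFiles;
        try (Stream<Path> walk = Files.walk(dataDir)) {
            logFiles = walk.filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".log"))
                    .sorted()
                    .collect(Collectors.toList());
        }
        List<String> lines = new ArrayList<>();
        for (Path file : logFiles) {
            // Set.add returns false when the file was already processed
            if (processed.add(file)) {
                lines.addAll(Files.readAllLines(file, StandardCharsets.UTF_8));
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("pv-logs");
        Files.write(dir.resolve("a.log"), Arrays.asList("GET /index", "GET /about"), StandardCharsets.UTF_8);
        LogDirReader reader = new LogDirReader(dir);
        System.out.println(reader.pollNewLines().size()); // 2
        System.out.println(reader.pollNewLines().size()); // 0: a.log was already read
        Files.write(dir.resolve("b.log"), Arrays.asList("GET /cart"), StandardCharsets.UTF_8);
        System.out.println(reader.pollNewLines().size()); // 1: only b.log is new
    }
}
```

A set in memory is forgotten on restart; renaming or moving files after processing is a common alternative when that matters.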

First-level bolt (PVBolt):

```java
import java.util.Map;

import org.apache.commons.lang.StringUtils;

import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Tuple;
import backtype.storm.tuple.Values;

/**
 * Receives the lines emitted by PVSpout. PVTopology runs this bolt with multiple threads,
 * and each task reports the PV count it has processed so far.
 *
 * With multiple threads the PV can only be aggregated locally, not globally, so the local
 * results are sent to a single-threaded bolt (PVSumBolt) for the global total.
 */
public class PVBolt extends BaseRichBolt {

    private static final long serialVersionUID = 1L;

    private OutputCollector collector;
    private TopologyContext context;
    // Local PV count; every task is a separate PVBolt instance with its own counter
    private long pv = 0;

    /**
     * Called once when the instance is initialized.
     *
     * @param stormConf the Storm configuration for this bolt
     * @param context   information about this task's place within the topology,
     *                  including its task id, component id, inputs and outputs
     * @param collector used to emit tuples from this bolt
     */
    @Override
    public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {
        this.context = context;
        this.collector = collector;
    }

    /**
     * Processes a single input tuple.
     *
     * @param input the input tuple to be processed
     */
    @Override
    public void execute(Tuple input) {
        try {
            String line = input.getStringByField("line");
            if (StringUtils.isNotBlank(line)) {
                pv++;
            }
            //System.out.println(Thread.currentThread().getName() + "[" + Thread.currentThread().getId() + "]" + context.getThisTaskId() + "->" + pv);
            // Keying by thread ID only works when each thread runs exactly one task:
            //this.collector.emit(new Values(Thread.currentThread().getId(), pv));
            // The task ID is unique even when one thread runs one or more tasks
            this.collector.emit(new Values(context.getThisTaskId(), pv));
            this.collector.ack(input);
        } catch (Exception e) {
            e.printStackTrace();
            this.collector.fail(input);
        }
    }

    /**
     * Declares the output schema: the task ID and that task's cumulative PV.
     *
     * @param declarer used to declare output stream ids, output fields, and
     *                 whether or not each output stream is a direct stream
     */
    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declare(new Fields("taskId", "pv"));
    }
}
```
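PVBolt emits its running (cumulative) count together with its task ID, not a +1 increment per line. The downstream bolt therefore has to keep only the latest value per task; adding up every value it receives would count the same page views several times. A small illustration with made-up reports (the class and method names are hypothetical):

```java
import java.util.HashMap;
import java.util.Map;

// Illustration only: two ways of totaling (taskId, cumulativePv) reports.
class CumulativeCountDemo {

    /** Wrong for cumulative counts: adds every reported value. */
    static long sumAllReports(long[][] reports) {
        long sum = 0;
        for (long[] report : reports) {
            sum += report[1];
        }
        return sum;
    }

    /** Keeps only the latest value per task, then sums (the approach PVSumBolt takes). */
    static long sumLatestPerTask(long[][] reports) {
        Map<Long, Long> latest = new HashMap<>();
        for (long[] report : reports) {
            latest.put(report[0], report[1]); // overwrite the task's previous count
        }
        long sum = 0;
        for (long pv : latest.values()) {
            sum += pv;
        }
        return sum;
    }

    public static void main(String[] args) {
        // Task 1 processes 3 non-blank lines, task 2 processes 2: true PV = 5
        long[][] reports = {{1, 1}, {2, 1}, {1, 2}, {1, 3}, {2, 2}};
        System.out.println(sumAllReports(reports));    // 9 (over-counted)
        System.out.println(sumLatestPerTask(reports)); // 5
    }
}
```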

Second-level bolt (PVSumBolt):

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Map.Entry;

import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Tuple;

/**
 * Aggregates the results of the PVBolt tasks. It must run single-threaded:
 * with multiple threads a global total cannot be computed.
 */
public class PVSumBolt extends BaseRichBolt {

    private static final long serialVersionUID = 1L;

    private OutputCollector collector;
    // taskId -> latest cumulative PV reported by that PVBolt task;
    // the map ends up with one entry per PVBolt task
    private Map<Integer, Long> map = new HashMap<Integer, Long>();

    @Override
    public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {
        this.collector = collector;
    }

    @Override
    public void execute(Tuple input) {
        try {
            Integer taskId = input.getIntegerByField("taskId");
            Long pv = input.getLongByField("pv");
            map.put(taskId, pv);
            // Global total: sum the latest value of every task
            long sum = 0;
            for (Entry<Integer, Long> e : map.entrySet()) {
                sum += e.getValue();
            }
            System.out.println("Current time: " + System.currentTimeMillis() + ", PV total -> " + sum);
            this.collector.ack(input);
        } catch (Exception e) {
            e.printStackTrace();
            this.collector.fail(input);
        }
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        // Terminal bolt: emits nothing
    }
}
```
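Re-summing the whole map on every tuple is cheap when there are only a few PVBolt tasks. A possible alternative, shown here as a hypothetical variant rather than the author's code, keeps the total incrementally: when a task reports a new cumulative count, add the difference from that task's previous value. `IncrementalPvSum` and `update` are illustrative names, and the sketch leaves out the Storm plumbing.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical variant of PVSumBolt's aggregation logic, without Storm:
// the global total is updated in O(1) per report instead of re-summing the map.
class IncrementalPvSum {
    private final Map<Integer, Long> latestByTask = new HashMap<>();
    private long total = 0;

    /** Applies one (taskId, cumulative pv) report and returns the new global total. */
    long update(int taskId, long pv) {
        Long previous = latestByTask.put(taskId, pv); // null on the task's first report
        total += pv - (previous == null ? 0L : previous);
        return total;
    }

    public static void main(String[] args) {
        IncrementalPvSum sum = new IncrementalPvSum();
        sum.update(1, 1);
        sum.update(2, 1);
        sum.update(1, 2);
        sum.update(1, 3);
        System.out.println(sum.update(2, 2)); // 5
    }
}
```

The result matches re-summing the map after every report, as long as all reports for the topology arrive at this single instance.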

PVTopology main program:

```java
import java.util.HashMap;
import java.util.Map;

import backtype.storm.Config;
import backtype.storm.LocalCluster;
import backtype.storm.StormSubmitter;
import backtype.storm.topology.TopologyBuilder;

/**
 * Topology that counts PV in real time.
 */
public class PVTopology {

    public final static String SPOUT_ID = PVSpout.class.getSimpleName();
    public final static String PVBOLT_ID = PVBolt.class.getSimpleName();
    public final static String PVTOPOLOGY_ID = PVTopology.class.getSimpleName();
    public final static String PVSUMBOLT_ID = PVSumBolt.class.getSimpleName();

    public static void main(String[] args) throws Exception {
        TopologyBuilder builder = new TopologyBuilder();
        builder.setSpout(SPOUT_ID, new PVSpout(), 1);
        // 4 executors (threads) running 8 tasks, i.e. 2 PVBolt tasks per thread
        builder.setBolt(PVBOLT_ID, new PVBolt(), 4).setNumTasks(8).shuffleGrouping(SPOUT_ID);
        // Single-threaded global aggregation: globalGrouping sends every tuple to one task
        builder.setBolt(PVSUMBOLT_ID, new PVSumBolt(), 1).globalGrouping(PVBOLT_ID);

        Map<String, Object> conf = new HashMap<String, Object>();
        conf.put(Config.TOPOLOGY_RECEIVER_BUFFER_SIZE, 8);
        conf.put(Config.TOPOLOGY_TRANSFER_BUFFER_SIZE, 32);
        conf.put(Config.TOPOLOGY_EXECUTOR_RECEIVE_BUFFER_SIZE, 16384);
        conf.put(Config.TOPOLOGY_EXECUTOR_SEND_BUFFER_SIZE, 16384);

        if (args != null && args.length > 0) {
            // Any command-line argument: submit to a real Storm cluster
            StormSubmitter.submitTopology(PVTOPOLOGY_ID, conf, builder.createTopology());
        } else {
            // No arguments: run in-process for local testing
            LocalCluster cluster = new LocalCluster();
            cluster.submitTopology(PVTOPOLOGY_ID, conf, builder.createTopology());
        }
    }
}
```
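In `setBolt(PVBOLT_ID, new PVBolt(), 4).setNumTasks(8)`, the parallelism hint 4 is the number of executors (threads) and `setNumTasks(8)` the number of task instances, so each thread runs two PVBolt instances. That is why PVBolt keys its output by task ID rather than thread ID. The sketch below assumes an even, contiguous split of hypothetical task IDs 1..8 over 4 executors and gives every task the same final local count; keying by executor, as a stand-in for thread ID, loses half of the total. Storm's real task-ID assignment may differ in detail.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustration only: 8 tasks spread over 4 executors, and why keying local
// counts by thread loses data while keying by task ID does not.
class ExecutorTaskDemo {

    /** Splits task IDs 1..numTasks into numExecutors contiguous, near-equal groups (assumed layout). */
    static List<List<Integer>> assign(int numTasks, int numExecutors) {
        List<List<Integer>> executors = new ArrayList<>();
        int base = numTasks / numExecutors;
        int extra = numTasks % numExecutors;
        int nextTaskId = 1;
        for (int e = 0; e < numExecutors; e++) {
            int size = base + (e < extra ? 1 : 0);
            List<Integer> group = new ArrayList<>();
            for (int i = 0; i < size; i++) {
                group.add(nextTaskId++);
            }
            executors.add(group);
        }
        return executors;
    }

    /** Every task reports perTaskPv; returns {total keyed by task ID, total keyed by executor}. */
    static long[] totals(int numTasks, int numExecutors, long perTaskPv) {
        Map<Integer, Long> byTask = new HashMap<>();
        Map<Integer, Long> byExecutor = new HashMap<>(); // stands in for keying by thread ID
        List<List<Integer>> executors = assign(numTasks, numExecutors);
        for (int e = 0; e < executors.size(); e++) {
            for (int taskId : executors.get(e)) {
                byTask.put(taskId, perTaskPv);
                byExecutor.put(e, perTaskPv); // tasks on the same thread overwrite each other
            }
        }
        long taskSum = 0;
        for (long v : byTask.values()) {
            taskSum += v;
        }
        long executorSum = 0;
        for (long v : byExecutor.values()) {
            executorSum += v;
        }
        return new long[] {taskSum, executorSum};
    }

    public static void main(String[] args) {
        System.out.println(assign(8, 4)); // [[1, 2], [3, 4], [5, 6], [7, 8]]
        long[] t = totals(8, 4, 10);
        System.out.println(t[0] + " vs " + t[1]); // 80 vs 40
    }
}
```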

浙公网安备 33010602011771号
