Learning the FreeRTOS Source Code with STM32 and CubeMX: Message Queues


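Configuration in CubeMX (brief overview)

CubeMX offers two API interfaces, CMSIS_V1 and CMSIS_V2. Apart from the former not exposing event groups (event flags), the two are used in much the same way.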

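Queues

Storage layout:

Queue structure + data area

For a dynamically created queue, the data area immediately follows the queue structure in memory.

Definition of the queue structure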

typedef struct QueueDefinition
{
    int8_t *pcHead;    /*< Points to the beginning of the queue storage area. */
    int8_t *pcTail;    /*< Points to the byte at the end of the queue storage area. One more byte is allocated than necessary to store the queue items, this is used as a marker. */
    int8_t *pcWriteTo; /*< Points to the next free place in the storage area. */

    union /* Use of a union is an exception to the coding standard to ensure two mutually exclusive structure members don't appear simultaneously (wasting RAM). */
    {
        int8_t *pcReadFrom;               /*< Points to the last place that a queued item was read from when the structure is used as a queue. */
        UBaseType_t uxRecursiveCallCount; /*< Maintains a count of the number of times a recursive mutex has been recursively 'taken' when the structure is used as a mutex. */
    } u;

    List_t xTasksWaitingToSend;    /*< List of tasks that are blocked waiting to post onto this queue. Stored in priority order. */
    List_t xTasksWaitingToReceive; /*< List of tasks that are blocked waiting to read from this queue. Stored in priority order. */

    volatile UBaseType_t uxMessagesWaiting; /*< The number of items currently in the queue. */
    UBaseType_t uxLength;                   /*< The length of the queue defined as the number of items it will hold, not the number of bytes. */
    UBaseType_t uxItemSize;                 /*< The size of each item that the queue will hold. */

    volatile int8_t cRxLock; /*< Stores the number of items received from the queue (removed from the queue) while the queue was locked. Set to queueUNLOCKED when the queue is not locked. */
    volatile int8_t cTxLock; /*< Stores the number of items transmitted to the queue (added to the queue) while the queue was locked. Set to queueUNLOCKED when the queue is not locked. */

    #if( ( configSUPPORT_STATIC_ALLOCATION == 1 ) && ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) )
        uint8_t ucStaticallyAllocated; /*< Set to pdTRUE if the memory used by the queue was statically allocated to ensure no attempt is made to free the memory. */
    #endif

    #if ( configUSE_QUEUE_SETS == 1 )
        struct QueueDefinition *pxQueueSetContainer;
    #endif

    #if ( configUSE_TRACE_FACILITY == 1 )
        UBaseType_t uxQueueNumber;
        uint8_t ucQueueType;
    #endif

} xQUEUE;

This queue is very similar to the mailbox in RT-Thread. The difference is that each mail item in an RT-Thread mailbox must be a 32-bit integer, whereas the items in a FreeRTOS queue can be of a user-defined type.

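When a queue is created dynamically:

First, the item size (uxItemSize) is checked. If it is 0, no storage is allocated for queue items (this is how semaphores and mutexes use the queue).

Then the total size is allocated (queue structure + total size of the data items):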

pxNewQueue = ( Queue_t * ) pvPortMalloc( sizeof( Queue_t ) + xQueueSizeInBytes );

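Initializing the queue (again, this looks a lot like the mailbox in RT-Thread):

- Enter a critical section. This masks interrupts, including the tick interrupt, so no tick-driven rescheduling can happen. (On Cortex-M3/M4/M7 ports, interrupts with a priority above configMAX_SYSCALL_INTERRUPT_PRIORITY stay enabled.)

- Initialize the write pointer and the read pointer. An empty queue starts reading and writing at the same slot: pcWriteTo is set to pcHead, while pcReadFrom is set to the last slot because it is advanced before each read, so the first read wraps around to pcHead. Depending on uxItemSize, pcHead points either to the first item of the data area or, when uxItemSize is 0 (for example when used as a mutex, with no data area), back to the queue structure itself.

- Initialize the list of tasks waiting to send and the list of tasks waiting to receive.

- Exit the critical section (re-enable the interrupts).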
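The allocation and initialization steps above can be sketched in plain C that runs on a host PC. This is a simplified model under stated assumptions, not the kernel's code: `SketchQueue` and `sketch_queue_create` are hypothetical names, `malloc` stands in for `pvPortMalloc`, and the critical section, the two wait lists, and the lock counters are left out. The starting value of the read pointer follows my reading of the queue reset logic in queue.c.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical, simplified stand-in for Queue_t (not the real FreeRTOS type). */
typedef struct {
    int8_t *pcHead;            /* start of the storage area */
    int8_t *pcTail;            /* one past the end of the storage area */
    int8_t *pcWriteTo;         /* next slot to write */
    int8_t *pcReadFrom;        /* last slot that was read */
    size_t  uxMessagesWaiting; /* items currently stored */
    size_t  uxLength;          /* capacity in items */
    size_t  uxItemSize;        /* bytes per item, 0 for semaphore-like use */
} SketchQueue;

/* Allocate header and storage as one block, then initialise the pointers. */
SketchQueue *sketch_queue_create(size_t uxLength, size_t uxItemSize)
{
    assert(uxLength > 0);

    /* 0 bytes of storage when the queue carries no data. */
    size_t xQueueSizeInBytes = uxLength * uxItemSize;

    SketchQueue *q = malloc(sizeof(SketchQueue) + xQueueSizeInBytes);
    if (q == NULL) {
        return NULL;
    }

    if (uxItemSize == 0) {
        /* No data area: point pcHead at the structure itself. */
        q->pcHead = (int8_t *)q;
    } else {
        /* The data area starts immediately after the structure. */
        q->pcHead = (int8_t *)q + sizeof(SketchQueue);
    }

    q->uxLength = uxLength;
    q->uxItemSize = uxItemSize;

    /* The real kernel performs the following inside a critical section. */
    q->pcTail = q->pcHead + xQueueSizeInBytes;
    q->uxMessagesWaiting = 0;
    q->pcWriteTo = q->pcHead;
    /* The read pointer is advanced before each read, so start on the last slot. */
    q->pcReadFrom = q->pcHead + (uxLength - 1) * uxItemSize;
    return q;
}
```

Calling `sketch_queue_create(4, sizeof(uint32_t))` gives one heap block whose first `sizeof(SketchQueue)` bytes are the header and whose next 16 bytes are the data area. Keeping both in one block means a single free releases everything.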

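Read position = read pointer advanced by one item, wrapping back to the start when it passes the end of the storage area (the queue length, Length, is fixed at creation).

Write position = write pointer advanced by one item, wrapping in the same way.

So the queue is a circular array. Note that the two pointers alone cannot tell a full queue from an empty one, since they meet in both cases. FreeRTOS uses the item count instead: the queue is full when uxMessagesWaiting equals uxLength and empty when uxMessagesWaiting is 0.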
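To make the wrap-around concrete, here is a small host-side sketch of the circular buffer. It is a model only: `RingQueue`, `ring_init`, `ring_send`, and `ring_receive` are hypothetical names, the storage is a fixed array of three 32-bit items, and blocking and wait lists are ignored. It wraps by comparing against `pcTail` and tracks fullness with a counter, which is my understanding of how queue.c does it.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RING_LENGTH    3u
#define RING_ITEM_SIZE sizeof(uint32_t)

/* Hypothetical fixed-size model of the queue's data area and bookkeeping. */
typedef struct {
    int8_t  storage[RING_LENGTH * RING_ITEM_SIZE];
    int8_t *pcHead;
    int8_t *pcTail;
    int8_t *pcWriteTo;
    int8_t *pcReadFrom;
    size_t  uxMessagesWaiting;
} RingQueue;

void ring_init(RingQueue *q)
{
    assert(q != NULL);
    q->pcHead = q->storage;
    q->pcTail = q->storage + sizeof(q->storage);
    q->pcWriteTo = q->pcHead;
    /* Start on the last slot so that the first read wraps to pcHead. */
    q->pcReadFrom = q->pcTail - RING_ITEM_SIZE;
    q->uxMessagesWaiting = 0;
}

/* Copy one item in. Returns 1 on success, 0 if the queue is full. */
int ring_send(RingQueue *q, const void *item)
{
    if (q->uxMessagesWaiting == RING_LENGTH) {
        return 0; /* full: decided by the count, not by the pointers */
    }
    memcpy(q->pcWriteTo, item, RING_ITEM_SIZE);
    q->pcWriteTo += RING_ITEM_SIZE;
    if (q->pcWriteTo >= q->pcTail) {
        q->pcWriteTo = q->pcHead; /* wrap around */
    }
    q->uxMessagesWaiting++;
    return 1;
}

/* Copy one item out. Returns 1 on success, 0 if the queue is empty. */
int ring_receive(RingQueue *q, void *out)
{
    if (q->uxMessagesWaiting == 0) {
        return 0; /* empty */
    }
    q->pcReadFrom += RING_ITEM_SIZE; /* advance first, then read */
    if (q->pcReadFrom >= q->pcTail) {
        q->pcReadFrom = q->pcHead; /* wrap around */
    }
    memcpy(out, q->pcReadFrom, RING_ITEM_SIZE);
    q->uxMessagesWaiting--;
    return 1;
}
```

After three sends the write pointer is back at `pcHead`, exactly where it started, which is why the counter is needed to reject the fourth send.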

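Writing to a queue (writing to the tail)

BaseType_t xQueueSend( QueueHandle_t xQueue,
                       const void *pvItemToQueue,
                       TickType_t xTicksToWait );

Each successful write increments the number of items in the queue by 1.

This count is kept in the queue structure (uxMessagesWaiting, the number of items currently in the queue).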


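The last parameter is the timeout. If it is 0, the call does not wait and returns success or failure immediately.

When it is set to portMAX_DELAY (block until woken, with no timeout; this requires INCLUDE_vTaskSuspend to be 1):

If there is no free space when writing and the timeout is portMAX_DELAY, the task blocks until another task reads from the queue and wakes it:

- The task waiting to write is removed from its ready list and moved to the FreeRTOS blocked list.

- At the same time, the task is added to the queue's waiting-to-send list, that is, xTasksWaitingToSend.

- When another task reads data from the queue, it checks whether any task is on the waiting-to-send list (xTasksWaitingToSend). If so, the highest-priority waiting task is woken and moved from the FreeRTOS blocked list to the ready list for its priority.

If the queue has free space, the data is written directly.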
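The block-and-wake behaviour described above can be modelled in a few lines of host-side C. This is a toy model, not kernel code: `ModelTask`, `WaitList`, `block_on_send`, and `wake_one_sender` are hypothetical names, and an array kept in priority order stands in for the `List_t` that backs xTasksWaitingToSend. The scheduler and the ready lists are reduced to a state flag.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_WAITERS 4

typedef enum { TASK_READY, TASK_BLOCKED } TaskState;

typedef struct {
    const char *name;
    int         priority; /* larger number = higher priority, as in FreeRTOS */
    TaskState   state;
} ModelTask;

/* Stand-in for xTasksWaitingToSend: kept sorted, highest priority first. */
typedef struct {
    ModelTask *waiting[MAX_WAITERS];
    size_t     count;
} WaitList;

/* The queue is full: block the task and insert it in priority order. */
void block_on_send(WaitList *list, ModelTask *task)
{
    assert(list->count < MAX_WAITERS);

    /* Shift lower-priority entries back; equal priorities keep arrival order. */
    size_t i = list->count;
    while (i > 0 && list->waiting[i - 1]->priority < task->priority) {
        list->waiting[i] = list->waiting[i - 1];
        i--;
    }
    list->waiting[i] = task;
    list->count++;
    task->state = TASK_BLOCKED;
}

/* A receive freed one slot: wake the highest-priority waiting sender, if any. */
ModelTask *wake_one_sender(WaitList *list)
{
    if (list->count == 0) {
        return NULL; /* nobody was waiting to send */
    }
    ModelTask *woken = list->waiting[0];
    for (size_t i = 1; i < list->count; i++) {
        list->waiting[i - 1] = list->waiting[i];
    }
    list->count--;
    woken->state = TASK_READY;
    return woken;
}
```

If a priority-1 task and then a priority-3 task both block on a full queue, the first receive wakes the priority-3 task even though it arrived later. Only one sender is woken per receive, because only one slot was freed.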

Reading from a queue

BaseType_t xQueueReceive( QueueHandle_t xQueue, void * const pvBuffer, TickType_t xTicksToWait )
{
    BaseType_t xEntryTimeSet = pdFALSE;
    TimeOut_t xTimeOut;
    Queue_t * const pxQueue = ( Queue_t * ) xQueue;

    /* Check the pointer is not NULL. */
    configASSERT( ( pxQueue ) );

    /* The buffer into which data is received can only be NULL if the data size
    is zero (so no data is copied into the buffer). */
    configASSERT( !( ( ( pvBuffer ) == NULL ) && ( ( pxQueue )->uxItemSize != ( UBaseType_t ) 0U ) ) );

    /* Cannot block if the scheduler is suspended. */
    #if ( ( INCLUDE_xTaskGetSchedulerState == 1 ) || ( configUSE_TIMERS == 1 ) )
    {
        configASSERT( !( ( xTaskGetSchedulerState() == taskSCHEDULER_SUSPENDED ) && ( xTicksToWait != 0 ) ) );
    }
    #endif

    /* This function relaxes the coding standard somewhat to allow return
    statements within the function itself. This is done in the interest
    of execution time efficiency. */
    for( ;; )
    {
        taskENTER_CRITICAL();
        {
            const UBaseType_t uxMessagesWaiting = pxQueue->uxMessagesWaiting;

            /* Is there data in the queue now? To be running the calling task
            must be the highest priority task wanting to access the queue. */
            if( uxMessagesWaiting > ( UBaseType_t ) 0 )
            {
                /* Data available, remove one item. */
                prvCopyDataFromQueue( pxQueue, pvBuffer );
                traceQUEUE_RECEIVE( pxQueue );
                pxQueue->uxMessagesWaiting = uxMessagesWaiting - ( UBaseType_t ) 1;

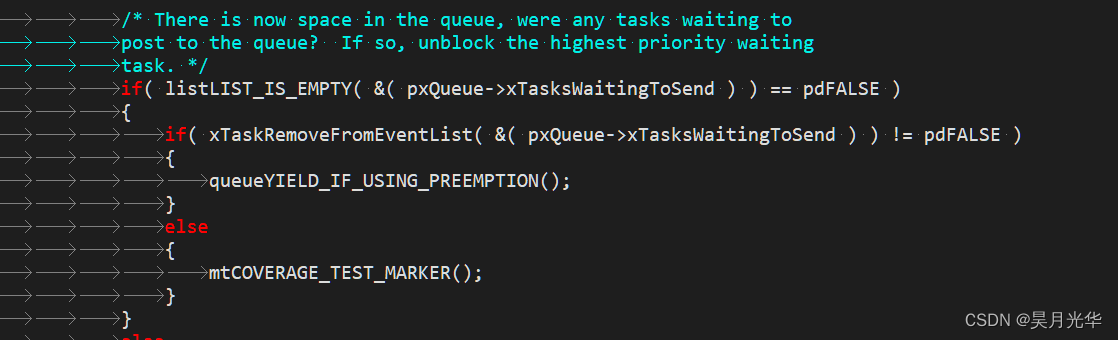

                /* There is now space in the queue, were any tasks waiting to
                post to the queue? If so, unblock the highest priority waiting
                task. */
                if( listLIST_IS_EMPTY( &( pxQueue->xTasksWaitingToSend ) ) == pdFALSE )
                {
                    if( xTaskRemoveFromEventList( &( pxQueue->xTasksWaitingToSend ) ) != pdFALSE )
                    {
                        queueYIELD_IF_USING_PREEMPTION();
                    }
                    else
                    {
                        mtCOVERAGE_TEST_MARKER();
                    }
                }
                else
                {
                    mtCOVERAGE_TEST_MARKER();
                }

                taskEXIT_CRITICAL();
                return pdPASS;
            }
            else
            {
                if( xTicksToWait == ( TickType_t ) 0 )
                {
                    /* The queue was empty and no block time is specified (or
                    the block time has expired) so leave now. */
                    taskEXIT_CRITICAL();
                    traceQUEUE_RECEIVE_FAILED( pxQueue );
                    return errQUEUE_EMPTY;
                }
                else if( xEntryTimeSet == pdFALSE )
                {
                    /* The queue was empty and a block time was specified so
                    configure the timeout structure. */
                    vTaskInternalSetTimeOutState( &xTimeOut );
                    xEntryTimeSet = pdTRUE;
                }
                else
                {
                    /* Entry time was already set. */
                    mtCOVERAGE_TEST_MARKER();
                }
            }
        }
        taskEXIT_CRITICAL();

        /* Interrupts and other tasks can send to and receive from the queue
        now the critical section has been exited. */

        vTaskSuspendAll();
        prvLockQueue( pxQueue );

        /* Update the timeout state to see if it has expired yet. */
        if( xTaskCheckForTimeOut( &xTimeOut, &xTicksToWait ) == pdFALSE )
        {
            /* The timeout has not expired. If the queue is still empty place
            the task on the list of tasks waiting to receive from the queue. */
            if( prvIsQueueEmpty( pxQueue ) != pdFALSE )
            {
                traceBLOCKING_ON_QUEUE_RECEIVE( pxQueue );
                vTaskPlaceOnEventList( &( pxQueue->xTasksWaitingToReceive ), xTicksToWait );
                prvUnlockQueue( pxQueue );
                if( xTaskResumeAll() == pdFALSE )
                {
                    portYIELD_WITHIN_API();
                }
                else
                {
                    mtCOVERAGE_TEST_MARKER();
                }
            }
            else
            {
                /* The queue contains data again. Loop back to try and read the
                data. */
                prvUnlockQueue( pxQueue );
                ( void ) xTaskResumeAll();
            }
        }
        else
        {
            /* Timed out. If there is no data in the queue exit, otherwise loop
            back and attempt to read the data. */
            prvUnlockQueue( pxQueue );
            ( void ) xTaskResumeAll();

            if( prvIsQueueEmpty( pxQueue ) != pdFALSE )
            {
                traceQUEUE_RECEIVE_FAILED( pxQueue );
                return errQUEUE_EMPTY;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
    }

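}

Each successful read decrements the number of items in the queue by 1, mirroring what happens on a write.

BaseType_t xQueueReceive( QueueHandle_t xQueue,
                          void * const pvBuffer,
                          TickType_t xTicksToWait );

The last parameter is the timeout. If it is 0, the call does not wait and returns success or failure immediately.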

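When it is set to portMAX_DELAY (block until woken, with no timeout):

If there is no data to read and the timeout is portMAX_DELAY, the task blocks until some task writes data to the queue.

- The task waiting to read is removed from its ready list and moved to the blocked list.

- At the same time, the task is added to the queue's waiting-to-receive list, that is, xTasksWaitingToReceive.

- When another task writes data so that the queue is no longer empty, it checks whether any task is on the waiting-to-receive list (xTasksWaitingToReceive). If so, the highest-priority waiting task is woken and removed from that list, and it moves from the blocked list to the ready list for its priority.

If the queue already holds data, the data is copied out directly, the item count is decremented by 1, and the call returns.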

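When a specific timeout is given for a queue write or read, a task blocked on that read or write is generally woken in one of two ways:

- Within the timeout: another task writes to or reads from the queue and wakes it. (A blocked reader is woken by a write; a blocked writer is woken by a read.)

- At the timeout: the tick interrupt finds that the blocked task's wake-up time has been reached, removes the task from the blocked list, and adds it to the ready list for its priority.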
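The two wake-up paths can be illustrated with a minimal host-side model. Everything here is hypothetical (`BlockedTask`, `block_with_timeout`, `on_queue_event`, `on_tick`), and it makes one simplifying assumption that the real kernel does not: the tick counter never overflows, so a plain comparison against the wake-up tick is enough.

```c
#include <assert.h>
#include <stdint.h>

typedef enum { WAKE_NONE, WAKE_BY_QUEUE_EVENT, WAKE_BY_TIMEOUT } WakeReason;

/* Hypothetical record for one task blocked on a queue with a timeout. */
typedef struct {
    int        blocked;   /* 1 while the task sits on the blocked list */
    uint32_t   wake_tick; /* tick count at which the timeout expires */
    WakeReason reason;    /* why the task was last woken */
} BlockedTask;

/* Block the task at tick 'now' for at most 'ticks_to_wait' ticks. */
void block_with_timeout(BlockedTask *t, uint32_t now, uint32_t ticks_to_wait)
{
    assert(ticks_to_wait > 0); /* a timeout of 0 never blocks */
    t->blocked = 1;
    t->wake_tick = now + ticks_to_wait; /* assumption: no counter overflow */
    t->reason = WAKE_NONE;
}

/* Path 1: another task performed the opposite queue operation in time. */
void on_queue_event(BlockedTask *t)
{
    if (t->blocked) {
        t->blocked = 0;
        t->reason = WAKE_BY_QUEUE_EVENT;
    }
}

/* Path 2: called from the model's tick handler with the new tick count. */
void on_tick(BlockedTask *t, uint32_t now)
{
    if (t->blocked && now >= t->wake_tick) {
        t->blocked = 0;
        t->reason = WAKE_BY_TIMEOUT;
    }
}
```

A task blocked at tick 100 for 10 ticks stays blocked through tick 109. It is woken by whichever comes first: a call to `on_queue_event`, or `on_tick` at tick 110. In the real API the caller tells the two apart by the return value: pdPASS after a queue event, errQUEUE_EMPTY or errQUEUE_FULL after a timeout.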

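Summary: every queue write and every queue read checks whether a task is blocked waiting for the opposite operation and, if so, wakes it. If a timeout was set and no such wake-up arrives in time, the tick interrupt wakes the task when the timeout expires.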
