Building a K8S cluster with 1 master and 2 nodes, plus Harbor

Deployment environment:

master and node: CentOS 7 virtual machines

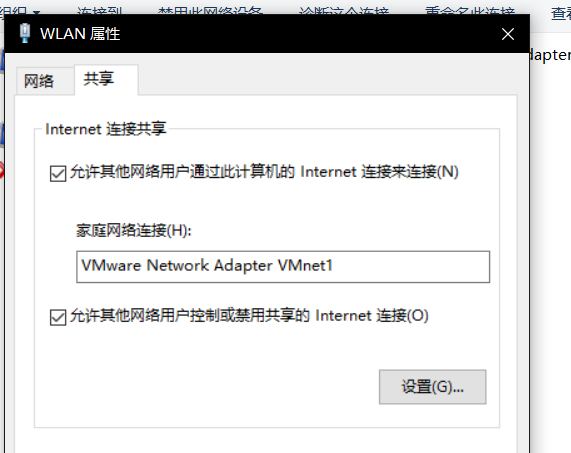

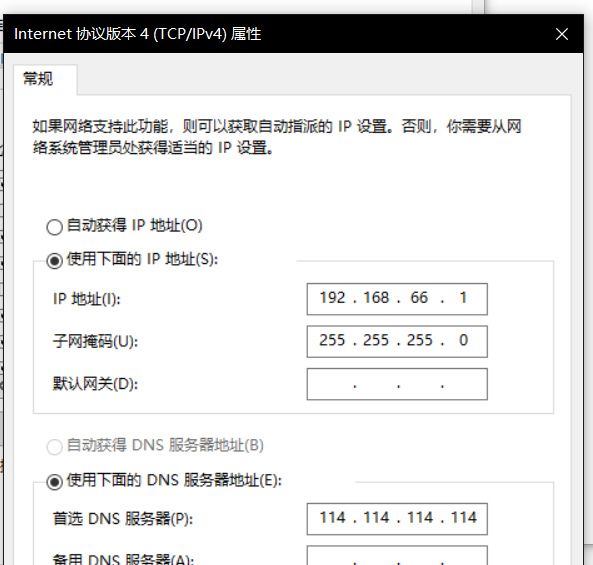

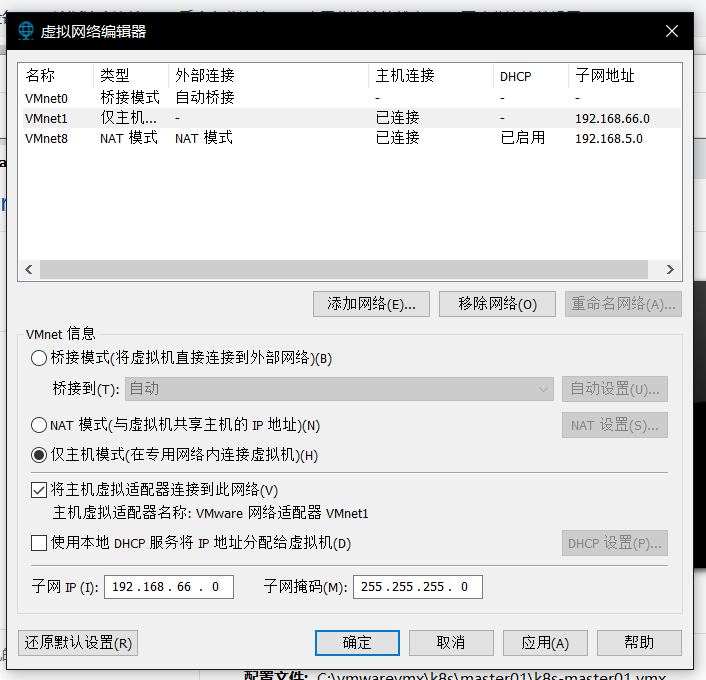

Networking: the host's local network adapter is shared to VMnet1 (host-only), and the virtual machines use VMnet1.

Files to prepare:

CentOS-7-x86_64-Minimal-1810.iso

kubeadm-basic.images.tar.gz

Network adapter configuration:

VMnet1 configuration:

VMware network configuration:

Disable DHCP and change the subnet to 192.168.66.0

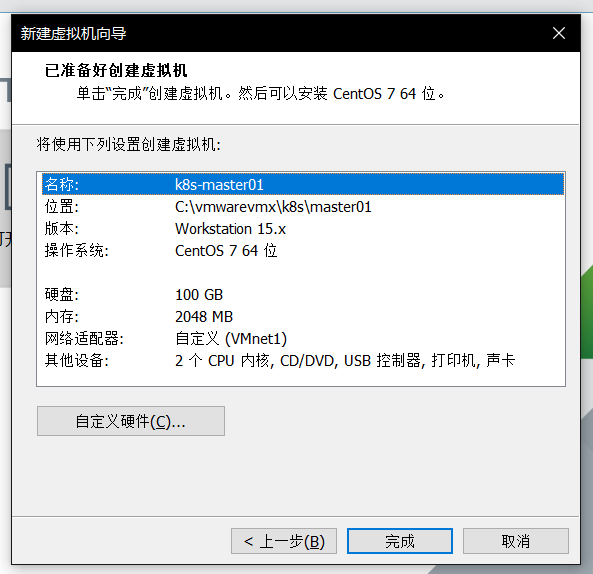

master and node virtual machine configuration:

The installation process is skipped here: minimal install, all defaults, and set the root password.

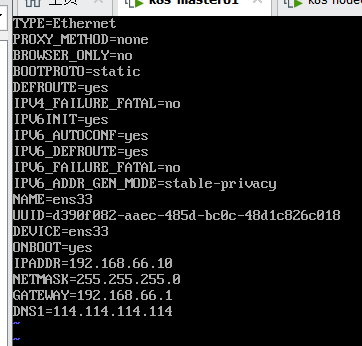

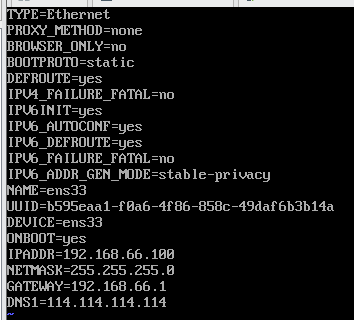

master01 network configuration

node01 192.168.66.20

node02 192.168.66.21

Set the hostnames:

On master01, node01, and node02 respectively:

hostnamectl set-hostname master01

hostnamectl set-hostname node01

hostnamectl set-hostname node02

Configuration on every node:

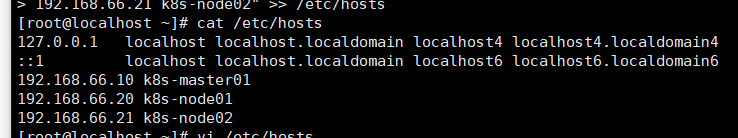

hosts:

echo "192.168.66.10 master01

192.168.66.20 node01

192.168.66.21 node02" >> /etc/hosts
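
Running the echo above twice appends duplicate entries. A minimal sketch of an idempotent variant follows; the helper name add_host and the HOSTS_FILE override are hypothetical and exist only so the logic can be tried on a scratch file first.

```shell
# Append "IP name" to a hosts file only when the name is not already listed.
# HOSTS_FILE is a hypothetical override; it defaults to /etc/hosts.
add_host() {
    ip="$1"; name="$2"; file="${HOSTS_FILE:-/etc/hosts}"
    # Look for an exact match of the name in any field after the IP column
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit !found }' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root on each node):
# add_host 192.168.66.10 master01
# add_host 192.168.66.20 node01
# add_host 192.168.66.21 node02
```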

Install the dependency packages:

yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

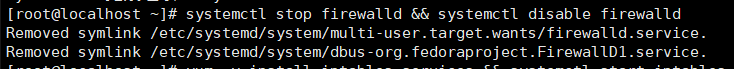

Switch the firewall to iptables and set empty rules

systemctl stop firewalld && systemctl disable firewalld

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

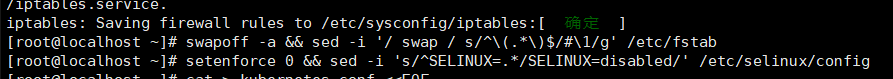

Disable swap

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
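
To see what the sed expression does before it touches the real /etc/fstab, it can be run against a copy. This is only a sketch: the function name comment_out_swap is hypothetical, and it assumes GNU sed (for -i), which is what CentOS 7 ships.

```shell
# Comment out every line containing " swap " in an fstab-style file.
# Uses the same sed expression as the command above; $1 is the file to edit.
comment_out_swap() {
    sed -i '/ swap / s/^\(.*\)$/#\1/g' "$1"
}

# Try it on a copy first and inspect the difference:
# cp /etc/fstab /tmp/fstab.test
# comment_out_swap /tmp/fstab.test
# diff /etc/fstab /tmp/fstab.test
```

Note that running it a second time adds another leading # to the same lines, which is harmless but untidy.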

Disable SELinux

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

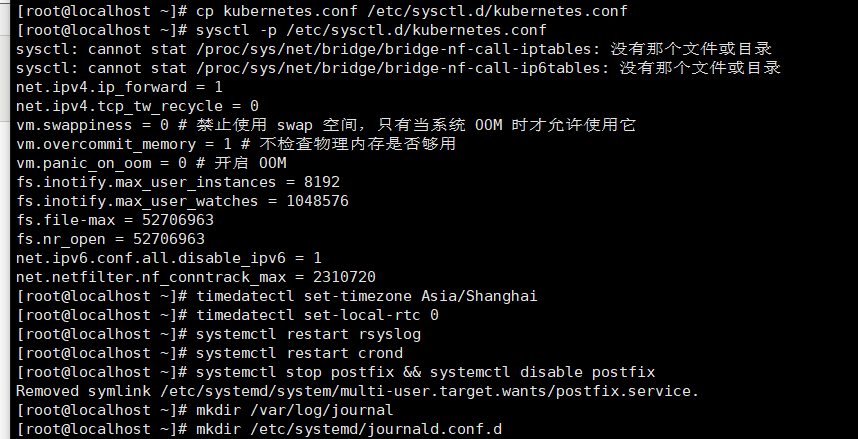

Tune kernel parameters for K8S

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

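# Avoid using swap space; it is only allowed when the system is about to run out of memory (OOM)

vm.swappiness=0

# Do not check whether enough physical memory is available

vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer run

vm.panic_on_oom=0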

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf
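
One way to confirm that the parameters took effect is to compare the file with the live kernel values. The following is a minimal sketch; the helper names sysctl_pairs and check_sysctl are hypothetical and not part of any tool.

```shell
# Print "key value" pairs from a sysctl-style file,
# dropping blank lines, full-line comments, and trailing comments.
sysctl_pairs() {
    sed -e 's/[[:space:]]*#.*$//' -e '/^[[:space:]]*$/d' "$1" |
        awk -F= '{ gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2); print $1, $2 }'
}

# Report every key whose live value differs from the value in the file.
check_sysctl() {
    sysctl_pairs "$1" | while read -r key want; do
        have=$(sysctl -n "$key" 2>/dev/null)
        [ "$have" = "$want" ] || echo "MISMATCH $key: want=$want have=${have:-unset}"
    done
}

# Usage (after sysctl -p):
# check_sysctl /etc/sysctl.d/kubernetes.conf
```

The net.bridge.* keys only exist once the br_netfilter module is loaded, so a mismatch reported for them before that step is expected.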

# Set the system time zone to Asia/Shanghai

timedatectl set-timezone Asia/Shanghai

# Write the current UTC time to the hardware clock

timedatectl set-local-rtc 0

# Restart the services that depend on the system time

systemctl restart rsyslog

systemctl restart crond

Stop services the system does not need

systemctl stop postfix && systemctl disable postfix

Configure rsyslogd and systemd journald

mkdir /var/log/journal

# /var/log/journal is the directory where logs are stored persistently

mkdir /etc/systemd/journald.conf.d

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

# Persist logs to disk

Storage=persistent

# Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Maximum disk usage: 10G

SystemMaxUse=10G

# Maximum size of a single log file: 200M

SystemMaxFileSize=200M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

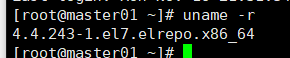

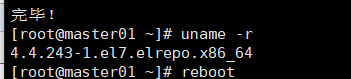

uname -r

systemctl restart systemd-journald

Upgrade the system kernel to 4.4

The 3.10.x kernel that ships with CentOS 7.x has some bugs that make Docker and Kubernetes unstable, so upgrade it, for example:

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# After installation, check that the menuentry for the new kernel in /boot/grub2/grub.cfg contains an initrd16 line; if it does not, install again!

yum --enablerepo=elrepo-kernel install -y kernel-lt

cat /etc/grub2.cfg

# Boot from the new kernel by default

grub2-set-default 'CentOS Linux (4.4.243-1.el7.elrepo.x86_64) 7 (Core)'

reboot

uname -r

The upgrade succeeded
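
Instead of reading the uname -r output by eye after each reboot, the version can be checked in a script. A minimal sketch, assuming a hypothetical helper name kernel_at_least:

```shell
# Succeed when a kernel release string is at least MAJOR.MINOR.
# $1 = release string (for example the output of uname -r), $2 = major, $3 = minor
kernel_at_least() {
    release="$1"; want_major="$2"; want_minor="$3"
    major=${release%%.*}     # text before the first dot
    rest=${release#*.}       # text after the first dot
    minor=${rest%%.*}        # text before the next dot
    [ "$major" -gt "$want_major" ] ||
        { [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]; }
}

# Usage:
# kernel_at_least "$(uname -r)" 4 4 && echo "kernel is new enough" || echo "still on the old kernel"
```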

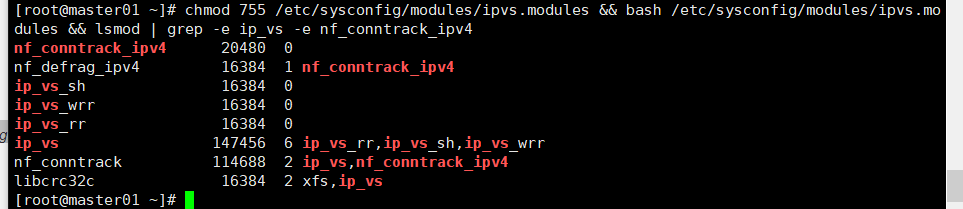

Prerequisites for enabling ipvs in kube-proxy

modprobe br_netfilter

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
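
The grep above prints whatever matched, which makes it easy to overlook a module that failed to load. The sketch below lists only the missing ones; the function name missing_modules is hypothetical, and it reads lsmod output on stdin so it can be tried on saved output as well.

```shell
# Print each required module (given as arguments) that does not appear
# in the lsmod-style listing read from stdin.
missing_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')   # first column, skipping the header line
    for mod in "$@"; do
        echo "$loaded" | grep -qx -- "$mod" || echo "$mod"
    done
}

# Usage:
# lsmod | missing_modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```

Empty output means every listed module is loaded.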

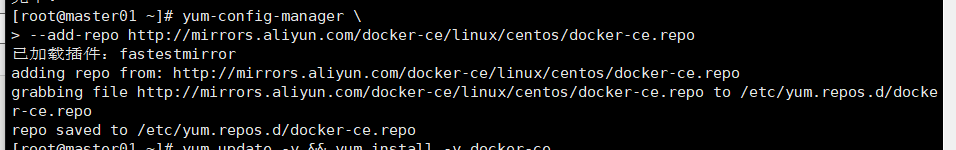

Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum update -y && yum install -y docker-ce

uname -r

reboot

uname -r

grub2-set-default 'CentOS Linux (4.4.243-1.el7.elrepo.x86_64) 7 (Core)'

reboot

uname -r

systemctl start docker

systemctl enable docker

## Create the /etc/docker directory

#mkdir /etc/docker

# Configure the daemon

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF

mkdir -p /etc/systemd/system/docker.service.d

# Restart the Docker service

systemctl daemon-reload && systemctl restart docker && systemctl enable docker

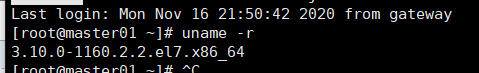

Install kubeadm (master and worker setup)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum -y install kubeadm-1.15.1 kubectl-1.15.1 kubelet-1.15.1

systemctl enable kubelet.service

Upload kubeadm-basic.images.tar.gz to /root

vi /root/load-images.sh

#!/bin/bash

cd /root

tar xzvf kubeadm-basic.images.tar.gz

ls /root/kubeadm-basic.images > /tmp/image-list.txt

cd /root/kubeadm-basic.images

for i in $( cat /tmp/image-list.txt )

do

docker load -i $i

done

rm -rf /tmp/image-list.txt

scp -r kubeadm-basic.images.tar.gz load-images.sh root@node01:/root/

scp -r kubeadm-basic.images.tar.gz load-images.sh root@node02:/root/

sh load-images.sh
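
The loop in load-images.sh can also be written without the temporary list file. This is a sketch of one possible variant rather than the script used above; the function name load_images and the IMAGE_LOADER override (used here only for dry runs) are hypothetical.

```shell
# Load every image archive found in a directory.
# IMAGE_LOADER is a hypothetical override for dry runs; the default is "docker load -i".
load_images() {
    dir="$1"
    for archive in "$dir"/*; do
        [ -f "$archive" ] || continue                  # skip when the glob matched nothing
        ${IMAGE_LOADER:-docker load -i} "$archive" || return 1
    done
}

# Usage:
# cd /root && tar xzvf kubeadm-basic.images.tar.gz
# IMAGE_LOADER=echo load_images /root/kubeadm-basic.images   # dry run, prints the archive paths
# load_images /root/kubeadm-basic.images                     # real run
```

Stopping at the first failed load makes a corrupt archive visible immediately instead of surfacing later as a missing image during kubeadm init.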

Initialize the master node

kubeadm config print init-defaults > kubeadm-config.yaml

Edit kubeadm-config.yaml so that it reads as follows:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.66.10
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.15.1
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

Join the master node and the remaining worker nodes

Just run the join command from the installation log

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

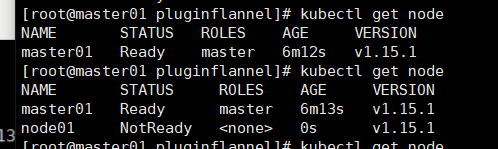

kubectl get node

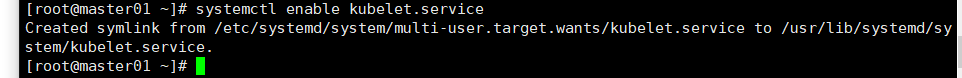

mkdir -p install-k8s/core/pluginflannel

mv kubeadm-init.log kubeadm-config.yaml install-k8s/core/

cd install-k8s/core/pluginflannel

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

echo "151.101.76.133 raw.githubusercontent.com" >> /etc/hosts

kubectl create -f kube-flannel.yml

kubectl get pod -n kube-system

Wait

kubectl get node

cat /root/install-k8s/core/kubeadm-init.log

Copy the last command in the log and run it on the other nodes

kubeadm join 192.168.66.10:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ad7a47f32e31817c0544708e380ddf55909542517280ac0abc74aac378795423
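
Copying the command by hand is easy to get wrong because it spans two lines. The sketch below pulls the last kubeadm join command out of the saved log and joins the continuation lines into one; the function name join_command is hypothetical, and it assumes the log has the layout shown above (a line starting with "kubeadm join" and ending in a backslash).

```shell
# Print the last "kubeadm join" command found in a kubeadm init log as a single line.
join_command() {
    awk '
        /^kubeadm join/ { cmd = ""; grab = 1 }       # a new command starts; keep only the last one
        grab {
            line = $0
            cont = sub(/[[:space:]]*\\$/, "", line)  # strip a trailing backslash, remember if it was there
            sub(/^[[:space:]]+/, "", line)           # strip the indentation of continuation lines
            cmd = (cmd == "" ? line : cmd " " line)
            if (!cont) grab = 0                      # no backslash: the command is complete
        }
        END { print cmd }
    ' "$1"
}

# Usage on the master:
# join_command /root/install-k8s/core/kubeadm-init.log
```

The token in the config has a ttl of 24h, so for nodes added later a fresh command can be generated on the master with kubeadm token create --print-join-command.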

kubectl get node

kubectl get pod -n kube-system

kubectl get pod -n kube-system -o wide

Wait until all pods are Running

kubectl get pod -n kube-system -o wide -w
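
Rather than watching the list with -w, the wait can be scripted. A minimal sketch, assuming the default kubectl get pod column layout (NAME, READY, STATUS, ...); the function name pods_not_ready is hypothetical.

```shell
# Print the names of pods whose STATUS column is neither Running nor Completed.
# Reads the output of "kubectl get pod" on stdin.
pods_not_ready() {
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage: poll every 5 seconds until nothing is left
# while [ -n "$(kubectl get pod -n kube-system | pods_not_ready)" ]; do
#     sleep 5
# done
```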

https://www.bilibili.com/video/BV1w4411y7Go?p=10

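The hostnames in the screenshots are inconsistent because the earlier test environment was on a laptop and the current one is on a desktop.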

+Harbor:

Hostname: hostnamectl set-hostname hub

The hub host and the cluster nodes must add each other to /etc/hosts

The rest of the configuration is the same; hostname: hub

IP: 192.168.66.100

yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

systemctl stop firewalld && systemctl disable firewalld

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

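# Avoid using swap space; it is only allowed when the system is about to run out of memory (OOM)

vm.swappiness=0

# Do not check whether enough physical memory is available

vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer run

vm.panic_on_oom=0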

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

systemctl stop postfix && systemctl disable postfix

mkdir /var/log/journal

mkdir /etc/systemd/journald.conf.d

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

Storage=persistent

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

SystemMaxUse=10G

SystemMaxFileSize=200M

MaxRetentionSec=2week

ForwardToSyslog=no

EOF

systemctl restart systemd-journald

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum --enablerepo=elrepo-kernel install -y kernel-lt

cat /etc/grub2.cfg | grep "4.4"

grub2-set-default 'CentOS Linux (4.4.243-1.el7.elrepo.x86_64) 7 (Core)'

uname -r

reboot

uname -r

modprobe br_netfilter

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum update -y && yum install -y docker-ce

reboot

uname -r

grub2-set-default 'CentOS Linux (4.4.243-1.el7.elrepo.x86_64) 7 (Core)' && reboot

uname -r

systemctl start docker

systemctl enable docker

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"insecure-registries": ["https://hub.yyq.com"]

}

EOF

The insecure-registries entry must be added on every node

systemctl restart docker

Upload docker-compose

harbor-offline-installer-v1.2.0.tgz

yum -y install lrzsz

mv docker-compose /usr/local/bin/

chmod a+x /usr/local/bin/docker-compose

tar xzvf harbor-offline-installer-v1.2.0.tgz

mv harbor /usr/local

cd /usr/local/harbor

vi harbor.cfg

hostname = hub.yyq.com

ui_url_protocol = https

db_password = root123

ssl_cert = /data/cert/server.crt

ssl_cert_key = /data/cert/server.key

clair_db_password = password

harbor_admin_password = Harbor12345

mkdir -p /data/cert/

cd /data/cert/

Create the HTTPS certificate and set permissions on the related directory

openssl genrsa -des3 -out server.key 2048

openssl req -new -key server.key -out server.csr

cp server.key server.key.org

openssl rsa -in server.key.org -out server.key

openssl x509 -req -days 365 -in server.csr -signkey server.key -out server.crt
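
The commands above prompt for a passphrase and for the certificate fields. For a test registry, a self-signed certificate can also be generated in one non-interactive step. This is a sketch of an alternative, not the procedure used above; the function name make_self_signed_cert is hypothetical, and the certificate carries only a CN, which is acceptable here because the nodes list the registry under insecure-registries.

```shell
# Create an unencrypted 2048-bit RSA key and a self-signed certificate valid for 365 days.
# $1 = common name (the registry hostname), $2 = output directory
make_self_signed_cert() {
    cn="$1"; dir="$2"
    mkdir -p "$dir" &&
    openssl req -x509 -newkey rsa:2048 -nodes \
        -keyout "$dir/server.key" -out "$dir/server.crt" \
        -days 365 -subj "/CN=$cn" 2>/dev/null
}

# Usage:
# make_self_signed_cert hub.yyq.com /data/cert
# openssl x509 -in /data/cert/server.crt -noout -subject -dates
```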

chmod a+x *

cd -

ls

./install.sh

docker ps -a

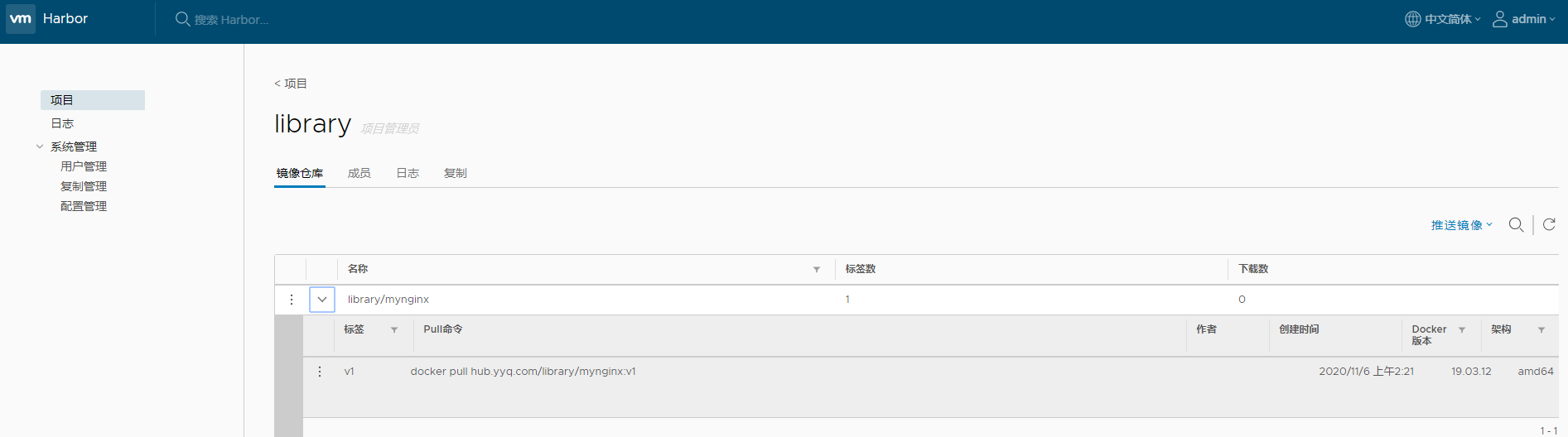

Access hub.yyq.com from a browser on the PC

Access it from a node

docker login https://hub.yyq.com

Pull any image

docker run -d --name nginx01 -p 3344:80 nginx

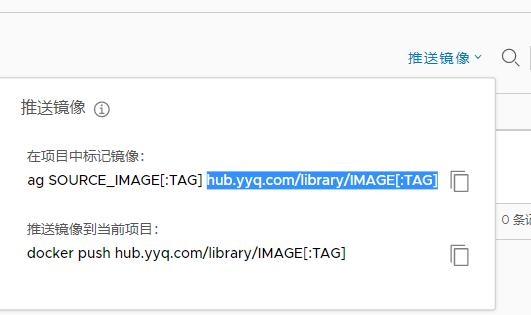

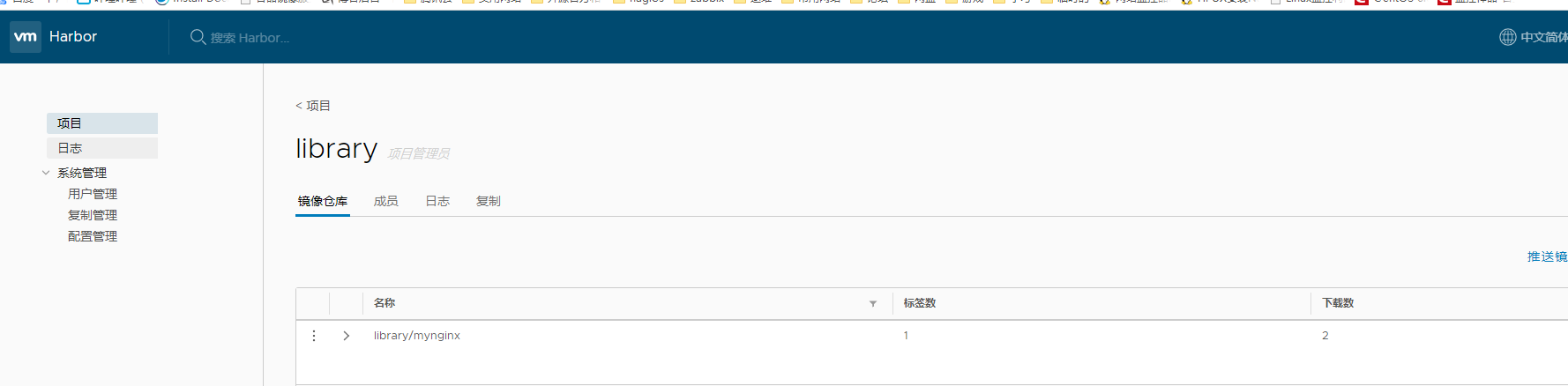

docker tag nginx hub.yyq.com/library/mynginx:v1

[root@k8s-node01 ~]# docker push hub.yyq.com/library/mynginx:v1

The push refers to repository [hub.yyq.com/library/mynginx]

7b5417cae114: Pushed

aee208b6ccfb: Pushed

2f57e21e4365: Pushed

2baf69a23d7a: Pushed

d0fe97fa8b8c: Pushed

v1: digest: sha256:34f3f875e745861ff8a37552ed7eb4b673544d2c56c7cc58f9a9bec5b4b3530e size: 1362

Test passed

docker stop fb469ac8ff40

docker rmi -f hub.yyq.com/library/mynginx:v1

docker rmi -f nginx

Further testing:

On the master node

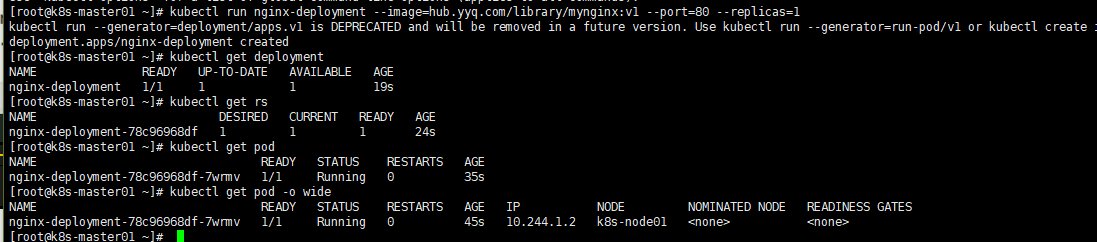

kubectl run nginx-deployment --image=hub.yyq.com/library/mynginx:v1 --port=80 --replicas=1

kubectl get deployment

kubectl get rs

kubectl get pod

kubectl get pod -o wide

history

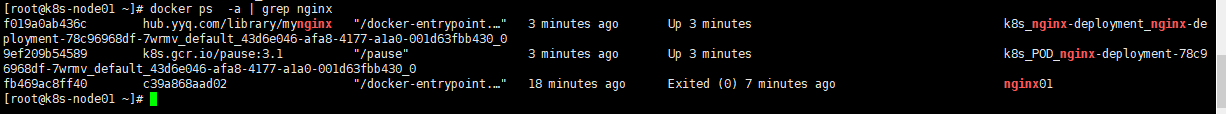

The pod turns out to be running on node1

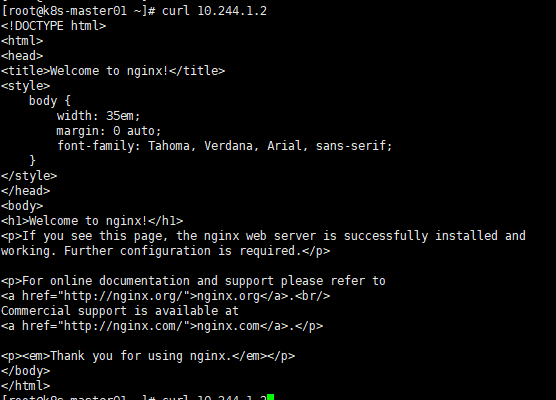

Access it: curl 10.244.1.2

kubectl get pod

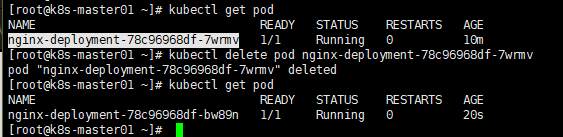

kubectl delete pod nginx-deployment-78c96968df-7wrmv

kubectl get pod

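The deployment was started with replicas=1 (a replica count of 1), so when the pod is deleted a new one is started immediately to maintain the replica count.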

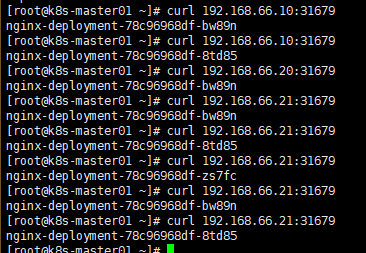

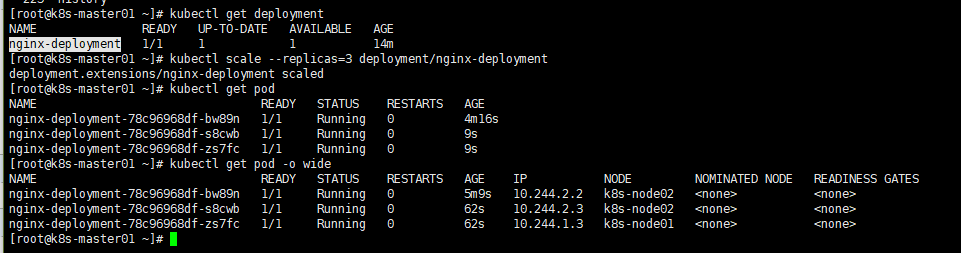

Scale the replica count to 3

kubectl get deployment

kubectl scale --replicas=3 deployment/nginx-deployment

kubectl get pod

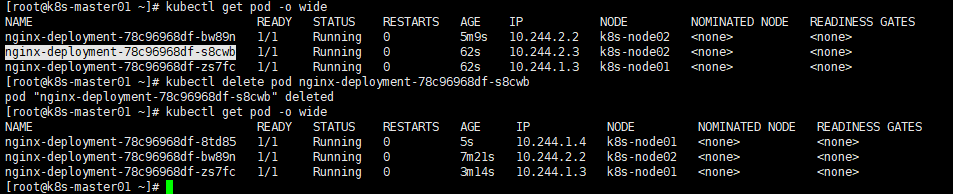

Delete any one of them and observe the result

K8S creates a new pod to maintain the replica count

kubectl expose --help

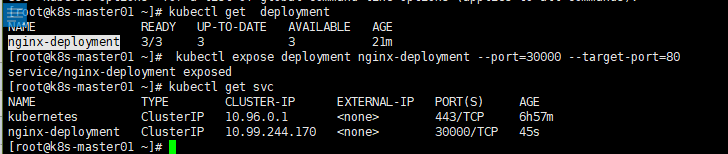

kubectl get deployment

kubectl expose deployment nginx-deployment --port=30000 --target-port=80

80 is the port set earlier with kubectl run; 30000 is the port set for the svc

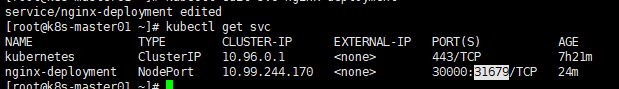

kubectl get svc

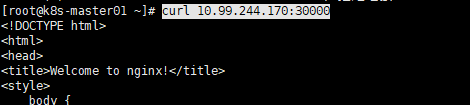

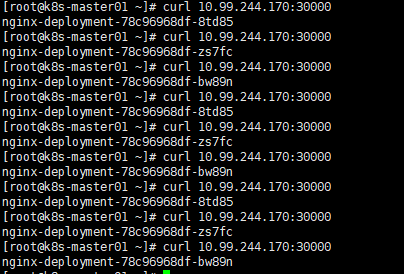

curl 10.99.244.170:30000

Test passed

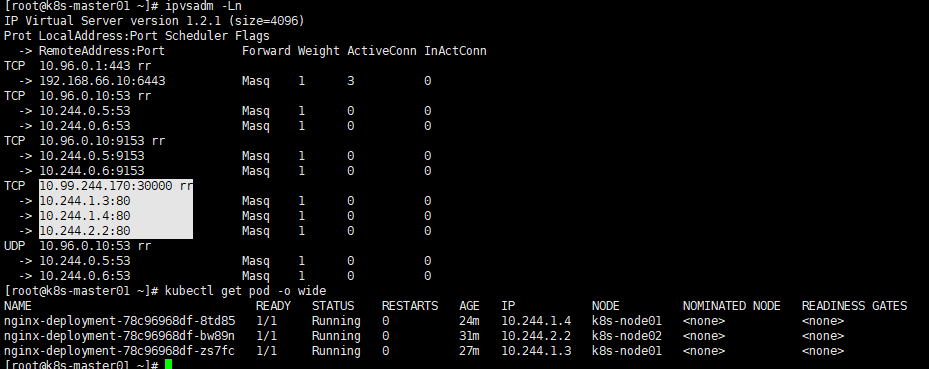

View the round-robin rules

ipvsadm -Ln

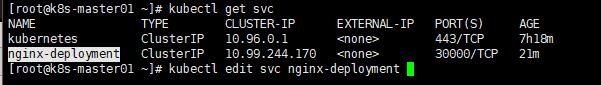

Adjust the svc configuration to allow external access

kubectl get svc

kubectl edit svc nginx-deployment

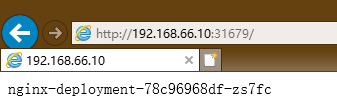

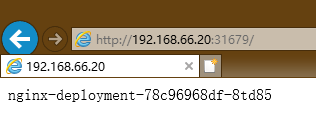

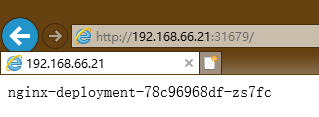

Change type to NodePort
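
The same change can be made without opening an editor by using kubectl patch. A minimal sketch; the function name svc_to_nodeport and the KUBECTL override (used only to print the arguments instead of running them) are hypothetical.

```shell
# Switch a service to type NodePort with a merge patch.
# $1 = service name; KUBECTL is a hypothetical override for dry runs, default "kubectl".
svc_to_nodeport() {
    ${KUBECTL:-kubectl} patch svc "$1" -p '{"spec":{"type":"NodePort"}}'
}

# Usage:
# KUBECTL=echo svc_to_nodeport nginx-deployment   # dry run: prints the kubectl arguments
# svc_to_nodeport nginx-deployment                # real run
# kubectl get svc nginx-deployment                # the PORT(S) column then shows the assigned node port
```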

Verify: