Some trends of deep learning researches

Some trends of deep learning researches

As some people already know, I have made an awesome-list named Most Cited Deep Learning Papers at my GitHub repo. Although I know some awesome-lists for deep learning researches exist such as awesome-deep-learning, awesome-deep-vision, and awesome-deep-RNN, anything satisfies my needs to catch up the trend of deep learning researches regardless of the applied area.

[Link] Awesome-Deep-Learning-Papers

That is why I made the awesome-deep-laerning-papers by myself. It was not a work only for others, but also for myself as well. As surveying on deep learning papers, I could realize what I have missed among the recent advances in deep learning researches, and also get some ideas how I can integrate the spread ideas which appear in different fields.

Here, I briefly summerazie some trends of deep learning researches that appear in the list of most cited deep learning papers

People start thinking why deep neural networks works

Enraptured by the amazing performance of deep neural network (DNN), people have been delved into the structure of DNN and improved recognition accuracy for years. For example, AlexNet, VGG Net, NIN, GoogLeNet, and ResNet are the outcomes for that. (See the neural network models that have recently been developed.)

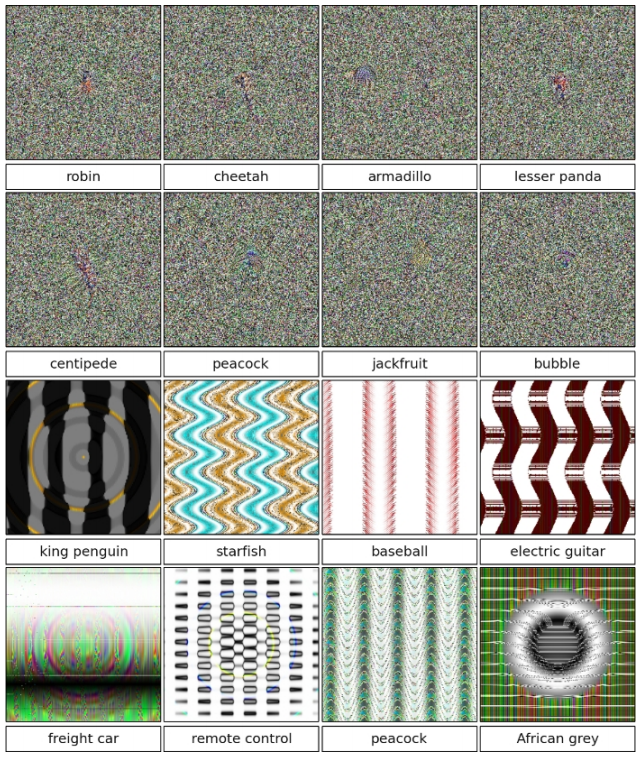

However, we know that we cannot go further without a sound understanding of fundamentals. Researchers recently have found some interesting phenomena, for examples, DNN can be easily fooled [1] by some manipulated images and the first layers of CNN learn Gabor filter regardless of tasks or initial weight values [2]. These interesting findings lead discussions on the transferability of the learned knowledge and the role of adversarial examples [1], [2]. Also theories that explain why CNN is successful are being investigated (e.g. [3], [4]). I hope theoretical approaches will lead advances in practical approaches, and vice versa.

From “Going deeper” to “Compressing more lightly”

GoogLeNet and ResNet empirically have proved that deeper networks with more data give better performance in terms of accuracy. However, accuracy may not be the only metric which appreciates the excellency of learning methods. SqueezeNet[5] which aims at developing AlexNet-level accuracy with smal size model reveals the three motivations for their work as follows.

(i) Smaller CNNs require less communication across servers during distributed training, (ii) less bandwidth to export a new model from the cloud to an autonomous car, (iii) and are more feasible to deploy on FPGAs and other hardware with limited memory.

Now, accuracy is not the only metric which deep learning researchers concerns. Based on better knowledge on the mechanisms of CNN, researchers are trying to reduce the size of CNNs. Also researchers are trying to reuse the learned knowledge to another task (e.g. [6]) to avoid repeatedly training large data for different tasks. They are all from the better understanding on CNN (or DNN). The better you know about the method, the smaller model (or less training time) you will need.

CNN can be easily fooled by manipulations [1]

CNN can be easily fooled by manipulations [1]CNN is extending its realm

When DNN was first emerged by Hinton’s group researchers, the main players who take a key role in deep learning was unsupervised learning techniques such as RBM and autoendcoder. However, despite of being emphasized by many researchers, unsupervised learning techniques have not been spotlighted for years (except for [7]). Instead, CNN is aggressively extending its realm while RNN and LSTM is still taking an important role for sequence learning.

For example, sentences were natural to be analyzed by using RNN (or LSTM) because they can be considered as sequences of words or characters. However, CNN is showing state-of-the-art performance in sentence classification [8] thanks to its ability to capture knowledge from windowed data (the data in sliding windows). Also, recognition tasks in videos (e.g. activity recognition) are being tackled by CNN, although videos can be considered as sequences of images. It is true that RNN (or LSTM) is still serving as a key player in sequence learning, e.g., in machine translations, but it is not deniable that CNN proves its usefulness in a variety of areas.

DNN in robotics?

In fact, I’m not a deep learning researcher, but a researcher conducting a research on human and robot motions. Thus, my interest is on the expectable benefits from the advances of DNN to robotics or human motion analysis, rather than DNN itself.

Last year, I found only five papers which use deep neural network from ICRA2015. (ICRA is one of the biggest conference in robotics field.) Except for [9] from Abbeel’s group, Most of them are vision researches which are not quite different from the researches in CVPR. This year, I found around 50 papers from ICRA2016 and most of them still lean towards vision researches, e.g., 3D object recognition and scene understanding for autonomous vehicles.

But some new streams of researches are noticeable. Visual recognition is being combined with grasping strategy under DNN framework as in [10] and [11]. Also, DNN is being used for robot control with the combination of policy gradient search[12]. It is interesting because traditional control schemes used for humanoids or manipulators are quite different from the policy search framework. Some robotics researcher do not like policy search approaches (which is closely related to RL) because learning from failures is often very expensive in robotics. That is why humanoid researchers are willing to be served as cushion when their humanoid fall down. But the realm of policy search for robot control is getting extended thanks to the benefits of DNN. Where will be the point of compromise between the tradional control and policy search schemes? That would be an interesting point to look ahead of future robotics.

Recent movements for getting better understanding of DNN will accelerate the advent of future deep learning based robots. Robots are required to make a decision in real time. Decisions are not only about high-level choices, but also about moving limbs, avoiding obstacles, maintaining balance, etc. To achieve them, reducing the size of DNN and transferring learned knowledge for a new task will be essential conditions. Also, I think the other problem, expensive failure cost in robotics, can be eased by combining with cloud robotics or learning from demonstration (LfD) techniques. As humans learn knowledge not only from their direct experiences but also from shared knowledge and other’s demonstrations, I believe robots will learn knowledge through internet or youtube videos in some days.

Reference

[1] Deep neural networks are easily fooled: High confidence predictions for unrecognizable images (2015), A. Nguyen et al.

[2] How transferable are features in deep neural networks? (2014), J. Yosinski et al.

[3] Understanding convolutional neural networks (2016), J. Koushik

[4] A theoretical analysis of deep neural networks for texture classification (2016), S. Basu et al.,

[5] SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and < 1MB model size (2016), F. Iandola et al.

[6] Learning and Transferring Mid-Level Image Representations using Convolutional Neural Networks (2014), M. Oquab et al.

[7] Generative adversarial nets (2014), I. Goodfellow et al.

[8] Convolutional neural networks for sentence classification (2014), Y. Kim.

[9] Deep learning helicopter dynamics models (2015), A. Punjani et al.

[10] Supersizing Self-Supervision: Learning to Grasp from 50K Tries and 700 Robot Hours (2015), L. Pinto and A. Gupta

[11] Learning Hand-Eye Coordination for Robotic Grasping with Deep Learning and Large-Scale Data Collection (2016), S. Levine et al.

[12] End-to-End Training of Deep Visuomotor Policies (2016), S. Levine et al.

浙公网安备 33010602011771号

浙公网安备 33010602011771号