数据采集实践作业2

作业一

1.实验内容

要求:在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库

代码如下:

import requests

import sqlite3

from bs4 import BeautifulSoup

# 定义获取天气信息的函数

def get_weather_info(city_id):

url_template = 'http://www.weather.com.cn/weather/{}.shtml'

complete_url = url_template.format(city_id)

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36'

}

response = requests.get(complete_url, headers=headers)

response.encoding = 'utf-8'

soup = BeautifulSoup(response.text, 'html.parser')

forecast_data = []

days = soup.find('ul', class_='t clearfix').find_all('li')

for day in days:

day_date = day.find('h1').get_text(strip=True)

weather_condition = day.find('p', class_='wea').get_text(strip=True)

high_temp = day.find('span').get_text(strip=True) if day.find('span') else ''

low_temp = day.find('i').get_text(strip=True)

temperature = f"{high_temp}/{low_temp}"

forecast_data.append((day_date, weather_condition, temperature))

return forecast_data

# 创建数据库和表格

def initialize_database():

connection = sqlite3.connect('weather_data.db')

cursor = connection.cursor()

cursor.execute('''

CREATE TABLE IF NOT EXISTS forecast (

record_id INTEGER PRIMARY KEY AUTOINCREMENT,

location TEXT,

forecast_date TEXT,

condition TEXT,

temp_range TEXT

)

''')

connection.commit()

return connection

# 存储天气信息到数据库

def store_weather_data(location, weather_info, connection):

cursor = connection.cursor()

for day_date, condition, temp in weather_info:

cursor.execute("INSERT INTO forecast (location, forecast_date, condition, temp_range) VALUES (?, ?, ?, ?)",

(location, day_date, condition, temp))

connection.commit()

# 打印数据库中的天气信息

def print_weather_data(connection):

cursor = connection.cursor()

cursor.execute("SELECT * FROM forecast")

records = cursor.fetchall()

print(f"{'ID':<5} {'城市':<10} {'日期':<15} {'天气状况':<20} {'温度范围':<15}")

for record in records:

print(f"{record[0]:<5} {record[1]:<10} {record[2]:<15} {record[3]:<20} {record[4]:<15}")

def main():

# 城市及其对应的代码

cities = {

'北京': '101010100',

'上海': '101020100',

'福州': '101230101',

'天津': '101030100'

}

# 初始化数据库连接

db_connection = initialize_database()

# 获取并存储每个城市的天气信息

for city_name, city_id in cities.items():

print(f"获取城市 {city_name} ({city_id}) 的天气信息...")

weather_info = get_weather_info(city_id)

store_weather_data(city_name, weather_info, db_connection)

# 打印数据库中的天气信息

print_weather_data(db_connection)

db_connection.close()

if __name__ == '__main__':

main()

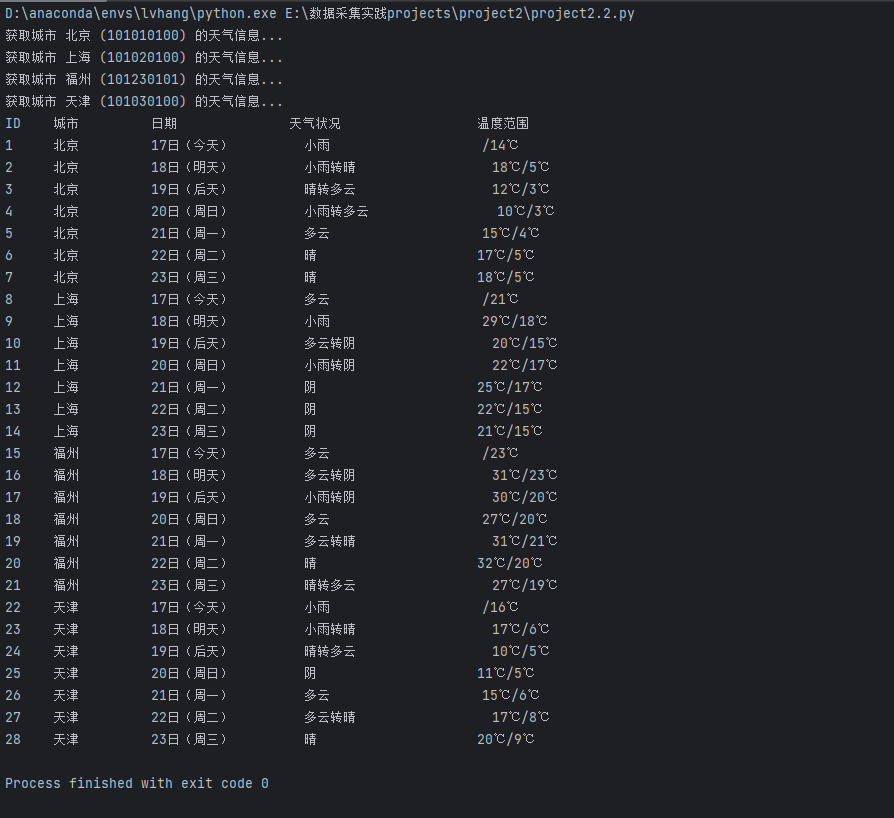

结果如下:

2.心得体会

写代码时遇到bug:TypeError: 'bool' object is not callable

仔细查找发现是strip=True后面多了一个括号,判断应该是python版本不同导致的写法不同

作业二

1.实验内容

-

要求:用requests和BeautifulSoup库方法定向爬取股票相关信息,并存储在数据库中。

-

候选网站:东方财富网:https://www.eastmoney.com/

-

技巧:在谷歌浏览器中进入F12调试模式进行抓包,查找股票列表加载使用的url,并分析api返回的值,并根据所要求的参数可适当更改api的请求参数。根据URL可观察请求的参数f1、f2可获取不同的数值,根据情况可删减请求的参数。

-

参考链接:https://zhuanlan.zhihu.com/p/50099084

代码如下:

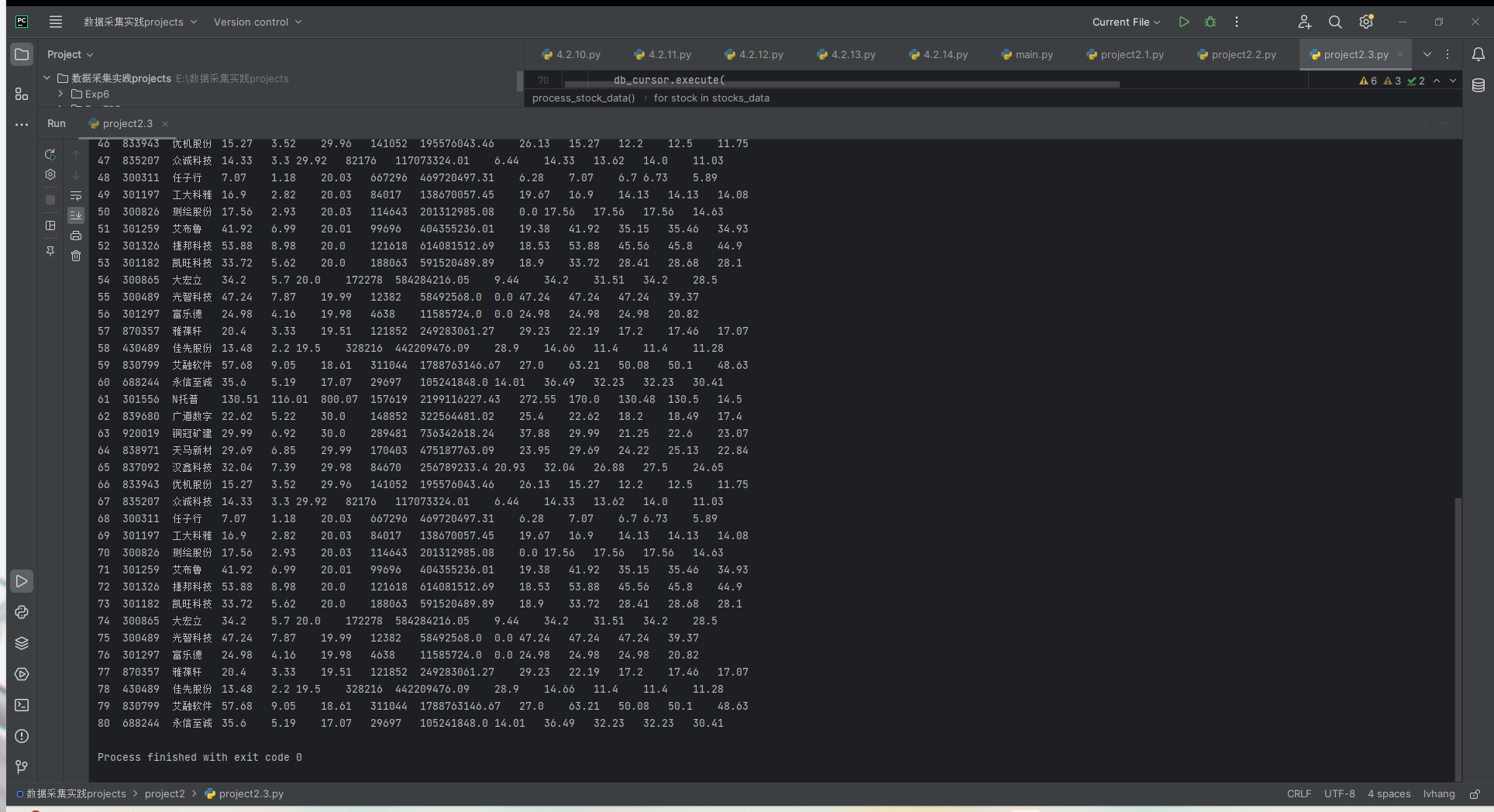

import requests import re import sqlite3 def fetch_html(page_num, query_parameters): base_url = ("http://66.push2.eastmoney.com/api/qt/clist/get?" "cb=jQuery112409097606620255823_1696662149317&pn=1&pz=20&po=1&np=" + str(page_num) + "&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&" + query_parameters + "&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696662149318") response = requests.get(base_url) regex_pattern = "\"diff\":\\[(.*?)\\]" extracted_data = re.findall(regex_pattern, response.text, re.S) return extracted_data def process_stock_data(query_params, page_num): # Retrieve the JSON data from the API json_data = fetch_html(page_num, query_params) stocks_data = json_data[0].split("},") # Create/connect to a SQLite database database_connection = sqlite3.connect('stock_data.db') db_cursor = database_connection.cursor() # Create the stocks table if it doesn't exist db_cursor.execute('''CREATE TABLE IF NOT EXISTS stock_info ( id INTEGER PRIMARY KEY, stock_code TEXT, stock_name TEXT, stock_price REAL, price_change REAL, price_change_percent REAL, volume INTEGER, turnover REAL, amplitude REAL, highest REAL, lowest REAL, open_price REAL, last_close REAL )''') for stock in stocks_data: # Parse stock data stock_info = {} attributes = stock.split(',') for attribute in attributes: key_value = attribute.split(':') key = key_value[0].strip('"') value = key_value[1].strip('"') stock_info[key] = value # Extract relevant stock information stock_code = stock_info.get('f12', 'N/A') stock_name = stock_info.get('f14', 'N/A') stock_price = float(stock_info.get('f2', 0.0)) price_change = float(stock_info.get('f4', 0.0)) price_change_percent = float(stock_info.get('f3', 0.0)) volume = int(stock_info.get('f5', 0)) turnover = float(stock_info.get('f6', 0.0)) amplitude = float(stock_info.get('f7', 0.0)) highest = float(stock_info.get('f15', 0.0)) lowest = float(stock_info.get('f16', 0.0)) open_price = float(stock_info.get('f17', 0.0)) last_close = float(stock_info.get('f18', 0.0)) # Insert stock data into the database db_cursor.execute( "INSERT INTO stock_info (stock_code, stock_name, stock_price, price_change, price_change_percent, volume, turnover, amplitude, highest, lowest, open_price, last_close) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)", (stock_code, stock_name, stock_price, price_change, price_change_percent, volume, turnover, amplitude, highest, lowest, open_price, last_close)) database_connection.commit() # Query all stock data db_cursor.execute("SELECT * FROM stock_info") all_stocks = db_cursor.fetchall() # Get column names for displaying data column_names = [description[0] for description in db_cursor.description] print("\t".join(column_names)) # Display each stock's information for stock in all_stocks: print("\t".join(map(str, stock))) # Close the database connection database_connection.close() # Execute the function with specified parameters page_number = 1 process_stock_data("fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048", page_number)结果如下:

2.心得体会

目前代码是一次性抓取数据,可以考虑添加功能以定期更新数据或只插入新数据,而不是每次都插入,且如果数据量增加,插入数据库时可以考虑使用批量插入的方式提高性能。

作业三

1.实验内容

- 要求:爬取中国大学2021主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器F12调试分析的过程录制Gif加入至博客中。

- 技巧:分析该网站的发包情况,分析获取数据的api

代码如下:

import requests

from bs4 import BeautifulSoup

import sqlite3

# 目标网页URL

url = 'https://www.shanghairanking.cn/rankings/bcur/2021' # 请替换为实际的URL

# 使用 requests 获取网页内容

response = requests.get(url)

content = response.content

# 解析网页内容

soup = BeautifulSoup(content, 'html.parser')

# 找到包含大学排名信息的表格

ranking_table = soup.find('table', class_='rk-table')

# 创建或连接到SQLite数据库

database = sqlite3.connect('schools_rank.db')

db_cursor = database.cursor()

# 创建数据表,如果表不存在

db_cursor.execute('''CREATE TABLE IF NOT EXISTS university_ranking

(rank TEXT, school_name TEXT, province_city TEXT, school_type TEXT, total_score TEXT)''')

# 遍历表格中的每一行,获取数据

for entry in ranking_table.find_all('tr')[1:]: # 跳过表头

columns = entry.find_all('td')

rank_value = columns[0].get_text(strip=True)

university_name = columns[1].get_text(strip=True)

location = columns[2].get_text(strip=True)

type_of_school = columns[3].get_text(strip=True)

score = columns[4].get_text(strip=True)

# 插入数据到数据库中

db_cursor.execute('''INSERT INTO university_ranking (rank, school_name, province_city, school_type, total_score)

VALUES (?, ?, ?, ?, ?)''', (rank_value, university_name, location, type_of_school, score))

# 提交数据到数据库

database.commit()

# 查询并打印数据库中的所有记录

db_cursor.execute("SELECT * FROM university_ranking")

records = db_cursor.fetchall()

for record in records:

# 清除记录中的换行符

cleaned_record = [field.replace('\n', '') for field in record]

# 打印处理后的记录

print(tuple(cleaned_record))

# 关闭数据库连接

database.close()

print("大学排名数据已成功保存至数据库")

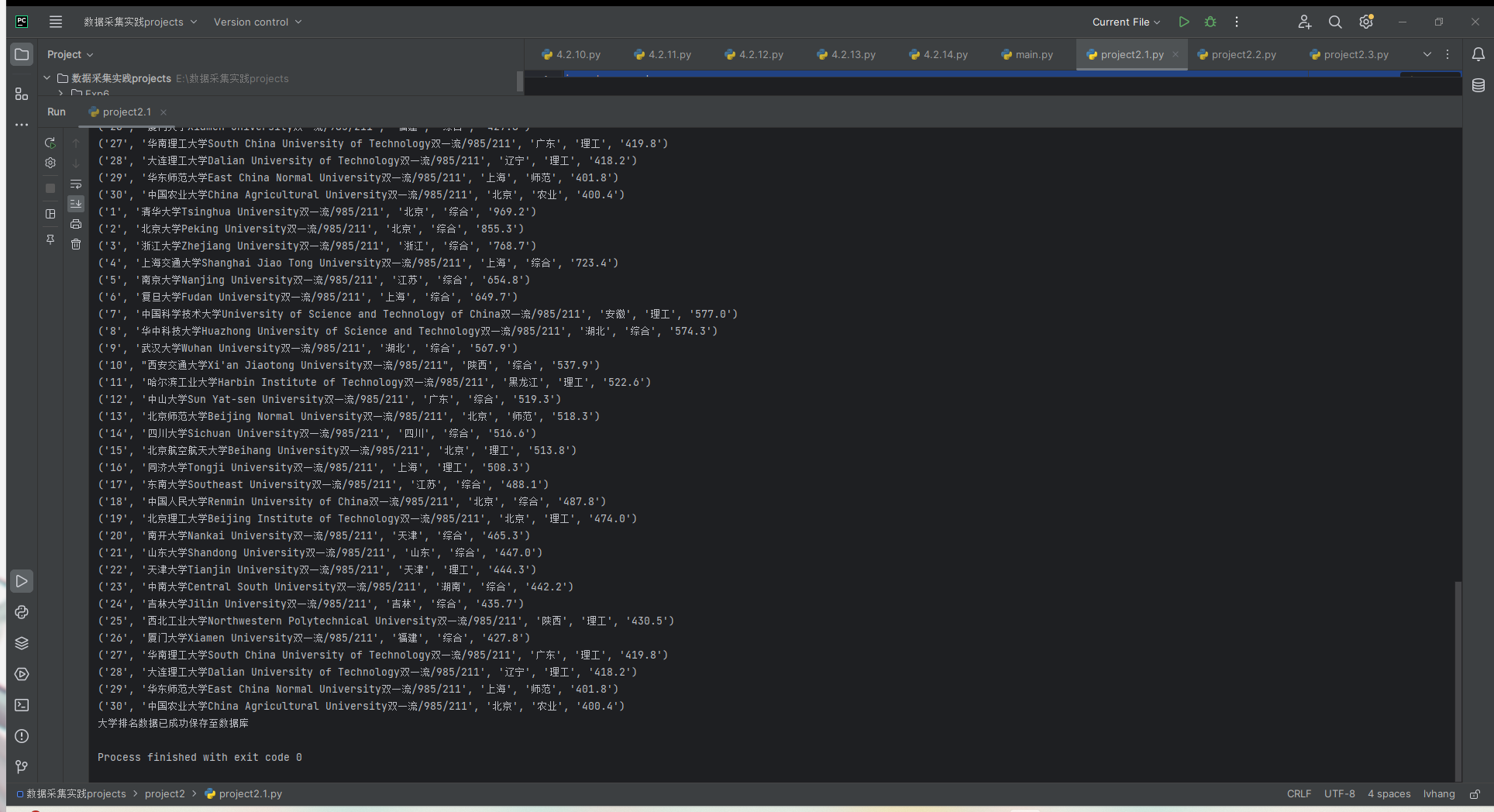

结果如下:

F12调试过程GIF如下:

2.心得体会

当前代码没有包括任何异常处理逻辑,导致我在debug时花费较多时间。例如在数据库操作或网页请求时,如果发生错误,程序将直接崩溃。下次将在关键部分加入 try-except 块,以便能够捕获并处理潜在的异常。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步