pytorch学习笔记(8)--搭建简单的神经网络以及Sequential的使用

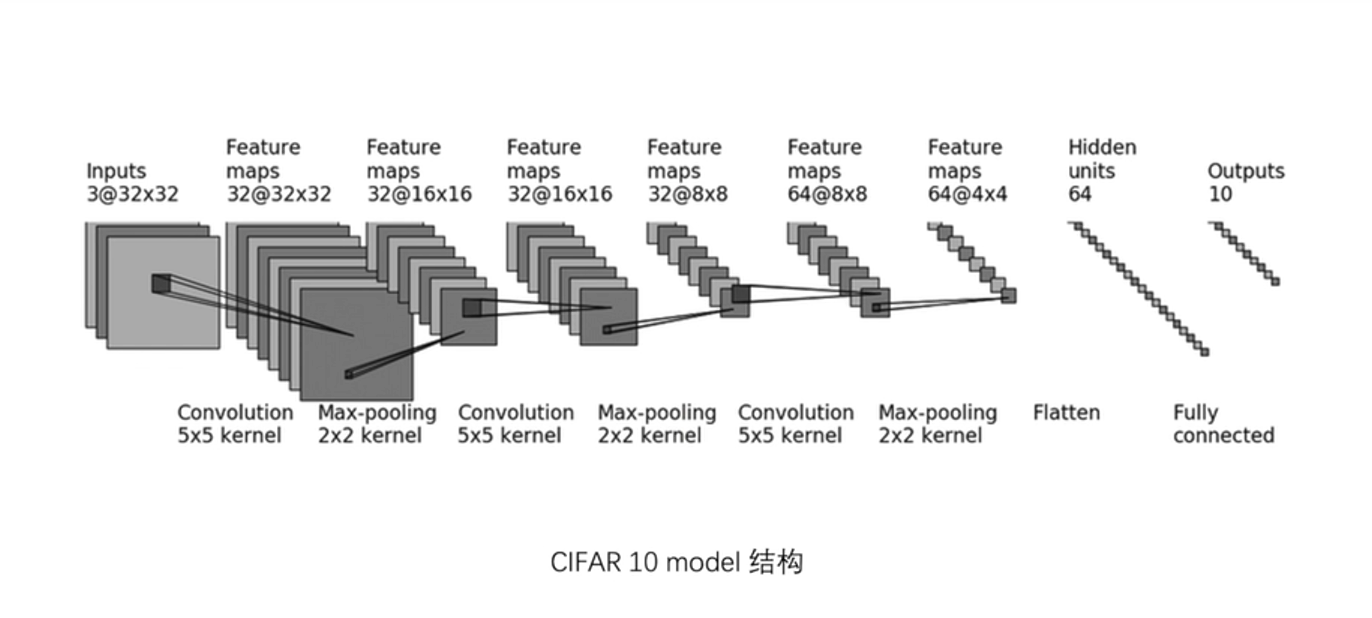

1、神经网络图

输入图像是3通道的32×32的,先后经过卷积层(5×5的卷积核)、最大池化层(2×2的池化核)、卷积层(5×5的卷积核)、最大池化层(2×2的池化核)、卷积层(5×5的卷积核)、最大池化层(2×2的池化核)、拉直、全连接层的处理,最后输出的大小为10。

注:(1)通道变化时通过调整卷积核的个数(即输出通道)来实现的,再nn.conv2d的参数中有out_channel这个参数就是对应输出通道

(2)32个3*5*5的卷积核,然后input对其一个个卷积得到32个32*32------通道数变不变看用几个卷积核

(3)最大池化不改变通道channel数

代码输入:

# file : nn_sequential.py # time : 2022/8/2 上午9:11 # function : 实现一个简单的神经网络 import torch from torch import nn from torch.nn import Conv2d, MaxPool2d, Flatten, Linear class Tudui(nn.Module): def __init__(self): super(Tudui, self).__init__() # stride 默认为1 所以不写也可 self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2) self.maxpool1 = MaxPool2d(kernel_size=2) self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2) self.maxpool2 = MaxPool2d(kernel_size=2) self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2) self.maxpool3 = MaxPool2d(kernel_size=2) self.flatten = Flatten() self.linear1 = Linear(in_features=1024, out_features=64) self.linear2 = Linear(in_features=64, out_features=10) def forward(self, x): x = self.conv1(x) x = self.maxpool1(x) x = self.conv2(x) x = self.maxpool2(x) x = self.conv3(x) x = self.maxpool3(x) x = self.flatten(x) x = self.linear1(x) x = self.linear2(x) return x tudui = Tudui() # 输出网络的结构情况 print(tudui)

# bitch_size = 64 ,channel通道=3,尺寸32*32 input = torch.ones((64, 3, 32, 32)) output = tudui(input) print(output.shape) # 输出output尺寸

输出:

Tudui( (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)) (maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False) (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)) (maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False) (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)) (maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False) (flatten): Flatten(start_dim=1, end_dim=-1) (linear1): Linear(in_features=1024, out_features=64, bias=True) (linear2): Linear(in_features=64, out_features=10, bias=True) ) torch.Size([64, 10])

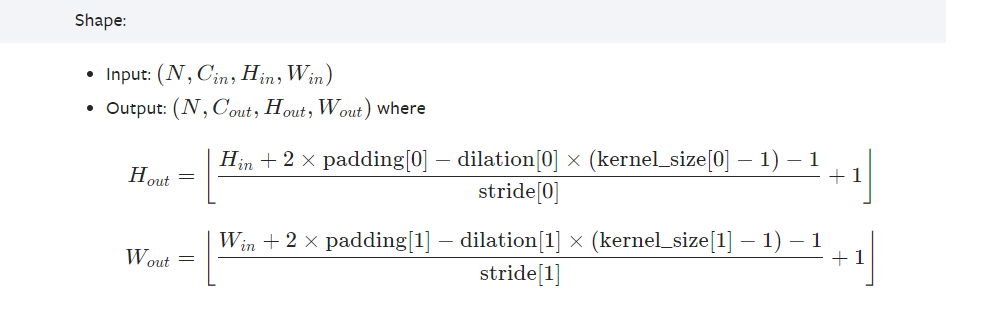

补充说明:

其中Hout=32,Hin(输入的高)=32,dilation[0]=1(默认设置为1),kernel_size[0]=5,将其带入到Hout的公式,

计算过程如下:

32 =((32+2×padding[0]-1×(5-1)-1)/stride[0])+1,简化之后的式子为:

27+2×padding[0]=31×stride[0],其中stride[0]=1,所以padding[0]=2(注若stride[0]=2则padding[0]很大舍去)

2、Sequential

Sequential是一个时序容器。Modules 会以他们传入的顺序被添加到容器中。包含在PyTorch官网中torch.nn模块中的Containers中,在神经网络搭建的过程中如果使用Sequential,代码更简洁

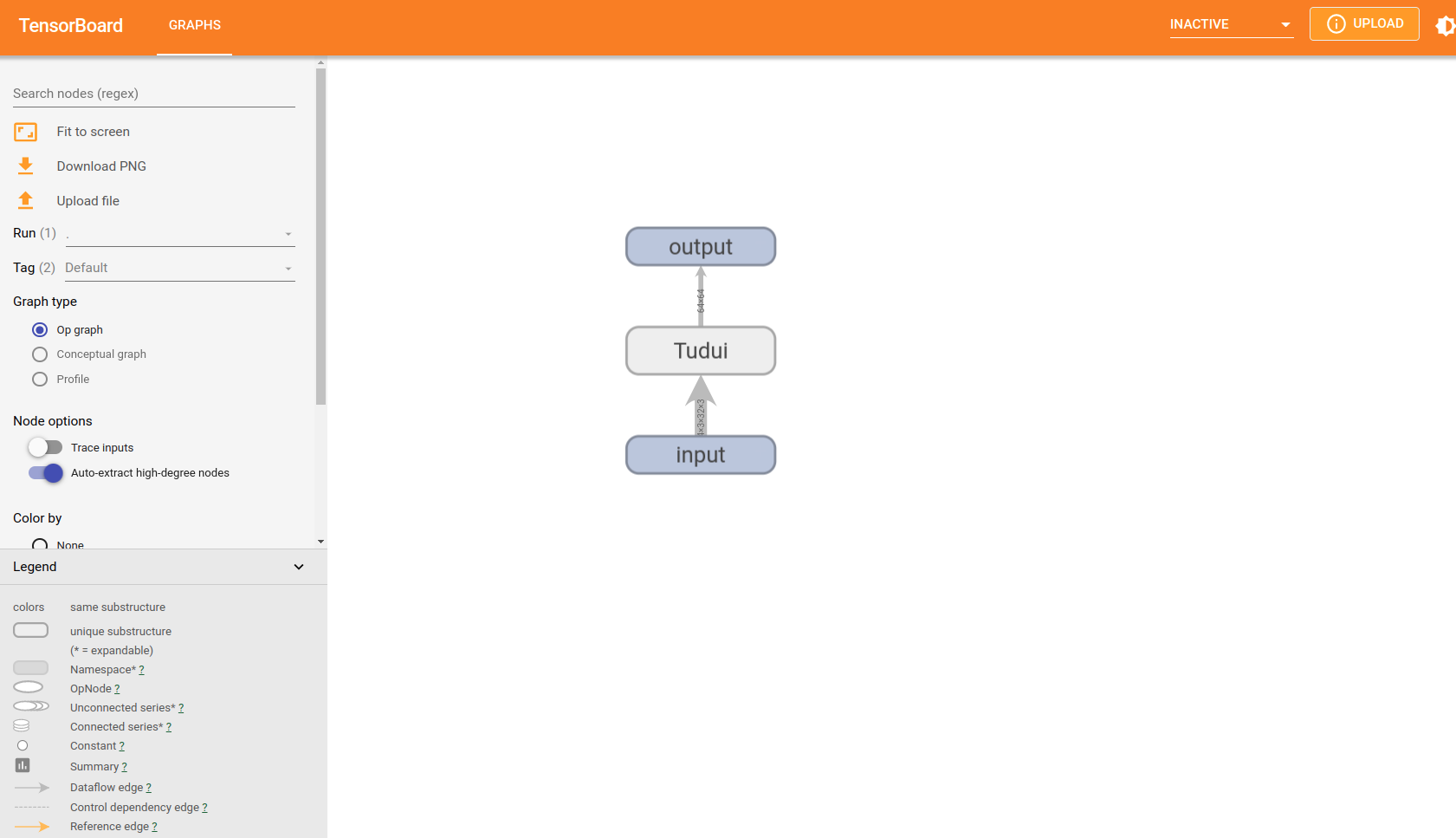

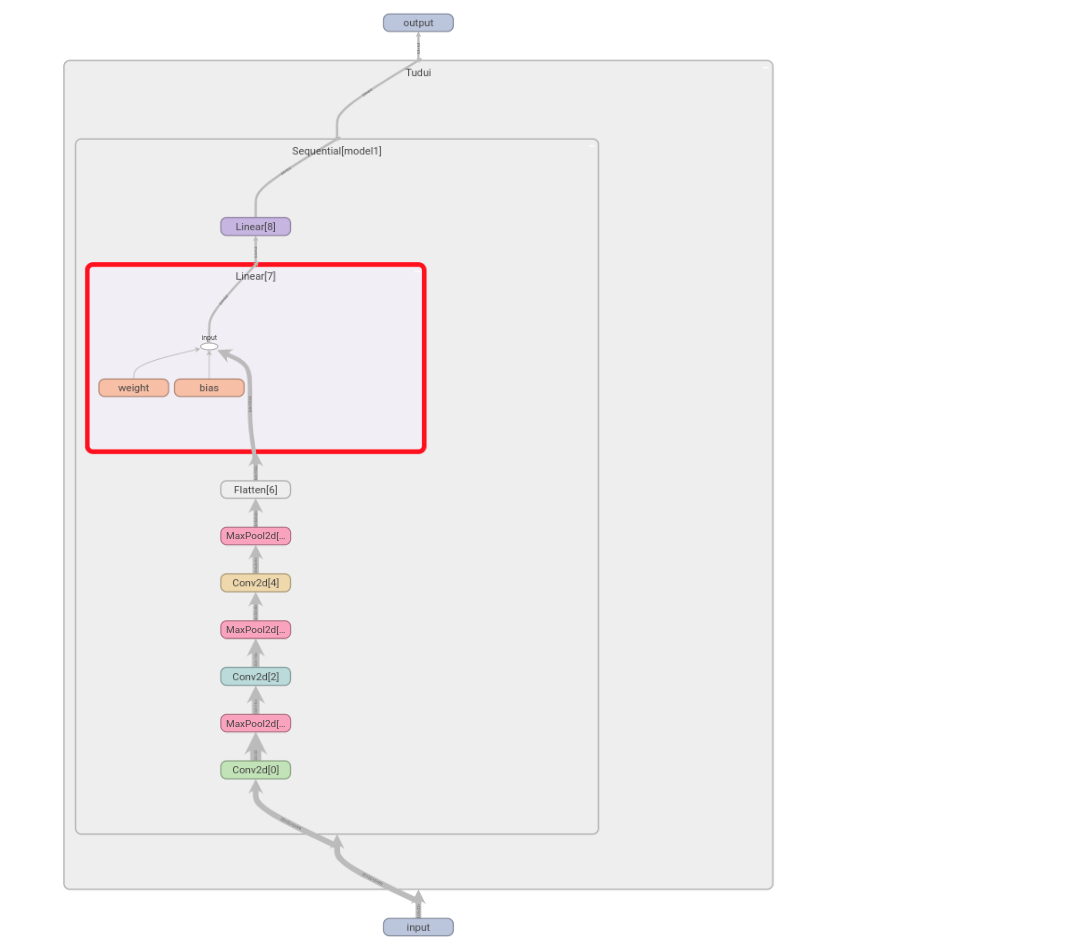

现以Sequential搭建上述一模一样的神经网络,并借助tensorboard显示计算图的具体信息。代码如下:

# file : nn_sequential.py # time : 2022/8/2 上午9:11 # function : Sequential import torch from torch import nn from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential from torch.utils.tensorboard import SummaryWriter class Tudui(nn.Module): def __init__(self): super(Tudui, self).__init__() self.model1 = Sequential( Conv2d(3, 32, 5, padding=2), MaxPool2d(2), Conv2d(32, 32, 5, padding=2), MaxPool2d(2), Conv2d(32, 64, 5, padding=2), MaxPool2d(2), Flatten(), Linear(1024, 64), Linear(64, 64) ) def forward(self, x): x = self.model1(x) return x tudui = Tudui() print(tudui) input = torch.ones((64, 3, 32, 32)) output = tudui(input) print(output.shape) writer = SummaryWriter("../logs") writer.add_graph(tudui, input) writer.close()

输出:

Tudui( (model1): Sequential( (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)) (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False) (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)) (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False) (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2)) (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False) (6): Flatten(start_dim=1, end_dim=-1) (7): Linear(in_features=1024, out_features=64, bias=True) (8): Linear(in_features=64, out_features=64, bias=True) ) ) torch.Size([64, 64])

双击打开查看具体节点信息:

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· winform 绘制太阳,地球,月球 运作规律

· AI与.NET技术实操系列(五):向量存储与相似性搜索在 .NET 中的实现

· 超详细:普通电脑也行Windows部署deepseek R1训练数据并当服务器共享给他人

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

· 上周热点回顾(3.3-3.9)