Spark (50): Monitoring the Spark Executor JVM with JVisualVM

Introduction

On Windows, JVisualVM usually ships with the JDK (up to JDK 8) and lives at ${JAVA_HOME}/bin/jvisualvm.exe. It can connect to JVMs, both local and remote, in two ways: jstatd and JMX.

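jstatd (Java Virtual Machine jstat Daemon): a daemon that runs on the remote server so that tools can monitor the JVMs there (memory, threads and similar information).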

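JMX (Java Management Extensions) is a framework for adding management capabilities to applications, devices, and systems. JMX works across heterogeneous operating systems, system architectures, and network transport protocols, which makes it possible to build seamlessly integrated system, network, and service management applications.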

Note: I tried jstatd without success, so I will not describe it here to avoid misleading anyone.

JMX monitoring

The usual JMX configuration for a JVM:

```
-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false -Djava.rmi.server.hostname=<ip> -Dcom.sun.management.jmxremote.port=<port>
```
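As a minimal sketch, the options above can be generated for any host and port with a small bash helper. The function name `jmx_java_opts`, the sample address and `my-app.jar` are hypothetical. Keep in mind that `authenticate=false` and `ssl=false` let anyone who can reach the port manage the JVM, so this setup only suits a trusted network.

```bash
# Hypothetical helper: print the JMX agent options shown above for a given
# host ($1) and port ($2). authenticate=false and ssl=false mean anyone who
# can reach the port can manage the JVM; use only on trusted networks.
jmx_java_opts() {
  printf '%s %s %s %s %s\n' \
    "-Dcom.sun.management.jmxremote" \
    "-Dcom.sun.management.jmxremote.ssl=false" \
    "-Dcom.sun.management.jmxremote.authenticate=false" \
    "-Djava.rmi.server.hostname=$1" \
    "-Dcom.sun.management.jmxremote.port=$2"
}

# Usage (my-app.jar is a placeholder):
#   java $(jmx_java_opts 10.0.0.5 9999) -jar my-app.jar
```

The command substitution is deliberately left unquoted in the usage line so that the shell splits it into separate JVM arguments.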

Adding the JMX configuration:

To monitor executors in Spark, JMX must be configured before the Spark application is launched. There are three ways to configure it:

1) Set the options above in spark-defaults.conf (through `spark.executor.extraJavaOptions`)

2) Set them in spark-env.sh, as the Java options of the master and worker

3) Pass them at submission time with spark-submit
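For option 1), here is a sketch of how the spark-defaults.conf entry could be written by a hypothetical helper `add_executor_jmx_conf`. It assumes the standard `$SPARK_CONF_DIR` or `$SPARK_HOME/conf` location, and that the file does not already set `spark.executor.extraJavaOptions`. If it does, merge the options into that existing line, because only one value for the key takes effect.

```bash
# Executor JMX options, the same ones used with spark-submit in this post.
EXECUTOR_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port=0 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false"

# Hypothetical helper: append the executor JMX options to spark-defaults.conf
# in directory $1 (default: $SPARK_CONF_DIR, else $SPARK_HOME/conf).
# Refuses to add a second extraJavaOptions line, since only one value wins.
add_executor_jmx_conf() {
  local conf_dir="${1:-${SPARK_CONF_DIR:-$SPARK_HOME/conf}}"
  local conf_file="$conf_dir/spark-defaults.conf"
  if grep -q '^[[:space:]]*spark\.executor\.extraJavaOptions' "$conf_file" 2>/dev/null; then
    echo "spark.executor.extraJavaOptions already set in $conf_file; merge manually" >&2
    return 1
  fi
  # spark-defaults.conf uses "key<whitespace>value" lines.
  printf 'spark.executor.extraJavaOptions  %s\n' "$EXECUTOR_JMX_OPTS" >> "$conf_file"
}
```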

Here the options are passed at submission time with spark-submit:

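```bash
spark-submit \
  --class myTest.KafkaWordCount \
  --master yarn \
  --deploy-mode cluster \
  --conf "spark.executor.extraJavaOptions=-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port=0 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false" \
  --verbose \
  --executor-memory 1G \
  --total-executor-cores 6 \
  /hadoop/spark/app/spark/20151223/testSpark.jar *.*.*.*:* test3 wordcount 4 kafkawordcount3 checkpoint4
```

On YARN, `--total-executor-cores` has no effect (spark-submit documents it for Spark standalone and Mesos only); there the executor count and cores per executor are set with `--num-executors` and `--executor-cores`.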

Notes:

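1) Do not specify a fixed IP and port. At runtime several containers are likely to be allocated on the same node; they would all try to bind the same port, and the conflict makes the application submitted with spark-submit fail.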

2) Because no fixed port is given (`port=0`), each executor JVM is assigned a free port automatically when it starts.

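3) The three configuration methods may lead to different monitoring scopes. For example, spark-submit applies to a single application, while spark-env.sh may apply to all executors on a node [unverified]. Keep this in mind.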

Finding the assigned JMX port

Use `yarn applicationattempt -list <applicationId>` to find the application attempt ID:

```
[root@cdh-143 bin]# yarn applicationattempt -list application_1559203334026_0015
19/06/01 17:57:18 INFO client.RMProxy: Connecting to ResourceManager at CDH-143/10.dx.dx.143:8032
Total number of application attempts :1
ApplicationAttempt-Id State AM-Container-Id Tracking-URL
appattempt_1559203334026_0015_000001 RUNNING container_1559203334026_0015_01_000001 http://CDH-143:8088/proxy/application_1559203334026_0015/
```
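When scripting this step, the attempt ID can be pulled out of that output directly. A minimal sketch with a hypothetical `attempt_ids` function that reads the `yarn applicationattempt -list` output on stdin and prints the first column of every `appattempt_` row:

```bash
# Hypothetical helper: print the attempt ID(s) found in
# `yarn applicationattempt -list` output read from stdin.
attempt_ids() {
  awk '$1 ~ /^appattempt_/ { print $1 }'
}

# Usage:
#   yarn applicationattempt -list application_1559203334026_0015 | attempt_ids
```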

Use `yarn container -list <applicationAttemptId>` to list the container IDs:

```
[root@cdh-143 bin]# yarn container -list appattempt_1559203334026_0015_000001
19/06/01 17:57:52 INFO client.RMProxy: Connecting to ResourceManager at CDH-143/10.dx.dx.143:8032
Total number of containers :16
Container-Id Start Time Finish Time State Host LOG-URL
container_1559203334026_0015_01_000012 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000012/dx
container_1559203334026_0015_01_000013 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000013/dx
container_1559203334026_0015_01_000010 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000010/dx
container_1559203334026_0015_01_000011 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000011/dx
container_1559203334026_0015_01_000016 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000016/dx
container_1559203334026_0015_01_000014 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000014/dx
container_1559203334026_0015_01_000015 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-146:8041 http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000015/dx
container_1559203334026_0015_01_000004 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000004/dx
container_1559203334026_0015_01_000005 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000005/dx
container_1559203334026_0015_01_000002 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000002/dx
container_1559203334026_0015_01_000003 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000003/dx
container_1559203334026_0015_01_000008 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000008/dx
container_1559203334026_0015_01_000009 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000009/dx
container_1559203334026_0015_01_000006 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000006/dx
container_1559203334026_0015_01_000007 Sat Jun 01 13:27:52 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000007/dx
container_1559203334026_0015_01_000001 Sat Jun 01 13:27:38 +0800 2019 N/A RUNNING CDH-142:8041 http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000001/dx
```

Log in to the node where the target executor runs and use the following command to find its processes and PIDs:

```
[root@cdh-146 ~]# ps -axu | grep container_1559203334026_0015_01_000013
yarn 8844 0.0 0.0 113144 1496 ? S 13:27 0:00 bash /data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/default_container_executor.sh
yarn 8857 0.0 0.0 113280 1520 ? Ss 13:27 0:00 /bin/bash -c /usr/java/jdk1.8.0_171-amd64/bin/java -server -Xmx6144m '-Dcom.sun.management.jmxremote' '-Dcom.sun.management.jmxremote.port=0' '-Dcom.sun.management.jmxremote.authenticate=false' '-Dcom.sun.management.jmxremote.ssl=false' -Djava.io.tmpdir=/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/tmp '-Dspark.network.timeout=10000000' '-Dspark.driver.port=47564' '-Dspark.port.maxRetries=32' -Dspark.yarn.app.container.log.dir=/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013 -XX:OnOutOfMemoryError='kill %p' org.apache.spark.executor.CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@CDH-143:47564 --executor-id 12 --hostname CDH-146 --cores 2 --app-id application_1559203334026_0015 --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/__app__.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/streaming-dx-perf-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx-common-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-sql-kafka-0-10_2.11-2.4.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-avro_2.11-3.2.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/shc-core-1.1.2-2.2-s_2.11-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/rocksdbjni-5.17.2.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/kafka-clients-0.10.0.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/elasticsearch-spark-20_2.11-6.4.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx_Spark_State_Store_Plugin-1.0-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-core_2.11-0.9.5.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-avro_2.11-0.9.5.jar 1>/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013/stdout 2>/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013/stderr
yarn 9000 143 3.3 8736712 4379648 ? Sl 13:27 24:35 /usr/java/jdk1.8.0_171-amd64/bin/java -server -Xmx6144m -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port=0 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.io.tmpdir=/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/tmp -Dspark.network.timeout=10000000 -Dspark.driver.port=47564 -Dspark.port.maxRetries=32 -Dspark.yarn.app.container.log.dir=/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013 -XX:OnOutOfMemoryError=kill %p org.apache.spark.executor.CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@CDH-143:47564 --executor-id 12 --hostname CDH-146 --cores 2 --app-id application_1559203334026_0015 --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/__app__.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx-domain-perf-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx-common-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-sql-kafka-0-10_2.11-2.4.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-avro_2.11-3.2.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/shc-core-1.1.2-2.2-s_2.11-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/rocksdbjni-5.17.2.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/kafka-clients-0.10.0.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/elasticsearch-spark-20_2.11-6.4.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx_Spark_State_Store_Plugin-1.0-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-core_2.11-0.9.5.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-avro_2.11-0.9.5.jar
root 25939 0.0 0.0 112780 956 pts/1 S+ 13:45 0:00 grep --color=auto container_1559203334026_0015_01_000013
```
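In that output, the two bash wrapper processes and the grep itself also contain the container ID. The `bash -c` wrapper (PID 8857) even contains `CoarseGrainedExecutorBackend`, so a plain `grep` or `pgrep -f` on the ID is ambiguous. The following sketch defines a hypothetical `executor_pid` function that reads `ps -eo pid=,args=` output and keeps only the line whose command is itself a `java` binary running the executor backend:

```bash
# Hypothetical helper: read `ps -eo pid=,args=` output on stdin and print the PID
# of the executor JVM for container $1. The bash wrappers and the grep command
# also contain the container ID, so we additionally require that the command
# itself is a java binary and that it runs CoarseGrainedExecutorBackend.
executor_pid() {
  awk -v cid="$1" '
    index($0, cid) && index($0, "CoarseGrainedExecutorBackend") && $2 ~ /(^|\/)java$/ {
      print $1
    }'
}

# Usage on the executor node:
#   ps -eo pid=,args= | executor_pid container_1559203334026_0015_01_000013
```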

Then use the PID to find the JMX port:

```
[root@cdh-146 ~]# sudo netstat -antp | grep 9000
tcp 0 0 10.dx.dx.146:9000 0.0.0.0:* LISTEN 2642/python2.7
tcp6 0 0 :::48169 :::* LISTEN 9000/java
tcp6 0 0 :::37692 :::* LISTEN 9000/java
tcp6 0 0 10.dx.dx.146:52710 :::* LISTEN 9000/java
tcp6 0 0 10.dx.dx.146:55535 10.dx.dx.142:38397 ESTABLISHED 9000/java
tcp6 64088 0 10.dx.dx.146:45410 10.206.186.35:9092 ESTABLISHED 9000/java
tcp6 0 0 10.dx.dx.146:60259 10.dx.dx.143:47564 ESTABLISHED 9000/java
```
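Grepping for the PID string also matches port numbers: the python line above appears only because it listens on port 9000. Here is a sketch of a hypothetical `listen_ports_for_pid` function that instead compares the PID against the `PID/Program name` column of `netstat -lntp` and prints only the listening ports:

```bash
# Hypothetical helper: read `netstat -lntp` output on stdin and print the TCP
# ports on which process $1 is listening. The PID is compared against the
# "PID/Program name" column, so a process that merely listens on a port whose
# number equals the PID is not reported.
listen_ports_for_pid() {
  awk -v pid="$1" '
    $6 == "LISTEN" && index($NF, pid "/") == 1 {
      # Take the text after the last colon of the local address; this works
      # for IPv4 (10.0.0.1:52710) and IPv6 wildcard (:::48169) forms.
      n = split($4, addr, ":")
      print addr[n]
    }'
}

# Usage (root is needed to see the PIDs of other users' processes):
#   sudo netstat -lntp | listen_ports_for_pid 9000
```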

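Judging from the output, the JMX port is most likely 48169 or 37692, the two ports the executor (PID 9000) listens on across all interfaces; trying both quickly connects to the corresponding Spark executor. Two candidates appear because, unless `com.sun.management.jmxremote.rmi.port` is set, the JMX agent's RMI registry and its RMI connector each listen on a separate port; JVisualVM has to be pointed at the registry port.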

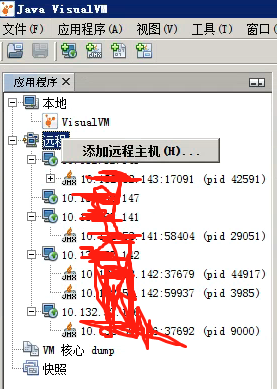

Adding the connection in JVisualVM

On the local Windows machine, go to the JDK directory and run ${JAVA_HOME}/bin/jvisualvm.exe. Once it has started, right-click "Remote" and add a JMX connection.

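Enter the IP of the node where the monitored executor runs together with the JMX port found above (host:port). Since the agent was started with `ssl=false`, also tick "Do not require SSL connection".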
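The connection field accepts either `host:port` or a full JMX service URL. With the default JMX agent the connector is registered in the RMI registry under the name `jmxrmi`, so the URL has the form `service:jmx:rmi:///jndi/rmi://<host>:<port>/jmxrmi`. A sketch of a hypothetical `jmx_urls` helper that prints one URL per candidate port, to be tried in turn:

```bash
# Hypothetical helper: print one JMX service URL per candidate port ($2...)
# for host $1, in the form used by the default JMX agent (name "jmxrmi").
jmx_urls() {
  local host="$1" port
  shift
  for port in "$@"; do
    printf 'service:jmx:rmi:///jndi/rmi://%s:%s/jmxrmi\n' "$host" "$port"
  done
}

# Usage (replace the placeholder IP with the executor node's address):
#   jmx_urls 10.0.0.146 48169 37692
```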

Monitoring then starts.

Zhejiang Public Security Network Filing No. 33010602011771
