HBase客户端开发的Java API

HBase客户端开发API

建立连接

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

private static Connection connection = null;

private static Admin admin = null;

// 建立连接

static {

try {

// 1.获取配置文件信息

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum","hadoop101,hadoop102,hadoop103");

// 2.建立连接,获取connection对象

connection = ConnectionFactory.createConnection(conf);

// 获取admin对象

admin = connection.getAdmin();

} catch (IOException e) {

e.printStackTrace();

}

}

// 关闭资源

private static void close(){

if (admin!=null){

try {

admin.close();

} catch (IOException e) {

e.printStackTrace();

}

}

if (connection!=null){

try {

connection.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

DDL

1.判断表是否存在

public static boolean isTableExist(String tableName) throws IOException {

boolean exists = admin.tableExists(TableName.valueOf(tableName));

return exists;

}

2.创建表

public static void createTable(String tableName,String... args) throws IOException {

// 1.判读是否存在列族信息

if (args.length <= 0){

System.out.println("请设置列族信息:");

return;

}

// 2.判断表是否存在

if (isTableExist(tableName)){

System.out.println(tableName+"表已存在!");

return;

}

// 3.根据TableName对象创建表描述器

HTableDescriptor hTableDescriptor = new HTableDescriptor(TableName.valueOf(tableName));

// 4.循环添加列族信息

for (String arg : args) {

// 5.创建列族描述器

HColumnDescriptor hColumnDescriptor = new HColumnDescriptor(arg);

// 6.添加具体的列族信息

hTableDescriptor.addFamily(hColumnDescriptor);

}

// 7.创建表

admin.createTable(hTableDescriptor);

}

3.删除表

public static void dropTable(String tableName) throws IOException {

if (!isTableExist(tableName)){

System.out.println(tableName+"表不存在!!!");

}

// 2.使表下线

admin.disableTable(TableName.valueOf(tableName));

//3.删除表

admin.deleteTable(TableName.valueOf(tableName));

}

4.创建命名空间

public static void createNameSpace(String ns){

// 1.创建命名空间描述器

NamespaceDescriptor.Builder builder = NamespaceDescriptor.create(ns);

NamespaceDescriptor namespaceDescriptor = builder.build();

// 2.创建命名空间

try {

admin.createNamespace(namespaceDescriptor);

}catch (NamespaceExistException e){

System.out.println(ns+"命名空间已经存在!");

}

catch (IOException e) {

e.printStackTrace();

}

}

DML

1.插入数据

public static void putData(String tableName,String rowKey,String cf,String cn,String value) throws IOException {

//1.获取表对象

Table table = connection.getTable(TableName.valueOf(tableName));

//2.创建put对象

Put put = new Put(Bytes.toBytes(rowKey));

//3.给put对象赋值

put.addColumn(Bytes.toBytes(cf),Bytes.toBytes(cn),Bytes.toBytes(value));

table.put(put);

//4.关闭连接

table.close();

}

2.查询数据

public static void getData(String tableName,String rowKey,String cf,String cn) throws IOException {

//1.获取表对象

Table table = connection.getTable(TableName.valueOf(tableName));

//2.创建get对象

Get get = new Get(Bytes.toBytes(rowKey));

//2.1 指定列族

// get.addFamily(Bytes.toBytes(cf));

//2.2 指定列族和列

get.addColumn(Bytes.toBytes(cf),Bytes.toBytes(cn));

//2.3设置获取的版本数

get.setMaxVersions(2);

//3.获取数据

Result result = table.get(get);

//4.解析result

for (Cell cell : result.rawCells()) {

//5.打印数据

System.out.println("CF: "+Bytes.toString(CellUtil.cloneFamily(cell))+

" CN: "+Bytes.toString((CellUtil.cloneQualifier(cell)))+

" Value: "+Bytes.toString(CellUtil.cloneValue(cell)));

}

table.close();

}

如果表中某一个列族的数据的版本数为n,而在代码中设置的获取的最大版本数为m,则:

if n > m 返回m条数据

if n < m 返回n条数据

get对象和rowKey一一对应,rowKey指的是一行数据,里面包含了多个cell。

3.扫描数据

public static void scanTable(String tableName) throws IOException {

//1.获取表对象

Table table = connection.getTable(TableName.valueOf(tableName));

//2.创建Scan对象

Scan scan = new Scan();

//3.扫描全表

ResultScanner resultScanner = table.getScanner(scan);

//4.解析resultScanner

for (Result result : resultScanner) {

//5.解析并打印

for (Cell cell : result.rawCells()) {

//6.打印数据

System.out.println("CF: "+Bytes.toString(CellUtil.cloneFamily(cell))+

" CN: "+Bytes.toString((CellUtil.cloneQualifier(cell)))+

" Value: "+Bytes.toString(CellUtil.cloneValue(cell)));

}

}

// 关闭表连接

table.close();

}

一个ResultScanner对象包含了多个Result对象(多个rowkey对应的result),一个Result对象对应了多个Cell对象,一个Cell对象就是一条具体的实际数据。

4.删除数据

public static void deleteData(String tableName,String rowKey,String cf,String cn) throws IOException {

//1.获取表对象

Table table = connection.getTable(TableName.valueOf(tableName));

//2.构建删除对象

Delete delete = new Delete(Bytes.toBytes(rowKey));

//2.1设置删除的列

// delete.addColumn();

delete.addColumn(Bytes.toBytes(cf),Bytes.toBytes(cn));

// delete.addColumns(Bytes.toBytes(cf),Bytes.toBytes(cn),1596987728939l);

//2.2删除指定的列族

// delete.addFamily(Bytes.toBytes(cf));

//3.执行删除操作

table.delete(delete);

//4.关闭连接

table.close();

}

假设某个指定的rowKey、列族、列名下的数据有多个版本A、B、C,其中时间戳大小排序为A>B>C,如果采用上面的方法为delete对象指定列族和列名,那么只会删除时间戳最大的那个对象,同时如果使用scan查询数据,则可以显示时间戳第二大的数据。

注意:

1、在设计表的时候,如果指定了版本(Version)数量为1,那么当向表中多次对同一cell进行put操作的时候,此时内存中该cell下会有多个版本的数据;如果此时未刷写进磁盘,同时执行了API删除代码(采用addColumn方法向delete对象添加了列族和列名),那么时间戳最大的那个版本会被删除,此时scan表时,时间戳第二大的数据会显示出来。如果在调用API代码删除之前,刷写进了磁盘,则调用API代码删除之后,再进行scan操作,则不会显示时间戳小的数据。

2、如果涉及表的时候,Versions>1,则一次性put多条数据之后,然后进行刷写,再调用API代码进行删除,再scan时,会显示ts小的其他版本的数据。

命令行删除数据的方式有两种:

(1)delete:指定到列名,标记deleteColumn

(2)deleteAll:指定到rowKey,一整个列族都被删除掉,标记deleteFamily

API中的删除数据:

只向Delete对象中传入rowKey,相当于命令行中的deleteAll,最终的标记也是deleteFamily;

Delete对象的两个addColumn方法对比:

addColumn:可以只传入列族和列名,此时删除的是指定列族下的指定列名的最新版本的数据;也可以传入时间戳,删除的是指定时间戳的数据。

addColumns:可以只传入列族和列名,此时删除的是指定列族下的指定列名的所有版本的数据,相当于命令行中的delete指定到列族和列名;也可以传入时间戳,会删除所有小于等于传入的时间戳的数据。

需要注意的是,如果命令行的delete命令,只指定了(表名,rowKey,列族名)则无法实现删除,这是一个bug。但是在API代码中则可以实现删除。

HBase-MapReduce

通过HBase的相关Java API,可以实现伴随HBase操作的MR过程。

案例1:使用MR将数据从本地文件系统导入到HBase的表中

本地数据:

1001 apple red

1002 pear yellow

1003 pineapple yellow

map:

package com.fym.mr3;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class FruitMapper extends Mapper<LongWritable, Text,LongWritable,Text> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

context.write(key,value);

}

}

reduce:

package com.fym.mr3;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.mapreduce.TableReducer;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import java.io.IOException;

public class FruitReducer extends TableReducer<LongWritable,Text,NullWritable> {

private String cf = null;

private String cn1 = null;

private String cn2 = null;

@Override

protected void setup(Context context) throws IOException, InterruptedException {

Configuration conf = context.getConfiguration();

cf = conf.get("columnFamily");

cn1 = conf.get("columnName1");

cn2 = conf.get("columnName2");

}

@Override

protected void reduce(LongWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

for (Text value : values) {

String[] fields = value.toString().split("\t");

Put put = new Put(Bytes.toBytes(fields[0]));

put.addColumn(Bytes.toBytes(cf),Bytes.toBytes(cn1),Bytes.toBytes(fields[1]));

put.addColumn(Bytes.toBytes(cf),Bytes.toBytes(cn2),Bytes.toBytes(fields[2]));

context.write(NullWritable.get(),put);

}

}

}

Driver:

package com.fym.mr3;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class FruitDriver implements Tool {

private Configuration configuration = null;

public int run(String[] args) throws Exception {

configuration.set("columnFamily","info");

configuration.set("columnName1","name");

configuration.set("columnName2","color");

Job job = Job.getInstance(configuration);

job.setJarByClass(FruitDriver.class);

job.setMapperClass(FruitMapper.class);

job.setMapOutputKeyClass(LongWritable.class);

job.setMapOutputValueClass(Text.class);

TableMapReduceUtil.initTableReducerJob(

args[1],

FruitReducer.class,

job

);

FileInputFormat.setInputPaths(job,new Path(args[0]));

boolean res = job.waitForCompletion(true);

return res ? 0 : 1;

}

public void setConf(Configuration conf) {

configuration = conf;

}

public Configuration getConf() {

return configuration;

}

public static void main(String[] args) {

args = new String[2];

args[0] = "F:/ProgramTest/testfile/fruit.txt";

args[1] = "fruit";

try {

Configuration configuration = new Configuration();

int run = ToolRunner.run(configuration, new FruitDriver(), args);

System.exit(run);

} catch (Exception e) {

e.printStackTrace();

}

}

}

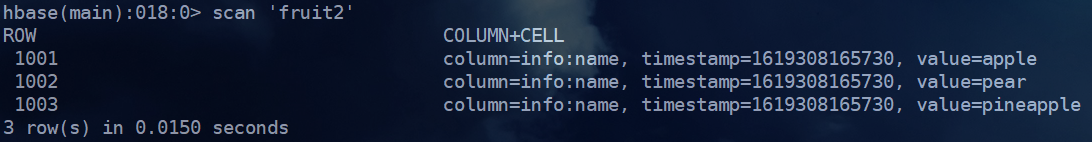

最终的结果:

案例2:使用MR从HBase中的一个表中扫描读取数据,将列名为name的数据写入到另一个HBase表中

map:此时自定义的Mapper类不再继承普通的Mapper,而是继承专门用于HBase的定制类TableMapper

package com.fym.mr2;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.CellUtil;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.hbase.mapreduce.TableMapper;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class Fruit2Mapper extends TableMapper<ImmutableBytesWritable, Put> {

@Override

protected void map(ImmutableBytesWritable key, Result value, Context context) throws IOException, InterruptedException {

//key.get()获得rowkey的字节数组,用于构建put对象

Put put = new Put(key.get());

//1.获取数据

for (Cell cell : value.rawCells()) {

//2.判断当前的cell是否为name列

if ("name".equals(Bytes.toString(CellUtil.cloneQualifier(cell)))){

//3.给Put对象赋值

put.add(cell);

}

}

context.write(key,put);

}

}

reduce:此时自定义的Mapper类不再继承普通的Mapper,而是继承专门用于HBase的定制类TableReducer

package com.fym.mr2;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.hbase.mapreduce.TableReducer;

import org.apache.hadoop.io.NullWritable;

import java.io.IOException;

public class Fruit2Reducer extends TableReducer<ImmutableBytesWritable, Put, NullWritable> {

@Override

protected void reduce(ImmutableBytesWritable key, Iterable<Put> values, Context context) throws IOException, InterruptedException {

//遍历写出

for (Put put : values) {

context.write(NullWritable.get(),put);

}

}

}

driver:

package com.fym.mr2;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class Fruit2Driver implements Tool {

private Configuration configuration = null;

public int run(String[] args) throws Exception {

//1.获取Job对象

Job job = Job.getInstance(configuration);

//2设置主类路径

job.setJarByClass(Fruit2Driver.class);

//3设置Mapper和输出类型

TableMapReduceUtil.initTableMapperJob("fruit",

new Scan(),

Fruit2Mapper.class,

ImmutableBytesWritable.class,

Put.class,

job);

//4设置Reducer和输出的表

TableMapReduceUtil.initTableReducerJob("fruit2",

Fruit2Reducer.class,

job);

//5提交任务

boolean res = job.waitForCompletion(true);

return res?0:1;

}

public void setConf(Configuration conf) {

configuration = conf;

}

public Configuration getConf() {

return configuration;

}

public static void main(String[] args) {

try {

Configuration configuration = new Configuration();

ToolRunner.run(configuration,new Fruit2Driver(),args);

} catch (Exception e) {

e.printStackTrace();

}

}

}

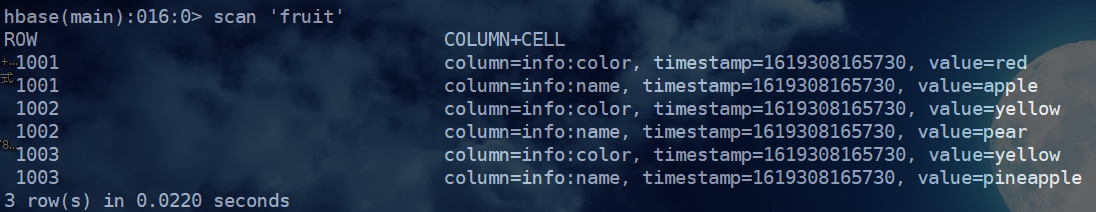

最终结果: