Dynamically modifying YAML configuration with shell: examples

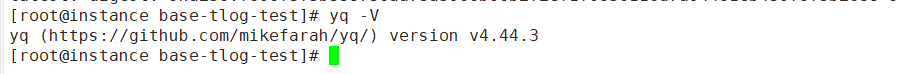

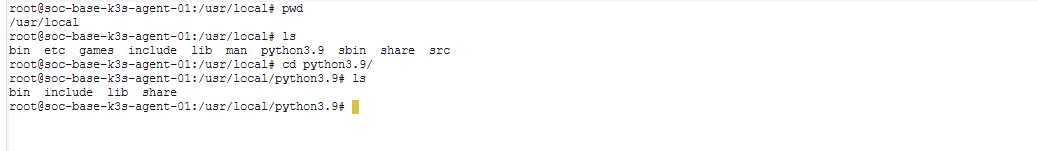

Installing yq

https://github.com/mikefarah/yq/tree/master

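The supported options and flags differ from one yq version to another. The commands in this article use the expression syntax of mikefarah/yq v4 (for example yq -i '.a.b = strenv(X)' file), which replaced v3 subcommands such as yq r and yq w. An unrelated Python tool is also distributed under the name yq.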

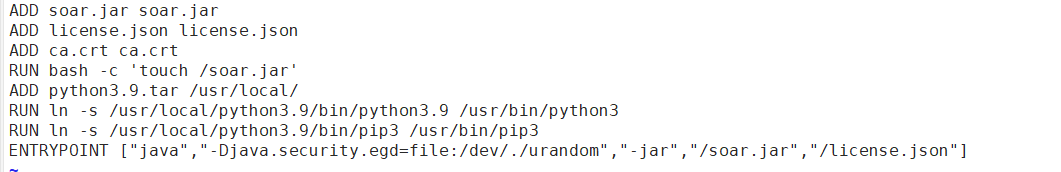
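Because the syntax differs so much between major versions, a startup script may want to check which yq it has before relying on the v4 expressions used here. This is a minimal sketch. It assumes that the --version text of mikefarah/yq contains "version 4.x.y" or "version v4.x.y" (the exact wording has varied between releases). The names yq_major_version and require_yq_v4 are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the major version found in mikefarah/yq's
# "--version" text, e.g. "yq (https://github.com/mikefarah/yq/) version v4.40.5" -> 4.
# Assumption: the text contains "version N.x.y" or "version vN.x.y";
# any other format (e.g. the unrelated Python yq) prints nothing.
yq_major_version() {
    printf '%s\n' "$1" | sed -n 's/.*version v\{0,1\}\([0-9][0-9]*\)\..*/\1/p'
}

# Hypothetical guard: fail early unless yq is v4 or newer, since the
# scripts in this article use v4 expressions (-i, env(), strenv()).
require_yq_v4() {
    major=$(yq_major_version "$(yq --version 2>/dev/null)")
    if [ "${major:-0}" -lt 4 ]; then
        echo "mikefarah/yq v4+ required, found: $(yq --version 2>&1)" >&2
        return 1
    fi
}
```

A startup script would call require_yq_v4 || exit 1 before its first yq command.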

Modify the Dockerfile

    [root@instance base-tlog-test]# vi Dockerfile
    FROM 192.168.30.113/library/java:latest
    ENV TZ=Asia/Shanghai
    # Double quotes so that $TZ is expanded (single quotes would write the literal text $TZ)
    RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo "$TZ" > /etc/timezone
    VOLUME /tmp
    ADD license.json license.json
    ADD ca.crt ca.crt
    ADD tlog tlog
    # yq is used by start_tlog.sh to rewrite application.yml at startup
    ADD yq /usr/bin/yq
    WORKDIR /tlog
    ADD start_tlog.sh /tlog/start_tlog.sh
    ENTRYPOINT ["sh","/tlog/start_tlog.sh"]

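For comparison, here is an ENTRYPOINT that starts the jar directly. start_tlog.sh runs the same java command after it has updated the configuration:

    ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","./lib/TLog.jar","/license.json"]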

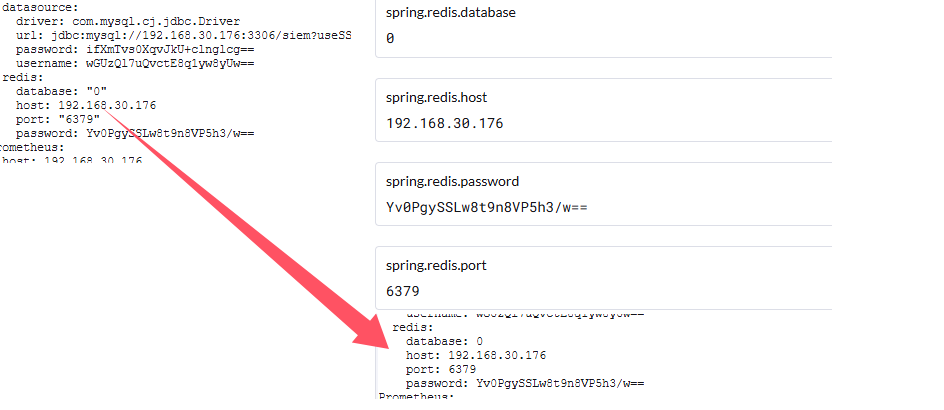

    #!/bin/sh
    configpath="./config/application.yml"
    datasoureurl=`env | grep spring.datasource.url`
    dburl=${datasoureurl#*=}
    datausername=`env | grep spring.datasource.username | cut -d"=" -f2`
    datapassword=`env | grep spring.datasource.password | cut -d"=" -f2`
    redisdatabase=`env | grep spring.redis.database | cut -d"=" -f2`
    redishost=`env | grep spring.redis.host | cut -d"=" -f2`
    redisport=`env | grep spring.redis.port | cut -d"=" -f2`
    redispassword=`env | grep spring.redis.password | cut -d"=" -f2`
    promhost=`env | grep Prometheus.host | cut -d"=" -f2`
    promport=`env | grep Prometheus.port | cut -d"=" -f2`
    tlogport=`env | grep server.port | cut -d"=" -f2`
    [ ${dburl} ] && dburl=${dburl} yq -i '.spring.datasource.url=strenv(dburl)' ${configpath}
    [ ${datausername} ] && datausername=${datausername} yq -i '.spring.datasource.username=strenv(datausername)' ${configpath}
    [ ${datapassword} ] && datapassword=${datapassword} yq -i '.spring.datasource.password=strenv(datapassword)' ${configpath}
    [ ${redisdatabase} ] && redisdatabase=${redisdatabase} yq -i '.spring.redis.database=strenv(redisdatabase)' ${configpath}
    [ ${redishost} ] && redishost=${redishost} yq -i '.spring.redis.host=strenv(redishost)' ${configpath}
    [ ${redisport} ] && redisport=${redisport} yq -i '.spring.redis.port=strenv(redisport)' ${configpath}
    [ ${redispassword} ] && redispassword=${redispassword} yq -i '.spring.redis.password=strenv(redispassword)' ${configpath}
    [ ${promhost} ] && promhost=${promhost} yq -i '.Prometheus.host=strenv(promhost)' ${configpath}
    [ ${promport} ] && promport=${promport} yq -i '.Prometheus.port=strenv(promport)' ${configpath}
    [ ${tlogport} ] && tlogport=${tlogport} yq -i '.server.port=strenv(tlogport)' ${configpath}
    java -Djava.security.egd=file:/dev/./urandom -jar ./lib/TLog.jar /license.json

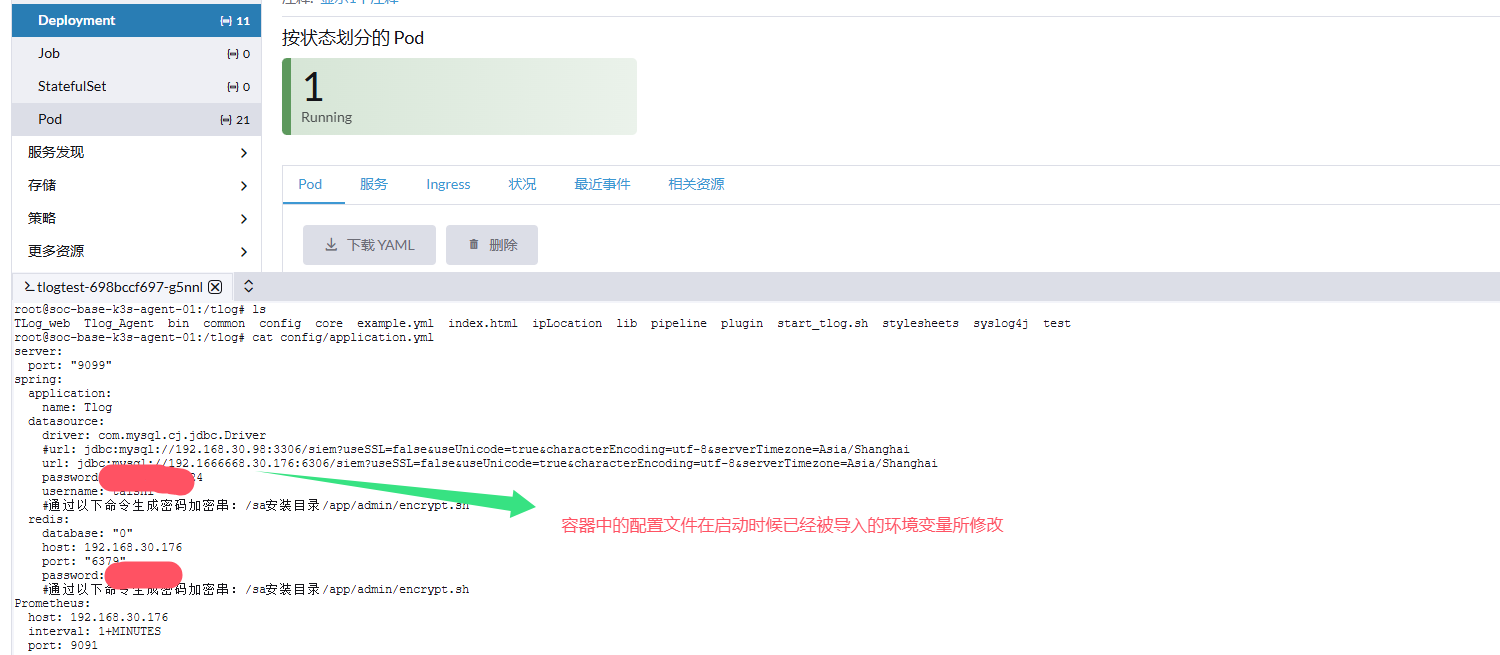
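Two details of the env | grep key | cut -d"=" -f2 pattern in the script above can cause trouble:

- grep treats the dots in a name such as spring.datasource.url as regex wildcards, and it also matches longer names that merely contain the key.
- cut -f2 drops everything after a second "=", and JDBC URLs with query parameters often contain one.

This is a minimal sketch of an exact-match lookup. It assumes single-line values, and env_value is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read NAME=VALUE lines (e.g. the output of `env`) on stdin
# and print the value whose name equals $1 exactly. The name is compared as a
# plain string (dots are not wildcards), and the value is kept whole even if it
# contains "=". Multi-line values are not supported.
env_value() {
    awk -v key="$1" 'index($0, key "=") == 1 { print substr($0, length(key) + 2); exit }'
}

# Usage in the startup script (variable and file names from the script above):
#   dburl=$(env | env_value spring.datasource.url)
#   [ -n "$dburl" ] && dburl=$dburl yq -i '.spring.datasource.url=strenv(dburl)' ./config/application.yml
```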

Set the environment variables

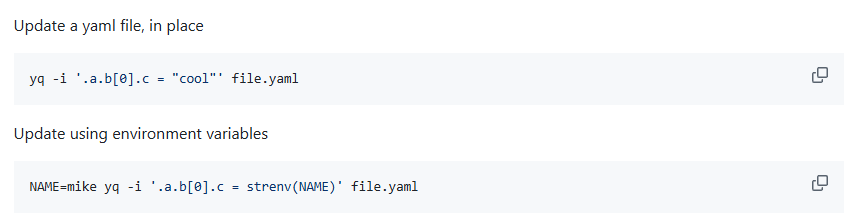

The yq command-line tool can modify the configuration items in a YAML file dynamically.

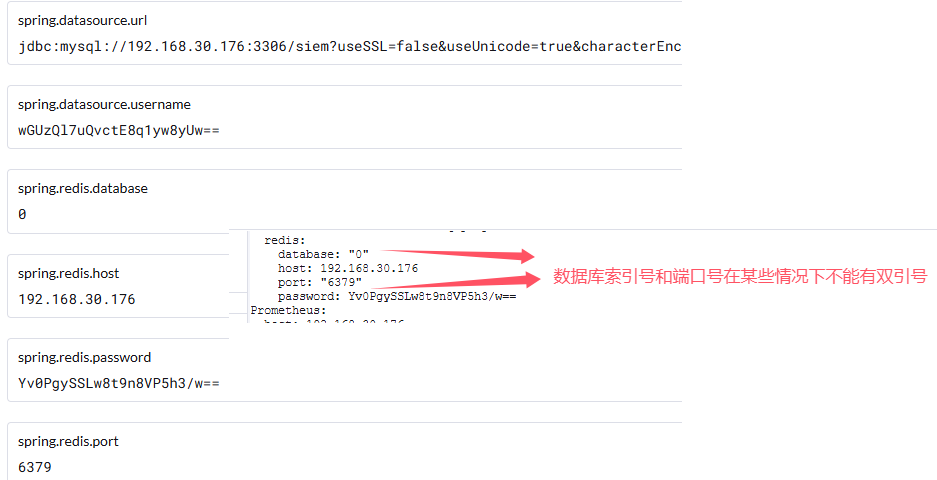

How yq handles string and numeric values

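Every environment variable is a string. When a value is set with strenv(), yq therefore writes numbers as strings, wrapping numeric values such as a port in double quotes. Use env() for values that must stay numeric: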

[ ${redishost} ] && redishost=${redishost} yq -i '.spring.redis.host=strenv(redishost)' ${configpath}

[ ${redisport} ] && redisport=${redisport} yq -i '.spring.redis.port=env(redisport)' ${configpath}

env(): parses the value as YAML, so a number stays a number (6379 is written without quotes).

strenv(): always treats the value as a string, so a number is written quoted ("6379").
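The difference is easy to see with --null-input, which builds a document from scratch instead of reading a file. This sketch assumes mikefarah/yq v4 is on the PATH. The output shown in the comments is what v4 prints with its default two-space indentation.

```shell
#!/bin/sh
# Assumes mikefarah/yq v4. --null-input starts from an empty document.
# The VAR=value prefix exports the variable to yq only; env() and strenv()
# read yq's environment, so an unexported shell variable would not be seen.

# env(): the text is parsed as YAML, so 6379 becomes an integer.
redisport=6379 yq --null-input '.spring.redis.port = env(redisport)'
# spring:
#   redis:
#     port: 6379

# strenv(): the text is always a string, so yq quotes it to keep it one.
redisport=6379 yq --null-input '.spring.redis.port = strenv(redisport)'
# spring:
#   redis:
#     port: "6379"
```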

The revised start_tlog.sh uses env() for numeric values and ${var#*=} instead of cut for values that may contain "=":

    #!/bin/sh
    configpath="./config/application.yml"

    # ${var#*=} strips only up to the first "=", so values that contain "=" stay intact
    datasoureurl=`env | grep spring.datasource.url`
    dburl=${datasoureurl#*=}
    datausername=`env | grep spring.datasource.username`
    datausername=${datausername#*=}
    datapassword=`env | grep spring.datasource.password`
    datapassword=${datapassword#*=}
    redisdatabase=`env | grep spring.redis.database | cut -d"=" -f2`
    redishost=`env | grep spring.redis.host | cut -d"=" -f2`
    redisport=`env | grep spring.redis.port | cut -d"=" -f2`
    redispassword=`env | grep spring.redis.password`
    redispassword=${redispassword#*=}
    promhost=`env | grep Prometheus.host | cut -d"=" -f2`
    promport=`env | grep Prometheus.port | cut -d"=" -f2`
    tlogport=`env | grep server.port | cut -d"=" -f2`

    # The VAR=value prefix passes each shell variable into yq's environment,
    # where strenv() (strings) or env() (numbers) reads it
    [ -n "$dburl" ] && dburl=$dburl yq -i '.spring.datasource.url=strenv(dburl)' "$configpath"
    [ -n "$datausername" ] && datausername=$datausername yq -i '.spring.datasource.username=strenv(datausername)' "$configpath"
    [ -n "$datapassword" ] && datapassword=$datapassword yq -i '.spring.datasource.password=strenv(datapassword)' "$configpath"
    [ -n "$redisdatabase" ] && redisdatabase=$redisdatabase yq -i '.spring.redis.database=env(redisdatabase)' "$configpath"
    [ -n "$redishost" ] && redishost=$redishost yq -i '.spring.redis.host=strenv(redishost)' "$configpath"
    [ -n "$redisport" ] && redisport=$redisport yq -i '.spring.redis.port=env(redisport)' "$configpath"
    [ -n "$redispassword" ] && redispassword=$redispassword yq -i '.spring.redis.password=strenv(redispassword)' "$configpath"
    [ -n "$promhost" ] && promhost=$promhost yq -i '.Prometheus.host=strenv(promhost)' "$configpath"
    [ -n "$promport" ] && promport=$promport yq -i '.Prometheus.port=env(promport)' "$configpath"
    [ -n "$tlogport" ] && tlogport=$tlogport yq -i '.server.port=env(tlogport)' "$configpath"

    # exec replaces the shell, so java runs as PID 1 and receives the container's stop signal
    exec java -Djava.security.egd=file:/dev/./urandom -jar ./lib/TLog.jar /license.json

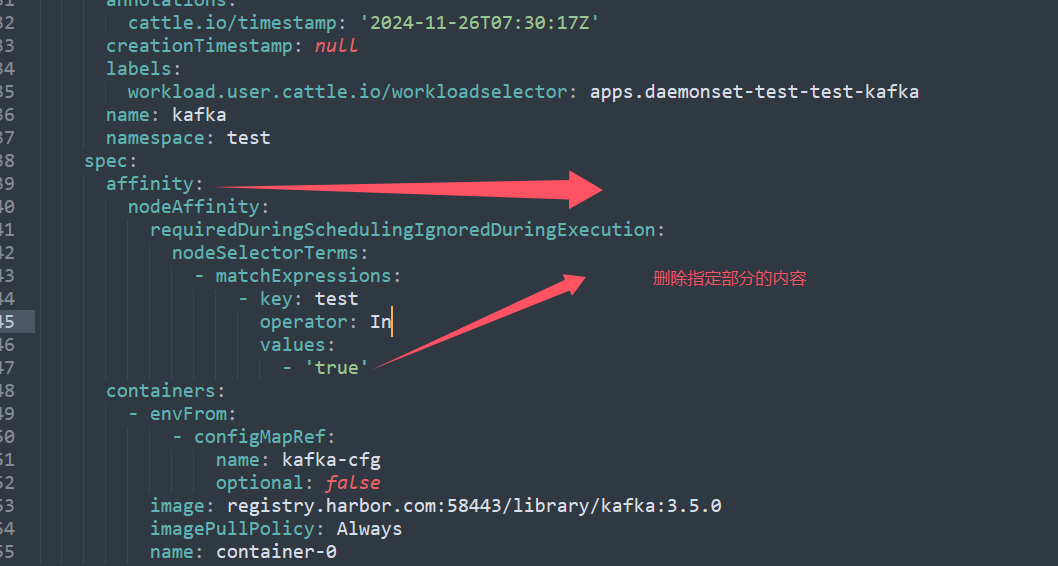
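The ten near-identical yq lines in the script above differ only in the variable name and the operator. Here, each yq path is simply "." followed by the variable name, so the updates can also be driven from a small table. This is a sketch under the following assumptions:

- mikefarah/yq v4 is installed.
- The variables use the same dotted names as above.
- A hypothetical YQ variable can override the binary, so that YQ=echo dry-runs the loop.

The function name apply_env_to_yaml is also hypothetical.

```shell
#!/bin/sh
# Sketch: table-driven version of the yq updates in start_tlog.sh.
# Assumptions: mikefarah/yq v4; each yq path is "." + the variable name;
# YQ is a hypothetical override (YQ=echo prints the commands instead).

# "variable operator" pairs: strenv() keeps strings, env() keeps numbers.
mapping='spring.datasource.url strenv
spring.datasource.username strenv
spring.datasource.password strenv
spring.redis.database env
spring.redis.host strenv
spring.redis.port env
spring.redis.password strenv
Prometheus.host strenv
Prometheus.port env
server.port env'

# Reads NAME=VALUE lines (normally `env`) on stdin; $1 is the YAML file.
apply_env_to_yaml() {
    env_lines=$(cat)
    printf '%s\n' "$mapping" | while read -r name op; do
        # Exact-name match; the value keeps any "=" it contains.
        value=$(printf '%s\n' "$env_lines" |
            awk -v key="$name" 'index($0, key "=") == 1 { print substr($0, length(key) + 2); exit }')
        [ -n "$value" ] || continue
        # The VALUE=... prefix exports the value to this yq process only;
        # </dev/null keeps yq from consuming the loop's input.
        VALUE=$value "${YQ:-yq}" -i ".$name = $op(VALUE)" "$1" </dev/null
    done
}

env | apply_env_to_yaml ./config/application.yml
# ...then exec java as in the script above
```

Adding a setting then means adding one table line instead of two script lines.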

Deleting a specific section of text

sed '/affinity:/,/containers:/d' test-kafka.yaml

This deletes every line from the one containing affinity: through the one containing containers:, including both matched lines.

Because the containers: line itself is also deleted, one line too many is removed, so this does not meet the requirement.

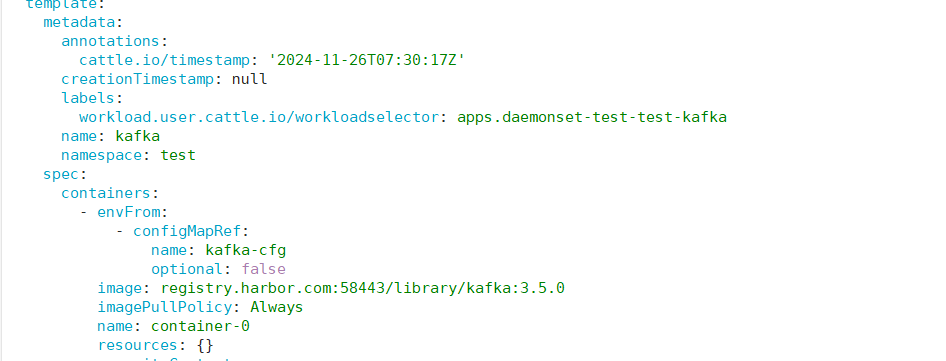
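If sed is still preferred, the same range can be kept while sparing the closing line, by running d only on lines that do not match the end pattern. This is a minimal sketch. It works on raw text and does not understand YAML indentation. If no containers: line follows affinity:, the range runs to the end of the file, which is one reason a YAML-aware tool is safer. strip_affinity is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: delete from the "affinity:" line up to, but not
# including, the next "containers:" line. Within the range, d runs only on
# lines that do not match "containers:", so the closing line is printed.
# Works on plain text: it does not understand YAML indentation.
strip_affinity() {
    sed '/affinity:/,/containers:/{/containers:/!d;}' "$@"
}

# Usage: strip_affinity test-kafka.yaml > test-kafka-noaffinity.yaml
```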

Using yq instead

Check whether the YAML document contains the property to be deleted (yq prints null if it does not):

yq e '.spec.template.spec.affinity' /home/admin/ymls/test-kafka2.yaml

./yq -i eval 'del(.spec.template.spec.affinity)' /home/admin/ymls/test-kafka2.yaml

yq eval 'del(.metadata.creationTimestamp, .metadata.resourceVersion, .metadata.uid, .metadata.annotations)' /file

Apply the same operation to every file in a directory

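ls prints bare file names, so run the command from inside the directory; otherwise yq cannot find the files:

cd /home/admin/ymls && ls | xargs -I '{}' /data/persistence-data/yq -i eval 'del(.spec.template.spec.affinity, .status)' {}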
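A glob loop is an alternative to ls | xargs. It yields full paths, so it works from any working directory and with file names that contain spaces. This is a minimal sketch that assumes mikefarah/yq v4. The function yq_inplace_all and the YQ override (which allows a dry run with YQ=echo) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: yq_inplace_all DIR EXPRESSION
# Runs one yq expression in place on every *.yaml file in DIR and stops at
# the first failure. YQ overrides the yq binary (e.g. YQ=echo for a dry run).
yq_inplace_all() {
    dir=$1 expr=$2
    for f in "$dir"/*.yaml; do
        [ -e "$f" ] || continue          # the glob matched nothing
        "${YQ:-yq}" -i eval "$expr" "$f" || return 1
    done
}

# Usage with the paths from the command above:
#   YQ=/data/persistence-data/yq yq_inplace_all /home/admin/ymls 'del(.spec.template.spec.affinity, .status)'
```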

Copying and extracting an archive in a Dockerfile

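ADD test1.jar /usr: when the source is a local tar archive (uncompressed, gzip, bzip2 or xz), ADD unpacks it into /usr, so no separate extraction command is needed. Docker decides this from the file's content, not its name. A real .jar file is a zip archive, so ADD does not unpack it and copies it as-is instead.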

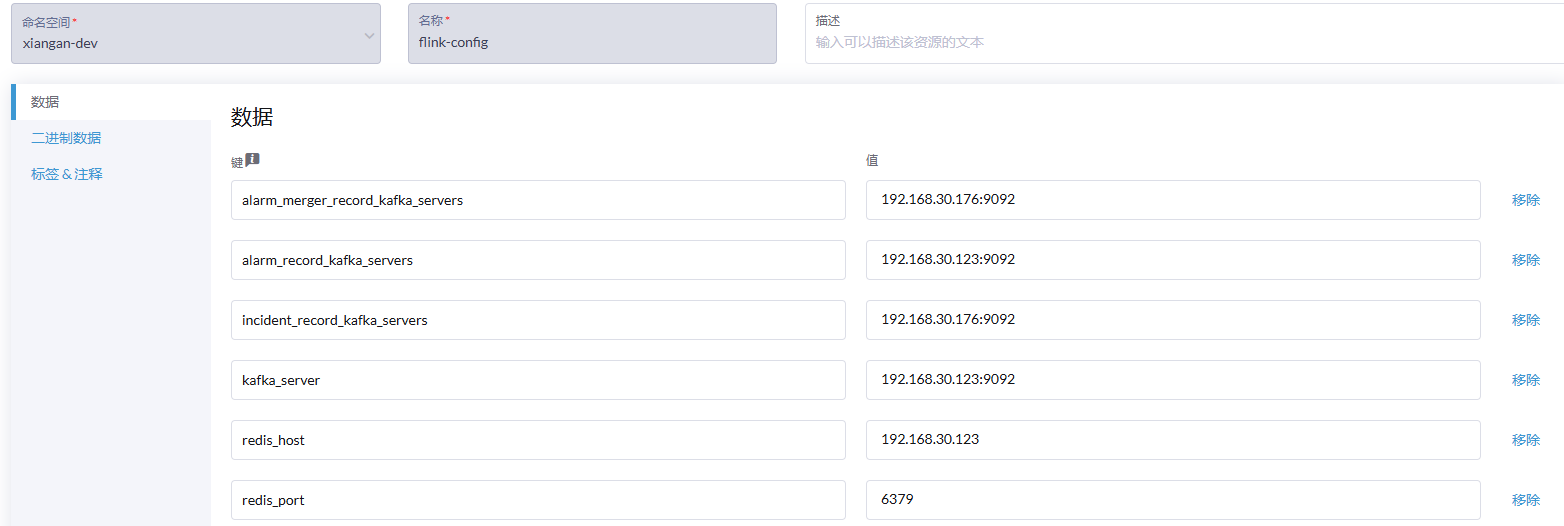

Reading a ConfigMap in shell to modify an ordinary configuration file

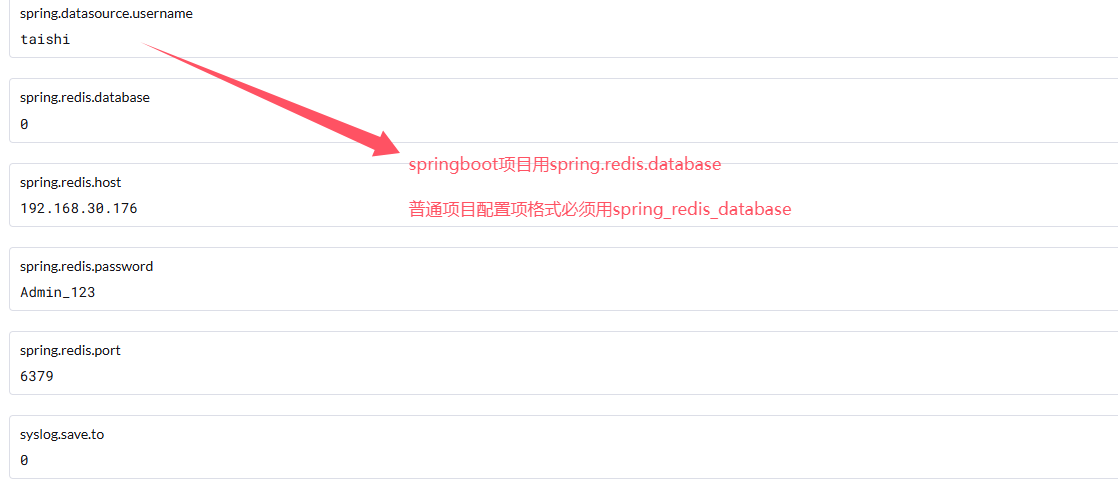

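Spring Boot configuration keys can use dots to express nesting, but the environment variable keys here could not. When they were configured with dotted names, running env inside the container showed none of them.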

So the environment variable keys use _ as the separator instead (for example, redis_host for redis.host).

Configuration steps

1.Create the ConfigMap resource

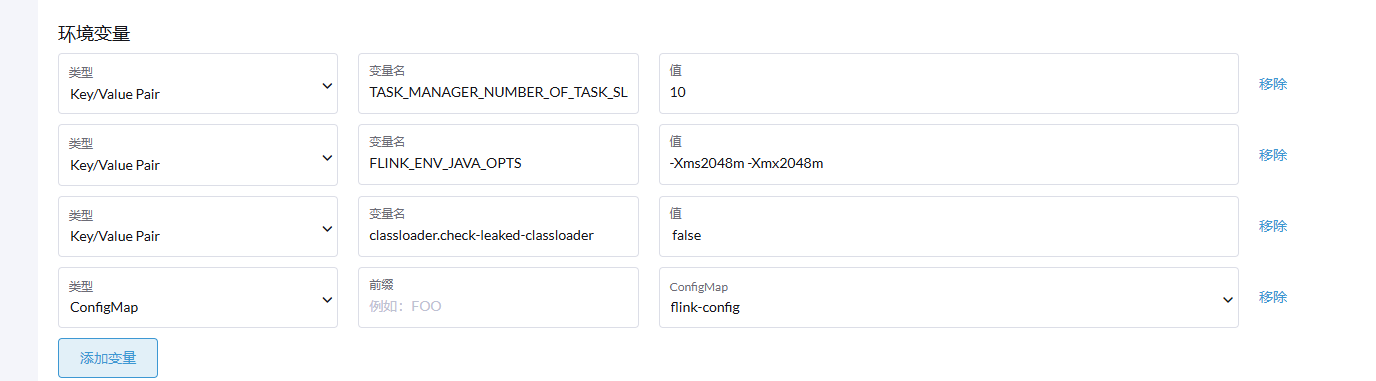

2.Reference the ConfigMap

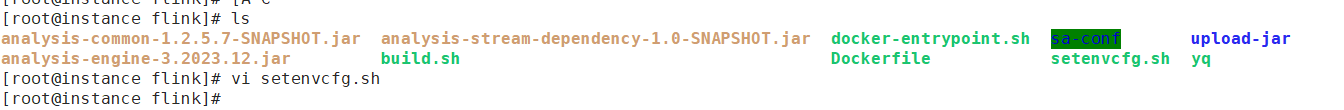

3.Write the Dockerfile

    ENV TZ=Asia/Shanghai
    RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo "$TZ" > /etc/timezone
    # Configure container
    # Double quotes let the shell expand $FLINK_HOME; \$a is sed's "append after the last line" command
    RUN set -ex; \
        CONF_FILE="$FLINK_HOME/conf/flink-conf.yaml"; \
        mkdir $FLINK_HOME/upload-jar; mkdir -p /opt/sa-conf; \
        sed -i "\$a web.upload.dir: $FLINK_HOME/upload-jar" "$CONF_FILE"
    ADD analysis-*.jar /opt/flink/lib/
    ADD sa-conf/* /opt/sa-conf/
    COPY upload-jar /opt/flink/upload-jar/
    COPY docker-entrypoint.sh /
    COPY yq /usr/bin/
    COPY setenvcfg.sh /
    ENTRYPOINT ["/docker-entrypoint.sh"]
    EXPOSE 6123 8081
    CMD ["help"]

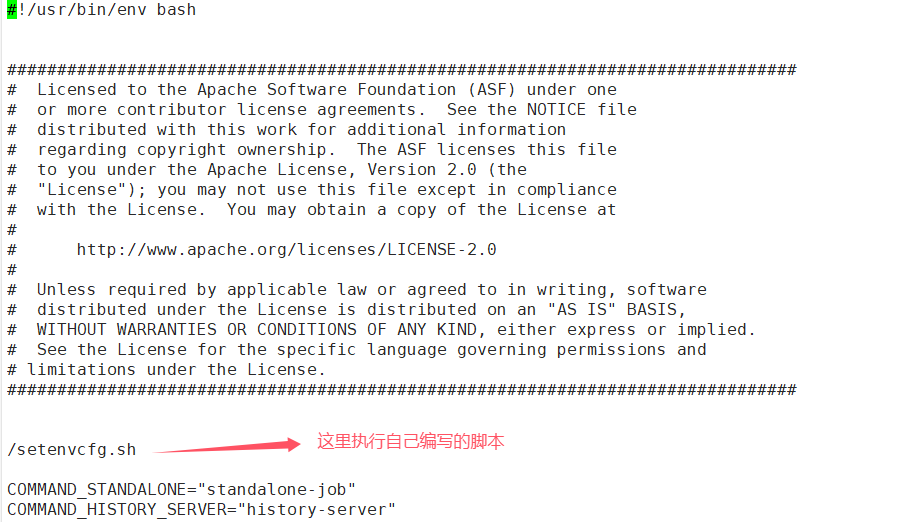

4.Modify docker-entrypoint.sh

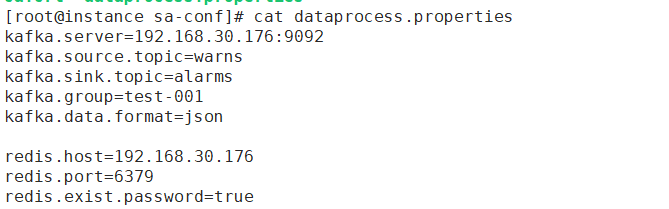

5.Contents of the original configuration file

    [root@instance sa-conf]# cat dataprocess.properties
    kafka.server=192.168.30.176:9092
    kafka.source.topic=warns
    kafka.sink.topic=alarms
    kafka.group=test-001
    kafka.data.format=json
    redis.host=192.168.30.176
    redis.port=6379
    redis.exist.password=true
    redis.password=Yv0PgySSLw8t9n8VP5h3/w==
    #redis.password=1Kp2jcS5d8Dd06ywkTf5Hw==
    #redis.password=VBi20yRLk0rZi5YafLhG9w==
    #redis.cluster=true
    ### Upper limit on the number of items an aggregate function aggregates
    aggregate.connect.upper.number=1000
    #######################################################
    ## Alarm Kafka configuration
    alarm.record.kafka.topic=es_alarm
    alarm.record.kafka.servers=192.168.30.176:9092
    alarm.record.kafka.consumer.group.id=alarm_20201205_001
    ## Alarm-merge Kafka configuration

6.Implement setenvcfg.sh

    #!/bin/sh
    configpath="/opt/sa-conf/dataprocess.properties"
    kafkaserver=`env | grep ^kafka_server`
    kafkaserver=${kafkaserver#*=}
    redishost=`env | grep redis_host`
    redishost=${redishost#*=}
    redisport=`env | grep redis_port`
    redisport=${redisport#*=}
    redispassword=`env | grep redis_password`
    redispassword=${redispassword#*=}
    alarmkafka=`env | grep alarm_record_kafka_servers`
    alarmkafka=${alarmkafka#*=}
    mergerkafka=`env | grep alarm_merger_record_kafka_servers`
    mergerkafka=${mergerkafka#*=}
    incidentkafka=`env | grep incident.record.kafka.servers`
    incidentkafka=${incidentkafka#*=}

    # "|" is the sed delimiter because values such as the Redis password contain "/";
    # "^" leaves commented-out lines such as #redis.password=... unchanged
    [ -n "$redishost" ] && sed -i "s|^redis.host=.*|redis.host=${redishost}|" "$configpath"
    [ -n "$redisport" ] && sed -i "s|^redis.port=.*|redis.port=${redisport}|" "$configpath"
    [ -n "$redispassword" ] && sed -i "s|^redis.password=.*|redis.password=${redispassword}|" "$configpath"
    [ -n "$kafkaserver" ] && sed -i "s|^kafka.server=.*|kafka.server=${kafkaserver}|" "$configpath"
    [ -n "$alarmkafka" ] && sed -i "s|^alarm.record.kafka.servers=.*|alarm.record.kafka.servers=${alarmkafka}|" "$configpath"
    [ -n "$mergerkafka" ] && sed -i "s|^alarm.merger.record.kafka.servers=.*|alarm.merger.record.kafka.servers=${mergerkafka}|" "$configpath"
    [ -n "$incidentkafka" ] && sed -i "s|^incident.record.kafka.servers=.*|incident.record.kafka.servers=${incidentkafka}|" "$configpath"

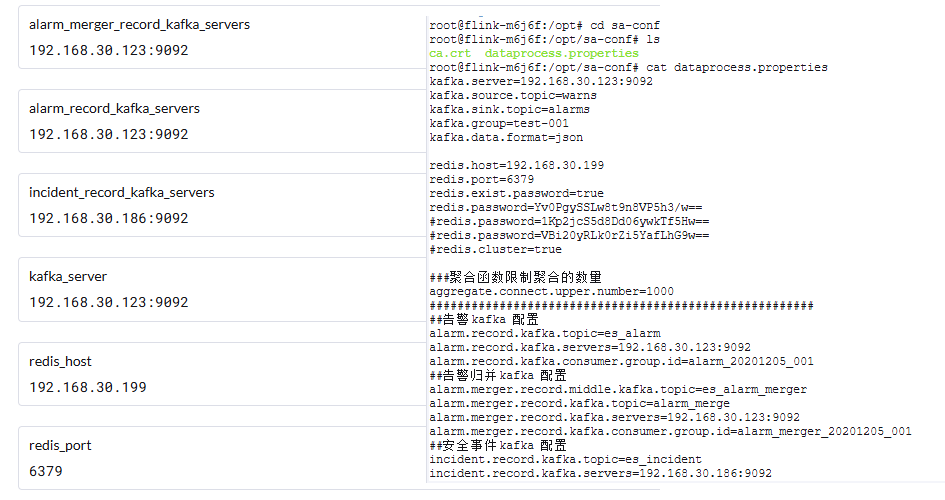
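The ConfigMap keys use _ instead of ".", so they are valid shell variable names and can be read directly as $redis_host without env | grep. Below is a minimal sketch of a reusable helper for key=value properties files. It assumes the "." to "_" naming convention described above, and set_prop is a hypothetical name. The helper:

- anchors the match at the start of the line, so commented-out #redis.password= lines are left alone;
- uses | as the sed delimiter, because values such as the base64 Redis password contain /;
- escapes |, & and \ in the value;
- leaves the file untouched when the value is empty.

```shell
#!/bin/sh
# Hypothetical helper: set_prop FILE KEY VALUE
# Replaces the value of an uncommented "KEY=" line in a properties file.
# Does nothing when VALUE is empty, so unset variables keep the file's defaults.
# Uses GNU sed's in-place option (sed -i), as the rest of the article does.
set_prop() {
    file=$1 key=$2 value=$3
    [ -n "$value" ] || return 0
    # Escape regex dots in the key and sed-special characters in the value.
    key_re=$(printf '%s\n' "$key" | sed 's/\./\\./g')
    value_esc=$(printf '%s\n' "$value" | sed 's/[|&\\]/\\&/g')
    sed -i "s|^${key_re}=.*|${key}=${value_esc}|" "$file"
}

# Usage with underscore-named ConfigMap variables:
#   configpath=/opt/sa-conf/dataprocess.properties
#   set_prop "$configpath" redis.host "$redis_host"
#   set_prop "$configpath" redis.password "$redis_password"
#   set_prop "$configpath" alarm.record.kafka.servers "$alarm_record_kafka_servers"
```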

7.Check the result

When the container starts, it first modifies the configuration file and then starts the service process.

After the configuration file was modified, the service started successfully.

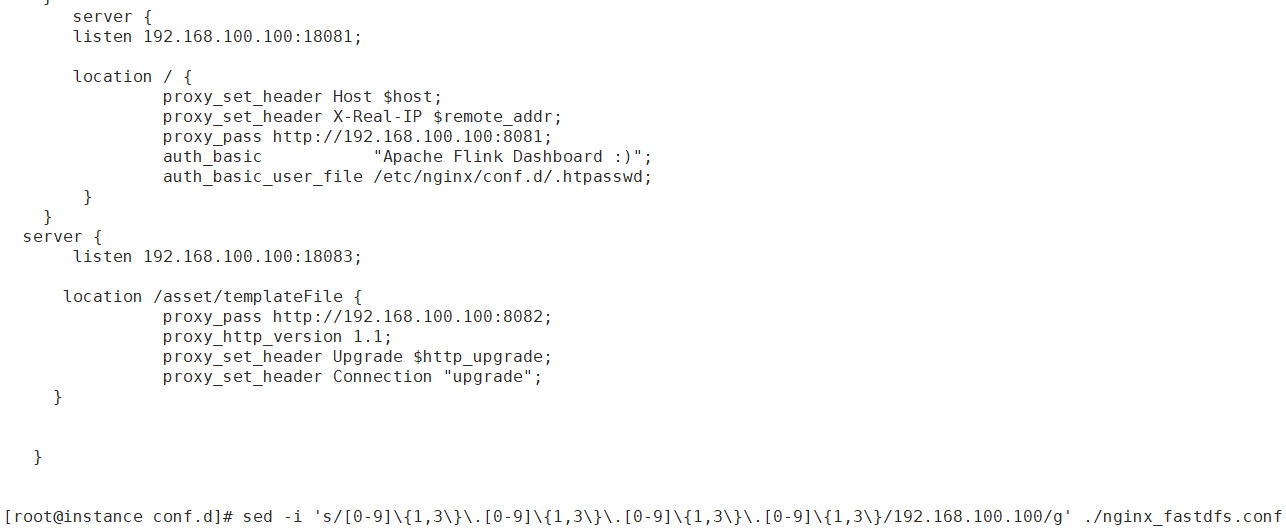

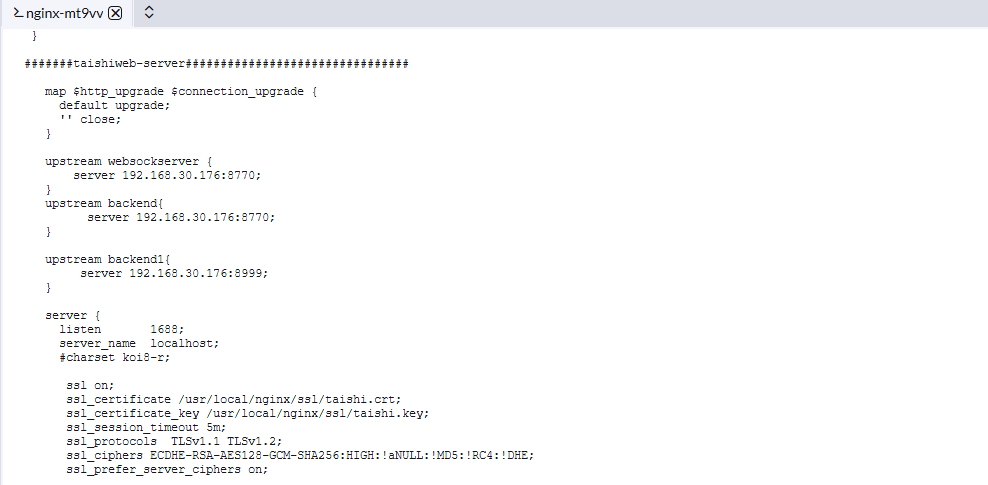

Dynamically configuring an nginx configuration file with shell

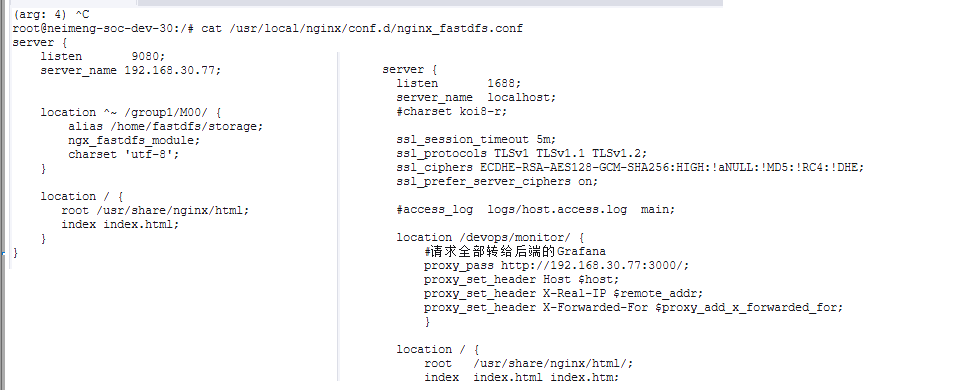

sed -i 's/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/192.168.100.100/g' ./nginx_fastdfs.conf

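This replaces every IP address in the nginx configuration file with 192.168.100.100. In the container's startup script, the addresses are instead replaced with one passed in through an environment variable.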

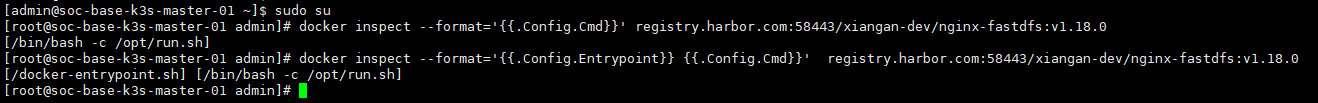
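Without a guard, an unset environment variable would make the substitution above replace every address with an empty string. This is a minimal sketch with such a guard, and replace_ipv4 is a hypothetical name. Like the original pattern, it matches any dotted quad of 1 to 3 digit groups (including 0.0.0.0 or 127.0.0.1), not only valid addresses. It also assumes the new value is a plain address or host name without / or &.

```shell
#!/bin/sh
# Hypothetical helper: replace_ipv4 FILE NEW_ADDRESS
# Rewrites every dotted quad (1-3 digits per group) in FILE to NEW_ADDRESS,
# and refuses to run when NEW_ADDRESS is empty.
replace_ipv4() {
    file=$1 new=$2
    if [ -z "$new" ]; then
        echo "replace_ipv4: no address given, leaving $file unchanged" >&2
        return 1
    fi
    sed -i "s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/$new/g" "$file"
}

# Usage in a startup script, with NGINX_SERVER taken from the container environment:
#   replace_ipv4 /usr/local/nginx/conf.d/nginx_fastdfs.conf "$NGINX_SERVER"
```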

1.First, look up the image's startup command (its Entrypoint and Cmd)

docker inspect --format='{{.Config.Entrypoint}} {{.Config.Cmd}}' registry.harbor.com:58443/xiangan-dev/nginx-fastdfs:v1.18.0

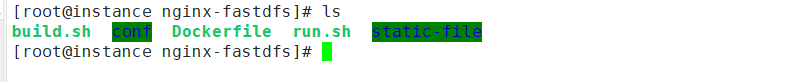

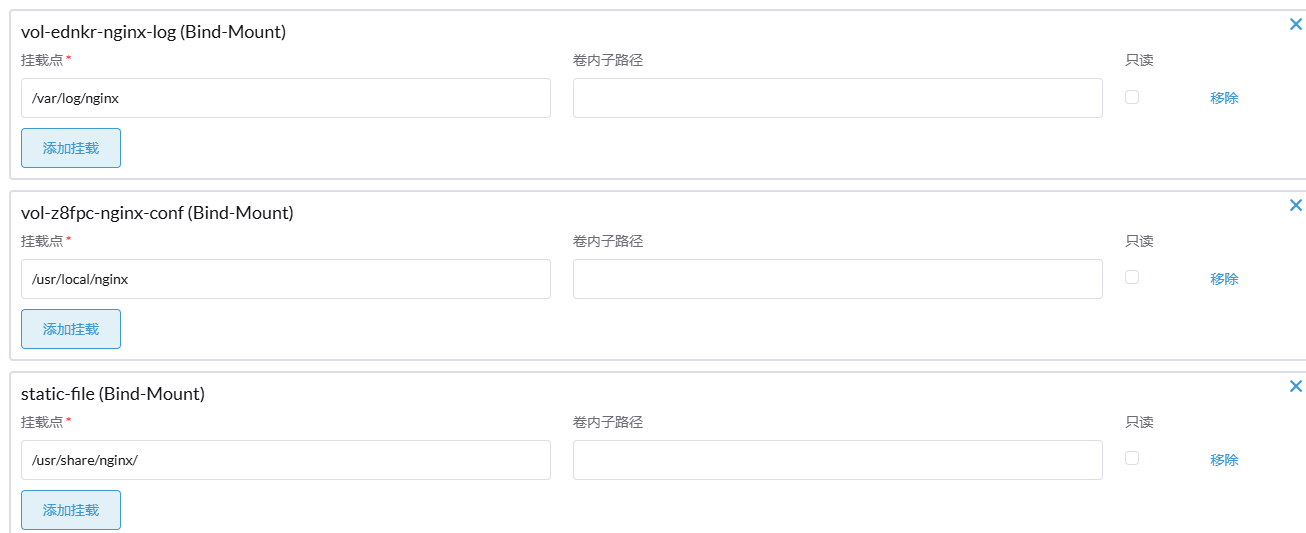

    ENV TZ=Asia/Shanghai
    RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo "$TZ" > /etc/timezone
    # Configure container
    COPY conf /usr/local/nginx/
    COPY static-file /usr/share/nginx/
    COPY run.sh /opt/

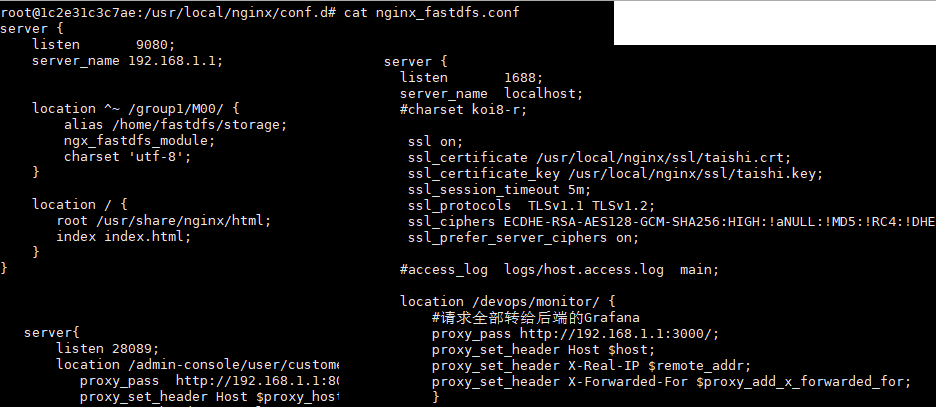

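    #!/bin/bash
    # Point the FastDFS nginx module at the tracker given in TRACKER_SERVER
    [ -n "$TRACKER_SERVER" ] && sed -i "s/tracker_server=0.0.0.0:22122/tracker_server=${TRACKER_SERVER}/g" /etc/fdfs/mod_fastdfs.conf
    # NGINX_SERVER is a valid shell variable name, so it can be read directly
    echo "${NGINX_SERVER}"
    if [ -n "$NGINX_SERVER" ]; then
        # Replace every IPv4 address in the nginx config with NGINX_SERVER
        sed -i "s/[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}/$NGINX_SERVER/g" /usr/local/nginx/conf.d/nginx_fastdfs.conf
        # Also replace a literal ${a.b.c.d} placeholder, braces included
        sed -i "s/\${[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}}/${NGINX_SERVER}/g" /usr/local/nginx/conf.d/nginx_fastdfs.conf
    fi
    echo "IP address replacement finished"
    nginx
    # nginx detaches into the background by default; tail keeps the container's main process running
    tail -f /var/log/nginx/access.log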

Troubleshooting

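After the image was pushed to the registry, the updated static files did not take effect in containers started from the container management system.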

1.Start the image locally to investigate

docker run -it --entrypoint /bin/bash registry.harbor.com:58443/xiangan-dev/nginx-fastdfs:v1.18.0

2.After the container was restarted in Rancher, the configuration file changes still did not take effect

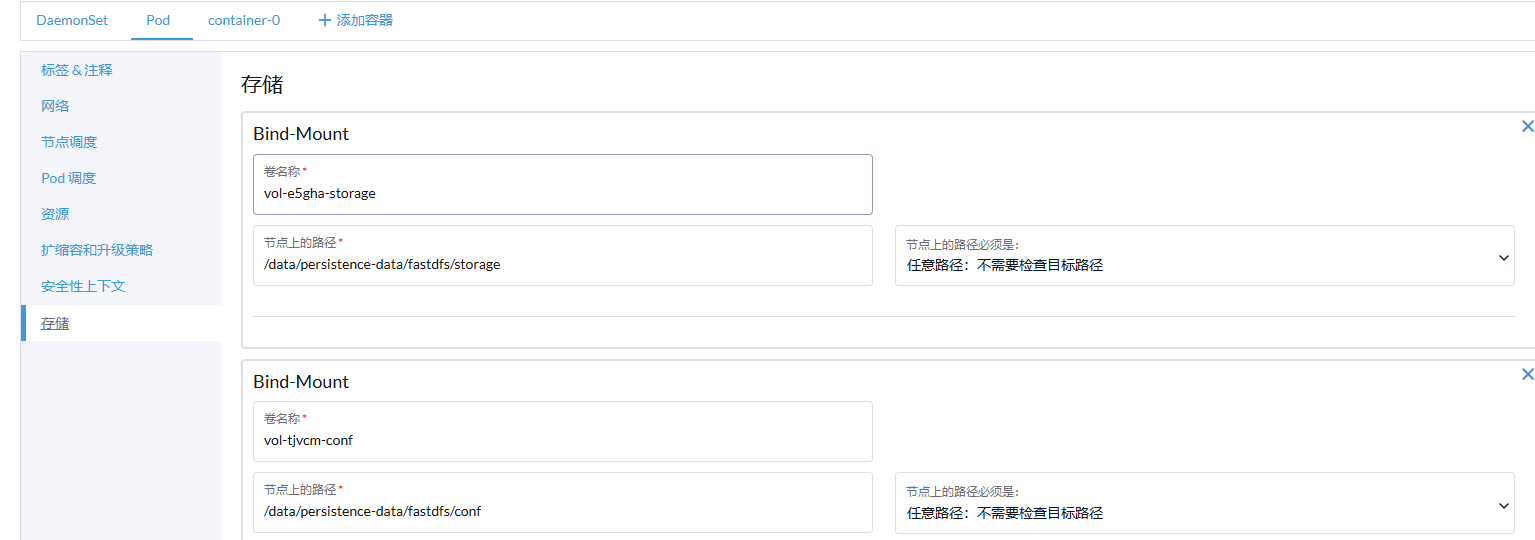

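3.The cause: a host directory was mounted into the container when it started. A mount hides whatever the image contains at that path, so the changes the Dockerfile made to the image were never visible.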

4.After the container's volume mount configuration was removed and the container was restarted, the changes took effect.

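5.In summary, the updated image worked when it was pushed to the registry, pulled, and started locally. Containers started from the container management system did not pick up the changes, because that system mounted a host directory over the image's files.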
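The mount that hides the image's files can be confirmed from inside the running container. This is a Linux-only sketch. It reads /proc/self/mountinfo, whose fifth field is the mount point, and is_mount_point is a hypothetical name. Where util-linux is installed, mountpoint -q does the same job. Check the exact directory that was mounted, because a mount on a subdirectory does not make its parent a mount point.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 is a mount point in this mount namespace.
# Linux only: field 5 of /proc/self/mountinfo is the mount point. Paths
# containing spaces appear escaped as \040 and are not handled here, and the
# path must be given without a trailing slash.
is_mount_point() {
    awk -v p="$1" '$5 == p { found = 1; exit } END { exit !found }' /proc/self/mountinfo
}

# Usage inside the running container, e.g. for a directory the Dockerfile copies into:
#   is_mount_point /usr/local/nginx && echo "/usr/local/nginx is a mount; the image's files there are hidden"
```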

This article is from cnblogs (博客园), author: 不懂123. Please credit the original link when reposting: https://www.cnblogs.com/yxh168/p/18548686