ES cluster migration operations

Migrating a cluster with Logstash

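Check the configuration syntax first:

/home/secure/logstash-7.8.1/bin/logstash -f /home/secure/logstash-7.8.1/config/event0515.conf -t --log.level=debug

-t (short for --config.test_and_exit) parses the configuration file, reports syntax errors and exits without running the pipeline. --log.level=debug adds detailed log output.

Then run the migration, writing all output to a log file:

/home/secure/logstash-7.8.1/bin/logstash -f /home/secure/logstash-7.8.1/config/event0515.conf > /home/secure/ca/event0515.log 2>&1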
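The check-then-run sequence above can be wrapped in a small helper so the pipeline only starts when the syntax check passes. This is a minimal sketch, not the author's script. `run_pipeline`, the `DRY_RUN` switch and the `LOGSTASH_HOME` default are hypothetical names chosen for illustration.

```shell
#!/bin/bash
# Hypothetical wrapper: syntax-check a pipeline with -t, then run it with
# stdout and stderr redirected to a log file.
LOGSTASH_HOME=${LOGSTASH_HOME:-/home/secure/logstash-7.8.1}

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: run_pipeline <pipeline.conf> <logfile>
run_pipeline() {
  local conf=$1 log=$2
  # -t (--config.test_and_exit) parses the config and exits non-zero on errors
  if ! run "$LOGSTASH_HOME/bin/logstash" -f "$conf" -t; then
    echo "config check failed: $conf" >&2
    return 1
  fi
  # Real run. The redirection happens here, so dry-run mode prints it explicitly
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $LOGSTASH_HOME/bin/logstash -f $conf > $log 2>&1"
  else
    "$LOGSTASH_HOME/bin/logstash" -f "$conf" > "$log" 2>&1
  fi
}
```

For example, `run_pipeline /home/secure/logstash-7.8.1/config/event0515.conf /home/secure/ca/event0515.log` performs both steps. With `DRY_RUN=1` the commands are only printed.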

SSL can be used for encrypted transport, with certificate verification switched off on the output side:

    input {
      elasticsearch {
        hosts => ["10.30.90.147:9200"]
        user => "elastic"
        password => "11111"
        index => "full_flow_file_202311,full_flow_file_202312,prob_file_description_202402"
        # index => "full_flow*,-.security*"   # wildcard patterns match several indices; "-" excludes
        docinfo => true
        slices => 1
        size => 10000
        ssl => true
        ca_file => "/home/secure/147/ca.crt"
      }
    }
    filter {
      mutate {
        remove_field => ["@timestamp", "@version"]
      }
    }
    output {
      elasticsearch {
        hosts => ["https://121.229.203.43:9200"]
        user => "elastic"
        password => "111111"
        index => "%{[@metadata][_index]}"
        ilm_enabled => false
        ssl => true
        # In this Logstash version, putting this option in the input block fails with "Unknown setting"
        ssl_certificate_verification => false
      }
    }

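Resetting the ES cluster passwords

1. Edit the ES configuration to disable security (xpack.security.enabled: false in elasticsearch.yml), then restart the ES service.

2. Regenerate the keystore file. Delete the old one in the config directory, then create a new one from the bin directory: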

rm -fr elasticsearch.keystore

./elasticsearch-keystore create

chown -R admin:admin /app/taishi/elasticsearch-7.8.1/config/elasticsearch.keystore

3. Copy the new keystore to the other nodes:

scp -r elasticsearch.keystore admin@10.72.17.15:/app/taishi/elasticsearch-7.8.1/config/

scp -r elasticsearch.keystore admin@10.72.17.23:/app/taishi/elasticsearch-7.8.1/config/

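4. Delete the old security system indices. Security is still disabled at this point, so the request needs no credentials: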

curl -X DELETE "10.72.17.7:9200/.security-*"

5. Edit elasticsearch.yml to re-enable security, then remove the transport SSL password entries from the keystore:

sudo -u elasticsearch ./bin/elasticsearch-keystore remove xpack.security.transport.ssl.keystore.secure_password

sudo -u elasticsearch ./bin/elasticsearch-keystore remove xpack.security.transport.ssl.truststore.secure_password

6. Set new passwords for the built-in users:

./elasticsearch-setup-passwords interactive --batch --url https://instance:9200

7. The cluster starts up normally.

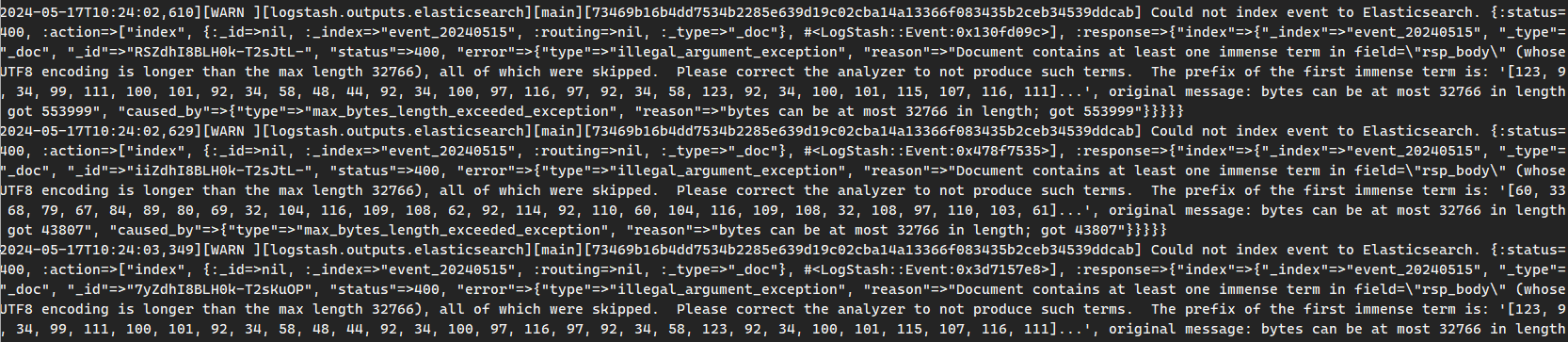
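Steps 2 and 3 can be scripted so that every node receives the same new keystore. This is a minimal sketch under stated assumptions. `NODES` defaults to the two node addresses used above, `ES_HOME` and the `admin` owner are taken from the commands above, and the function name and `DRY_RUN` switch are hypothetical. Run it on the node where the keystore is regenerated, while security is still disabled.

```shell
#!/bin/bash
# Hypothetical helper for steps 2 and 3: recreate the keystore on this node
# and copy it to the other nodes. Paths and the admin owner mirror the commands above.
ES_HOME=${ES_HOME:-/app/taishi/elasticsearch-7.8.1}
NODES=${NODES:-"10.72.17.15 10.72.17.23"}   # space-separated list of the other nodes

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

recreate_and_distribute_keystore() {
  local ks="$ES_HOME/config/elasticsearch.keystore" node
  run rm -f "$ks"
  run "$ES_HOME/bin/elasticsearch-keystore" create
  run chown admin:admin "$ks"
  # $NODES is intentionally unquoted so it splits into one address per iteration
  for node in $NODES; do
    run scp "$ks" "admin@${node}:$ES_HOME/config/"
  done
}
```

Running `DRY_RUN=1 recreate_and_distribute_keystore` first shows exactly which files are removed and copied before anything is changed.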

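Field too large during ES migration

During migration, an error reported that a field value was too long, and the documents could not be synced.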

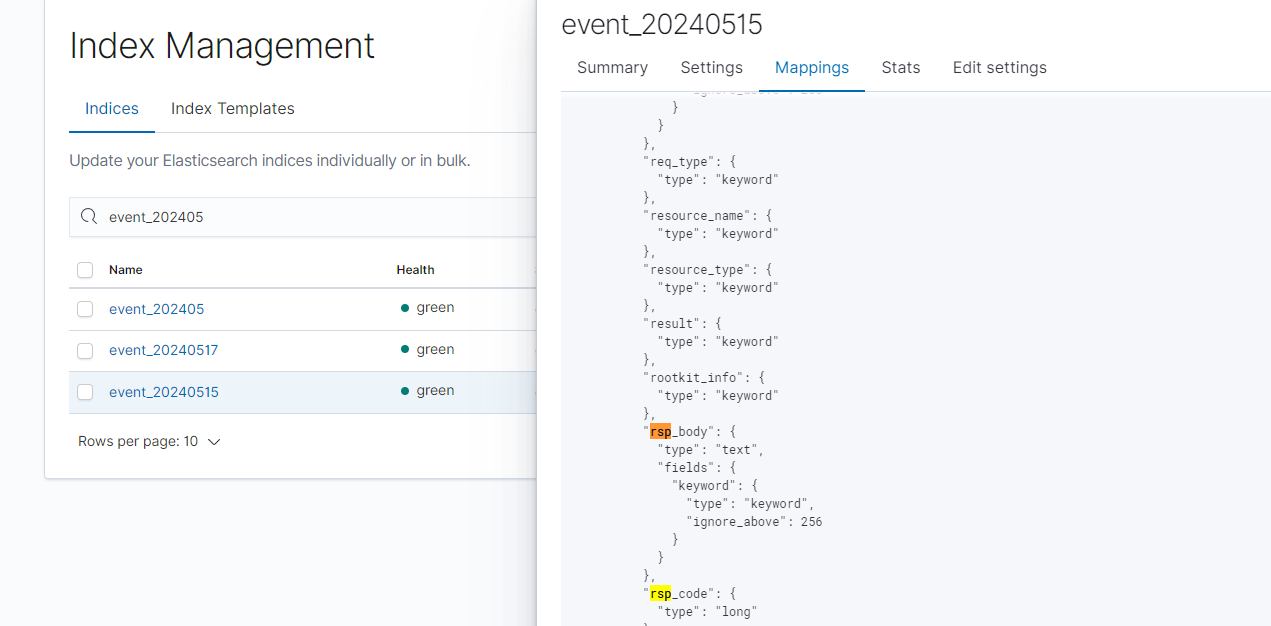

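Cause: the field has type text in the source index, but in the new index it was automatically mapped as keyword. A keyword value is indexed as a single term, and Lucene rejects terms longer than 32766 bytes.

Therefore, create the index template for the target indices before syncing, so that long fields are mapped as text: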

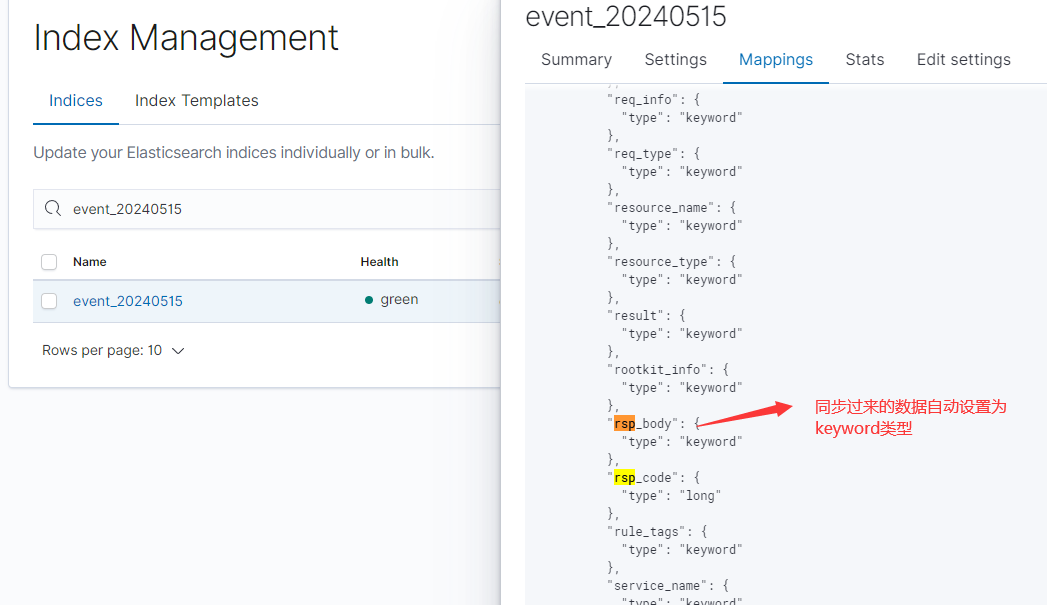

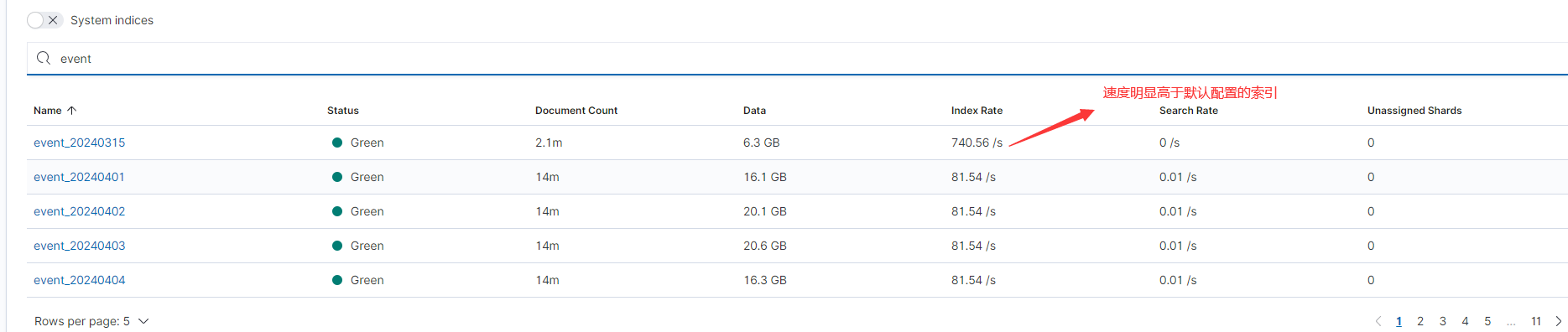
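A document is rejected as soon as one of its keyword values exceeds Lucene's 32766-byte term limit. The helper below is a hypothetical pre-check (`fits_keyword` is not part of any tool) that tests whether a single value would still fit into a keyword field. It counts bytes rather than characters, because multi-byte UTF-8 text such as Chinese reaches the limit sooner.

```shell
#!/bin/bash
# Lucene's maximum term length. A keyword value longer than this (in UTF-8 bytes)
# makes the document fail to index, unless ignore_above skips the value.
MAX_TERM_BYTES=32766

# Usage: fits_keyword <value>; returns 0 if the value fits into one keyword term.
fits_keyword() {
  local bytes
  # wc -c counts bytes, so multi-byte characters are weighed correctly
  bytes=$(( $(printf '%s' "$1" | wc -c) ))
  [ "$bytes" -le "$MAX_TERM_BYTES" ]
}
```

Fields that can exceed the limit should be mapped as text in the template, as the event.json template later in this post does for original_log and similar fields. Alternatively, they can be given ignore_above so that oversized values are stored in _source but not indexed.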

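ES migration speed optimization

When migrating, the template should specify not only the field types but also the number of primary shards and the number of replicas for the index.

Choosing suitable values for these two settings speeds up the migration. Spreading the primary shards across the nodes shares the indexing load, and with 0 replicas each document is written only once.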

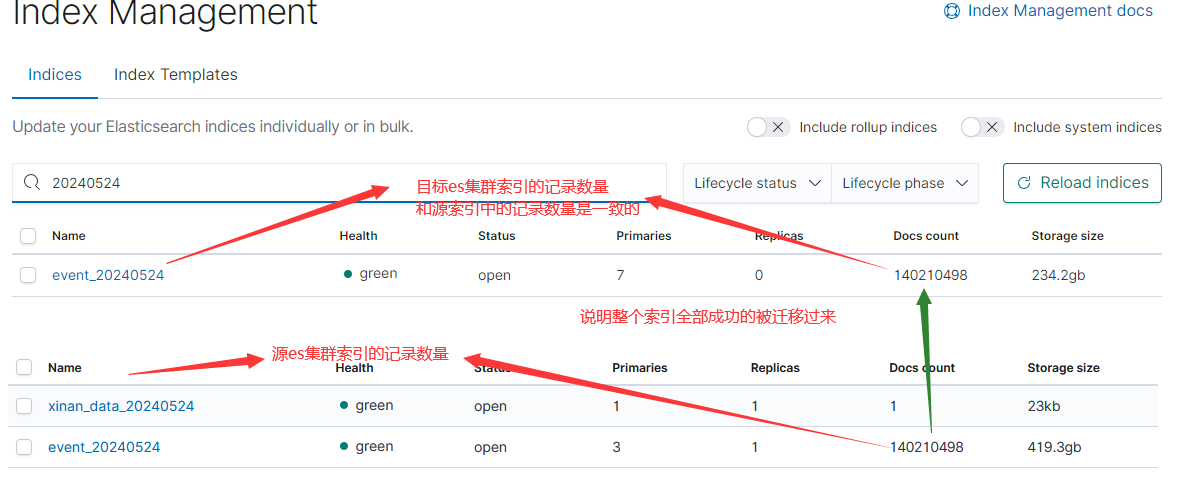
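One way to apply the replica part is to drop replicas only for the duration of the load and restore them afterwards. In the sketch below, `ES_URL`, the credentials in `ES_USER` and the restored replica count are placeholders. Disabling `refresh_interval` during the load is an extra tuning step that the original post does not mention. The shard count is left out because it can only be set when the index is created, that is, in the template.

```shell
#!/bin/bash
# Sketch: relax replica/refresh settings on a target index before bulk loading
# and restore them afterwards. ES_URL and ES_USER are placeholders.
ES_URL=${ES_URL:-https://localhost:9200}
ES_USER=${ES_USER:-elastic:changeme}

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: put_settings <index> <json body>
put_settings() {
  run curl -k -s -u "$ES_USER" -XPUT "$ES_URL/$1/_settings" \
    -H 'Content-Type: application/json' -d "$2"
}

# Before the load: no replicas and no periodic refresh (the refresh part is an added assumption).
before_migration() {
  put_settings "$1" '{"index": {"number_of_replicas": 0, "refresh_interval": "-1"}}'
}

# After the load: restore replicas (default 1 here) and reset refresh_interval to its default (null).
after_migration() {
  local index=$1 replicas=${2:-1}
  put_settings "$index" "{\"index\": {\"number_of_replicas\": ${replicas}, \"refresh_interval\": null}}"
}
```

For example, call `before_migration event_20240524` before starting Logstash and `after_migration event_20240524 1` once the sync has finished and been verified.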

Example steps for a fast ES migration

1. Create the index template

curl -k -u elastic:Transfar111 -XPUT http://121.229.22.46:9200/_template/event -H 'content-Type:application/json' -d @./event.json

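2. Raise max_result_window on the source index. A scroll batch (the Logstash size setting) may not exceed this value, which defaults to 10000:

    curl --insecure -u elastic:Trans -XPUT 'https://10.30.92.77:9200/event_20240524/_settings' -H 'Content-Type: application/json' -d '{
      "max_result_window": 100000
    }'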

3. Create the new index on the target cluster. It automatically picks up the matching index template:

curl --insecure -u elastic:Transfar -XPUT "https://121.229.255.46:9200/event_20240524"

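4. Tune the index settings

For example, make the number of primary shards equal to the number of nodes. This can only be set when the index is created, so it belongs in the template. The number of replicas can be set to 0 for the migration, and it can be changed on an existing index:

    curl --insecure -u elastic:Transfar -XPUT 'https://121.229.255.46:9200/event_20240524/_settings' -H 'Content-Type: application/json' -d '{
      "number_of_replicas": "0"
    }'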

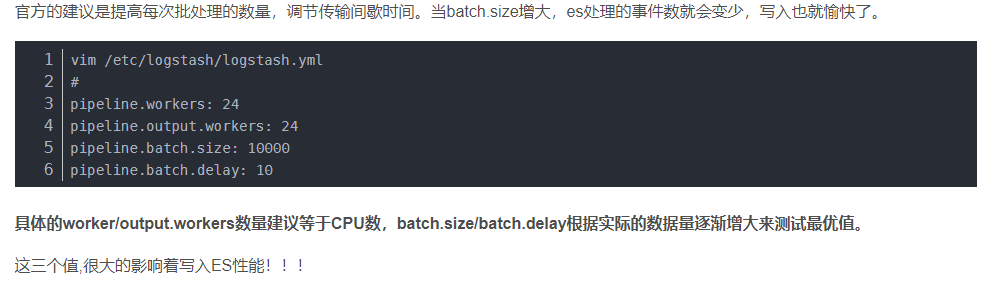

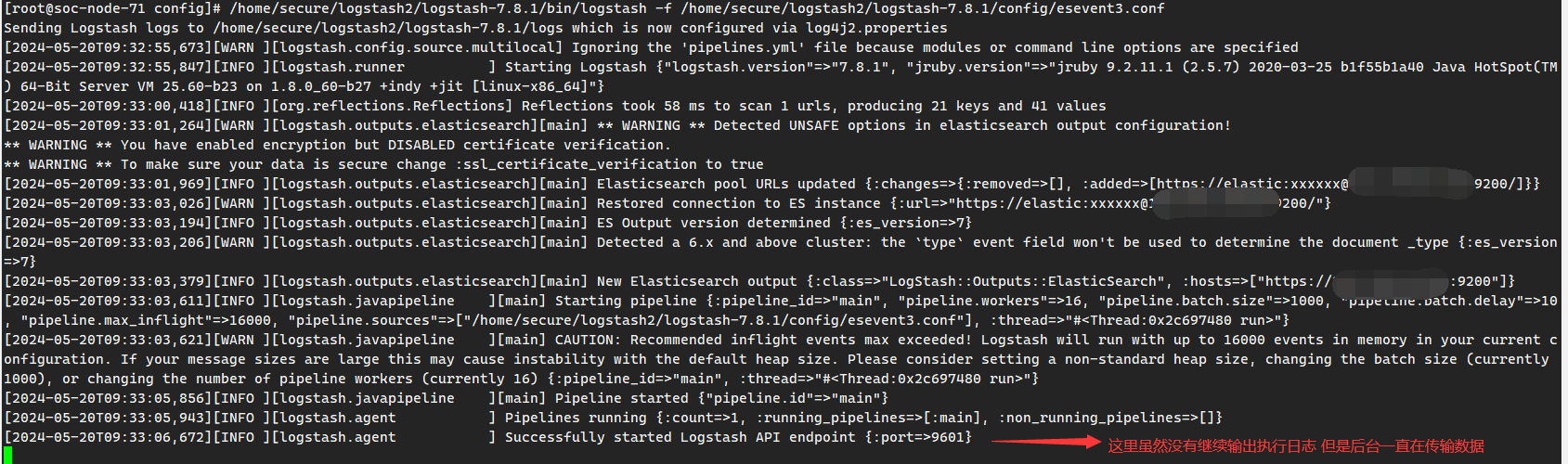

5. Configure the Logstash pipeline and settings

Pipeline configuration:

    input {
      elasticsearch {
        hosts => ["instance:9200"]
        user => "elastic"
        password => "Transfar@2023"
        index => "event_20240524"
        docinfo => true
        slices => 1
        size => 10000          # do not set this value too large
        ssl => true
        ca_file => "/home/secure/ca/ca.crt"
        scroll => "5m"
      }
    }
    filter {
      mutate {
        remove_field => ["@timestamp", "@version"]
      }
    }
    output {
      elasticsearch {
        hosts => ["https://121.333.203.46:9200"]
        user => "elastic"
        password => "Transfar@2024"
        index => "%{[@metadata][_index]}"
        ilm_enabled => false
        manage_template => false
        ssl => true
        ssl_certificate_verification => false
      }
    }

config/logstash.yml:

    # Settings file in YAML
    #
    # Settings can be specified either in hierarchical form, e.g.:
    #
    #   pipeline:
    #     batch:
    #       size: 125
    #       delay: 5
    #
    # Or as flat keys:
    #
    pipeline.batch.size: 5000
    pipeline.batch.delay: 10
    pipeline.workers: 20

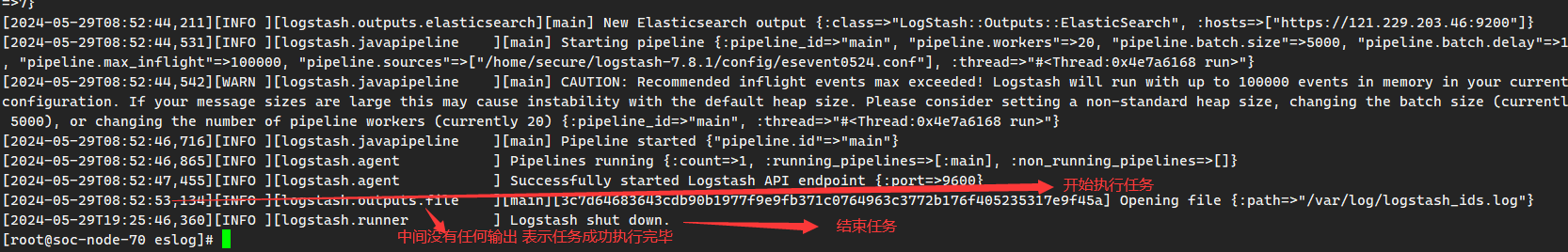

6. Start the Logstash sync

/home/secure/logstash-7.8.1/bin/logstash -f /home/secure/logstash-7.8.1/config/esevent0524.conf >/home/secure/eslog/esevent0524.log 2>&1

ES index template operations

1. Template operations (view, delete, create)

curl -k -u elastic:Transfar111 -XGET http://121.229.203.22:9200/_template/event

curl -k -u elastic:Transfar111 -XDELETE http://121.229.333.46:9200/_template/event

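Create the index template before syncing index data. Otherwise some field values are mapped to the wrong type, and the affected documents are lost during the sync.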

curl -k -u elastic:Transfar111 -XPUT http://121.229.22.46:9200/_template/event -H 'content-Type:application/json' -d @./event.json

2. Template import script

The script reads the target host from the IP environment variable and the elastic password from ES_PASSWD. It imports every template under ./template/, applies the replica and shard-limit settings, and finally deletes the default indices of the current period if they already exist:

    #!/bin/bash
    # Imports all index templates into the cluster at ${IP}, applies cluster-wide
    # settings, then deletes the default indices of the current period.
    ip=${IP}
    echo "$ip"
    ES="https://${ip}:9200"
    AUTH="elastic:${ES_PASSWD}"

    DATE=$(date +%Y%m)    # monthly suffix, e.g. 202405
    DATE1=$(date +%Y)
    DATE2=$(date +%m)
    # incident and merge_alarm indices are half-yearly: <year>01 (Jan-Jun) or <year>02 (Jul-Dec)
    if [ "${DATE2}" -le 6 ]; then
        DATE3=${DATE1}01
    else
        DATE3=${DATE1}02
    fi

    # Entries are "name" or "name:file" (the file name defaults to the template name)
    templates=(event alarm excep incident merge_alarm operation unmatch label:ES_label
               offline_merge_alarm offline_incident offline_alarm)
    for entry in "${templates[@]}"; do
        name=${entry%%:*}
        file=${entry#*:}
        echo "#### Importing ${name} template ####"
        curl -k -u "$AUTH" -XPUT "$ES/_template/${name}" -H 'Content-Type: application/json' -d @"./template/${file}.json"
        echo -e "\n"
        sleep 1
    done

    echo "#### Setting replicas ####"
    curl -k -u "$AUTH" -XPUT "$ES/_settings" -H 'Content-Type: application/json' -d @./template/replicas.json
    echo -e "\n"
    echo "#### Setting the shard limit to 10000 ####"
    curl -k -u "$AUTH" -XPUT "$ES/_cluster/settings" -H 'Content-Type: application/json' -d @./template/EePerNode.json
    echo -e "\n"

    # Delete an index only if _cat/indices finds it (otherwise the response contains "error")
    delete_default_index() {
        local index=$1 label=$2 result
        echo "#### Deleting default ${label} index ####"
        result=$(curl -k -s -u "$AUTH" -XGET "$ES/_cat/indices/${index}")
        if [[ ${result} =~ "error" ]]; then
            echo "Default ${label} index does not exist"
        else
            curl -k -u "$AUTH" -XDELETE "$ES/${index}"
            echo -e "\n"
            echo "Default ${label} index deleted"
        fi
        echo -e "\n"
        sleep 1
    }

    delete_default_index "event_${DATE}" event
    delete_default_index "alarm_${DATE}" alarm
    delete_default_index "incident_${DATE3}" incident
    delete_default_index "merge_alarm_${DATE3}" merge_alarm
    delete_default_index operation_incident operation

    echo -e "#### Template import finished ####\n"
    echo -e "\033[33m #### Installation finished #### \033[0m\n"

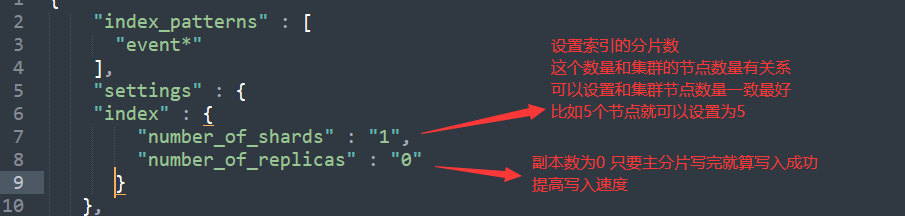

    {
      "index_patterns": ["event*"],
      "settings": {
        "index": {
          "number_of_shards": "1",
          "number_of_replicas": "0"
        }
      },
      "mappings": {
        "_meta": {},
        "_source": {},
        "dynamic_templates": [
          {
            "strings": {
              "mapping": { "type": "keyword" },
              "match_mapping_type": "string"
            }
          }
        ],
        "properties": {
          "asset_ids": { "type": "keyword" },
          "dev_address": { "type": "keyword" },
          "dev_port": { "type": "keyword" },
          "label_id": { "type": "keyword" },
          "protocol": { "type": "keyword" },
          "product": { "type": "keyword" },
          "vendor": { "type": "keyword" },
          "data_source": { "type": "keyword" },
          "dst_address": { "type": "keyword" },
          "dst_port": { "type": "keyword" },
          "event_id": { "type": "keyword" },
          "event_name": { "type": "keyword" },
          "equipment": { "type": "keyword" },
          "event_type": { "type": "keyword" },
          "event_type_name": { "type": "keyword" },
          "input_id": { "type": "long" },
          "log_id": { "type": "keyword" },
          "occur_time": { "type": "date" },
          "opt_time": { "type": "keyword" },
          "original_log": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "out_index_or_topic": { "type": "keyword" },
          "parse_filter_name": { "type": "keyword" },
          "parse_filter_ruleid": { "type": "keyword" },
          "receive_time": { "type": "date" },
          "src_address": { "type": "keyword" },
          "src_port": { "type": "keyword" },
          "syslog_facility": { "type": "long" },
          "syslog_level": { "type": "long" },
          "level": { "type": "keyword" },
          "dev_name": { "type": "keyword" },
          "threat_category": { "type": "keyword" },
          "classify": { "type": "keyword" },
          "log_source": { "type": "keyword" },
          "rule_tags": { "type": "keyword" },
          "dept_id": { "type": "long" },
          "packet_data": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "potential_impact": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "rsp_body": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "rsp_header": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "referer": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "user_agent": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "info_content": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "req_arg": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "req_body": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "req_header": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "req_info": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "bulletin": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "operation_command": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } },
          "threat_advice": { "type": "text", "fields": { "keyword": { "type": "keyword", "ignore_above": 256 } } }
        }
      },
      "aliases": {}
    }

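event.json defines the type of every field for any newly created index whose name starts with event (index_patterns: ["event*"]). Long free-text fields such as original_log, req_body and rsp_body are declared as text with a keyword sub-field (ignore_above: 256). As a result, when data is written to a new index, these fields are not mapped as keyword like the remaining string fields, which the dynamic template maps to keyword.

Because the template is applied automatically when an index is created, there is no need to set a mapping for each index individually.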
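Legacy templates are selected by matching the new index name against each template's index_patterns. The function below imitates that selection with bash glob matching. It is an approximation for illustration only, and `matching_templates` is a hypothetical name. It shows, for example, that `event*` covers every daily event index while `alarm*` does not cover `merge_alarm_*`.

```shell
#!/bin/bash
# Sketch: list which templates would apply to a new index name.
# Bash glob matching stands in for Elasticsearch's wildcard matching.
# Usage: matching_templates <index> <name=pattern>...
matching_templates() {
  local index=$1; shift
  local entry name pattern
  for entry in "$@"; do
    name=${entry%%=*}       # part before the first "="
    pattern=${entry#*=}     # part after it, e.g. event*
    # $pattern is unquoted on purpose so that * acts as a wildcard
    if [[ $index == $pattern ]]; then
      echo "$name"
    fi
  done
}
```

If several legacy templates match the same index, Elasticsearch merges them according to their `order` value, so overlapping patterns are worth checking before a migration.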

Full Logstash migration steps

Create the index template on the target cluster:

curl -k -u elastic:11111 -XPUT http://121.11.11.11:9200/_template/event -H 'content-Type:application/json' -d @./event.json

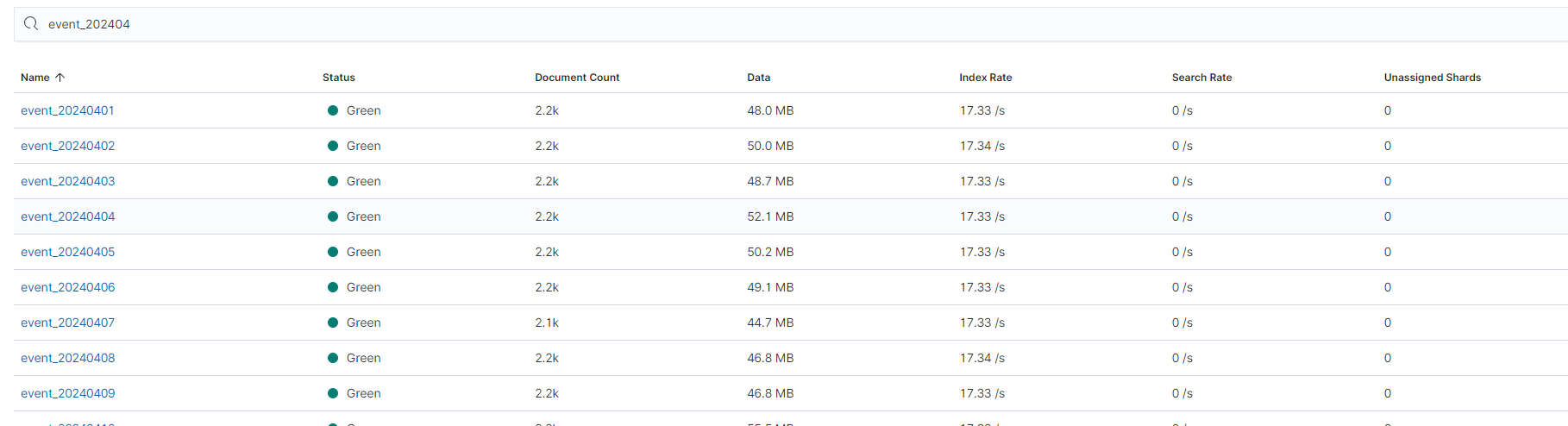

Raise max_result_window on every April 2024 source index:

    for i in $(curl -s --insecure -u elastic:Transfar111 -XGET 'https://10.30.98.71:9200/_cat/indices?v' | grep event_202404 | awk '{print $3}'); do
        curl --insecure -u elastic:Transfar111 -XPUT "https://10.30.98.71:9200/${i}/_settings" -H 'Content-Type: application/json' -d '{ "max_result_window": 100000 }'
    done
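Raising max_result_window is what allows a Logstash `size` above 10000. Elasticsearch rejects a scroll whose batch size exceeds the index's max_result_window, which is 10000 by default. The helpers below are a hypothetical pre-flight check for that rule (`needs_window_raise` and `window_settings_body` are illustrative names). They are not part of Logstash or Elasticsearch.

```shell
#!/bin/bash
# Hypothetical pre-flight check: a scroll batch larger than the index's
# index.max_result_window (10000 by default) is rejected by Elasticsearch.
DEFAULT_MAX_RESULT_WINDOW=10000

# Usage: needs_window_raise <logstash size> [current max_result_window]
# Returns 0 when the window must be raised before the pipeline can run.
needs_window_raise() {
  local size=$1 window=${2:-$DEFAULT_MAX_RESULT_WINDOW}
  [ "$size" -gt "$window" ]
}

# Usage: window_settings_body <new window>; prints the _settings request body.
window_settings_body() {
  printf '{"index.max_result_window": %d}\n' "$1"
}
```

For the `size => 20000` pipelines that follow, `needs_window_raise 20000` succeeds, so the window has to be raised first. The loop above does this for every April 2024 index.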

Pipeline for the April 2024 indices:

    input {
      elasticsearch {
        hosts => ["instance:9200"]
        user => "elastic"
        password => "Transfar@1111"
        index => "event_202404*"
        docinfo => true
        slices => 1
        size => 20000
        ssl => true
        ca_file => "/home/secure/ca/ca.crt"
      }
    }
    filter {
      mutate {
        remove_field => ["@timestamp", "@version"]
      }
    }
    output {
      elasticsearch {
        hosts => ["https://121.1119.133.46:9200"]
        user => "elastic"
        password => "Transfar@1111"
        index => "%{[@metadata][_index]}"
        ilm_enabled => false
        ssl => true
        ssl_certificate_verification => false
      }
    }

Pipeline for the May 2024 indices, excluding event_20240515:

    input {
      elasticsearch {
        hosts => ["instance:9200"]
        user => "elastic"
        password => "Transfar@撒地方"
        index => "event_202405*,-event_20240515"
        docinfo => true
        slices => 1
        size => 20000
        ssl => true
        ca_file => "/home/secure/ca/ca.crt"
      }
    }
    filter {
      mutate {
        remove_field => ["@timestamp", "@version"]
      }
    }
    output {
      elasticsearch {
        hosts => ["https://144.2333.203.46:9200"]
        user => "elastic"
        password => "Transfaaf"
        index => "%{[@metadata][_index]}"
        ilm_enabled => false
        ssl => true
        ssl_certificate_verification => false
      }
    }

Start the sync:

/home/secure/logstash-7.8.1/bin/logstash -f /home/secure/logstash-7.8.1/config/esevent4.conf >/home/secure/eslog/esevent4.log 2>&1

Then wait for the sync to finish.

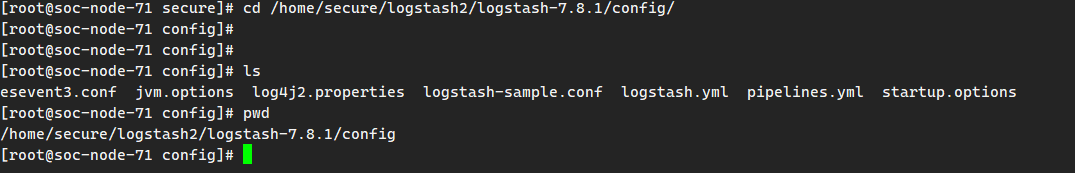

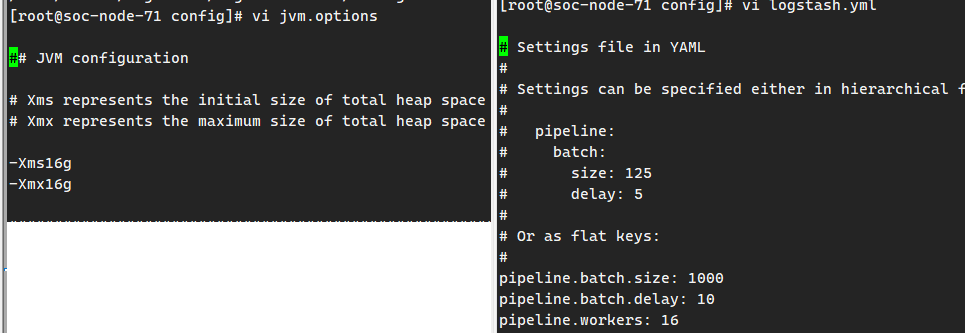
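Once Logstash exits, comparing document counts between the two clusters is a quick completeness check. This is a minimal sketch. `SRC_URL`, `DST_URL`, `SRC_USER` and `DST_USER` are placeholders, and the function names are hypothetical. It uses the _cat/count API. Refresh the target index first (for example with `POST /<index>/_refresh`) so that recently written documents are counted.

```shell
#!/bin/bash
# Sketch: compare document counts of one index (or pattern) on both clusters.
# URLs and credentials below are placeholders.
SRC_URL=${SRC_URL:-https://source-host:9200}
DST_URL=${DST_URL:-https://target-host:9200}
SRC_USER=${SRC_USER:-elastic:changeme}
DST_USER=${DST_USER:-elastic:changeme}

# Usage: doc_count <cluster url> <user:password> <index>; prints the document count.
doc_count() {
  curl -k -s -u "$2" "$1/_cat/count/$3?h=count" | tr -d '[:space:]'
}

# Usage: compare_counts <index> <source count> <target count>
compare_counts() {
  if [ "$2" = "$3" ]; then
    echo "OK $1 $2"
  else
    echo "MISMATCH $1 source=$2 target=$3"
    return 1
  fi
}

# Usage: verify_index <index or pattern>
verify_index() {
  compare_counts "$1" "$(doc_count "$SRC_URL" "$SRC_USER" "$1")" \
    "$(doc_count "$DST_URL" "$DST_USER" "$1")"
}
```

For example, `verify_index 'event_202404*'` compares the totals for all April 2024 indices at once.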

Logstash performance tuning

1. Set the Logstash heap size (-Xms and -Xmx in config/jvm.options)

2. Adjust the pipeline settings (pipeline.workers, pipeline.batch.size and pipeline.batch.delay in config/logstash.yml)

3. Pipeline settings recommended in the official documentation

4. Pipeline configuration for the cluster transfer:

    input {
      elasticsearch {
        hosts => ["instance:9200"]
        user => "elastic"
        password => "Transfar1111"
        index => "event_20240315"
        docinfo => true
        slices => 1
        size => 50000
        ssl => true
        ca_file => "/home/secure/ca/ca.crt"
        scroll => "5m"
      }
    }
    filter {
      mutate {
        remove_field => ["@timestamp", "@version"]
      }
    }
    output {
      elasticsearch {
        hosts => ["https://121.221:9200"]
        user => "elastic"
        password => "Transf"
        index => "%{[@metadata][_index]}"
        ilm_enabled => false
        manage_template => false
        ssl => true
        ssl_certificate_verification => false
      }
    }

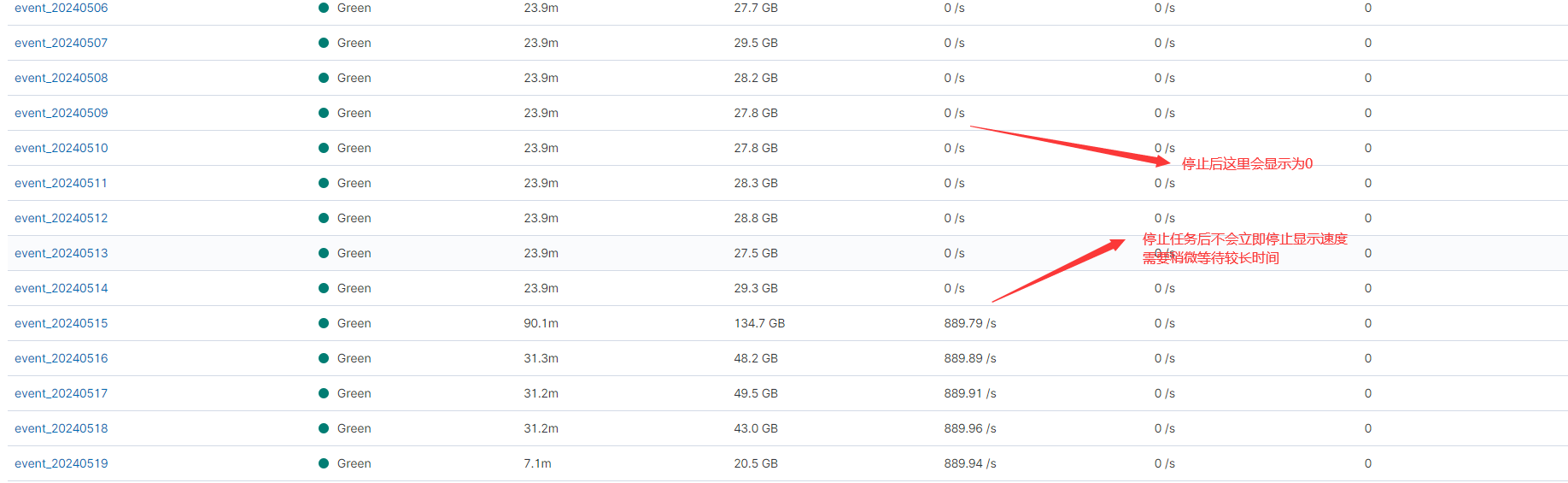

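5. Test results

The speed difference in the test is clear. However, the pipeline parameters have to be tuned iteratively for each cluster environment. No single fixed set of values fits every cluster.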

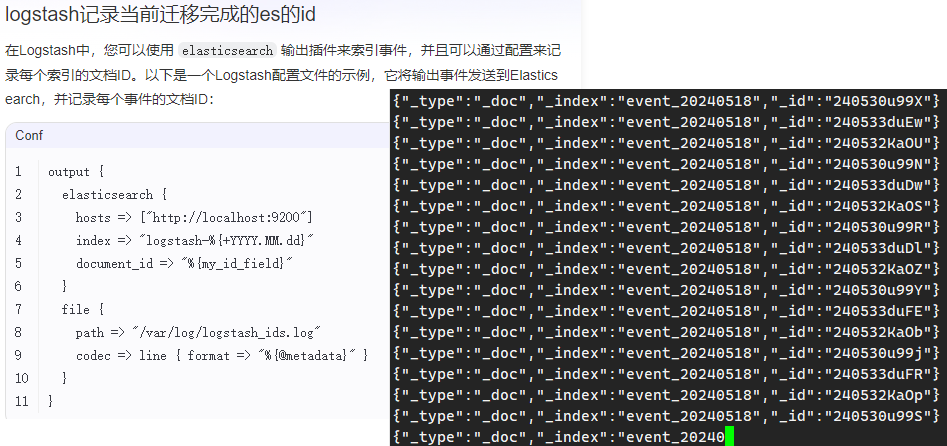

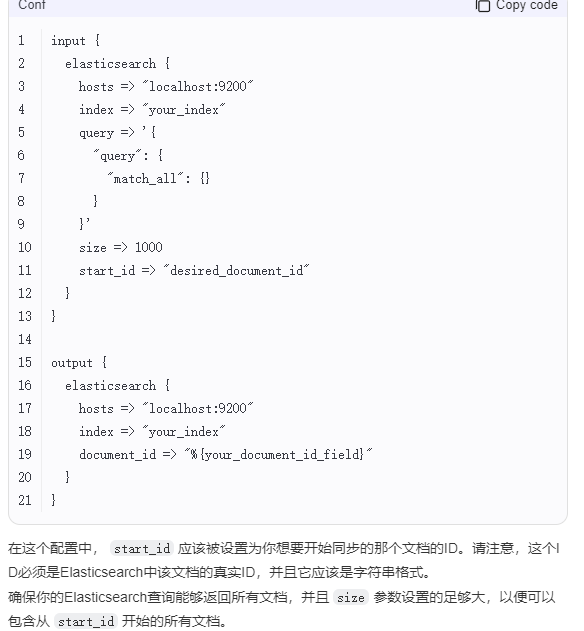
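When tuning pipeline.workers and pipeline.batch.size, it helps to estimate how many events the pipeline can hold in memory at once: roughly workers × batch size. With the logstash.yml values used earlier (20 workers, batch size 5000), that is 100000 events. Logstash itself logs a caution when this product exceeds 10000. The sketch below does the arithmetic. The average event size passed to `inflight_mib` is a hypothetical input you would measure yourself, and the result is only a rough indication of heap needs.

```shell
#!/bin/bash
# Rough sizing helpers for Logstash pipeline tuning.
# Each worker collects up to pipeline.batch.size events before running filters
# and outputs, so about workers * batch_size events can be in memory at once.

# Usage: inflight_events <pipeline.workers> <pipeline.batch.size>
inflight_events() {
  local workers=$1 batch_size=$2
  echo $(( workers * batch_size ))
}

# Usage: inflight_mib <workers> <batch_size> <avg_event_bytes>
# avg_event_bytes is a hypothetical, measured input; JVM object overhead
# means real heap usage will be higher than this raw figure.
inflight_mib() {
  local workers=$1 batch_size=$2 avg_event_bytes=$3
  echo $(( workers * batch_size * avg_event_bytes / 1024 / 1024 ))
}
```

With the values above, `inflight_events 20 5000` prints 100000. Assuming 2 KB per event, `inflight_mib 20 5000 2048` prints 195, meaning about 195 MiB of raw event data that the heap configured in jvm.options has to accommodate.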

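Resumable migration with Logstash

1. Record the IDs of the documents that have already been migrated.

2. When Logstash is started again, resume the migration from the point where it was interrupted.

Run a test sync and check the data: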

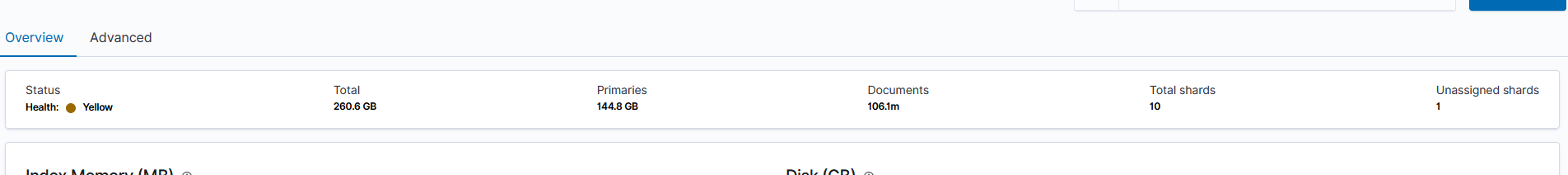

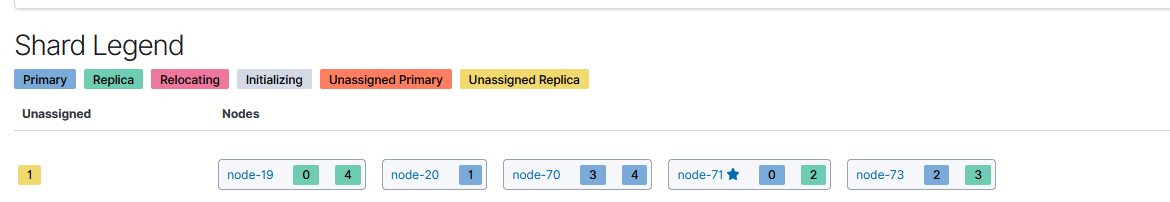

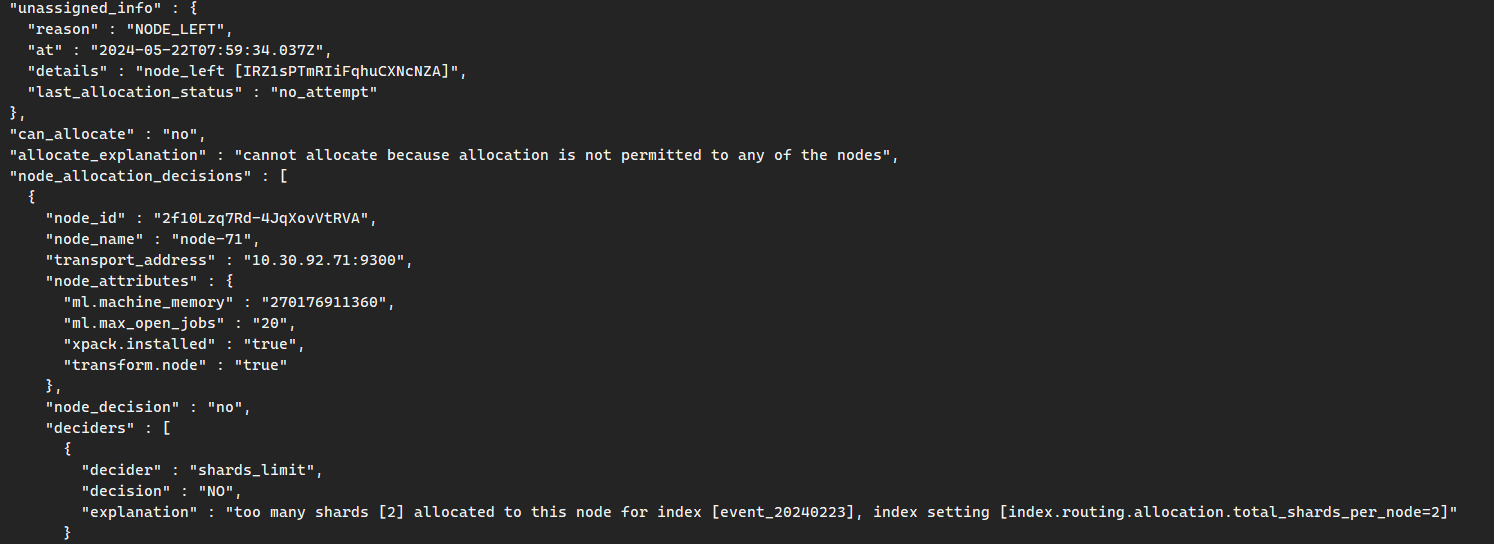

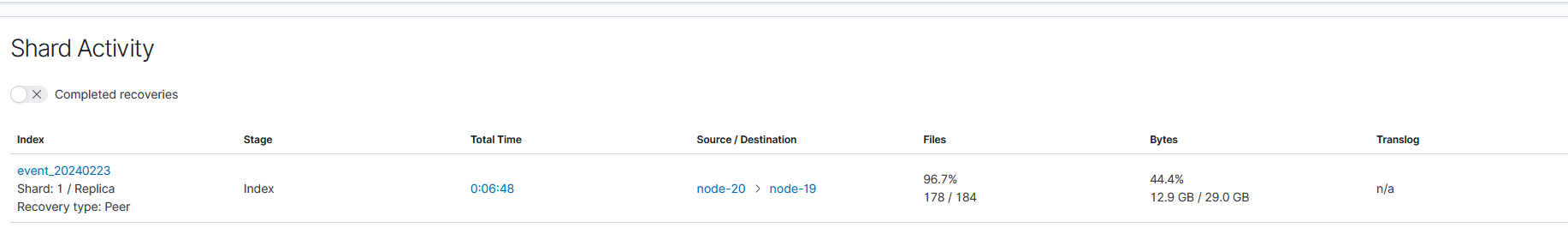
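The post resumes from recorded document IDs. As a simpler, swapped-in technique, the sketch below checkpoints at index granularity instead. Each index is migrated separately, and its name is appended to a checkpoint file once it finishes, so a restart skips completed indices. `migrate_one` is a hypothetical placeholder for the real per-index Logstash run, and `CHECKPOINT` is an assumed file path.

```shell
#!/bin/bash
# Hypothetical index-level checkpointing: migrate indices one by one and record
# each finished index; on restart, indices already in the file are skipped.
CHECKPOINT=${CHECKPOINT:-./migrated_indices.txt}

# Returns 0 if the index name is already recorded as migrated (exact line match).
is_done() {
  [ -f "$CHECKPOINT" ] && grep -qxF "$1" "$CHECKPOINT"
}

# Placeholder: replace with the real per-index Logstash run.
migrate_one() {
  echo "migrating $1"
}

# Usage: migrate_all <index>...
migrate_all() {
  local index
  for index in "$@"; do
    if is_done "$index"; then
      echo "skip $index"
      continue
    fi
    # Stop on failure so the failed index is retried on the next run
    migrate_one "$index" || return 1
    echo "$index" >> "$CHECKPOINT"
  done
}
```

An index interrupted halfway is migrated again from the start. To keep that rerun from creating duplicates, the Logstash output can reuse the source IDs with `document_id => "%{[@metadata][_id]}"`. This works because `docinfo => true` in the input stores `_id` under `@metadata`, so repeated documents overwrite instead of being added twice.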

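Fixing unassigned ES shards

Some shards were not assigned to any node, so the cluster stayed in yellow status.

Use the allocation explain API to find out why they cannot be allocated: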

curl --insecure -u elastic:11111 -XGET https://10.308.99:9200/_cluster/allocation/explain?pretty

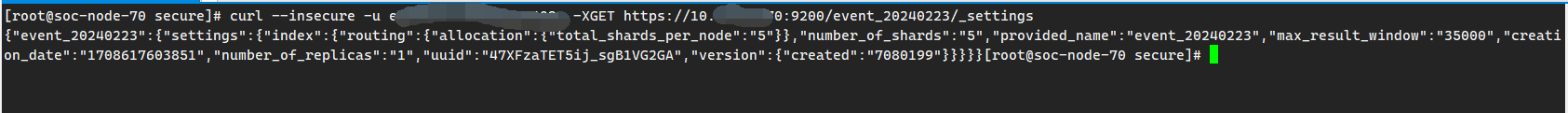
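Before asking for an explanation, it can help to list which shards are unassigned. The filter below reads the default `_cat/shards` output from stdin (columns index, shard, prirep, state, docs, store, ip, node). It prints the index, shard number and primary/replica flag of each UNASSIGNED shard. The function name is hypothetical.

```shell
#!/bin/bash
# Print "index shard prirep" for every UNASSIGNED row of `_cat/shards` output.
# Default columns: index shard prirep state docs store ip node
# (unassigned rows simply have fewer columns, but state is still column 4).
unassigned_shards() {
  awk '$4 == "UNASSIGNED" { print $1, $2, $3 }'
}
```

For example: `curl --insecure -s -u elastic:<password> "https://<host>:9200/_cat/shards" | unassigned_shards`. A single shard can then be explained by sending a body such as `{"index": "event_20240223", "shard": 0, "primary": false}` to the allocation explain API.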

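1. Check the index settings:

curl --insecure -u elastic:11111 -XGET https://10.30.988.780:9200/event_20240223/_settings

2. Change the index setting. index.routing.allocation.total_shards_per_node limits how many shards of this index a single node may hold. If the explain output points to this limit, raise it:

    curl --insecure -u elastic:Transf5555 -XPUT 'https://10.380.992.70:9200/event_20240223/_settings' -H "Content-Type: application/json" -d '{
      "index.routing.allocation.total_shards_per_node": 5
    }'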

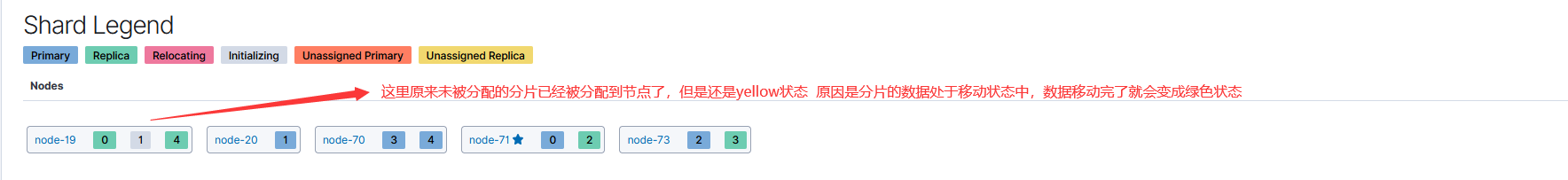

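3. Retry allocating the unassigned shards. retry_failed=true also retries shards whose allocation has already failed too many times: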

curl --insecure -u elastic:2222 -XPOST https://10.380.992.70:9200/_cluster/reroute?retry_failed=true

The shard repair is complete, and the cluster is back to green.

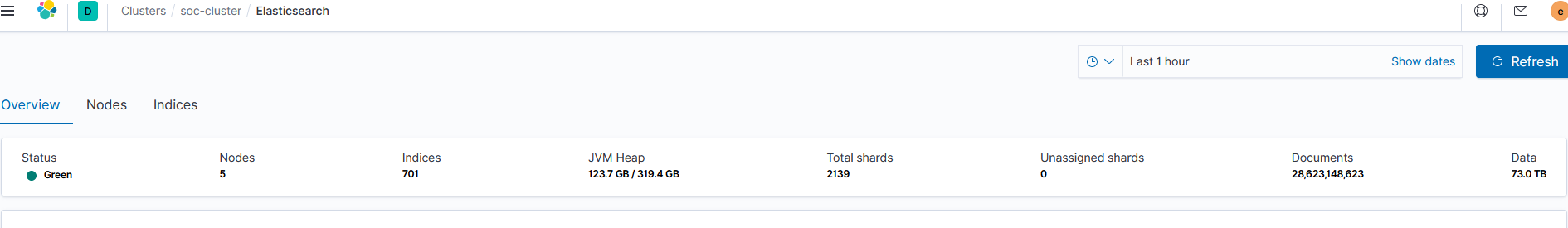

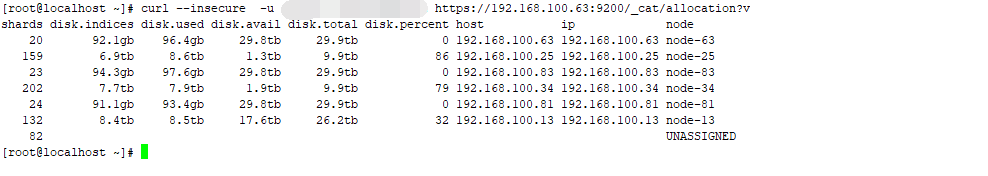

Viewing data distribution across all ES nodes

curl --insecure -u elastic:111111 https://192.168.100.63:9200/_cat/allocation?v

This shows the number of shards and the disk usage of each node.
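To spot nodes that are close to full, the allocation output can be filtered by disk usage. The sketch asks _cat/allocation for only the node and disk.percent columns (via the h parameter) and prints nodes at or above a threshold. The function name is hypothetical. The 85% example threshold matches Elasticsearch's default low disk watermark and is a choice made for illustration.

```shell
#!/bin/bash
# Read "node disk.percent" lines from stdin and print nodes at or above a threshold.
# Rows without a numeric percentage (e.g. the UNASSIGNED row) are ignored.
# Usage: nodes_over <percent>
nodes_over() {
  awk -v t="$1" '$2 ~ /^[0-9]+$/ && $2 + 0 >= t + 0 { print $1, $2 "%" }'
}
```

For example: `curl --insecure -s -u elastic:<password> "https://<host>:9200/_cat/allocation?h=node,disk.percent" | nodes_over 85`.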

Adding an alias to an ES index

    curl -u elastic:11111 -k -X POST "https://192.168.0.157:9200/_aliases" -H 'Content-Type: application/json' -d'
    {
      "actions": [
        {
          "add": {
            "index": "event_20240725",
            "alias": "event_20240725_11010002"
          }
        }
      ]
    }
    '

Check the index, then query it through the new alias:

curl -u elastic:11111 -k "https://192.168.0.157:9200/_cat/indices" | grep event_20240725

curl -u elastic:11111 -k -X GET "https://192.168.0.157:9200/event_20240725_11010002/_search"

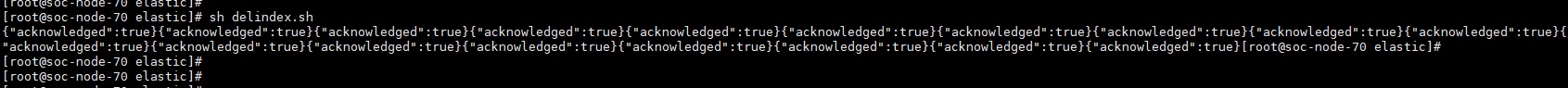
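The _aliases endpoint accepts several actions in one request and applies them atomically. This makes it possible to move an alias from an old index to a new one without a gap. The helper below is hypothetical and only builds the request body. With two arguments it produces the single add action used above, and with a third argument it also removes the alias from the old index.

```shell
#!/bin/bash
# Build an _aliases request body.
# Usage: alias_actions_body <alias> <new_index> [old_index]
# With old_index, the alias is removed from it and added to new_index in one request.
alias_actions_body() {
  local alias=$1 new_index=$2 old_index=${3:-}
  if [ -n "$old_index" ]; then
    printf '{"actions":[{"remove":{"index":"%s","alias":"%s"}},{"add":{"index":"%s","alias":"%s"}}]}\n' \
      "$old_index" "$alias" "$new_index" "$alias"
  else
    printf '{"actions":[{"add":{"index":"%s","alias":"%s"}}]}\n' "$new_index" "$alias"
  fi
}
```

The body can be passed straight to curl: `curl -u elastic:<password> -k -X POST "https://<host>:9200/_aliases" -H 'Content-Type: application/json' -d "$(alias_actions_body event_20240725_11010002 event_20240725)"`.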

Batch-deleting ES indices with a shell script to free disk space

    #!/bin/bash
    ES_URL="https://10.30.966.70:9200"
    # Indices to delete
    indexes=(
      "event_20240211" "event_20240212" "event_20240213" "event_20240214" "event_20240215" "event_20240216"
      "event_20240217" "event_20240218" "event_20240219" "event_20240220" "event_20240221" "event_20240222"
      "event_20240223" "event_20240224" "event_20240225" "event_20240226" "event_20240227" "event_20240228"
    )
    # Delete the indices one by one
    for index in "${indexes[@]}"; do
      curl -X DELETE -u elastic:33333 -k "$ES_URL/$index"
    done

The disk space has been freed.
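Instead of typing every index name, the list can be generated from a date range. This is a minimal sketch assuming GNU date (on macOS, install coreutils and use gdate). `daily_indices` and `delete_indices` are hypothetical helpers, and `ES_URL`/`ES_USER` are placeholders. `DRY_RUN=1` only prints what would be deleted, which is worth checking before any real deletion.

```shell
#!/bin/bash
# Sketch: generate daily index names for a date range and delete them.
# Requires GNU date. ES_URL and ES_USER are placeholders.
ES_URL=${ES_URL:-https://localhost:9200}
ES_USER=${ES_USER:-elastic:changeme}

# Usage: daily_indices <prefix> <YYYYMMDD start> <YYYYMMDD end>   (inclusive)
daily_indices() {
  local prefix=$1 start end t
  # Work in UTC seconds so daylight-saving changes cannot skip or repeat a day
  start=$(date -u -d "$2" +%s) || return 1
  end=$(date -u -d "$3" +%s) || return 1
  for (( t = start; t <= end; t += 86400 )); do
    echo "${prefix}_$(date -u -d "@$t" +%Y%m%d)"
  done
}

# Usage: delete_indices <index>...   (DRY_RUN=1 only prints the requests)
delete_indices() {
  local index
  for index in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "+ DELETE $ES_URL/$index"
    else
      curl -k -s -u "$ES_USER" -X DELETE "$ES_URL/$index"
      echo
    fi
  done
}
```

`delete_indices $(daily_indices event 20240211 20240228)` targets the same 18 indices as the script above.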

This article is from cnblogs (博客园). Author: 不懂123. When reposting, please credit the original link: https://www.cnblogs.com/yxh168/p/18198017