Consuming Kafka Data with Doris

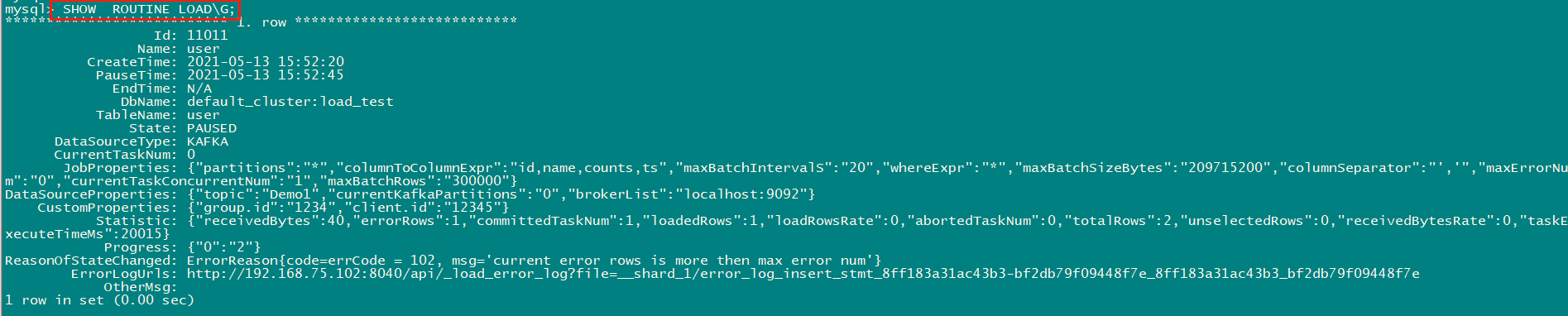

1. View load tasks

SHOW ROUTINE LOAD;

SHOW ROUTINE LOAD\G

2. Pause a load task

PAUSE ROUTINE LOAD FOR [job_name];

PAUSE ROUTINE LOAD FOR load_test_user_3;

3. Stop (remove) a load task. STOP is permanent: a stopped job cannot be resumed.

STOP ROUTINE LOAD FOR load_test_user_4;

4. Check the logs; be sure to look at be.INFO

I0430 14:24:36.568722 6899 data_consumer.h:88] kafka error: Local: Host resolution failure, event: uat-datacenter2:9092/100: Failed to resolve 'uat-datacenter2:9092': Name or service not known (after 514035102ms in state INIT)
I0430 14:24:36.568774 6899 data_consumer.h:88] kafka error: Local: Host resolution failure, event: uat-datacenter1:9092/98: Failed to resolve 'uat-datacenter1:9092': Name or service not known (after 514035102ms in state INIT)
I0430 14:24:36.579171 6899 data_consumer.h:88] kafka error: Local: Host resolution failure, event: uat-datacenter3:9092/99: Failed to resolve 'uat-datacenter3:9092': Name or service not known (after 514035112ms in state INIT)

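This means the BE node cannot resolve the Kafka broker hostnames (uat-datacenter1, uat-datacenter2, uat-datacenter3). A common fix is to add these hostnames to /etc/hosts on every BE node, or to make them resolvable through DNS.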
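As a rough aid for this kind of failure, the following sketch pulls the unresolvable hostnames out of be.INFO lines and checks whether the current machine can resolve them. The function names `failed_hosts` and `can_resolve` are made up for this illustration, and the regular expression assumes the "Failed to resolve 'host:port'" wording shown in the log above.

```python
import re
import socket

# Matches the host and port inside: Failed to resolve 'host:port'
RESOLVE_FAILURE = re.compile(r"Failed to resolve '([^':]+):(\d+)'")


def failed_hosts(log_text):
    """Return the distinct hostnames reported as unresolvable, in first-seen order."""
    seen = []
    for host, _port in RESOLVE_FAILURE.findall(log_text):
        if host not in seen:
            seen.append(host)
    return seen


def can_resolve(host, resolver=socket.getaddrinfo):
    """Return True if `host` resolves on this machine.

    `resolver` is injectable so the function can be exercised without DNS.
    """
    try:
        resolver(host, None)
        return True
    except socket.gaierror:
        return False
```

Run on a BE node, something like `[h for h in failed_hosts(open("be.INFO").read()) if not can_resolve(h)]` would list the hostnames that still need an /etc/hosts or DNS entry (the log path here is only an example).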

5. Error message

ReasonOfStateChanged: ErrorReason{code=errCode = 4, msg='Job failed to fetch all current partition with error errCode = 2, detailMessage = Failed to get all partitions of kafka topic: doris_test. error: errCode = 2, detailMessage = failed to get kafka partition info: [failed to get partition meta: Local: Broker transport failure]'}

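This means the partition metadata of the topic could not be fetched. "Broker transport failure" indicates that Doris could not establish a connection to the Kafka broker, so check the broker address, the port, and the network path between the Doris nodes and Kafka.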
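A quick way to narrow down a transport failure is to test plain TCP reachability of each broker from the Doris host. The sketch below is only a first check under that assumption: a successful TCP connection does not prove that the Kafka protocol handshake or the broker's advertised listeners are correct. `parse_broker_list` and `broker_reachable` are illustrative names, not part of Doris or Kafka.

```python
import socket


def parse_broker_list(broker_list):
    """Split a "host:port,host:port" string (as used in kafka_broker_list) into pairs."""
    pairs = []
    for item in broker_list.split(","):
        host, _, port = item.strip().rpartition(":")
        pairs.append((host, int(port)))
    return pairs


def broker_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False
```

For example, `[(h, p) for h, p in parse_broker_list("localhost:9092") if not broker_reachable(h, p)]` lists the brokers that cannot be reached from the machine running the check.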

6. View the help on creating a routine load

HELP ROUTINE LOAD;

7. View the help on creating a table

HELP CREATE TABLE;

8. Resume a paused routine load

RESUME ROUTINE LOAD FOR [job_name];
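The effect of PAUSE, RESUME, and STOP on a job can be pictured as a small state machine. The sketch below is a simplified model for illustration, not Doris's implementation: it uses the state names that SHOW ROUTINE LOAD reports (NEED_SCHEDULE, RUNNING, PAUSED, STOPPED, CANCELLED) and ignores the transitions the scheduler performs on its own, such as NEED_SCHEDULE to RUNNING or automatic pauses on error.

```python
# Terminal states: once reached, no command can revive the job.
TERMINAL = {"STOPPED", "CANCELLED"}

# (current state, command) -> next state, for user-issued commands only.
TRANSITIONS = {
    ("NEED_SCHEDULE", "PAUSE"): "PAUSED",
    ("RUNNING", "PAUSE"): "PAUSED",
    ("PAUSED", "RESUME"): "NEED_SCHEDULE",  # the scheduler later moves it to RUNNING
    ("NEED_SCHEDULE", "STOP"): "STOPPED",
    ("RUNNING", "STOP"): "STOPPED",
    ("PAUSED", "STOP"): "STOPPED",
}


def apply_command(state, command):
    """Return the state after `command`, or raise ValueError if it is not allowed."""
    if state in TERMINAL:
        raise ValueError(f"job is {state}; it cannot be changed, create a new job instead")
    try:
        return TRANSITIONS[(state, command)]
    except KeyError:
        raise ValueError(f"{command} is not valid while the job is {state}") from None
```

The practical point is the asymmetry: a PAUSED job can be resumed, whereas a STOPPED job has to be recreated with CREATE ROUTINE LOAD.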

############# Example (CSV format) ##################

1. Create a user table

CREATE TABLE user (
    id INTEGER,
    name VARCHAR(256) DEFAULT '',
    ts DATETIME,
    counts BIGINT SUM DEFAULT '0'
)
AGGREGATE KEY(id, name, ts)
DISTRIBUTED BY HASH(id) BUCKETS 3
PROPERTIES("replication_num" = "1");

2. Create a routine load job (note: if the topic does not exist, it may be created automatically; this depends on the Kafka broker allowing automatic topic creation through auto.create.topics.enable)

CREATE ROUTINE LOAD load_test.user ON user
COLUMNS TERMINATED BY ",",
COLUMNS(id, name, counts, ts)
PROPERTIES (
    "desired_concurrent_number" = "3",
    "max_batch_interval" = "20",
    "max_batch_rows" = "300000",
    "max_batch_size" = "209715200",
    "strict_mode" = "false"
)
FROM KAFKA (
    "kafka_broker_list" = "localhost:9092",
    "kafka_topic" = "Demo2",
    "property.group.id" = "1234",
    "property.client.id" = "12345",
    "kafka_partitions" = "0",
    "kafka_offsets" = "1"
);

3. Check the routine load status

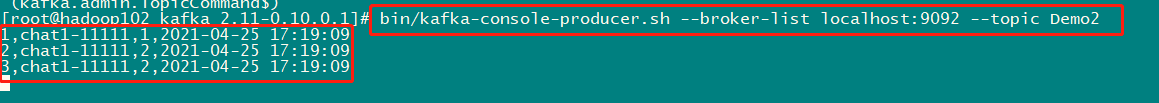

4. Send messages to the Kafka topic Demo2

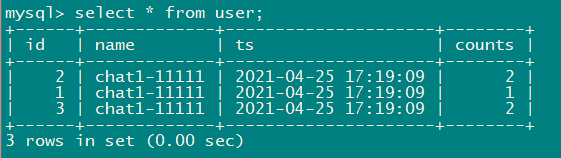
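Each Kafka message for this job has to be one comma-separated line whose fields follow the order in COLUMNS(id, name, counts, ts), which differs from the column order of the table. The helper below is a small sketch of building such a line; `to_csv_line` is a hypothetical name, and the sample values are made up. It rejects values that contain the separator, on the assumption that the job does no quote handling.

```python
# Field order expected by the routine load job: COLUMNS(id, name, counts, ts)
COLUMN_ORDER = ("id", "name", "counts", "ts")


def to_csv_line(record, sep=","):
    """Format a dict as one CSV message in the order the job expects."""
    fields = [str(record[column]) for column in COLUMN_ORDER]
    for field in fields:
        if sep in field or "\n" in field:
            raise ValueError(f"field {field!r} contains the separator or a newline")
    return sep.join(fields)


line = to_csv_line({"id": 1, "name": "chat1", "ts": "2021-04-25 17:19:09", "counts": 2})
print(line)  # 1,chat1,2,2021-04-25 17:19:09
```

The resulting string is what would be typed into a console producer or passed to a Kafka client as the message value.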

5. Query the table

SELECT * FROM user;
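Because the table is declared with AGGREGATE KEY(id, name, ts) and counts uses SUM, rows that share the same key are merged and their counts are added together, so the query may return fewer rows than the number of messages sent. The following sketch imitates that behaviour in plain Python to show what to expect; it is an illustration of the semantics, not how Doris computes it, and the sample rows are invented.

```python
from collections import defaultdict


def aggregate(rows):
    """Merge (id, name, ts, counts) rows by key and sum counts, like a SUM column."""
    totals = defaultdict(int)
    for id_, name, ts, counts in rows:
        totals[(id_, name, ts)] += counts
    return dict(totals)


rows = [
    (1, "chat1", "2021-04-25 17:19:09", 2),
    (1, "chat1", "2021-04-25 17:19:09", 3),  # same key as the first row
    (2, "chat2", "2021-04-25 17:19:09", 1),
]
result = aggregate(rows)
print(result[(1, "chat1", "2021-04-25 17:19:09")])  # 5
```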

############# Example (JSON format: flat) ##################

1. Create the table

CREATE TABLE user_json (
    id INTEGER,
    name VARCHAR(256) DEFAULT '',
    ts DATETIME,
    counts BIGINT SUM DEFAULT '0'
)
AGGREGATE KEY(id, name, ts)
DISTRIBUTED BY HASH(id) BUCKETS 3
PROPERTIES("replication_num" = "1");

2. Create the routine load job

CREATE ROUTINE LOAD test.test_json_label_1 ON user_json
COLUMNS(id, name, counts, ts)
PROPERTIES (
    "desired_concurrent_number" = "3",
    "max_batch_interval" = "20",
    "max_batch_rows" = "300000",
    "max_batch_size" = "209715200",
    "strict_mode" = "false",
    "format" = "json"
)
FROM KAFKA (
    "kafka_broker_list" = "localhost:9092",
    "kafka_topic" = "Demo2",
    "property.group.id" = "1234",
    "property.client.id" = "12345",
    "kafka_partitions" = "0",
    "kafka_offsets" = "0"
);

3. Send Kafka data (JSON format, flat)

{"id":8,"name":"chat1-11111","ts":"2021-04-25 17:19:09","counts":2}
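With "format" = "json" and no jsonpaths, the top-level keys of each message are matched against the names in COLUMNS, so a misspelled key is an easy mistake to make. The sketch below checks a message against the expected column names before it is sent; `missing_columns` is a hypothetical helper written for this illustration.

```python
import json

# Names listed in COLUMNS(id, name, counts, ts) of the routine load job
EXPECTED_COLUMNS = ("id", "name", "counts", "ts")


def missing_columns(message, columns=EXPECTED_COLUMNS):
    """Return the expected column names that are absent from a flat JSON message."""
    doc = json.loads(message)
    if not isinstance(doc, dict):
        raise ValueError("expected a JSON object at the top level")
    return [column for column in columns if column not in doc]


sample = '{"id":8,"name":"chat1-11111","ts":"2021-04-25 17:19:09","counts":2}'
print(missing_columns(sample))  # []
```

An empty list means every column has a matching key; the order of keys inside the JSON object does not matter for this check.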

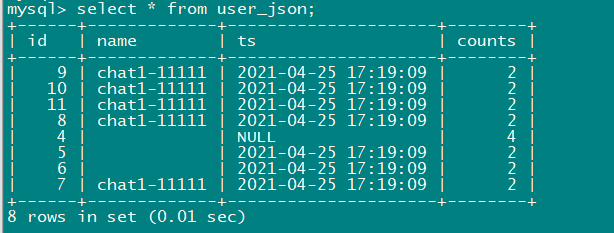

4. Check the data and the routine load job

############# Example (JSON format: nested) ##################

1. Create the table (same definition as in the previous example; skip this step if user_json already exists)

CREATE TABLE user_json (
    id INTEGER,
    name VARCHAR(256) DEFAULT '',
    ts DATETIME,
    counts BIGINT SUM DEFAULT '0'
)
AGGREGATE KEY(id, name, ts)
DISTRIBUTED BY HASH(id) BUCKETS 3
PROPERTIES("replication_num" = "1");

2. Create the routine load job; jsonpaths lists, in the same order as COLUMNS, where each value sits inside the nested message

CREATE ROUTINE LOAD test.test_json_label_4 ON user_json
COLUMNS(id, name, counts, ts)
PROPERTIES (
    "desired_concurrent_number" = "3",
    "max_batch_interval" = "20",
    "max_batch_rows" = "300000",
    "max_batch_size" = "209715200",
    "strict_mode" = "false",
    "format" = "json",
    "jsonpaths" = "[\"$.test.id\",\"$.test.name\",\"$.test.counts\",\"$.test.ts\"]"
)
FROM KAFKA (
    "kafka_broker_list" = "localhost:9092",
    "kafka_topic" = "Demo2",
    "kafka_partitions" = "0",
    "kafka_offsets" = "0"
);

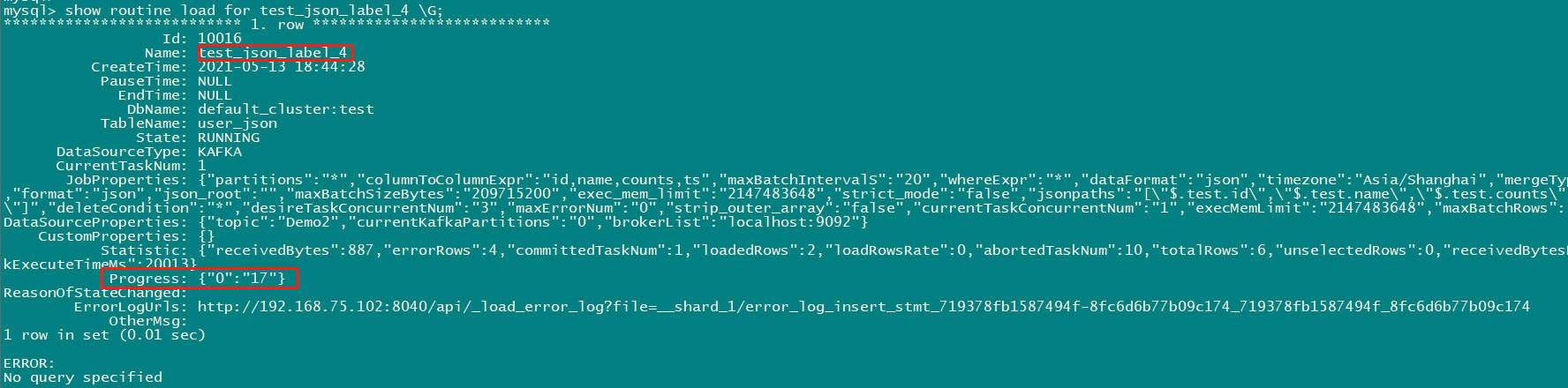

3. Check the routine load job

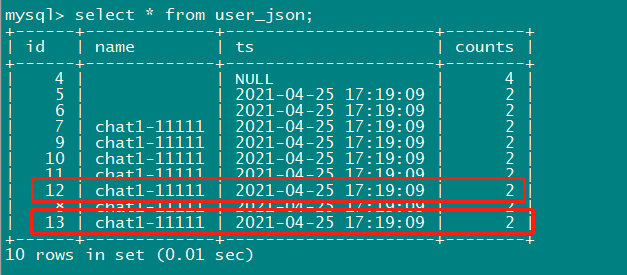

4. Send data to Kafka and check the loaded data

{"test":{"id":12,"name":"chat1-11111","ts":"2021-04-25 17:19:09","counts":2}}
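To see how the jsonpaths setting maps this nested message onto the columns, the sketch below walks simple dotted paths of the form `$.a.b` through the parsed JSON. It is a deliberately minimal emulation for illustration: it handles only object keys, not array indexes or other JSONPath features, and it is not the extraction code Doris uses.

```python
import json

# The jsonpaths value from the routine load job, in COLUMNS(id, name, counts, ts) order
JSONPATHS = json.loads('["$.test.id","$.test.name","$.test.counts","$.test.ts"]')


def extract(message, jsonpaths=JSONPATHS):
    """Return one value per path; a path that does not exist yields None."""
    doc = json.loads(message)
    values = []
    for path in jsonpaths:
        if not path.startswith("$."):
            raise ValueError(f"unsupported path: {path}")
        node = doc
        for key in path[2:].split("."):
            node = node.get(key) if isinstance(node, dict) else None
        values.append(node)
    return values


message = '{"test":{"id":12,"name":"chat1-11111","ts":"2021-04-25 17:19:09","counts":2}}'
print(extract(message))  # [12, 'chat1-11111', 2, '2021-04-25 17:19:09']
```

The values come out in path order, which is why the jsonpaths list and the COLUMNS list have to agree position by position.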

This article is from cnblogs (博客园), by 小白啊小白,Fighting. When reposting, please cite the original link: https://www.cnblogs.com/ywjfx/p/14722302.html
