CDH5.12.1利用Sqoop将mysql数据导入hive

环境: CDH5.12.1 、 centos7

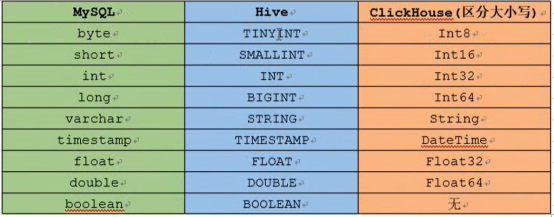

数据库类型

1、权限问题

dfs.permissions 设置为false(可以在配置界面勾选掉)

2、关闭安全模式,允许读写

hdfs dfsadmin -safemode leave

3、创建hive表

drop table if exists default.opportunity; create table default.opportunity(id BIGINT,consultant_account STRING,first_consultant_account STRING,group_id BIGINT, first_group_id BIGINT,sale_department_id BIGINT,first_sale_department_id BIGINT,legion_id BIGINT, first_legion_id BIGINT, business_id BIGINT,student_id BIGINT,province_id BIGINT,city_id BIGINT,create_user STRING, online_group_id BIGINT, online_center_id BIGINT, create_time TIMESTAMP,allocate_time TIMESTAMP,apply_time TIMESTAMP, auto_apply_time TIMESTAMP )ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

4、sqoop全量导入数据

sqoop import \ --connect jdbc:mysql://192.168.75.101:3306/dragnet \ --username root \ --password yang156122 \ --query 'select id,consultant_account ,first_consultant_account,group_id, first_group_id,sale_department_id,first_sale_department_id,legion_id,first_legion_id,business_id,student_id,province_id,city_id,create_user,online_group_id,online_center_id,create_time,allocate_time,apply_time,auto_apply_time from opportunity where $CONDITIONS' \ --target-dir /user/sqoop2/opportunity \ --delete-target-dir \ --num-mappers 1 \ --compress \ --compression-codec org.apache.hadoop.io.compress.SnappyCodec \ --direct \ --fields-terminated-by '\t'

#建表语句

//##鍒涘缓hive鍔ㄦ€佸垎鍖鸿〃 use advertise_ods; set hive.exec.dynamic.partition=true; set hive.exec.dynamic.partition.mode=nonstrict; create external table ods_ad_user_visit_history ( `id` bigint , `code` string , `cookie` string , `key_word` string , `ip` string , `province_id` bigint , `province` string , `city_id` bigint , `city` string , `terminal` bigint , `system` bigint , `site_id` bigint, `plan_id` bigint , `main_url` string , `full_url` string , `page_name` string , `channel_id` bigint , `channel_name` string , `project_id` bigint , `project_name` string , `ocpc_config` string , `creative_name` string , `tenant_id` bigint , `create_ts` string, `update_ts` string ) partitioned by (dt STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' stored as textfile location "/ods/advertise/db/ad_user_visit_history"; create external table ad_campaign ( `id` bigint, `campaign_number` string, `campaign_name` string, `channel_id` bigint, `channel_name` string, `account_id` int, `account_name` string, `region` string, `pc_site_id` bigint, `pc_site_number` string, `mobile_site_id` bigint, `mobile_site_number` string, `pc_page_id` bigint, `pc_page_number` string , `mobile_page_id` bigint , `mobile_page_number` string, `pc_url` string, `mobile_url` string, `obverse_sign` int, `reverse_sign` int, `promoted_campaign_id` string, `mini_program_id` bigint, `mini_program_name` string, `start_sign` int, `state` int, `create_user_id` bigint, `create_user_account` string, `create_time` string, `update_user_id` bigint, `update_user_account` string, `update_time` string, `tenant_id` bigint ) partitioned by (dt STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' stored as textfile location "/ods/advertise/db/ad_campaign"; create external table ods_ad_info_card ( `id` bigint, `card_type` tinyint, `data_source` tinyint , `consultant_type` tinyint, `owner_user_id` bigint, `request_url` string, `request_ip` string, `site_id` bigint, `account_id` int, `account_name` string, `customer_sign` string, `customer_name` string, `city_id` int, `city_name` string, `province_id` int, `province_name` string, `phone` string, `phone1` string, `qq` string, `wechat` string, `project_id` bigint , `project_name` string, `remarks` string, `date` date , `submit_time` string , `update_time` string , `tenant_id` bigint ) partitioned by (dt STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' stored as textfile location "/ods/advertise/db/ad_info_card"; create external table ad_account_bind ( `id` int, `account_id` int , `platform_user_id` bigint , `platform_user_account` string, `platform_user_org_id1` bigint, `platform_user_org_id2` bigint, `state` tinyint , `create_user_id` bigint , `create_user_account` string, `create_time` string , `update_user_id` bigint , `update_user_account` string, `update_time` string , `tenant_id` bigint ) partitioned by (dt STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' stored as textfile location "/ods/advertise/db/ad_account_bind"; /******************privilege_center***************************/ use privilege_center_ods; create external table ods_u_organization ( `id` bigint, `tenant_id` bigint , `org_name` string, `org_level` int , `parent_id` bigint, `org_full_id` string, `org_full_name` string, `defaulted` tinyint , `order_num` int , `delete_flag` tinyint, `creator` string, `create_time` string, `updator` string, `update_time` string ) partitioned by (dt STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' stored as textfile location "/ods/privilege_center/db/u_organization"; create external table ods_u_user ( `id` bigint, `tenant_id` bigint , `tenant_name` string, `user_name` string, `nick_name` string, `user_image` string, `user_account` string, `user_pass` string, `salt_value` string, `phone_number` string, `job_number` string, `delete_flag` tinyint, `root_flag` tinyint , `user_status` tinyint , `org_id` bigint, `org_position` tinyint , `user_mail` string , `creator` string, `create_time` string , `updator` string , `update_time` string ) partitioned by (dt STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' stored as textfile location "/ods/privilege_center/db/u_user";

#mysq导入至hdfs

#! /bin/bash if [ -n "$2" ] ;then do_date=$2 else do_date=`date -d '-1 day' +%F` fi import_data(){ sqoop import \ --connect jdbc:mysql://localhost:3306/privilege_center?useSSL=false \ --username root \ --password 'root' \ --target-dir /ods/privilege_center/db/$1/$do_date \ --delete-target-dir \ --query "$2 and \$CONDITIONS" \ --num-mappers 1 \ --fields-terminated-by '\t' \ --compress \ --compression-codec org.apache.hadoop.io.compress.SnappyCodec \ --null-string '\\N' \ --null-non-string '\\N' } import_u_user(){ import_data u_user "select id, tenant_id, tenant_name, user_name, nick_name, user_image, user_account, user_pass, salt_value, phone_number, job_number, delete_flag, root_flag, user_status, org_id, org_position, user_mail, creator, DATE_FORMAT(create_time, '%Y-%m-%d %k:%i:%s') as create_time, updator, DATE_FORMAT(update_time, '%Y-%m-%d %k:%i:%s') as update_time from u_user where 1=1 " } import_u_organization(){ import_data u_organization "select id, tenant_id, org_name, org_level, parent_id, org_full_id, org_full_name, defaulted, order_num, delete_flag, creator, DATE_FORMAT(create_time, '%Y-%m-%d %k:%i:%s') as create_time, updator, DATE_FORMAT(update_time, '%Y-%m-%d %k:%i:%s') as update_time from u_organization where 1=1 " } case $1 in "all") import_u_user import_u_organization ;; esac

#hdfs到hive

#!/bin/bash APP=privilege_center hive=/usr/bin/hive # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$2" ] ;then do_date=$2 else do_date=`date -d "-1 day" +%F` fi sql1=" load data inpath '/ods/privilege_center/db/u_organization/$do_date' OVERWRITE into table ${APP}.ods_u_organization partition(dt='$do_date'); load data inpath '/ods/privilege_center/db/u_user/$do_date' OVERWRITE into table ${APP}.ods_u_user partition(dt='$do_date'); " #(目前采用全量导入) case $1 in "all"){ $hive -e "$sql1" };; esac

###############实际案例##############

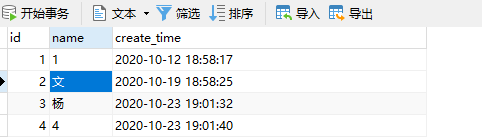

说明: 库表结构

1、创建库表

drop table if exists default.data create table default.data(id BIGINT,name STRING, create_time TIMESTAMP)ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

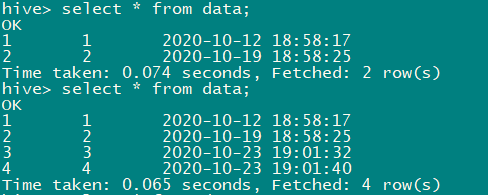

2、全量导入

sqoop import \ --connect jdbc:mysql://192.168.75.101:3306/dragnet \ --username root \ --password yang156122 \ --table data \ --hive-import \ --fields-terminated-by ',' --m 1

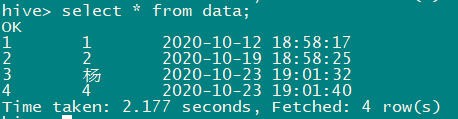

3、增量导入

sqoop import --connect jdbc:mysql://192.168.75.101:3306/dragnet \ --username root \ --password yang156122 \ --table data \ --target-dir '/user/hive/warehouse/data' \ --check-column create_time \ --incremental lastmodified \ --last-value '2020-10-23 00:00:00' \ --m 1 \ --merge-key id

4、编写定时任务,并重启

/bin/systemctl restart crond.service

本文来自博客园,作者:小白啊小白,Fighting,转载请注明原文链接:https://www.cnblogs.com/ywjfx/p/13865520.html