机器学习之逻辑回归

知识点:

""" 逻辑回归:只能解决二分类问题 损失函数: 1、均方误差(不存在多个局部最低点):只有一个最小值 2、对数似然损失:存在多个局部最小值 ; 改善方法:1、多次随机初始化,多次比较最小值结果; 2、调整学习率 逻辑回归缺点:不好处理多分类问题 生成模型:有先验概率 (逻辑回归,隐马尔科夫模型) 判别模型:没有先验概率 (KNN,决策树,随机森林,神经网络) """

代码:

def logistic(): """ 逻辑回归做二分类进行癌症预测(根据细胞的属性特征) :return: NOne """ # 构造列标签名字 column = ['Sample code number','Clump Thickness', 'Uniformity of Cell Size','Uniformity of Cell Shape','Marginal Adhesion', 'Single Epithelial Cell Size','Bare Nuclei','Bland Chromatin','Normal Nucleoli','Mitoses','Class'] # 读取数据 data = pd.read_csv("https://archive.ics.uci.edu/ml/machine-learning-databases/breast-cancer-wisconsin/breast-cancer-wisconsin.data", names=column) print(data) # 缺失值进行处理 data = data.replace(to_replace='?', value=np.nan) data = data.dropna() # 进行数据的分割 x_train, x_test, y_train, y_test = train_test_split(data[column[1:10]], data[column[10]], test_size=0.25) # 进行标准化处理 std = StandardScaler() x_train = std.fit_transform(x_train) x_test = std.transform(x_test) # 逻辑回归预测 lg = LogisticRegression(C=1.0) lg.fit(x_train, y_train) print(lg.coef_) y_predict = lg.predict(x_test) print("准确率:", lg.score(x_test, y_test)) print("召回率:", classification_report(y_test, y_predict, labels=[2, 4], target_names=["良性", "恶性"])) return None

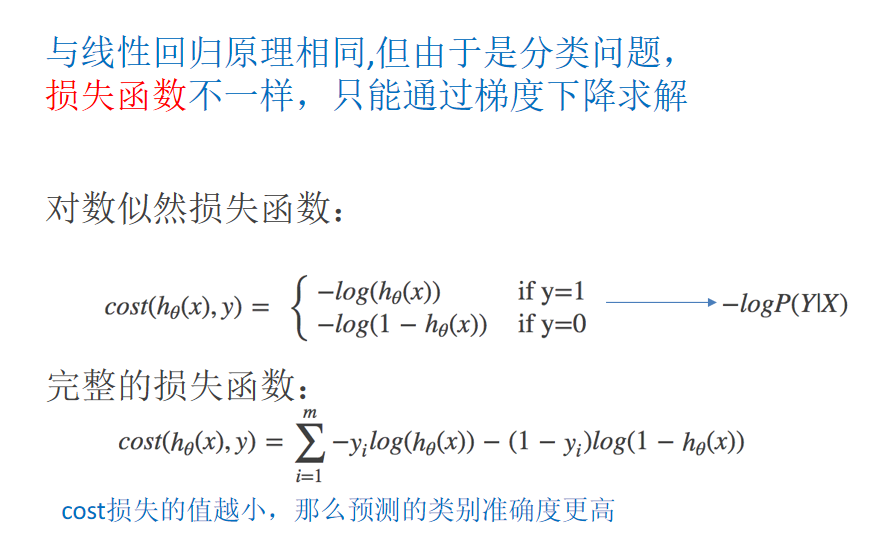

损失函数:

本文来自博客园,作者:小白啊小白,Fighting,转载请注明原文链接:https://www.cnblogs.com/ywjfx/p/10898684.html