109 Practical Shell Scripts

1. DoS attack mitigation (automatically block attacking IPs)

#!/bin/bash

DATE=$(date +%d/%b/%Y:%H:%M)

LOG_FILE=/usr/local/nginx/logs/demo2.access.log

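ABNORMAL_IP=$(tail -n5000 "$LOG_FILE" | grep "$DATE" | awk '{a[$1]++}END{for(i in a)if(a[i]>10)print i}')

for IP in $ABNORMAL_IP; do

# -w and -F match the address as a whole fixed string, so 1.2.3.4 does not match 11.2.3.45
if [ "$(iptables -vnL | grep -cwF -- "$IP")" -eq 0 ]; then

iptables -I INPUT -s "$IP" -j DROP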

echo "$(date +'%F_%T') $IP" >> /tmp/drop_ip.log

fi

done
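The counting step at the heart of this script can be tried on its own without touching iptables. Below is a minimal sketch; the helper name `abnormal_ips` and the sample log lines are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: print every client IP that appears more than
# $1 times in the access-log lines read from stdin.
abnormal_ips() {
    local threshold=$1
    awk -v limit="$threshold" \
        '{hits[$1]++} END {for (ip in hits) if (hits[ip] > limit) print ip}' | sort
}

# Made-up sample: 10.0.0.1 appears three times, 10.0.0.2 once.
sample_log='10.0.0.1 - - [01/Jan/2024:10:00:01 +0800] "GET / HTTP/1.1" 200 612
10.0.0.1 - - [01/Jan/2024:10:00:02 +0800] "GET / HTTP/1.1" 200 612
10.0.0.2 - - [01/Jan/2024:10:00:02 +0800] "GET /a HTTP/1.1" 200 100
10.0.0.1 - - [01/Jan/2024:10:00:03 +0800] "GET / HTTP/1.1" 200 612'

printf '%s\n' "$sample_log" | abnormal_ips 2
```

With a threshold of 2 only 10.0.0.1 is printed; raising the threshold to 3 prints nothing.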

2. Linux alert e-mail setup

# yum install mailx

# vi /etc/mail.rc

set from=baojingtongzhi@163.com smtp=smtp.163.com

set smtp-auth-user=baojingtongzhi@163.com smtp-auth-password=123456

set smtp-auth=login

3. MySQL backup, single loop (one dump per database)

#!/bin/bash

DATE=$(date +%F_%H-%M-%S)

HOST=localhost

USER=backup

PASS=123.com

BACKUP_DIR=/data/db_backup

DB_LIST=$(mysql -h"$HOST" -u"$USER" -p"$PASS" -s -e "show databases;" 2>/dev/null | grep -Evx "Database|information_schema|mysql|performance_schema|sys")

for DB in $DB_LIST; do

BACKUP_NAME=$BACKUP_DIR/${DB}_${DATE}.sql

if ! mysqldump -h"$HOST" -u"$USER" -p"$PASS" -B "$DB" > "$BACKUP_NAME" 2>/dev/null;then

echo "$BACKUP_NAME backup failed!"

fi

done
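When filtering the `show databases` output, matching whole lines rather than substrings keeps user databases whose names merely contain `mysql` or `sys` in the backup list. A minimal sketch, with a hypothetical helper name and made-up database names:

```shell
#!/bin/bash
# Hypothetical helper: drop the header line and the system schemas,
# matching whole lines only (-x) so that names such as mysql_app survive.
filter_user_dbs() {
    grep -Evx 'Database|information_schema|mysql|performance_schema|sys'
}

# Made-up listing standing in for the output of "show databases;".
printf '%s\n' Database information_schema mysql mysql_app \
    performance_schema sys sysadmin shop | filter_user_dbs
```

The three user databases (mysql_app, sysadmin, shop) pass through, while the header and the four system schemas are removed.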

4. MySQL backup, nested loops (one dump per table)

#!/bin/bash

DATE=$(date +%F_%H-%M-%S)

HOST=localhost

USER=backup

PASS=123.com

BACKUP_DIR=/data/db_backup

DB_LIST=$(mysql -h"$HOST" -u"$USER" -p"$PASS" -s -e "show databases;" 2>/dev/null | grep -Evx "Database|information_schema|mysql|performance_schema|sys")

for DB in $DB_LIST; do

BACKUP_DB_DIR=$BACKUP_DIR/${DB}_${DATE}

[ ! -d $BACKUP_DB_DIR ] && mkdir -p $BACKUP_DB_DIR &>/dev/null

TABLE_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "use $DB;show tables;" 2>/dev/null)

for TABLE in $TABLE_LIST; do

BACKUP_NAME=$BACKUP_DB_DIR/${TABLE}.sql

if ! mysqldump -h"$HOST" -u"$USER" -p"$PASS" "$DB" "$TABLE" > "$BACKUP_NAME" 2>/dev/null; then

echo "$BACKUP_NAME backup failed!"

fi

done

done

5. Rotate Nginx access logs daily

#!/bin/bash

LOG_DIR=/usr/local/nginx/logs

YESTERDAY_TIME=$(date -d "yesterday" +%F)

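# Use yesterday's month so that the log rotated on the 1st lands in the month it belongs to
LOG_MONTH_DIR=$LOG_DIR/$(date -d "yesterday" +"%Y-%m")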

LOG_FILE_LIST="default.access.log"

for LOG_FILE in $LOG_FILE_LIST; do

[ ! -d $LOG_MONTH_DIR ] && mkdir -p $LOG_MONTH_DIR

mv $LOG_DIR/$LOG_FILE $LOG_MONTH_DIR/${LOG_FILE}_${YESTERDAY_TIME}

done

kill -USR1 $(cat /var/run/nginx.pid)
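The archive path is easiest to reason about when the month directory is derived from the log's own date. A minimal sketch of that path computation; the helper name `archive_path` is made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: build the archive path for a log file from the
# date (YYYY-MM-DD) of the day the log covers.
archive_path() {
    local log_dir=$1 log_file=$2 day=$3
    # ${day%-*} strips the trailing "-DD", leaving "YYYY-MM".
    printf '%s/%s/%s_%s\n' "$log_dir" "${day%-*}" "$log_file" "$day"
}

archive_path /usr/local/nginx/logs default.access.log 2024-01-31
```

For the log of 2024-01-31 this prints `/usr/local/nginx/logs/2024-01/default.access.log_2024-01-31`, even if the rotation itself runs on 1 February.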

6. Nginx access log analysis script

#!/bin/bash

# Log format: $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for"

LOG_FILE=$1

echo "Top 10 IPs by request count"

awk '{a[$1]++}END{print "UV:",length(a);for(v in a)print v,a[v]}' "$LOG_FILE" |sort -k2 -nr |head -10

echo "----------------------"

echo "IPs with the most requests in a time window"

# The bounds are compared as strings, so they only order correctly within one day, and the start must not be later than the end
awk '$4>="[27/Nov/2018:13:20:25" && $4<="[27/Nov/2018:16:20:49"{a[$1]++}END{for(v in a)print v,a[v]}' "$LOG_FILE" |sort -k2 -nr|head -10

echo "----------------------"

echo "Most requested pages (more than 10 hits)"

awk '{a[$7]++}END{print "PV:",length(a);for(v in a){if(a[v]>10)print v,a[v]}}' "$LOG_FILE" |sort -k2 -nr

echo "----------------------"

echo "Request counts per page and status code"

awk '{a[$7" "$9]++}END{for(v in a){if(a[v]>5)print v,a[v]}}' "$LOG_FILE"
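The same associative-array pattern can be checked on a few hand-written lines before pointing it at a real log. A minimal sketch, assuming the log format given in the comment at the top of the script (status code in field 9); the helper name and the sample lines are made up.

```shell
#!/bin/bash
# Hypothetical helper: count requests per HTTP status code ($9 in the
# assumed log format), most frequent first.
status_counts() {
    awk '{count[$9]++} END {for (code in count) print code, count[code]}' |
        sort -k2,2nr -k1,1
}

# Made-up sample: two 200 responses and one 404.
sample_log='1.1.1.1 - - [27/Nov/2018:13:20:25 +0800] "GET /a HTTP/1.1" 200 10 "-" "curl" "-"
1.1.1.2 - - [27/Nov/2018:13:20:26 +0800] "GET /b HTTP/1.1" 404 10 "-" "curl" "-"
1.1.1.1 - - [27/Nov/2018:13:20:27 +0800] "GET /a HTTP/1.1" 200 10 "-" "curl" "-"'

printf '%s\n' "$sample_log" | status_counts
```

The sample prints `200 2` followed by `404 1`.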

7. Real-time NIC traffic script

#!/bin/bash

NIC=$1

echo -e " In ------ Out"

while true; do

OLD_IN=$(awk '$0~"'$NIC'"{print $2}' /proc/net/dev)

OLD_OUT=$(awk '$0~"'$NIC'"{print $10}' /proc/net/dev)

sleep 1

NEW_IN=$(awk '$0~"'$NIC'"{print $2}' /proc/net/dev)

NEW_OUT=$(awk '$0~"'$NIC'"{print $10}' /proc/net/dev)

IN=$(printf "%.1f%s" "$((($NEW_IN-$OLD_IN)/1024))" "KB/s")

OUT=$(printf "%.1f%s" "$((($NEW_OUT-$OLD_OUT)/1024))" "KB/s")

echo "$IN $OUT"

sleep 1

done
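Bash arithmetic is integer-only, so a rate computed with `$(( ))` always ends in `.0` when printed with `%.1f`. If fractional KB/s values matter, the division can be handed to awk. A minimal sketch; the helper name `rate_kbs` is made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: turn two byte counters taken $3 seconds apart
# (default 1) into a KB/s figure with one decimal place.
rate_kbs() {
    local old=$1 new=$2 interval=${3:-1}
    awk -v o="$old" -v n="$new" -v t="$interval" \
        'BEGIN {printf "%.1f KB/s\n", (n - o) / 1024 / t}'
}

rate_kbs 1000 3560 1   # 2560 bytes in one second
```

Here 2560 bytes in one second is reported as `2.5 KB/s` instead of being truncated to 2.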

8. Server configuration initialization script

#!/bin/bash

# Set the time zone and synchronize the clock

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

if ! crontab -l |grep ntpdate &>/dev/null ; then

# Minute field set to 0 so the job runs once at 01:00 rather than every minute of that hour
(echo "0 1 * * * ntpdate time.windows.com >/dev/null 2>&1";crontab -l) | crontab

fi

# Disable SELinux (covers both enforcing and permissive)

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Disable the firewall

if egrep "7.[0-9]" /etc/redhat-release &>/dev/null; then

systemctl stop firewalld

systemctl disable firewalld

elif egrep "6.[0-9]" /etc/redhat-release &>/dev/null; then

service iptables stop

chkconfig iptables off

fi

# Show timestamps in command history

if ! grep -q HISTTIMEFORMAT /etc/bashrc; then

echo 'export HISTTIMEFORMAT="%F %T `whoami` "' >> /etc/bashrc

fi

# SSH session timeout

if ! grep "TMOUT=600" /etc/profile &>/dev/null; then

echo "export TMOUT=600" >> /etc/profile

fi

# Disable remote root login

sed -i 's/#PermitRootLogin yes/PermitRootLogin no/' /etc/ssh/sshd_config

# Stop cron jobs from sending mail

sed -i 's/^MAILTO=root/MAILTO=""/' /etc/crontab

# Set the maximum number of open files

if ! grep "* soft nofile 65535" /etc/security/limits.conf &>/dev/null; then

cat >> /etc/security/limits.conf << EOF

* soft nofile 65535

* hard nofile 65535

EOF

fi

# Kernel tuning

cat >> /etc/sysctl.conf << EOF

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_tw_buckets = 20480

net.ipv4.tcp_max_syn_backlog = 20480

net.core.netdev_max_backlog = 262144

net.ipv4.tcp_fin_timeout = 20

EOF

# Reduce swap usage

echo "0" > /proc/sys/vm/swappiness

# Install performance analysis tools and other packages

yum -y install gcc make autoconf vim sysstat net-tools iostat if

9. Monitor disk usage on 100 servers

#!/bin/bash

HOST_INFO=host.info

for IP in $(awk '/^[^#]/{print $1}' $HOST_INFO); do

USER=$(awk -v ip=$IP 'ip==$1{print $2}' $HOST_INFO)

PORT=$(awk -v ip=$IP 'ip==$1{print $3}' $HOST_INFO)

TMP_FILE=/tmp/disk.tmp

ssh -p $PORT $USER@$IP 'df -h' > $TMP_FILE

USE_RATE_LIST=$(awk 'BEGIN{OFS="="}/^\/dev/{print $NF,int($5)}' $TMP_FILE)

for USE_RATE in $USE_RATE_LIST; do

PART_NAME=${USE_RATE%=*}

USE_RATE=${USE_RATE#*=}

if [ $USE_RATE -ge 80 ]; then

echo "Warning: $PART_NAME Partition usage $USE_RATE%!"

fi

done

done
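The threshold check can be exercised locally on captured `df -h` output, with no SSH involved. A minimal sketch; the helper name `over_threshold` and the sample output are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: read `df -h` output on stdin and print the mount
# point and usage of every /dev/* filesystem at or above $1 percent.
over_threshold() {
    local limit=$1
    awk -v limit="$limit" \
        '/^\/dev/ { use = int($5); if (use >= limit) print $NF, use "%" }'
}

# Made-up sample output.
sample_df='Filesystem      Size  Used Avail Use% Mounted on
/dev/sda1        50G   45G  5.0G  90% /
tmpfs           1.9G     0  1.9G   0% /dev/shm
/dev/sdb1       100G   20G   80G  20% /data'

printf '%s\n' "$sample_df" | over_threshold 80
```

Only the root filesystem is reported (`/ 90%`); the tmpfs line is skipped because it does not start with `/dev`.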

Scripts 1-9 are from the WeChat official account 我的小碗汤.

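10. Fetch the hostname from several machines concurrently, record how long each response takes, and redirect the results to hostname.txt. When all have finished, print the CPU information of the machine with the shortest time.

#!/bin/bash

# All hosts, separated by spaces

ALL_HOSTS=(IP_ADDRESS IP_ADDRESS)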

for host in ${ALL_HOSTS[*]}

do

{

start_time=$(date +'%s')

ssh $host "hostname" &>/dev/null

sleep 2

stop_time=$(date +'%s')

time_consuming=$((stop_time-start_time))

echo "$host: $time_consuming" >>hostname.txt

}&

done

wait

host=$(sort -n -k 2 hostname.txt | head -1 | awk -F':' '{print $1}')

ssh $host "top -b -n 1"

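11. Count Linux process information under /proc: print the total number of processes and the number of running, stopped, sleeping, and zombie processes.

Write all zombie process IDs to zombie.txt, then kill all zombie processes.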

#!/bin/bash

ALL_PROCESS=$(ls /proc/ | grep -E '^[0-9]+$')

running_count=0

stoped_count=0

sleeping_count=0

zombie_count=0

for pid in ${ALL_PROCESS[*]}

do

test -f /proc/$pid/status && state=$(egrep "State" /proc/$pid/status | awk '{print $2}')

case "$state" in

R)

running_count=$((running_count+1))

;;

T)

stoped_count=$((stoped_count+1))

;;

S)

sleeping_count=$((sleeping_count+1))

;;

Z)

zombie_count=$((zombie_count+1))

echo "$pid" >>zombie.txt

kill -9 "$pid"

;;

esac

done

echo -e "total: $((running_count+stoped_count+sleeping_count+zombie_count))\nrunning: $running_count\nstopped: $stoped_count\nsleeping: $sleeping_count\nzombie: $zombie_count"

12. Change the suffix of every ".sh" file in the current directory (including subdirectories) to ".shell", then delete the second line of each file.

#!/bin/bash

ALL_SH_FILE=$(find . -type f -name "*.sh")

for file in ${ALL_SH_FILE[*]}

do

# Strip only the trailing .sh; parameter expansion avoids treating "." as a regex wildcard

new_filename="${file%.sh}.shell"

mv "$file" "$new_filename"

sed -i '2d' "$new_filename"

done
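The renaming rule can be checked in isolation before any file is moved. A minimal sketch using bash parameter expansion; the helper name and the sample paths are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: map a path ending in .sh to the same path ending
# in .shell. ${file%.sh} removes only a trailing ".sh", so a ".sh" that
# occurs earlier in the path is left alone.
to_shell_suffix() {
    local file=$1
    printf '%s\n' "${file%.sh}.shell"
}

to_shell_suffix ./bin/deploy.sh
to_shell_suffix ./old.sh.d/run.sh
```

The two calls print `./bin/deploy.shell` and `./old.sh.d/run.shell`.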

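13. Check whether the directory /tmp/jstack exists: create it if it does not, and delete everything in it if it does.

Every hour, dump the jstack output of the inceptor server to a file named jstack_${current time}; whenever the directory holds more than 10 files, delete the oldest one.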

#!/bin/bash

DIRPATH='/tmp/jstack'

CURRENT_TIME=$(date +'%F'-'%H:%M:%S')

if [ ! -d "$DIRPATH" ];then

mkdir "$DIRPATH"

else

rm -rf "$DIRPATH"/*

fi

cd "$DIRPATH"

while true

do

sleep 3600

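# Replace inceptor with the name of your own Java process

pid=$(ps -ef | grep 'inceptor' | grep -v grep | awk '{print $2}')

# Take the timestamp inside the loop so that every dump gets its own file name
jstack $pid >> "jstack_$(date +'%F-%H:%M:%S')"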

dir_count=$(ls | wc -l)

if [ "$dir_count" -gt 10 ];then

rm -f $(ls -tr | head -1)

fi

done

14. Extract all of today's GC log entries from test.log and compute the average GC time and the longest GC time.

#!/bin/bash

awk '{print $2}' hive-server2.log | tr -d ':' | awk '{sum+=$1} END {print "avg:", sum/NR}' >>capture_hive_log.log

awk '{print $2}' hive-server2.log | tr -d ':' | awk '{max = 0} {if ($1+0 > max+0)max=$1} END {print "Max: ", max}'>>capture_hive_log.log

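15. Find the 20 IP addresses with the most requests on port 80 and check whether the smallest request count among them exceeds 500. If it does, write a system activity report to alert.txt; if not, retry after 600 s until there is output.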

#!/bin/bash

state="true"

while $state

do

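# Count connections to local port 80 per remote IP, keep the 20 largest counts, then take the smallest of those
SMALL_REQUESTS=$(netstat -ant | awk -F'[ :]+' '$5==80{count[$6]++} END {for(ip in count) print count[ip]}' | sort -rn | head -20 | tail -1)

if [ "${SMALL_REQUESTS:-0}" -gt 500 ];then

sar -A > alert.txt

state="false"

else

sleep 600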

continue

fi

done
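"The smallest count among the top 20" means sorting in descending order, keeping the first N lines, and taking the last of them. A minimal sketch of that selection on made-up numbers; the helper name `min_of_top` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read one number per line on stdin and print the
# smallest value among the $1 largest ones.
min_of_top() {
    local n=$1
    sort -rn | head -n "$n" | tail -n 1
}

# Made-up request counts.
printf '%s\n' 12 800 45 650 3 | min_of_top 3
```

The three largest values are 800, 650, and 45, so the sketch prints 45.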

16. Move files larger than 10K from the current directory to /tmp, then print the file names in order of size, largest first.

#!/bin/bash

# Destination directory

DIRPATH='/tmp'

# Directory to scan

FILEPATH='.'

find "$FILEPATH" -size +10k -type f | xargs -I {} mv {} "$DIRPATH"

ls -lS "$DIRPATH" | awk '{if(NR>1) print $NF}'

17. WeCom (企业微信) alerts

This script sends alerts through a WeCom (企业微信) application and can be used with Zabbix monitoring.

# -*- coding: utf-8 -*-
import requests
import json


class DLF:
    def __init__(self, corpid, corpsecret):
        self.url = "https://qyapi.weixin.qq.com/cgi-bin"
        self.corpid = corpid
        self.corpsecret = corpsecret
        self._token = self._get_token()

    def _get_token(self):
        '''
        Get the access_token for the WeCom API
        :return: the token, or the error text if the request fails
        '''
        token_url = self.url + "/gettoken?corpid=%s&corpsecret=%s" % (self.corpid, self.corpsecret)
        try:
            res = requests.get(token_url).json()
            token = res['access_token']
            return token
        except Exception as e:
            return str(e)

    def _get_media_id(self, file_obj):
        get_media_url = self.url + "/media/upload?access_token={}&type=file".format(self._token)
        data = {"media": file_obj}
        try:
            res = requests.post(url=get_media_url, files=data)
            media_id = res.json()['media_id']
            return media_id
        except Exception as e:
            return str(e)

    def send_text(self, agentid, content, touser=None, toparty=None):
        send_msg_url = self.url + "/message/send?access_token=%s" % (self._token)
        send_data = {
            "touser": touser,
            "toparty": toparty,
            "msgtype": "text",
            "agentid": agentid,
            "text": {
                "content": content
            }
        }
        try:
            res = requests.post(send_msg_url, data=json.dumps(send_data))
        except Exception as e:
            return str(e)

    def send_image(self, agentid, file_obj, touser=None, toparty=None):
        media_id = self._get_media_id(file_obj)
        send_msg_url = self.url + "/message/send?access_token=%s" % (self._token)
        send_data = {
            "touser": touser,
            "toparty": toparty,
            "msgtype": "image",
            "agentid": agentid,
            "image": {
                "media_id": media_id
            }
        }
        try:
            res = requests.post(send_msg_url, data=json.dumps(send_data))
        except Exception as e:
            return str(e)

18. FTP client

Operates on an FTP server through the ftplib module: upload, download, and so on.

# -*- coding: utf-8 -*-

from ftplib import FTP

from os import path

import copy

class FTPClient:

def __init__(self, host, user, passwd, port=21):

self.host = host

self.user = user

self.passwd = passwd

self.port = port

self.res = {'status': True, 'msg': None}

self._ftp = None

self._login()

def _login(self):

'''

登录FTP服务器

:return: 连接或登录出现异常时返回错误信息

'''

try:

self._ftp = FTP()

self._ftp.connect(self.host, self.port, timeout=30)

self._ftp.login(self.user, self.passwd)

except Exception as e:

return e

def upload(self, localpath, remotepath=None):

'''

上传ftp文件

:param localpath: local file path

:param remotepath: remote file path

:return:

'''

        if not localpath: return 'Please select a local file. '

        # If no remote path is given, upload to the current directory under the local file name
        if not remotepath:
            remotepath = path.basename(localpath)

        # Upload the file; storbinary needs an open binary file object, not a path
        with open(localpath, 'rb') as fp:
            self._ftp.storbinary('STOR ' + remotepath, fp)

def download(self, remotepath, localpath=None):

        '''
        Download a file from the FTP server
        :param localpath: local file path
        :param remotepath: remote file path
        :return:
        '''

if not remotepath: return 'Please select a remote file. '

#如果未传递本地文件路径,则下载到当前目录,文件名称同远程文件

if not localpath:

localpath = path.basename(remotepath)

#如果localpath是目录的话就和remotepath的basename拼接

if path.isdir(localpath):

localpath = path.join(localpath, path.basename(remotepath))

#写入本地文件

fp = open(localpath, 'wb')

#下载文件

self._ftp.retrbinary('RETR ' + remotepath, fp.write)

fp.close()

def nlst(self, dir='/'):

'''

查看目录下的内容

:return: 以列表形式返回目录下的所有内容

'''

files_list = self._ftp.nlst(dir)

return files_list

def rmd(self, dir=None):

'''

删除目录

:param dir: 目录名称

:return: 执行结果

'''

if not dir: return 'Please input dirname'

res = copy.deepcopy(self.res)

try:

del_d = self._ftp.rmd(dir)

res['msg'] = del_d

except Exception as e:

res['status'] = False

res['msg'] = str(e)

return res

def mkd(self, dir=None):

'''

创建目录

:param dir: 目录名称

:return: 执行结果

'''

if not dir: return 'Please input dirname'

res = copy.deepcopy(self.res)

try:

mkd_d = self._ftp.mkd(dir)

res['msg'] = mkd_d

except Exception as e:

res['status'] = False

res['msg'] = str(e)

return res

def del_file(self, filename=None):

'''

删除文件

:param filename: 文件名称

:return: 执行结果

'''

if not filename: return 'Please input filename'

res = copy.deepcopy(self.res)

try:

del_f = self._ftp.delete(filename)

res['msg'] = del_f

except Exception as e:

res['status'] = False

res['msg'] = str(e)

return res

def get_file_size(self, filenames=[]):

'''

获取文件大小,单位是字节

判断文件类型

:param filename: 文件名称

:return: 执行结果

'''

if not filenames: return {'msg': 'This is an empty directory'}

res_l = []

for file in filenames:

res_d = {}

#如果是目录或者文件不存在就会报错

try:

size = self._ftp.size(file)

type = 'f'

except:

#如果是路径的话size显示 - , file末尾加/ (/dir/)

size = '-'

type = 'd'

file = file + '/'

res_d['filename'] = file

res_d['size'] = size

res_d['type'] = type

res_l.append(res_d)

return res_l

def rename(self, old_name=None, new_name=None):

'''

重命名

:param old_name: 旧的文件或者目录名称

:param new_name: 新的文件或者目录名称

:return: 执行结果

'''

if not old_name or not new_name: return 'Please input old_name and new_name'

res = copy.deepcopy(self.res)

try:

rename_f = self._ftp.rename(old_name, new_name)

res['msg'] = rename_f

except Exception as e:

res['status'] = False

res['msg'] = str(e)

return res

def close(self):

'''

退出ftp连接

:return:

'''

try:

#向服务器发送quit命令

self._ftp.quit()

except Exception:

return 'No response from server'

finally:

#客户端单方面关闭连接

self._ftp.close()

19. SSH client

This script only connects with a key; for password login, a small modification is enough.

# -*- coding: utf-8 -*-
import paramiko


class SSHClient:
    def __init__(self, host, port, user, pkey):
        self.ssh_host = host
        self.ssh_port = port
        self.ssh_user = user
        self.private_key = paramiko.RSAKey.from_private_key_file(pkey)
        self.ssh = None
        self._connect()

    def _connect(self):
        self.ssh = paramiko.SSHClient()
        self.ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
        try:
            self.ssh.connect(hostname=self.ssh_host, port=self.ssh_port, username=self.ssh_user, pkey=self.private_key, timeout=10)
        except Exception:
            return 'ssh connect fail'

    def execute_command(self, command):
        stdin, stdout, stderr = self.ssh.exec_command(command)
        out = stdout.read()
        err = stderr.read()
        return out, err

    def close(self):
        self.ssh.close()

20. SaltStack client

Operates on the SaltStack master through its API to run commands.

#!/usr/bin/env python

# -*- coding:utf-8 -*-

import requests

import json

import copy

class SaltApi:

"""

定义salt api接口的类

初始化获得token

"""

def __init__(self):

self.url = "http://172.85.10.21:8000/"

self.username = "saltapi"

self.password = "saltapi"

self.headers = {"Content-type": "application/json"}

self.params = {'client': 'local', 'fun': None, 'tgt': None, 'arg': None}

self.login_url = self.url + "login"

self.login_params = {'username': self.username, 'password': self.password, 'eauth': 'pam'}

self.token = self.get_data(self.login_url, self.login_params)['token']

self.headers['X-Auth-Token'] = self.token

def get_data(self, url, params):

'''

请求url获取数据

:param url: 请求的url地址

:param params: 传递给url的参数

:return: 请求的结果

'''

send_data = json.dumps(params)

request = requests.post(url, data=send_data, headers=self.headers)

response = request.json()

result = dict(response)

return result['return'][0]

def get_auth_keys(self):

'''

获取所有已经认证的key

:return:

'''

data = copy.deepcopy(self.params)

data['client'] = 'wheel'

data['fun'] = 'key.list_all'

result = self.get_data(self.url, data)

try:

return result['data']['return']['minions']

except Exception as e:

return str(e)

def get_grains(self, tgt, arg='id'):

"""

获取系统基础信息

:tgt: 目标主机

:return:

"""

data = copy.deepcopy(self.params)

if tgt:

data['tgt'] = tgt

else:

data['tgt'] = '*'

data['fun'] = 'grains.item'

data['arg'] = arg

result = self.get_data(self.url, data)

return result

def execute_command(self, tgt, fun='cmd.run', arg=None, tgt_type='list', salt_async=False):

"""

执行saltstack 模块命令,类似于salt '*' cmd.run 'command'

:param tgt: 目标主机

:param fun: 模块方法 可为空

:param arg: 传递参数 可为空

:return: 执行结果

"""

data = copy.deepcopy(self.params)

if not tgt: return {'status': False, 'msg': 'target host not exist'}

if not arg:

data.pop('arg')

else:

data['arg'] = arg

if tgt != '*':

data['tgt_type'] = tgt_type

if salt_async: data['client'] = 'local_async'

data['fun'] = fun

data['tgt'] = tgt

result = self.get_data(self.url, data)

return result

    def jobs(self, fun='detail', jid=None):
        """
        Jobs
        :param fun: active, detail
        :param jid: Job ID
        :return: job execution result
        """
        data = {'client': 'runner'}
        if fun == 'detail':
            if not jid: return {'success': False, 'msg': 'job id is none'}
            data['fun'] = 'jobs.lookup_jid'
            data['jid'] = jid
        elif fun == 'active':
            # Assumption: 'active' maps to the jobs.active runner; the original rejected it
            data['fun'] = 'jobs.active'
        else:
            return {'success': False, 'msg': 'fun is active or detail'}
        result = self.get_data(self.url, data)
        return result

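21. vCenter client

Performs routine vCenter operations through the official SDK. I use this script in a CMDB platform to collect host information automatically and store it in a database.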

from pyVim.connect import SmartConnect, Disconnect, SmartConnectNoSSL

from pyVmomi import vim

from asset import models

import atexit

class Vmware:

def __init__(self, ip, user, password, port, idc, vcenter_id):

self.ip = ip

self.user = user

self.password = password

self.port = port

self.idc_id = idc

self.vcenter_id = vcenter_id

def get_obj(self, content, vimtype, name=None):

'''

列表返回,name 可以指定匹配的对象

'''

container = content.viewManager.CreateContainerView(content.rootFolder, vimtype, True)

obj = [ view for view in container.view ]

return obj

def get_esxi_info(self):

# 宿主机信息

esxi_host = {}

res = {"connect_status": True, "msg": None}

try:

# connect this thing

si = SmartConnectNoSSL(host=self.ip, user=self.user, pwd=self.password, port=self.port, connectionPoolTimeout=60)

except Exception as e:

res['connect_status'] = False

try:

res['msg'] = ("%s Caught vmodl fault : " + e.msg) % (self.ip)

except Exception as e:

res['msg'] = '%s: connection error' % (self.ip)

return res

# disconnect this thing

atexit.register(Disconnect, si)

content = si.RetrieveContent()

esxi_obj = self.get_obj(content, [vim.HostSystem])

for esxi in esxi_obj:

esxi_host[esxi.name] = {}

esxi_host[esxi.name]['idc_id'] = self.idc_id

esxi_host[esxi.name]['vcenter_id'] = self.vcenter_id

esxi_host[esxi.name]['server_ip'] = esxi.name

esxi_host[esxi.name]['manufacturer'] = esxi.summary.hardware.vendor

esxi_host[esxi.name]['server_model'] = esxi.summary.hardware.model

for i in esxi.summary.hardware.otherIdentifyingInfo:

if isinstance(i, vim.host.SystemIdentificationInfo):

esxi_host[esxi.name]['server_sn'] = i.identifierValue

# 系统名称

esxi_host[esxi.name]['system_name'] = esxi.summary.config.product.fullName

# cpu总核数

esxi_cpu_total = esxi.summary.hardware.numCpuThreads

# 内存总量 GB

esxi_memory_total = esxi.summary.hardware.memorySize / 1024 / 1024 / 1024

# 获取硬盘总量 GB

esxi_disk_total = 0

for ds in esxi.datastore:

esxi_disk_total += ds.summary.capacity / 1024 / 1024 / 1024

# 默认配置4核8G100G,根据这个配置计算剩余可分配虚拟机

default_configure = {

'cpu': 4,

'memory': 8,

'disk': 100

}

esxi_host[esxi.name]['vm_host'] = []

vm_usage_total_cpu = 0

vm_usage_total_memory = 0

vm_usage_total_disk = 0

# 虚拟机信息

for vm in esxi.vm:

host_info = {}

host_info['vm_name'] = vm.name

host_info['power_status'] = vm.runtime.powerState

host_info['cpu_total_kernel'] = str(vm.config.hardware.numCPU) + '核'

host_info['memory_total'] = str(vm.config.hardware.memoryMB) + 'MB'

host_info['system_info'] = vm.config.guestFullName

disk_info = ''

disk_total = 0

for d in vm.config.hardware.device:

if isinstance(d, vim.vm.device.VirtualDisk):

disk_total += d.capacityInKB / 1024 / 1024

disk_info += d.deviceInfo.label + ": " + str((d.capacityInKB) / 1024 / 1024) + ' GB' + ','

host_info['disk_info'] = disk_info

esxi_host[esxi.name]['vm_host'].append(host_info)

# 计算当前宿主机可用容量:总量 - 已分配的

if host_info['power_status'] == 'poweredOn':

vm_usage_total_cpu += vm.config.hardware.numCPU

vm_usage_total_disk += disk_total

vm_usage_total_memory += (vm.config.hardware.memoryMB / 1024)

esxi_cpu_free = esxi_cpu_total - vm_usage_total_cpu

esxi_memory_free = esxi_memory_total - vm_usage_total_memory

esxi_disk_free = esxi_disk_total - vm_usage_total_disk

esxi_host[esxi.name]['cpu_info'] = 'Total: %d核, Free: %d核' % (esxi_cpu_total, esxi_cpu_free)

esxi_host[esxi.name]['memory_info'] = 'Total: %dGB, Free: %dGB' % (esxi_memory_total, esxi_memory_free)

esxi_host[esxi.name]['disk_info'] = 'Total: %dGB, Free: %dGB' % (esxi_disk_total, esxi_disk_free)

# 计算cpu 内存 磁盘按照默认资源分配的最小值,即为当前可分配资源

if esxi_cpu_free < 4 or esxi_memory_free < 8 or esxi_disk_free < 100:

free_allocation_vm_host = 0

else:

free_allocation_vm_host = int(min(

[

esxi_cpu_free / default_configure['cpu'],

esxi_memory_free / default_configure['memory'],

esxi_disk_free / default_configure['disk']

]

))

esxi_host[esxi.name]['free_allocation_vm_host'] = free_allocation_vm_host

esxi_host['connect_status'] = True

return esxi_host

def write_to_db(self):

esxi_host = self.get_esxi_info()

# 连接失败

if not esxi_host['connect_status']:

return esxi_host

del esxi_host['connect_status']

for machine_ip in esxi_host:

# 物理机信息

esxi_host_dict = esxi_host[machine_ip]

# 虚拟机信息

virtual_host = esxi_host[machine_ip]['vm_host']

del esxi_host[machine_ip]['vm_host']

obj = models.EsxiHost.objects.create(**esxi_host_dict)

obj.save()

for host_info in virtual_host:

host_info['management_host_id'] = obj.id

obj2 = model

22. Get the expiry time of a domain's SSL certificate

Used for Zabbix alerting.

import re
import sys
import time
import subprocess
from datetime import datetime
from io import StringIO


def main(domain):
    f = StringIO()
    comm = f"curl -Ivs https://{domain} --connect-timeout 10"
    result = subprocess.getstatusoutput(comm)
    f.write(result[1])
    try:
        m = re.search('start date: (.*?)\n.*?expire date: (.*?)\n.*?common name: (.*?)\n.*?issuer: CN=(.*?)\n', f.getvalue(), re.S)
        start_date = m.group(1)
        expire_date = m.group(2)
        common_name = m.group(3)
        issuer = m.group(4)
    except Exception as e:
        return 999999999

    # time: parse the string into a struct_time
    start_date = time.strptime(start_date, "%b %d %H:%M:%S %Y GMT")
    start_date_st = time.strftime("%Y-%m-%d %H:%M:%S", start_date)

    # datetime: parse the string into a datetime object
    expire_date = datetime.strptime(expire_date, "%b %d %H:%M:%S %Y GMT")
    expire_date_st = datetime.strftime(expire_date, "%Y-%m-%d %H:%M:%S")

    # Days remaining
    remaining = (expire_date - datetime.now()).days
    return remaining


if __name__ == "__main__":
    domain = sys.argv[1]
    remaining_days = main(domain)
    print(remaining_days)

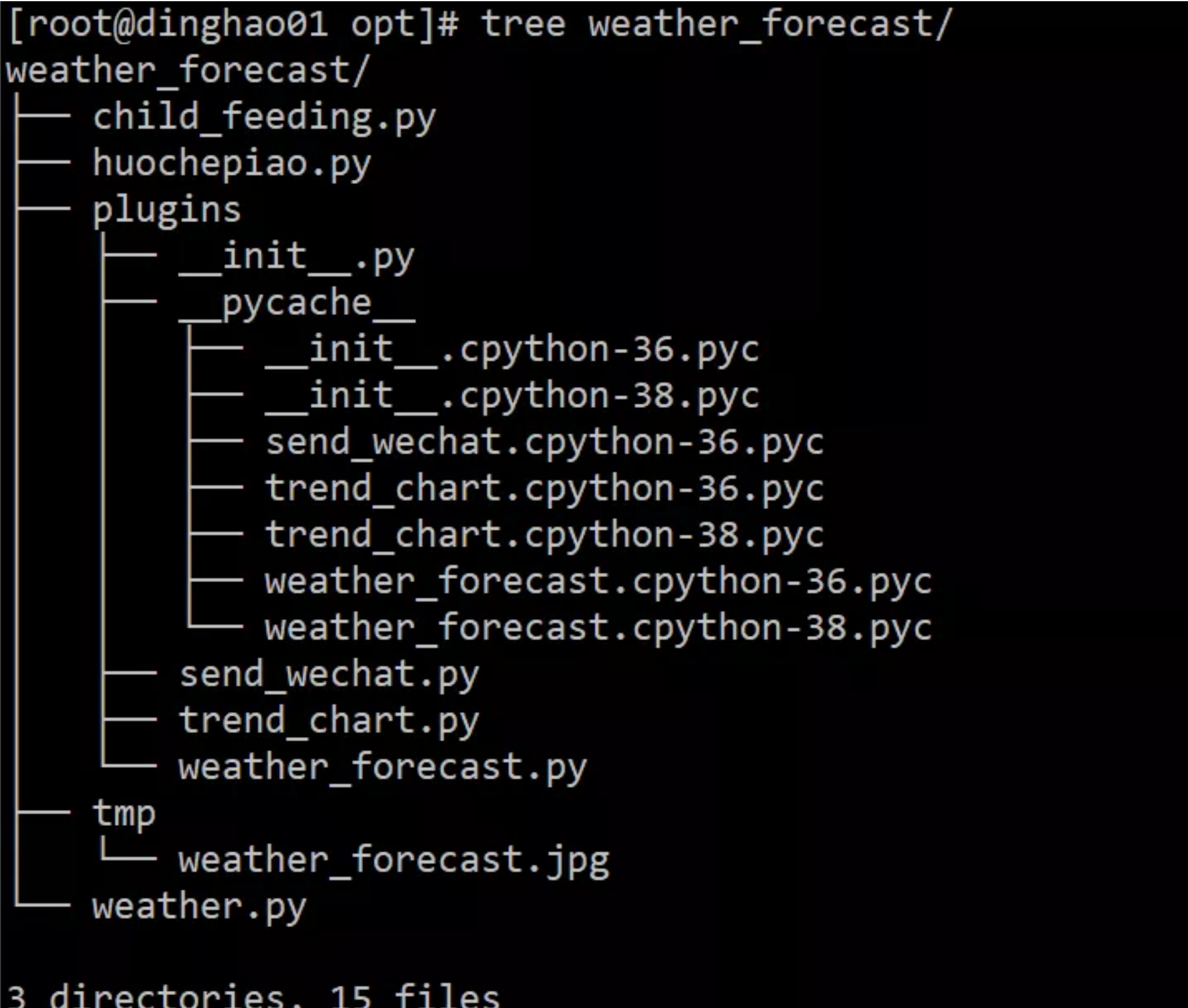

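23. Send today's weather forecast and a chart of the coming weather trend

This script sends my wife today's weather forecast and a chart of the coming weather trend. WeChat has disabled its web client, so messages can no longer be sent to WeChat directly; I send the notification through WeCom (企业微信) instead, which means adding your wife to your WeCom organization. Skip this one if it does not interest you.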

# -*- coding: utf-8 -*-

import requests

import json

import datetime

def weather(city):

url = "http://wthrcdn.etouch.cn/weather_mini?city=%s" % city

try:

data = requests.get(url).json()['data']

city = data['city']

ganmao = data['ganmao']

today_weather = data['forecast'][0]

res = "老婆今天是{}\n今天天气概况\n城市: {:<10}\n时间: {:<10}\n高温: {:<10}\n低温: {:<10}\n风力: {:<10}\n风向: {:<10}\n天气:{:<10}\n\n稍后会发送近期温度趋势图,请注意查看。\

".format(

ganmao,

city,

datetime.datetime.now().strftime('%Y-%m-%d'),

today_weather['high'].split()[1],

today_weather['low'].split()[1],

today_weather['fengli'].split('[')[2].split(']')[0],

today_weather['fengxiang'],today_weather['type'],

)

return {"source_data": data, "res": res}

except Exception as e:

return str(e)

Generate the weather forecast trend chart

# -*- coding: utf-8 -*-

import matplotlib.pyplot as plt

import re

import datetime

def Future_weather_states(forecast, save_path, day_num=5):

'''

展示未来的天气预报趋势图

:param forecast: 天气预报预测的数据

:param day_num: 未来几天

:return: 趋势图

'''

future_forecast = forecast

dict={}

for i in range(day_num):

data = []

date = future_forecast[i]["date"]

date = int(re.findall("\d+",date)[0])

data.append(int(re.findall("\d+", future_forecast[i]["high"])[0]))

data.append(int(re.findall("\d+", future_forecast[i]["low"])[0]))

data.append(future_forecast[i]["type"])

dict[date] = data

data_list = sorted(dict.items())

date=[]

high_temperature = []

low_temperature = []

for each in data_list:

date.append(each[0])

high_temperature.append(each[1][0])

low_temperature.append(each[1][1])

fig = plt.plot(date,high_temperature,"r",date,low_temperature,"b")

current_date = datetime.datetime.now().strftime('%Y-%m')

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

plt.xlabel(current_date)

plt.ylabel("°C")

plt.legend(["高温", "低温"])

plt.xticks(date)

plt.title("最近几天温度变化趋势")

plt.savefig(save_path)

Send to WeCom (企业微信)

# -*- coding: utf-8 -*-

import requests

import json

class DLF:

def __init__(self, corpid, corpsecret):

self.url = "https://qyapi.weixin.qq.com/cgi-bin"

self.corpid = corpid

self.corpsecret = corpsecret

self._token = self._get_token()

def _get_token(self):

'''

获取企业微信API接口的access_token

:return:

'''

token_url = self.url + "/gettoken?corpid=%s&corpsecret=%s" % (self.corpid, self.corpsecret)

try:

res = requests.get(token_url).json()

token = res['access_token']

return token

except Exception as e:

return str(e)

def _get_media_id(self, file_obj):

get_media_url = self.url + "/media/upload?access_token={}&type=file".format(self._token)

data = {"media": file_obj}

try:

res = requests.post(url=get_media_url, files=data)

media_id = res.json()['media_id']

return media_id

except Exception as e:

return str(e)

def send_text(self, agentid, content, touser=None, toparty=None):

send_msg_url = self.url + "/message/send?access_token=%s" % (self._token)

send_data = {

"touser": touser,

"toparty": toparty,

"msgtype": "text",

"agentid": agentid,

"text": {

"content": content

}

}

try:

res = requests.post(send_msg_url, data=json.dumps(send_data))

except Exception as e:

return str(e)

def send_image(self, agentid, file_obj, touser=None, toparty=None):

media_id = self._get_media_id(file_obj)

send_msg_url = self.url + "/message/send?access_token=%s" % (self._token)

send_data = {

"touser": touser,

"toparty": toparty,

"msgtype": "image",

"agentid": agentid,

"image": {

"media_id": media_id

}

}

try:

res = requests.post(send_msg_url, data=json.dumps(send_data))

except Exception as e:

return str(e)

Main script

# -*- coding: utf-8 -*-

from plugins.weather_forecast import weather

from plugins.trend_chart import Future_weather_states

from plugins.send_wechat import DLF

import os

# 企业微信相关信息

corpid = "xxx"

corpsecret = "xxx"

agentid = "xxx"

# 天气预报趋势图保存路径

_path = os.path.dirname(os.path.abspath(__file__))

save_path = os.path.join(_path ,'./tmp/weather_forecast.jpg')

# 获取天气预报信息

content = weather("大兴")

# 发送文字消息

dlf = DLF(corpid, corpsecret)

dlf.send_text(agentid=agentid, content=content['res'], toparty='1')

# 生成天气预报趋势图

Future_weather_states(content['source_data']['forecast'], save_path)

# 发送图片消息

file_obj = open(save_path, 'rb')

dlf.send_image(agentid=agentid, toparty='1', file_obj=file_obj)

24. Full SVN backup

Makes a full SVN backup with hotcopy and keeps backups for 7 days.

#!/bin/bash

# Filename : svn_backup_repos.sh

# Date : 2020/12/14

# Author : JakeTian

# Email : JakeTian@***.com

# Crontab : 59 23 * * * /bin/bash $BASE_PATH/svn_backup_repos.sh >/dev/null 2>&1

# Notes : add the script to crontab so that it runs every day

# Description: full SVN backup

set -e

SRC_PATH="/opt/svndata"

DST_PATH="/data/svnbackup"

LOG_FILE="$DST_PATH/logs/svn_backup.log"

SVN_BACKUP_C="/bin/svnadmin hotcopy"

SVN_LOOK_C="/bin/svnlook youngest"

TODAY=$(date +'%F')

cd $SRC_PATH

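# -mindepth 1 skips the directory itself; -printf '%f\n' (GNU find) prints bare names, so dots inside repository names survive
ALL_REPOS=$(find . -mindepth 1 -maxdepth 1 -type d ! -name 'httpd' ! -name 'bak' -printf '%f\n')

# Create the backup directory and the script log directory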

test -d $DST_PATH || mkdir -p $DST_PATH

test -d $DST_PATH/logs || mkdir $DST_PATH/logs

test -d $DST_PATH/$TODAY || mkdir $DST_PATH/$TODAY

# Back up the repositories

for repo in $ALL_REPOS

do

$SVN_BACKUP_C $SRC_PATH/$repo $DST_PATH/$TODAY/$repo

# Check whether the backup completed

if $SVN_LOOK_C $DST_PATH/$TODAY/$repo;then

echo "$TODAY: $repo Backup Success" >> $LOG_FILE

else

echo "$TODAY: $repo Backup Fail" >> $LOG_FILE

fi

done

# Back up the user password file and the permissions file

cp -p authz access.conf $DST_PATH/$TODAY

# Rotate the log file

mv $LOG_FILE $LOG_FILE-$TODAY

# Delete the backup from seven days ago

seven_days_ago=$(date -d "7 days ago" +'%F')

rm -rf "$DST_PATH/$seven_days_ago"

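25. Zabbix: monitor user password expiry

Used by Zabbix to monitor password expiry for Linux users whose shell is /bin/bash or /bin/sh. It triggers when 7 days of validity remain, and includes automatic user discovery.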

#!/bin/bash

diskarray=($(awk -F':' '$NF ~ /\/bin\/(bash|sh)$/{print $1}' /etc/passwd))

length=${#diskarray[@]}

printf "{\n"

printf '\t'"\"data\":["

for ((i=0;i<$length;i++))

do

printf '\n\t\t{'

printf "\"{#USER_NAME}\":\"${diskarray[$i]}\"}"

if [ $i -lt $((length-1)) ];then

printf ','

fi

done

printf "\n\t]\n"

printf "}\n"
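The discovery output can be tested without reading /etc/passwd by passing the user names as arguments. A minimal sketch that prints the same `{#USER_NAME}` structure on one line; the helper name `lld_json` and the sample user names are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: print a compact discovery JSON document with one
# {#USER_NAME} entry per argument.
lld_json() {
    local first=1 name
    printf '{"data":['
    for name in "$@"; do
        # A comma goes before every entry except the first.
        [ "$first" -eq 1 ] || printf ','
        printf '{"{#USER_NAME}":"%s"}' "$name"
        first=0
    done
    printf ']}\n'
}

lld_json root deploy
```

For the two sample users this prints `{"data":[{"{#USER_NAME}":"root"},{"{#USER_NAME}":"deploy"}]}`.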

Check user password expiry

#!/bin/bash

export LANG=en_US.UTF-8

# Alert when the expiry date is no more than 7 days ahead, so compare against now + 7 days
SEVEN_DAYS_LATER=$(date -d '+7 day' +'%s')

user="$1"

# Convert a date such as "Sep 09, 2018" to Unix time

expires_date=$(sudo chage -l "$user" | awk -F':' '/Password expires/{print $NF}' | sed -n 's/^ //p')

if [[ "$expires_date" != "never" ]];then

expires_date=$(date -d "$expires_date" +'%s')

if [ "$expires_date" -le "$SEVEN_DAYS_LATER" ];then

echo "1"

else

echo "0"

fi

else

echo "0"

fi
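The "7 days remaining" rule is plain epoch arithmetic and can be checked with fixed timestamps, without `chage` or a real account. A minimal sketch; the helper name `expires_within` and the timestamps are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: print 1 if the expiry time is at most $3 days
# after "now", otherwise 0. Both times are Unix epoch seconds.
expires_within() {
    local expires_epoch=$1 now_epoch=$2 days=$3
    if [ "$expires_epoch" -le $((now_epoch + days * 86400)) ]; then
        echo 1
    else
        echo 0
    fi
}

now=1700000000                                  # arbitrary fixed "now"
expires_within $((now + 3 * 86400)) "$now" 7    # 3 days left
expires_within $((now + 30 * 86400)) "$now" 7   # 30 days left
```

The first call prints 1 (inside the 7-day window) and the second prints 0.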

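26. Build a local YUM repository

Synchronize the yum repositories with rsync and serve them over HTTP with nginx.

The CentOS 6 mirrors have recently stopped working and appear to have been disabled on mirrors in China; if you find a suitable one, change the address yourself.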

#!/bin/bash

# Update the yum mirror

RsyncCommand="rsync -rvutH -P --delete --delete-after --delay-updates --bwlimit=1000"

DIR="/app/yumData"

LogDir="$DIR/logs"

Centos6Base="$DIR/Centos6/x86_64/Base"

Centos7Base="$DIR/Centos7/x86_64/Base"

Centos6Epel="$DIR/Centos6/x86_64/Epel"

Centos7Epel="$DIR/Centos7/x86_64/Epel"

Centos6Salt="$DIR/Centos6/x86_64/Salt"

Centos7Salt="$DIR/Centos7/x86_64/Salt"

Centos6Update="$DIR/Centos6/x86_64/Update"

Centos7Update="$DIR/Centos7/x86_64/Update"

Centos6Docker="$DIR/Centos6/x86_64/Docker"

Centos7Docker="$DIR/Centos7/x86_64/Docker"

Centos6Mysql5_7="$DIR/Centos6/x86_64/Mysql/Mysql5.7"

Centos7Mysql5_7="$DIR/Centos7/x86_64/Mysql/Mysql5.7"

Centos6Mysql8_0="$DIR/Centos6/x86_64/Mysql/Mysql8.0"

Centos7Mysql8_0="$DIR/Centos7/x86_64/Mysql/Mysql8.0"

MirrorDomain="rsync://rsync.mirrors.ustc.edu.cn"

# Create the directories if they do not exist

check_dir(){

for dir in $*

do

test -d $dir || mkdir -p $dir

done

}

# Check the rsync result

check_rsync_status(){

if [ $? -eq 0 ];then

echo "rsync success" >> $1

else

echo "rsync fail" >> $1

fi

}

check_dir $DIR $LogDir $Centos6Base $Centos7Base $Centos6Epel $Centos7Epel $Centos6Salt $Centos7Salt $Centos6Update $Centos7Update $Centos6Docker $Centos7Docker $Centos6Mysql5_7 $Centos7Mysql5_7 $Centos6Mysql8_0 $Centos7Mysql8_0

# Base yumrepo

#$RsyncCommand "$MirrorDomain"/repo/centos/6/os/x86_64/ $Centos6Base >> "$LogDir/centos6Base.log" 2>&1

# check_rsync_status "$LogDir/centos6Base.log"

$RsyncCommand "$MirrorDomain"/repo/centos/7/os/x86_64/ $Centos7Base >> "$LogDir/centos7Base.log" 2>&1

check_rsync_status "$LogDir/centos7Base.log"

# Epel yumrepo

# $RsyncCommand "$MirrorDomain"/repo/epel/6/x86_64/ $Centos6Epel >> "$LogDir/centos6Epel.log" 2>&1

# check_rsync_status "$LogDir/centos6Epel.log"

$RsyncCommand "$MirrorDomain"/repo/epel/7/x86_64/ $Centos7Epel >> "$LogDir/centos7Epel.log" 2>&1

check_rsync_status "$LogDir/centos7Epel.log"

# SaltStack yumrepo

# $RsyncCommand "$MirrorDomain"/repo/salt/yum/redhat/6/x86_64/ $Centos6Salt >> "$LogDir/centos6Salt.log" 2>&1

# ln -s $Centos6Salt/archive/$(ls $Centos6Salt/archive | tail -1) $Centos6Salt/latest

# check_rsync_status "$LogDir/centos6Salt.log"

$RsyncCommand "$MirrorDomain"/repo/salt/yum/redhat/7/x86_64/ $Centos7Salt >> "$LogDir/centos7Salt.log" 2>&1

check_rsync_status "$LogDir/centos7Salt.log"

# ln -s $Centos7Salt/archive/$(ls $Centos7Salt/archive | tail -1) $Centos7Salt/latest

# Docker yumrepo

$RsyncCommand "$MirrorDomain"/repo/docker-ce/linux/centos/7/x86_64/stable/ $Centos7Docker >> "$LogDir/centos7Docker.log" 2>&1

check_rsync_status "$LogDir/centos7Docker.log"

# centos update yumrepo

# $RsyncCommand "$MirrorDomain"/repo/centos/6/updates/x86_64/ $Centos6Update >> "$LogDir/centos6Update.log" 2>&1

# check_rsync_status "$LogDir/centos6Update.log"

$RsyncCommand "$MirrorDomain"/repo/centos/7/updates/x86_64/ $Centos7Update >> "$LogDir/centos7Update.log" 2>&1

check_rsync_status "$LogDir/centos7Update.log"

# mysql 5.7 yumrepo

# $RsyncCommand "$MirrorDomain"/repo/mysql-repo/yum/mysql-5.7-community/el/6/x86_64/ "$Centos6Mysql5_7" >> "$LogDir/centos6Mysql5.7.log" 2>&1

# check_rsync_status "$LogDir/centos6Mysql5.7.log"

$RsyncCommand "$MirrorDomain"/repo/mysql-repo/yum/mysql-5.7-community/el/7/x86_64/ "$Centos7Mysql5_7" >> "$LogDir/centos7Mysql5.7.log" 2>&1

check_rsync_status "$LogDir/centos7Mysql5.7.log"

# mysql 8.0 yumrepo

# $RsyncCommand "$MirrorDomain"/repo/mysql-repo/yum/mysql-8.0-community/el/6/x86_64/ "$Centos6Mysql8_0" >> "$LogDir/centos6Mysql8.0.log" 2>&1

# check_rsync_status "$LogDir/centos6Mysql8.0.log"

$RsyncCommand "$MirrorDomain"/repo/mysql-repo/yum/mysql-8.0-community/el/7/x86_64/ "$Centos7Mysql8_0" >> "$LogDir/centos7Mysql8.0.log" 2>&1

check_rsync_status "$LogDir/centos7Mysql8.0.log"
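The check_rsync_status helper reads $?, so it only gives the right answer when it is called immediately after the rsync command. A minimal sketch of an alternative, assuming a hypothetical helper named run_logged (it is not part of the original script), runs the command and records the result in one step:

```shell
#!/bin/bash
# run_logged LOGFILE COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND, appends its output to LOGFILE, then
# appends a success/fail marker based on the command's own exit status.
run_logged() {
    local log_file=$1
    shift
    if "$@" >> "$log_file" 2>&1; then
        echo "rsync success" >> "$log_file"
    else
        echo "rsync fail" >> "$log_file"
        return 1
    fi
}
```

A call would then look like run_logged "$LogDir/centos7Base.log" $RsyncCommand "$MirrorDomain"/repo/centos/7/os/x86_64/ $Centos7Base, with no separate status check afterwards.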

Scripts 10-26 are from 养乐多, reposted from the WeChat public account 「杰哥的IT之旅」

27. Back up files by current date

#!/bin/bash

# One month ago

historyTime=$(date "+%Y-%m-%d %H" -d '1 month ago')

echo ${historyTime}

historyTimeStamp=$(date -d "$historyTime" +%s)

echo ${historyTimeStamp}

# One week ago

date "+%Y-%m-%d %H" -d '7 day ago'

# First day of the current month

date_this_month=`date +%Y%m01`

# One day ago (yesterday)

date_today=`date -d '1 day ago' +%Y%m%d`

# One hour ago

date "+%Y-%m-%d %H" -d '-1 hours'
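The date expressions above are typically used to build backup file names. A minimal sketch, assuming GNU date (for the -d option) and a hypothetical helper named backup_name that is not part of the original script:

```shell
#!/bin/bash
# backup_name PREFIX [OFFSET]
# Hypothetical helper: builds a date-stamped archive name.
# OFFSET is any string GNU date -d understands; it defaults to "today".
backup_name() {
    local prefix=$1 offset=${2:-today}
    echo "${prefix}_$(date -d "$offset" +%Y%m%d).tar.gz"
}

backup_name etc               # name stamped with today's date
backup_name etc '1 day ago'   # name stamped with yesterday's date
```

Keeping the offset as a parameter means the same function can name today's archive and locate an older one for cleanup.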

28. DoS attack mitigation (automatically block attacking IPs)

#!/bin/bash

DATE=$(date +%d/%b/%Y:%H:%M)

# Nginx access log

LOG_FILE=/usr/local/nginx/logs/demo2.access.log

# Count requests per IP in the current minute and collect the IPs with more than 10 requests

ABNORMAL_IP=$(tail -n5000 $LOG_FILE |grep $DATE |awk '{a[$1]++}END{for(i in a)if(a[i]>10)print i}')

for IP in $ABNORMAL_IP; do

if [ $(iptables -vnL |grep -c "$IP") -eq 0 ]; then

iptables -I INPUT -s $IP -j DROP

echo "$(date +'%F_%T') $IP" >> /tmp/drop_ip.log

fi

done
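Before letting a script like this change firewall rules, it can help to see which addresses it would block. A minimal sketch of the counting step on its own, assuming a hypothetical function name abnormal_ips and a threshold passed as an argument (neither is in the original):

```shell
#!/bin/bash
# abnormal_ips THRESHOLD < access-log-lines
# Prints each client IP (first field of a log line) that appears
# more than THRESHOLD times in the input.
abnormal_ips() {
    awk -v limit="$1" '{ hits[$1]++ } END { for (ip in hits) if (hits[ip] > limit) print ip }'
}

# Dry run against a real log (lists candidates, does not call iptables):
# tail -n5000 "$LOG_FILE" | grep "$DATE" | abnormal_ips 10
```

The awk program is the same one the script uses; only the threshold is turned into a parameter so it can be tuned without editing the pipeline.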

29. Create multiple users in bulk and set their passwords

#!/bin/bash

USER_LIST=$@

USER_FILE=./user.info

for USER in $USER_LIST;do

if ! id $USER &>/dev/null; then

PASS=$(echo $RANDOM |md5sum |cut -c 1-8)

useradd $USER

echo $PASS | passwd --stdin $USER &>/dev/null

echo "$USER $PASS" >> $USER_FILE

echo "$USER User create successful."

else

echo "$USER User already exists!"

fi

done

30. Quickly set up a LAMP server and a WordPress blog on Ubuntu 20.04

For details, see: https://www.linuxmi.com/ubuntu-20-04-lamp-wordpress.html

#!/bin/sh

install_dir="/var/www/html"

#Creating Random WP Database Credentials

db_name="wp`date +%s`"

db_user=$db_name

db_password=`date |md5sum |cut -c '1-12'`

sleep 1

mysqlrootpass=`date |md5sum |cut -c '1-12'`

sleep 1

#### Install Packages for https and mysql

apt -y install apache2

apt -y install mysql-server

### Start http

rm /var/www/html/index.html

systemctl enable apache2

systemctl start apache2

#### Start mysql and set root password

systemctl enable mysql

systemctl start mysql

# Ubuntu 20.04 ships MySQL 8.0, which has no Password column or PASSWORD() function, so set the root password with ALTER USER

/usr/bin/mysql -e "ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY '$mysqlrootpass';"

touch /root/.my.cnf

chmod 640 /root/.my.cnf

echo "[client]">>/root/.my.cnf

echo "user=root">>/root/.my.cnf

echo "password="$mysqlrootpass>>/root/.my.cnf

####Install PHP

apt -y install php

apt -y install php-mysql

apt -y install php-gd

sed -i '0,/AllowOverride\ None/! {0,/AllowOverride\ None/ s/AllowOverride\ None/AllowOverride\ All/}' /etc/apache2/apache2.conf #Allow htaccess usage

systemctl restart apache2

####Download and extract latest WordPress Package

if test -f /tmp/latest.tar.gz

then

echo "WP is already downloaded."

else

echo "Downloading WordPress"

cd /tmp/ && wget "http://wordpress.org/latest.tar.gz";

fi

/bin/tar -C $install_dir -zxf /tmp/latest.tar.gz --strip-components=1

chown www-data: $install_dir -R

#### Create WP-config and set DB credentials

/bin/mv $install_dir/wp-config-sample.php $install_dir/wp-config.php

/bin/sed -i "s/database_name_here/$db_name/g" $install_dir/wp-config.php

/bin/sed -i "s/username_here/$db_user/g" $install_dir/wp-config.php

/bin/sed -i "s/password_here/$db_password/g" $install_dir/wp-config.php

cat << EOF >> $install_dir/wp-config.php

define('FS_METHOD', 'direct');

EOF

cat << EOF >> $install_dir/.htaccess

# BEGIN WordPress

<IfModule mod_rewrite.c>

RewriteEngine On

RewriteBase /

RewriteRule ^index\.php$ - [L]

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_FILENAME} !-d

RewriteRule . /index.php [L]

</IfModule>

# END WordPress

EOF

chown www-data: $install_dir -R

##### Set WP Salts

grep -A50 'table_prefix' $install_dir/wp-config.php > /tmp/wp-tmp-config

/bin/sed -i '/**#@/,/$p/d' $install_dir/wp-config.php

/usr/bin/lynx --dump -width 200 https://api.wordpress.org/secret-key/1.1/salt/ >> $install_dir/wp-config.php

/bin/cat /tmp/wp-tmp-config >> $install_dir/wp-config.php && rm -f /tmp/wp-tmp-config

/usr/bin/mysql -u root -e "CREATE DATABASE $db_name"

/usr/bin/mysql -u root -e "CREATE USER '$db_name'@'localhost' IDENTIFIED WITH mysql_native_password BY '$db_password';"

/usr/bin/mysql -u root -e "GRANT ALL PRIVILEGES ON $db_name.* TO '$db_user'@'localhost';"

######Display generated passwords to log file.

echo "Database Name: " $db_name

echo "Database User: " $db_user

echo "Database Password: " $db_password

echo "Mysql root password: " $mysqlrootpass

31. Back up a MySQL database automatically every day

#!/bin/sh

# Database info

DB_USER="batsing"

DB_PASS="batsingpw"

DB_HOST="localhost"

DB_NAME="timepusher"

# Other variables

BIN_DIR="/usr/bin" # directory containing the MySQL binaries

BCK_DIR="/mnt/mysqlBackup" # directory for backup files

DATE=`date +%F`

# TODO

# /usr/bin/mysqldump --opt -ubatsing -pbatsingpw -hlocalhost timepusher > /mnt/mysqlBackup/db_`date +%F`.sql

$BIN_DIR/mysqldump --opt -u$DB_USER -p$DB_PASS -h$DB_HOST $DB_NAME > $BCK_DIR/db_$DATE.sql

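# Restoring the database

# Import the previous day's *.sql file with MySQL-Front to restore the data

32. MySQL database backup (single loop, one dump per database)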

#!/bin/bash

DATE=$(date +%F_%H-%M-%S)

HOST=localhost

USER=backup

PASS=123.com

BACKUP_DIR=/data/db_backup

DB_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "show databases;" 2>/dev/null |egrep -v "Database|information_schema|mysql|performance_schema|sys")

for DB in $DB_LIST; do

BACKUP_NAME=$BACKUP_DIR/${DB}_${DATE}.sql

if ! mysqldump -h$HOST -u$USER -p$PASS -B $DB > $BACKUP_NAME 2>/dev/null; then

echo "$BACKUP_NAME backup failed!"

fi

done

33. MySQL database backup (nested loops, one dump per table)

#!/bin/bash

DATE=$(date +%F_%H-%M-%S)

HOST=localhost

USER=backup

PASS=123.com

BACKUP_DIR=/data/db_backup

DB_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "show databases;" 2>/dev/null |egrep -v "Database|information_schema|mysql|performance_schema|sys")

for DB in $DB_LIST; do

BACKUP_DB_DIR=$BACKUP_DIR/${DB}_${DATE}

[ ! -d $BACKUP_DB_DIR ] && mkdir -p $BACKUP_DB_DIR &>/dev/null

TABLE_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "use $DB;show tables;" 2>/dev/null)

for TABLE in $TABLE_LIST; do

BACKUP_NAME=$BACKUP_DB_DIR/${TABLE}.sql

if ! mysqldump -h$HOST -u$USER -p$PASS $DB $TABLE > $BACKUP_NAME 2>/dev/null; then

echo "$BACKUP_NAME backup failed!"

fi

done

done

34. Rotate Nginx logs as required

#!/bin/bash

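# Log files in the installation directory

base_log_path='/usr/local/openresty/nginx/logs/access.log'

base_error_path='/usr/local/openresty/nginx/logs/error.log'

# Directory where the rotated logs are kept

log_path='/data_lytdev_dir/nginx/logs/'

# Month of yesterday's date

log_month=$(date -d yesterday +"%Y%m")

# Day of yesterday's date (the job runs in the early morning, so the saved log covers the previous day)

log_day=$(date -d yesterday +"%d")

# Create the target directory

mkdir -p $log_path/$log_month

# Move the log files from the installation directory to the storage location

mv $base_log_path $log_path/$log_month/access_$log_day.log

mv $base_error_path $log_path/$log_month/error_$log_day.log

# Then signal Nginx to reopen its log files

# USR1 tells the Nginx master process to reopen its logs, which completes the rotation

kill -USR1 `cat /usr/local/openresty/nginx/logs/nginx.pid`

# Crontab entry: rotate the logs at 01:00 every day

# 0 1 * * * /usr/local/openresty/nginx/logs/log_rotate.sh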

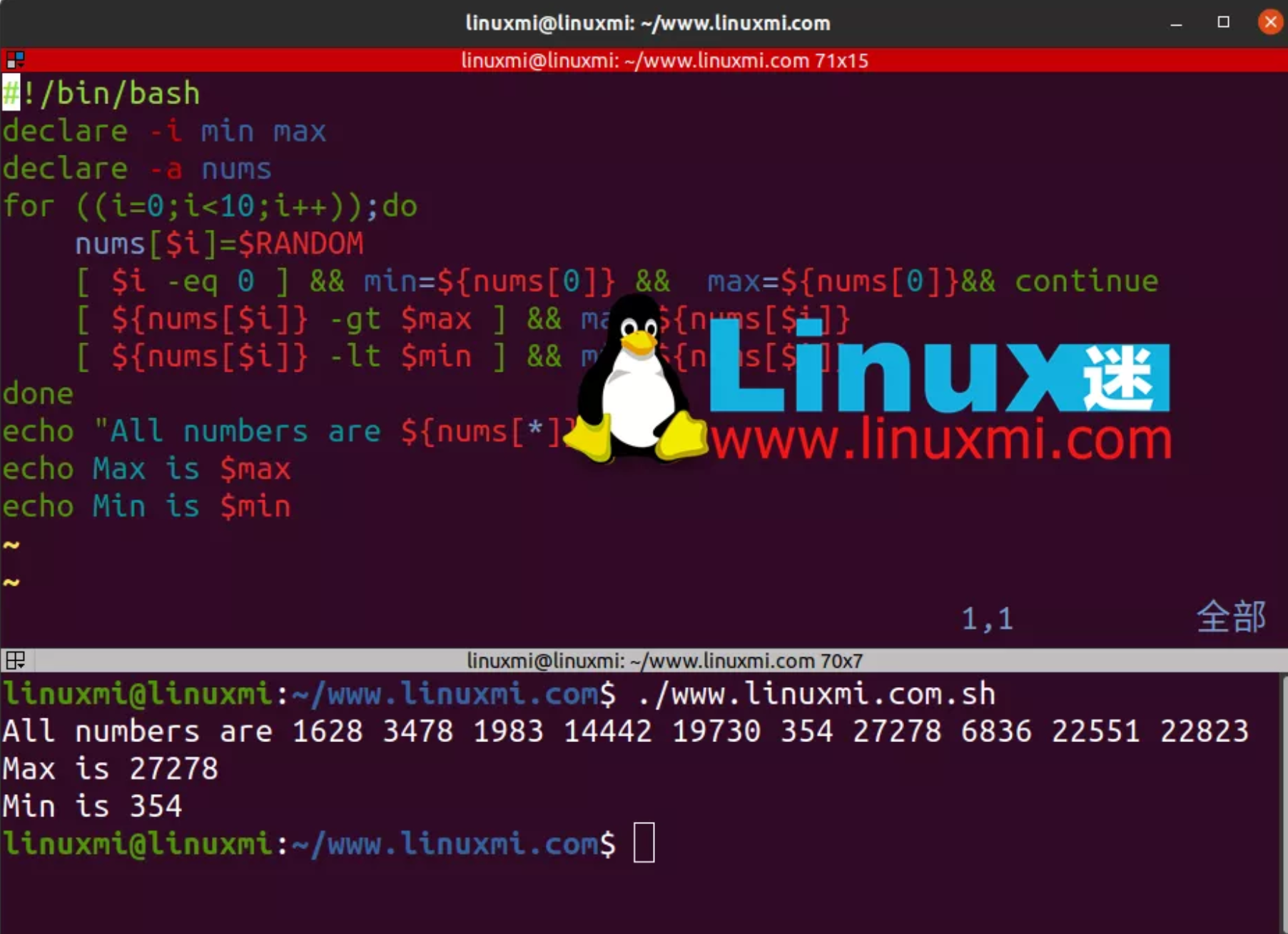

35. Generate 10 random numbers, store them in an array, and find the maximum and minimum

#!/bin/bash

declare -i min max

declare -a nums

for ((i=0;i<10;i++));do

nums[$i]=$RANDOM

[ $i -eq 0 ] && min=${nums[0]} && max=${nums[0]}&& continue

[ ${nums[$i]} -gt $max ] && max=${nums[$i]}

[ ${nums[$i]} -lt $min ] && min=${nums[$i]}

done

echo "All numbers are ${nums[*]}"

echo Max is $max

echo Min is $min

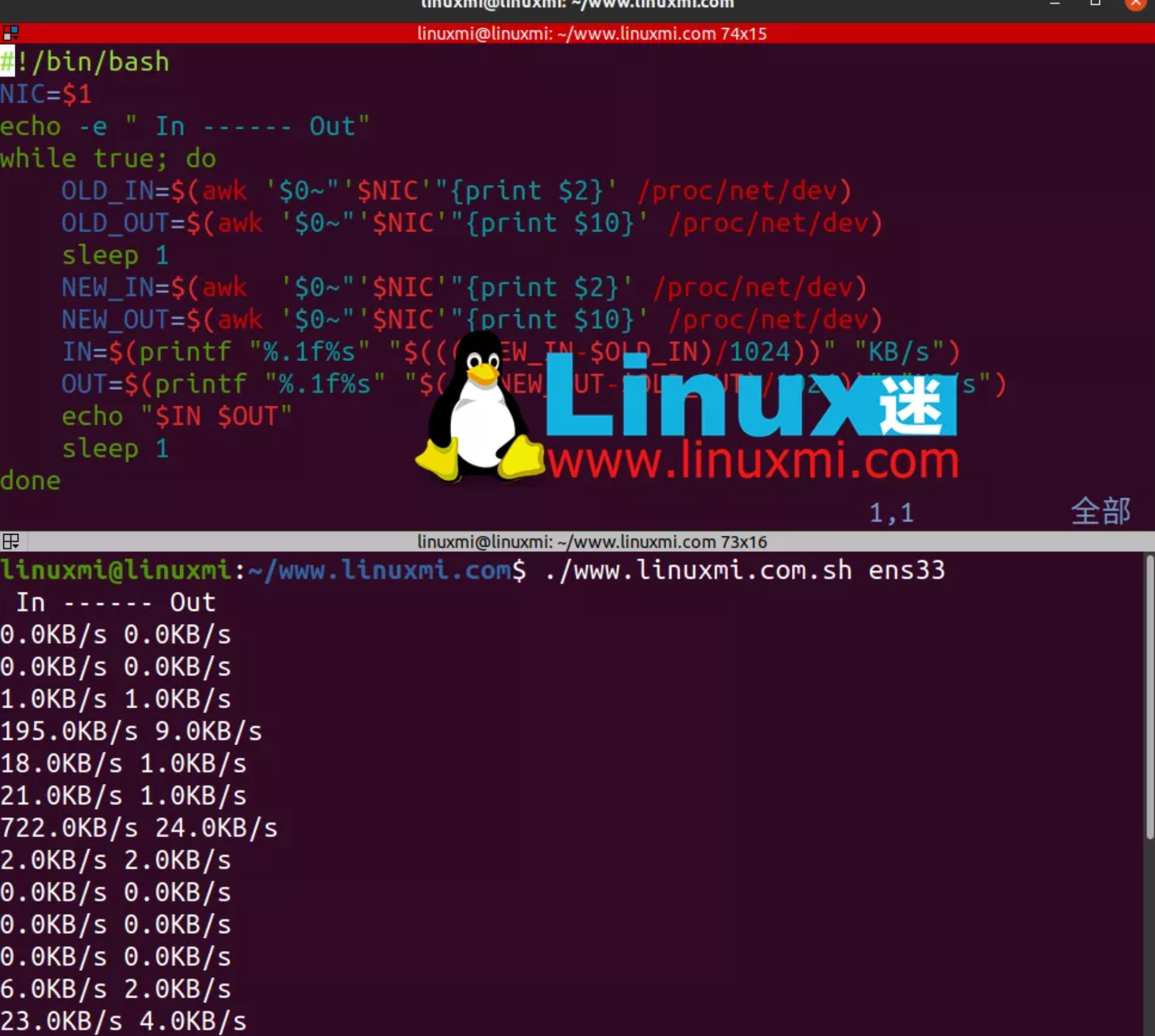

36. View real-time NIC traffic

#!/bin/bash

NIC=$1

echo -e " In ------ Out"

while true; do

OLD_IN=$(awk '$0~"'$NIC'"{print $2}' /proc/net/dev)

OLD_OUT=$(awk '$0~"'$NIC'"{print $10}' /proc/net/dev)

sleep 1

NEW_IN=$(awk '$0~"'$NIC'"{print $2}' /proc/net/dev)

NEW_OUT=$(awk '$0~"'$NIC'"{print $10}' /proc/net/dev)

IN=$(printf "%.1f%s" "$((($NEW_IN-$OLD_IN)/1024))" "KB/s")

OUT=$(printf "%.1f%s" "$((($NEW_OUT-$OLD_OUT)/1024))" "KB/s")

echo "$IN $OUT"

sleep 1

done
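The script above selects the interface with a regular-expression match on the whole line, so a name such as eth0 also matches veth0, and integer division always prints a rate ending in .0. A minimal sketch of a stricter parser and a fractional rate calculation follows; the function names nic_bytes and rate_kb are hypothetical, and the input is assumed to be in /proc/net/dev format:

```shell
#!/bin/bash
# nic_bytes NIC < /proc/net/dev
# Prints "<rx_bytes> <tx_bytes>" for the interface whose name is exactly NIC.
# The colon after the interface name is replaced by a space first, so the
# name becomes field 1, received bytes field 2, transmitted bytes field 10.
nic_bytes() {
    awk -v nic="$1" '{ sub(/:/, " ") } $1 == nic { print $2, $10 }'
}

# rate_kb OLD_BYTES NEW_BYTES
# Prints the difference in KB with one decimal place.
rate_kb() {
    awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f", (new - old) / 1024 }'
}
```

In the loop, read the counters with nic_bytes "$NIC" < /proc/net/dev before and after the sleep, then pass each pair of values to rate_kb.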

Scripts 27-36 are from: Linux迷, https://www.linuxmi.com/shell-script-10.html

37. Initialize server system configuration

#!/bin/bash

# Install performance-analysis tools and other utilities (iostat is provided by the sysstat package)

yum install gcc make autoconf vim sysstat net-tools iftop iotop wget lrzsz lsof unzip openssh-clients ntpdate -y

# Set the time zone and synchronize the clock

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

if ! crontab -l |grep ntpdate &>/dev/null ; then

(echo "0 1 * * * ntpdate time.windows.com >/dev/null 2>&1";crontab -l) |crontab

fi

# Disable SELinux (takes effect after a reboot)

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Turn off the firewall

if egrep "7.[0-9]" /etc/redhat-release &>/dev/null; then

systemctl stop firewalld

systemctl disable firewalld

elif egrep "6.[0-9]" /etc/redhat-release &>/dev/null; then

service iptables stop

chkconfig iptables off

fi

# Show timestamps in the command history

if ! grep HISTTIMEFORMAT /etc/bashrc; then

echo 'export HISTTIMEFORMAT="%Y-%m-%d %H:%M:%S `whoami` "' >> /etc/bashrc

fi

# Idle timeout for SSH sessions

if ! grep "TMOUT=600" /etc/profile &>/dev/null; then

echo "export TMOUT=600" >> /etc/profile

fi

# Disable remote root login. Be sure to add a regular user first and allow it to su to root

sed -i 's/#PermitRootLogin yes/PermitRootLogin no/' /etc/ssh/sshd_config

# Stop cron jobs from sending mail

sed -i 's/^MAILTO=root/MAILTO=""/' /etc/crontab

# Set the maximum number of open files

if ! grep "* soft nofile 65535" /etc/security/limits.conf &>/dev/null; then

cat >> /etc/security/limits.conf << EOF

* soft nofile 65535

* hard nofile 65535

EOF

fi

# Kernel parameter tuning

cat >> /etc/sysctl.conf << EOF

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_tw_buckets = 20480

net.ipv4.tcp_max_syn_backlog = 20480

net.core.netdev_max_backlog = 262144

net.ipv4.tcp_fin_timeout = 20

EOF

# Reduce swap usage

echo "0" > /proc/sys/vm/swappiness

38. Create multiple users in bulk and set their passwords

#!/bin/bash

USER_LIST=$@

USER_FILE=./user.info

for USER in $USER_LIST;do

if ! id $USER &>/dev/null; then

PASS=$(echo $RANDOM |md5sum |cut -c 1-8)

useradd $USER

echo $PASS | passwd --stdin $USER &>/dev/null

echo "$USER $PASS" >> $USER_FILE

echo "$USER User create successful."

else

echo "$USER User already exists!"

fi

done

39. Check server utilization with one command

#!/bin/bash

function cpu(){

util=$(vmstat | awk '{if(NR==3)print $13+$14}')

iowait=$(vmstat | awk '{if(NR==3)print $16}')

echo "CPU - utilization: ${util}%, I/O wait: ${iowait}%"

}

function memory (){

total=`free -m |awk '{if(NR==2)printf "%.1f",$2/1024}'`

used=`free -m |awk '{if(NR==2) printf "%.1f",($2-$NF)/1024}'`

available=`free -m |awk '{if(NR==2) printf "%.1f",$NF/1024}'`

echo "Memory - total: ${total}G, used: ${used}G, available: ${available}G"

}

disk(){

fs=$(df -h |awk '/^\/dev/{print $1}')

for p in $fs; do

mounted=$(df -h |awk '$1=="'$p'"{print $NF}')

size=$(df -h |awk '$1=="'$p'"{print $2}')

used=$(df -h |awk '$1=="'$p'"{print $3}')

used_percent=$(df -h |awk '$1=="'$p'"{print $5}')

echo "Disk - mount point: $mounted, size: $size, used: $used, usage: $used_percent"

done

}

function tcp_status() {

summary=$(ss -antp |awk '{status[$1]++}END{for(i in status) printf i":"status[i]" "}')

echo "TCP connection states - $summary"

}

cpu

memory

disk

tcp_status

40. Find processes using too much CPU or memory

#!/bin/bash

echo "-------------------Top 10 by CPU usage--------------------------------"

ps -eo user,pid,pcpu,pmem,args --sort=-pcpu |head -n 10

echo "-------------------Top 10 by memory usage--------------------------------"

ps -eo user,pid,pcpu,pmem,args --sort=-pmem |head -n 10

41. View real-time NIC traffic

#!/bin/bash

eth0=$1

echo -e "Traffic in -- Traffic out"

while true; do

old_in=$(cat /proc/net/dev |grep $eth0 |awk '{print $2}')

old_out=$(cat /proc/net/dev |grep $eth0 |awk '{print $10}')

sleep 1

new_in=$(cat /proc/net/dev |grep $eth0 |awk '{print $2}')

new_out=$(cat /proc/net/dev |grep $eth0 |awk '{print $10}')

in=$(printf "%.1f%s" "$((($new_in-$old_in)/1024))" "KB/s")

out=$(printf "%.1f%s" "$((($new_out-$old_out)/1024))" "KB/s")

echo "$in $out"

done

42. Monitor disk usage on multiple servers

#!/bin/bash

HOST_INFO=host.info

for IP in $(awk '/^[^#]/{print $1}' $HOST_INFO); do

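# Look up the user name and port for this host

USER=$(awk -v ip=$IP 'ip==$1{print $2}' $HOST_INFO)

PORT=$(awk -v ip=$IP 'ip==$1{print $3}' $HOST_INFO)

# Temporary file that holds the df output

TMP_FILE=/tmp/disk.tmp

# Log in with an SSH key and fetch the host's disk information

ssh -p $PORT $USER@$IP 'df -h' > $TMP_FILE

# Build a list of "mount point=usage percent" pairs

USE_RATE_LIST=$(awk 'BEGIN{OFS="="}/^\/dev/{print $NF,int($5)}' $TMP_FILE)

# Loop over the list and check each entry

for USE_RATE in $USE_RATE_LIST; do

# Value to the left of the equals sign (=): the mount point

PART_NAME=${USE_RATE%=*}

# Value to the right of the equals sign (=): the usage percentage

USE_RATE=${USE_RATE#*=}

# Compare against the threshold

if [ $USE_RATE -ge 80 ]; then

echo "Warning: $PART_NAME Partition usage $USE_RATE%!"

echo "Disk usage on server $IP is too high, please deal with it promptly" | mail -s "Low disk space warning" your_qq@qq.com

else

echo "Disk space on $PART_NAME of server $IP is fine"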

fi

done

done
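The parsing step can be tried without any remote host by feeding it saved df output. A minimal sketch, assuming a hypothetical function name over_threshold and the usual df -h column layout (usage in column 5, mount point in the last column):

```shell
#!/bin/bash
# over_threshold LIMIT < df-output
# Prints "<mount point>=<usage percent>" for every /dev filesystem whose
# usage is at or above LIMIT. int() drops the trailing % sign.
over_threshold() {
    awk -v limit="$1" '/^\/dev/ { use = int($5); if (use >= limit) print $NF "=" use }'
}

# Against a remote host, as in the script above:
# ssh -p "$PORT" "$USER@$IP" 'df -h' | over_threshold 80
```

Piping the ssh output straight into the function also avoids the shared temporary file /tmp/disk.tmp.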

43. Check a batch of websites for failures and send mail notifications

#!/bin/bash

URL_LIST="www.baidu.com www.ctnrs.com www.der-matech.net.cn www.der-matech.com.cn www.der-matech.cn www.der-matech.top www.der-matech.org"

for URL in $URL_LIST; do

FAIL_COUNT=0

for ((i=1;i<=3;i++)); do

HTTP_CODE=$(curl -o /dev/null --connect-timeout 3 -s -w "%{http_code}" $URL)

if [ $HTTP_CODE -eq 200 ]; then

echo "$URL OK"

break

else

echo "$URL retry $FAIL_COUNT"

let FAIL_COUNT++

fi

done

if [ $FAIL_COUNT -eq 3 ]; then

echo "Warning: $URL Access failure!"

echo "Site $URL is down, please deal with it promptly" | mail -s "$URL site critical" 1794748404@qq.com

fi

done
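The three-attempt loop above is a general retry pattern. A minimal sketch that separates the retry logic from the HTTP check, assuming hypothetical function names retry and http_ok (they are not in the original script):

```shell
#!/bin/bash
# retry MAX_ATTEMPTS COMMAND [ARGS...]
# Runs COMMAND up to MAX_ATTEMPTS times and returns 0 on the first success,
# or 1 if every attempt fails.
retry() {
    local max=$1 attempt=1
    shift
    while [ "$attempt" -le "$max" ]; do
        "$@" && return 0
        attempt=$((attempt + 1))
    done
    return 1
}

# http_ok URL
# Succeeds only when curl reports HTTP status 200 for URL.
http_ok() {
    [ "$(curl -o /dev/null --connect-timeout 3 -s -w '%{http_code}' "$1")" = "200" ]
}
```

With these in place, the body of the URL loop reduces to: retry 3 http_ok "$URL" || echo "Warning: $URL Access failure!"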

44. Run commands remotely on a batch of hosts

#!/bin/bash

COMMAND=$*

HOST_INFO=host.info

for IP in $(awk '/^[^#]/{print $1}' $HOST_INFO); do

USER=$(awk -v ip=$IP 'ip==$1{print $2}' $HOST_INFO)

PORT=$(awk -v ip=$IP 'ip==$1{print $3}' $HOST_INFO)

PASS=$(awk -v ip=$IP 'ip==$1{print $4}' $HOST_INFO)

expect -c "

spawn ssh -p $PORT $USER@$IP

expect {

\"(yes/no)\" {send \"yes\r\"; exp_continue}

\"password:\" {send \"$PASS\r\"; exp_continue}

\"$USER@*\" {send \"$COMMAND\r exit\r\"; exp_continue}

}

"

echo "-------------------"

done

45. One-click deployment of an LNMP web platform

#!/bin/bash

NGINX_V=1.15.6

PHP_V=5.6.36

TMP_DIR=/tmp

INSTALL_DIR=/usr/local

PWD_C=$PWD

echo

echo -e "\tMenu\n"

echo -e "1. Install Nginx"

echo -e "2. Install PHP"

echo -e "3. Install MySQL"

echo -e "4. Deploy LNMP"

echo -e "9. Quit"

function command_status_check() {

if [ $? -ne 0 ]; then

echo $1

exit

fi

}

function install_nginx() {

cd $TMP_DIR

yum install -y gcc gcc-c++ make openssl-devel pcre-devel wget

wget http://nginx.org/download/nginx-${NGINX_V}.tar.gz

tar zxf nginx-${NGINX_V}.tar.gz

cd nginx-${NGINX_V}

./configure --prefix=$INSTALL_DIR/nginx \
--with-http_ssl_module \
--with-http_stub_status_module \
--with-stream

command_status_check "Nginx - environment check (configure) failed!"

make -j 4

command_status_check "Nginx - build failed!"

make install

command_status_check "Nginx - installation failed!"

mkdir -p $INSTALL_DIR/nginx/conf/vhost

alias cp=cp ; cp -rf $PWD_C/nginx.conf $INSTALL_DIR/nginx/conf

rm -rf $INSTALL_DIR/nginx/html/*

echo "ok" > $INSTALL_DIR/nginx/html/status.html

echo '<?php echo "ok"?>' > $INSTALL_DIR/nginx/html/status.php

$INSTALL_DIR/nginx/sbin/nginx

command_status_check "Nginx - failed to start!"

}

function install_php() {

cd $TMP_DIR

yum install -y gcc gcc-c++ make gd-devel libxml2-devel \
libcurl-devel libjpeg-devel libpng-devel openssl-devel \
libmcrypt-devel libxslt-devel libtidy-devel

wget http://docs.php.net/distributions/php-${PHP_V}.tar.gz

tar zxf php-${PHP_V}.tar.gz

cd php-${PHP_V}

./configure --prefix=$INSTALL_DIR/php \
--with-config-file-path=$INSTALL_DIR/php/etc \
--enable-fpm --enable-opcache \
--with-mysql --with-mysqli --with-pdo-mysql \
--with-openssl --with-zlib --with-curl --with-gd \
--with-jpeg-dir --with-png-dir --with-freetype-dir \
--enable-mbstring --enable-hash

command_status_check "PHP - environment check (configure) failed!"

make -j 4

command_status_check "PHP - build failed!"

make install

command_status_check "PHP - installation failed!"

cp php.ini-production $INSTALL_DIR/php/etc/php.ini

cp sapi/fpm/php-fpm.conf $INSTALL_DIR/php/etc/php-fpm.conf

cp sapi/fpm/init.d.php-fpm /etc/init.d/php-fpm

chmod +x /etc/init.d/php-fpm

/etc/init.d/php-fpm start

command_status_check "PHP - failed to start!"

}

read -p "Enter a number: " number

case $number in

1)

install_nginx;;

2)

install_php;;

3)

echo "MySQL installation is not implemented in this script."; exit 1;;

4)

install_nginx

install_php

;;

9)

exit;;

esac

46. Monitor MySQL master-slave replication status

#!/bin/bash

HOST=localhost

USER=root

PASSWD=123.com

IO_SQL_STATUS=$(mysql -h$HOST -u$USER -p$PASSWD -e 'show slave status\G' 2>/dev/null |awk '/Slave_.*_Running:/{print $1$2}')

for i in $IO_SQL_STATUS; do

THREAD_STATUS_NAME=${i%:*}

THREAD_STATUS=${i#*:}

if [ "$THREAD_STATUS" != "Yes" ]; then

echo "Error: MySQL Master-Slave $THREAD_STATUS_NAME status is $THREAD_STATUS!" |mail -s "Master-Slave Status" xxx@163.com

fi

done

47. MySQL database backup scripts

Per-database backup

mysqldump -uroot -pxxx -B A > A.sql

#!/bin/bash

DATE=$(date +%F_%H-%M-%S)

HOST=localhost

USER=backup

PASS=123.com

BACKUP_DIR=/data/db_backup

DB_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "show databases;" 2>/dev/null |egrep -v "Database|information_schema|mysql|performance_schema|sys")

for DB in $DB_LIST; do

BACKUP_NAME=$BACKUP_DIR/${DB}_${DATE}.sql

if ! mysqldump -h$HOST -u$USER -p$PASS -B $DB > $BACKUP_NAME 2>/dev/null;

then

echo "$BACKUP_NAME backup failed!"

fi

done

Per-table backup

mysqldump -uroot -pxxx A t > t.sql

#!/bin/bash

DATE=$(date +%F_%H-%M-%S)

HOST=localhost

USER=backup

PASS=123.com

BACKUP_DIR=/data/db_backup

DB_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "show databases;" 2>/dev/null |egrep -v "Database|information_schema|mysql|performance_schema|sys")

for DB in $DB_LIST; do

BACKUP_DB_DIR=$BACKUP_DIR/${DB}_${DATE}

[ ! -d $BACKUP_DB_DIR ] && mkdir -p $BACKUP_DB_DIR &>/dev/null

TABLE_LIST=$(mysql -h$HOST -u$USER -p$PASS -s -e "use $DB;show tables;" 2>/dev/null)

for TABLE in $TABLE_LIST; do

BACKUP_NAME=$BACKUP_DB_DIR/${TABLE}.sql

if ! mysqldump -h$HOST -u$USER -p$PASS $DB $TABLE > $BACKUP_NAME 2>/dev/null; then

echo "$BACKUP_NAME backup failed!"

fi

done

done

48. Nginx access log analysis

#!/bin/bash

# Log format: $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for"

LOG_FILE=$1

echo "Top 10 IPs by number of requests"

awk '{a[$1]++}END{print "UV:",length(a);for(v in a)print v,a[v]}' $LOG_FILE |sort -k2 -nr |head -10

echo "----------------------"

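echo "Top IPs within a time range"

# This is a plain string comparison, so it is only reliable for timestamps within the same day

awk '$4>="[01/Dec/2018:13:20:25" && $4<="[01/Dec/2018:16:20:49"{a[$1]++}END{for(v in a)print v,a[v]}' $LOG_FILE |sort -k2 -nr|head -10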

echo "----------------------"

echo "Most visited pages (more than 10 requests)"

awk '{a[$7]++}END{print "PV:",length(a);for(v in a){if(a[v]>10)print v,a[v]}}' $LOG_FILE |sort -k2 -nr

echo "----------------------"

echo "Number of requests per page and status code"

awk '{a[$7" "$9]++}END{for(v in a){if(a[v]>5)print v,a[v]}}' $LOG_FILE |sort -k3 -nr

49. Rotate Nginx access logs automatically by day (week, month)

#!/bin/bash

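# Nginx log directory

LOG_DIR=/www/server/nginx/logs

# Yesterday's date

YESTERDAY_TIME=$(date -d "yesterday" +%F)

# Archive directory, named after yesterday's month so that logs rotated on the 1st land in the right month

LOG_MONTH_DIR=$LOG_DIR/$(date -d "yesterday" +"%Y-%m")

# Names of the log files to archive

LOG_FILE_LIST="access.log"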

for LOG_FILE in $LOG_FILE_LIST; do

[ ! -d $LOG_MONTH_DIR ] && mkdir -p $LOG_MONTH_DIR

mv $LOG_DIR/$LOG_FILE $LOG_MONTH_DIR/${LOG_FILE}_${YESTERDAY_TIME}

done

kill -USR1 $(cat $LOG_DIR/nginx.pid)

50. Automatically deploy a Java project (Tomcat)

#!/bin/bash

DATE=$(date +%F_%T)

TOMCAT_NAME=$1

TOMCAT_DIR=/usr/local/$TOMCAT_NAME

ROOT=$TOMCAT_DIR/webapps/ROOT

BACKUP_DIR=/data/backup

WORK_DIR=/tmp

PROJECT_NAME=tomcat-java-demo

# Fetch the code

cd $WORK_DIR

if [ ! -d $PROJECT_NAME ]; then

git clone https://github.com/lizhenliang/tomcat-java-demo

cd $PROJECT_NAME

else

cd $PROJECT_NAME

git pull

fi

# Build

mvn clean package -Dmaven.test.skip=true

if [ $? -ne 0 ]; then

echo "maven build failure!"

exit 1

fi

# Deploy

TOMCAT_PID=$(ps -ef |grep "$TOMCAT_NAME" |egrep -v "grep|$$" |awk 'NR==1{print $2}')

[ -n "$TOMCAT_PID" ] && kill -9 $TOMCAT_PID

[ -d $ROOT ] && mv $ROOT $BACKUP_DIR/${TOMCAT_NAME}_ROOT$DATE

unzip $WORK_DIR/$PROJECT_NAME/target/*.war -d $ROOT

$TOMCAT_DIR/bin/startup.sh

51. Automatically deploy a PHP project

#!/bin/bash

DATE=$(date +%F_%T)

WWWROOT=/usr/local/nginx/html/$1

BACKUP_DIR=/data/backup

WORK_DIR=/tmp

PROJECT_NAME=php-demo

# Fetch the code

cd $WORK_DIR

if [ ! -d $PROJECT_NAME ]; then

git clone https://github.com/lizhenliang/php-demo

cd $PROJECT_NAME

else

cd $PROJECT_NAME

git pull

fi

# Deploy

if [ ! -d $WWWROOT ]; then

mkdir -p $WWWROOT

rsync -avz --exclude=.git $WORK_DIR/$PROJECT_NAME/* $WWWROOT

else

rsync -avz --exclude=.git $WORK_DIR/$PROJECT_NAME/* $WWWROOT

fi

52. DoS attack mitigation (automatically block attacking IPs)

#!/bin/bash

DATE=$(date +%d/%b/%Y:%H:%M)

# Nginx access log

LOG_FILE=/usr/local/nginx/logs/demo2.access.log

# Count requests per IP in the current minute and collect the IPs with more than 10 requests

ABNORMAL_IP=$(tail -n5000 $LOG_FILE |grep $DATE |awk '{a[$1]++}END{for(i in a)if(a[i]>10)print i}')

for IP in $ABNORMAL_IP; do

if [ $(iptables -vnL |grep -c "$IP") -eq 0 ]; then

iptables -I INPUT -s $IP -j DROP

echo "$(date +'%F_%T') $IP" >> /tmp/drop_ip.log

fi

done

53. Directory intrusion detection and alerting

#!/bin/bash

MON_DIR=/opt

inotifywait -mqr --format %f -e create $MON_DIR |\

while read files; do

# Sync the files

rsync -avz /opt /tmp/opt

# Check whether files have been modified

#echo "$(date +'%F %T') create $files" | mail -s "dir monitor" xxx@163.com

done

Scripts 37-53, author: 南宫乘风, link: http://blog.csdn.net/heian_99/article/details/104027379

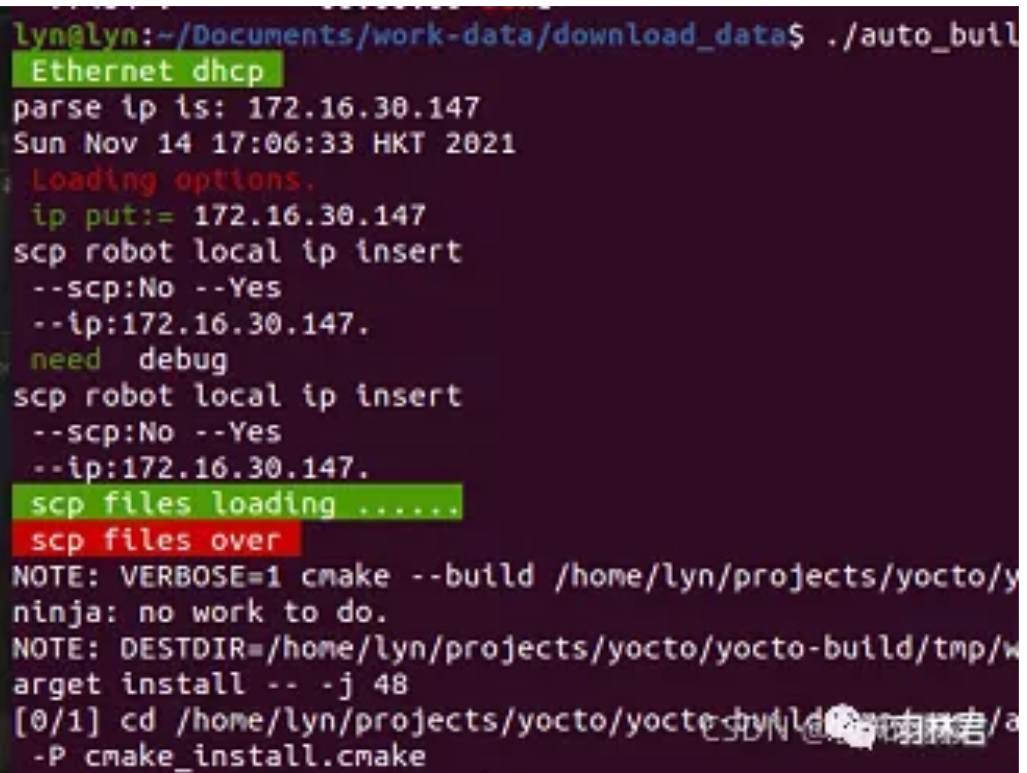

54. Local selection script auto_build.sh

The script is as follows:

#!/bin/bash

remote_ip=172.160.111.32

remote_hostname=lyn

case $1 in

1) echo -e '\033[0;42m Ethernet dhcp \033[0m'

VAR="eno1"

;;

2) echo -e '\033[0;46m wireless dhcp \033[0m'

VAR="wlo1"

;;

esac

HOST_IP=$(ifconfig $VAR | grep "inet" | grep -v inet6| awk '{ print $2}' | awk -F: '{print $1}')

echo "parse ip is:" $HOST_IP

if [[ ! -n "${HOST_IP}" ]] ;then

echo -e "\033[0;31m input local ip \033[0m"

read local_ip

else

if [[ ! $(echo "${HOST_IP}" | awk -F. '{printf $1}') == "192" ]] ;then

local_ip=$HOST_IP

else

echo -e "\033[0;31m error ip \033[0m"

exit 0

fi

fi

#exit 0

if [[ $2 == 1 ]];then

build_opt="all_build"

else

build_opt=

fi

ssh -t ${remote_hostname}@${remote_ip} "/home/lyn/build.sh ip=${local_ip} ${build_opt}"

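This part uses several techniques:

a case statement (switch-case), if/else, passwordless SSH login, and remote script invocation.

First, the case statement

Its job here is to select the IP address. During debugging my computer is sometimes connected by Ethernet cable, and sometimes I go to the test room and connect over Wi-Fi, so the two networks have to be told apart. When I run ./auto_build.sh 1, the script parses the IP address assigned to the wired interface eno1; when I run ./auto_build.sh 2, it parses the IP address assigned to the Wi-Fi interface wlo1.

Inside the case branches I also use colored output to highlight keywords.

Colored output is a separate topic. It is a practical use of escape sequences: the terminal recognizes specific escaped characters and prints text in different colors. This is very common, for example when printing logs by level, with errors in red, normal messages in green, and ordinary output in white.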

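A brief introduction to colored output:

An escape sequence starts with the control character 'ESC', whose ASCII code is 27 in decimal, 0x1B in hexadecimal, and 033 in octal. Most escape sequences are longer than two characters, so they usually start with 'ESC' followed by a left bracket '['. This opening sequence is called the Control Sequence Introducer (CSI) and is usually written as '\033[' or '\e['.

To set terminal display attributes with an escape sequence, use the following format:

\033[ Param {;Param;...}m

or

\e[ Param {;Param;...}m

Here '\033[' or '\e[' introduces the escape sequence, and 'm' sets the attributes and ends the sequence.

So the common form for setting display attributes is:

\033[<display mode>;<foreground color>;<background color>m<output string>\033[0m

or \e[<display mode>;<foreground color>;<background color>m<output string>\033[0m

Here '\033[0m' restores the terminal's default output attributes; without it, later output is affected as well.

Example: I use echo -e '\033[0;42m Ethernet dhcp \033[0m' to print the message for the wired interface. Once the escapes are interpreted, the text is printed on a green background. For the specific color codes, see the article 《printf打印还能这么玩》 by 小麦.

Note: when printing logs this way, remember to pass the -e option to echo.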
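The escape-sequence format described above can be wrapped in a small helper so the codes are written only once. A minimal sketch, assuming hypothetical function names color_echo, log_error, and log_ok; it uses printf, which interprets \033 in its format string without needing echo -e:

```shell
#!/bin/bash
# color_echo CODE TEXT...
# Prints TEXT wrapped in the SGR sequence \033[<CODE>m ... \033[0m.
# CODE is the "display mode;color" part, for example 0;31 for red text.
color_echo() {
    local code=$1
    shift
    printf '\033[%sm%s\033[0m\n' "$code" "$*"
}

log_error() { color_echo '0;31' "$*"; }   # red foreground
log_ok()    { color_echo '0;32' "$*"; }   # green foreground

log_ok "Ethernet dhcp"
log_error "error ip"
```

Because the reset sequence \033[0m is always appended, later output is not affected by the color change.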

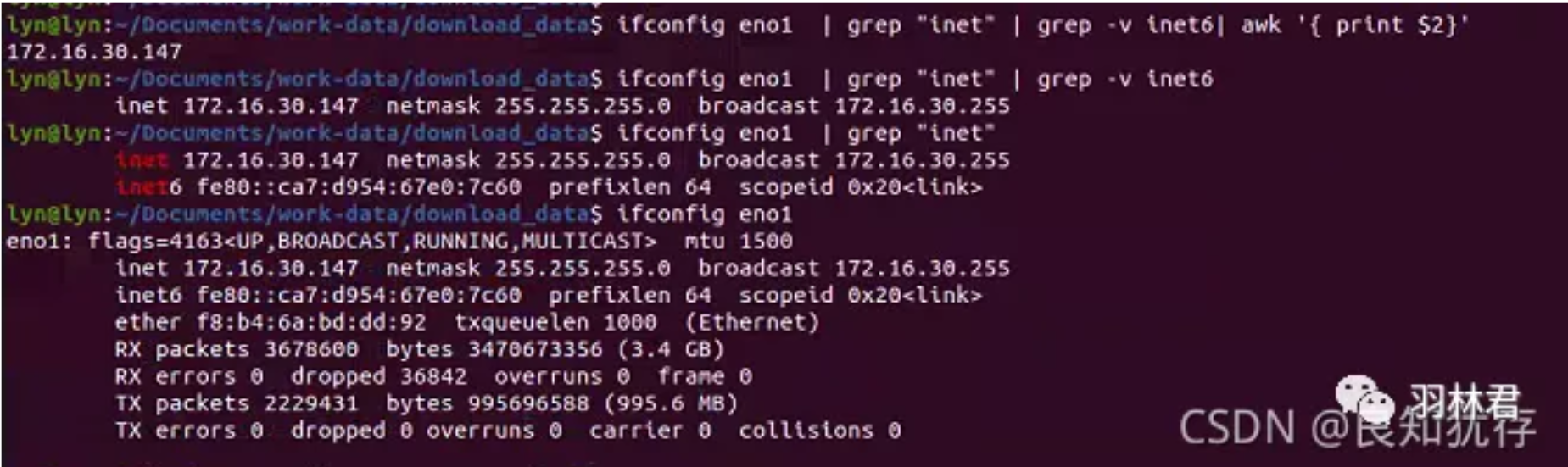

Next, a combination of commands is used to obtain the local IP address

HOST_IP=$(ifconfig $VAR | grep "inet" | grep -v inet6| awk '{ print $2}' | awk -F: '{print $1}')

我们一步步查看执行情况

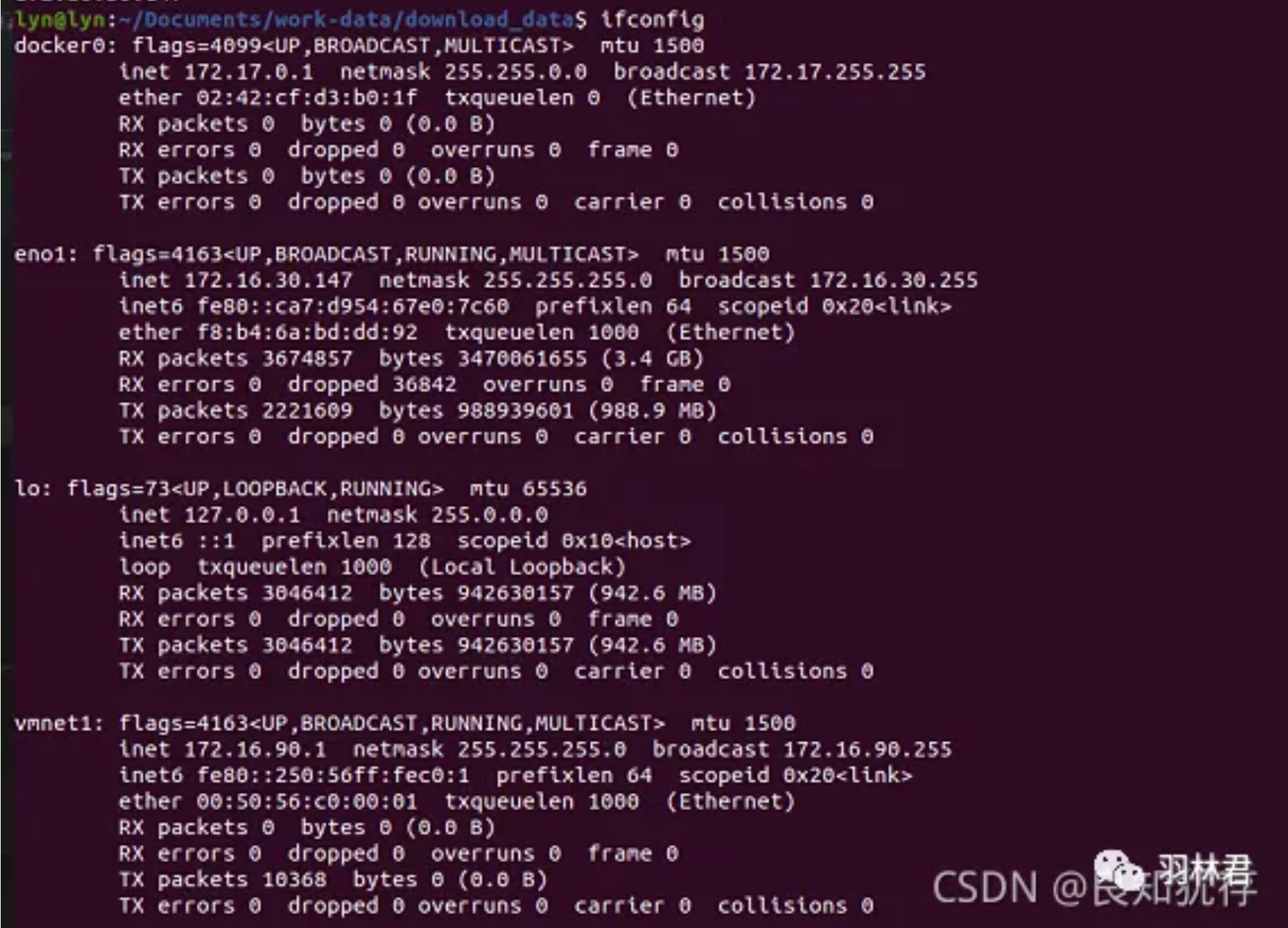

第一步:ifconfig eno1

lyn@lyn:~/Documents/work-data/download_data$ ifconfig eno1

eno1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.16.30.147 netmask 255.255.255.0 broadcast 172.16.30.255

inet6 fe80::ca7:d954:67e0:7c60 prefixlen 64 scopeid 0x20<link>

ether f8:b4:6a:bd:dd:92 txqueuelen 1000 (Ethernet)

RX packets 3678600 bytes 3470673356 (3.4 GB)

RX errors 0 dropped 36842 overruns 0 frame 0

TX packets 2229431 bytes 995696588 (995.6 MB)

TX errors 0

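We often use ifconfig to look up an IP address, but it returns far more data than we actually need.

So we pass ifconfig a specific device, which filters out the irrelevant information.

Step 2: ifconfig eno1 | grep "inet"

The output of step 1 is passed through a pipe (|) to the next command, and grep selects the lines that contain inet: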

lyn@lyn:~/Documents/work-data/download_data$ ifconfig eno1 | grep "inet"

inet 172.16.30.147 netmask 255.255.255.0 broadcast 172.16.30.255

inet6 fe80::ca7:d954:67e0:7c60 prefixlen 64 scopeid 0x20<link>

Because we only need the IPv4 address, the inet6 line has to be removed

Step 3: ifconfig eno1 | grep "inet" | grep -v inet6

grep -v drops the line that contains the keyword inet6

lyn@lyn:~/Documents/work-data/download_data$ ifconfig eno1 | grep "inet" | grep -v inet6

inet 172.16.30.147 netmask 255.255.255.0 broadcast 172.16.30.255

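Step 4: ifconfig eno1 | grep "inet" | grep -v inet6 | awk '{ print$2}'

awk is a language for processing text. $2 is the second field when the line is split on whitespace, which is the default; the -F option sets the field separator, so -F: splits on ':'.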

lyn@lyn:~/Documents/work-data/download_data$ ifconfig eno1 | grep "inet" | grep -v inet6| awk '{ print$2}'

172.16.30.147

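You can look up the details of grep, sed, and awk online. In everyday use, grep is best suited to plain searching and matching, sed to editing the matched text, and awk to formatting text and more complex processing.

At this point we have the local IP address. Next we need to invoke the script on the server remotely and pass the IP in as an argument.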
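The four-stage pipeline can also be collapsed into a single awk call, since awk can both select the line and print the field. A minimal sketch, assuming a hypothetical function name first_ipv4 and ifconfig-style input; it also strips the addr: prefix that older ifconfig versions print:

```shell
#!/bin/bash
# first_ipv4 < ifconfig-output
# Prints the address from the first line whose first field is exactly
# "inet", so inet6 lines are skipped without a separate grep -v.
first_ipv4() {
    awk '$1 == "inet" { sub(/^addr:/, "", $2); print $2; exit }'
}

# Usage with a real interface (eno1 is the interface name used above):
# HOST_IP=$(ifconfig eno1 | first_ipv4)
```

Matching on the whole first field rather than on a substring is what removes the need for the grep "inet" | grep -v inet6 pair.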

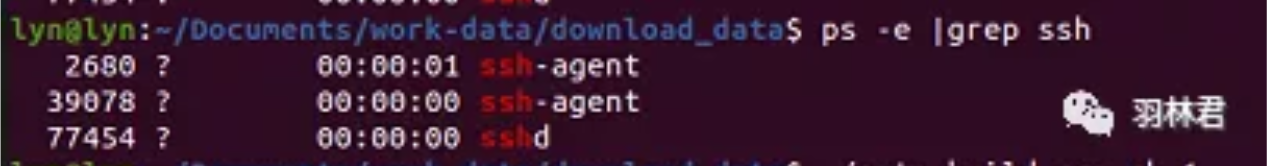

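Next, passwordless SSH login and remote execution over SSH

The first part is passwordless SSH login

Commands that the local machine runs on the server over ssh must not prompt for a password, and the same goes for scp between the server and the local machine. So how do we set up ssh so that no password is needed?

SSH consists of a client, openssh-client, and a server, openssh-server. If you only want to log in to other machines, installing openssh-client is enough (Ubuntu installs it by default; if it is missing, run sudo apt-get install openssh-client). If other machines need to log in to this one, install openssh-server on it (sudo apt-get install openssh-server).

You can run ps -e | grep ssh to check which of them is running: ssh is the client and sshd is the server. Install whichever is missing with apt-get install.

After installation, the server can be started with sudo service ssh start.

With the server and client in place, copy the generated RSA public key to the machine you want to log in to, and passwordless login works.

1. Generate a key pair on the client

Run ssh-keygen and press Enter at every prompt to accept the defaults

This creates the key pair in the .ssh directory under the user's home directory: id_rsa (private key) and id_rsa.pub (public key).

2. Upload the public key to the server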

ssh-copy-id -i ~/.ssh/id_rsa.pub lyn@172.160.111.32

The command above writes the key into the .ssh directory on the server

vi ~/.ssh/authorized_keys

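There you can see the contents of the client's id_rsa.pub (public key).

3. Test passwordless login. The client can now connect to the remote server over ssh without a password.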

ssh lyn@172.160.111.32

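The second part is running scripts on the server over ssh

Sometimes we need to run commands remotely, including interactive ones. We can do that by passing the command to ssh as an argument.

The forms are as follows:

Run a single command remotely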

ssh lyn@172.160.111.32 "ls -l"

Run several commands, separated by semicolons

ssh lyn@172.160.111.32 "ls;cat test.txt "

Run a local script remotely

ssh lyn@172.160.111.32 < test.sh

Run a local script remotely with arguments; bash needs the -s option:

ssh lyn@172.160.111.32 'bash -s' < test.sh helloworld

Run a script that lives on the remote machine

ssh lyn@172.160.111.32 "/home/lyn/test.sh"

Note: the script's absolute path must be given here!
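The bash -s form can be tried locally without a remote host, because the mechanism is the same: bash reads the script from standard input and takes the words after -s as positional parameters. A minimal sketch, in which the one-line script is a hypothetical stand-in for test.sh:

```shell
#!/bin/bash
# Hypothetical stand-in for test.sh: it echoes its first argument.
# Single quotes keep $1 from being expanded by the current shell.
script='echo "arg1=$1"'

# Same mechanism as: ssh user@host 'bash -s' < test.sh helloworld
# but run locally, so no remote host is needed to try it.
result=$(printf '%s\n' "$script" | bash -s helloworld)
echo "$result"   # prints: arg1=helloworld
```

Without -s, bash would treat helloworld as the name of a script file to run instead of as an argument.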

We use the last form, running a remote script. The final ssh invocation looks like this:

remote_ip=172.160.111.32

remote_hostname=lyn

local_ip=172.16.30.147

build_opt=

ssh -t ${remote_hostname}@${remote_ip} "/home/lyn/build.sh ip=${local_ip} ${build_opt}"

55. Server build script build.sh

The script is as follows:

#!/bin/bash -e

scp_dir=/media/lyn/win_data/lyn_workdata/working/robot-ctl

download_data=/home/lyn/Documents/work-data/download_data

build_dir=/home/lyn/projects/yocto/yocto-build/tmp/work/aarch64-poky-linux/robot-ctl/git-r0/git/

image_dir=/home/lyn/projects/yocto/yocto-build/tmp/work/aarch64-poky-linux/robot-ctl/git-r0/image/robot-ctl/

remote_exec_file_dir=/home/lyn/Documents/work-data/download_data/scp_exec.sh

all_build=No

wifi_src=No

only_scp_robot=No

strip_mode=No

ip_wireless_dhcp=170.160.111.45

ip_ethernet_dhcp=170.160.111.147

local_ip=${ip_wireless_dhcp}

host_name=lyn

date

echo -e "\033[0;31m Loading options.\033[0m"

# Load all the options.

if [ $# -eq 0 ];then

echo -e "\033[33;5m no argument \033[0m"

fi

for arg in "${@}"

do

if [[ -n "${arg}" ]] && [[ "${arg}" == "wifi" ]] ; then

wifi_src="Yes"

local_ip=${ip_ethernet_dhcp}

echo -e "local connect robot wifi \n --${wifi_src}\n --ip:${local_ip}."

fi

if [[ -n "${arg}" ]] && [[ "${arg}" == "scp" ]] ; then

only_scp_robot="Yes"

local_ip=${ip_ethernet_dhcp}

echo -e "scp robot local connect robot wifi \n --scp:${only_scp_robot} --${wifi_src}\n --ip:${local_ip}."

fi

if [[ -n "${arg}" ]] && [[ "${arg}" == "all_build" ]] ; then

all_build="Yes"

echo -e "all build"

fi

if [[ -n "${arg}" ]] && [[ "${arg}" == "ip" ]] ; then

echo -e "\033[32m ip:=\033[0m"

read ip_addr

local_ip=${ip_addr}

wifi_src="Yes"

echo -e "scp robot local ip insert \n --scp:${only_scp_robot} --${wifi_src}\n --ip:${local_ip}."

fi

if [[ -n "${arg}" ]] && [[ "${arg:0:3}" == "ip=" ]] ; then

echo -e "\033[32m ip put:=\033[0m" ${arg#*=}

local_ip=${arg#*=}

wifi_src="Yes"

echo -e "scp robot local ip insert \n --scp:${only_scp_robot} --${wifi_src}\n --ip:${local_ip}."

fi

if [[ -n "${arg}" ]] && [[ "${arg}" == "debug" ]] ; then

echo -e "\033[32m need \033[0m" ${arg}

strip_mode="Yes"

echo -e "scp robot local ip insert \n --scp:${only_scp_robot} --${wifi_src}\n --ip:${local_ip}."

fi

done

if [ "${only_scp_robot}" == "No" ];then

if [ ! "${wifi_src}" == "Yes" ] ; then

echo -e "local don't connect robot wifi \n --${wifi_src}\n --ip:${local_ip}."

fi

if [ -d "${build_dir}" ]; then

cd ${build_dir}

scp -rp lyn@${local_ip}:${scp_dir}/src \
lyn@${local_ip}:${scp_dir}/include \
lyn@${local_ip}:${scp_dir}/CMakeLists.txt .

#ssh ${host_name}@${local_ip} "${remote_scp_code_dir}"

#git pull

if [ "${all_build}" == "Yes" ] ; then

./../temp/run.do_generate_toolchain_file

./../temp/run.do_configure

fi

./../temp/run.do_compile

./../temp/run.do_install

#cd /home/lyn/

if [ "${strip_mode}" == "No" ]; then

#./strip_x1000.sh

cd ${image_dir}

aarch64-linux-gnu-strip pp

fi

else

echo -e "\033[0;31m dir is not exist.\033[0m"

fi

fi

scp ${image_dir}/exec ${host_name}@${local_ip}:${download_data}

if [[ "${wifi_src}" == "Yes" ]] || [[ "${only_scp_robot}" == "Yes" ]] ; then

ssh ${host_name}@${local_ip} "${remote_exec_file_dir}"

else

echo -e "no robot wifi\n"

fi

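The script that runs on the server is fairly long. In terms of flow, it first reads the arguments passed in and uses them to set variables that match the different device states. It then copies the local files to the server and compiles them there. When the build finishes, it copies the executable back to the local machine and calls a local script that copies the executable to the target directory on the device.

Some techniques in this script overlap with the first one, so only the parts not yet covered are discussed here.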

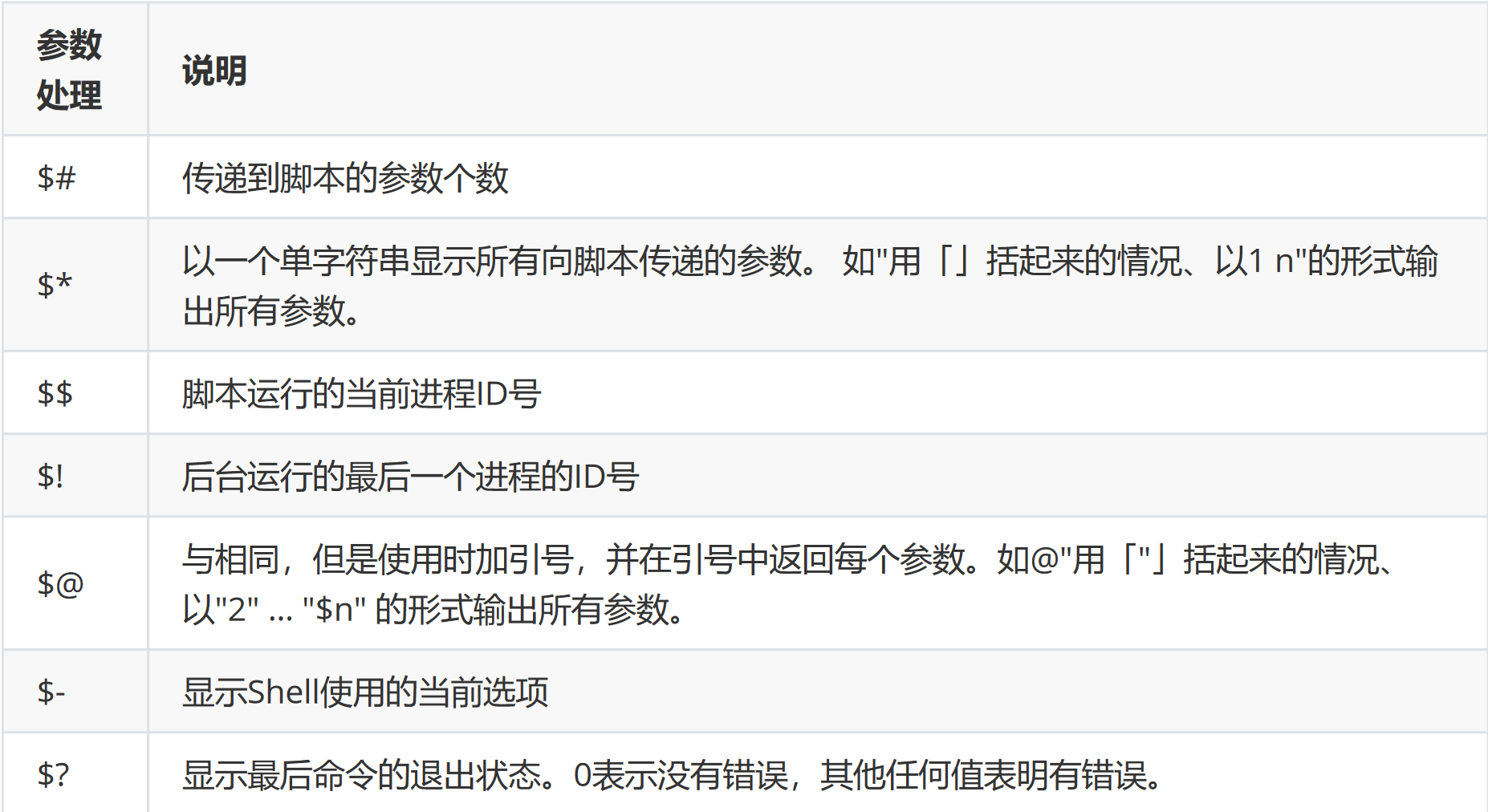

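The first things used are $# and $@

For an introduction to the special parameters used for passing arguments to a shell script, see 菜鸟教程.

I use $# to report when no arguments were given, to guard against mistakes: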

if [ $# -eq 0 ];then

echo -e "\033[33;5m no argument \033[0m"

fi

Then $@ is used to go through the arguments one by one and set the variables.

for arg in "${@}"

do

if [[ -n "${arg}" ]] && [[ "${arg}" == "wifi" ]] ; then

wifi_src="Yes"

local_ip=${ip_ethernet_dhcp}

echo -e "local connect robot wifi \n --${wifi_src}\n --ip:${local_ip}."

fi

...

done
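The difference between $#, "$@", and an unquoted $* is easiest to see with an argument that contains a space. A minimal sketch with hypothetical helper functions (none of them are in build.sh):

```shell
#!/bin/bash
# count_args prints $#, the number of arguments it received.
count_args() { echo "$#"; }

# forward_quoted passes its arguments on with "$@", which keeps each
# original argument intact, including embedded spaces.
forward_quoted() { count_args "$@"; }

# forward_unquoted passes them on with an unquoted $*, which is split
# again on whitespace, so "a b" becomes two arguments.
forward_unquoted() { count_args $*; }

forward_quoted "a b" c     # prints 2
forward_unquoted "a b" c   # prints 3
```

This is why the loop in build.sh iterates over "${@}" in double quotes: each argument, such as ip=172.16.30.147, arrives as one unit.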

Next, string slicing is used

if [[ -n "${arg}" ]] && [[ "${arg:0:3}" == "ip=" ]] ; then

echo -e "\033[32m ip put:=\033[0m" ${arg#*=}

local_ip=${arg#*=}

wifi_src="Yes"

echo -e "scp robot local ip insert \n --scp:${only_scp_robot} --${wifi_src}\n --ip:${local_ip}."

fi

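${arg:0:3} takes 3 characters starting at offset 0 from the left.

${arg#*=} uses the # operator: it deletes the '=' and everything to its left, keeping what is to the right.

As for why it works this way, and the other forms available, here is a short excerpt from another blogger's article.

Excerpted from: 《shell脚本字符串截取的8种方法》

Suppose we have the variable var=http://www.aaa.com/123.htm

1. # operator: delete characters on the left, keep the right.

echo ${var#*//}

Here var is the variable name, # is the operator, and *// means: starting from the left, delete the first // and everything to its left, that is, delete http://. Result: www.aaa.com/123.htm

2. ## operator: delete characters on the left, keep the right.

echo ${var##*/}

##*/ means: starting from the left, delete the last (rightmost) / and everything to its left, that is, delete http://www.aaa.com/

Result: 123.htm

3. % operator: delete characters on the right, keep the left

echo ${var%/*}

%/* means: starting from the right, delete the first / and everything to its right

Result: http://www.aaa.com

4. %% operator: delete characters on the right, keep the left

echo ${var%%/*}

%%/* means: starting from the right, delete the last (leftmost) / and everything to its right. Result: http:

5. Start at a given character from the left and take a given number of characters

echo ${var:0:5}

Here 0 means start at the first character from the left, and 5 is the number of characters. Result: http:

6. Start at a given character from the left and continue to the end.

echo ${var:7}

Here 7 means start at the 8th character from the left and continue to the end. Result: www.aaa.com/123.htm

7. Start at a given character from the right and take a given number of characters

echo ${var:0-7:3}

Here 0-7 means start at the 7th character counting from the right, and 3 is the number of characters. Result: 123

8. Start at a given character from the right and continue to the end.

echo ${var:0-7}

This starts at the 7th character from the right and continues to the end. Result: 123.htm

Note: the first character from the left is written as 0, and the first character from the right as 0-1.

We can also use awk or cut for slicing; they are not covered one by one here.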
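The eight forms can be checked side by side in one short script. This is a minimal sketch for bash (the substring forms with a colon are not available in plain POSIX sh); the variable names on the left are only illustrative:

```shell
#!/bin/bash
var=http://www.aaa.com/123.htm

no_scheme=${var#*//}   # www.aaa.com/123.htm  (shortest match removed from the left)
file_name=${var##*/}   # 123.htm              (longest match removed from the left)
base_url=${var%/*}     # http://www.aaa.com   (shortest match removed from the right)
scheme=${var%%/*}      # http:                (longest match removed from the right)
first5=${var:0:5}      # http:                (5 characters from offset 0)
from8=${var:7}         # www.aaa.com/123.htm  (offset 7 to the end)
tail3=${var:0-7:3}     # 123                  (3 characters, starting 7 from the end)
last7=${var:0-7}       # 123.htm              (7 from the end, to the end)

printf '%s\n' "$no_scheme" "$file_name" "$base_url" "$scheme" \
    "$first5" "$from8" "$tail3" "$last7"
```

The single and double operators differ only in greediness: # and % remove the shortest match of the pattern, while ## and %% remove the longest.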

56. Local expect script scp_exec.sh for login and copy

The script is as follows:

#!/bin/expect

set timeout 30

set host 192.168.1.1

set user root

spawn scp /home/lyn/Documents/work-data/download_data/ $user@$host:/opt/lib/exec

#spawn ssh $user@$host

expect {

"*yes/no*"

{

send "yes\r"

expect "*password:*" { send "123456\r" }

}

"*password:*"

{

send "123456\r"

}

}

expect eof

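The server's address is relatively fixed, so it is easy to set up passwordless login with an SSH public key. The devices are a different matter: there are many of them to debug, so I did not set up key-based login for them and used the expect command instead. First, a brief introduction to expect:

Expect waits for specific text in a program's output, and send then sends a specific response. The interaction flow is:

spawn starts the given process -> expect waits for the given keyword -> send sends the given input to the process -> execution completes and expect exits.

To use expect, we first need to install it

sudo apt-get install tcl tk expect

My script is fairly simple, so I will walk through its details piece by piece: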

#!/bin/expect

The path to the expect interpreter, analogous to the interpreter line of a shell script

set timeout 30

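set assigns a value to a variable; here it sets the timeout, in seconds. When copying large files you may run into the error "expect: spawn id exp4 not open": the ssh connection was already closed before expect eof was reached, usually because the timeout is too short. You can set timeout to -1, which means never time out, so the session ends only after the copy finishes.

**set host 192.168.1.1 set user root spawn scp /home/lyn/Documents/work-data/download_data/ $user@$host:/opt/lib/exec**

spawn is an expect built-in command that is only available inside the expect environment (after expect is installed). Its main job is to wrap the ssh or scp process so that interactive input can be passed to it. You can think of it as starting a new process.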

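expect {

"*yes/no*" { send "yes\r"; expect "*password:*" { send "123456\r" } }

"*password:*" { send "123456\r" }

}

expect {}: a multi-branch expect. The patterns are tried from top to bottom, and the send command paired with the pattern that matches is executed. Note that only one branch runs, the one that matched; the others are skipped. To match further output after this command succeeds (for example the root@ubuntu:~# prompt shown after a successful login), start a separate expect command and make sure it is outside the expect {} block.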

send "yes\r"

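send takes a string argument and sends it to the process. This is the interactive step, equivalent to typing the password by hand. Do not forget to end the string with "\r", which stands for the Enter key.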

expect eof

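expect eof waits for the spawned process to end; the interaction is then over and the script exits.

This is only a brief description of how I use this expect file. Readers who want to learn more expect commands can search for further material.

Author of scripts 45-55: 良知犹存

WeChat official account: 羽林君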

57、Check file consistency of a given directory on two servers

#!/bin/bash

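######################################

# Check file consistency of a given directory on two servers

######################################

# Compare the md5 checksums of the files on both servers to detect differences

dir=/data/web

b_ip=192.168.88.10

# Walk every file under the directory, pass them to md5sum, and write the checksums to a file
# (this assumes file names contain no whitespace)

find $dir -type f|xargs md5sum > /tmp/md5_a.txt

ssh $b_ip "find $dir -type f|xargs md5sum > /tmp/md5_b.txt"

scp $b_ip:/tmp/md5_b.txt /tmp

# Iterate over the file names and compare them one by one

for f in $(awk '{print $2}' /tmp/md5_a.txt); do

# Look up the checksum by exact file name, so that "a" does not also match "a.bak"

md5_a=$(awk -v f="$f" '$2 == f {print $1}' /tmp/md5_a.txt)

md5_b=$(awk -v f="$f" '$2 == f {print $1}' /tmp/md5_b.txt)

# Machine A is the reference: an empty md5_b means the file does not exist on machine B

if [ -n "$md5_b" ]; then

# The file exists on both machines; report it as changed if the checksums differ

if [ "$md5_a" != "$md5_b" ]; then

echo "$f changed."

fi

else

echo "$f deleted."

fi

done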
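The comparison step can also be done in a single awk pass over the two checksum files, which avoids re-scanning them for every file name. This is a minimal sketch rather than the author's method; compare_manifests is a hypothetical helper, and it assumes both files are in md5sum output format (checksum, separator, file name).

```shell
#!/bin/bash
# compare_manifests A B: using A as the reference, report files that are
# missing from B ("deleted.") or whose checksum differs ("changed.").
compare_manifests() {
    awk '
        # Split each line into checksum and file name (names may contain spaces)
        { sum = $1; name = $0; sub(/^[^ ]+ [ *]/, "", name) }
        # First file on the command line is manifest B: remember its checksums
        FILENAME == ARGV[1] { b[name] = sum; next }
        # Remaining lines come from manifest A
        !(name in b)        { print name " deleted."; next }
        b[name] != sum      { print name " changed." }
    ' "$2" "$1"
}
```

With the file names used in the script, the call would be compare_manifests /tmp/md5_a.txt /tmp/md5_b.txt.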

58、Periodically empty files and periodically record file sizes

#!/bin/bash

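#################################################################

# Run this script once an hour (as a cron job). At 00:00 or 12:00, empty every file under the
# target directory without deleting it. At any other hour, only record the size of each file,
# one file per line, in a file named after the hour and date. Files in second-level,
# third-level, and deeper subdirectories must be included.

#################################################################

logfile=/tmp/$(date +%H-%F).log

n=$(date +%H)

if [ $n -eq 00 ] || [ $n -eq 12 ]; then

# Walk every file that find reports under the target directory and empty it
# (reading line by line keeps file names with spaces intact)

find /data/log/ -type f | while IFS= read -r i

do

true > "$i"

done

else

find /data/log/ -type f | while IFS= read -r i

do

du -sh "$i" >> $logfile

done

fi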
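The two branches can also be written without an explicit loop by letting find run the command itself. This is a minimal sketch; empty_files and record_sizes are hypothetical helper names, and the directory and log file are passed as arguments instead of being hard-coded.

```shell
#!/bin/bash
# empty_files DIR: truncate every regular file under DIR to zero bytes,
# keeping the files themselves (handles names containing spaces).
empty_files() {
    find "$1" -type f -exec sh -c 'for f in "$@"; do : > "$f"; done' sh {} +
}

# record_sizes DIR LOGFILE: append one "size path" line per regular file under DIR.
record_sizes() {
    find "$1" -type f -exec du -sh {} + >> "$2"
}
```

In the script above these would be called as empty_files /data/log/ and record_sizes /data/log/ "$logfile".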

59、Monitor NIC traffic and record it in the log in a fixed format

#!/bin/bash

#######################################################

# Monitor NIC traffic and record it in the log in a fixed format

# One record per minute

# The log format is as follows:

#2019-08-12 20:40

#ens33 input: 1234bps

#ens33 output: 1235bps

#######################################################

while :

do

# Set the language to English so that sar prints English output; the grep for "Average" below depends on it

LANG=en

logfile=/tmp/`date +%d`.log

# Redirect the output of the commands below to the logfile

exec >> $logfile

date +"%F %H:%M"

# sar reports traffic in kB/s while the log format uses bps, hence *1000*8

sar -n DEV 1 59|grep Average|grep ens33|awk '{print $2,"\t","input:","\t",$5*1000*8,"bps","\n",$2,"\t","output:","\t",$6*1000*8,"bps"}'

echo "####################"

# The sar command itself takes 59 seconds to run, so no sleep is needed

done
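The formatting step can be tried without waiting for sar by feeding a sample Average line to the awk part on its own. This is a minimal sketch; format_sar_average is a hypothetical helper, and it assumes the same column layout the script relies on (interface name in field 2, rxkB/s in field 5, txkB/s in field 6).

```shell
#!/bin/bash
# format_sar_average IFACE: read "sar -n DEV" output on stdin and print the
# Average line for IFACE in the log format described in the header comment.
format_sar_average() {
    awk -v ifc="$1" '$1 == "Average:" && $2 == ifc {
        # Convert kB/s to bps with the same *1000*8 factor as the script
        printf "%s input: %dbps\n%s output: %dbps\n", ifc, $5*1000*8, ifc, $6*1000*8
    }'
}
```

A possible call is LANG=en sar -n DEV 1 59 | format_sar_average ens33. Matching field 2 exactly, rather than with grep, avoids also picking up an interface whose name merely contains ens33.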

60、Count the digits on each line of a file and the total number of digits in the file

#!/bin/bash

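#########################################################

# Count the digits on each line of a file and the total number of digits in the file

#########################################################

# Use awk to print only the line count (the first field)

n=$(wc -l a.txt|awk '{print $1}')

sum=0

# Lines may contain spaces, so the file contents cannot be iterated over directly

for i in $(seq 1 $n); do

# The line number is held in a variable, so it must be in double quotes

line=$(sed -n "$i"p a.txt)

# Strip every non-digit; wc -L (GNU) reports the length of the longest line, here the digit count

n_n=$(echo "$line"|sed 's/[^0-9]//g'|wc -L)

echo $n_n

sum=$(($sum+$n_n))

done

echo "sum:$sum"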
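The same result can be produced in one pass with awk, whose gsub function returns the number of substitutions it made. This is a minimal sketch; count_digits is a hypothetical helper that takes the file name as its argument.

```shell
#!/bin/bash
# count_digits FILE: print the number of digits on each line, then the total.
count_digits() {
    awk '{
        n = gsub(/[0-9]/, "")   # gsub returns how many digits were replaced
        print n
        sum += n
    }
    END { print "sum:" (sum + 0) }' "$1"
}
```

For the file used in the script, the call would be count_digits a.txt. This version reads the file once instead of running sed for every line, and it does not depend on the GNU-only wc -L option.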

Kill all scripts

#!/bin/bash

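################################################################

# Some scripts are run from cron, and a new run may start before the previous one has finished,

# which drives up the system load. This script picks out the processes responsible and kills them all at once.

################################################################

# Replace process_name with the name of the process to kill

ps aux|grep process_name|grep -v grep|awk '{print $2}'|xargs kill -9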

61、Download a file from an FTP server

#!/bin/bash

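if [ $# -ne 1 ]; then

echo "Usage: $0 filename"

exit 1

fi

dir=$(dirname $1)

file=$(basename $1)

# -n disables auto-login, so the user command below supplies the credentials
# 192.168.1.10 is the FTP server
# binary sets the transfer mode to binary, which avoids MD5 mismatches and corrupted .tar.gz archives
# The comments stay outside the here-document because everything inside it is sent to ftp

ftp -n -v << EOF

open 192.168.1.10

user admin password

binary

cd $dir

get "$file"

EOF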
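Everything inside the here-document is passed to ftp verbatim, so it can help to generate the command list separately and inspect it before piping it to ftp. This is a minimal sketch; build_ftp_commands is a hypothetical helper, and the server address and credentials are the placeholder values from the script.

```shell
#!/bin/bash
# build_ftp_commands PATH: print the ftp commands that fetch PATH from the server.
build_ftp_commands() {
    local dir file
    dir=$(dirname "$1")     # remote directory part of the path
    file=$(basename "$1")   # file name part of the path
    cat << EOF
open 192.168.1.10
user admin password
binary
cd $dir
get "$file"
EOF
}
```

Running build_ftp_commands /pub/data/a.tar.gz prints the five commands for review, and build_ftp_commands /pub/data/a.tar.gz | ftp -n -v would execute them.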

62、Read five numbers up to 100 and report their sum, minimum, and maximum

#!/bin/bash

COUNT=1

SUM=0

# Start MIN at the upper bound and MAX at the lower bound so the first input replaces both

MIN=100

MAX=0

while [ $COUNT -le 5 ]; do

read -p "Enter an integer from 0 to 100: " INT

if [[ ! $INT =~ ^[0-9]+$ ]]; then

echo "Input must be an integer!"

exit 1

elif [[ $INT -gt 100 ]]; then

echo "Input must not exceed 100!"

exit 1

fi

SUM=$(($SUM+$INT))

[ $INT -lt $MIN ] && MIN=$INT

[ $INT -gt $MAX ] && MAX=$INT

let COUNT++

done

echo "SUM: $SUM"

echo "MIN: $MIN"

echo "MAX: $MAX"
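The running sum, minimum, and maximum logic can be checked separately from the interactive prompt. This is a minimal sketch; sum_min_max is a hypothetical helper that takes the numbers as arguments and seeds the minimum and maximum from the first one, so it does not depend on the 0 to 100 range.

```shell
#!/bin/bash
# sum_min_max N...: print the sum, minimum, and maximum of the integer arguments.
sum_min_max() {
    local sum=0 min=$1 max=$1 n
    for n in "$@"; do
        sum=$((sum + n))
        if [ "$n" -lt "$min" ]; then min=$n; fi   # new smallest value
        if [ "$n" -gt "$max" ]; then max=$n; fi   # new largest value
    done
    echo "SUM: $sum"
    echo "MIN: $min"
    echo "MAX: $max"
}
```

For example, sum_min_max 5 3 9 1 7 reports a sum of 25, a minimum of 1, and a maximum of 9.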

Number-guessing game

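#!/bin/bash

# The script generates a random number from 1 to 100 and prompts the user to guess it. For each guess it reports whether the guess is correct,

# too small, or too large, and it ends once the user guesses correctly.

# RANDOM is a built-in shell variable whose value is a random integer from 0 to 32767

# Use the modulo operation to turn it into a random number from 1 to 100

num=$((RANDOM%100+1))

echo "$num"

# Use read to prompt the user for a guess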