scrapy框架爬取国际庄2011-2022的天气情况

目标网站:http://www.tianqihoubao.com/lishi/

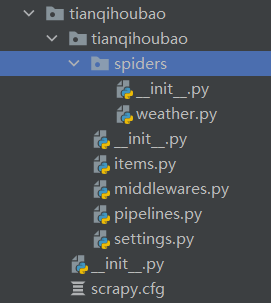

一.创建项目+初始化爬虫文件:

scrapy startpoject tianqihoubao

cd tianqihoubao

scrapy genspider weather www.tianqihoubao.com

二.配置settings.py

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.51 Safari/537.36 Edg/99.0.1150.30' ROBOTSTXT_OBEY = False #君子协议 注释掉或者改为false ITEM_PIPELINES = { 'tianqihoubao.pipelines.TianqihoubaoPipeline': 300, 'tianqihoubao.pipelines.MySQLStoreCnblogsPipeline': 300 } # 连接数据MySQL # 数据库地址 MYSQL_HOST = 'localhost' # 数据库用户名: MYSQL_USER = 'root' # 数据库密码 MYSQL_PASSWORD = '123456' # 数据库端口 MYSQL_PORT = 3306 # 数据库名称 MYSQL_DBNAME = 'data' # 数据库编码 MYSQL_CHARSET = 'utf8'

三.修改items.py

import scrapy class TianqihoubaoItem(scrapy.Item): # define the fields for your item here like: # name = scrapy.Field() riqi = scrapy.Field() tianqi = scrapy.Field() qiwen = scrapy.Field() wind = scrapy.Field()

四.编写weather.py

scrapy.Request()教程:Scrapy爬虫入门教程十一 Request和Response(请求和响应)

import scrapy from tianqihoubao.items import TianqihoubaoItem class WeatherSpider(scrapy.Spider): name = 'weather' allowed_domains = ['www.tianqihoubao.com'] start_urls = ['http://www.tianqihoubao.com/lishi/shijiazhuang.html'] def parse(self, response): #for path in self.start_urls: data = response.xpath('//div[@id="content"]/table[@class="b"]/tr') next_page = response.xpath('//div[@id="content"]/div[@class="box pcity"]/ul/li/a/@href').extract() for i in data[1:]: item = TianqihoubaoItem() item['riqi'] = i.xpath('./td/a/text()').extract()[0].replace('\r\n', '').replace('\n', '').replace(' ', '').strip() item['tianqi'] = i.xpath('./td/text()').extract()[2].replace('\r\n', '').replace('\n', '').replace(' ', '').strip() item['qiwen'] = i.xpath('./td/text()').extract()[3].replace('\r\n', '').replace('\n', '').replace(' ', '').strip() item['wind'] = i.xpath('./td/text()').extract()[4].replace('\r\n', '').replace('\n', '').replace(' ', '').strip() #print(item['tianqi']) yield item print("下一页", next_page) if next_page and len(next_page) > 5: # 翻页 for i in next_page: yield scrapy.Request(url='http://www.tianqihoubao.com'+i, callback=self.parse) else: print("没有下一页了" * 10) print(data)

五.配置pipelines.py(一个保存为csv,一个保存到mysql上)

import copy import csv import time from pymysql import cursors from twisted.enterprise import adbapi class TianqihoubaoPipeline(object): # 保存为csv格式 def __init__(self): # 打开文件,指定方式为写,利用第3个参数把csv写数据时产生的空行消除 self.f = open("weather.csv", "a", newline="", encoding="utf-8") # 设置文件第一行的字段名,注意要跟spider传过来的字典key名称相同 self.fieldnames = ["riqi", "tianqi", "qiwen", "wind"] # 指定文件的写入方式为csv字典写入,参数1为指定具体文件,参数2为指定字段名 self.writer = csv.DictWriter(self.f, fieldnames=self.fieldnames) # 写入第一行字段名,因为只要写入一次,所以文件放在__init__里面 self.writer.writeheader() def process_item(self, item, spider): # 写入spider传过来的具体数值 self.writer.writerow(item) # 写入完返回 return item def close(self, spider): self.f.close() class MySQLStoreCnblogsPipeline(object): # 初始化函数 def __init__(self, db_pool): self.db_pool = db_pool # 从settings配置文件中读取参数 @classmethod def from_settings(cls, settings): # 用一个db_params接收连接数据库的参数 db_params = dict( host=settings['MYSQL_HOST'], user=settings['MYSQL_USER'], password=settings['MYSQL_PASSWORD'], port=settings['MYSQL_PORT'], database=settings['MYSQL_DBNAME'], charset=settings['MYSQL_CHARSET'], use_unicode=True, # 设置游标类型 cursorclass=cursors.DictCursor ) # 创建连接池 db_pool = adbapi.ConnectionPool('pymysql', **db_params) # 返回一个pipeline对象 return cls(db_pool) # 处理item函数 def process_item(self, item, spider): # 对象拷贝,深拷贝 --- 这里是解决数据重复问题!!! asynItem = copy.deepcopy(item) # 把要执行的sql放入连接池 query = self.db_pool.runInteraction(self.insert_into, asynItem) # 如果sql执行发送错误,自动回调addErrBack()函数 query.addErrback(self.handle_error, item, spider) # 返回Item return item # 处理sql函数 def insert_into(self, cursor, item): # 创建sql语句 sql = "INSERT INTO weather (riqi,tianqi,qiwen,wind) VALUES ('{}','{}','{}','{}')".format( item['riqi'], item['tianqi'], item['qiwen'], item['wind']) # 执行sql语句 cursor.execute(sql) # 错误函数 def handle_error(self, failure, item, spider): # #输出错误信息 print("failure", failure)

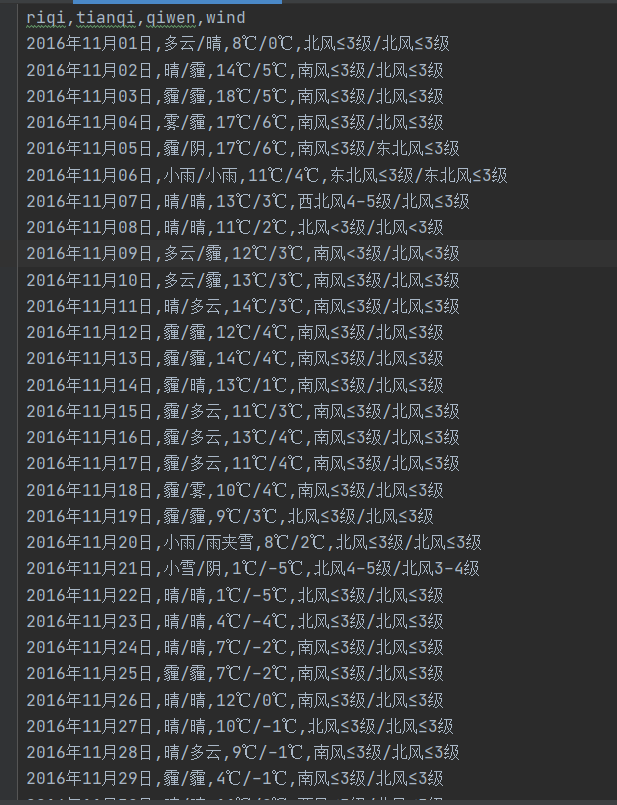

六.结果

这个在爬取的时候没有按时间顺序来,后续在搞一搞

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 10年+ .NET Coder 心语 ── 封装的思维:从隐藏、稳定开始理解其本质意义

· 地球OL攻略 —— 某应届生求职总结

· 周边上新:园子的第一款马克杯温暖上架

· 提示词工程——AI应用必不可少的技术

· Open-Sora 2.0 重磅开源!

2021-03-06 每日学习