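MapReduce Partitioning

This example partitions the CSV file from the garbage-classification project: records whose category value is less than 4 go to one file, and records whose value is 4 or greater go to another.

Source code: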

PartitionMapper.java:

    package cn.idcast.partition;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /*
      K1: line offset   LongWritable
      V1: line text     Text
      K2: line text     Text
      V2: NullWritable
     */
    public class PartitionMapper extends Mapper<LongWritable, Text, Text, NullWritable> {
        // map converts (K1, V1) into (K2, V2): the whole line becomes the key
        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            context.write(value, NullWritable.get());
        }
    }

PartitionerReducer.java:

    package cn.idcast.partition;

    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    /*
      K2: Text
      V2: NullWritable
      K3: Text
      V3: NullWritable
     */
    public class PartitionerReducer extends Reducer<Text, NullWritable, Text, NullWritable> {
        // Writes each key (a full CSV line) once; the values carry no data
        @Override
        protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
            context.write(key, NullWritable.get());
        }
    }
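One consequence of using the whole line as K2 is worth spelling out: identical lines are grouped under a single key during the shuffle, and the reducer writes that key once, so duplicate rows in the CSV appear only once in the output. The following is a minimal plain-Java sketch of that behavior, without any Hadoop classes. The class name `ReduceDedupSketch`, its methods, and the sample rows are all hypothetical, and a `TreeMap` only approximates Hadoop's key sorting.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (no Hadoop) of what the mapper/reducer pair does to duplicate lines.
class ReduceDedupSketch {

    // Mimics the shuffle: identical keys are grouped together and keys are sorted.
    // The Integer is the number of NullWritable values that would arrive for that key.
    static Map<String, Integer> shuffle(List<String> mapOutputKeys) {
        Map<String, Integer> grouped = new TreeMap<>();
        for (String key : mapOutputKeys) {
            grouped.merge(key, 1, Integer::sum);
        }
        return grouped;
    }

    // Mimics PartitionerReducer: one output line per distinct key, values ignored.
    static List<String> reduce(Map<String, Integer> grouped) {
        return new ArrayList<>(grouped.keySet());
    }

    public static void main(String[] args) {
        // Hypothetical sample rows; the first and third are identical.
        List<String> lines = Arrays.asList("banana peel,3", "battery,1", "banana peel,3");
        System.out.println(reduce(shuffle(lines)));
    }
}
```

If duplicate rows need to be preserved, one option would be to loop over `values` in the reducer and write the key once per value.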

MyPartitioner.java:

    package cn.idcast.partition;

    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Partitioner;

    public class MyPartitioner extends Partitioner<Text, NullWritable> {
        /*
          1: define the partitioning rule
          2: return the corresponding partition number
         */
        @Override
        public int getPartition(Text text, NullWritable nullWritable, int numPartitions) {
            // 1: split the line text (K2) and take the garbage-category value (second field)
            String[] split = text.toString().split(",");
            String numStr = split[1];

            // 2: compare the field with 4 and return the corresponding partition number
            if (Integer.parseInt(numStr) >= 4) {
                return 1;
            } else {
                return 0;
            }
        }
    }
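`MyPartitioner` assumes every line has a numeric second field. A header row, a short line, or a value with surrounding spaces would make `split[1]` or `Integer.parseInt` throw and fail the map task. Below is a hedged sketch of a more defensive version of the same rule, written as plain Java so it can be tried without Hadoop. The class `SafePartitionRule`, the method `partitionFor`, and the choice to send unparsable lines to partition 0 are assumptions of this sketch, not part of the original code.

```java
// Plain-Java sketch (no Hadoop types) of a more defensive partition rule.
class SafePartitionRule {

    static final int THRESHOLD = 4; // same threshold as MyPartitioner

    // Returns 0 for category values below THRESHOLD and 1 otherwise.
    // Assumption of this sketch: lines that cannot be parsed go to partition 0.
    static int partitionFor(String line, int numPartitions) {
        if (numPartitions < 2) {
            return 0; // a single partition: everything ends up in the same file
        }
        String[] fields = line.split(",");
        if (fields.length < 2) {
            return 0; // no second field to inspect
        }
        try {
            int category = Integer.parseInt(fields[1].trim());
            return category >= THRESHOLD ? 1 : 0;
        } catch (NumberFormatException e) {
            return 0; // e.g. a header row such as "name,category"
        }
    }

    public static void main(String[] args) {
        // Hypothetical rows, including a header and a malformed line.
        String[] samples = {"banana peel,3", "battery, 4", "name,category", "broken line"};
        for (String line : samples) {
            System.out.println(line + " -> partition " + partitionFor(line, 2));
        }
    }
}
```

The same body could be moved into `getPartition(Text, NullWritable, int)` by calling it with `text.toString()` and `numPartitions`.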

JobMain.java:

    package cn.idcast.partition;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    import java.net.URI;

    public class JobMain extends Configured implements Tool {
        @Override
        public int run(String[] args) throws Exception {
            // 1: create the Job object
            Job job = Job.getInstance(super.getConf(), "partition_mapreduce");
            // Needed if the job fails when run from a packaged jar;
            // it must point at this package's JobMain
            job.setJarByClass(JobMain.class);

            // 2: configure the Job (eight steps)
            // Step 1: set the input format class and the input path
            job.setInputFormatClass(TextInputFormat.class);
            TextInputFormat.addInputPath(job, new Path("hdfs://node1:8020/input"));

            // Step 2: set the Mapper class and its output types (K2 and V2)
            job.setMapperClass(PartitionMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(NullWritable.class);

            // Step 3: set the partitioner class
            job.setPartitionerClass(MyPartitioner.class);

            // Steps 4, 5, 6: left at their defaults

            // Step 7: set the Reducer class and its output types (K3 and V3)
            job.setReducerClass(PartitionerReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);
            // Set the number of ReduceTasks (one per partition)
            job.setNumReduceTasks(2);

            // Step 8: set the output format class and the output path
            job.setOutputFormatClass(TextOutputFormat.class);
            Path path = new Path("hdfs://node1:8020/out/partition_out");
            TextOutputFormat.setOutputPath(job, path);

            // Get the FileSystem; only the scheme and authority of the URI are used
            FileSystem fs = FileSystem.get(new URI("hdfs://node1:8020"), new Configuration());
            // Delete the output directory if it already exists
            if (fs.exists(path)) {
                fs.delete(path, true);
                System.out.println("Output path already existed and has been deleted!");
            }

            // 3: wait for the job to finish
            boolean bl = job.waitForCompletion(true);
            return bl ? 0 : 1;
        }

        public static void main(String[] args) throws Exception {
            Configuration configuration = new Configuration();
            // Launch the job
            int run = ToolRunner.run(configuration, new JobMain(), args);
            System.exit(run);
        }
    }
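Because the job sets two ReduceTasks, each partition number returned by `MyPartitioner` is handled by its own reducer, and each reducer writes its own file under the output directory (`part-r-00000` for partition 0, `part-r-00001` for partition 1). The routing can be sketched in plain Java as follows. `PartitionRoutingSketch`, its methods, and the sample rows are hypothetical. The sketch only shows which file a line lands in, not sorting or deduplication, and its out-of-range check is a simplification of Hadoop's own handling.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (no Hadoop) of how partition numbers map to reducer output files.
class PartitionRoutingSketch {

    // Same rule as MyPartitioner: second CSV field >= 4 goes to partition 1.
    static int getPartition(String line) {
        int category = Integer.parseInt(line.split(",")[1]);
        return category >= 4 ? 1 : 0;
    }

    // Reducer output files are named part-r-00000, part-r-00001, ...
    static String fileNameFor(int partition) {
        return String.format("part-r-%05d", partition);
    }

    // Routes every line to the file of the reducer that owns its partition.
    static Map<String, List<String>> route(List<String> lines, int numReduceTasks) {
        Map<String, List<String>> files = new TreeMap<>();
        for (int i = 0; i < numReduceTasks; i++) {
            files.put(fileNameFor(i), new ArrayList<>());
        }
        for (String line : lines) {
            int partition = getPartition(line);
            if (partition < 0 || partition >= numReduceTasks) {
                // Simplified stand-in for an invalid partition number
                throw new IllegalStateException("Illegal partition " + partition + " for " + line);
            }
            files.get(fileNameFor(partition)).add(line);
        }
        return files;
    }

    public static void main(String[] args) {
        // Hypothetical sample rows
        List<String> lines = Arrays.asList("a,1", "b,4", "c,16", "d,3");
        route(lines, 2).forEach((file, rows) -> System.out.println(file + ": " + rows));
    }
}
```

The point of the sketch is that the number of ReduceTasks should match the number of distinct partition numbers the partitioner can return, here two.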

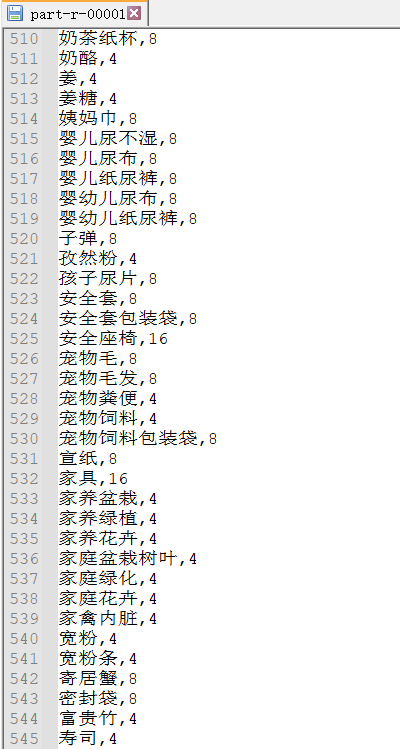

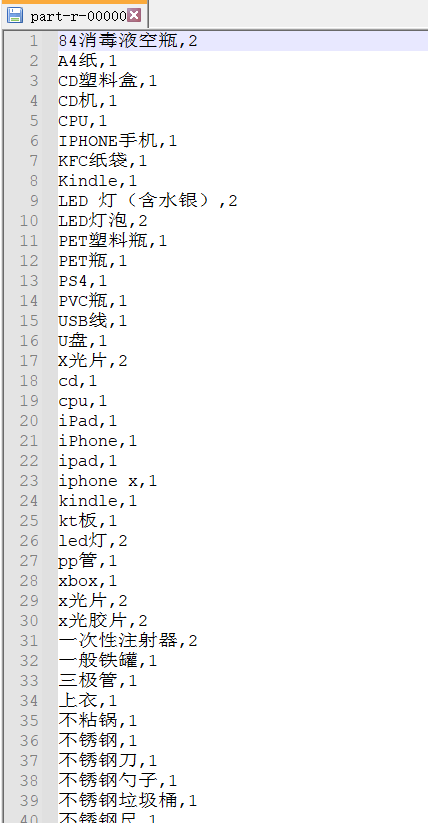

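Results when run on Hadoop or locally:

1. One file containing only records with values 4-16

2. One file containing only records with values 1-3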
