Word count with MapReduce

Source code:

WordCountMapper.java:

    package cn.idcast.mapreduce;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /*
      The four generic type parameters:
        KEYIN:    type of k1
        VALUEIN:  type of v1
        KEYOUT:   type of k2
        VALUEOUT: type of v2
     */
    public class WordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        // The map method converts (k1, v1) into (k2, v2).
        /*
          Parameters:
            key:     k1, the byte offset of the line within the file
            value:   v1, the text of one line
            context: the context object
         */
        /*
          How (k1, v1) becomes (k2, v2):
            k1   v1
            0    hello,world,hadoop
            19   hdfs,hive,hello
            -------------------------
            k2      v2
            hello   1
            world   1
            hadoop  1
            hdfs    1
            hive    1
            hello   1
         */
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            Text text = new Text();
            LongWritable longWritable = new LongWritable();
            // 1: split the line of text into words
            String[] split = value.toString().split(",");
            // 2: iterate over the array and assemble k2 and v2
            for (String word : split) {
                // 3: write k2 and v2 to the context
                text.set(word);
                longWritable.set(1);
                context.write(text, longWritable);
            }
        }
    }

WordCountReducer.java:

    package cn.idcast.mapreduce;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    /*
      The four generic type parameters:
        KEYIN:    type of k2
        VALUEIN:  type of v2
        KEYOUT:   type of k3
        VALUEOUT: type of v3
     */
    public class WordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        // The reduce method converts the new (k2, v2) into (k3, v3)
        // and writes k3 and v3 to the context.
        /*
          Parameters:
            key:     the new k2
            values:  the new v2, a collection of values
            context: the context object
          -----------------------
          How the new (k2, v2) becomes (k3, v3):
            new k2   v2
            hello    <1,1,1>
            world    <1,1>
            hadoop   <1>
            -------------------------
            k3       v3
            hello    3
            world    2
            hadoop   1
         */
        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            long count = 0;
            // 1: iterate over the collection and sum the numbers to get v3
            for (LongWritable value : values) {
                count += value.get();
            }
            // 2: write k3 and v3 to the context (once per key, after the loop)
            context.write(key, new LongWritable(count));
        }
    }
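To make the k1/v1 → k2/v2 → k3/v3 flow from the comments concrete, here is a minimal plain-Java sketch that imitates the three stages on the sample lines from the Mapper comment. It uses no Hadoop classes; `LocalWordCount` and its method names are hypothetical and exist only for this illustration, and the `TreeMap` merely approximates the grouping and key sorting that the framework performs between map and reduce.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java walk-through of the word-count data flow; no Hadoop classes are used.
// LocalWordCount and its method names are hypothetical, chosen for this sketch only.
public class LocalWordCount {

    // Map step: one line (v1) becomes a list of words, each standing for a (word, 1) pair.
    public static List<String> map(String line) {
        List<String> words = new ArrayList<>();
        for (String word : line.split(",")) {
            words.add(word);
        }
        return words;
    }

    // Shuffle step (approximation): collect a 1 per occurrence under each word.
    // TreeMap keeps the keys in sorted order.
    public static Map<String, List<Long>> shuffle(List<String> lines) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (String word : map(line)) {
                grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1L);
            }
        }
        return grouped;
    }

    // Reduce step: sum the grouped values of each key to get (k3, v3).
    public static Map<String, Long> reduce(Map<String, List<Long>> grouped) {
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Long>> entry : grouped.entrySet()) {
            long count = 0;
            for (long value : entry.getValue()) {
                count += value;
            }
            counts.put(entry.getKey(), count);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("hello,world,hadoop", "hdfs,hive,hello");
        // prints {hadoop=1, hdfs=1, hello=2, hive=1, world=1}
        System.out.println(reduce(shuffle(lines)));
    }
}
```

In the real job the framework does the grouping itself; only the map and reduce bodies are written by hand.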

JobMain.java:

    package cn.idcast.mapreduce;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    import java.net.URI;

    public class JobMain extends Configured implements Tool {

        // This method defines a single job.
        @Override
        public int run(String[] args) throws Exception {
            // 1: create the job object
            Job job = Job.getInstance(super.getConf(), "wordcount");
            // Add this setting if the job fails when run from a packaged jar
            job.setJarByClass(JobMain.class);

            // 2: configure the job (eight steps)

            // Step 1: specify how the input is read and where it is read from
            job.setInputFormatClass(TextInputFormat.class);
            TextInputFormat.addInputPath(job, new Path("hdfs://node1:8020/wordcount"));

            // Step 2: specify the Map-stage processing class
            job.setMapperClass(WordCountMapper.class);
            // Set the type of k2 for the Map stage
            job.setMapOutputKeyClass(Text.class);
            // Set the type of v2 for the Map stage
            job.setMapOutputValueClass(LongWritable.class);

            // Steps 3, 4, 5, and 6 use the defaults; nothing is configured for them at this stage

            // Step 7: specify the Reduce-stage processing class and data types
            job.setReducerClass(WordCountReducer.class);
            // Set the type of k3
            job.setOutputKeyClass(Text.class);
            // Set the type of v3
            job.setOutputValueClass(LongWritable.class);

            // Step 8: set the output format
            job.setOutputFormatClass(TextOutputFormat.class);
            // Set the output path
            Path path = new Path("hdfs://node1:8020/wordcount_out");
            TextOutputFormat.setOutputPath(job, path);

            // Get the FileSystem
            FileSystem fs = FileSystem.get(new URI("hdfs://node1:8020/wordcount_out"), new Configuration());
            // If the output directory already exists, delete it so the job can run
            if (fs.exists(path)) {
                fs.delete(path, true);
                System.out.println("Output path already existed and has been deleted!");
            }

            // Wait for the job to finish
            boolean bl = job.waitForCompletion(true);
            return bl ? 0 : 1;
        }

        public static void main(String[] args) throws Exception {
            Configuration configuration = new Configuration();
            // Launch the job
            int run = ToolRunner.run(configuration, new JobMain(), args);
            System.exit(run);
        }
    }

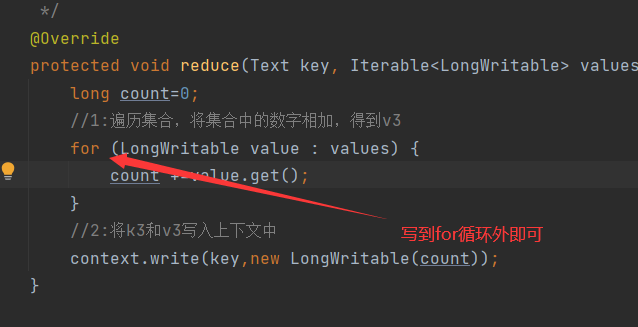

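A small mistake worth recording:

Each key showed up in the output more than once. The cause: in the reduce step, the call that writes to the context had been placed inside the loop, so a record was emitted after every addition instead of once per key.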
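The mistake described above can be reproduced without a cluster. The following is a minimal sketch in plain Java, not the original faulty code: `ReduceEmitDemo` and both method names are hypothetical, and the returned list stands in for what `context.write` would emit for one key.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java stand-in for the reduce step; the returned list plays the role of
// the records that context.write would emit. All names here are hypothetical.
public class ReduceEmitDemo {

    // Buggy variant: the record is emitted inside the loop, once per value.
    public static List<String> emitInsideLoop(String key, List<Long> values) {
        List<String> output = new ArrayList<>();
        long count = 0;
        for (long value : values) {
            count += value;
            output.add(key + "\t" + count); // runs on every iteration
        }
        return output;
    }

    // Fixed variant: sum first, then emit a single record after the loop.
    public static List<String> emitAfterLoop(String key, List<Long> values) {
        List<String> output = new ArrayList<>();
        long count = 0;
        for (long value : values) {
            count += value;
        }
        output.add(key + "\t" + count); // runs once per key
        return output;
    }

    public static void main(String[] args) {
        List<Long> ones = List.of(1L, 1L, 1L);
        System.out.println(emitInsideLoop("hello", ones).size()); // prints 3
        System.out.println(emitAfterLoop("hello", ones).size());  // prints 1
    }
}
```

With the write inside the loop, the key `hello` with values `<1,1,1>` yields three records carrying the running totals 1, 2, and 3; moving the write after the loop yields the single record `hello 3`.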
