train a MLP model with tensorflow 2 for MNIST and deploy the model with cpp

In [ ]:

################## jupyter lab header

################## scipy, sk-learn, plotly

# %matplotlib notebook

# %matplotlib ipympl

%matplotlib widget

from IPython.display import display

from matplotlib import cm, projections

from matplotlib import pyplot as plt

from mpl_toolkits import mplot3d

from mpl_toolkits.mplot3d import Axes3D

from pathlib import Path

import cv2

import glob

import numpy as np

import os

import pandas as pd

import PIL

import pprint

import random

import re

import tensorflow as tf

# import torch

# settings to display all columns

pd.set_option("display.max_columns", None)

# pd.set_option("display.max_rows", None)load and parse dataset¶

data set source¶

MNIST handwritten digit database, Yann LeCun, Corinna Cortes and Chris Burges http://yann.lecun.com/exdb/mnist/

In [ ]:

dirRoot = r"D:\data\ml\dataset\MNIST"

ff_images_test = "t10k-images-idx3-ubyte"

ff_labels_test = "t10k-labels-idx1-ubyte"

ff_images_train = "train-images-idx3-ubyte"

ff_labels_train = "train-labels-idx1-ubyte"

ff_images_test = os.path.join(dirRoot, ff_images_test)

ff_labels_test = os.path.join(dirRoot, ff_labels_test)

ff_images_train = os.path.join(dirRoot, ff_images_train)

ff_labels_train = os.path.join(dirRoot, ff_labels_train)In [ ]:

# parse image data

def parse_image(ff):

image_cube = np.zeros((1,))

cnt = 0

rowN = 0

colN = 0

with open(ff, "rb") as file:

# print(type(file))

file.seek(4)

aa = file.read(4)

cnt = int.from_bytes(aa, "big")

aa = file.read(4)

rowN = int.from_bytes(aa, "big")

aa = file.read(4)

colN = int.from_bytes(aa, "big")

# print(cnt, rowN, colN)

aa = file.read(-1)

print(len(aa))

image_cube = np.array(np.frombuffer(aa, dtype=np.uint8))

image_cube = np.reshape(image_cube, [-1, rowN, colN])

print(image_cube.shape)

# fig = plt.figure("img_demo")

# plt.clf()

# for ii in range(8):

# plt.subplot(1, 8, ii+1)

# plt.imshow(train_image_cube[ii, :, :], cmap="gray")

# plt.show()

return image_cube

image_cube_train = parse_image(ff_images_train)

image_cube_test = parse_image(ff_images_test)

print("***************************")

print(image_cube_train.shape)

print(image_cube_test.shape)47040000

(60000, 28, 28)

7840000

(10000, 28, 28)

***************************

(60000, 28, 28)

(10000, 28, 28)In [ ]:

## parse label data

def parse_label(ff):

labels = np.zeros((1,))

with open(ff_labels_train, "rb") as file:

file.seek(4)

chunk = file.read(4)

label_cnt = int.from_bytes(chunk, "big")

# print(label_cnt)

chunk = file.read(-1)

labels = np.array(np.frombuffer(chunk, np.uint8))

print(labels[:40])

return labels

labels_train = parse_label(ff_labels_train)

labels_test = parse_label(ff_labels_test)

print("******************************")

print(labels_train.shape)

print(labels_test.shape)[5 0 4 1 9 2 1 3 1 4 3 5 3 6 1 7 2 8 6 9 4 0 9 1 1 2 4 3 2 7 3 8 6 9 0 5 6

0 7 6]

[5 0 4 1 9 2 1 3 1 4 3 5 3 6 1 7 2 8 6 9 4 0 9 1 1 2 4 3 2 7 3 8 6 9 0 5 6

0 7 6]

******************************

(60000,)

(60000,)model creation and training¶

In [ ]:

# Training a neural network on MNIST with Keras | TensorFlow Datasets

# https://www.tensorflow.org/datasets/keras_example

# Training a neural network on MNIST with Keras | TensorFlow Datasets

# https://www.tensorflow.org/datasets/keras_example

# SparseCategoricalCrossentropy - Google Search

# https://www.google.com/search?q=SparseCategoricalCrossentropy

# tf.keras.losses.SparseCategoricalCrossentropy | TensorFlow v2.9.1

# https://www.tensorflow.org/api_docs/python/tf/keras/losses/SparseCategoricalCrossentropy

# tf.keras.Model | TensorFlow v2.9.1

# https://www.tensorflow.org/api_docs/python/tf/keras/Model#fit

# tf.keras.metrics.sparse_categorical_crossentropy | TensorFlow v2.9.1

# https://www.tensorflow.org/api_docs/python/tf/keras/metrics/sparse_categorical_crossentropy

model = tf.keras.models.Sequential([

tf.keras.layers.Flatten(input_shape=(28,28)),

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dense(10),

])

model.compile(

optimizer=tf.keras.optimizers.Adam(0.001),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],

)

# model.compile(

# optimizer=tf.keras.optimizers.Adam(0.001),

# loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

# metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],

# )

model.fit(

x=image_cube_train,

y=labels_train,

batch_size=40000,

epochs=300

)Epoch 1/300

2/2 [==============================] - 0s 7ms/step - loss: 183.9884 - sparse_categorical_accuracy: 0.1082

Epoch 2/300

2/2 [==============================] - 0s 8ms/step - loss: 78.6189 - sparse_categorical_accuracy: 0.2354

Epoch 3/300

2/2 [==============================] - 0s 8ms/step - loss: 52.0209 - sparse_categorical_accuracy: 0.4027

Epoch 4/300

2/2 [==============================] - 0s 8ms/step - loss: 39.2087 - sparse_categorical_accuracy: 0.5174

...

...

...

2/2 [==============================] - 0s 8ms/step - loss: 0.1588 - sparse_categorical_accuracy: 0.9861

Epoch 300/300

2/2 [==============================] - 0s 9ms/step - loss: 0.1574 - sparse_categorical_accuracy: 0.9863Out[ ]:

<keras.callbacks.History at 0x2020b598e50>freeze the model and export as pb file¶

Save, Load and Inference From TensorFlow 2.x Frozen Graph - Lei Mao's Log Book https://leimao.github.io/blog/Save-Load-Inference-From-TF2-Frozen-Graph/

How to export a TensorFlow 2.x Keras model to a frozen and optimized graph | by Sebastián García Acosta | Medium https://medium.com/@sebastingarcaacosta/how-to-export-a-tensorflow-2-x-keras-model-to-a-frozen-and-optimized-graph-39740846d9eb

In [ ]:

from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2

# Convert Keras model to ConcreteFunction

full_model = tf.function(lambda x: model(x))

full_model = full_model.get_concrete_function(

tf.TensorSpec(model.inputs[0].shape, model.inputs[0].dtype))

# Get frozen graph def

frozen_func = convert_variables_to_constants_v2(full_model)

frozen_func.graph.as_graph_def()

layers = [op.name for op in frozen_func.graph.get_operations()]

print("-" * 60)

print("Frozen model layers: ")

for layer in layers:

print(layer)

print("-" * 60)

print("Frozen model inputs: ")

print(frozen_func.inputs)

print("Frozen model outputs: ")

print(frozen_func.outputs)

# Then, serialize the frozen graph and its text representation to disk.

frozen_out_path = ""

frozen_graph_filename = "mlp_mnist"

tf.io.write_graph(graph_or_graph_def=frozen_func.graph,

logdir=frozen_out_path,

name=f"{frozen_graph_filename}.pb",

as_text=False)

tf.io.write_graph(graph_or_graph_def=frozen_func.graph,

logdir=frozen_out_path,

name=f"{frozen_graph_filename}.pbtxt",

as_text=True)------------------------------------------------------------

Frozen model layers:

x

sequential_6/flatten_6/Const

sequential_6/flatten_6/Reshape

sequential_6/dense_12/MatMul/ReadVariableOp/resource

sequential_6/dense_12/MatMul/ReadVariableOp

sequential_6/dense_12/MatMul

sequential_6/dense_12/BiasAdd/ReadVariableOp/resource

sequential_6/dense_12/BiasAdd/ReadVariableOp

sequential_6/dense_12/BiasAdd

sequential_6/dense_12/Relu

sequential_6/dense_13/MatMul/ReadVariableOp/resource

sequential_6/dense_13/MatMul/ReadVariableOp

sequential_6/dense_13/MatMul

sequential_6/dense_13/BiasAdd/ReadVariableOp/resource

sequential_6/dense_13/BiasAdd/ReadVariableOp

sequential_6/dense_13/BiasAdd

NoOp

Identity

------------------------------------------------------------

Frozen model inputs:

[<tf.Tensor 'x:0' shape=(None, 28, 28) dtype=float32>]

Frozen model outputs:

[<tf.Tensor 'Identity:0' shape=(None, 10) dtype=float32>]Out[ ]:

'mlp_mnist.pbtxt'use the model with python¶

In [ ]:

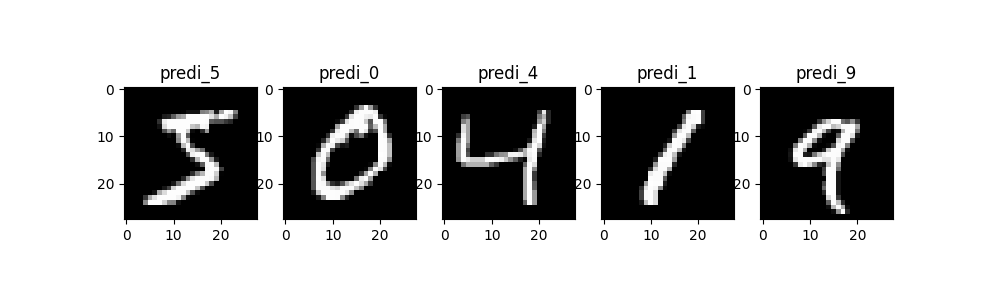

predi = model.predict(image_cube_train[:5,:,:])

print(type(predi))

print(predi.shape)

predi_cls = np.argmax(predi, axis=1)

print(predi_cls)

fig = plt.figure("show predi")

plt.clf()

for ii in range(5):

ax = plt.subplot(1, 5, ii + 1)

plt.imshow(image_cube_train[ii, :, :], cmap="gray")

ax.set_title(f"predi_{predi_cls[ii]}")

plt.show()<class 'numpy.ndarray'>

(5, 10)

[5 0 4 1 9]

use the model with cpp¶

prepare the image file to be loaded in the cpp code.¶

img = image_cube_train[0, :, :]

cv2.imwrite("mnist_image.bmp", img)the cpp code to predict the digit of the image is shown below.¶

my opencv version is 4.5.0.

int run_tf_model()

{

//python - How to load the pre-trained model of the tensorflow by using the opencv dnn model - Stack Overflow

//https://stackoverflow.com/questions/50701410/how-to-load-the-pre-trained-model-of-the-tensorflow-by-using-the-opencv-dnn-mode

//C++ (Cpp)normAssert Examples - HotExamples

//https ://cpp.hotexamples.com/examples/-/-/normAssert/cpp-normassert-function-examples.html

//Mask RCNN in OpenCV - Deep learning based Object Detection and Instance Segmentation

// https ://learnopencv.com/deep-learning-based-object-detection-and-instance-segmentation-using-mask-rcnn-in-opencv-python-c/

cv::dnn::Net model = cv::dnn::readNetFromTensorflow("path_to_dir/mlp_mnist.pb");

cv::Mat img = cv::imread("path_to_dir/mnist_image.bmp", -1);

cv::Mat input_blob = cv::dnn::blobFromImage(img);

model.setInput(input_blob);

cv::Mat out = model.forward();

float theMax = out.at<float>(0, 0);

int maxIdx = 0;

int currentIdx = 0;

while (currentIdx < out.cols - 1)

{

currentIdx++;

float currentVal = out.at<float>(0, currentIdx);

if (currentVal > theMax)

{

theMax = currentVal;

maxIdx = currentIdx;

}

}

printf_s("the digit in the image is %d.\n", maxIdx);

return 0;

}test¶

In [ ]:

a = np.array([1, 2, 3], dtype=np.uint8)

bts = a.tobytes()

print(bts)

a = np.array(np.frombuffer(bts, dtype=np.uint8));

print(a)b'\x01\x02\x03'

[1 2 3]In [ ]:

浙公网安备 33010602011771号

浙公网安备 33010602011771号