NVIDIA errors in TensorFlow

Go to check

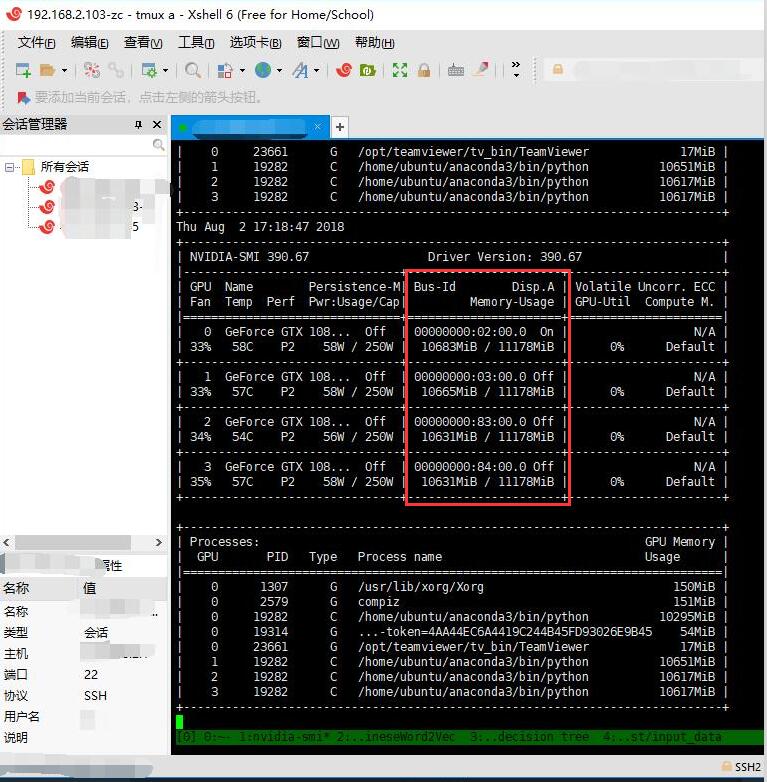

GPU memoryusage, when encounteringnvidiaorcudaerror.

I’ve been working with tensorflow-gpu for a while. When running code, NVIDIA sometimes reports various errors without clear instructions on how to fix them.

As far as I know, when you hit an NVIDIA error, you should first check whether the GPU memory is occupied by another process, using

nvidia-smi -l

As the screenshot above shows, GPU-Util is 0%, but the memory is almost used up. If you start a new TensorFlow process in this state, errors are likely to be reported.
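The same check can be scripted. Below is a minimal sketch, assuming `nvidia-smi` is on the PATH and that the driver reports memory as plain numbers in MiB (some GPUs may report `[N/A]`, which this sketch does not handle). The function names and the 90% threshold are my own choices for illustration, not part of any library.

```python
import subprocess


def parse_memory_csv(text):
    """Parse 'used, total' CSV lines (MiB) into a list of (used, total) tuples."""
    rows = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory():
    """Ask nvidia-smi for used and total memory of every GPU, one tuple per GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=memory.used,memory.total",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_memory_csv(result.stdout)


def nearly_full(used, total, threshold=0.9):
    """Return True when used memory is at or above the given fraction of total.

    The 0.9 default is an arbitrary illustrative threshold.
    """
    return total > 0 and used / total >= threshold
```

Calling `query_gpu_memory()` before starting a new TensorFlow process and passing each pair to `nearly_full` gives a quick warning that the GPU is probably still held by something else.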

If you are coding in PyCharm, be aware of its Scientific Mode. In this mode the process does not exit automatically, so the TensorFlow session keeps holding the GPU memory.
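To find out which leftover process is holding the memory, you can ask `nvidia-smi` for the list of compute processes. This is a rough sketch under the same assumptions as before (`nvidia-smi` on the PATH); the helper names are hypothetical, and the memory field is kept as `None` when the driver does not report a number.

```python
import subprocess


def parse_compute_apps(text):
    """Parse 'pid, process_name, used_memory' CSV lines into a list of dicts."""
    apps = []
    for line in text.strip().splitlines():
        if line.count(",") < 2:
            continue  # skip empty or unexpected lines
        # Split off the pid from the left and the memory from the right,
        # so a comma inside the process name does not break parsing.
        pid, rest = line.split(",", 1)
        name, used = rest.rsplit(",", 1)
        used = used.strip()
        apps.append(
            {
                "pid": int(pid.strip()),
                "name": name.strip(),
                "used_mib": int(used) if used.isdigit() else None,
            }
        )
    return apps


def list_gpu_processes():
    """Return the processes that currently hold GPU memory, per nvidia-smi."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-compute-apps=pid,process_name,used_memory",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_compute_apps(result.stdout)
```

If a Python process from an earlier PyCharm run shows up in `list_gpu_processes()`, stopping that console or process should release the memory it holds.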

Example error:

E tensorflow/stream_executor/cuda/cuda_blas.cc:366] failed to create cublas handle: CUBLAS_STATUS_NOT_INITIALIZED
