Python Asynchronous Programming

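Synchronous vs. asynchronous:

Synchronous means the whole process runs in order: each step must finish and return its result before the next one starts. Execution is linear, and the flow cannot skip ahead.

Asynchronous is the opposite. After issuing a call, the caller can carry on with later operations; the callee reports back through a status notification or delivers the result through a callback function.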
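The callback style of notification can be sketched with asyncio tasks. This is a minimal illustration, not the only way to do it; slow_square and on_done are hypothetical names chosen for the example, and the sleep stands in for slow I/O.

```python
import asyncio


async def slow_square(n):
    # Callee: pretends to do slow I/O, then returns a result.
    await asyncio.sleep(0.1)
    return n * n


async def main():
    events = []

    def on_done(task):
        # Callback: the event loop calls this once the task has finished.
        events.append(f"callback got {task.result()}")

    task = asyncio.create_task(slow_square(7))
    task.add_done_callback(on_done)

    # The caller is not blocked: it keeps working while the task waits.
    events.append("caller keeps working")
    await task
    # Yield once more so any pending done callbacks have run.
    await asyncio.sleep(0)
    return events


if __name__ == "__main__":
    print(asyncio.run(main()))
```

The caller records its own progress before the result arrives, and the callback fires only after the task completes.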

1. The asyncio module

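    import asyncio
    import time


    async def test1():
        # async def defines an asynchronous function (a coroutine) that
        # contains asynchronous operations. Each thread has one event loop.
        await asyncio.sleep(5)
        print('test1')


    async def test2():
        await asyncio.sleep(1)
        print('test2')


    async def test3():
        await asyncio.sleep(4)
        print('test3')


    def start():
        # Create the event loop explicitly. Relying on asyncio.get_event_loop()
        # to create one in the main thread is deprecated in recent Python versions.
        loop = asyncio.new_event_loop()
        asyncio.set_event_loop(loop)
        # asyncio.wait() no longer accepts bare coroutines (Python 3.11+),
        # so wrap each coroutine in a task first.
        tasks = [loop.create_task(coro) for coro in (test1(), test2(), test3())]
        # Hand the tasks to run_until_complete(); the event loop then
        # schedules the coroutines.
        loop.run_until_complete(asyncio.wait(tasks))
        loop.close()


    if __name__ == '__main__':
        start_time = time.time()
        start()
        end_time = time.time()
        print(end_time - start_time)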

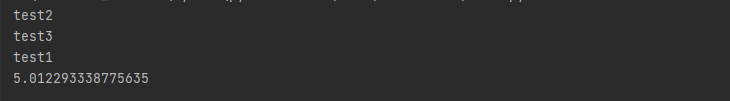

Output:

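Analysis: if this were synchronous, test1 would run first, and the sleep would block until test1 finished before the next function could start, for a total of about 10 seconds. Run asynchronously, the three sleeps overlap, so the output order is test2, test3, test1 and the total time is about 5 seconds.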
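The difference can be measured directly. The sketch below is an illustration rather than part of the original example: it uses scaled-down delays (0.3 s, 0.1 s, 0.2 s) in place of the 5 s, 1 s, and 4 s sleeps, and run_sync and run_async are hypothetical helper names.

```python
import asyncio
import time

# Scaled-down stand-ins for the 5 s, 1 s, and 4 s sleeps.
DELAYS = [0.3, 0.1, 0.2]


def run_sync():
    # Blocking sleeps run one after another, so the waits add up.
    start = time.perf_counter()
    for delay in DELAYS:
        time.sleep(delay)
    return time.perf_counter() - start


async def run_async():
    # Awaited sleeps overlap, so the total is close to the longest one.
    start = time.perf_counter()
    await asyncio.gather(*(asyncio.sleep(delay) for delay in DELAYS))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sync:  {run_sync():.2f} s")
    print(f"async: {asyncio.run(run_async()):.2f} s")
```

The synchronous total is roughly the sum of the delays, while the asynchronous total is roughly the longest single delay.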

2. The aiohttp module

    import asyncio
    import time

    from aiohttp import ClientSession


    async def wait():
        # Use asyncio.sleep() here: time.sleep() would block the whole event loop.
        await asyncio.sleep(1)


    async def hello(url):
        # The async def keyword defines an asynchronous function.
        async with ClientSession() as session:
            # ClientSession issues the HTTP request.
            async with session.get(url) as response:
                # await goes in front of any operation that has to be waited on.
                # response.read() waits for the response body, which is slow I/O.
                body = await response.read()
                await wait()
                print(body)


    async def main():
        url1 = 'https://www.baidu.com'
        url2 = 'https://www.sogou.com/'
        url3 = 'https://www.zhihu.com/'
        # asyncio.wait() needs tasks, not bare coroutines (Python 3.11+).
        tasks = [asyncio.create_task(hello(url)) for url in (url1, url2, url3)]
        await asyncio.wait(tasks)


    if __name__ == '__main__':
        start_time = time.time()
        asyncio.run(main())
        end_time = time.time()
        print(end_time - start_time)

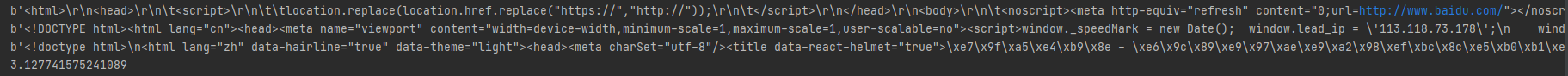

Output:

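What if you need to make HTTP requests? The usual choice is requests, but requests is a synchronous library; for asynchronous requests, use aiohttp instead. The code imports one class, from aiohttp import ClientSession. First create a session object, then use the session to open the page. A session supports several operations, such as get, post, put, and head.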
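Why a synchronous library is a poor fit inside a coroutine can be shown without any network access. In this sketch, blocking_fetch is a hypothetical stand-in for a synchronous call such as requests.get(), with time.sleep() modelling the wait. It also shows asyncio.to_thread() (Python 3.9+), one possible workaround when only a blocking function is available; this is a swapped-in technique for illustration, not something the original code uses.

```python
import asyncio
import time


def blocking_fetch(delay):
    # Hypothetical stand-in for a synchronous call such as requests.get().
    time.sleep(delay)
    return delay


async def fetch_blocking(delay):
    # Calling blocking code directly stalls the whole event loop.
    return blocking_fetch(delay)


async def fetch_in_thread(delay):
    # asyncio.to_thread() runs the blocking call in a worker thread,
    # so the event loop stays free to run other coroutines.
    return await asyncio.to_thread(blocking_fetch, delay)


async def timed(worker, count=3, delay=0.2):
    # Run `count` workers together and report the elapsed wall time.
    start = time.perf_counter()
    await asyncio.gather(*(worker(delay) for _ in range(count)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"blocking in coroutine: {asyncio.run(timed(fetch_blocking)):.2f} s")
    print(f"via to_thread:         {asyncio.run(timed(fetch_in_thread)):.2f} s")
```

With the blocking version the three calls run back to back; with worker threads they overlap. A natively asynchronous client such as aiohttp avoids the threads altogether.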

    import asyncio
    import time

    from aiohttp import ClientSession


    async def wait():
        # Use asyncio.sleep() here: time.sleep() would block the whole event loop.
        await asyncio.sleep(1)


    async def hello(url, semaphore):
        # The async def keyword defines an asynchronous function.
        async with semaphore:
            async with ClientSession() as session:
                # ClientSession issues the HTTP request (get, post, put, head, ...).
                async with session.get(url) as response:
                    # await goes in front of any operation that has to be waited on.
                    # response.read() waits for the response body, which is slow I/O.
                    body = await response.read()
                    await wait()
                    return body


    async def run():
        start_time = time.perf_counter()
        semaphore = asyncio.Semaphore(500)  # cap concurrency at 500
        url1 = 'https://www.baidu.com'
        url2 = 'https://www.sogou.com/'
        url3 = 'https://www.zhihu.com/'
        # asyncio.create_task() turns each coroutine into an asynchronous task;
        # the tasks are collected in a list.
        tasks = [asyncio.create_task(hello(url, semaphore))
                 for url in (url1, url2, url3)]
        # Once a batch is ready, submit it to asyncio.gather() in one go. Python
        # schedules the batch and uses each request's wait time to start others.
        await asyncio.gather(*tasks)
        end_time = time.perf_counter()
        print(end_time - start_time)


    if __name__ == '__main__':
        asyncio.run(run())

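asyncio.create_task() wraps each coroutine in an asynchronous task, and the tasks are collected in a list. Once a batch is ready, it is submitted to asyncio.gather() in one go. Python then schedules the batch automatically, using the time each request spends waiting to start new requests.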
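The create_task() and gather() pattern can be tried without a network. In this minimal sketch, fake_request is a hypothetical stand-in for an HTTP request, with asyncio.sleep() modelling the wait; the finished list exists only to record completion order.

```python
import asyncio


async def fake_request(name, delay, finished):
    # Hypothetical stand-in for an HTTP request; the sleep models network wait.
    await asyncio.sleep(delay)
    finished.append(name)  # record the order in which requests complete
    return f"{name} done"


async def main():
    finished = []
    jobs = [("a", 0.3), ("b", 0.1), ("c", 0.2)]
    # Wrap each coroutine in a task and collect the tasks in a list.
    tasks = [asyncio.create_task(fake_request(name, delay, finished))
             for name, delay in jobs]
    # gather() returns results in the order the tasks were passed in,
    # regardless of which one finished first.
    results = await asyncio.gather(*tasks)
    return results, finished


if __name__ == "__main__":
    results, finished = asyncio.run(main())
    print(results)   # ['a done', 'b done', 'c done']
    print(finished)  # ['b', 'c', 'a']
```

The shortest request finishes first, but the results list still lines up with the list of tasks, which makes it easy to match each response to its URL.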

Collecting HTTP responses

    import asyncio
    import time

    from aiohttp import ClientSession


    async def wait():
        # Use asyncio.sleep() here: time.sleep() would block the whole event loop.
        await asyncio.sleep(1)


    async def hello(url):
        # The async def keyword defines an asynchronous function.
        async with ClientSession() as session:
            # ClientSession issues the HTTP request (get, post, put, head, ...).
            async with session.get(url) as response:
                # await goes in front of any operation that has to be waited on.
                # response.read() waits for the response body, which is slow I/O.
                body = await response.read()
                await wait()
                return body


    async def main():
        url1 = 'https://www.baidu.com'
        url2 = 'https://www.sogou.com/'
        url3 = 'https://www.zhihu.com/'
        tasks = [asyncio.create_task(hello(url)) for url in (url1, url2, url3)]
        # asyncio.gather(*tasks) collects every response into one list,
        # which can then be saved locally.
        return await asyncio.gather(*tasks)


    if __name__ == '__main__':
        start_time = time.time()
        result = asyncio.run(main())
        print(result)
        end_time = time.time()
        print(end_time - start_time)

Output:

Handling errors

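If concurrency reaches 2000, the program fails with ValueError: too many file descriptors in select(). Read literally, the error says that the select call Python uses limits the maximum number of open files; this is really an operating system limit. On Linux the default maximum number of open files is 1024, and on Windows it is 509. Once that value is exceeded, the program starts to fail. There are three ways to solve this:

1. Limit concurrency (do not submit so many tasks at once, or cap the maximum number of concurrent tasks)

2. Use callbacks

3. Raise the operating system's limit on open files; the default can be changed in a system configuration file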
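For the third option, the limit can also be inspected and raised per process instead of through a system configuration file. The sketch below swaps in that per-process approach using the standard resource module, which exists on Unix only; raise_nofile_limit is a hypothetical helper name. Whether a higher limit removes the error depends on the platform, since select() itself has a fixed cap on some systems.

```python
import resource  # Unix only; this module is not available on Windows


def raise_nofile_limit(target):
    """Raise the soft open-file limit toward target, capped by the hard limit.

    Returns the soft limit in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)
    if soft == resource.RLIM_INFINITY or soft >= target:
        return soft  # already high enough; nothing to change
    try:
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    except (ValueError, OSError):
        return soft  # the system refused the change; keep the old limit
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]


if __name__ == "__main__":
    print("soft limit before:", resource.getrlimit(resource.RLIMIT_NOFILE)[0])
    print("soft limit after: ", raise_nofile_limit(4096))
```

The value 4096 is only an example. The semaphore-based code that follows applies the first option instead.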

    import asyncio
    import time

    from aiohttp import ClientSession


    async def wait():
        # Use asyncio.sleep() here: time.sleep() would block the whole event loop.
        await asyncio.sleep(1)


    async def hello(url, semaphore):
        # The async def keyword defines an asynchronous function.
        async with semaphore:
            async with ClientSession() as session:
                # ClientSession issues the HTTP request (get, post, put, head, ...).
                async with session.get(url) as response:
                    # await goes in front of any operation that has to be waited on.
                    # response.read() waits for the response body, which is slow I/O.
                    body = await response.read()
                    await wait()
                    return body


    async def main():
        semaphore = asyncio.Semaphore(500)  # cap concurrency at 500
        url1 = 'https://www.baidu.com'
        url2 = 'https://www.sogou.com/'
        url3 = 'https://www.zhihu.com/'
        # Three tasks in total.
        tasks = [asyncio.create_task(hello(url, semaphore))
                 for url in (url1, url2, url3)]
        # asyncio.gather(*tasks) collects every response into one list,
        # which can then be saved locally.
        return await asyncio.gather(*tasks)


    if __name__ == '__main__':
        start_time = time.time()
        result = asyncio.run(main())
        print(result)
        end_time = time.time()
        print(end_time - start_time)
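To check that a semaphore really caps concurrency, the peak number of simultaneously active workers can be recorded. This is a minimal sketch with no network access: worker and the state dictionary are hypothetical names, and a short asyncio.sleep() stands in for the request.

```python
import asyncio


async def worker(semaphore, state):
    async with semaphore:
        # Inside the semaphore: count this worker as active.
        state["active"] += 1
        state["peak"] = max(state["peak"], state["active"])
        await asyncio.sleep(0.01)  # stand-in for the HTTP request
        state["active"] -= 1


async def main(total=20, limit=5):
    semaphore = asyncio.Semaphore(limit)
    state = {"active": 0, "peak": 0}
    await asyncio.gather(*(worker(semaphore, state) for _ in range(total)))
    # Highest number of workers that were active at the same time.
    return state["peak"]


if __name__ == "__main__":
    print(asyncio.run(main()))  # 5
```

With 20 workers and a limit of 5, the peak never rises above 5; the remaining workers wait at the semaphore until a slot is released.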