multi-node cluster (linux)

`echo $PATH`, `source /etc/environment`, `export JAVA_HOME=${JAVA_HOME}`, `rpm -Uvh`, `tar -xvf`

`ssh`, `ssh-keygen`, `hostname`, `id`, `whoami`, `df -h .`, `ln -s`, `gvfs-tree`, `/etc/init.d/ssh restart`

`cut -d ":" -f 1 /etc/passwd` (list users), `compgen -u` / `compgen -g` (list users / groups)
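A small sketch of the `cut` command on `/etc/passwd`: the file holds one account per line with colon-separated fields, so the first field is the login name. The helper name `list_users` and its optional file argument are only for illustration.

```shell
# list_users [FILE]: print the first colon-separated field (the login name)
# of every line in a passwd-style file. The function name is hypothetical.
list_users() {
  cut -d ":" -f 1 "${1:-/etc/passwd}"
}

# Example: list local accounts.
# list_users /etc/passwd
# In bash, `compgen -u` and `compgen -g` print user and group names.
```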

Some important directories for system configuration, among other things:

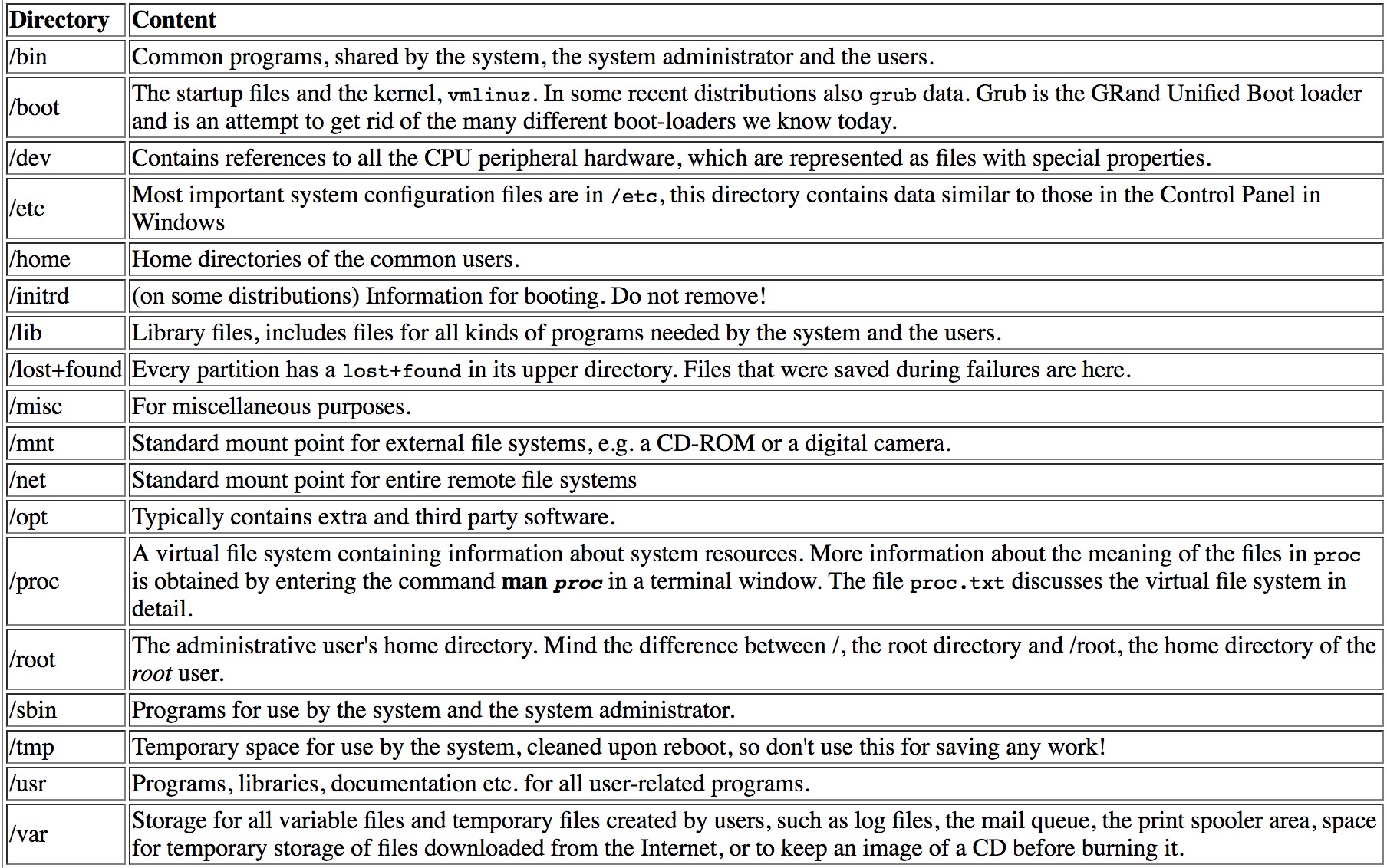

/usr /opt /boot /net /etc /root /sbin /bin /initrd

troubleshooting

- index of jdk rpm

- "Could not open a connection to your authentication agent"

`eval "$(ssh-agent -s)"`, then `ssh-add`

- ssh localhost problem (VM to localhost): check 1) the username (master to slave) and hostname, 2) the hosts file

ssh & sshd:

1- ssh : The command we use to connect to remote machines - the client.

2- sshd : The daemon that is running on the server and allows clients to connect to the server.

The ssh client is preinstalled on most Linux systems, but to start the sshd daemon we need to install ssh (the server package) first.

The ECDSA host key for localhost has changed, and the key for the corresponding IP address 192.168..is unchanged. This could either mean that DNS SPOOFING is happening or the IP address for the host and its host key have changed at the same time.

/usr/sbin/sshd -t

echo $?
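`sshd -t` only tests the configuration, and `echo $?` prints the exit status of the previous command (0 means success). A minimal sketch that combines the two steps; the helper name `run_and_report` is hypothetical.

```shell
# run_and_report CMD [ARGS...]: run a command and print its exit status.
# Status 0 means success; anything else signals an error.
run_and_report() {
  if "$@"; then
    status=0
  else
    status=$?
  fi
  echo "exit status: $status"
  return "$status"
}

# Example (usually needs root): validate sshd_config without starting the daemon.
# run_and_report /usr/sbin/sshd -t
```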

`ssh-add -D`: delete all keys from the agent

mac: ssh localhost / ***@localhost: password :*; root@localhost: permission denied; cannot ssh to slave

ubuntu: ssh ***@master: *; root@master: permission denied; hduser@slave: denied

So on the master side, ssh works as *** but not as root. (*** has root privileges on the slave but is an ordinary user on the master.)

After running ssh-keygen -R hostname, the slave side can ssh to hostname as hduser. https://www.digitalocean.com/community/questions/warning-remote-host-identification-has-changed

ssh-copy-id -i $HOME/.ssh/id_rsa.pub hduser@slave

And my sshd_config :

PermitRootLogin without-password

StrictModes yes

RSAAuthentication yes

PubkeyAuthentication yes

Then I set PermitRootLogin yes. Now I can ssh root@slave. (ssh can connect as any user, except slave-to-master as root, but still with a passphrase.)

- allow root on Mac OS

By default, the root user is not enabled [in Mountain Lion].

https://superuser.com/questions/555810/how-do-i-ssh-login-into-my-mac-as-root

- ubuntu login loop

https://askubuntu.com/questions/146137/login-screen-loops-unless-you-login-as-guest

I tried modifying all of these files (.profile, .bashrc, .Xauthority, .xsession-errors, xorg) following the instructions, but it failed.

solution 1: some answers didn't suit me; I had to reinstall xubuntu-desktop.

solution 2: disabling the guest session by modifying .profile gave the result "failed to start session".

Check /var/log/lightdm/lightdm.log for debug info: https://ubuntuforums.org/showthread.php?t=2226247

Edit .dmrc, set session = ubuntu, then reboot.

- change autologin-user

nano /etc/lightdm/lightdm.conf

nano the user's file under /var/lib/AccountsService/users

rm the user's file under /var/cache/lightdm/dmrc

After rebooting, nothing seemed to have changed, so I just switched accounts.

- get rid of monitor error

rm $HOME/.config/monitors.xml

- resolve to host

/etc/hosts

- add path

.bash_profile; remember to source it

source /etc/environment

- disable ipv6

http://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-single-node-cluster/

To disable IPv6 permanently, edit /etc/sysctl.conf.

One problem with IPv6 on Ubuntu is that using 0.0.0.0 for the various networking-related Hadoop configuration options will result in Hadoop binding to the IPv6 addresses of my Ubuntu box.
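A sketch of the /etc/sysctl.conf change, assuming the three standard Linux `disable_ipv6` sysctl keys used in the linked tutorial. The helper name `ipv6_sysctl_lines` is hypothetical; it only prints the lines so they can be reviewed before being appended.

```shell
# ipv6_sysctl_lines: print the sysctl settings that switch IPv6 off.
# The function name is hypothetical; the keys are standard Linux sysctls.
ipv6_sysctl_lines() {
  cat <<'EOF'
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
EOF
}

# Example: append them to /etc/sysctl.conf, then reload or reboot.
# ipv6_sysctl_lines | sudo tee -a /etc/sysctl.conf
# sudo sysctl -p
# cat /proc/sys/net/ipv6/conf/all/disable_ipv6   # 1 means IPv6 is disabled
```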

- export: "not a valid identifier"

There must be no blank space on either side of `=`, in particular none between the variable name and `=`.
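A short illustration of the export error. `DEMO_HOME` and `/opt/demo` are placeholder values chosen for this sketch.

```shell
# Wrong: with spaces around '=', export receives three separate words
# (NAME, =, value) and rejects '=' as "not a valid identifier".
#   export DEMO_HOME = /opt/demo
#
# Right: no whitespace on either side of '='.
# DEMO_HOME and /opt/demo are placeholder values.
export DEMO_HOME=/opt/demo
echo "$DEMO_HOME"
```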

- ssh without passphrase

http://www.thecloudavenue.com/2012/01/how-to-setup-password-less-ssh-to.html

After ssh-copy-id, ssh slave still asked for a password, because the login was attempted as root on the slave.

So I removed the root privilege of ***. (Checked with id, w, who; edited with nano /etc/passwd.)
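The steps can be sketched roughly as follows, assuming an RSA key in the default location. `ssh-copy-id` installs the key for one specific remote user, so the later `ssh` has to connect as that same user to avoid the password prompt. The function name `setup_passwordless_ssh` is hypothetical.

```shell
# setup_passwordless_ssh USER HOST: create an RSA key pair with an empty
# passphrase (if none exists yet) and install the public key on HOST.
# The function name is hypothetical; run it as the user who will connect.
setup_passwordless_ssh() {
  remote_user=$1
  remote_host=$2
  key="$HOME/.ssh/id_rsa"
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
  # -N "" sets an empty passphrase; skip generation if a key already exists.
  [ -f "$key" ] || ssh-keygen -t rsa -N "" -f "$key"
  # Appends the public key to ~/.ssh/authorized_keys of USER on HOST
  # (asks for that user's password once).
  ssh-copy-id -i "$key.pub" "$remote_user@$remote_host"
}

# Example: setup_passwordless_ssh hduser slave && ssh hduser@slave
```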

- multi-node cluster

http://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-multi-node-cluster/

1 conf/masters (master only) defines on which machines Hadoop will start secondary NameNodes.

The primary NameNode and the JobTracker will always be the machines on which you run the bin/start-dfs.sh and bin/start-mapred.sh scripts.

2 bin/hadoop-daemon.sh start [namenode | secondarynamenode | datanode | jobtracker | tasktracker]

This starts a daemon manually; it does not take the conf/masters and conf/slaves files into account, and it is deprecated.

3 The machine on which bin/start-dfs.sh is run will become the primary NameNode.

4 secondary NameNode :

merges the fsimage and the edits log files periodically and keeps edits log size within a limit.

5 conf/slaves (master only) helps you to make "full" cluster restarts easier.

The conf/slaves file lists the hosts, one per line, where the Hadoop slave daemons (DataNodes and TaskTrackers) will be run. We want both the master box and the slave box to act as Hadoop slaves because we want both of them to store and process data.

It is used only by scripts such as bin/start-dfs.sh or bin/stop-dfs.sh.

6 dfs.replication

It defines how many machines a single file should be replicated to before it becomes available. If you set this to a value higher than the number of available slave nodes (more precisely, the number of DataNodes), you will start seeing a lot of “(Zero targets found, forbidden1.size=1)” type errors in the log files.

7 mapred.reduce.tasks

As a rule of thumb, use num_tasktrackers * num_reduce_slots_per_tasktracker * 0.99.

If num_tasktrackers is small (as in the case of this tutorial), use (num_tasktrackers - 1) * num_reduce_slots_per_tasktracker.

8 Do not format a running cluster, because this will erase all existing data in the HDFS filesystem!

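The notes on conf/masters and conf/slaves, dfs.replication, and mapred.reduce.tasks can be sketched as a few shell helpers. All function names are hypothetical. The hostnames `master` and `slave` follow the two-box setup in these notes. Listing `master` in conf/masters and rounding the 0.99 rule down are assumptions.

```shell
# All function names below are hypothetical helpers, not part of Hadoop.

# write_cluster_files CONF_DIR: the master also acts as a slave, so it is
# listed in both files (hostnames "master" and "slave" as in these notes).
write_cluster_files() {
  printf 'master\n' > "$1/masters"
  printf 'master\nslave\n' > "$1/slaves"
}

# check_replication REPLICATION DATANODES: warn when dfs.replication
# exceeds the number of DataNodes.
check_replication() {
  if [ "$1" -gt "$2" ]; then
    echo "dfs.replication=$1 exceeds $2 DataNodes"
    return 1
  fi
  echo "ok"
}

# reduce_tasks TASKTRACKERS SLOTS: rule of thumb for larger clusters,
# trackers * slots * 0.99, rounded down with integer arithmetic.
reduce_tasks() {
  echo $(( $1 * $2 * 99 / 100 ))
}

# reduce_tasks_small TASKTRACKERS SLOTS: variant for very small clusters,
# (trackers - 1) * slots.
reduce_tasks_small() {
  echo $(( ($1 - 1) * $2 ))
}
```

For example, `write_cluster_files conf` would be run on the master only, and `reduce_tasks_small 2 2` corresponds to the two-node case in this tutorial.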

- difference between `hdfs dfs`, `hadoop dfs`, and `hadoop fs`

- wget wrapper

https://superuser.com/questions/691612/does-wget-have-a-download-history

- Ubuntu user configuration files and passwords (explains the format of /etc/passwd, /etc/shadow, /etc/group)

http://blog.sina.com.cn/s/blog_5edae1a101017gfn.html

!!: take up each problem one at a time