Chapter 5: Kubernetes Scheduling

The Pod creation workflow and the main Pod attributes that affect scheduling

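Which components run on the master node?

1. apiserver (persists cluster state in etcd)

2. scheduler

3. controller-manager

Which components run on a worker node?

1. kubelet

2. kube-proxy

3. docker (the container runtime in this setup)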

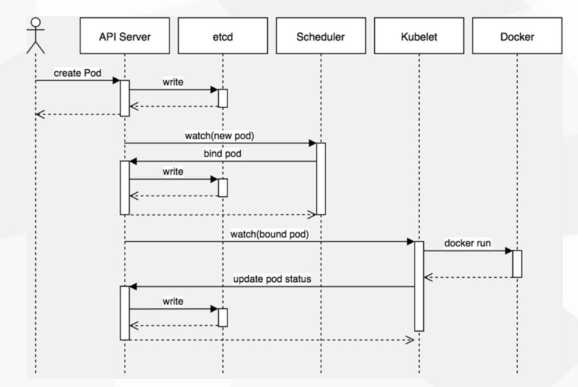

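Workflow for creating a Pod

0. kubectl apply -f pod.yaml

1. kubectl converts the YAML to JSON and submits it to the apiserver, which stores the data in etcd.

2. The scheduler, which watches the apiserver, sees the new Pod, selects a node based on the Pod's attributes, and records the chosen node on the Pod by binding it (this sets spec.nodeName; it is not a label).

3. The apiserver receives the scheduling result and writes it to etcd.

4. The kubelet on each node gets from the apiserver the Pods assigned to its own node.

5. The kubelet calls Docker through the Docker socket to create the containers.

6. Docker creates the containers as the kubelet requested and reports their status back to the kubelet.

7. The kubelet updates the Pod status in the apiserver.

8. The apiserver writes the status data to etcd.

9. kubectl get pods reads the Pod status back from the apiserver.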

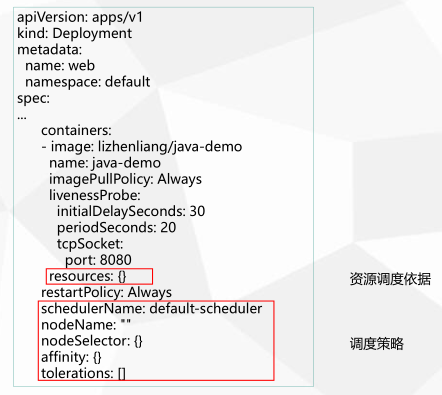

Main Pod attributes that affect scheduling

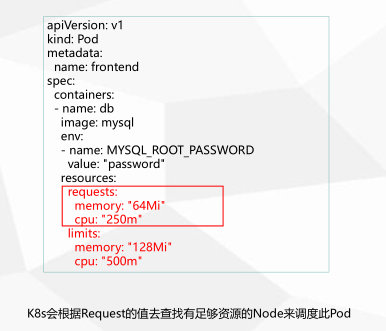

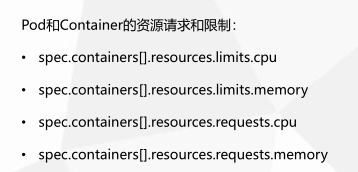

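How resource limits affect Pod scheduling

requests: the amount of a resource reserved for the container. The scheduler uses this value to find a node with enough unallocated capacity. It must be less than or equal to limits.

limits: the maximum amount of a resource the container may use.

If neither value is specified, the Pod can use all of the resources available on the host.

CPU units: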

2000m = 2

1000m = 1

500m = 0.5

100m = 0.1
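As a minimal sketch of how these two fields are declared, the manifest below sets both on a single container. The Pod name, image, and memory figures are illustrative assumptions, not taken from the original article. The CPU values mirror the `500m` request and `1` limit that the `web2` Pod shows in the `kubectl describe` output in this chapter.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resources-demo        # hypothetical name
spec:
  containers:
  - name: web
    image: nginx              # illustrative image
    resources:
      requests:               # what the scheduler reserves on the node
        cpu: 500m             # 0.5 CPU
        memory: 256Mi         # assumed value
      limits:                 # upper bound enforced at runtime
        cpu: "1"              # same as 1000m
        memory: 512Mi         # assumed value
```

Only `requests` takes part in the scheduling decision. `limits` is enforced on the node once the container is running.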

    [root@k8s-m1 chp5]# kubectl describe nodes k8s-n1
    Name:               k8s-n1
    Roles:              <none>
    Labels:             beta.kubernetes.io/arch=amd64
                        beta.kubernetes.io/os=linux
                        disktype=ssd
                        kubernetes.io/arch=amd64
                        kubernetes.io/hostname=k8s-n1
                        kubernetes.io/os=linux
    Annotations:        kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                        node.alpha.kubernetes.io/ttl: 0
                        projectcalico.org/IPv4Address: 172.16.1.24/24
                        projectcalico.org/IPv4IPIPTunnelAddr: 10.244.215.64
                        volumes.kubernetes.io/controller-managed-attach-detach: true
    CreationTimestamp:  Thu, 23 Jul 2020 22:34:32 +0800
    Taints:             gpu=yes:NoSchedule
    Unschedulable:      false
    Lease:
      HolderIdentity:  k8s-n1
      AcquireTime:     <unset>
      RenewTime:       Sun, 09 Aug 2020 23:13:47 +0800
    Conditions:
      Type                 Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
      ----                 ------  -----------------                 ------------------                ------                       -------
      NetworkUnavailable   False   Thu, 06 Aug 2020 04:03:43 +0800   Thu, 06 Aug 2020 04:03:43 +0800   CalicoIsUp                   Calico is running on this node
      MemoryPressure       False   Sun, 09 Aug 2020 23:12:38 +0800   Fri, 07 Aug 2020 22:09:45 +0800   KubeletHasSufficientMemory   kubelet has sufficient memory available
      DiskPressure         False   Sun, 09 Aug 2020 23:12:38 +0800   Fri, 07 Aug 2020 22:09:45 +0800   KubeletHasNoDiskPressure     kubelet has no disk pressure
      PIDPressure          False   Sun, 09 Aug 2020 23:12:38 +0800   Fri, 07 Aug 2020 22:09:45 +0800   KubeletHasSufficientPID      kubelet has sufficient PID available
      Ready                True    Sun, 09 Aug 2020 23:12:38 +0800   Fri, 07 Aug 2020 22:09:45 +0800   KubeletReady                 kubelet is posting ready status
    Addresses:
      InternalIP:  10.0.0.24
      Hostname:    k8s-n1
    Capacity:
      cpu:                2
      ephemeral-storage:  27245572Ki
      hugepages-1Gi:      0
      hugepages-2Mi:      0
      memory:             4026372Ki
      pods:               110
    Allocatable:
      cpu:                2
      ephemeral-storage:  25109519114
      hugepages-1Gi:      0
      hugepages-2Mi:      0
      memory:             3923972Ki
      pods:               110
    System Info:
      Machine ID:                 60f04d707dff4506a290fb01a760a736
      System UUID:                DAA64D56-F012-CD06-9629-DB9504A8AD2F
      Boot ID:                    31b9eb98-e462-43ee-a665-f940eaff6ac8
      Kernel Version:             3.10.0-957.el7.x86_64
      OS Image:                   CentOS Linux 7 (Core)
      Operating System:           linux
      Architecture:               amd64
      Container Runtime Version:  docker://19.3.12
      Kubelet Version:            v1.18.0
      Kube-Proxy Version:         v1.18.0
    PodCIDR:                      10.244.1.0/24
    PodCIDRs:                     10.244.1.0/24
    Non-terminated Pods:          (9 in total)
      Namespace    Name                             CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
      ---------    ----                             ------------  ----------  ---------------  -------------  ---
      default      nginx-f89759699-qjjjb            0 (0%)        0 (0%)      0 (0%)           0 (0%)         10d
      default      web-6b9c78c94f-tqxvj             0 (0%)        0 (0%)      0 (0%)           0 (0%)         4d
      default      web-6b9c78c94f-zs9pk             0 (0%)        0 (0%)      0 (0%)           0 (0%)         4d
      default      web-k8s-n1                       0 (0%)        0 (0%)      0 (0%)           0 (0%)         2d12h
      default      web2-57b578c78c-kkh8p            500m (25%)    1 (50%)     0 (0%)           0 (0%)         3d23h
      default      web3-8chqf                       0 (0%)        0 (0%)      0 (0%)           0 (0%)         66m
      kube-system  calico-node-62gxk                250m (12%)    0 (0%)      0 (0%)           0 (0%)         16d
      kube-system  kube-proxy-t2xst                 0 (0%)        0 (0%)      0 (0%)           0 (0%)         17d
      kube-system  metrics-server-7875f8bf59-n2lr2  0 (0%)        0 (0%)      0 (0%)           0 (0%)         10d
    Allocated resources:
      (Total limits may be over 100 percent, i.e., overcommitted.)
      Resource           Requests    Limits
      --------           --------    ------
      cpu                750m (37%)  1 (50%)
      memory             0 (0%)      0 (0%)
      ephemeral-storage  0 (0%)      0 (0%)
      hugepages-1Gi      0 (0%)      0 (0%)
      hugepages-2Mi      0 (0%)      0 (0%)
    Events:              <none>

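Exceeding a node's CPU capacity: if a Pod requests more CPU than any node can allocate, the scheduler cannot place it and the Pod stays Pending.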
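The case above can be reproduced with a sketch like the following. It assumes every schedulable node has 2 allocatable CPUs, as k8s-n1 does in the output above. The Pod name and image are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cpu-overrequest-demo  # hypothetical name
spec:
  containers:
  - name: web
    image: nginx              # illustrative image
    resources:
      requests:
        cpu: "3"              # more than the 2 CPUs a node can allocate
```

Under that assumption the Pod should remain Pending, and `kubectl describe pod` should report an "Insufficient cpu" reason for the affected nodes.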

nodeSelector and nodeAffinity

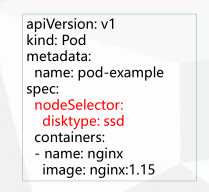

nodeSelector

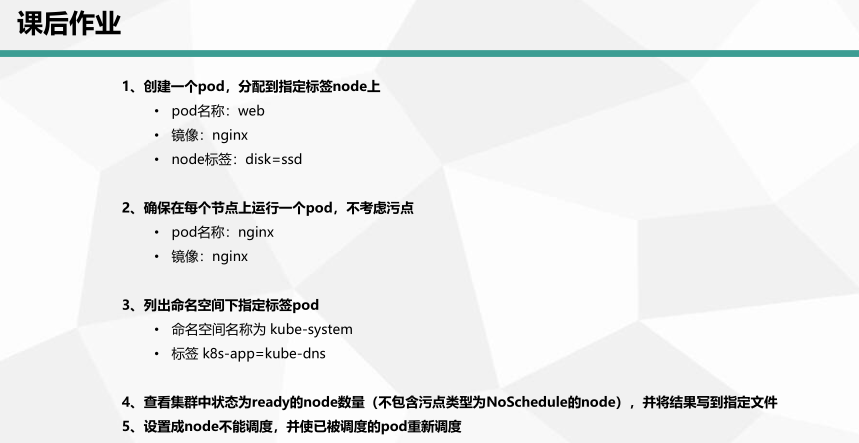

nodeSelector: schedules a Pod onto Nodes whose labels match.

Label a node:

kubectl label nodes [node] key=value

    [root@k8s-m1 chp5]# kubectl get node --show-labels
    NAME     STATUS   ROLES    AGE   VERSION   LABELS
    k8s-m1   Ready    master   17d   v1.18.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-m1,kubernetes.io/os=linux,node-role.kubernetes.io/master=
    k8s-n1   Ready    <none>   17d   v1.18.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssh,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-n1,kubernetes.io/os=linux
    k8s-n2   Ready    <none>   17d   v1.18.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-n2,kubernetes.io/os=linux
    [root@k8s-m1 chp5]# kubectl label nodes k8s-n1 disktype=ssd
    error: 'disktype' already has a value (ssh), and --overwrite is false
    [root@k8s-m1 chp5]# kubectl label nodes k8s-n1 disktype=ssd --overwrite
    node/k8s-n1 labeled
    [root@k8s-m1 chp5]# cat nodeSelector.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nodeselector
    spec:
      nodeSelector:
        disktype: "ssd"
      containers:
      - name: web
        image: lizhenliang/nginx-php
    [root@k8s-m1 chp5]# kubectl get pod -o wide |grep nodeselector
    nodeselector   0/1   Pending   0   26s   <none>   <none>   <none>   <none>

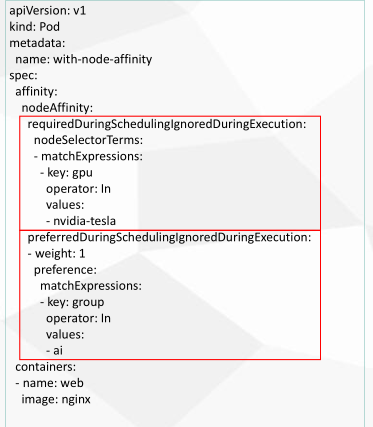

nodeAffinity

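nodeAffinity: node affinity is similar to nodeSelector. It constrains which nodes a Pod can be scheduled onto, based on the labels on those nodes.

Compared with nodeSelector:

- Matching supports richer logical combinations, not just exact string equality

- Rules can be hard or soft, rather than always being hard requirements

  - Hard (required): must be satisfied

  - Soft (preferred): the scheduler tries to satisfy it, but there is no guarantee

Operators: In, NotIn, Exists, DoesNotExist, Gt, Lt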
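The following manifest is a minimal sketch of how a hard and a soft rule combine in one Pod. The Pod name, image, and the `gpu` label key are assumptions made for illustration. The `disktype=ssd` label is the one applied to k8s-n1 earlier in this chapter.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nodeaffinity-demo     # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Hard rule: only nodes labelled disktype=ssd are candidates
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
      # Soft rule: among the candidates, prefer nodes that carry a "gpu" label
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1             # 1-100; a higher weight means a stronger preference
        preference:
          matchExpressions:
          - key: gpu          # hypothetical label key
            operator: Exists
  containers:
  - name: web
    image: nginx              # illustrative image
```

If no node satisfies the required rule, the Pod stays Pending. If no node satisfies the preferred rule, the Pod is still scheduled onto any node that passes the required one.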

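Assigning a node directly, taints, the DaemonSet controller, and analysing scheduling failures

Taint

Taints: keep Pods from being scheduled onto particular Nodes

Use cases:

- Dedicated nodes, for example nodes equipped with special hardware

- Taint-based eviction

Set a taint:

kubectl taint node [node] key=value:effect

where effect can be one of:

- NoSchedule: Pods must not be scheduled onto the node.

- PreferNoSchedule: the scheduler tries to avoid the node.

- NoExecute: new Pods are not scheduled onto the node, and Pods already running on it are evicted.

Remove a taint:

kubectl taint node [node] key=value:effect-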

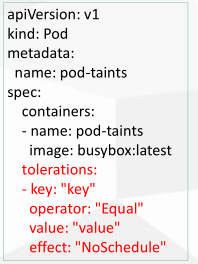

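Toleration

Toleration: allows a Pod to be scheduled onto a Node that has matching Taints. A toleration permits scheduling onto such a node but does not require it, so the Pod may still land on an untainted node.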

    [root@k8s-m1 chp5]# kubectl describe nodes|grep Taint
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Taints:             gpu=yes:NoSchedule
    Taints:             <none>

    [root@k8s-m1 chp5]# cat toleration.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-toleration
    spec:
      selector:
        matchLabels:
          project: demo
          app: java
      template:
        metadata:
          labels:
            project: demo
            app: java
        spec:
          tolerations:
          - key: gpu
            operator: Equal
            value: "yes"
            effect: "NoSchedule"
          containers:
          - name: web3
            image: lizhenliang/java-demo
            ports:
            - containerPort: 8080

    [root@k8s-m1 chp5]# kubectl apply -f toleration.yml
    deployment.apps/web-toleration created
    [root@k8s-m1 chp5]# kubectl get pod -o wide |grep web-toleration
    web-toleration-5b867ffcf7-sjs9v   1/1   Running   0   32s   10.244.111.221   k8s-n2   <none>   <none>
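The Deployment above tolerates one exact key/value pair. As a sketch of two other forms a toleration can take, the Pod below uses the `Exists` operator and a `NoExecute` toleration with `tolerationSeconds`. The Pod name and image are hypothetical. `node.kubernetes.io/not-ready` is a taint that Kubernetes itself applies to nodes that are not ready.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo       # hypothetical name
spec:
  tolerations:
  # Exists: tolerate any taint whose key is "gpu", whatever its value
  - key: gpu
    operator: Exists
    effect: NoSchedule
  # NoExecute with tolerationSeconds: remain on a not-ready node for
  # up to 300 seconds before being evicted
  - key: node.kubernetes.io/not-ready
    operator: Exists
    effect: NoExecute
    tolerationSeconds: 300
  containers:
  - name: web
    image: nginx              # illustrative image
```

The second toleration relates to the taint-based eviction use case: it bounds how long the Pod stays on the node after the taint appears, instead of evicting it immediately.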

nodeName

nodeName: places the Pod on the specified Node directly, bypassing the scheduler

    [root@k8s-m1 chp5]# cat nodeName-pod.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-nodename
    spec:
      selector:
        matchLabels:
          project: demo
          app: java
      template:
        metadata:
          labels:
            project: demo
            app: java
        spec:
          nodeName: k8s-n2
          containers:
          - name: web-nodename
            image: lizhenliang/java-demo
            ports:
            - containerPort: 8080

    [root@k8s-m1 chp5]# kubectl get pods -o wide |grep web-nodename
    web-nodename-6646f87bf5-d52rj   1/1   Running   0   77s   10.244.111.219   k8s-n2   <none>   <none>

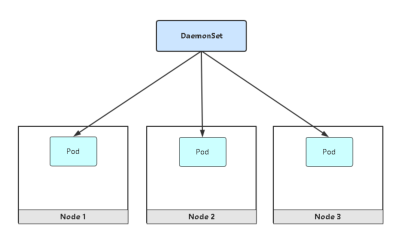

DaemonSet

What a DaemonSet does

- Runs one Pod on every Node

- Nodes that join the cluster later automatically get a Pod as well

Use cases: network plugins, agents

    [root@k8s-m1 ~]# kubectl describe node|grep Taint
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Taints:             <none>
    Taints:             gpu=yes:NoSchedule
    [root@k8s-m1 ~]# kubectl taint node k8s-n2 gpu-
    node/k8s-n2 untainted
    [root@k8s-m1 ~]# kubectl describe node|grep Taint
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Taints:             <none>
    Taints:             <none>
    [root@k8s-m1 ~]# kubectl taint node k8s-n2 gpu=yes:NoSchedule
    node/k8s-n2 tainted

    [root@k8s-m1 chp5]# cat daemon-toleration.yml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: web3
    spec:
      selector:
        matchLabels:
          project: demo
          app: java
      template:
        metadata:
          labels:
            project: demo
            app: java
        spec:
          tolerations:
          - key: gpu
            operator: Equal
            value: "yes"
            effect: "NoSchedule"
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: web3
            image: lizhenliang/java-demo
            ports:
            - containerPort: 8080

    [root@k8s-m1 chp5]# kubectl apply -f daemon-toleration.yml
    daemonset.apps/web3 created
    [root@k8s-m1 chp5]# kubectl get pod -o wide|grep web3
    web3-8chqf   1/1   Running   0   34m   10.244.215.86    k8s-n1   <none>   <none>
    web3-sh7dq   1/1   Running   0   34m   10.244.111.218   k8s-n2   <none>   <none>
    web3-x87zb   1/1   Running   0   34m   10.244.42.144    k8s-m1   <none>   <none>

    Cluster Management Commands:
      certificate   Modify certificate resources.
      cluster-info  Display cluster info
      top           Display Resource (CPU/Memory/Storage) usage.
      cordon        Mark node as unschedulable
      uncordon      Mark node as schedulable
      drain         Drain node in preparation for maintenance
      taint         Update the taints on one or more nodes

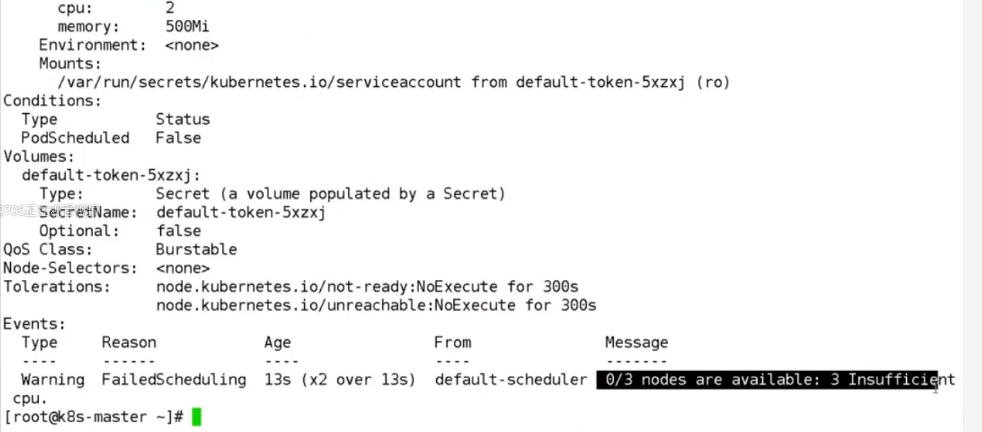

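Analysing scheduling failures

Check the scheduling result:

kubectl get pod <NAME> -o wide

Check why scheduling failed: kubectl describe pod <NAME>

- Insufficient CPU or memory on the nodes

- A node has a taint that the Pod does not tolerate

- No node label matches

Reasons a Pod may be in the Pending state:

1. The image is still being downloaded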

2. The Pod has not been scheduled onto any node (typical scheduler messages follow)

Insufficient CPU:

0/3 nodes are available: 3 Insufficient cpu.

No node with a matching label:

0/3 nodes are available: 3 node(s) didn't match node selector

No toleration for the taints:

0/3 nodes are available: 1 node(s) had taint {disktype: ssd}, that the pod didn't tolerate, 1 node(s) had taint {gpu: yes}, that the pod didn't tolerate, 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.

This article comes from cnblogs (博客园), author: 元贞. When reposting, please cite the original link: https://www.cnblogs.com/yuleicoder/p/13461784.html
