Chapter 3: Kubernetes Monitoring and Log Management

1. Checking cluster resource status

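- Overall cluster status: kubectl cluster-info
- More detailed cluster information: kubectl cluster-info dump
- Resource details: kubectl describe <resource> <name>
- Watch a resource: kubectl get pod <Pod name> --watch

-w is the short form of --watch: kubectl keeps watching the resource and prints each change as it happens.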
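In a script, `--watch` is awkward because it never exits on its own. The sketch below polls instead. It uses a hypothetical helper named `wait_for_running` that reads `.status.phase` through `-o jsonpath`. Note that the `Running` phase only means the containers have started; it does not mean they pass their readiness checks.

```shell
# wait_for_running: hypothetical helper that polls a pod's phase until it is
# "Running" or the attempt budget runs out (one attempt every 2 seconds).
# Usage: wait_for_running <pod> [namespace] [attempts]
wait_for_running() {
  local pod="$1" ns="${2:-default}" attempts="${3:-30}" i=0 phase=""
  while [ "$i" -lt "$attempts" ]; do
    # .status.phase is one of Pending, Running, Succeeded, Failed, Unknown
    phase=$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Running" ]; then
      echo "$pod is Running"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "$pod not Running after $attempts attempts (last phase: ${phase:-unknown})" >&2
  return 1
}
```

If you need readiness rather than phase, kubectl has a built-in alternative: `kubectl wait --for=condition=Ready pod/<Pod name> --timeout=60s`.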

kubectl api-resources

```
[root@k8s-m1 ~]# kubectl api-resources
```

| NAME | SHORTNAMES | APIGROUP | NAMESPACED | KIND |
|------|------------|----------|------------|------|
| bindings | | | true | Binding |
| componentstatuses | cs | | false | ComponentStatus |
| configmaps | cm | | true | ConfigMap |
| endpoints | ep | | true | Endpoints |
| events | ev | | true | Event |
| limitranges | limits | | true | LimitRange |
| namespaces | ns | | false | Namespace |
| nodes | no | | false | Node |
| persistentvolumeclaims | pvc | | true | PersistentVolumeClaim |
| persistentvolumes | pv | | false | PersistentVolume |
| pods | po | | true | Pod |
| podtemplates | | | true | PodTemplate |
| replicationcontrollers | rc | | true | ReplicationController |
| resourcequotas | quota | | true | ResourceQuota |
| secrets | | | true | Secret |
| serviceaccounts | sa | | true | ServiceAccount |
| services | svc | | true | Service |
| mutatingwebhookconfigurations | | admissionregistration.k8s.io | false | MutatingWebhookConfiguration |
| validatingwebhookconfigurations | | admissionregistration.k8s.io | false | ValidatingWebhookConfiguration |
| customresourcedefinitions | crd,crds | apiextensions.k8s.io | false | CustomResourceDefinition |
| apiservices | | apiregistration.k8s.io | false | APIService |
| controllerrevisions | | apps | true | ControllerRevision |
| daemonsets | ds | apps | true | DaemonSet |
| deployments | deploy | apps | true | Deployment |
| replicasets | rs | apps | true | ReplicaSet |
| statefulsets | sts | apps | true | StatefulSet |
| tokenreviews | | authentication.k8s.io | false | TokenReview |
| localsubjectaccessreviews | | authorization.k8s.io | true | LocalSubjectAccessReview |
| selfsubjectaccessreviews | | authorization.k8s.io | false | SelfSubjectAccessReview |
| selfsubjectrulesreviews | | authorization.k8s.io | false | SelfSubjectRulesReview |
| subjectaccessreviews | | authorization.k8s.io | false | SubjectAccessReview |
| horizontalpodautoscalers | hpa | autoscaling | true | HorizontalPodAutoscaler |
| cronjobs | cj | batch | true | CronJob |
| jobs | | batch | true | Job |
| certificatesigningrequests | csr | certificates.k8s.io | false | CertificateSigningRequest |
| leases | | coordination.k8s.io | true | Lease |
| bgpconfigurations | | crd.projectcalico.org | false | BGPConfiguration |
| bgppeers | | crd.projectcalico.org | false | BGPPeer |
| blockaffinities | | crd.projectcalico.org | false | BlockAffinity |
| clusterinformations | | crd.projectcalico.org | false | ClusterInformation |
| felixconfigurations | | crd.projectcalico.org | false | FelixConfiguration |
| globalnetworkpolicies | | crd.projectcalico.org | false | GlobalNetworkPolicy |
| globalnetworksets | | crd.projectcalico.org | false | GlobalNetworkSet |
| hostendpoints | | crd.projectcalico.org | false | HostEndpoint |
| ipamblocks | | crd.projectcalico.org | false | IPAMBlock |
| ipamconfigs | | crd.projectcalico.org | false | IPAMConfig |
| ipamhandles | | crd.projectcalico.org | false | IPAMHandle |
| ippools | | crd.projectcalico.org | false | IPPool |
| kubecontrollersconfigurations | | crd.projectcalico.org | false | KubeControllersConfiguration |
| networkpolicies | | crd.projectcalico.org | true | NetworkPolicy |
| networksets | | crd.projectcalico.org | true | NetworkSet |
| endpointslices | | discovery.k8s.io | true | EndpointSlice |
| events | ev | events.k8s.io | true | Event |
| ingresses | ing | extensions | true | Ingress |
| nodes | | metrics.k8s.io | false | NodeMetrics |
| pods | | metrics.k8s.io | true | PodMetrics |
| ingressclasses | | networking.k8s.io | false | IngressClass |
| ingresses | ing | networking.k8s.io | true | Ingress |
| networkpolicies | netpol | networking.k8s.io | true | NetworkPolicy |
| runtimeclasses | | node.k8s.io | false | RuntimeClass |
| poddisruptionbudgets | pdb | policy | true | PodDisruptionBudget |
| podsecuritypolicies | psp | policy | false | PodSecurityPolicy |
| clusterrolebindings | | rbac.authorization.k8s.io | false | ClusterRoleBinding |
| clusterroles | | rbac.authorization.k8s.io | false | ClusterRole |
| rolebindings | | rbac.authorization.k8s.io | true | RoleBinding |
| roles | | rbac.authorization.k8s.io | true | Role |
| priorityclasses | pc | scheduling.k8s.io | false | PriorityClass |
| csidrivers | | storage.k8s.io | false | CSIDriver |
| csinodes | | storage.k8s.io | false | CSINode |
| storageclasses | sc | storage.k8s.io | false | StorageClass |
| volumeattachments | | storage.k8s.io | false | VolumeAttachment |

NAMESPACED indicates whether the resource is namespace-scoped, i.e. whether it can be isolated by namespace.
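NAMESPACED is always the second-to-last column, because KIND is always last. That makes it reliable to read even where SHORTNAMES or APIGROUP is blank. The sketch below filters saved `kubectl api-resources` output; `namespaced_resources` is a hypothetical helper name. On a live cluster, `kubectl api-resources --namespaced=true` applies the same filter directly.

```shell
# namespaced_resources: hypothetical helper. Reads `kubectl api-resources`
# output on stdin and prints the NAME of every namespace-scoped resource.
# NAMESPACED is always the second-to-last field and KIND the last, even when
# SHORTNAMES or APIGROUP is blank. sort -u folds names served by several API
# groups (events, ingresses, pods, ...) into a single line.
namespaced_resources() {
  awk 'NR > 1 && $(NF-1) == "true" { print $1 }' | sort -u
}
```

Usage: `kubectl api-resources | namespaced_resources`.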

Viewing pods

```
[root@k8s-m1 ~]# kubectl get pod
NAME                    READY   STATUS    RESTARTS   AGE
nginx-f89759699-qjjjb   1/1     Running   0          9h
[root@k8s-m1 ~]# kubectl get pods
NAME                    READY   STATUS    RESTARTS   AGE
nginx-f89759699-qjjjb   1/1     Running   0          9h
```
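With many pods, scanning the STATUS column by eye gets tedious. The sketch below uses a hypothetical `not_running_pods` helper that keeps only pods whose STATUS is not `Running`. It assumes the default column layout shown above (NAME READY STATUS ...), so STATUS is the third field.

```shell
# not_running_pods: hypothetical helper printing "NAME STATUS" for every pod
# whose STATUS column is not "Running". Extra flags (e.g. -n kube-system) are
# passed through to kubectl. Do not use -A / --all-namespaces here: it adds a
# NAMESPACE column and shifts STATUS to the fourth field.
not_running_pods() {
  kubectl get pods --no-headers "$@" | awk '$3 != "Running" { print $1, $3 }'
}
```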

```
[root@k8s-m1 ~]# kubectl describe pod nginx-f89759699-qjjjb
Name:         nginx-f89759699-qjjjb
Namespace:    default
Priority:     0
Node:         k8s-n1/10.0.0.24
Start Time:   Thu, 30 Jul 2020 21:29:42 +0800
Labels:       app=nginx
              pod-template-hash=f89759699
Annotations:  cni.projectcalico.org/podIP: 10.244.215.73/32
              cni.projectcalico.org/podIPs: 10.244.215.73/32
Status:       Running
IP:           10.244.215.73
IPs:
  IP:           10.244.215.73
Controlled By:  ReplicaSet/nginx-f89759699
Containers:
  nginx:
    Container ID:   docker://5cad37326a2ec8b9ac91e83910f664f0587723c6c863222eae702d94755d5b99
    Image:          nginx
    Image ID:       docker-pullable://nginx@sha256:0e188877aa60537d1a1c6484b8c3929cfe09988145327ee47e8e91ddf6f76f5c
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Thu, 30 Jul 2020 21:30:09 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-dvcjp (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-dvcjp:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-dvcjp
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason            Age                 From               Message
  ----     ------            ----                ----               -------
  Warning  FailedScheduling  15m (x353 over 9h)  default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) had taint {node.kubernetes.io/unreachable: }, that the pod didn't tolerate.
  Normal   Scheduled         9m57s               default-scheduler  Successfully assigned default/nginx-f89759699-qjjjb to k8s-n1
  Normal   Pulling           9m56s               kubelet, k8s-n1    Pulling image "nginx"
  Normal   Pulled            9m30s               kubelet, k8s-n1    Successfully pulled image "nginx"
  Normal   Created           9m30s               kubelet, k8s-n1    Created container nginx
  Normal   Started           9m30s               kubelet, k8s-n1    Started container nginx
```
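The Events section is usually the first place to look when a pod is stuck. Here, the FailedScheduling warnings came from node taints: the master taint and `node.kubernetes.io/unreachable`. Events can also be listed on their own with a field selector; the sketch below uses a hypothetical `pod_warnings` helper. Keep in mind that the API server keeps events only for a limited time (one hour by default).

```shell
# pod_warnings: hypothetical helper listing only the Warning events of one pod,
# using the involvedObject.name and type field selectors on events.
# Usage: pod_warnings <pod> [namespace]
pod_warnings() {
  kubectl get events -n "${2:-default}" \
    --field-selector "involvedObject.name=$1,type=Warning"
}
```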

```
# Check component status
[root@k8s-m1 ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
```

```
# List nodes
[root@k8s-m1 ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE     VERSION
k8s-m1   Ready    master   6d23h   v1.18.0
k8s-n1   Ready    <none>   6d23h   v1.18.0
k8s-n2   Ready    <none>   6d23h   v1.18.0
```
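The two checks above can be combined into a single health check for scripts or cron jobs. The sketch below uses the hypothetical name `cluster_health`. It treats any node STATUS that does not start with `Ready` (for example `NotReady` or `Unknown`) as a problem, while `Ready,SchedulingDisabled` on a cordoned node still passes.

```shell
# cluster_health: hypothetical helper combining the node and component checks.
# Prints every node whose STATUS does not start with "Ready" and every
# component whose STATUS is not "Healthy"; returns 1 if anything was printed.
cluster_health() {
  local bad=0
  kubectl get nodes --no-headers |
    awk '$2 !~ /^Ready/ { print "node", $1, $2; f = 1 } END { exit f }' || bad=1
  kubectl get cs --no-headers |
    awk '$2 != "Healthy" { print "component", $1, $2; f = 1 } END { exit f }' || bad=1
  return "$bad"
}
```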

2. Monitoring cluster resource utilization

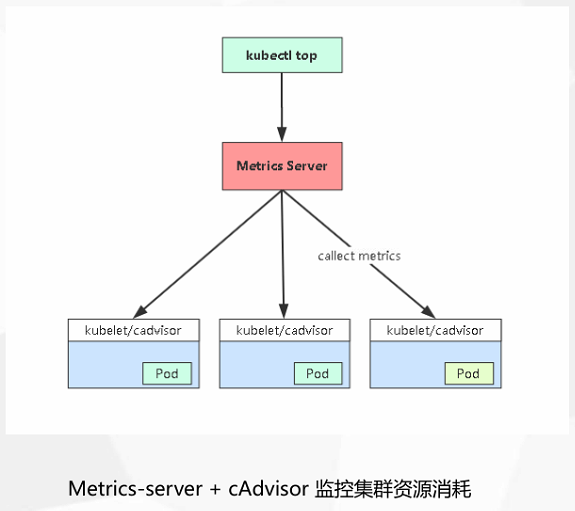

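Metrics Server is a cluster-wide aggregator of resource usage data, deployed in the cluster as an application. It collects metrics from the Kubelet API on each node and is registered with the API server on the master through the Kubernetes aggregation layer.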

By default, kubectl top node returns an error; Metrics Server must be installed first.

Metrics Server architecture

Deploying Metrics Server

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml

vi components.yaml

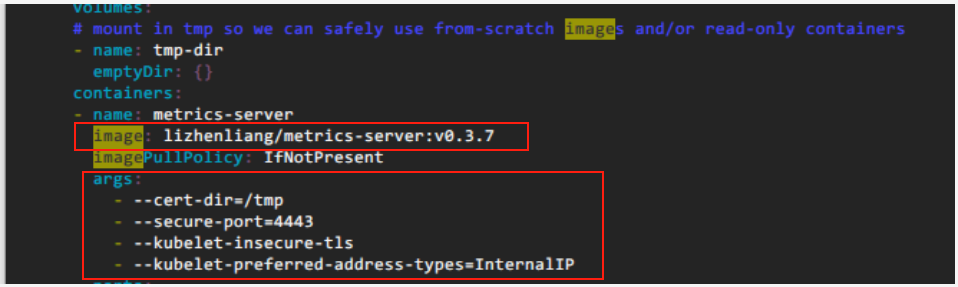

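The Metrics Server image is hosted on a registry outside China, and the default pull tends to hang. Mirror the image to Docker Hub (or another registry you can reach) and change the image address in components.yaml accordingly.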

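Add the following arguments to the metrics-server container:

```
- --kubelet-insecure-tls                         # allow insecure TLS: do not verify the kubelet's serving certificate
- --kubelet-preferred-address-types=InternalIP   # connect to the kubelet by the node's InternalIP address
```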

The file after these changes is shown in the screenshot.

Project page: https://github.com/kubernetes-sigs/metrics-server

On 1.19+, applying the YAML file prints a warning:

```
Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
```

To avoid it, switch the APIService to the v1 API:

```
sed 's#apiregistration.k8s.io/v1beta1#apiregistration.k8s.io/v1#g' components.yaml -i
```
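The manual edits above are easy to get wrong. It can help to script the apiVersion change and then confirm that both kubelet flags are really in the file before applying it. The sketch below does that under those assumptions. `prepare_components` is a hypothetical helper; it does not add the flags itself, it only checks for them.

```shell
# prepare_components: hypothetical helper bundling the edits described above.
# 1. Switch the APIService from apiregistration.k8s.io/v1beta1 to .../v1
#    (-i.bak keeps a backup copy and is accepted by both GNU and BSD sed).
# 2. Check that both kubelet flags were actually added (it does not add them).
# Usage: prepare_components [file]
prepare_components() {
  local file="${1:-components.yaml}" flag
  sed -i.bak 's#apiregistration.k8s.io/v1beta1#apiregistration.k8s.io/v1#g' "$file" || return 1
  for flag in --kubelet-insecure-tls --kubelet-preferred-address-types=InternalIP; do
    if ! grep -q -- "$flag" "$file"; then
      echo "missing $flag in $file" >&2
      return 1
    fi
  done
}
```

Usage: `prepare_components components.yaml && kubectl apply -f components.yaml`.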

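- heapster: the older component used to aggregate resource utilization across all nodes (superseded by metrics-server)
- metrics-server: supplies the resource metrics used by HPA (Horizontal Pod Autoscaler, which scales the number of pod replicas) and VPA (Vertical Pod Autoscaler, which adjusts a pod's CPU and memory requests)
- --kubelet-preferred-address-types=InternalIP: connect to the kubelet using the node IP
- --kubelet-insecure-tls: skip TLS verification
- kubectl get apiservice: view the registrations in the API server's aggregation layer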
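After `kubectl apply -f components.yaml`, the APIService `v1beta1.metrics.k8s.io` should show AVAILABLE as True in `kubectl get apiservice`. Until then, `kubectl top` keeps failing. The sketch below uses a hypothetical `metrics_api_available` helper that reads that condition with a JSONPath filter, which is handy in a wait loop after deployment.

```shell
# metrics_api_available: hypothetical helper. Reads the "Available" condition
# of the APIService registered by metrics-server and succeeds only if "True".
metrics_api_available() {
  local status
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
  [ "$status" = "True" ]
}
```

Usage: `until metrics_api_available; do sleep 5; done; kubectl top node`.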

Workflow: kubectl top -> apiserver -> metrics-server pod -> kubelet (cAdvisor) -> cgroups

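API server aggregation layer: dynamic registration, secure proxying, easy onboarding of third-party services, and a single unified entry point.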
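You can reproduce the first hops of that workflow by hand. `kubectl get --raw` sends a request straight to the API server, and the aggregation layer proxies paths under `/apis/metrics.k8s.io/` to metrics-server. The sketch below uses a hypothetical `raw_metrics` helper; the `v1beta1` version matches the APIService registered by the v0.3.7 manifest used above.

```shell
# raw_metrics: hypothetical helper querying the aggregated Metrics API directly,
# i.e. the data that kubectl top displays (JSON output).
# No argument: node metrics. With a namespace: pod metrics in that namespace.
raw_metrics() {
  if [ -z "$1" ]; then
    kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
  else
    kubectl get --raw "/apis/metrics.k8s.io/v1beta1/namespaces/$1/pods"
  fi
}
```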

- View node resource usage: kubectl top node <node name>
- View pod resource usage: kubectl top pod <pod name>

```
[root@k8s-m1 ~]# kubectl top node
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-m1   108m         5%     1734Mi          45%
k8s-n1   47m          2%     480Mi           12%
k8s-n2   45m          2%     414Mi           10%
[root@k8s-m1 ~]# kubectl top pod
NAME                    CPU(cores)   MEMORY(bytes)
nginx-f89759699-qjjjb   0m           3Mi
```
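The `kubectl top` output is plain columns, so simple thresholds are easy to script. The sketch below uses a hypothetical `nodes_over_mem` helper that reads `kubectl top node` output on stdin and prints nodes above a MEMORY% threshold. With the output above and a threshold of 40, only k8s-m1 (45%) would be reported.

```shell
# nodes_over_mem: hypothetical helper. Reads `kubectl top node` output on
# stdin and prints "NAME MEMORY%" for nodes above the threshold (default 80).
nodes_over_mem() {
  awk -v limit="${1:-80}" '
    NR > 1 {
      pct = $5              # MEMORY% column, e.g. "45%"
      sub(/%/, "", pct)     # strip the percent sign
      if (pct + 0 > limit + 0) print $1, $5
    }'
}
```

Usage: `kubectl top node | nodes_over_mem 40`.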

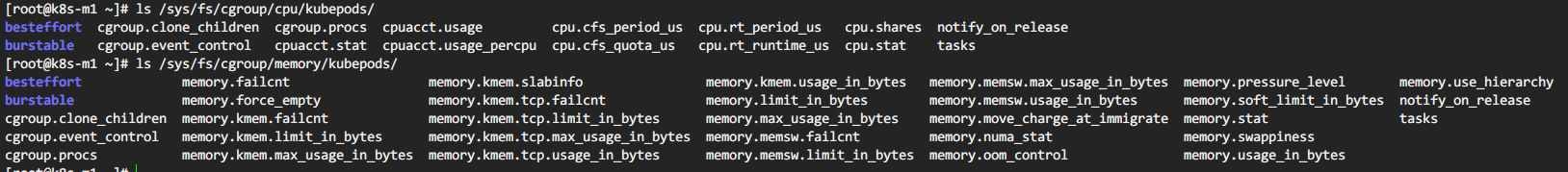

Resource limits on pods, as enforced by the underlying system

3. Managing K8s component logs

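Logs in a cluster come from two sources:

- Logs of the K8S system components
- Logs of applications deployed in the K8S cluster, written to either
  - standard output, or
  - log files

Where to read component logs:

- Components managed by the systemd daemon: journalctl -u kubelet
- Components deployed as pods: kubectl logs <component name> -n kube-system
- System log: /var/log/messages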
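Which command to use depends on how the component runs. The sketch below encodes that split in a hypothetical `component_logs` helper. It assumes a kubeadm-style cluster, where kubelet and Docker run under systemd and the control-plane components (such as `etcd-k8s-m1`) run as static pods in `kube-system`.

```shell
# component_logs: hypothetical helper choosing the log source per component.
# Assumes kubelet and docker run as systemd units, while control-plane
# components run as static pods in kube-system (kubeadm layout).
# Usage: component_logs <kubelet|docker|pod name> [lines]
component_logs() {
  local lines="${2:-100}"
  case "$1" in
    kubelet|docker) journalctl -u "$1" --no-pager -n "$lines" ;;
    *) kubectl logs "$1" -n kube-system --tail="$lines" ;;
  esac
}
```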

View a component's logs:

```
[root@k8s-m1 chp3]# kubectl logs etcd-k8s-m1 -n kube-system
```

Save the logs to a file:

```
[root@k8s-m1 ~]# kubectl logs etcd-k8s-m1 -n kube-system > etcd-k8s-m1.log
```

4. Managing K8s application logs

Standard output log path (Docker runtime, json-file log driver): /var/lib/docker/containers/<container-id>/<container-id>-json.log
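That file exists only on the node running the pod. The sketch below locates it using a hypothetical `container_log_path` helper. It assumes the Docker runtime used in this cluster, where the container ID appears in pod status as `docker://<id>` (as in the describe output earlier). It also assumes a machine where both `kubectl` and `docker` work. `docker inspect` exposes the path in its `LogPath` field.

```shell
# container_log_path: hypothetical helper. Reads the first container's ID from
# the pod status ("docker://<id>" with the Docker runtime), strips the scheme
# prefix and asks Docker for that container's json-file log path.
# Usage: container_log_path <pod> [namespace]
container_log_path() {
  local cid
  cid=$(kubectl get pod "$1" -n "${2:-default}" \
    -o jsonpath='{.status.containerStatuses[0].containerID}') || return 1
  docker inspect --format '{{.LogPath}}' "${cid#docker://}"
}
```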

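View a container's standard output logs:

```
kubectl logs <Pod name>
kubectl logs -f <Pod name>                       # -f: follow the log stream
kubectl logs -f <Pod name> -c <container name>   # -c: pick one container in a multi-container pod
```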
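For a multi-container pod, `-c` shows one container at a time. The sketch below uses a hypothetical `all_container_logs` helper that walks `.spec.containers[*].name` and prefixes each line with its container name. kubectl logs also accepts `--all-containers=true`, which prints every container's logs in one call.

```shell
# all_container_logs: hypothetical helper printing the logs of every container
# in a pod, each line prefixed with "[container-name] ".
# Usage: all_container_logs <pod> [namespace]
all_container_logs() {
  local pod="$1" ns="${2:-default}" c
  for c in $(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.containers[*].name}'); do
    kubectl logs "$pod" -n "$ns" -c "$c" | sed "s/^/[$c] /"
  done
}
```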

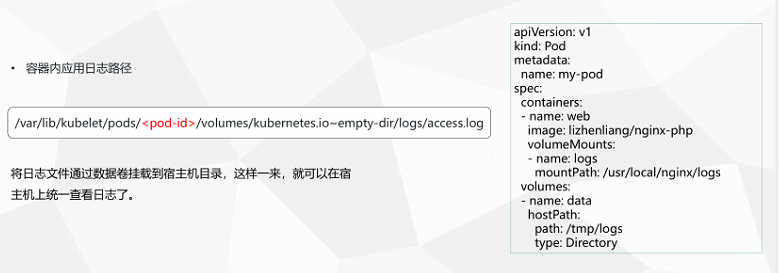

Mount the log files to a host directory through a volume. That way, logs can be viewed in one place on the host.

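- 1. DaemonSet: deploy a log-collection pod on every node that collects from the two directories described above (the container stdout directory and the host log directory).

- 2. Sidecar: deploy a log-collection container inside the pod that shares the application container's log directory through a volume.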

Exercises

Mounting logs to the host

```
[root@k8s-m1 chp3]# cat pod2.yml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: web
    image: lizhenliang/nginx-php
    volumeMounts:
    - name: logs
      mountPath: /usr/local/nginx/logs
  volumes:
  - name: logs
    hostPath:
      path: /tmp/logs
      type: Directory
```

```
[root@k8s-n2 ~]# tail -f /tmp/logs/access.log
10.244.42.128 - - [30/Jul/2020:23:18:07 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"
10.244.42.128 - - [30/Jul/2020:23:18:08 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"
10.244.42.128 - - [30/Jul/2020:23:18:09 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"
10.244.42.128 - - [30/Jul/2020:23:18:10 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"
```
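The tail above runs on k8s-n2 because that is where the pod was scheduled, and a hostPath volume lives only on that node. Also, `type: Directory` requires /tmp/logs to already exist on the node; `type: DirectoryOrCreate` creates it if it is missing. The sketch below uses a hypothetical `tail_pod_hostpath_log` helper that looks up the node and tails the file there. It assumes passwordless SSH to nodes by their node names.

```shell
# tail_pod_hostpath_log: hypothetical helper. Looks up the node a pod was
# scheduled on and tails a file there over SSH. Assumes passwordless SSH to
# each node under its node name (e.g. k8s-n2).
# Usage: tail_pod_hostpath_log <pod> <file on the node>
tail_pod_hostpath_log() {
  local node
  node=$(kubectl get pod "$1" -o jsonpath='{.spec.nodeName}') || return 1
  if [ -z "$node" ]; then
    echo "pod $1 has not been scheduled yet" >&2
    return 1
  fi
  ssh "$node" tail -f "$2"
}
```

Usage: `tail_pod_hostpath_log my-pod /tmp/logs/access.log`.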

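Putting logs in a kubelet-managed volume (emptyDir, stored on the node under /var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~empty-dir/<volume-name>)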

```
[root@k8s-m1 chp3]# cat pod-kube-v.yml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod-1
spec:
  containers:
  - name: web
    image: lizhenliang/nginx-php
    volumeMounts:
    - name: logs
      mountPath: /usr/local/nginx/logs
  volumes:
  - name: logs
    emptyDir: {}
```
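This emptyDir is the building block of the sidecar approach described earlier. A second container mounts the same volume and turns the log file into its own standard output, which `kubectl logs` can then read. A minimal sketch follows; it assumes a `busybox` image for the hypothetical `log-agent` container and a hypothetical pod name `my-pod-sidecar`. A real setup would usually run a log shipper instead of `tail`.

```shell
# apply_sidecar_pod: hypothetical helper applying a variant of the emptyDir pod
# above with a second "log-agent" container that tails the shared access log
# to its own stdout. busybox and the pod name are assumptions for illustration.
apply_sidecar_pod() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: my-pod-sidecar
spec:
  containers:
  - name: web
    image: lizhenliang/nginx-php
    volumeMounts:
    - name: logs
      mountPath: /usr/local/nginx/logs
  - name: log-agent
    image: busybox
    # tail -F keeps retrying until the log file appears
    command: ["sh", "-c", "tail -F /usr/local/nginx/logs/access.log"]
    volumeMounts:
    - name: logs
      mountPath: /usr/local/nginx/logs
  volumes:
  - name: logs
    emptyDir: {}
EOF
}
```

Usage: `apply_sidecar_pod`, then `kubectl logs -f my-pod-sidecar -c log-agent`.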

1. Check a pod's logs and write the lines containing errors to a given file
   - Pod name: web
   - File: /opt/web

```
kubectl run web --image=nginx -n cka
kubectl get pod -n cka -o wide
kubectl logs web -n cka | grep "\[error\]"
kubectl logs web -n cka | grep "\[error\]" > /opt/web.log
```

2. Among pods with a given label, find the one using the most CPU and record it to a given file
   - Label: app=web
   - File: /opt/cpu

```
kubectl run web1 --image=nginx -l app=web -n cka
kubectl run web2 --image=nginx -l app=web -n cka
kubectl run web3 --image=nginx -l app=web -n cka
kubectl top pod -n cka -l app=web --sort-by=cpu > /opt/cpu.log
```
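Exercise 2 asks for the busiest pod, while the last command saves the whole list. The sketch below uses a hypothetical `top_cpu_pod` helper that sorts the CPU(cores) column itself and keeps only the first name. It relies on kubectl top reporting CPU in millicores (for example `12m`), which `sort -n` compares by the leading number.

```shell
# top_cpu_pod: hypothetical helper printing only the name of the pod with the
# highest CPU usage among pods matching a label selector in a namespace.
# kubectl top reports CPU in millicores ("12m"); sort -n compares the leading
# number, so the column can be sorted without converting units.
# Usage: top_cpu_pod <namespace> <label selector>
top_cpu_pod() {
  # drop the header row, sort by CPU(cores) descending, keep the first name
  kubectl top pod -n "$1" -l "$2" | tail -n +2 | sort -k2,2nr | awk 'NR == 1 { print $1 }'
}
```

Usage: `top_cpu_pod cka app=web > /opt/cpu`.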

This article is from cnblogs (博客园). Author: 元贞. When reposting, please cite the original link: https://www.cnblogs.com/yuleicoder/p/13403927.html

Zhejiang Public Security Network Registration No. 33010602011771 (浙公网安备 33010602011771号)
