Spark2.1.0编译

1.下载spark源码包

http://spark.apache.org/downloads.html

2.安装Scala与maven,解压spark源码包

安装Scala:

tar zxf scala-2.11.8.tar

修改vim /etc/profile

export SCALA_HOME=/usr/scala/scala-2.11.8

export PATH=$PATH:$SCALA_HOME/bin

安装maven

tar zxf apache-maven-3.3.9.tar

修改vim /etc/profile

export MAVEN_HOME=/usr/maven/apache-maven-3.3.9

export PATH=${MAVEN_HOME}/bin:${PATH}

解压:

cd /opt/spark

tar zxf spark-2.1.0.tgz

3.maven编译spark

(1)添加内存

export MAVEN_OPTS="-Xmx8g -XX:MaxPermSize=512M -XX:ReservedCodeCacheSize=2048M"

(2)修改spark的pom.xml文件中央仓库

CDH的中央仓库https://repository.cloudera.com/content/repositories/releases/

阿里云的中央仓库http://maven.aliyun.com/nexus/content/groups/public/

(3)在spark的pom.xml文件修改hadoop版本

hadoop-2.6.0

(4)maven编译

mvn -Phadoop-2.6 -Dhadoop.version=2.6.0-CDH5.10.0 -Pyarn -Phive -Phive-thriftserver -DskipTests -T 4 -Uclean package

4.make-distribution.sh打包spark

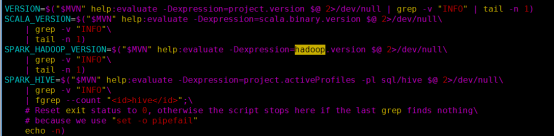

(1)注释make-distribution.sh中maven部分

vim /opt/spark/spark-2.1.0/dev/make-distribution.sh

(2)添加版本号

VERSION=2.1.0

SCALA_VERSION=2.11.8

SPARK_HADOOP_VERSION=2.6.0-CDH5.10.0

SPARK_HIVE=1.2.1

(3)执行make-distribution.sh命令

./make-distribution.sh --tgz

(4)打包成功

spark-2.1.O-bin-2.6.0-CDH5.10.0.tgz