python爬取河北省疫情通报

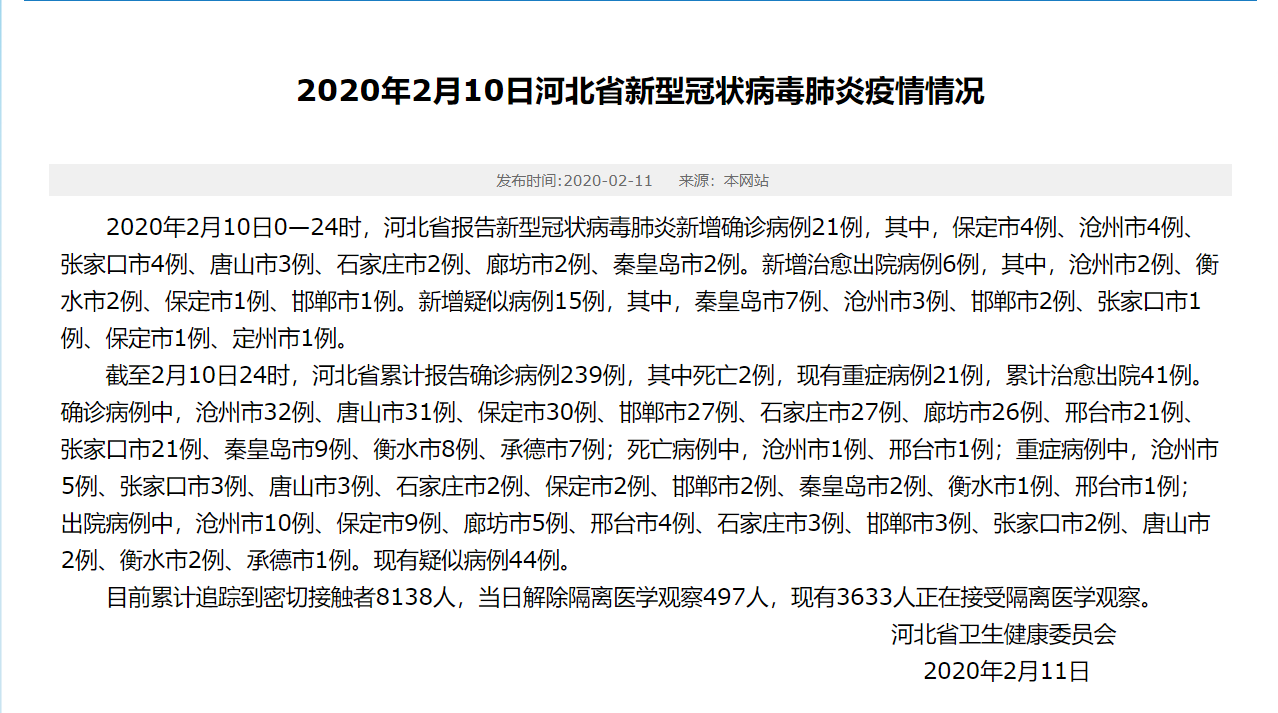

河北省卫生健康委员会关于疫情的通报格式一般为如下格式:因此可以通过改变爬取地址去爬取所有疫情数据。

代码如下:

from lxml import etree import re import requests def info(url): strhtml = requests.get(url) # Get方式获取网页数据 tree = etree.HTML(strhtml.text) text=tree.xpath('//p//text()') text[0] = re.sub(r'\u3000', '', text[0]) date=re.findall(r"(.+?日)", text[0]) print("时间",date) xin_que_num=re.findall(r"新增确诊病例(.+?例)", text[0]) mid = text[0].split("其中", 1)[1] num=len(mid.split("其中", 1)) if num>1: mid=mid.split("其中", 1)[0] xin_shi_num=re.findall(r"[,,、](.+?市)(.+?例)", mid) xin_chu_num = re.findall(r"新增治愈出院病例(.+?例)", text[0]) xin_yi_num = re.findall(r"新增疑似病例(.+?例)", text[0]) print("新增确诊病例",xin_que_num) print("详细新增确诊病例",xin_shi_num) print("新增治愈出院病例",xin_chu_num) print("新增疑似病例",xin_yi_num) que_num=re.findall(r"累计报告确诊病例(.+?例)", text[1]) si_num=re.findall(r"例,其中死亡(.+?例)", text[1]) zhong_num=re.findall(r",现有重症病例(.+?例)", text[1]) yu_num=re.findall(r",累计治愈出院(.+?例)", text[1]) print("累计确诊病例",que_num) print("死亡病例",si_num) print("重症病例",zhong_num) print("出院病例",yu_num) que_xi_num=[] si_xi_num=[] zhong_xi_num=[] chu_xi_num=[] num=len(text[1].split("确诊病例中",1)) if num>1: mid = text[1].split("确诊病例中", 1)[1] num = len(mid.split("死亡病例中",1)) if num > 1: que=mid.split("死亡病例中",1)[0] que_xi_num = re.findall(r"[,、](.+?市)(.+?例)", que) si=mid.split("死亡病例中",1)[1] mid=si num = len(mid.split("重症病例中", 1)) if num > 1: si=mid.split("重症病例中",1)[0] si_xi_num = re.findall(r"[,、](.+?市)(.+?例)", si) zhong=mid.split("重症病例中",1)[1] mid=zhong num = len(mid.split("出院病例中", 1)) if num > 1: zhong=mid.split("出院病例中",1)[0] zhong_xi_num = re.findall(r"[,、](.+?市)(.+?例)", zhong) chu=mid.split("出院病例中",1)[1] chu_xi_num = re.findall(r"[,、](.+?市)(.+?例)", chu) else: zhong_xi_num = re.findall(r"[,、](.+?市)(.+?例)", zhong) else: si_xi_num = re.findall(r"[,、](.+?市)(.+?例)", si) print("详细确诊病例",que_xi_num) print("详细死亡病例",si_xi_num) print("详细重症病例",zhong_xi_num) print("详细出院病例",chu_xi_num) yisi_num=re.findall(r"疑似病例(.+?例)", text[1]) print("疑似病例",yisi_num) miqie_num=re.findall(r"密切接触者(.+?人)", text[2]) jie_num=re.findall(r"解除隔离医学观察(.+?人)", text[2]) guan_num=re.findall(r"现有(.+?人)", text[2]) print("密切接触者",miqie_num) print("接触医学观察",jie_num) print("现有医学观察人数",guan_num) def get_url(url): strhtml = requests.get(url) # Get方式获取网页数据 tree = etree.HTML(strhtml.text) return tree if __name__ == '__main__': url = 'http://www.hebwst.gov.cn/index.do?cid=326&templet=list' list_url = get_url(url) tltle_ = list_url.xpath('//tr/td/a//text()') url_ = list_url.xpath('//tr/td/a/@href') l = [] url_tltles = [] #疫情标提列表 url_list = [] #疫情详情页列表 for i in tltle_: if i == '\r\n\t\t\t\t\t\t': pass else: l.append(i) for index,i in enumerate(l): if '河北省新型冠状病毒' not in i : pass else: url_list.append(url_[index]) url_tltles.append(i) for index,i in enumerate(url_list): url = 'http://www.hebwst.gov.cn/'+i print(url_tltles[index]) print(url) info(url)