1. CentOS 7: raise the open-file limit

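    ulimit -n    # show the current open-file limit
    ulimit -a    # show all limits

    vim /etc/security/limits.conf    # add the following lines
    * soft nofile 1024000
    * hard nofile 1024000
    hive - nofile 1024000
    hive - nproc 1024000

    vim /etc/security/limits.d/20-nproc.conf    # raise the process limit for regular users; unlimited also works
    * soft nproc 409600
    root soft nproc unlimited

    shutdown -r now    # reboot the machine so the new limits take effect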
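After editing the limits files, it can help to list which nofile entries are actually active (not commented out). The helper below is a minimal sketch; the function name `show_nofile_entries` is an illustrative assumption, not part of any package.

```shell
# Print the active nofile entries of a limits.conf-style file as
# "<domain> <type> <value>", skipping commented lines.
# Usage: show_nofile_entries [file]   (defaults to /etc/security/limits.conf)
show_nofile_entries() {
    awk '!/^[[:space:]]*#/ && $3 == "nofile" { print $1, $2, $4 }' \
        "${1:-/etc/security/limits.conf}"
}
```

After the reboot, `ulimit -n` in a new login session should report the value configured for that user.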

2. CentOS 7: disable SELinux

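    getenforce                 # show the current SELinux mode
    /usr/sbin/sestatus -v      # show detailed SELinux status
    vim /etc/selinux/config    # disable SELinux: change SELINUX=enforcing to SELINUX=disabled
    shutdown -r now            # reboot the machine so the change takes effect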
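To confirm the edit before rebooting, the configured mode can be read back from the file. This is a small sketch; `selinux_config_mode` is a hypothetical helper name, and it assumes the usual `SELINUX=<mode>` line format.

```shell
# Print the mode set by the SELINUX= line of an SELinux config file.
# Comment lines and the SELINUXTYPE= line are ignored.
# Usage: selinux_config_mode [file]   (defaults to /etc/selinux/config)
selinux_config_mode() {
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}"
}
```

If a reboot is not possible right away, `setenforce 0` switches the running system to permissive mode until the next boot.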

3. Turn off the firewall

    firewall-cmd --state         # show the firewall state
    systemctl stop firewalld     # stop the firewall (this does not disable it at boot)
    systemctl start firewalld    # start the firewall
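If the firewall has to stay on, an alternative is to open only the ports the cluster uses. The sketch below only prints the `firewall-cmd` calls instead of running them; the function name is an assumption, and the ports in the example (9000 for ClickHouse, 2181 for ZooKeeper) are the ones used later in this guide.

```shell
# Print (do not run) the firewall-cmd calls that would open the given TCP ports.
print_open_port_commands() {
    for port in "$@"; do
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    echo "firewall-cmd --reload"
}

print_open_port_commands 9000 2181
```

Review the printed commands and run them as root if they match your setup.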

4. Install dependencies

    yum install -y curl pygpgme yum-utils coreutils epel-release libtool '*unixODBC*'    # quote the glob so yum, not the shell, expands it

5. Download, install, and start ClickHouse (single-node deployment). Packages: https://packagecloud.io/altinity/clickhouse or http://repo.yandex.ru/clickhouse/rpm/stable/x86_64/

    # Option 1 (recommended): add the yum repository with the script, then install with yum
    curl -s https://packagecloud.io/install/repositories/Altinity/clickhouse/script.rpm.sh | sudo bash
    yum install -y clickhouse-server clickhouse-client clickhouse-server-common clickhouse-compressor
    yum list installed 'clickhouse*'        # check that the packages are installed

    # Manage the server with service
    service clickhouse-server start         # start the server; the single-node deployment is now complete
    service clickhouse-server stop          # stop the server
    service clickhouse-server restart       # restart the server
    service clickhouse-server status        # show whether the server is running

    # Or manage it with systemctl
    systemctl start clickhouse-server       # start the server
    systemctl stop clickhouse-server        # stop the server
    systemctl restart clickhouse-server     # restart the server
    systemctl status clickhouse-server      # show whether the server is running

    clickhouse-client                       # open the client

    # Option 2: download the RPMs from http://repo.yandex.ru/clickhouse/rpm/stable/x86_64/ and install them with rpm
    rpm -ivh *.rpm                                          # install
    rpm -qa | grep clickhouse                               # check whether the packages are installed
    rpm -e clickhouse-client-20.3.8.53-2.noarch             # uninstall
    rpm -e clickhouse-common-static-20.3.8.53-2.x86_64      # uninstall
    rpm -e clickhouse-server-20.3.8.53-2.noarch             # uninstall
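Typing full package names with versions for each `rpm -e` call is error-prone. As a sketch, a small filter can pick the ClickHouse packages out of the `rpm -qa` listing; `clickhouse_packages` is an illustrative name, not an existing tool.

```shell
# Read package names on stdin (one per line, as printed by `rpm -qa`)
# and print only the ClickHouse ones, sorted.
clickhouse_packages() {
    grep '^clickhouse-' | sort
}
```

A possible use is `rpm -qa | clickhouse_packages | xargs -r rpm -e`, which removes every installed ClickHouse package in one call. Check the list first by running the pipeline without the `xargs` part.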

6. Cluster deployment

6.1 ZooKeeper cluster setup: see https://www.cnblogs.com/yoyo1216/protected/p/12700989.html for the detailed steps

6.1 Create directories

    mkdir /usr/local/clickhouse            # base directory
    mkdir /usr/local/clickhouse/data       # data directory
    mkdir /usr/local/clickhouse/datalog    # log directory

6.2 Edit the ClickHouse configuration file

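    vim /etc/clickhouse-server/config.xml    # edit the configuration file

    <listen_host>0.0.0.0</listen_host> <!-- commented out by default, so only the local machine can connect; uncomment to accept connections from any host -->
    <log>/var/log/clickhouse-server/clickhouse-server.log</log> <!-- log file -->
    <errorlog>/var/log/clickhouse-server/clickhouse-server.err.log</errorlog>
    <path>/usr/local/clickhouse/data/</path> <!-- data path -->
    <path>/var/lib/clickhouse/data/</path> <!-- data path used by the production database; keep only one path entry -->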
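Because `listen_host` ships commented out, it is easy to save the file with the setting still inactive. The following sketch reads back a single-line, uncommented tag from the file; `xml_tag_value` is a hypothetical helper and only handles tags that open and close on one line.

```shell
# Print the value of every uncommented single-line <tag>value</tag> entry.
# Lines that start with "<!--" do not match and are therefore skipped.
# Usage: xml_tag_value <tag> <file>
xml_tag_value() {
    sed -n "s:^[[:space:]]*<$1>\(.*\)</$1>.*:\1:p" "$2"
}
```

For example, `xml_tag_value listen_host /etc/clickhouse-server/config.xml` prints nothing while the setting is still commented out.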

6.3 Create a new configuration file

vim /etc/metrika.xml

<yandex> <!-- root element (company name) -->

<clickhouse_remote_servers> <!-- cluster settings -->

<report_shards_replicas> <!-- cluster name shown in ClickHouse; can be changed -->

<shard> <!-- one shard with two replicas -->

<weight>1</weight>

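<internal_replication>false</internal_replication> <!-- whether replication is left to the replicated tables; with false, the Distributed table writes to every replica itself -->

<replica> <!-- replica -->

<host>192.168.107.216</host> <!-- IP of the server being deployed -->

<port>9000</port> <!-- port -->

<user>root</user> <!-- user name -->

<password>abc#123</password> <!-- password -->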

</replica>

<replica> <!-- replica -->

<host>192.168.107.214</host> <!-- IP of the other server -->

<port>9000</port>

<user>root</user> <!-- ClickHouse user name -->

<password>abc#123</password> <!-- password for that ClickHouse user -->

</replica>

</shard>

<!-- -->

<shard> <!-- shard 1 (two shards, one replica each) -->

<weight>1</weight>

<internal_replication>false</internal_replication> <!-- whether replication is left to the replicated tables -->

<replica> <!-- replica -->

<host>192.168.107.216</host> <!-- IP of the server being deployed -->

<port>9000</port> <!-- port -->

<user>root</user> <!-- user name -->

<password>abc#123</password> <!-- password -->

</replica>

</shard>

<shard> <!-- shard 2 -->

<weight>1</weight>

<internal_replication>false</internal_replication> <!-- whether replication is left to the replicated tables -->

<replica> <!-- replica -->

<host>192.168.107.214</host> <!-- IP of the other server -->

<port>9000</port>

<user>root</user>

<password>abc#123</password>

</replica>

</shard>

</report_shards_replicas>

</clickhouse_remote_servers>

<macros> <!-- shard name for the ZooKeeper path and replica name; each machine needs its own values -->

<shard>01</shard>

<replica>clickhouse216</replica> <!-- use clickhouse214 on the other machine; names must not repeat -->

</macros>

<networks>

<ip>::/0</ip>

</networks>

<!-- ZooKeeper configuration, for cluster high availability -->

<zookeeper-servers>

<node index="1">

<host>192.168.107.216</host>

<port>2181</port>

</node>

<node index="2">

<host>192.168.107.214</host>

<port>2181</port>

</node>

</zookeeper-servers>

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method>

</case>

</clickhouse_compression>

</yandex>
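Only the macros block differs between the machines, so it can be generated rather than edited by hand on each host. This is a minimal sketch; `print_macros` is an illustrative name, and the shard and replica values are the ones used in the file above.

```shell
# Print a <macros> block for one machine.
# Usage: print_macros <shard> <replica>
print_macros() {
    cat <<EOF
<macros>
    <shard>$1</shard>
    <replica>$2</replica>
</macros>
EOF
}

print_macros 01 clickhouse214
```

Paste the printed block into /etc/metrika.xml on the matching host, replacing the copy carried over from the first machine.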

6.4 Other configuration files

/etc/security/limits.d/clickhouse.conf: sets the file-handle limit; the default values are shown below. The limit can also be changed through max_open_files in config.xml.

    # cat /etc/security/limits.d/clickhouse.conf
    clickhouse soft nofile 262144
    clickhouse hard nofile 262144

/etc/cron.d/clickhouse-server: cron job configuration, used to bring back a ClickHouse server process that was interrupted abnormally. Its default contents are shown below.

    # cat /etc/cron.d/clickhouse-server
    # */10 * * * * root (which service > /dev/null 2>&1 && (service clickhouse-server condstart ||:)) || /etc/init.d/clickhouse-server condstart > /dev/null 2>&1

6.5 Start the ClickHouse cluster

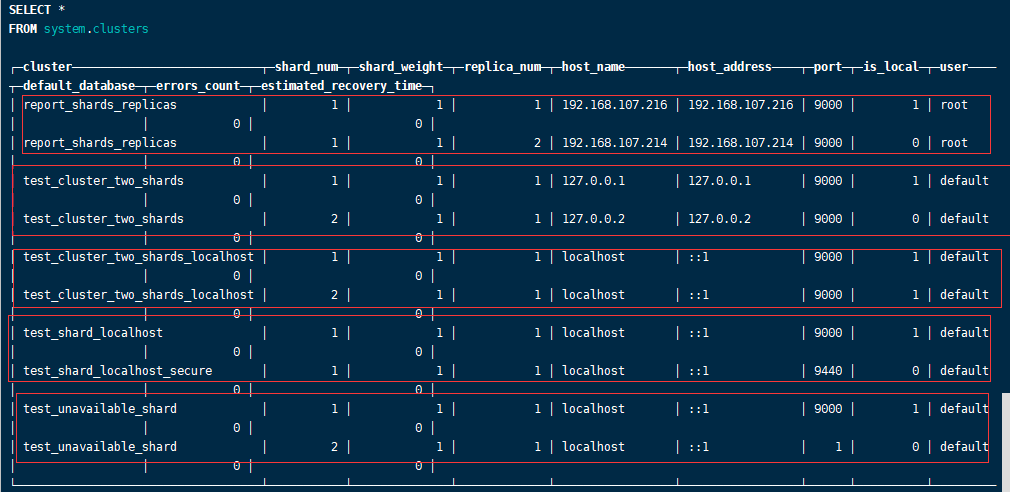

    systemctl start clickhouse-server      # start the server
    systemctl stop clickhouse-server       # stop the server
    systemctl restart clickhouse-server    # restart the server
    systemctl status clickhouse-server     # show whether the server is running

    service clickhouse-server start        # start the server
    service clickhouse-server stop         # stop the server
    service clickhouse-server restart      # restart the server
    service clickhouse-server status       # show whether the server is running

    clickhouse-client                      # open the client
    select * from system.clusters          # run inside the client; the cluster is set up if its shards and replicas are listed, as in the screenshot
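The same check can be scripted instead of read by eye. The sketch below counts the rows that `system.clusters` reports for one cluster name, reading tab-separated output on stdin; `count_cluster_rows` is a hypothetical helper, and it assumes the cluster name is the first column.

```shell
# Count the rows belonging to one cluster in tab-separated output of:
#   clickhouse-client --query "SELECT cluster, shard_num, replica_num, host_address FROM system.clusters"
# Usage: ... | count_cluster_rows <cluster_name>
count_cluster_rows() {
    awk -F '\t' -v name="$1" '$1 == name { n++ } END { print n + 0 }'
}
```

system.clusters has one row per replica entry, so the three shards defined in /etc/metrika.xml should give a count of 4 for `report_shards_replicas`. A count of 0 suggests the file was not picked up.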