sqoop中作业的使用

sqoop中,可以将导入导出任务,写到job中,实现创建、查看、执行和删除job的功能。

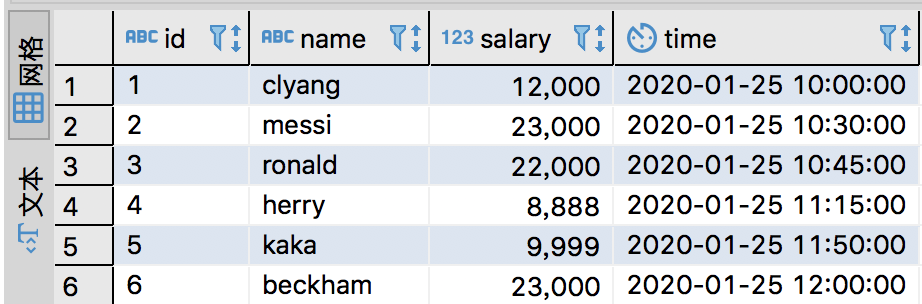

数据准备

mysql先准备数据,创建sqooptest数据库,并添加表Man和数据,如下图。

创建作业

可以通过'sqoop job --help'命令查看具体的使用方法。

[hadoop@node01 ~/.sqoop]$ sqoop job --help Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 16:54:13 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2

# 使用语法 usage: sqoop job [GENERIC-ARGS] [JOB-ARGS] [-- [<tool-name>] [TOOL-ARGS]] Job management arguments: --create <job-id> Create a new saved job --delete <job-id> Delete a saved job --exec <job-id> Run a saved job --help Print usage instructions --list List saved jobs --meta-connect <jdbc-uri> Specify JDBC connect string for the metastore --show <job-id> Show the parameters for a saved job --verbose Print more information while working Generic Hadoop command-line arguments: (must preceed any tool-specific arguments) Generic options supported are -conf <configuration file> specify an application configuration file -D <property=value> use value for given property -fs <local|namenode:port> specify a namenode -jt <local|resourcemanager:port> specify a ResourceManager -files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster -libjars <comma separated list of jars> specify comma separated jar files to include in the classpath. -archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

使用上面的提示,先查看一下目前的job,发现还没有。

# 查看创建的作业 [hadoop@node01 ~/.sqoop]$ sqoop job --list Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:03:16 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 # 暂时没有 Available jobs: You have new mail in /var/spool/mail/root

那就创建一个,使用如下命令。

# 创建job [hadoop@node01 ~/.sqoop]$ sqoop job \ > --create testjob \ > -- import \ > --connect jdbc:mysql://node01:3306/sqooptest \ > --username root \ > --password 123456 \ > --table Man \ > --target-dir /sqoop/testjob \ > --delete-target-dir \ > --m 1 Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:11:32 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 20/02/06 17:11:32 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. You have new mail in /var/spool/mail/root # 查看job [hadoop@node01 ~/.sqoop]$ sqoop job --list Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:11:48 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 # 创建成功 Available jobs: testjob

查看作业

使用如下命令来查看刚才的job,可以查看job的详细信息。

# 查看testjob详细信息 [hadoop@node01 ~/.sqoop]$ sqoop job --show testjob Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:14:31 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 # 输入密码 Enter password: Job: testjob Tool: import Options: ---------------------------- reset.onemapper = false codegen.output.delimiters.enclose = 0 sqlconnection.metadata.transaction.isolation.level = 2 codegen.input.delimiters.escape = 0 codegen.auto.compile.dir = true accumulo.batch.size = 10240000 codegen.input.delimiters.field = 0 accumulo.create.table = false mainframe.input.dataset.type = p enable.compression = false accumulo.max.latency = 5000 db.username = root sqoop.throwOnError = false db.clear.staging.table = false codegen.input.delimiters.enclose = 0 hdfs.append.dir = false import.direct.split.size = 0 hcatalog.drop.and.create.table = false codegen.output.delimiters.record = 10 codegen.output.delimiters.field = 44 hdfs.target.dir = /sqoop/testjob hbase.bulk.load.enabled = false # maptask数为1 mapreduce.num.mappers = 1 export.new.update = UpdateOnly db.require.password = true hive.import = false customtool.options.jsonmap = {} hdfs.delete-target.dir = true codegen.output.delimiters.enclose.required = false direct.import = false codegen.output.dir = . hdfs.file.format = TextFile hive.drop.delims = false codegen.input.delimiters.record = 0 db.batch = false split.limit = null hcatalog.create.table = false hive.fail.table.exists = false hive.overwrite.table = false incremental.mode = None temporary.dirRoot = _sqoop verbose = false import.max.inline.lob.size = 16777216 import.fetch.size = null codegen.input.delimiters.enclose.required = false relaxed.isolation = false sqoop.oracle.escaping.disabled = true # mysql表名 db.table = Man hbase.create.table = false codegen.compile.dir = /tmp/sqoop-hadoop/compile/b36217ccf86b7984cc9537d327e9598f codegen.output.delimiters.escape = 0 # 连接mysql的字符 db.connect.string = jdbc:mysql://node01:3306/sqooptest

执行作业

执行刚才创建的作业,使用如下命令执行,期间需要输入密码。

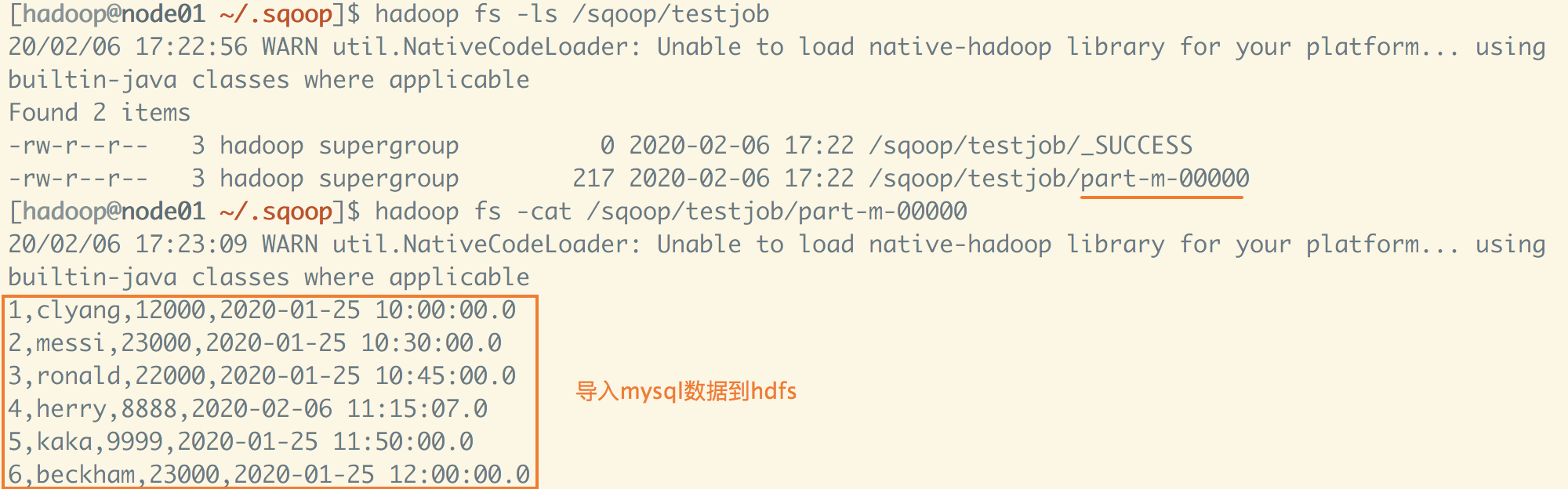

[hadoop@node01 ~/.sqoop]$ sqoop job --exec testjob Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:22:10 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 # 输入密码 Enter password: ...省略 20/02/06 17:22:36 INFO mapreduce.ImportJobBase: Transferred 177.6777 KB in 14.6772 seconds (12.1057 KB/sec) # 提示保存了6条数据 20/02/06 17:22:36 INFO mapreduce.ImportJobBase: Retrieved 6 records. You have new mail in /var/spool/mail/root

执行完后,查看hdfs,发现成功执行了job。

删除作业

可以使用如下命令删除刚才作业。

# 删除job [hadoop@node01 ~/.sqoop]$ sqoop job --delete testjob Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:33:54 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 You have new mail in /var/spool/mail/root # 查看发现已经删除 [hadoop@node01 ~/.sqoop]$ sqoop job --list Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 17:34:04 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 # 已删除 Available jobs:

sqoop增量作业

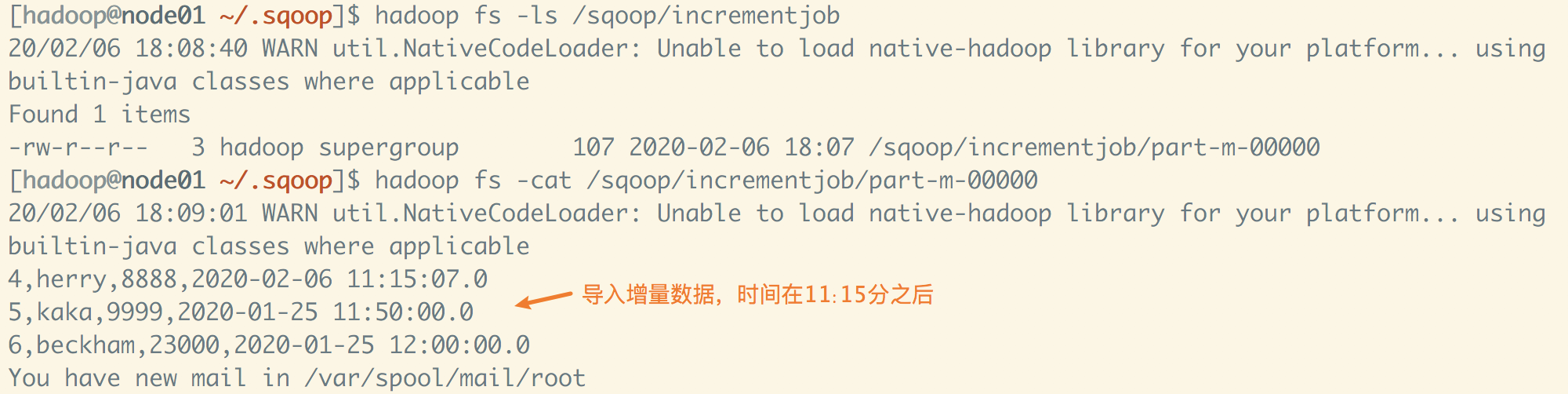

sqoop可以创建增量作业,依然使用刚才的表来导入, 使用模式为append。

# 创建一个增量job [hadoop@node01 ~/.sqoop]$ sqoop job --create incrementjob -- import --connect jdbc:mysql://node01:3306/sqooptest --username root --password 123456 --table Man --target-dir /sqoop/incrementjob --incremental append --check-column time --last-value '2020-01-25 11:15:00' --m 1 Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 18:03:31 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 20/02/06 18:03:31 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. # 查看新创建的job

[hadoop@node01 ~/.sqoop]$ sqoop job --list Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../hcatalog does not exist! HCatalog jobs will fail. Please set $HCAT_HOME to the root of your HCatalog installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. Warning: /kkb/install/sqoop-1.4.6-cdh5.14.2/../zookeeper does not exist! Accumulo imports will fail. Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation. 20/02/06 18:03:43 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.14.2 Available jobs: incrementjob

先执行,查看hdfs中数据情况。注意执行时又提示输入密码,可以通过修改sqoop根目录/conf下的sqoop-site.xml文件来完成修改。

# 保存密码到sqoop元数据

<property> <name>sqoop.metastore.client.record.password</name> <value>true</value> <description>If true, allow saved passwords in the metastore. </description> </property>

执行job后,hdfs中正常保存增量数据。

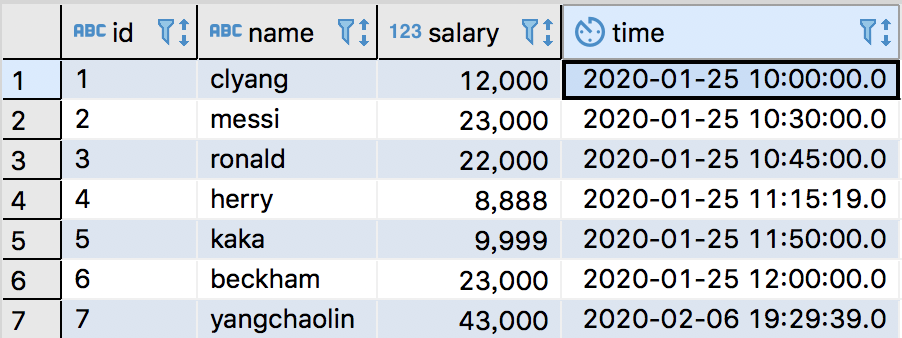

此时,如果把mysql原始数据修改下,增加一条id=7的数据,再次执行刚才的job,能否导入到hdfs呢?

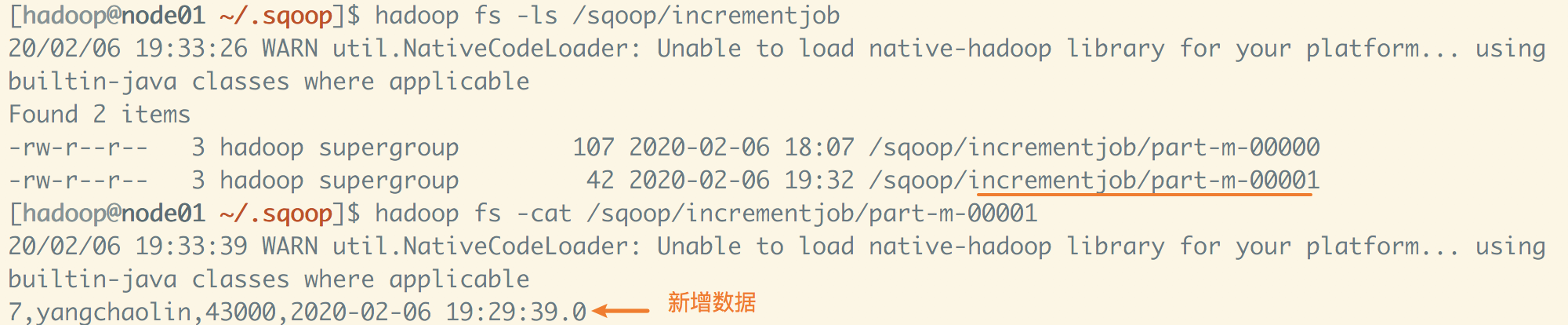

再次执行job,查看hdfs中情况。

说明可以导入增量数据,但是job创建时,检查列time的last-value是指定的,为什么还能导入数据,这是因为sqoop的元数据中更新了last-value的值,如下所示。

# sqoop安装目录平级目录下,有一个.sqoop隐藏目录 [hadoop@node01 ~/.sqoop]$ ll total 28 -rw-rw-r-- 1 hadoop hadoop 14802 Feb 6 17:22 Man.java -rw-rw-r-- 1 hadoop hadoop 419 Feb 6 19:32 metastore.db.properties -rw-rw-r-- 1 hadoop hadoop 6662 Feb 6 19:32 metastore.db.script You have new mail in /var/spool/mail/root # 查看元数据内容 [hadoop@node01 ~/.sqoop]$ more metastore.db.script CREATE SCHEMA PUBLIC AUTHORIZATION DBA CREATE MEMORY TABLE SQOOP_ROOT(VERSION INTEGER,PROPNAME VARCHAR(128) NOT NULL,PROPVAL VARCHAR(256),CONSTRAINT SQOOP_ROOT_UNQ UNIQUE(VERSION,PROPNAME)) CREATE MEMORY TABLE SQOOP_SESSIONS(JOB_NAME VARCHAR(64) NOT NULL,PROPNAME VARCHAR(128) NOT NULL,PROPVAL VARCHA R(1024),PROPCLASS VARCHAR(32) NOT NULL,CONSTRAINT SQOOP_SESSIONS_UNQ UNIQUE(JOB_NAME,PROPNAME,PROPCLASS)) CREATE USER SA PASSWORD "" GRANT DBA TO SA SET WRITE_DELAY 10 SET SCHEMA PUBLIC INSERT INTO SQOOP_ROOT VALUES(NULL,'sqoop.hsqldb.job.storage.version','0') INSERT INTO SQOOP_ROOT VALUES(0,'sqoop.hsqldb.job.info.table','SQOOP_SESSIONS') INSERT INTO SQOOP_SESSIONS VALUES('incrementjob','sqoop.tool','import','schema') INSERT INTO SQOOP_SESSIONS VALUES('incrementjob','sqoop.property.set.id','0','schema') INSERT INTO SQOOP_SESSIONS VALUES('incrementjob','verbose','false','SqoopOptions') INSERT INTO SQOOP_SESSIONS VALUES('incrementjob','hcatalog.drop.and.create.table','false','SqoopOptions') # 更新了last.value的值为刚插入的数据时间戳 INSERT INTO SQOOP_SESSIONS VALUES('incrementjob','incremental.last.value','2020-02-06 19:29:39.0','SqoopOption s')

以上,是对sqoop作业使用的记录。

参考博文:

浙公网安备 33010602011771号

浙公网安备 33010602011771号