Installing Kafka, Enabling Username/Password (SASL/PLAIN) Login on the Server, and Accessing It from Spring Boot

1. Install ZooKeeper first (look it up if you have not done this before).

2. Extract the archive into the target directory and rename it

```shell
tar zxvf kafka_2.12-2.4.0.tgz -C /data
mv /data/kafka_2.12-2.4.0 /data/kafka
```

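3. Edit the Kafka configuration file server.properties (`vim /data/kafka/config/server.properties`). For the broker to actually require a login, the listener must use the SASL_PLAINTEXT protocol and the broker must be told which SASL mechanism to enable and to use between brokers; with a plain `PLAINTEXT` listener no authentication takes place.

```properties
broker.id=0
# The listener must use SASL_PLAINTEXT, otherwise clients connect without authenticating
listeners=SASL_PLAINTEXT://192.168.110.110:9092
advertised.listeners=SASL_PLAINTEXT://192.168.110.110:9092
# Brokers also authenticate to each other with SASL/PLAIN
security.inter.broker.protocol=SASL_PLAINTEXT
sasl.mechanism.inter.broker.protocol=PLAIN
sasl.enabled.mechanisms=PLAIN
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
############################# Log Basics #############################
log.dirs=/data/kafka/logs
num.partitions=1
num.recovery.threads.per.data.dir=1
############################# Internal Topic Settings #############################
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.110.110:2181
zookeeper.connection.timeout.ms=60000
group.initial.rebalance.delay.ms=0
```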
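Before starting the broker it can help to check that the SASL-related keys agree with each other. The following is a minimal Python 3 sketch, not part of Kafka: `parse_properties` and `check_sasl_settings` are hypothetical helper names, the parser only handles simple `key=value` lines, and the check assumes a single listener and looks only at `listeners`, `security.inter.broker.protocol`, `sasl.mechanism.inter.broker.protocol` and `sasl.enabled.mechanisms`.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_sasl_settings(props):
    """Return a list of problems in the SASL settings (an empty list means none were found)."""
    problems = []
    listener = props.get("listeners", "")
    # Protocol part of e.g. "SASL_PLAINTEXT://192.168.110.110:9092" (single listener assumed)
    protocol = listener.split("://", 1)[0] if "://" in listener else ""
    if not protocol.startswith("SASL_"):
        problems.append("listeners does not use a SASL_* protocol")
    if props.get("security.inter.broker.protocol") != protocol:
        problems.append("security.inter.broker.protocol differs from the listener protocol")
    enabled = [m.strip() for m in props.get("sasl.enabled.mechanisms", "").split(",") if m.strip()]
    if props.get("sasl.mechanism.inter.broker.protocol") not in enabled:
        problems.append("sasl.mechanism.inter.broker.protocol is not listed in sasl.enabled.mechanisms")
    return problems
```

Usage: `check_sasl_settings(parse_properties(open("/data/kafka/config/server.properties").read()))` returns the list of warnings to print.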

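4. Add the SASL configuration file

Create a login configuration file for the broker. You can name it as you like, for example kafka_server_jaas.conf. Its content is as follows.

Option 1:

```
KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="adminpasswd"
    user_admin="adminpasswd"
    user_producer="producerpwd"
    user_consumer="consumerpwd";
};
```

Notes: the login module org.apache.kafka.common.security.plain.PlainLoginModule selects the PLAIN mechanism, and the entries after it define the users. `username` and `password` are the credentials this broker uses when it opens connections to the other brokers in the cluster. Each entry of the form `user_<name>="<password>"` creates a user that clients can connect with; for example, `user_producer="producerpwd"` creates the user producer with the password producerpwd. The password of the `username` account (admin) must be the same as the one given in `user_admin`, otherwise authentication fails. The configuration above creates three users: admin, producer and consumer. Create as many users as your business needs; user names and passwords are up to you.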
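The `user_<name>` convention can be generated rather than typed by hand. A minimal Python 3 sketch: `render_kafka_server_jaas` is a hypothetical helper, it assumes user names and passwords contain no double quotes or backslashes, and it enforces the rule that `user_<admin>` must repeat the broker's own `password`.

```python
def render_kafka_server_jaas(admin_user, admin_password, users):
    """Build a KafkaServer JAAS section for SASL/PLAIN.

    users maps client user names to passwords. The broker's own account is always
    emitted as user_<admin_user> with the same password, because a mismatch makes
    inter-broker authentication fail.
    """
    all_users = {admin_user: admin_password}
    for name, pwd in users.items():
        if name == admin_user and pwd != admin_password:
            raise ValueError("user_%s must use the same password as password=" % admin_user)
        all_users[name] = pwd
    lines = [
        "KafkaServer {",
        "    org.apache.kafka.common.security.plain.PlainLoginModule required",
        '    username="%s"' % admin_user,
        '    password="%s"' % admin_password,
    ]
    lines += ['    user_%s="%s"' % (name, pwd) for name, pwd in all_users.items()]
    lines[-1] += ";"  # the last option of a login module entry ends with a semicolon
    lines.append("};")
    return "\n".join(lines) + "\n"
```

Calling `render_kafka_server_jaas("admin", "adminpasswd", {"producer": "producerpwd", "consumer": "consumerpwd"})` produces the same content as Option 1.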

Option 2:

```
KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="visitor"
    password="qaz@123"
    user_visitor="qaz@123";
};
```

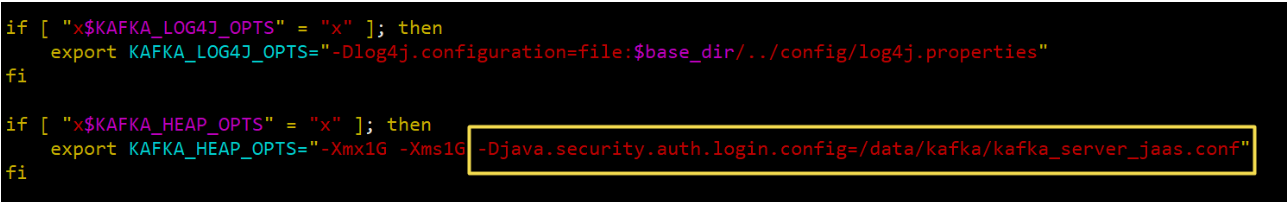

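5. Modify the startup script

Run `vim /data/kafka/bin/kafka-server-start.sh`, find `export KAFKA_HEAP_OPTS`, and append a JVM parameter pointing to the kafka_server_jaas.conf file (use the path where you saved the file in step 4): `-Djava.security.auth.login.config=/data/kafka/kafka_server_jaas.conf`. For example, `export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G -Djava.security.auth.login.config=/data/kafka/kafka_server_jaas.conf"`.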
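Editing kafka-server-start.sh is one option; another is to pass the flag through the `KAFKA_OPTS` environment variable, which kafka-run-class.sh adds to the java command line. The Python 3 sketch below uses hypothetical helper names (`env_with_jaas`, `start_broker`) and assumes the /data/kafka layout used in this article; it builds such an environment and launches the broker with `subprocess.run`.

```python
import os
import subprocess

JAAS_FLAG = "-Djava.security.auth.login.config="


def env_with_jaas(jaas_path, base_env=None):
    """Return a copy of the environment with the JAAS flag set in KAFKA_OPTS."""
    env = dict(os.environ if base_env is None else base_env)  # never mutate the input
    opts = env.get("KAFKA_OPTS", "").split()
    opts = [o for o in opts if not o.startswith(JAAS_FLAG)]  # replace any older JAAS flag
    opts.append(JAAS_FLAG + jaas_path)
    env["KAFKA_OPTS"] = " ".join(opts)
    return env


def start_broker(kafka_home="/data/kafka"):
    """Start the broker in the background with the server JAAS file (paths assumed)."""
    env = env_with_jaas(os.path.join(kafka_home, "kafka_server_jaas.conf"))
    cmd = [
        os.path.join(kafka_home, "bin", "kafka-server-start.sh"),
        "-daemon",
        os.path.join(kafka_home, "config", "server.properties"),
    ]
    return subprocess.run(cmd, env=env, check=True)
```

Splitting on whitespace means paths containing spaces are not supported in this sketch.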

<--------------------------------------------------divider ↓-------------------------------------------------->

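Create a login configuration file for the console consumer and producer. You can name it as you like, for example kafka_client_jaas.conf, with the content below. (If Kafka is accessed from a program, such as a Spring Boot application, this file is not needed.)

```
KafkaClient {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="visitor"
    password="qaz@123";
};
```

Then add the following settings to both consumer.properties and producer.properties (the console tools read them when given `--consumer.config` / `--producer.config`):

```properties
security.protocol=SASL_PLAINTEXT
sasl.mechanism=PLAIN
```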
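Kafka clients can also take the login module directly through the `sasl.jaas.config` client property instead of a separate KafkaClient file. Below is a hypothetical Python 3 helper (`client_properties`) that produces such a file's content; the result can be saved and handed to the CLI tools with `--command-config` (kafka-topics.sh), `--producer.config` or `--consumer.config`. It only covers SASL/PLAIN and assumes the password contains no double quotes.

```python
def client_properties(username, password, mechanism="PLAIN"):
    """Return client config text with the SASL/PLAIN login embedded in sasl.jaas.config."""
    if mechanism != "PLAIN":
        raise ValueError("this sketch only handles the PLAIN mechanism")
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        'username="%s" password="%s";' % (username, password)
    )
    return "\n".join([
        "security.protocol=SASL_PLAINTEXT",
        "sasl.mechanism=" + mechanism,
        "sasl.jaas.config=" + jaas,
    ]) + "\n"
```

For example, writing `client_properties("visitor", "qaz@123")` to /data/kafka/config/client.properties gives one file that works for all three tools.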

<--------------------------------------------------divider ↑-------------------------------------------------->

6. Start the Kafka service. From the bin directory, start ZooKeeper first and then Kafka. This completes the server-side user login configuration.

```shell
# Start ZooKeeper first
/data/kafka/bin/zookeeper-server-start.sh -daemon /data/kafka/config/zookeeper.properties
# Then start Kafka
/data/kafka/bin/kafka-server-start.sh -daemon /data/kafka/config/server.properties
```
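Because both services start with `-daemon`, the commands return before the broker is actually listening. A small Python 3 sketch (hypothetical `wait_for_port`) that retries a TCP connection until the port accepts it or a timeout expires; it only proves the socket is open, not that SASL authentication works.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once host:port accepts a TCP connection, False after timeout seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)  # refused or timed out: try again shortly
    return False
```

Usage: `wait_for_port("192.168.110.110", 9092)` after starting Kafka, before creating topics.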

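Note: if Kafka's connection to ZooKeeper must also be authenticated, add a `Client` section to the broker's JAAS file (kafka_server_jaas.conf). ZooKeeper uses its own DigestLoginModule for this, and the ZooKeeper server must also be configured to require SASL authentication with the same credentials:

```
Client {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    username="visitor"
    password="qaz@123";
};
```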

<--------------------------------------------------divider ↓-------------------------------------------------->

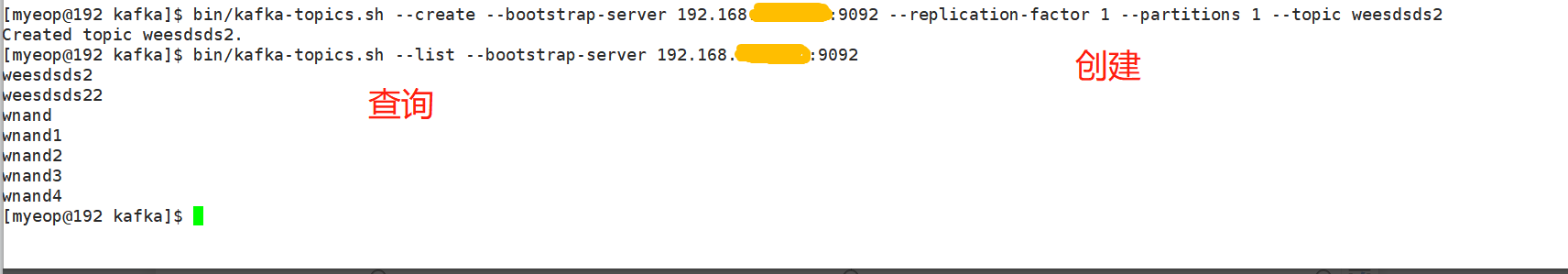

Create a topic

This test uses the following command (Kafka 2.2 and later):

```shell
bin/kafka-topics.sh --create --bootstrap-server 192.168.110.110:9092 --replication-factor 1 --partitions 1 --topic busi_topic
```

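List topics

```shell
bin/kafka-topics.sh --list --bootstrap-server 192.168.110.110:9092
```

Once the listener requires SASL, these admin commands must authenticate as well: kafka-topics.sh takes a client properties file containing `security.protocol`, `sasl.mechanism` and `sasl.jaas.config` through `--command-config`.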

```shell
# Send messages
bin/kafka-console-producer.sh --broker-list 192.168.110.110:9092 --topic test
# Receive messages
bin/kafka-console-consumer.sh --bootstrap-server 192.168.110.110:9092 --topic test --from-beginning
# Describe a specific topic
bin/kafka-topics.sh --bootstrap-server 192.168.110.110:9092 --describe --topic test
# Delete a topic
bin/kafka-topics.sh --bootstrap-server 192.168.110.110:9092 --delete --topic test
```
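When these commands are scripted, building the argument list in one place keeps the broker address and the optional `--command-config` file consistent. A hypothetical Python 3 helper (`topics_command`) for the 2.2+ `--bootstrap-server` form of kafka-topics.sh; it only assembles arguments and does not run anything.

```python
def topics_command(kafka_home, bootstrap, action, topic=None, command_config=None,
                   partitions=1, replication_factor=1):
    """Build an argument list for kafka-topics.sh (Kafka 2.2+ --bootstrap-server form)."""
    cmd = [kafka_home + "/bin/kafka-topics.sh", "--bootstrap-server", bootstrap]
    if command_config:
        # Client security settings, needed once the listener requires SASL
        cmd += ["--command-config", command_config]
    if action == "create":
        cmd += ["--create", "--topic", topic,
                "--partitions", str(partitions),
                "--replication-factor", str(replication_factor)]
    elif action == "list":
        cmd += ["--list"]
    elif action in ("describe", "delete"):
        cmd += ["--" + action, "--topic", topic]
    else:
        raise ValueError("unknown action: %s" % action)
    return cmd
```

The list can be passed straight to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues.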

<--------------------------------------------------divider ↑-------------------------------------------------->

A. Creating and listing topics (run from the bin directory)

a1. Older versions (before 2.2) use the ZooKeeper connection string. To create a topic, run:

```shell
./kafka-topics.sh --zookeeper localhost:2181 --create --topic demo-hehe-topic --partitions 1 --replication-factor 1
```

a2. To list topics, run:

```shell
./kafka-topics.sh --list --zookeeper localhost:2181
```

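B. Configuring Kafka login authentication in a Spring Boot project

b1. Configure application.yml as follows. The SASL mechanism and the login module in `sasl.jaas.config` must match: PLAIN goes with PlainLoginModule (ScramLoginModule is only for the SCRAM-SHA-* mechanisms).

```yaml
spring:
  kafka:
    # Kafka broker address(es); several can be listed
    bootstrap-servers: 114.114.114.144:9092
    producer:
      # A value greater than 0 makes the client resend records that failed to send
      retries: 1
      # Maximum size of one batch in bytes (not a message count)
      batch-size: 16384
      buffer-memory: 33554432
      # Serializers for the message key and value
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      # Raise the maximum size of a request the producer sends to Kafka
      properties:
        max.request.size: 52428800
    consumer:
      # Commit offsets manually so that a record is processed before its offset is committed
      enable-auto-commit: false
      # Without a committed offset, start from the latest offset (use earliest to read from the beginning)
      auto-offset-reset: latest
      # Consumer group; required because the @KafkaListener in b4 sets no groupId
      group-id: dev
    # SASL settings applied to every Kafka client created by Spring Boot
    properties:
      security:
        protocol: SASL_PLAINTEXT
      sasl:
        mechanism: PLAIN
        jaas:
          config: 'org.apache.kafka.common.security.plain.PlainLoginModule required username="visitor" password="qaz@123";'

# Custom properties read by the @KafkaListener in b4 (example values)
customer:
  kafka:
    kafka-topic-names: busi_topic
    kafka-receive-flag: true
```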
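Since `sasl.mechanism` and the login module named in `sasl.jaas.config` have to agree, a quick check before deployment can catch a mismatch such as PLAIN paired with ScramLoginModule. A hypothetical Python 3 function (`check_jaas_matches_mechanism`) that covers only PLAIN, SCRAM-SHA-256 and SCRAM-SHA-512:

```python
EXPECTED_LOGIN_MODULE = {
    "PLAIN": "org.apache.kafka.common.security.plain.PlainLoginModule",
    "SCRAM-SHA-256": "org.apache.kafka.common.security.scram.ScramLoginModule",
    "SCRAM-SHA-512": "org.apache.kafka.common.security.scram.ScramLoginModule",
}


def check_jaas_matches_mechanism(mechanism, jaas_config):
    """Raise ValueError if sasl.jaas.config names the wrong login module for sasl.mechanism."""
    expected = EXPECTED_LOGIN_MODULE.get(mechanism)
    if expected is None:
        raise ValueError("mechanism not covered by this sketch: %s" % mechanism)
    # The login module class is the first token of the JAAS entry
    module = jaas_config.split()[0] if jaas_config.strip() else ""
    if module != expected:
        raise ValueError("sasl.mechanism=%s expects %s, got %s"
                         % (mechanism, expected, module or "nothing"))
```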

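b2. Create the Kafka listener container factory. The code can go either in the Application class or in a separate @Configuration class.

```java
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.config.KafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.listener.ContainerProperties;

@Configuration
public class KafkaConsumerConfig {

    /**
     * Configures manual offset commits for Kafka listeners.
     *
     * @param consumerFactory consumer factory (auto-configured by Spring Boot)
     * @return listener container factory
     */
    @Bean
    @SuppressWarnings({"rawtypes", "unchecked"})
    public KafkaListenerContainerFactory<?> kafkaListenerContainerFactory(ConsumerFactory consumerFactory) {
        ConcurrentKafkaListenerContainerFactory<String, String> factory =
                new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory);
        // Number of concurrent consumers; ideally equal to the partition count,
        // because consumers beyond the partition count get no partitions and sit idle
        //factory.setConcurrency(1);
        factory.getContainerProperties().setPollTimeout(1500);
        // Commit each offset immediately when the listener calls Acknowledgment.acknowledge()
        factory.getContainerProperties().setAckMode(ContainerProperties.AckMode.MANUAL_IMMEDIATE);
        return factory;
    }
}
```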
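Why extra concurrency does not help: within one consumer group each partition is consumed by at most one consumer. The hypothetical Python 3 function below splits a single topic's partitions roughly the way Kafka's range assignor does (contiguous ranges, with the first consumers taking the remainder); it is a simplified illustration, not Kafka's actual assignor code.

```python
def range_assign(num_partitions, num_consumers):
    """Split partitions 0..num_partitions-1 of one topic into contiguous ranges per consumer."""
    if num_consumers <= 0:
        raise ValueError("need at least one consumer")
    per_consumer, extra = divmod(num_partitions, num_consumers)
    result, start = [], 0
    for i in range(num_consumers):
        count = per_consumer + (1 if i < extra else 0)  # first `extra` consumers get one more
        result.append(list(range(start, start + count)))
        start += count
    return result
```

With 2 partitions and a concurrency of 4, two of the four consumers receive an empty list, i.e. they stay idle.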

b3. Send messages to Kafka

```java
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Component;
import org.springframework.util.concurrent.ListenableFuture;

/**
 * Pushes data to Kafka.
 *
 * @author wangfenglei
 */
@Slf4j
@Component
public class KafkaDataProducer {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    /**
     * Pushes table data to Kafka so that it can be synchronized locally.
     * Blocks until the broker acknowledges the record.
     *
     * @param msg   message
     * @param topic topic
     * @return metadata (partition, offset) of the written record
     * @throws Exception if sending fails
     */
    public RecordMetadata sendMsg(String msg, String topic) throws Exception {
        try {
            // Spring Kafka 2.x returns a ListenableFuture; 3.x returns a CompletableFuture
            ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send(topic, msg);
            return future.get().getRecordMetadata();
        } catch (Exception e) {
            log.error("sendMsg to kafka failed!", e);
            throw e;
        }
    }
}
```
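The same synchronous send can be written with kafka-python: `KafkaProducer.send()` returns a future, and calling `get(timeout=...)` on it blocks until the broker returns the `RecordMetadata` or raises. A Python 3 sketch; `send_sync` is a hypothetical name, and the producer is passed in so the function works with any object that behaves like `KafkaProducer`.

```python
import logging

log = logging.getLogger(__name__)


def send_sync(producer, topic, value, timeout=10):
    """Send one record and block until the broker acknowledges it.

    producer should behave like kafka.KafkaProducer: send() returns a future whose
    get(timeout=...) yields the record metadata or raises if the send failed.
    """
    future = producer.send(topic, value)
    try:
        return future.get(timeout=timeout)
    except Exception:
        log.exception("sendMsg to kafka failed!")
        raise
```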

b4. Receive Kafka messages

```java
import com.wfl.firefighting.kafka.strategy.BaseConsumerStrategy;
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.support.Acknowledgment;
import org.springframework.stereotype.Component;

import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/**
 * Consumes Kafka messages.
 *
 * @author wangfenglei
 */
@Component
@Slf4j
public class KafkaDataConsumer {
    private final Map<String, BaseConsumerStrategy> strategyMap = new ConcurrentHashMap<>();

    /**
     * Spring injects every BaseConsumerStrategy bean, keyed by bean name.
     */
    public KafkaDataConsumer(Map<String, BaseConsumerStrategy> strategyMap) {
        this.strategyMap.putAll(strategyMap);
    }

    /**
     * @param record message
     * @param ack    used to commit the offset manually
     */
    @KafkaListener(topics = {"#{'${customer.kafka.kafka-topic-names}'.split(',')}"},
            containerFactory = "kafkaListenerContainerFactory",
            autoStartup = "${customer.kafka.kafka-receive-flag}")
    public void receiveMessage(ConsumerRecord<String, String> record, Acknowledgment ack) throws Exception {
        String message = record.value();
        // Received message
        log.info("Receive from kafka topic[" + record.topic() + "]:" + message);
        try {
            // Pick the strategy registered for this topic
            BaseConsumerStrategy strategy = strategyMap.get(record.topic());
            if (null != strategy) {
                strategy.consumer(message);
            }
        } finally {
            // Commit manually; the offset is committed even if the strategy throws
            ack.acknowledge();
        }
    }
}
```
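Spring fills a `Map<String, BaseConsumerStrategy>` constructor argument with every matching bean keyed by its bean name, so `strategyMap.get(record.topic())` only finds a strategy whose bean name equals the topic name. The routing logic itself can be sketched in Python 3 (hypothetical `TopicDispatcher`; `ack` stands in for `Acknowledgment.acknowledge()`). Like the `finally` block in the Java listener, it acknowledges even when the handler raises, so the offset of a failed message is committed as well.

```python
import logging

log = logging.getLogger("kafka-data-consumer")


class TopicDispatcher:
    """Route each message to the handler registered for its topic, then acknowledge."""

    def __init__(self, handlers):
        self.handlers = dict(handlers)  # topic name -> callable(message)

    def receive(self, topic, message, ack):
        log.info("Receive from kafka topic[%s]: %s", topic, message)
        try:
            handler = self.handlers.get(topic)
            if handler is not None:  # messages for unknown topics are just acknowledged
                handler(message)
        finally:
            ack()  # always commit, mirroring the Java finally block
```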

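7. Configuring login authentication for the Kafka command-line client

#1. Install the Kafka client

Copy another Kafka installation package to /root, extract it and rename it to use it as the Kafka client. The client path is /root/kafka.

#2. Create the client authentication configuration file

Create a kafka_client_jaas.conf file under /root/kafka:

`vim /root/kafka/kafka_client_jaas.conf`

```
KafkaClient {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="producer"
    password="producerpwd";
};
```

Note: the user name and password here must match an account configured on the server; this example uses the producer user.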

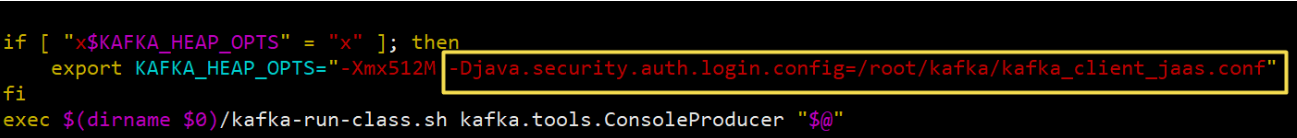

#3. Add the authentication file path to kafka-console-producer.sh (used later when starting a test producer)

Run `vim /root/kafka/bin/kafka-console-producer.sh`, find "x$KAFKA_HEAP_OPTS", and add the following parameter: `-Djava.security.auth.login.config=/root/kafka/kafka_client_jaas.conf`

#4. Add the authentication file path to kafka-console-consumer.sh (used later when starting a test consumer)

Run `vim /root/kafka/bin/kafka-console-consumer.sh`, find "x$KAFKA_HEAP_OPTS", and add the following parameter: `-Djava.security.auth.login.config=/root/kafka/kafka_client_jaas.conf`

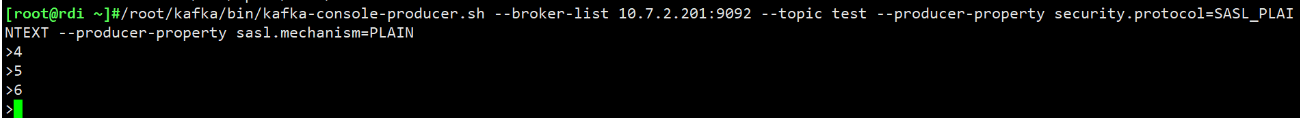

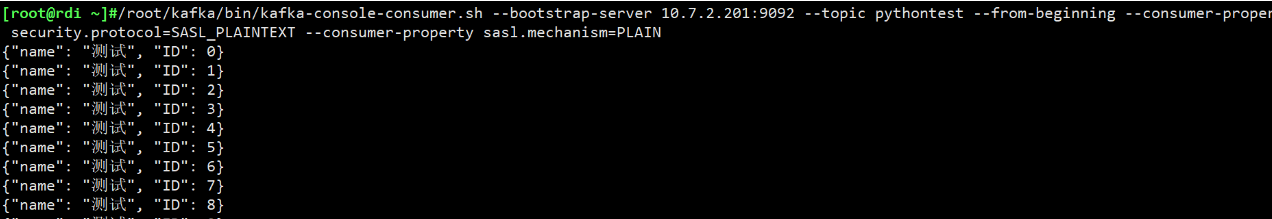

#5. Connect with the client

```shell
# Start a producer:
/root/kafka/bin/kafka-console-producer.sh --broker-list 10.7.2.201:9092 --topic test \
  --producer-property security.protocol=SASL_PLAINTEXT --producer-property sasl.mechanism=PLAIN
# Start a consumer:
/root/kafka/bin/kafka-console-consumer.sh --bootstrap-server 10.7.2.201:9092 --topic test --from-beginning \
  --consumer-property security.protocol=SASL_PLAINTEXT --consumer-property sasl.mechanism=PLAIN
```

The producer can send data normally, and the consumer receives it.

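8. Connecting to Kafka from Python (Python 2 environment)

8.1 Install the kafka-python package

```shell
# Download kafka-python-2.0.2.tar.gz, extract it, then run this inside the extracted directory:
python setup.py install
```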

8.2 Write data to Kafka

```python
# -*- coding: utf-8 -*-
from __future__ import absolute_import
from __future__ import print_function

import json
import sys

from kafka import KafkaProducer

# Python 2 only: make implicit str/unicode conversions use UTF-8
reload(sys)
sys.setdefaultencoding('utf8')


def produceLogs(topic_name, logs):
    # Create one producer for the whole batch instead of one per message
    kafka_producer = KafkaProducer(
        sasl_mechanism="PLAIN",
        security_protocol='SASL_PLAINTEXT',
        sasl_plain_username="producer",  # must match a user_<name> entry on the broker
        sasl_plain_password="producerpwd",
        bootstrap_servers='10.7.2.201:9092'
    )
    try:
        for log in logs:
            kafka_producer.send(topic_name, log, partition=0)
        kafka_producer.flush()  # block until all buffered records are sent
    finally:
        kafka_producer.close()


logs = []
for i in range(10):
    sendlog_dict = {"name": "测试"}
    sendlog_dict['ID'] = i
    logs.append(json.dumps(sendlog_dict, ensure_ascii=False))
produceLogs("pythontest", logs)
```
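`reload(sys)` and `sys.setdefaultencoding` exist only in Python 2. Under Python 3 a common kafka-python approach is to pass a `value_serializer` to `KafkaProducer` that turns each value into bytes; `json_utf8` below is a hypothetical serializer for that parameter.

```python
import json


def json_utf8(value):
    """Serialize a value to UTF-8 JSON bytes, keeping non-ASCII characters readable."""
    return json.dumps(value, ensure_ascii=False).encode("utf-8")
```

With `KafkaProducer(..., value_serializer=json_utf8)`, the loop can send the dictionaries directly, e.g. `producer.send("pythontest", {"name": "测试", "ID": i})`.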

After running the script, check the data in the pythontest topic in Kafka. As shown below, the data was written successfully.

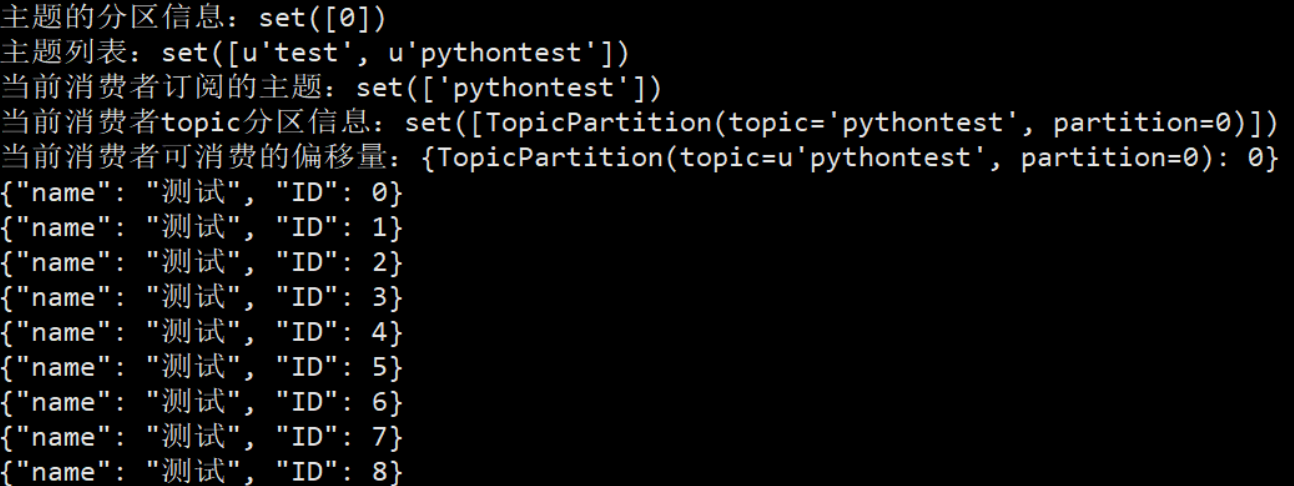

8.3 Consume data

```python
# -*- coding: utf-8 -*-
from __future__ import absolute_import
from __future__ import print_function

import sys

from kafka import KafkaConsumer

# Python 2 only: make implicit str/unicode conversions use UTF-8
reload(sys)
sys.setdefaultencoding('utf8')

consumer = KafkaConsumer(
    'pythontest',  # topic to consume
    group_id="test",
    client_id="python",
    sasl_mechanism="PLAIN",
    security_protocol='SASL_PLAINTEXT',
    sasl_plain_username="producer",
    sasl_plain_password="producerpwd",
    bootstrap_servers='10.7.2.201:9092',
    auto_offset_reset='earliest'  # start from the beginning when the group has no committed offset
)

print("Partitions of the topic: {}".format(consumer.partitions_for_topic('pythontest')))
print("Topic list: {}".format(consumer.topics()))
print("Topics this consumer subscribes to: {}".format(consumer.subscription()))
# assignment() may still be empty here: partitions are assigned when the group is joined during polling
print("Partitions assigned to this consumer: {}".format(consumer.assignment()))
print("Beginning offsets of the assigned partitions: {}".format(consumer.beginning_offsets(consumer.assignment())))

for msg in consumer:
    print(msg.value)
```

Output:
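The consumer script relies on automatic offset commits, which is kafka-python's default. For processing closer to the manual-ack setup of the Spring Boot section, a consumer created with `enable_auto_commit=False` can poll a batch, handle it and then call `commit()`. A Python 3 sketch; `consume_once` and `handle` are hypothetical names, and the consumer is passed in so any object that behaves like `KafkaConsumer` (with `poll(timeout_ms=...)` returning a dict of partition to records) works.

```python
def consume_once(consumer, handle, timeout_ms=1000):
    """Poll one batch, pass every record value to handle, then commit synchronously.

    Returns the number of records handled. If handle raises, nothing is committed,
    so the batch is delivered again after a restart or rebalance.
    """
    batches = consumer.poll(timeout_ms=timeout_ms)  # {TopicPartition: [ConsumerRecord, ...]}
    count = 0
    for records in batches.values():
        for record in records:
            handle(record.value)
            count += 1
    if count:
        consumer.commit()  # commit the offsets consumed by this poll
    return count
```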
