🎇Assignment ①

🚀(1) Assignment requirements

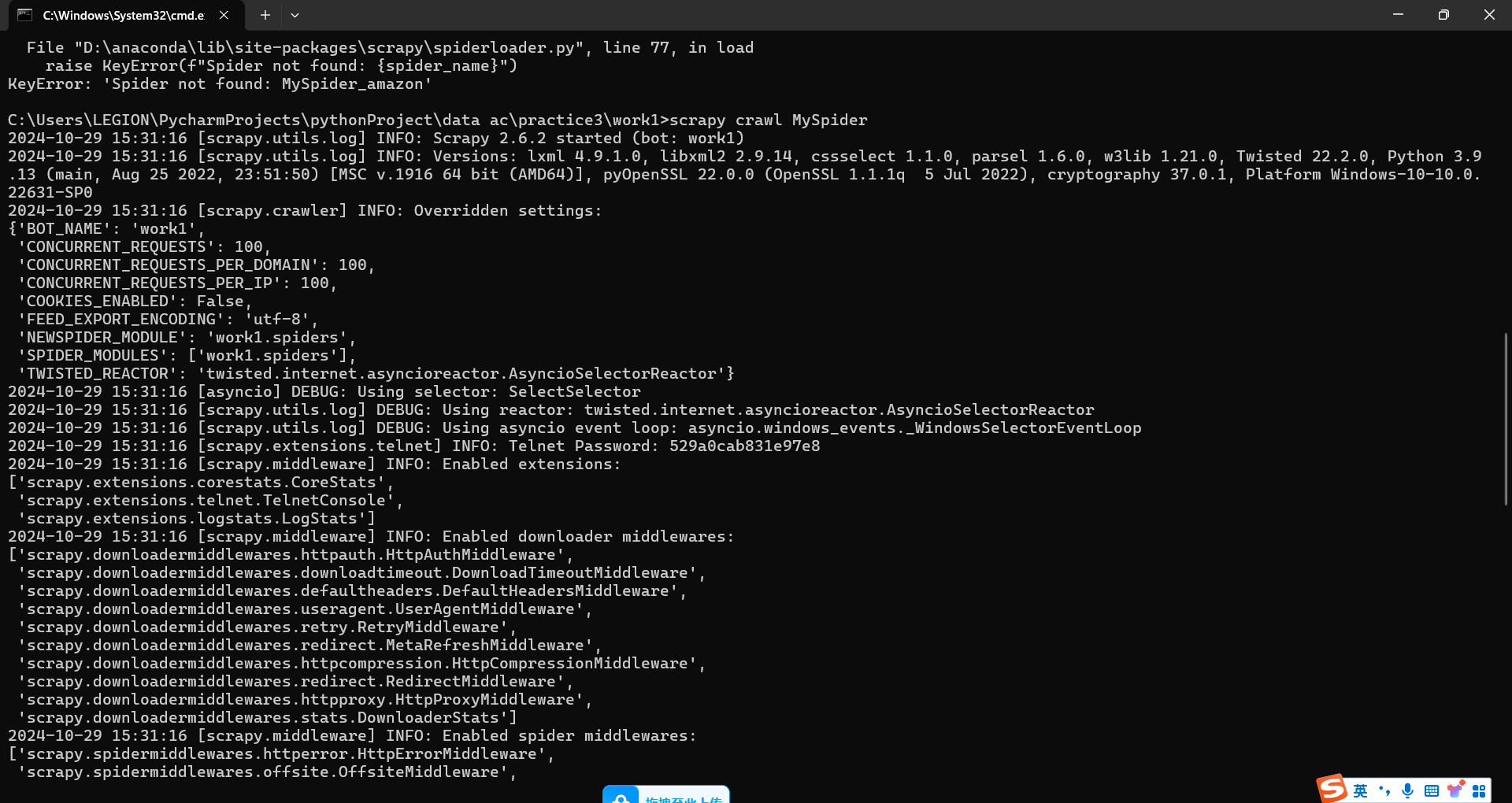

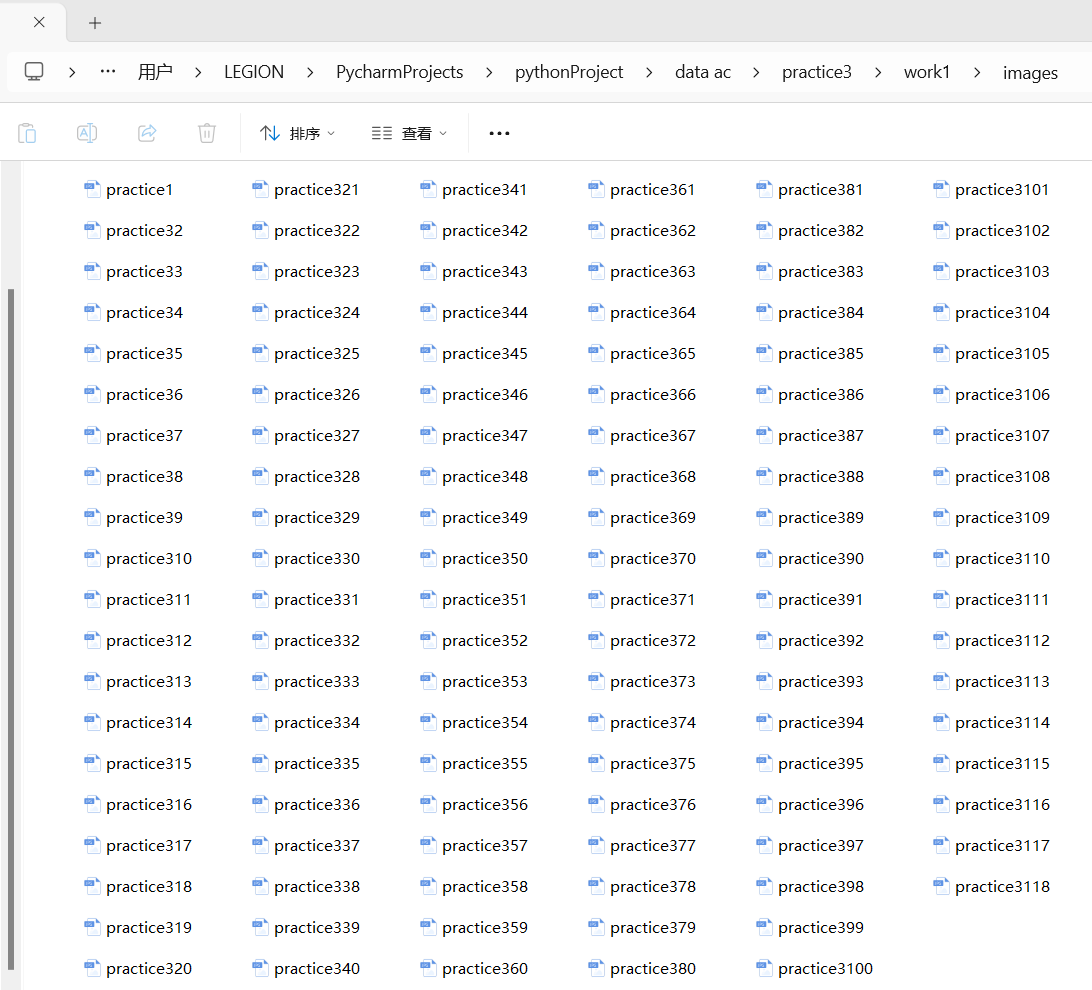

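- Requirement: pick a website and crawl all of its images, e.g. the China Weather site (http://www.weather.com.cn). Use the Scrapy framework to implement both a single-threaded and a multi-threaded crawl.

Be sure to limit the crawl: cap the total number of pages (last 2 digits of your student ID) and the total number of downloaded images (last 3 digits of your student ID).

- Output: print the URL of every downloaded image to the console, save the images in an images subfolder, and include screenshots.

- Gitee folder link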
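The page and image caps can be derived from the student ID and enforced with a small counter. This is a minimal sketch, assuming the ID is a digit string; `crawl_limits` and `ImageQuota` are hypothetical helpers, not part of Scrapy. Inside a spider, `quota.accept(url)` would be checked before yielding each image URL, and the page cap could feed a setting such as `CLOSESPIDER_PAGECOUNT`.

```python
def crawl_limits(student_id: str) -> tuple[int, int]:
    """Return (max_pages, max_images) from the last 2 and last 3 digits of an ID."""
    digits = "".join(ch for ch in student_id if ch.isdigit())
    if len(digits) < 3:
        raise ValueError("student ID must contain at least 3 digits")
    return int(digits[-2:]), int(digits[-3:])


class ImageQuota:
    """Accept each image URL at most once and stop after max_images URLs."""

    def __init__(self, max_images: int) -> None:
        self.max_images = max_images
        self.accepted: list[str] = []
        self._seen: set[str] = set()

    def accept(self, url: str) -> bool:
        # Reject duplicates and anything beyond the quota
        if url in self._seen or len(self.accepted) >= self.max_images:
            return False
        self._seen.add(url)
        self.accepted.append(url)
        return True


pages, images = crawl_limits("102202132")  # hypothetical student ID
print(pages, images)  # 32 132
```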

✒️(2) Code and screenshots

- Multi-threaded version

import scrapy
from ..items import Work1Item


class MySpider(scrapy.Spider):
    # Spider name: the value passed to `scrapy crawl` when running the spider
    name = 'MySpider'

    # Scrapy already downloads requests concurrently on its single-threaded Twisted
    # event loop; the degree of parallelism is set with CONCURRENT_REQUESTS (and the
    # per-domain cap, which defaults to 8). process_request() is only called on
    # downloader middlewares, so a thread pool driven from a spider method never runs.
    custom_settings = {
        "CONCURRENT_REQUESTS": 16,
        "CONCURRENT_REQUESTS_PER_DOMAIN": 16,
    }

    # Build start_urls: pages 1 and 2 of the Amazon.cn search results for "书包" (school bag)
    start_urls = []
    for i in range(1, 3):
        url = f"https://www.amazon.cn/s?k=%E4%B9%A6%E5%8C%85&page={i}&__mk_zh_CN=%E4%BA%9A%E9%A9%AC%E9%80%8A%E7%BD%91%E7%AB%99&crid=1RAID9NTPCARM&qid=1698238172&sprefix=%E4%B9%A6%E5%8C%85%2Caps%2C154&ref=sr_pg_{i}"
        start_urls.append(url)

    def parse(self, response):
        # Extract the src attribute of every <img> on the page
        src = response.xpath('//img/@src').extract()
        print(src)  # show the image URLs in the console
        img = Work1Item(src=src)
        yield img
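Inside a spider, Scrapy's own concurrency makes an extra thread pool unnecessary. If OS threads are wanted anyway, for example to download the collected image URLs in a separate step, a minimal standard-library sketch follows. `download_images` is a hypothetical helper, and `fetch` is passed in so the pool logic can be tested without network access.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def download_images(urls: Iterable[str], fetch: Callable[[str], T],
                    max_workers: int = 4, limit: Optional[int] = None) -> dict[str, T]:
    """Fetch each distinct URL on a thread pool; return {url: result} in input order."""
    unique = list(dict.fromkeys(urls))  # drop duplicates, keep first-seen order
    if limit is not None:
        unique = unique[:limit]  # apply the image quota before any download starts
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs fetch concurrently but yields results in input order
        results = list(pool.map(fetch, unique))
    return dict(zip(unique, results))
```

A real `fetch` could be `lambda u: urllib.request.urlopen(u, timeout=10).read()`, with the returned bytes then written into the images folder.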

- Single-threaded version

import scrapy
from ..items import Work1Item


class MySpider(scrapy.Spider):
    # Spider name
    name = 'MySpider'

    # Keep only one request in flight at a time (no process_request() method is needed)
    custom_settings = {"CONCURRENT_REQUESTS": 1}

    # Build start_urls
    start_urls = []
    for i in range(1, 3):
        url = f"https://www.amazon.cn/s?k=%E4%B9%A6%E5%8C%85&page={i}&__mk_zh_CN=%E4%BA%9A%E9%A9%AC%E9%80%8A%E7%BD%91%E7%AB%99&crid=1RAID9NTPCARM&qid=1698238172&sprefix=%E4%B9%A6%E5%8C%85%2Caps%2C154&ref=sr_pg_{i}"
        start_urls.append(url)

    def parse(self, response):
        # Extract the image URLs
        src = response.xpath('//img/@src').extract()
        img = Work1Item(src=src)
        yield img
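The single- and multi-threaded variants can differ purely in settings, and Scrapy's built-in ImagesPipeline can do the actual saving into images. The sketch below is a hypothetical helper that builds a `custom_settings` dict. It assumes that Work1Item's `src` field holds absolute URLs (relative ones would first need `response.urljoin`) and that Pillow is installed, which ImagesPipeline requires.

```python
def image_spider_settings(concurrent: bool, max_pages: int) -> dict:
    """Build a custom_settings dict for the image spider (hypothetical helper)."""
    return {
        # 1 = one request in flight at a time; 16 is Scrapy's default global limit
        "CONCURRENT_REQUESTS": 16 if concurrent else 1,
        # The per-domain limit defaults to 8, so raise it too when all pages share one site
        "CONCURRENT_REQUESTS_PER_DOMAIN": 16 if concurrent else 1,
        # Let Scrapy's built-in ImagesPipeline download and store the images
        "ITEM_PIPELINES": {"scrapy.pipelines.images.ImagesPipeline": 1},
        "IMAGES_STORE": "images",    # files are saved under ./images
        "IMAGES_URLS_FIELD": "src",  # read the URL list from item["src"]
        # CloseSpider extension: stop after this many responses
        "CLOSESPIDER_PAGECOUNT": max_pages,
    }
```

In a spider this would read `custom_settings = image_spider_settings(concurrent=False, max_pages=32)`.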

🧾(3) Reflections

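Building the MySpider class gave me a clearer picture of Scrapy's core components and how they run. The name attribute is the spider's unique identifier, and building the start_urls list taught me how to define the set of pages a crawl starts from, which is the foundation of the whole spider. The parse method holds the core logic for extracting data and following links. Writing XPath expressions there to pull the image URLs and next-page links out of the page's HTML structure greatly improved my grasp of web data extraction and showed me how powerful and convenient XPath is for structured pages.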
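The values returned by `//img/@src` are raw attribute strings. They can be relative, protocol-relative (`//host/...`) or inline `data:` URIs, so they usually need normalizing before download. The following is a minimal standard-library sketch of that step. `normalize_image_urls` is a hypothetical helper; inside Scrapy, `response.urljoin` does the same resolution as `urljoin` here.

```python
from urllib.parse import urljoin, urlparse


def normalize_image_urls(page_url: str, srcs: list[str]) -> list[str]:
    """Resolve src values against the page URL, drop data: URIs and duplicates."""
    out: list[str] = []
    seen: set[str] = set()
    for src in srcs:
        src = src.strip()
        if not src or src.startswith("data:"):
            continue  # inline images have nothing to download
        absolute = urljoin(page_url, src)  # handles "/a.png", "a.png" and "//cdn/a.png"
        if urlparse(absolute).scheme not in ("http", "https"):
            continue
        if absolute not in seen:
            seen.add(absolute)
            out.append(absolute)
    return out
```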

🎊Assignment ②

🕸️(1) Assignment requirements

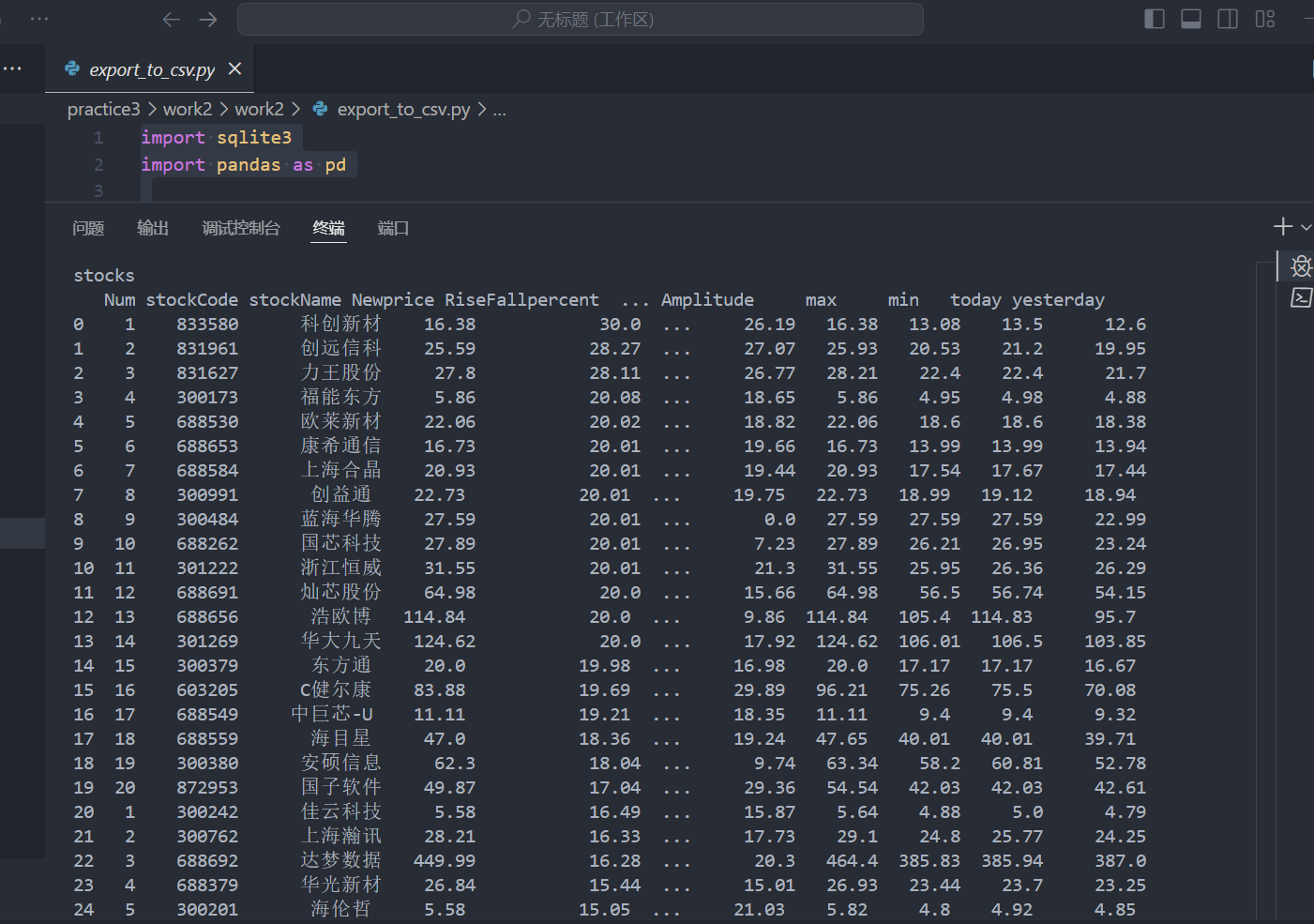

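- Requirement: become proficient with serializing output through Scrapy Items and Pipelines; crawl stock information using the Scrapy + XPath + MySQL storage stack.

- Candidate sites: Eastmoney (https://www.eastmoney.com/) and Sina Finance stocks (http://finance.sina.com.cn/stock/)

- Output:

Store the data in MySQL and output it in the following format:

Column names should be in English, e.g. id for the serial number and bStockNo for the stock code; design the rest yourself.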

| No. | Stock code | Stock name | Latest price | Change % | Change | Volume | Amplitude | High | Low | Open | Prev. close |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 688093 | N世华 | 28.47 | +10.92% | +2.99 | 760 million | 22.34% | 32.00 | 28.08 | 30.20 | 17.55 |
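Apart from id and bStockNo, the requirement leaves the English column names open. One possible schema is sketched below with Python's built-in sqlite3 as a stand-in for MySQL (a MySQL driver such as PyMySQL uses %s placeholders instead of ?). Every column name other than id and bStockNo is a hypothetical choice. Numeric columns are typed REAL, so percentages and units have to be stripped before insertion.

```python
import sqlite3

# (name, type) pairs; only "id" and "bStockNo" come from the requirement
COLUMNS = [
    ("id", "INTEGER PRIMARY KEY"),
    ("bStockNo", "TEXT"),       # stock code
    ("bStockName", "TEXT"),     # stock name
    ("fLatestPrice", "REAL"),
    ("fChangePct", "REAL"),     # change in percent, e.g. 10.92 for +10.92%
    ("fChange", "REAL"),
    ("fVolume", "REAL"),
    ("fAmplitude", "REAL"),
    ("fHigh", "REAL"),
    ("fLow", "REAL"),
    ("fOpen", "REAL"),
    ("fPrevClose", "REAL"),
]


def create_stock_table(con: sqlite3.Connection) -> None:
    cols = ", ".join(f"{name} {sqltype}" for name, sqltype in COLUMNS)
    con.execute(f"CREATE TABLE IF NOT EXISTS stocks ({cols})")


def insert_stocks(con: sqlite3.Connection, rows) -> None:
    placeholders = ", ".join("?" for _ in COLUMNS)
    with con:  # commits on success, rolls back on error
        con.executemany(f"INSERT INTO stocks VALUES ({placeholders})", rows)
```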

🎄(2) Code and screenshots

Main code

- 爬起股票.py (stock scraper)

import json
import re
import sqlite3

import requests


def getHtml(url):
    # Browser-like headers: a desktop User-Agent plus cookies from a real browser session
    header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36 Edg/117.0.2045.47",
        "Cookie": "qgqp_b_id=4a3c0dd089eb5ffa967fcab7704d27cd; st_si=19699330068294; st_asi=delete; st_pvi=76265126887030; st_sp=2021-12-18%2022%3A56%3A16; st_inirUrl=https%3A%2F%2Fcn.bing.com%2F; st_sn=2; st_psi=20231007141245108-113200301321-7681547675"}
    resp = requests.get(url, headers=header)
    html = resp.text
    return html


def getContent(html, start=0):
    # Capture the stock objects between "diff":[ and the closing ] of the JSONP response
    stocks = re.findall(r'"diff":\[(.*?)\]', html)
    # Decode them as a JSON array (safer than eval; a single stock still yields a list)
    stocks = json.loads("[" + stocks[0] + "]")
    num = start  # continue the serial number across pages
    result = []
    for stock in stocks:
        num += 1
        daima = stock["f12"]          # stock code
        name = stock["f14"]           # stock name
        newprice = stock["f2"]        # latest price
        diefu = stock["f3"]           # change %
        dieer = stock["f4"]           # change amount
        chengjiaoliang = stock["f5"]  # volume
        chengjiaoer = stock["f6"]     # turnover amount
        zhenfu = stock["f7"]          # amplitude
        high = stock["f15"]           # day high
        low = stock["f16"]            # day low
        today = stock["f17"]          # today's open
        yesterday = stock["f18"]      # previous close
        result.append([num, daima, name, newprice, diefu, dieer, chengjiaoliang,
                       chengjiaoer, zhenfu, high, low, today, yesterday])
    return result


class stockDB:
    def openDB(self):
        self.con = sqlite3.connect("stocks.db")
        self.cursor = self.con.cursor()
        try:
            self.cursor.execute("create table stocks (Num varchar(16), stockCode varchar(16),stockName varchar(16),Newprice varchar(16),RiseFallpercent varchar(16),RiseFall varchar(16),Turnover varchar(16),Dealnum varchar(16),Amplitude varchar(16),max varchar(16),min varchar(16),today varchar(16),yesterday varchar(16))")
        except sqlite3.OperationalError:
            # The table already exists: clear the rows left by the previous run
            self.cursor.execute("delete from stocks")

    def closeDB(self):
        self.con.commit()
        self.con.close()

    def insert(self, Num, stockcode, stockname, newprice, risefallpercent, risefall,
               turnover, dealnum, Amplitude, high, low, today, yesterday):
        try:
            self.cursor.execute(
                "insert into stocks(Num,stockCode,stockName,Newprice,RiseFallpercent,RiseFall,Turnover,Dealnum,Amplitude,max,min,today,yesterday) values (?,?,?,?,?,?,?,?,?,?,?,?,?)",
                (Num, stockcode, stockname, newprice, risefallpercent, risefall,
                 turnover, dealnum, Amplitude, high, low, today, yesterday))
        except Exception as err:
            print(err)


# Header row; chr(12288), a full-width space, is the fill character that keeps the Chinese columns aligned
s = "{0:}\t{1:{13}^8}\t{2:{13}^10}\t{3:{13}^10}\t{4:{13}^10}\t{5:{13}^10}\t{6:{13}^10}\t{7:{13}^10}\t{8:{13}^10}\t{9:{13}^10}\t{10:{13}^10}\t{11:{13}^10}\t{12:{13}^10}"
print(s.format("序号","股票代码","股票名称","最新价","涨跌幅","涨跌额","成交量","成交额","振幅","最高","最低","今开","昨收",chr(12288)))

stockdb = stockDB()  # create the database object
stockdb.openDB()     # open the database

for page in range(1, 3):
    url = "http://45.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124030395806868839914_1696659472380&pn=" + str(page)+ "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1696659472381"
    html = getHtml(url)
    stocks = getContent(html, start=(page - 1) * 20)  # pz=20 rows per page
    for stock in stocks:
        print(s.format(*stock, chr(12288)))
        stockdb.insert(*stock)  # store the row in the database

stockdb.closeDB()  # commit and close
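Instead of cutting the `diff` array out with a regular expression, the whole response can be decoded as JSON once the JSONP wrapper `jQuery...(...);` is removed. In the sketch below, the nesting `data` → `diff` and the use of `"-"` for missing values (e.g. suspended stocks) are assumptions about the Eastmoney API. `parse_jsonp`, `extract_diff` and `to_number` are hypothetical helpers.

```python
import json


def parse_jsonp(text: str) -> dict:
    """Decode a JSONP body such as cb({...}); plain JSON passes through unchanged."""
    text = text.strip()
    if text[:1] not in ("{", "["):
        # JSONP: keep what lies between the first "(" and the last ")"
        text = text[text.index("(") + 1 : text.rindex(")")]
    return json.loads(text)


def extract_diff(text: str) -> list[dict]:
    """Return the list of stock dicts, assuming they live under data.diff."""
    payload = parse_jsonp(text)
    data = payload.get("data") or {}  # data may be null past the last page
    return data.get("diff") or []


def to_number(value):
    """Convert an API value to float; placeholders such as "-" become None."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None
```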

- run.py

from scrapy import cmdline

cmdline.execute("scrapy crawl MySpider -s LOG_ENABLED=True".split())

Output

🔮(3) Reflections

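I used the requests library with a suitable User-Agent and Cookie to fetch the target page's response, a JSONP payload from the Eastmoney quote API. This taught me how to imitate a browser to get past simple anti-scraping checks and fetch data reliably. I then used the re module to pull the key stock data out of the raw response text. A precise pattern such as "diff":\[(.*?)] efficiently isolates the stock list, which is then turned into structured records. This greatly improved my command of regular expressions and my ability to parse complex text data.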
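The "imitate a browser" step comes down to which headers accompany the request. As a point of comparison, the sketch below swaps in the standard library's urllib for requests and adds a timeout, which getHtml does not set. `build_request` and `fetch_text` are hypothetical helpers.

```python
import urllib.request

BROWSER_HEADERS = {
    # Same idea as getHtml(): present a desktop-browser User-Agent
    "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                   "(KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36"),
}


def build_request(url: str, cookie: str = "") -> urllib.request.Request:
    headers = dict(BROWSER_HEADERS)
    if cookie:
        headers["Cookie"] = cookie  # session cookies copied from a real browser
    return urllib.request.Request(url, headers=headers)


def fetch_text(url: str, cookie: str = "", timeout: float = 10.0) -> str:
    # A timeout keeps one stalled page from hanging the whole crawl
    with urllib.request.urlopen(build_request(url, cookie), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

urllib normalizes header names with `str.capitalize()`, so the stored key is `User-agent`.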

🪸Assignment ③

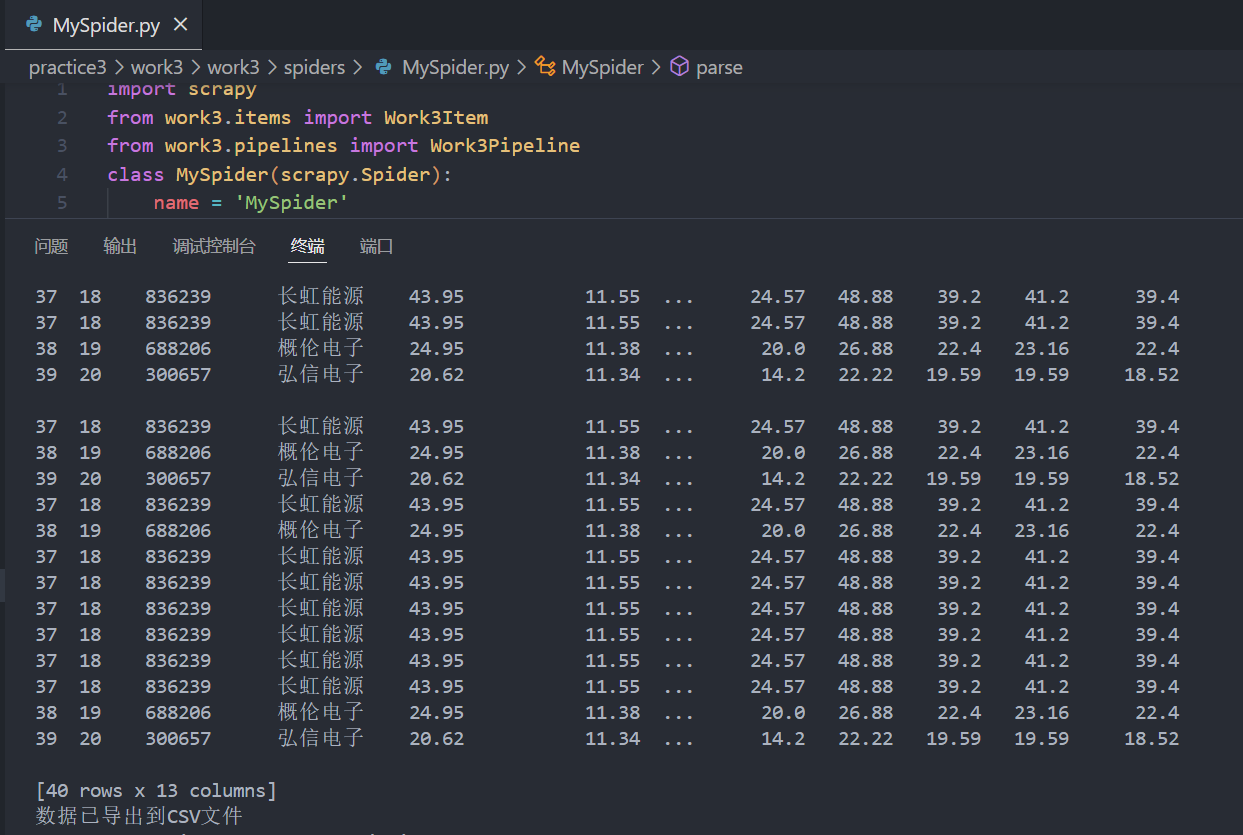

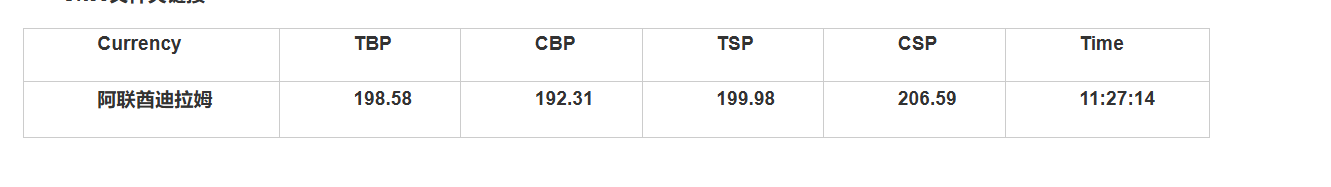

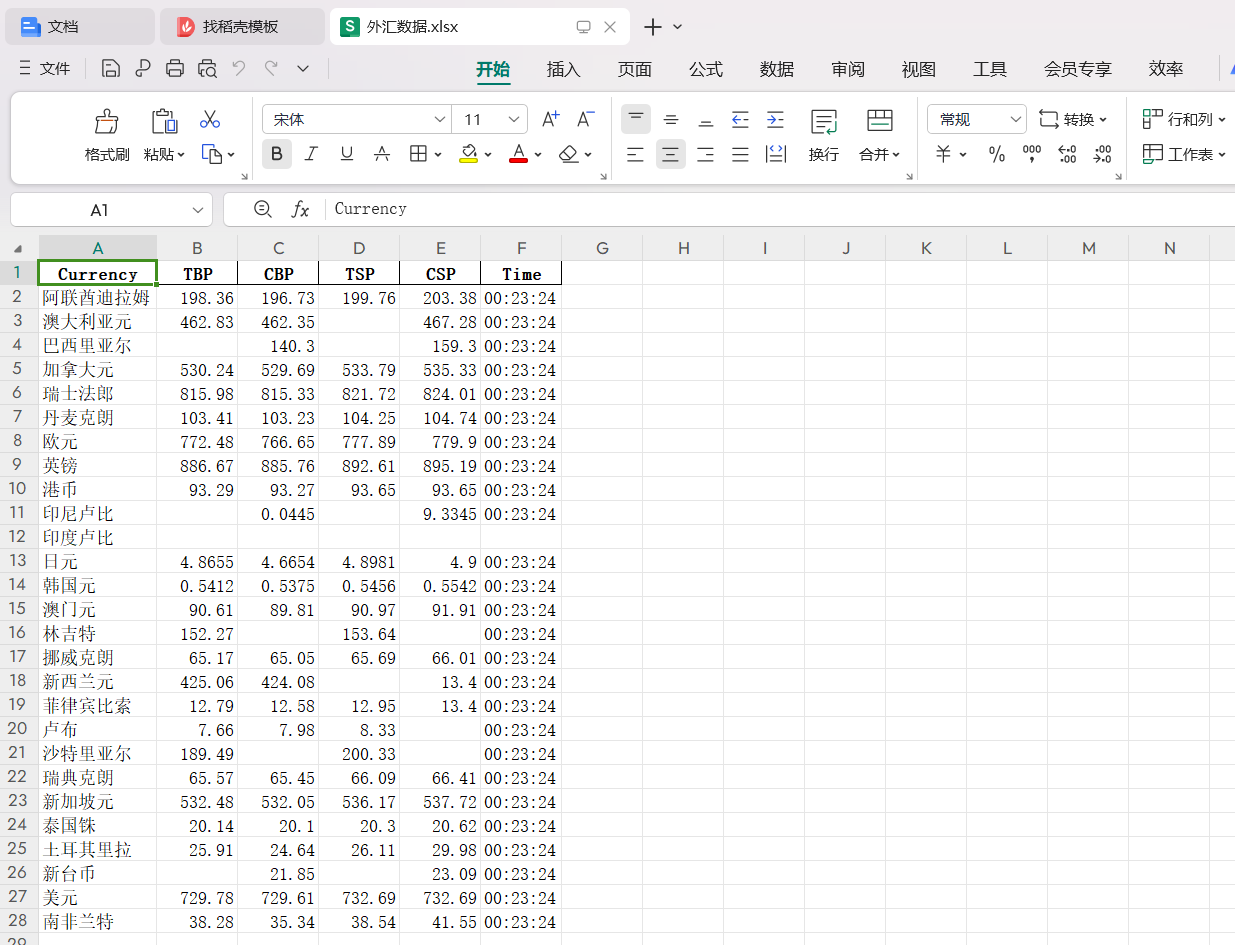

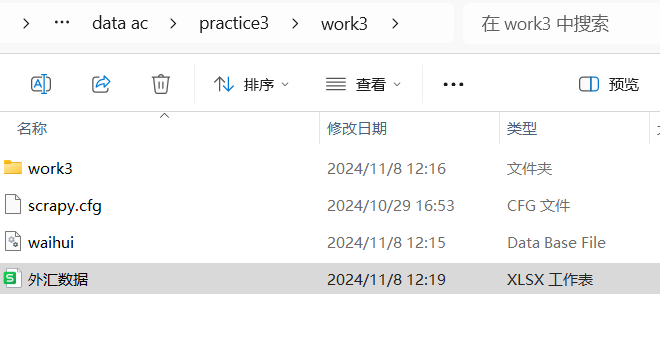

🐻❄️(1) Assignment requirements

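- Requirement: become proficient with serializing output through Scrapy Items and Pipelines; crawl foreign-exchange data using the Scrapy + XPath + MySQL storage stack.

- Candidate site: Bank of China: https://www.boc.cn/sourcedb/whpj/

- Output: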

🔒(2) Code and screenshots

Main code

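- MySpider.py

import scrapy
from work3.items import Work3Item
from work3.pipelines import Work3Pipeline


class MySpider(scrapy.Spider):
    name = 'MySpider'
    start_urls = ["https://www.boc.cn/sourcedb/whpj/"]

    def parse(self, response):
        waihuidb = Work3Pipeline()  # create the database object
        waihuidb.openDB(self)       # open the database; pass the spider instance, not the class
        # Every <tr> except the first one in its table (the header row)
        items = response.xpath('//tr[position()>1]')
        for i in items:
            item = Work3Item()
            item['Currency'] = i.xpath('.//td[1]/text()').get()  # currency name
            item['TBP'] = i.xpath('.//td[2]/text()').get()       # spot (telegraphic) buying price
            item['CBP'] = i.xpath('.//td[3]/text()').get()       # cash buying price
            item['TSP'] = i.xpath('.//td[4]/text()').get()       # spot (telegraphic) selling price
            item['CSP'] = i.xpath('.//td[5]/text()').get()       # cash selling price
            item['Time'] = i.xpath('.//td[8]/text()').get()      # publication time (8th column)
            print(item)
            # Stored here directly; if Work3Pipeline is also listed in ITEM_PIPELINES,
            # Scrapy will call process_item() a second time for every yielded item
            waihuidb.process_item(item, self)
            yield item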
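The more common Scrapy pattern leaves storage entirely to a pipeline. Scrapy calls `open_spider`, `process_item` and `close_spider` on its own once the class is registered, e.g. `ITEM_PIPELINES = {"work3.pipelines.SqliteRatePipeline": 300}`. The class below is a hypothetical sketch of such a pipeline using SQLite. It assumes that each item field is a string or None, as returned by `.get()` on an XPath `text()` selector.

```python
import sqlite3


class SqliteRatePipeline:
    """Hypothetical item pipeline storing Bank of China rates in SQLite."""

    FIELDS = ("Currency", "TBP", "CBP", "TSP", "CSP", "Time")

    def __init__(self, db_path: str = "rates.db") -> None:
        self.db_path = db_path
        self.con = None

    def open_spider(self, spider):
        self.con = sqlite3.connect(self.db_path)
        cols = ", ".join(f"{f} TEXT" for f in self.FIELDS)
        self.con.execute(f"CREATE TABLE IF NOT EXISTS rates ({cols})")
        self.con.execute("DELETE FROM rates")  # start each crawl with an empty table

    def process_item(self, item, spider):
        row = tuple((item.get(f) or "").strip() for f in self.FIELDS)
        if row[0]:  # skip rows without a currency name (e.g. layout rows)
            placeholders = ", ".join("?" for _ in self.FIELDS)
            self.con.execute(f"INSERT INTO rates VALUES ({placeholders})", row)
        return item  # pass the item on to later pipelines

    def close_spider(self, spider):
        self.con.commit()
        self.con.close()
```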

- middlewares.py

from scrapy import signals
from itemadapter import is_item, ItemAdapter


class Work3SpiderMiddleware:
    @classmethod
    def from_crawler(cls, crawler):
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        return None

    def process_spider_output(self, response, result, spider):
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        pass

    def process_start_requests(self, start_requests, spider):
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info("Spider opened: %s" % spider.name)


class Work3DownloaderMiddleware:
    @classmethod
    def from_crawler(cls, crawler):
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        return None

    def process_response(self, request, response, spider):
        return response

    def process_exception(self, request, exception, spider):
        pass

    def spider_opened(self, spider):
        spider.logger.info("Spider opened: %s" % spider.name)
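The template's `process_request` hook is where request-level changes such as a browser User-Agent belong. The following is a hypothetical downloader middleware sketch. `USER_AGENT_LIST` is a made-up custom setting, and the class would be enabled with something like `DOWNLOADER_MIDDLEWARES = {"work3.middlewares.RandomUserAgentMiddleware": 543}`. Scrapy's built-in UserAgentMiddleware runs earlier (priority 500) and only sets a default, so this assignment overrides it.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware that sets a User-Agent on every request."""

    def __init__(self, user_agents):
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        # USER_AGENT_LIST is a hypothetical custom setting defined in settings.py
        return cls(crawler.settings.getlist("USER_AGENT_LIST"))

    def process_request(self, request, spider):
        if self.user_agents:
            request.headers["User-Agent"] = random.choice(self.user_agents)
        return None  # None tells Scrapy to keep processing this request
```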

Screenshots

🗝️(3) Reflections

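In the MySpider class I defined the spider's name and its start URL list start_urls, which fix where the crawl begins. In the parse method, XPath expressions parse the target page (the Bank of China exchange-rate page). They locate each row of the table and extract the currency (Currency), the buying and selling prices (TBP, CBP, TSP, CSP) and the publication time (Time), then pack these fields into a Work3Item object. This deepened my understanding of XPath for web data extraction. It also taught me to adjust the extraction strategy quickly when a page's structure changes, which improved my ability to handle different page layouts.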
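Fixed positions like `td[8]` break when a column is added or moved. A sturdier approach looks up each column's index from its header text first. The sketch below uses the standard library's html.parser instead of XPath to show the idea. `TableRows` and `rows_by_header` are hypothetical helpers, and the header titles in the test are assumed to match the page's column names.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every <th>/<td> cell, grouped by <tr>."""

    def __init__(self):
        super().__init__()
        self.rows: list[list[str]] = []
        self._row = None
        self._cell = None

    def _flush_cell(self):
        if self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._flush_cell()  # tolerate a missing </td>
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._flush_cell()
        elif tag == "tr" and self._row is not None:
            self._flush_cell()
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def rows_by_header(html: str, wanted: dict[str, str]) -> list[dict[str, str]]:
    """Map each data row to {field: value}, finding column positions by header title."""
    parser = TableRows()
    parser.feed(html)
    header, *body = parser.rows
    index = {field: header.index(title) for field, title in wanted.items()}
    return [{f: row[i] for f, i in index.items()} for row in body if len(row) == len(header)]
```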