Android Camera2 HAL3 Study Notes

一、Android Camera整体架构

-

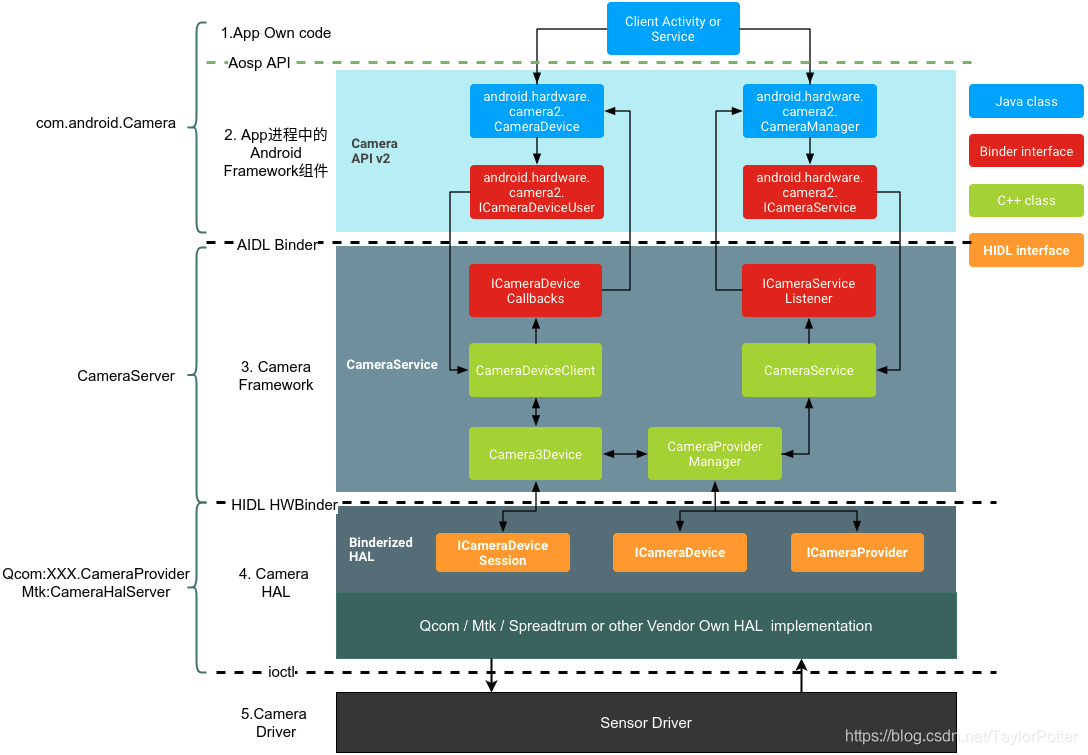

自Android8.0之后大多机型采用Camera API2 HAL3架构,架构分层如下图:

-

Android Camera整体框架主要包括三个进程:app进程、Camera Server进程、hal进程(provider进程)。进程之间的通信都是通过binder实现,其中app和Camera Server通信使用 AIDL(Android Interface Definition Language) ,camera server和hal(provider进程)通信使用HIDL(HAL interface definition language) 。

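1.1 Basic layers of Android Camera

As the figure shows, the camera software on an Android phone is organized into roughly four layers:

Application layer: app developers simply call the interfaces provided by AOSP (the Android Open Source Project), that is, the generic camera API Android offers to applications (Camera API2). These interfaces use Binder to issue operations to, and exchange data with, the camera service (Camera Service) in the Framework layer.

Framework layer: located at frameworks/av/services/camera/libcameraservice/CameraService.cpp. The Framework camera service (Camera Service) sits in the middle: it talks to applications above it and to the HAL layer below it.

- frameworks/av/camera/ provides the implementations of the AIDL interfaces ICameraService, ICameraDeviceUser, ICameraDeviceCallbacks, ICameraServiceListener and others, as well as the main function of camera server.

- AIDL is a Binder-based interface that lets app framework code call into native framework code. Its definitions live under frameworks/av/camera/aidl/android/hardware.

HAL layer: the hardware abstraction layer. Android defines the protocol and interfaces between the Framework service and the HAL; how the HAL is implemented is up to each vendor, for example Qualcomm's older mm-camera architecture and newer CamX architecture, or MediaTek's HAL3 architecture used from Android P onward.

Driver layer: data flows from the hardware into the driver layer, which receives data from the HAL and passes sensor data up to the HAL. Drivers naturally differ from one sensor chip to another.

The main reasons for splitting the architecture (the Android O Treble architecture) into this many layers are clear boundaries, easier upgrades, high cohesion with low coupling, and keeping OEM customizations separate from the Framework. Reference: "AndroidO Treble architecture analysis".

- Android has to accommodate the different configurations and sensors chosen by each handset maker. However the chips change, nothing from the Framework upward needs to change, and apps run unmodified on all of these configurations. The interface the HAL exposes upward is also fixed by Android, so each platform vendor only has to implement a HAL for its own platform against that interface.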

1.2 Android Camera工作大体流程

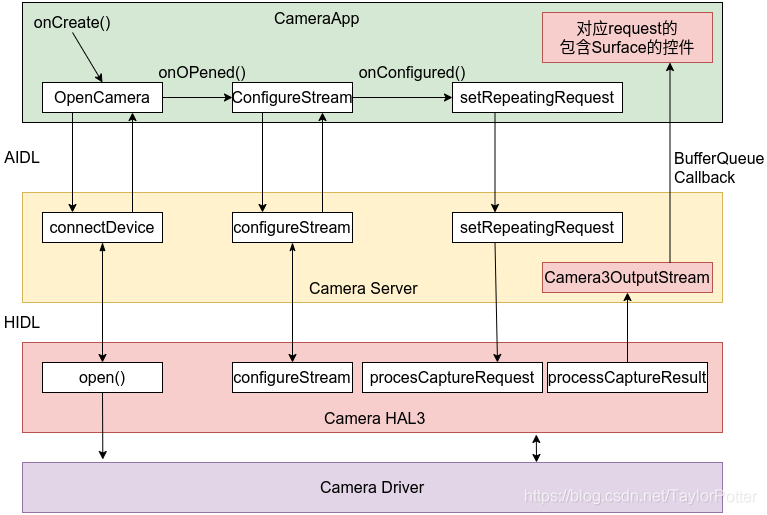

- 上图就是Android Camera整个工作的大体流程表观体现,绿色框中是应用开发者需要做的操作,蓝色为AOSP对其提供的API,黄色为Native Framework Service,紫色为HAL层Service。

-

首先App一般在MainActivity中使用SurfaceView或者SurfaceTexture+TextureView或者GLSurfaceView等控件作为显示预览界面的控件,这些控件的共同点都是包含了一个单独的Surface作为取相机数据的容器。

-

在MainActivity onCreate的时候调用API 去通知Framework Native Service CameraServer需要进行connect HAL,继而打开Camera硬件sensor。

-

openCamera成功会有回调从CameraServer通知到App,再onOpenedCamera或类似回调中去调用类似startPreview的操作。此时会创建CameraCaptureSession,创建过程中会向CameraServer调用ConfigureStream的操作。ConfigureStream的参数中包含了第一步中空间中的Surface的引用,相当于App将Surface容器给到了CameraServer,CameraServer包装了下该Surface容器变成为stream,通过HIDL传递给HAL,继而HAL也做ConfigureStream操作。

-

ConfigureStream成功后CameraServer会给App回调通知ConfigStream成功,接下来App便会调用AOS的 setRepeatingRequest接口给到CameraServer,CameraServer初始化时便起来了一个死循环线程等待来接收Request。

-

CameraServer将request交到Hal层去处理,得到HAL处理结果后取出该Request的处理Result中的Buffer填到App给到的容器中,SetRepeatingRequest如果是预览,则交给Preview的Surface容器;如果是Capture Request则将收到的Buffer交给ImageReader的Surface容器。

-

Surface本质上是BufferQueue的使用者和封装,,当CameraServer中App设置来的Surface容器被填满了BufferQueue机制将会通知到应用,此时App中控件取出各自容器中的内容消费掉,Preview控件中的Surface中的内容将通过View提供到SurfaceFlinger中进行合成最终显示出来,即预览;而ImageReader中的Surface被填了,则App将会取出保存成图片文件消费掉。

-

录制视频工作先暂时放着不研究:可参考[Android][MediaRecorder] Android MediaRecorder框架简洁梳理
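The producer/consumer hand-off described in these steps can be modelled in a few lines of plain Java. This is only a rough sketch: `FrameQueueSketch`, `queueBuffer` and `acquireBuffer` are hypothetical names chosen for illustration, a bounded `ArrayBlockingQueue` stands in for the real BufferQueue, and strings stand in for graphic buffers.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical stand-in for a Surface: a bounded queue of frame buffers.
final class FrameQueueSketch {
    private final BlockingQueue<String> queue = new ArrayBlockingQueue<>(3);

    // Producer side (the role CameraServer plays): returns false when the queue is full.
    boolean queueBuffer(String frame) {
        return queue.offer(frame);
    }

    // Consumer side (the role the preview widget or ImageReader plays): null when empty.
    String acquireBuffer() {
        return queue.poll();
    }

    public static void main(String[] args) {
        FrameQueueSketch surface = new FrameQueueSketch();
        for (int i = 0; i < 4; i++) {
            System.out.println("queue frame-" + i + ": " + surface.queueBuffer("frame-" + i));
        }
        System.out.println("acquired: " + surface.acquireBuffer());
    }
}
```

The bounded capacity is the point of the sketch: if the consumer does not acquire and release frames quickly enough, the producer runs out of free slots.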

- The flow above can be summarized in the following figure:

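2. Overview of Each Android Camera Layer

2.1 Camera app layer

The application layer is what app developers care about: using the components AOSP exposes to build a camera app that users can see and use. The main interfaces and key points are covered in the official Android documentation: Android Developers / Docs / Guides / Camera.

App developers follow the interfaces defined by the AOSP API: open the camera, set basic camera parameters, send requests, and display the received data in the UI or save it to storage.

App developers do not need to know how many cameras the phone has, what brand they are, how they are combined, or which camera is on or off in a particular mode. They use the AOSP interfaces to send commands to the CameraServer process in the Framework layer through AIDL Binder calls, and fetch data from the CameraServer process.

With essentially one preview widget and one widget for saving photos, the basic procedure is:

- openCamera: powers up the sensor.

- configureStream: hands the Surface containers of widgets such as GLSurfaceView and ImageReader to CameraServer.

- request: preview uses setRepeatingRequest and a single shot can use capture; both essentially submit a request to CameraServer.

- CameraServer fills the result Buffer of each Request into the corresponding Surface. Afterwards the BufferQueue mechanism calls back into the callback handler of the corresponding Surface widget in the application layer, so the Surface in the app now holds the data needed to display the preview or save the picture.

Two main classes:

- (1) CameraManager: the system service manager for detecting, connecting to, and describing camera devices. It manages all CameraDevice instances and reaches CameraService through ICameraService.

- (2) CameraDevice: the abstract representation of a single camera connected to the Android device. It reaches CameraDeviceClient through ICameraDeviceUser. CameraDevice is an abstract class; CameraDeviceImpl.java extends CameraDevice.java and implements its abstract methods.

The four most important steps when operating the camera:

- CameraManager-->openCamera ---> open the camera

- CameraDeviceImpl-->createCaptureSession ---> create a capture session

- CameraCaptureSession-->setRepeatingRequest ---> start the preview

- CameraDeviceImpl-->capture ---> capture a picture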

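2.2 Camera Framework layer overview

Native framework:

- Code path: frameworks/av/camera/. It provides the implementations of the AIDL interfaces ICameraService, ICameraDeviceUser, ICameraDeviceCallbacks, ICameraServiceListener and others, as well as the main function of camera server.

- AIDL is a Binder-based interface that lets app framework code call into native framework code. Its definitions live under frameworks/av/camera/aidl/android/hardware.

- Its main classes:

- (1) ICameraService is the interface to the camera service, used to request connections, add listeners, and so on.

- (2) ICameraDeviceUser is the interface to a specific opened camera device. The application framework accesses the device through it.

- (3) ICameraServiceListener and ICameraDeviceCallbacks are the callbacks from CameraService and CameraDevice, respectively, to the application framework.

Camera Service

- Code path: frameworks/av/services/camera/. CameraServer sits in the middle: upward it offers the AOSP interface services to the application layer, and downward it talks directly to the HAL. In practice CameraServer itself rarely causes problems; most issues originate in the app layer or the HAL layer.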

2.2.1 CameraServer initialization

2.2.2 Operations the app invokes on CameraServer

2.2.2.1 open Camera:

2.2.2.2 configurestream:

2.2.2.3 preview and capture request:

2.2.2.4 flush and close:

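2.3 Camera HAL3 subsystem

Android's camera hardware abstraction layer (HAL) connects the higher-level camera framework APIs in android.hardware.camera2 to the underlying camera driver and hardware. Android 8.0 introduced Treble, which switched the camera HAL API to a stable interface defined in the HAL Interface Description Language (HIDL).

The app issues requests to the camera subsystem, and each request corresponds to one set of results. A request contains all configuration information: resolution and pixel format; manual sensor, lens, and flash controls; 3A operating modes; RAW-to-YUV processing controls; and statistics generation. Multiple requests can be in flight at once, and submitting a request does not block. Requests are always processed in the order they are received.

As the figure shows, a request carries its data containers (Surfaces) into the framework cameraserver, where each is wrapped in a Camera3OutputStream instance. Within a CameraCaptureSession the request is packaged as a HAL request and handed to the HAL. Once the HAL has produced the data it returns it to CameraServer, that is, the CaptureResult is reported to the Framework, and the framework cameraserver places the data from the HAL into the Surface behind each stream. These Surfaces come from the Surface-backed widgets in the application layer, so this is how the app receives data from the camera subsystem.

HAL3 passes events and data using CaptureRequest and CaptureResult; each Request has one corresponding Result.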
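The request/result contract (non-blocking submission, strict in-order processing, one result per request) can be illustrated with a small plain-Java model. This is a sketch under stated assumptions, not the framework's implementation: `RequestPipelineSketch` and `submit` are hypothetical names, strings stand in for request settings and results, and a single worker thread plays the role of the camera pipeline.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class RequestPipelineSketch implements AutoCloseable {
    // A single worker thread keeps processing strictly in submission order.
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private long nextFrameNumber = 0;

    // Returns immediately; exactly one result arrives later through the future.
    synchronized CompletableFuture<String> submit(String settings) {
        final long frame = nextFrameNumber++;
        return CompletableFuture.supplyAsync(
                () -> "result#" + frame + "[" + settings + "]", worker);
    }

    @Override
    public void close() {
        worker.shutdown();
    }

    public static void main(String[] args) {
        try (RequestPipelineSketch pipeline = new RequestPipelineSketch()) {
            List<CompletableFuture<String>> pending = new ArrayList<>();
            for (String settings : new String[] {"preview", "preview", "still"}) {
                pending.add(pipeline.submit(settings)); // does not block
            }
            for (CompletableFuture<String> result : pending) {
                System.out.println(result.join());
            }
        }
    }
}
```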

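The above is the stock Android definition of HAL3. Each chip vendor provides its own implementation against these interfaces, e.g. Qualcomm's mm-camera and CamX, or MediaTek's mtkcam hal3.

The HAL3 interfaces are defined under /hardware/interfaces/camera/.

- A walkthrough of the HAL code and its architecture is left for the end: Qcom HAL3 CamX architecture study.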

三、Android Camera源码探索

3.1 Camera2 API

-

Camera api部分:frameworks/base/core/java/android/hardware/camera2

-

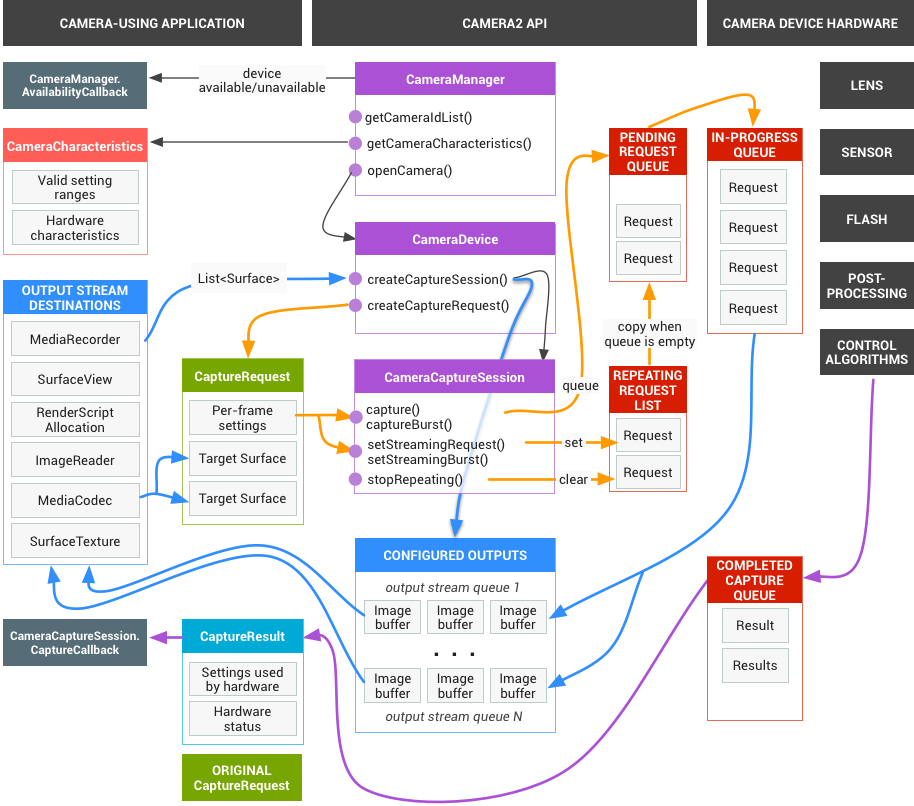

camera2 api关系图:

-

android.hardware.camera2开发包给开发者提供了一个操作相机的开发包,是api-21提供的,用于替代之前的Camera操作控类。该软件包将摄像机设备建模为管道,它接收捕获单个帧的输入请求,根据请求捕获单个图像,然后输出一个捕获结果元数据包,以及一组请求的输出图像缓冲区。请求按顺序处理,多个请求可以立即进行。由于相机设备是具有多个阶段的管道,因此需要在移动中处理多个捕捉请求以便在大多数Android设备上保持完全帧率。

-

Camera2 API中主要涉及以下几个关键类:

- CameraManager:摄像头管理器,用于打开和关闭系统摄像头

- CameraCharacteristics:描述摄像头的各种特性,我们可以通过CameraManager的getCameraCharacteristics(@NonNull String cameraId)方法来获取。

- CameraDevice:描述系统摄像头,类似于早期的Camera。

- CameraCaptureSession:Session类,当需要拍照、预览等功能时,需要先创建该类的实例,然后通过该实例里的方法进行控制(例如:拍照 capture())。

- CaptureRequest:描述了一次操作请求,拍照、预览等操作都需要先传入CaptureRequest参数,具体的参数控制也是通过CameraRequest的成员变量来设置。

- CaptureResult:描述拍照完成后的结果。

-

如果要操作相机设备,需要获取CameraManager实例。CameraDevices提供了一系列静态属性集合来描述camera设备,提供camera可供设置的属性和设备的输出参数。描述这些属性的是CameraCharacteristics实例,就是CameraManager的getCameraCharacteristics(String)方法。

-

为了捕捉或者流式话camera设备捕捉到的图片信息,应用开发者必须创建一个CameraCaptureSession,这个camera session中包含了一系列相机设备的输出surface集合。目标的surface一般通过SurfaceView, SurfaceTexture via Surface(SurfaceTexture), MediaCodec, MediaRecorder, Allocation, and ImageReader.

-

camera 预览界面一般使用SurfaceView或者TextureView,,捕获的图片数据buffers可以通过ImageReader读取。

- TextureView可用于显示内容流。这样的内容流可以例如是视频或OpenGL场景。内容流可以来自应用程序的进程以及远程进程。

- TextureView只能在硬件加速窗口中使用。在软件中渲染时,TextureView将不会绘制任何内容。

- TextureView不会创建单独的窗口,但表现为常规视图。这一关键差异允许TextureView移动,转换和使用动画

-

之后,应用程序需要构建CaptureRequest,在捕获单个图片的时候,这些request请求需要定义一些请求的参数。

-

一旦设置了请求,就可以将其传递到活动捕获会话,以进行一次捕获或无休止地重复使用。两种方法还具有接受用作突发捕获/重复突发的请求列表的变体。重复请求的优先级低于捕获,因此在配置重复请求时通过capture()提交的请求将在当前重复(突发)捕获的任何新实例开始捕获之前捕获。
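The priority rule between one-shot and repeating requests can be sketched as a tiny scheduler. This is a simplified model, not the framework's code: `RequestSchedulerSketch` and `nextRequest` are hypothetical names, and strings stand in for CaptureRequest objects.

```java
import java.util.ArrayDeque;
import java.util.Deque;

final class RequestSchedulerSketch {
    private final Deque<String> captureQueue = new ArrayDeque<>();
    private String repeating; // null means no repeating request is set

    void setRepeatingRequest(String request) { repeating = request; }

    void stopRepeating() { repeating = null; }

    void capture(String request) { captureQueue.addLast(request); }

    // Picks the request for the next frame: queued one-shot captures first,
    // otherwise another instance of the repeating request, otherwise null (idle).
    String nextRequest() {
        String oneShot = captureQueue.pollFirst();
        return oneShot != null ? oneShot : repeating;
    }

    public static void main(String[] args) {
        RequestSchedulerSketch scheduler = new RequestSchedulerSketch();
        scheduler.setRepeatingRequest("preview");
        System.out.println(scheduler.nextRequest());
        scheduler.capture("still");
        System.out.println(scheduler.nextRequest());
        System.out.println(scheduler.nextRequest());
    }
}
```

In this model a still capture submitted during preview preempts exactly one preview frame slot, after which the repeating request resumes on its own.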

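After processing a request, the camera device produces a TotalCaptureResult object, which contains information about the state of the camera device at capture time and the final settings used. These may differ somewhat from the request if rounding or resolving contradictory parameters was necessary. The camera device also sends a frame of image data into each output Surface included in the request. These are produced asynchronously relative to the output CaptureResult, sometimes substantially later.

The Camera2 capture flow is shown in the figure below:

- The developer creates CaptureRequests to issue capture requests to the camera. These requests are queued for the camera to process, and the camera returns the outcome to the developer wrapped in a CaptureResult. The whole flow takes place within a CameraCaptureSession.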

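3.1.1 Opening the camera

- Before opening the camera, first obtain the CameraManager, then the list of cameras, and from that the parameters of each camera (mainly the front and back cameras).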

mCameraManager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);

try {

final String[] ids = mCameraManager.getCameraIdList();

numberOfCameras = ids.length;

for (String id : ids) {

final CameraCharacteristics characteristics = mCameraManager.getCameraCharacteristics(id);

final int orientation = characteristics.get(CameraCharacteristics.LENS_FACING);

if (orientation == CameraCharacteristics.LENS_FACING_FRONT) {

faceFrontCameraId = id;

faceFrontCameraOrientation = characteristics.get(CameraCharacteristics.SENSOR_ORIENTATION);

frontCameraCharacteristics = characteristics;

} else {

faceBackCameraId = id;

faceBackCameraOrientation = characteristics.get(CameraCharacteristics.SENSOR_ORIENTATION);

backCameraCharacteristics = characteristics;

}

}

} catch (Exception e) {

Log.e(TAG, "Error during camera initialize", e);

}

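Like Camera, Camera2 has the notion of a cameraId. mCameraManager.getCameraIdList() returns the list of cameraIds, and mCameraManager.getCameraCharacteristics(id) returns the parameters of the camera behind each id.

The CameraCharacteristics keys used most often are:

- LENS_FACING: whether the camera is front-facing (LENS_FACING_FRONT) or back-facing (LENS_FACING_BACK).

- SENSOR_ORIENTATION: the orientation of the camera sensor, i.e. the clockwise angle by which the output image must be rotated to be upright.

- FLASH_INFO_AVAILABLE: whether a flash is available.

- CameraCharacteristics.INFO_SUPPORTED_HARDWARE_LEVEL: the level of camera features the device supports.

In practice, not every Camera2 feature is available on every vendor's Android device. Use characteristics.get(CameraCharacteristics.INFO_SUPPORTED_HARDWARE_LEVEL) and check the return value to find the supported level:

- INFO_SUPPORTED_HARDWARE_LEVEL_FULL: full hardware support, allowing manual control of full-HD capture, burst mode, and other new features.

- INFO_SUPPORTED_HARDWARE_LEVEL_LIMITED: limited support; individual capabilities have to be queried separately.

- INFO_SUPPORTED_HARDWARE_LEVEL_LEGACY: supported by all devices; the feature set matches that of the deprecated Camera API.

Using INFO_SUPPORTED_HARDWARE_LEVEL we can decide whether to use Camera or Camera2, as follows: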

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

public static boolean hasCamera2(Context mContext) {

if (mContext == null) return false;

if (Build.VERSION.SDK_INT < Build.VERSION_CODES.LOLLIPOP) return false;

try {

CameraManager manager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);

String[] idList = manager.getCameraIdList();

boolean notFull = true;

if (idList.length == 0) {

notFull = false;

} else {

for (final String str : idList) {

if (str == null || str.trim().isEmpty()) {

notFull = false;

break;

}

final CameraCharacteristics characteristics = manager.getCameraCharacteristics(str);

final int supportLevel = characteristics.get(CameraCharacteristics.INFO_SUPPORTED_HARDWARE_LEVEL);

if (supportLevel == CameraCharacteristics.INFO_SUPPORTED_HARDWARE_LEVEL_LEGACY) {

notFull = false;

break;

}

}

}

return notFull;

} catch (Throwable ignore) {

return false;

}

}
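One pitfall when comparing hardware levels is that the numeric values of the constants are not in capability order, so a plain `>=` on the raw values gives wrong answers. The sketch below ranks the levels explicitly. It is a plain-Java illustration: `HardwareLevelSketch` and `isAtLeast` are hypothetical names, the constants mirror the public INFO_SUPPORTED_HARDWARE_LEVEL_* values as I understand them (LIMITED = 0, FULL = 1, LEGACY = 2, LEVEL_3 = 3), LEVEL_3 is not mentioned in the text above, and the EXTERNAL level is left out for brevity.

```java
final class HardwareLevelSketch {
    // Assumed to mirror the values of CameraCharacteristics.INFO_SUPPORTED_HARDWARE_LEVEL_*.
    static final int LIMITED = 0;
    static final int FULL = 1;
    static final int LEGACY = 2;
    static final int LEVEL_3 = 3;

    // Levels listed from least to most capable.
    private static final int[] ASCENDING = {LEGACY, LIMITED, FULL, LEVEL_3};

    private static int rank(int level) {
        for (int i = 0; i < ASCENDING.length; i++) {
            if (ASCENDING[i] == level) {
                return i;
            }
        }
        throw new IllegalArgumentException("Unknown hardware level " + level);
    }

    // True when deviceLevel offers at least the capabilities of requiredLevel.
    static boolean isAtLeast(int deviceLevel, int requiredLevel) {
        return rank(deviceLevel) >= rank(requiredLevel);
    }

    public static void main(String[] args) {
        System.out.println(isAtLeast(LEGACY, LIMITED)); // LEGACY is numerically larger but less capable
        System.out.println(isAtLeast(FULL, LIMITED));
    }
}
```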

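Opening the camera mainly means calling mCameraManager.openCamera(currentCameraId, stateCallback, backgroundHandler), which takes three parameters:

- String cameraId: the unique ID of the camera.

- CameraDevice.StateCallback callback: the callbacks related to opening the camera.

- Handler handler: the Handler on which the StateCallback is invoked; passing null uses the current thread's Looper.

mCameraManager.openCamera(currentCameraId, stateCallback, backgroundHandler);

CameraDevice.StateCallback, mentioned above, is the callback for opening the camera. It defines callback methods for opened, disconnected, error, and so on, and we perform the corresponding actions inside them.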

private CameraDevice.StateCallback stateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(@NonNull CameraDevice cameraDevice) {

// Keep a reference to the opened CameraDevice

mCameraDevice = cameraDevice;

}

@Override

public void onDisconnected(@NonNull CameraDevice cameraDevice) {

// Close the CameraDevice

cameraDevice.close();

}

@Override

public void onError(@NonNull CameraDevice cameraDevice, int error) {

// Close the CameraDevice

cameraDevice.close();

}

};

3.1.2 Closing the camera

- To close the camera, simply call close() on the CameraDevice:

cameraDevice.close();

3.1.3 Starting the preview

Camera2 always works by creating requests and sessions. Specifically:

- Call mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW) to create a CaptureRequest.

- Call mCameraDevice.createCaptureSession() to create a CameraCaptureSession.

CaptureRequest.Builder createCaptureRequest(@RequestTemplate int templateType)

The templateType parameter of createCaptureRequest() selects the request type. There are six types:

- TEMPLATE_PREVIEW: a request for preview.

- TEMPLATE_STILL_CAPTURE: a request suited to still image capture, where image quality takes priority over frame rate.

- TEMPLATE_RECORD: a request for video recording.

- TEMPLATE_VIDEO_SNAPSHOT: a request for taking a still snapshot while recording video.

- TEMPLATE_ZERO_SHUTTER_LAG: a request suited to zero-shutter-lag capture, maximizing image quality without affecting the preview frame rate.

- TEMPLATE_MANUAL: a basic capture request with all automatic controls disabled (auto-exposure, auto-white-balance, auto-focus).

createCaptureSession(@NonNull List<Surface> outputs, @NonNull CameraCaptureSession.StateCallback callback, @Nullable Handler handler)

createCaptureSession() takes three parameters:

- List<Surface> outputs: the list of Surfaces to output to.

- CameraCaptureSession.StateCallback callback: the session state callbacks.

- Handler handler: the Handler on which the callback is invoked; pass null to use the current thread's Looper.

The callback methods of CameraCaptureSession.StateCallback:

- onConfigured(@NonNull CameraCaptureSession session); the camera has finished configuring and can process capture requests.

- onConfigureFailed(@NonNull CameraCaptureSession session); configuration failed.

- onReady(@NonNull CameraCaptureSession session); the session is ready and has no requests left to process.

- onActive(@NonNull CameraCaptureSession session); the camera is processing requests.

- onClosed(@NonNull CameraCaptureSession session); the session has been closed.

- onSurfacePrepared(@NonNull CameraCaptureSession session, @NonNull Surface surface); the Surface has been prepared.

With that background, creating the preview request is straightforward.

previewRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

previewRequestBuilder.addTarget(workingSurface);

// Note: besides the preview Surface we also add imageReader.getSurface(), which receives the image data once a picture is taken

mCameraDevice.createCaptureSession(Arrays.asList(workingSurface, imageReader.getSurface()),

new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {

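try {

// Keep the session and build the preview request (assumes captureSession and previewRequest are fields of the enclosing class)

captureSession = cameraCaptureSession;

previewRequest = previewRequestBuilder.build();

cameraCaptureSession.setRepeatingRequest(previewRequest, captureCallback, backgroundHandler);

} catch (CameraAccessException e) {

Log.e(TAG, "Failed to start the repeating preview request", e);

}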

}

@Override

public void onConfigureFailed(@NonNull CameraCaptureSession cameraCaptureSession) {

Log.d(TAG, "Fail while starting preview: ");

}

}, null);

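Note that onConfigured() calls cameraCaptureSession.setRepeatingRequest(previewRequest, captureCallback, backgroundHandler), which is what keeps the preview running continuously.

As mentioned above, imageReader.getSurface() is added so that the image data can be retrieved once a picture has been taken. To do so, set an OnImageAvailableListener on the ImageReader and fetch the image in its onImageAvailable() method.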

mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, mBackgroundHandler);

private final ImageReader.OnImageAvailableListener mOnImageAvailableListener

= new ImageReader.OnImageAvailableListener() {

@Override

public void onImageAvailable(ImageReader reader) {

// When an image becomes available, acquire it and save it

mBackgroundHandler.post(new ImageSaver(reader.acquireNextImage(), mFile));

}

};

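3.1.4 Stopping the preview

- Stopping the preview means closing the current preview session. Building on the preview code above, it looks like this: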

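if (captureSession != null) {

try {

// Abort pending captures before closing; abortCaptures() on an already closed session throws IllegalStateException

captureSession.abortCaptures();

} catch (Exception ignore) {

} finally {

captureSession.close();

captureSession = null;

}

}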

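3.1.5 Taking a picture

- Taking a picture consists of three steps:

Focus

- A CameraCaptureSession.CaptureCallback handles the results returned for the focus request.

Capture

- A captureStillPicture() method takes the picture.

Unlock focus

- After the picture is taken, the focus lock must be released so the camera returns to the preview state.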

// Focus

private CameraCaptureSession.CaptureCallback captureCallback = new CameraCaptureSession.CaptureCallback() {

@Override

public void onCaptureProgressed(@NonNull CameraCaptureSession session,

@NonNull CaptureRequest request,

@NonNull CaptureResult partialResult) {

}

@Override

public void onCaptureCompleted(@NonNull CameraCaptureSession session,

@NonNull CaptureRequest request,

@NonNull TotalCaptureResult result) {

// Wait for focus

final Integer afState = result.get(CaptureResult.CONTROL_AF_STATE);

if (afState == null) {

// No AF state is available, so capture directly

captureStillPicture();

} else if (CaptureResult.CONTROL_AF_STATE_FOCUSED_LOCKED == afState

|| CaptureResult.CONTROL_AF_STATE_NOT_FOCUSED_LOCKED == afState

|| CaptureResult.CONTROL_AF_STATE_INACTIVE == afState

|| CaptureResult.CONTROL_AF_STATE_PASSIVE_SCAN == afState) {

Integer aeState = result.get(CaptureResult.CONTROL_AE_STATE);

if (aeState == null ||

aeState == CaptureResult.CONTROL_AE_STATE_CONVERGED) {

previewState = STATE_PICTURE_TAKEN;

// Focus is done; take the picture

captureStillPicture();

} else {

runPreCaptureSequence();

}

}

}

};

// Take the picture

private void captureStillPicture() {

try {

if (null == mCameraDevice) {

return;

}

// Build the CaptureRequest used for the still capture

final CaptureRequest.Builder captureBuilder =

mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE);

captureBuilder.addTarget(imageReader.getSurface());

// Use the same AE and AF modes as the preview

captureBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// Set the JPEG orientation

captureBuilder.set(CaptureRequest.JPEG_ORIENTATION, getPhotoOrientation(mCameraConfigProvider.getSensorPosition()));

// Create the capture callback

CameraCaptureSession.CaptureCallback CaptureCallback = new CameraCaptureSession.CaptureCallback() {

@Override

public void onCaptureCompleted(@NonNull CameraCaptureSession session,

@NonNull CaptureRequest request,

@NonNull TotalCaptureResult result) {

Log.d(TAG, "onCaptureCompleted: ");

}

};

// Stop the repeating preview request

captureSession.stopRepeating();

// Capture the photo

captureSession.capture(captureBuilder.build(), CaptureCallback, null);

} catch (CameraAccessException e) {

Log.e(TAG, "Error during capturing picture");

}

}

// Unlock focus

try {

// Reset the auto-focus trigger

previewRequestBuilder.set(CaptureRequest.CONTROL_AF_TRIGGER, CameraMetadata.CONTROL_AF_TRIGGER_CANCEL);

captureSession.capture(previewRequestBuilder.build(), captureCallback, backgroundHandler);

// Return the camera to the normal preview state

previewState = STATE_PREVIEW;

// Resume the repeating preview request

captureSession.setRepeatingRequest(previewRequest, captureCallback, backgroundHandler);

} catch (Exception e) {

Log.e(TAG, "Error during focus unlocking");

}
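The focus, capture, unlock sequence above is easier to follow as an explicit state machine. The following plain-Java sketch is a simplification under stated assumptions: `StillCaptureStateSketch`, its enums and `onResult` are hypothetical names, the enums only mirror a subset of the CONTROL_AF_STATE_* and CONTROL_AE_STATE_* values, and unlike the callback above it treats only the two locked AF states as "focus finished".

```java
final class StillCaptureStateSketch {
    enum State { PREVIEW, WAITING_LOCK, WAITING_PRECAPTURE, PICTURE_TAKEN }
    enum Af { INACTIVE, PASSIVE_SCAN, ACTIVE_SCAN, FOCUSED_LOCKED, NOT_FOCUSED_LOCKED }
    enum Ae { SEARCHING, CONVERGED, PRECAPTURE }

    private State state = State.PREVIEW;

    State state() { return state; }

    // Step 1: the app triggers auto-focus and waits for the lock.
    void lockFocus() { state = State.WAITING_LOCK; }

    // Step 3: after the picture is taken, return to preview.
    void unlockFocus() { state = State.PREVIEW; }

    // Step 2: feed one capture result in; returns true when the still capture should fire.
    // A null af or ae models a device that does not report that state.
    boolean onResult(Af af, Ae ae) {
        switch (state) {
            case WAITING_LOCK:
                if (af == null) {
                    state = State.PICTURE_TAKEN;
                    return true;
                }
                if (af == Af.FOCUSED_LOCKED || af == Af.NOT_FOCUSED_LOCKED) {
                    if (ae == null || ae == Ae.CONVERGED) {
                        state = State.PICTURE_TAKEN;
                        return true;
                    }
                    state = State.WAITING_PRECAPTURE; // exposure still needs to settle
                }
                return false;
            case WAITING_PRECAPTURE:
                if (ae == null || ae == Ae.CONVERGED) {
                    state = State.PICTURE_TAKEN;
                    return true;
                }
                return false;
            default:
                return false;
        }
    }

    public static void main(String[] args) {
        StillCaptureStateSketch machine = new StillCaptureStateSketch();
        machine.lockFocus();
        System.out.println(machine.onResult(Af.ACTIVE_SCAN, Ae.SEARCHING));
        System.out.println(machine.onResult(Af.FOCUSED_LOCKED, Ae.CONVERGED));
        machine.unlockFocus();
        System.out.println(machine.state());
    }
}
```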

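3.1.6 Starting and stopping video recording

- This part is set aside for now.

3.2 Android Camera internals: the openCamera module (part 1)

- For app development on the Java side it is enough to know how to call the API and bring up the camera. To understand how the API works internally, however, we have to follow the Framework code downward step by step. This section starts from the public API function openCamera and looks at how the lower layers work once the camera is brought up.

3.2.1 CameraManager

- CameraManager is one of the services in the local SystemService collection and is registered in SystemServiceRegistry: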

registerService(Context.CAMERA_SERVICE, CameraManager.class,

new CachedServiceFetcher<CameraManager>() {

@Override

public CameraManager createService(ContextImpl ctx) {

return new CameraManager(ctx);

}});

- SystemServiceRegistry uses two HashMaps to store the local SystemService data. Note that these are not Binder services; they are ordinary in-process manager objects gathered into one local registry for easier management.

private static final HashMap<Class<?>, String> SYSTEM_SERVICE_NAMES =

new HashMap<Class<?>, String>();

private static final HashMap<String, ServiceFetcher<?>> SYSTEM_SERVICE_FETCHERS =

new HashMap<String, ServiceFetcher<?>>();
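The two-map registry with lazily created, cached services can be sketched in plain Java as follows. This is a simplified illustration rather than the AOSP code: `ServiceRegistrySketch` and `CachedFetcher` are hypothetical names, and the cache here is global, whereas the CachedServiceFetcher shown above creates its service from a ContextImpl.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

final class ServiceRegistrySketch {
    // Creates the service on first use and returns the same instance afterwards.
    private static final class CachedFetcher<T> {
        private final Supplier<T> factory;
        private T cached;

        CachedFetcher(Supplier<T> factory) { this.factory = factory; }

        synchronized T get() {
            if (cached == null) {
                cached = factory.get();
            }
            return cached;
        }
    }

    // Mirrors the two maps: class -> name, and name -> fetcher.
    private final Map<Class<?>, String> names = new HashMap<>();
    private final Map<String, CachedFetcher<?>> fetchers = new HashMap<>();

    <T> void register(String name, Class<T> type, Supplier<T> factory) {
        names.put(type, name);
        fetchers.put(name, new CachedFetcher<>(factory));
    }

    Object getService(String name) {
        CachedFetcher<?> fetcher = fetchers.get(name);
        return fetcher == null ? null : fetcher.get();
    }

    String getServiceName(Class<?> type) { return names.get(type); }

    public static void main(String[] args) {
        ServiceRegistrySketch registry = new ServiceRegistrySketch();
        registry.register("camera", StringBuilder.class, StringBuilder::new);
        System.out.println(registry.getService("camera") == registry.getService("camera"));
        System.out.println(registry.getServiceName(StringBuilder.class));
    }
}
```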

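3.2.2 The openCamera function

- CameraManager has two openCamera(...) overloads. One takes a Handler and the other an Executor, which lets the camera callbacks be dispatched on a thread pool.

- cameraId: identifies the camera to open.

- callback: a state callback that is triggered when the camera has been opened.

- handler: the Handler on which the callback is invoked.

- executor: the Executor (thread pool) on which the callback is invoked.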

public void openCamera(@NonNull String cameraId,

@NonNull final CameraDevice.StateCallback callback, @Nullable Handler handler)

public void openCamera(@NonNull String cameraId,

@NonNull @CallbackExecutor Executor executor,

@NonNull final CameraDevice.StateCallback callback)

- Let's walk through the openCamera call flow:

3.2.2.1 The openCameraDeviceUserAsync function

private CameraDevice openCameraDeviceUserAsync(String cameraId,

CameraDevice.StateCallback callback, Executor executor, final int uid)

throws CameraAccessException

{

//......

}

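The return value is a CameraDevice. As explained in the Camera2 API section on the relationships between the main camera framework classes, CameraDevice is abstract and CameraDeviceImpl is its implementation, so the goal here is to obtain a CameraDeviceImpl instance.

The main job of this function is to fetch the camera device information from the lower layers and obtain a device instance for the given cameraId. Its work breaks down into five steps:

- Get the device information of the camera identified by cameraId.

- Create a CameraDeviceImpl instance from that device information.

- Call the remote CameraService to obtain the remote interface for this camera.

- Set the remote interface on the CameraDeviceImpl instance.

- Return the CameraDeviceImpl instance.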

3.2.2.2 Getting the device information of the camera identified by cameraId

CameraCharacteristics characteristics = getCameraCharacteristics(cameraId);

- A single call whose return value is a CameraCharacteristics. CameraCharacteristics exposes the properties of a CameraDevice and is queried through getCameraCharacteristics:

public CameraCharacteristics getCameraCharacteristics(@NonNull String cameraId)

throws CameraAccessException {

CameraCharacteristics characteristics = null;

if (CameraManagerGlobal.sCameraServiceDisabled) {

throw new IllegalArgumentException("No cameras available on device");

}

synchronized (mLock) {

ICameraService cameraService = CameraManagerGlobal.get().getCameraService();

if (cameraService == null) {

throw new CameraAccessException(CameraAccessException.CAMERA_DISCONNECTED,

"Camera service is currently unavailable");

}

try {

if (!supportsCamera2ApiLocked(cameraId)) {

int id = Integer.parseInt(cameraId);

String parameters = cameraService.getLegacyParameters(id);

CameraInfo info = cameraService.getCameraInfo(id);

characteristics = LegacyMetadataMapper.createCharacteristics(parameters, info);

} else {

CameraMetadataNative info = cameraService.getCameraCharacteristics(cameraId);

characteristics = new CameraCharacteristics(info);

}

} catch (ServiceSpecificException e) {

throwAsPublicException(e);

} catch (RemoteException e) {

throw new CameraAccessException(CameraAccessException.CAMERA_DISCONNECTED,

"Camera service is currently unavailable", e);

}

}

return characteristics;

}

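- A key function here is supportsCamera2ApiLocked(cameraId), which reports whether the camera service supports the camera2 API for this camera: true if it does, false otherwise. It delegates to supportsCameraApiLocked(cameraId, API_VERSION_2):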

private boolean supportsCameraApiLocked(String cameraId, int apiVersion) {

/*

* Possible return values:

* - NO_ERROR => CameraX API is supported

* - CAMERA_DEPRECATED_HAL => CameraX API is *not* supported (thrown as an exception)

* - Remote exception => If the camera service died

*

* Anything else is an unexpected error we don't want to recover from.

*/

try {

ICameraService cameraService = CameraManagerGlobal.get().getCameraService();

// If no camera service, no support

if (cameraService == null) return false;

return cameraService.supportsCameraApi(cameraId, apiVersion);

} catch (RemoteException e) {

// Camera service is now down, no support for any API level

}

return false;

}

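The CameraService being called corresponds to ICameraService.aidl; the implementing class is declared in frameworks/av/services/camera/libcameraservice/CameraService.h.

The figure below shows how CameraManager connects to CameraService:

CameraManagerGlobal is a nested class of CameraManager. The server side lives in the native layer. As noted in the Camera2 API overview, cameraservice sits in frameworks/av/services/camera/libcameraservice/ and builds into the shared library libcameraservice.so. To get familiar with the camera code, start with the code that defines the camera architecture.

What is checked here is which HAL version the camera is based on; see the switch statement below:

- If the device is based on HAL1.0, HAL3.0, or HAL3.1, the API is supported only when apiVersion is not API_VERSION_2. Note that API_VERSION_2 is not API level 2; it distinguishes camera1 from camera2.

- If the device is based on HAL3.2, HAL3.3, or HAL3.4, the API is supported.

- On current Android versions, camera2 is normally supported.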

Status CameraService::supportsCameraApi(const String16& cameraId, int apiVersion,

/*out*/ bool *isSupported) {

ATRACE_CALL();

const String8 id = String8(cameraId);

ALOGV("%s: for camera ID = %s", __FUNCTION__, id.string());

switch (apiVersion) {

case API_VERSION_1:

case API_VERSION_2:

break;

default:

String8 msg = String8::format("Unknown API version %d", apiVersion);

ALOGE("%s: %s", __FUNCTION__, msg.string());

return STATUS_ERROR(ERROR_ILLEGAL_ARGUMENT, msg.string());

}

int deviceVersion = getDeviceVersion(id);

switch(deviceVersion) {

case CAMERA_DEVICE_API_VERSION_1_0:

case CAMERA_DEVICE_API_VERSION_3_0:

case CAMERA_DEVICE_API_VERSION_3_1:

if (apiVersion == API_VERSION_2) {

ALOGV("%s: Camera id %s uses HAL version %d <3.2, doesn't support api2 without shim",

__FUNCTION__, id.string(), deviceVersion);

*isSupported = false;

} else { // if (apiVersion == API_VERSION_1) {

ALOGV("%s: Camera id %s uses older HAL before 3.2, but api1 is always supported",

__FUNCTION__, id.string());

*isSupported = true;

}

break;

case CAMERA_DEVICE_API_VERSION_3_2:

case CAMERA_DEVICE_API_VERSION_3_3:

case CAMERA_DEVICE_API_VERSION_3_4:

ALOGV("%s: Camera id %s uses HAL3.2 or newer, supports api1/api2 directly",

__FUNCTION__, id.string());

*isSupported = true;

break;

case -1: {

String8 msg = String8::format("Unknown camera ID %s", id.string());

ALOGE("%s: %s", __FUNCTION__, msg.string());

return STATUS_ERROR(ERROR_ILLEGAL_ARGUMENT, msg.string());

}

default: {

String8 msg = String8::format("Unknown device version %x for device %s",

deviceVersion, id.string());

ALOGE("%s: %s", __FUNCTION__, msg.string());

return STATUS_ERROR(ERROR_INVALID_OPERATION, msg.string());

}

}

return Status::ok();

}
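The decision made by the second switch can be condensed into a small truth function. The Java below is a hedged restatement, not a port: `ApiSupportSketch` is a hypothetical name, the HAL version is passed as a (major, minor) pair instead of the CAMERA_DEVICE_API_VERSION_* constants, the values 1 and 2 for the API versions are assumptions, and the sketch treats every version from 3.2 upward as supported, whereas the native code only lists 3.2 to 3.4 and reports an error for anything else.

```java
final class ApiSupportSketch {
    // Assumed to correspond to API_VERSION_1 and API_VERSION_2 in the service interface.
    static final int API_VERSION_1 = 1;
    static final int API_VERSION_2 = 2;

    // halMajor/halMinor describe the device HAL version, e.g. 3 and 2 for HAL3.2.
    static boolean supportsCameraApi(int halMajor, int halMinor, int apiVersion) {
        if (apiVersion != API_VERSION_1 && apiVersion != API_VERSION_2) {
            throw new IllegalArgumentException("Unknown API version " + apiVersion);
        }
        boolean olderThanHal32 = halMajor < 3 || (halMajor == 3 && halMinor < 2);
        if (olderThanHal32) {
            // Older HALs: api1 is always supported, api2 is not supported without a shim.
            return apiVersion == API_VERSION_1;
        }
        // HAL3.2 or newer supports api1 and api2 directly.
        return true;
    }

    public static void main(String[] args) {
        System.out.println(supportsCameraApi(3, 1, API_VERSION_2));
        System.out.println(supportsCameraApi(3, 2, API_VERSION_2));
    }
}
```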

- With the camera2 API, the device information is fetched as follows:

CameraMetadataNative info = cameraService.getCameraCharacteristics(cameraId);

characteristics = new CameraCharacteristics(info);

- Here DeviceInfo3 is CameraProviderManager::ProviderInfo::DeviceInfo3, a struct inside CameraProviderManager. What is ultimately returned is a CameraMetadata, which is a Parcelable type. Its native code is frameworks/av/camera/include/camera/CameraMetadata.h and its Java counterpart is frameworks/base/core/java/android/hardware/camera2/impl/CameraMetadataNative.java. Parcelable types can be passed across processes. The native code below aliases CameraMetadata as CameraMetadataNative:

namespace hardware {

namespace camera2 {

namespace impl {

using ::android::CameraMetadata;

typedef CameraMetadata CameraMetadataNative;

}

}

}

- One function in this call path deserves a closer look:

status_t CameraProviderManager::getCameraCharacteristicsLocked(const std::string &id,

CameraMetadata* characteristics) const {

auto deviceInfo = findDeviceInfoLocked(id, /*minVersion*/ {3,0}, /*maxVersion*/ {4,0});

if (deviceInfo == nullptr) return NAME_NOT_FOUND;

return deviceInfo->getCameraCharacteristics(characteristics);

}

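- This calls findDeviceInfoLocked(...), which returns a pointer to a DeviceInfo struct. CameraProviderManager.h defines three DeviceInfo structs: the base DeviceInfo plus DeviceInfo1 and DeviceInfo3, both of which derive from DeviceInfo. DeviceInfo1 holds the HALv1-specific fields and DeviceInfo3 the HALv3-specific fields; both provide basic information about a camera device. Here is findDeviceInfoLocked(...):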

CameraProviderManager::ProviderInfo::DeviceInfo* CameraProviderManager::findDeviceInfoLocked(

const std::string& id,

hardware::hidl_version minVersion, hardware::hidl_version maxVersion) const {

for (auto& provider : mProviders) {

for (auto& deviceInfo : provider->mDevices) {

if (deviceInfo->mId == id &&

minVersion <= deviceInfo->mVersion && maxVersion >= deviceInfo->mVersion) {

return deviceInfo.get();

}

}

}

return nullptr;

}
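The lookup is a nested linear search with an inclusive version-range filter. A plain-Java restatement may make the comparison easier to read. All names here (`DeviceLookupSketch`, `Provider`, `DeviceInfo`, `find`) are hypothetical stand-ins, and versions are modelled as (major, minor) pairs compared lexicographically, which is how I assume the hidl_version comparison behaves.

```java
import java.util.Arrays;
import java.util.List;

final class DeviceLookupSketch {
    static final class DeviceInfo {
        final String id;
        final int major;
        final int minor;

        DeviceInfo(String id, int major, int minor) {
            this.id = id;
            this.major = major;
            this.minor = minor;
        }
    }

    static final class Provider {
        final List<DeviceInfo> devices;

        Provider(DeviceInfo... devices) { this.devices = Arrays.asList(devices); }
    }

    // Lexicographic comparison of two (major, minor) versions.
    private static int compare(int aMajor, int aMinor, int bMajor, int bMinor) {
        return aMajor != bMajor ? Integer.compare(aMajor, bMajor) : Integer.compare(aMinor, bMinor);
    }

    // Returns the first device with a matching id whose version lies in [min, max], or null.
    static DeviceInfo find(List<Provider> providers, String id,
                           int minMajor, int minMinor, int maxMajor, int maxMinor) {
        for (Provider provider : providers) {
            for (DeviceInfo device : provider.devices) {
                boolean inRange = compare(minMajor, minMinor, device.major, device.minor) <= 0
                        && compare(maxMajor, maxMinor, device.major, device.minor) >= 0;
                if (device.id.equals(id) && inRange) {
                    return device;
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<Provider> providers = Arrays.asList(
                new Provider(new DeviceInfo("0", 3, 4), new DeviceInfo("1", 1, 0)));
        System.out.println(find(providers, "0", 3, 0, 4, 0) != null);
        System.out.println(find(providers, "1", 3, 0, 4, 0) != null);
    }
}
```

With the {3,0} to {4,0} range used by getCameraCharacteristicsLocked, a HALv1 device is filtered out even when its id matches.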

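- mProviders is a list of ProviderInfo. ProviderInfo is also a struct defined in CameraProviderManager.h, and the three DeviceInfo types above are all defined inside it. The outline of ProviderInfo below omits a lot of code but keeps the core: ProviderInfo manages the camera devices of the phone and stores them in mDevices through addDevice. We look at how addDevice works further below.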

struct ProviderInfo :

virtual public hardware::camera::provider::V2_4::ICameraProviderCallback,

virtual public hardware::hidl_death_recipient

{

//......

ProviderInfo(const std::string &providerName,

sp<hardware::camera::provider::V2_4::ICameraProvider>& interface,

CameraProviderManager *manager);

~ProviderInfo();

status_t initialize();

const std::string& getType() const;

status_t addDevice(const std::string& name,

hardware::camera::common::V1_0::CameraDeviceStatus initialStatus =

hardware::camera::common::V1_0::CameraDeviceStatus::PRESENT,

/*out*/ std::string *parsedId = nullptr);

// ICameraProviderCallbacks interface - these lock the parent mInterfaceMutex

virtual hardware::Return<void> cameraDeviceStatusChange(

const hardware::hidl_string& cameraDeviceName,

hardware::camera::common::V1_0::CameraDeviceStatus newStatus) override;

virtual hardware::Return<void> torchModeStatusChange(

const hardware::hidl_string& cameraDeviceName,

hardware::camera::common::V1_0::TorchModeStatus newStatus) override;

// hidl_death_recipient interface - this locks the parent mInterfaceMutex

virtual void serviceDied(uint64_t cookie, const wp<hidl::base::V1_0::IBase>& who) override;

// Basic device information, common to all camera devices

struct DeviceInfo {

//......

};

std::vector<std::unique_ptr<DeviceInfo>> mDevices;

std::unordered_set<std::string> mUniqueCameraIds;

int mUniqueDeviceCount;

// HALv1-specific camera fields, including the actual device interface

struct DeviceInfo1 : public DeviceInfo {

//......

};

// HALv3-specific camera fields, including the actual device interface

struct DeviceInfo3 : public DeviceInfo {

//......

};

private:

void removeDevice(std::string id);

};

- How is mProviders populated?

1. CameraService --> onFirstRef()

2. CameraService --> enumerateProviders()

3. CameraProviderManager --> initialize(this)

The prototype of initialize(...) is:

status_t initialize(wp<StatusListener> listener, ServiceInteractionProxy *proxy = &sHardwareServiceInteractionProxy);

- The second parameter defaults to sHardwareServiceInteractionProxy.

Where hardware::camera::provider::V2_4::ICameraProvider::getService(serviceName) ends up:

It ends up in ./hardware/interfaces/camera/provider/2.4/default/CameraProvider.cpp. The name passed in is one of the following two:

- const std::string kLegacyProviderName("legacy/0"); the provider that wraps the legacy camera HAL module

- const std::string kExternalProviderName("external/0"); the provider for external cameras

-

// The second parameter defaults to sHardwareServiceInteractionProxy:

struct ServiceInteractionProxy {

virtual bool registerForNotifications(

const std::string &serviceName,

const sp<hidl::manager::V1_0::IServiceNotification>

&notification) = 0;

virtual sp<hardware::camera::provider::V2_4::ICameraProvider> getService(

const std::string &serviceName) = 0;

virtual ~ServiceInteractionProxy() {}

};

// Standard use case - call into the normal generated static methods which invoke

// the real hardware service manager

struct HardwareServiceInteractionProxy : public ServiceInteractionProxy {

virtual bool registerForNotifications(

const std::string &serviceName,

const sp<hidl::manager::V1_0::IServiceNotification>

&notification) override {

return hardware::camera::provider::V2_4::ICameraProvider::registerForNotifications(

serviceName, notification);

}

virtual sp<hardware::camera::provider::V2_4::ICameraProvider> getService(

const std::string &serviceName) override {

return hardware::camera::provider::V2_4::ICameraProvider::getService(serviceName);

}

};

// ICameraProvider

ICameraProvider* HIDL_FETCH_ICameraProvider(const char* name) {

if (strcmp(name, kLegacyProviderName) == 0) {

CameraProvider* provider = new CameraProvider();

if (provider == nullptr) {

ALOGE("%s: cannot allocate camera provider!", __FUNCTION__);

return nullptr;

}

if (provider->isInitFailed()) {

ALOGE("%s: camera provider init failed!", __FUNCTION__);

delete provider;

return nullptr;

}

return provider;

} else if (strcmp(name, kExternalProviderName) == 0) {

ExternalCameraProvider* provider = new ExternalCameraProvider();

return provider;

}

ALOGE("%s: unknown instance name: %s", __FUNCTION__, name);

return nullptr;

}

- How does addDevice work?

- 1. When CameraProviderManager::ProviderInfo::initialize() runs, it enumerates the current camera devices with the code shown in (1).

- This ends up in getCameraIdList in ./hardware/interfaces/camera/provider/2.4/default/CameraProvider.cpp, shown in (2). CAMERA_DEVICE_STATUS_PRESENT means the camera is currently available, and mCameraStatusMap holds the status of every camera device.

// (1)

std::vector<std::string> devices;

hardware::Return<void> ret = mInterface->getCameraIdList([&status, &devices](

Status idStatus,

const hardware::hidl_vec<hardware::hidl_string>& cameraDeviceNames) {

status = idStatus;

if (status == Status::OK) {

for (size_t i = 0; i < cameraDeviceNames.size(); i++) {

devices.push_back(cameraDeviceNames[i]);

}

} });

// (2)

Return<void> CameraProvider::getCameraIdList(getCameraIdList_cb _hidl_cb) {

std::vector<hidl_string> deviceNameList;

for (auto const& deviceNamePair : mCameraDeviceNames) {

if (mCameraStatusMap[deviceNamePair.first] == CAMERA_DEVICE_STATUS_PRESENT) {

deviceNameList.push_back(deviceNamePair.second);

}

}

hidl_vec<hidl_string> hidlDeviceNameList(deviceNameList);

_hidl_cb(Status::OK, hidlDeviceNameList);

return Void();

}
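The filtering done in (2) amounts to "keep the names of devices whose status is PRESENT". A plain-Java sketch of that step follows. The names `CameraIdListSketch`, `Status` and `presentDeviceNames` are hypothetical, the two maps stand in for mCameraDeviceNames and mCameraStatusMap, and the device-name strings are illustrative values only.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class CameraIdListSketch {
    enum Status { NOT_PRESENT, PRESENT, ENUMERATING }

    // Returns the device names whose camera id currently has status PRESENT.
    static List<String> presentDeviceNames(Map<String, String> idToName,
                                           Map<String, Status> idToStatus) {
        List<String> names = new ArrayList<>();
        for (Map.Entry<String, String> entry : idToName.entrySet()) {
            if (idToStatus.get(entry.getKey()) == Status.PRESENT) {
                names.add(entry.getValue());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        Map<String, String> idToName = new LinkedHashMap<>();
        idToName.put("0", "device@3.2/legacy/0");
        idToName.put("1", "device@3.2/legacy/1");
        Map<String, Status> idToStatus = new LinkedHashMap<>();
        idToStatus.put("0", Status.PRESENT);
        idToStatus.put("1", Status.NOT_PRESENT);
        System.out.println(presentDeviceNames(idToName, idToStatus));
    }
}
```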

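- To recap the overall call structure:

- 1. The camera2 API discussed above belongs to the framework layer and runs inside the application process.

- 2. CameraService is the server side that the camera2 API reaches through Binder IPC; all camera operations are carried out on the server side. It lives under ./frameworks/av/services/camera/.

- 3. The server side is itself only a bridge: the service calls into the HAL, the hardware abstraction layer, located at ./hardware/interfaces/camera/provider/2.4.

- 4. The camera driver is the bottom layer and is where the hardware is actually operated.

3.2.2.3 Creating a CameraDeviceImpl instance from the device information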

android.hardware.camera2.impl.CameraDeviceImpl deviceImpl =

new android.hardware.camera2.impl.CameraDeviceImpl(

cameraId,

callback,

executor,

characteristics,

mContext.getApplicationInfo().targetSdkVersion);

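- This creates the CameraDevice instance and passes in the characteristics obtained above, so the camera device information is stored on the instance. The instance is used again later and is discussed further at that point.

3.2.2.4 Calling the remote CameraService to obtain the remote interface for the camera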

// Use cameraservice's cameradeviceclient implementation for HAL3.2+ devices

ICameraService cameraService = CameraManagerGlobal.get().getCameraService();

if (cameraService == null) {

throw new ServiceSpecificException(

ICameraService.ERROR_DISCONNECTED,

"Camera service is currently unavailable");

}

cameraUser = cameraService.connectDevice(callbacks, cameraId,

mContext.getOpPackageName(), uid);

- The main purpose of this call is to connect to the current camera device. It ends up in CameraService::connectDevice.

Status CameraService::connectDevice(

const sp<hardware::camera2::ICameraDeviceCallbacks>& cameraCb,

const String16& cameraId,

const String16& clientPackageName,

int clientUid,

/*out*/

sp<hardware::camera2::ICameraDeviceUser>* device) {

ATRACE_CALL();

Status ret = Status::ok();

String8 id = String8(cameraId);

sp<CameraDeviceClient> client = nullptr;

ret = connectHelper<hardware::camera2::ICameraDeviceCallbacks,CameraDeviceClient>(cameraCb, id,

/*api1CameraId*/-1,

CAMERA_HAL_API_VERSION_UNSPECIFIED, clientPackageName,

clientUid, USE_CALLING_PID, API_2,

/*legacyMode*/ false, /*shimUpdateOnly*/ false,

/*out*/client);

if(!ret.isOk()) {

logRejected(id, getCallingPid(), String8(clientPackageName),

ret.toString8());

return ret;

}

*device = client;

return ret;

}

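- The fifth parameter of connectDevice carries the return value of this Binder IPC call. After connectDevice we obtain a CameraDeviceClient object, which is returned to the application process. Next we look at how this object is produced.

- validateConnectLocked: checks whether the camera device is usable. The check is simple: it only verifies that the device exists.

- handleEvictionsLocked: handles exclusive access to the camera. It deals with cases such as the camera device already being used by another client, or a caller with higher priority competing for it. Only after this function has run can the camera device be considered available, and the service starts retrieving device information.

- CameraFlashlight-->prepareDeviceOpen: the service is about to connect to the camera device. If the device has a usable flashlight, it is prepared here; if the flashlight is already occupied, nothing can be done. This step only serves as a notification.

- getDeviceVersion: determines the version of the camera device. The main logic is in CameraProviderManager::getHighestSupportedVersion, which works out the highest and lowest versions the camera device supports. The camera facing is then checked; there are only two cases, CAMERA_FACING_BACK = 0 and CAMERA_FACING_FRONT = 1. These are all preconditions: only when every check passes is the camera device really usable.

- makeClient: chooses the client according to the current CAMERA_DEVICE_API_VERSION. Current HAL architectures are all based on HALv3, so the client used is CameraDeviceClient.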

Status CameraService::makeClient(const sp<CameraService>& cameraService,

const sp<IInterface>& cameraCb, const String16& packageName, const String8& cameraId,

int api1CameraId, int facing, int clientPid, uid_t clientUid, int servicePid,

bool legacyMode, int halVersion, int deviceVersion, apiLevel effectiveApiLevel,

/*out*/sp<BasicClient>* client) {

if (halVersion < 0 || halVersion == deviceVersion) {

// Default path: HAL version is unspecified by caller, create CameraClient

// based on device version reported by the HAL.

switch(deviceVersion) {

case CAMERA_DEVICE_API_VERSION_1_0:

if (effectiveApiLevel == API_1) { // Camera1 API route

sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());

*client = new CameraClient(cameraService, tmp, packageName,

api1CameraId, facing, clientPid, clientUid,

getpid(), legacyMode);

} else { // Camera2 API route

ALOGW("Camera using old HAL version: %d", deviceVersion);

return STATUS_ERROR_FMT(ERROR_DEPRECATED_HAL,

"Camera device \"%s\" HAL version %d does not support camera2 API",

cameraId.string(), deviceVersion);

}

break;

case CAMERA_DEVICE_API_VERSION_3_0:

case CAMERA_DEVICE_API_VERSION_3_1:

case CAMERA_DEVICE_API_VERSION_3_2:

case CAMERA_DEVICE_API_VERSION_3_3:

case CAMERA_DEVICE_API_VERSION_3_4:

if (effectiveApiLevel == API_1) { // Camera1 API route

sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());

*client = new Camera2Client(cameraService, tmp, packageName,

cameraId, api1CameraId,

facing, clientPid, clientUid,

servicePid, legacyMode);

} else { // Camera2 API route

sp<hardware::camera2::ICameraDeviceCallbacks> tmp =

static_cast<hardware::camera2::ICameraDeviceCallbacks*>(cameraCb.get());

*client = new CameraDeviceClient(cameraService, tmp, packageName, cameraId,

facing, clientPid, clientUid, servicePid);

}

break;

default:

// Should not be reachable

ALOGE("Unknown camera device HAL version: %d", deviceVersion);

return STATUS_ERROR_FMT(ERROR_INVALID_OPERATION,

"Camera device \"%s\" has unknown HAL version %d",

cameraId.string(), deviceVersion);

}

} else {

// A particular HAL version is requested by caller. Create CameraClient

// based on the requested HAL version.

if (deviceVersion > CAMERA_DEVICE_API_VERSION_1_0 &&

halVersion == CAMERA_DEVICE_API_VERSION_1_0) {

// Only support higher HAL version device opened as HAL1.0 device.

sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());

*client = new CameraClient(cameraService, tmp, packageName,

api1CameraId, facing, clientPid, clientUid,

servicePid, legacyMode);

} else {

// Other combinations (e.g. HAL3.x open as HAL2.x) are not supported yet.

ALOGE("Invalid camera HAL version %x: HAL %x device can only be"

" opened as HAL %x device", halVersion, deviceVersion,

CAMERA_DEVICE_API_VERSION_1_0);

return STATUS_ERROR_FMT(ERROR_ILLEGAL_ARGUMENT,

"Camera device \"%s\" (HAL version %d) cannot be opened as HAL version %d",

cameraId.string(), deviceVersion, halVersion);

}

}

return Status::ok();

}
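The branching in makeClient reduces to a small decision table. The sketch below restates it in plain Java under stated assumptions: the class MakeClientSketch, the enum ClientKind and the numeric version codes are illustrative stand-ins, not the AOSP definitions, and every error path is collapsed into a single ERROR value.

```java
final class MakeClientSketch {
    enum ClientKind { CAMERA_CLIENT, CAMERA2_CLIENT, CAMERA_DEVICE_CLIENT, ERROR }

    // Illustrative stand-ins for the CAMERA_DEVICE_API_VERSION_* constants (assumed encoding).
    static final int UNSPECIFIED = -1;
    static final int V1_0 = 0x100;
    static final int V3_0 = 0x300;
    static final int V3_4 = 0x304;

    /** Mirrors the branch structure of makeClient; api1 is true for the Camera1 API route. */
    static ClientKind choose(int halVersion, int deviceVersion, boolean api1) {
        if (halVersion < 0 || halVersion == deviceVersion) {
            // Default path: pick the client from the version reported by the HAL.
            if (deviceVersion == V1_0) {
                return api1 ? ClientKind.CAMERA_CLIENT : ClientKind.ERROR;
            }
            if (deviceVersion >= V3_0 && deviceVersion <= V3_4) {
                return api1 ? ClientKind.CAMERA2_CLIENT : ClientKind.CAMERA_DEVICE_CLIENT;
            }
            return ClientKind.ERROR; // unknown HAL version
        }
        // A specific HAL version was requested: only "open a newer device as HAL1" is allowed.
        if (deviceVersion > V1_0 && halVersion == V1_0) {
            return ClientKind.CAMERA_CLIENT;
        }
        return ClientKind.ERROR;
    }

    public static void main(String[] args) {
        System.out.println(choose(UNSPECIFIED, 0x303, false)); // camera2 API on a HAL3 device
        System.out.println(choose(UNSPECIFIED, 0x303, true));  // camera1 API on a HAL3 device
        System.out.println(choose(V1_0, 0x303, true));         // HAL3 device opened as HAL1
    }
}
```

The first call corresponds to the path taken by connectDevice above, which passes CAMERA_HAL_API_VERSION_UNSPECIFIED and API_2.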

- CameraClient and Camera2Client are the camera client objects used by earlier system versions; CameraDeviceClient is used now.

- BnCamera --> ./frameworks/av/camera/include/camera/android/hardware/ICamera.h

- ICamera --> ./frameworks/av/camera/include/camera/android/hardware/ICamera.h

- BnCameraDeviceUser --> android/hardware/camera2/BnCameraDeviceUser.h, the Binder object generated automatically from ICameraDeviceUser.aidl. The client object finally obtained is therefore an ICameraDeviceUser.Stub object.

3.2.2.5 Setting the obtained remote service on the CameraDeviceImpl instance

deviceImpl.setRemoteDevice(cameraUser);

device = deviceImpl;

- This cameraUser is the ICameraDeviceUser.Stub object set up on the cameraservice side:

public void setRemoteDevice(ICameraDeviceUser remoteDevice) throws CameraAccessException {

synchronized(mInterfaceLock) {

// TODO: Move from decorator to direct binder-mediated exceptions

// If setRemoteFailure already called, do nothing

if (mInError) return;

mRemoteDevice = new ICameraDeviceUserWrapper(remoteDevice);

IBinder remoteDeviceBinder = remoteDevice.asBinder();

// For legacy camera device, remoteDevice is in the same process, and

// asBinder returns NULL.

if (remoteDeviceBinder != null) {

try {

remoteDeviceBinder.linkToDeath(this, /*flag*/ 0);

} catch (RemoteException e) {

CameraDeviceImpl.this.mDeviceExecutor.execute(mCallOnDisconnected);

throw new CameraAccessException(CameraAccessException.CAMERA_DISCONNECTED,

"The camera device has encountered a serious error");

}

}

mDeviceExecutor.execute(mCallOnOpened);

mDeviceExecutor.execute(mCallOnUnconfigured);

}

}

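- mRemoteDevice is the bridge between the application process and the Android camera service. Camera operations issued by the upper layer go through mRemoteDevice to the camera service, which in turn drives the underlying camera driver.

3.2.3 Summary

- Starting from the familiar openCamera function, this section showed how openCamera ties the application to cameraService, and by studying the cameraservice code we saw how the lower layers reach the camera driver through the HAL. Later sections go progressively deeper into the lower layers of the camera stack. Please excuse any shortcomings.

3.3 Android Camera internals: the openCamera module (2)

- openCamera module (1) covered the openCamera call flow and the calls among the four layers involved in the camera module, but left some details unexplained. This section fills in those details to round out the picture of the openCamera module.

- The API section showed how openCamera is called:

manager.openCamera(mCameraId, mStateCallback, mBackgroundHandler);

- Here manager is a CameraManager instance. The previous section covered openCamera fairly thoroughly, but did not look closely at the second parameter, mStateCallback. It is known to be a camera state callback, yet this state matters a great deal: the callback tells the developer what state the camera is in, and only once that state is known can the next step proceed. For example, without knowing whether opening the camera succeeded or failed, nothing further can be done.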

private final CameraDevice.StateCallback mStateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(@NonNull CameraDevice cameraDevice) {

// This method is called when the camera is opened. We start camera preview here.

mCameraOpenCloseLock.release();

mCameraDevice = cameraDevice;

createCameraPreviewSession();

}

@Override

public void onDisconnected(@NonNull CameraDevice cameraDevice) {

mCameraOpenCloseLock.release();

cameraDevice.close();

mCameraDevice = null;

}

@Override

public void onError(@NonNull CameraDevice cameraDevice, int error) {

mCameraOpenCloseLock.release();

cameraDevice.close();

mCameraDevice = null;

Activity activity = getActivity();

if (null != activity) {

activity.finish();

}

}

};

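- openCamera (2) examines how the camera state is called back. (The detailed call flow was covered in the previous section and is not repeated here.)

3.2.1 StateCallback in openCameraDeviceUserAsync

- openCamera calls into openCameraDeviceUserAsync(...), passing its StateCallback along. This parameter and the retrieved CameraCharacteristics are passed together to the CameraDeviceImpl constructor.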

android.hardware.camera2.impl.CameraDeviceImpl deviceImpl =

new android.hardware.camera2.impl.CameraDeviceImpl(

cameraId,

callback,

executor,

characteristics,

mContext.getApplicationInfo().targetSdkVersion);

- However, the callback passed to cameraService is not this one. Look at the code:

ICameraDeviceCallbacks callbacks = deviceImpl.getCallbacks();

//......

cameraUser = cameraService.connectDevice(callbacks, cameraId,

mContext.getOpPackageName(), uid);

- callbacks is a member of the CameraDeviceImpl instance. To see how it relates to the StateCallback we passed in, we have to look inside CameraDeviceImpl.

public CameraDeviceImpl(String cameraId, StateCallback callback, Executor executor,

CameraCharacteristics characteristics, int appTargetSdkVersion) {

if (cameraId == null || callback == null || executor == null || characteristics == null) {

throw new IllegalArgumentException("Null argument given");

}

mCameraId = cameraId;

mDeviceCallback = callback;

mDeviceExecutor = executor;

mCharacteristics = characteristics;

mAppTargetSdkVersion = appTargetSdkVersion;

final int MAX_TAG_LEN = 23;

String tag = String.format("CameraDevice-JV-%s", mCameraId);

if (tag.length() > MAX_TAG_LEN) {

tag = tag.substring(0, MAX_TAG_LEN);

}

TAG = tag;

Integer partialCount =

mCharacteristics.get(CameraCharacteristics.REQUEST_PARTIAL_RESULT_COUNT);

if (partialCount == null) {

// 1 means partial result is not supported.

mTotalPartialCount = 1;

} else {

mTotalPartialCount = partialCount;

}

}

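- The StateCallback passed to the constructor is assigned to mDeviceCallback in CameraDeviceImpl, while the object handed to cameraService is the separate mCallbacks member: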

private final CameraDeviceCallbacks mCallbacks = new CameraDeviceCallbacks();

public CameraDeviceCallbacks getCallbacks() {

return mCallbacks;

}

public class CameraDeviceCallbacks extends ICameraDeviceCallbacks.Stub {

//......

}
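The relationship between the two callback objects can be illustrated without Android. The following is a minimal plain-Java sketch with assumed names: AppStateCallback stands in for CameraDevice.StateCallback, ServiceCallbacks for ICameraDeviceCallbacks, and DeviceImpl for CameraDeviceImpl. The forwarding shown for onDeviceError is an assumption about how such a bridge could work, not a copy of the framework code.

```java
import java.util.concurrent.Executor;

final class CallbackSplitSketch {
    /** App-facing callback, standing in for CameraDevice.StateCallback. */
    interface AppStateCallback {
        void onOpened(String cameraId);
        void onError(String cameraId, int error);
    }

    /** Service-facing callback, standing in for ICameraDeviceCallbacks. */
    interface ServiceCallbacks {
        void onDeviceError(int errorCode);
    }

    static final class DeviceImpl {
        private final String cameraId;
        private final AppStateCallback deviceCallback; // plays the role of mDeviceCallback
        private final Executor deviceExecutor;
        private final ServiceCallbacks callbacks;      // plays the role of mCallbacks

        DeviceImpl(String id, AppStateCallback callback, Executor executor) {
            if (id == null || callback == null || executor == null) {
                throw new IllegalArgumentException("Null argument given");
            }
            this.cameraId = id;
            this.deviceCallback = callback;
            this.deviceExecutor = executor;
            // The service only ever sees this object; it forwards to the app callback.
            this.callbacks = errorCode ->
                    deviceExecutor.execute(() -> deviceCallback.onError(this.cameraId, errorCode));
        }

        ServiceCallbacks getCallbacks() {
            return callbacks;
        }

        /** Called once the connection succeeded; posts onOpened on the executor. */
        void setRemoteDevice() {
            deviceExecutor.execute(() -> deviceCallback.onOpened(cameraId));
        }
    }

    public static void main(String[] args) {
        DeviceImpl device = new DeviceImpl("0", new AppStateCallback() {
            @Override public void onOpened(String id) { System.out.println("opened " + id); }
            @Override public void onError(String id, int error) {
                System.out.println("error " + error + " on " + id);
            }
        }, Runnable::run); // direct executor: runs each task on the calling thread
        device.setRemoteDevice();
        device.getCallbacks().onDeviceError(4);
    }
}
```

The design point is that the application never hands its own callback to the service: the device object owns both ends and decides on which executor the application callback runs.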

3.2.2 The CameraDeviceCallbacks callback

- The file android/hardware/camera2/ICameraDeviceCallbacks.h is generated automatically from ICameraDeviceCallbacks.aidl:

class ICameraDeviceCallbacksDefault : public ICameraDeviceCallbacks {

public:

::android::IBinder* onAsBinder() override;

::android::binder::Status onDeviceError(int32_t errorCode, const ::android::hardware::camera2::impl::CaptureResultExtras& resultExtras) override;

::android::binder::Status onDeviceIdle() override;

::android::binder::Status onCaptureStarted(const ::android::hardware::camera2::impl::CaptureResultExtras& resultExtras, int64_t timestamp) override;

::android::binder::Status onResultReceived(const ::android::hardware::camera2::impl::CameraMetadataNative& result, const ::android::hardware::camera2::impl::CaptureResultExtras& resultExtras, const ::std::vector<::android::hardware::camera2::impl::PhysicalCaptureResultInfo>& physicalCaptureResultInfos) override;

::android::binder::Status onPrepared(int32_t streamId) override;

::android::binder::Status onRepeatingRequestError(int64_t lastFrameNumber, int32_t repeatingRequestId) override;

::android::binder::Status onRequestQueueEmpty() override;

};

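- These callbacks are invoked from CameraService. They cover the various states that arise while the camera runs: success, failure, data received, and so on. They are not described in detail here; the later discussion of image capture explains them.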

- onDeviceError

- onDeviceIdle

- onCaptureStarted

- onResultReceived

- onPrepared

- onRepeatingRequestError

- onRequestQueueEmpty

3.2.3 The StateCallback callback

- StateCallback is one of the three parameters passed to openCamera. It is a callback that reports the connection state of the camera.

public static abstract class StateCallback {

public static final int ERROR_CAMERA_IN_USE = 1;

public static final int ERROR_MAX_CAMERAS_IN_USE = 2;

public static final int ERROR_CAMERA_DISABLED = 3;

public static final int ERROR_CAMERA_DEVICE = 4;

public static final int ERROR_CAMERA_SERVICE = 5;

/** @hide */

@Retention(RetentionPolicy.SOURCE)

@IntDef(prefix = {"ERROR_"}, value =

{ERROR_CAMERA_IN_USE,

ERROR_MAX_CAMERAS_IN_USE,

ERROR_CAMERA_DISABLED,

ERROR_CAMERA_DEVICE,

ERROR_CAMERA_SERVICE })

public @interface ErrorCode {};

public abstract void onOpened(@NonNull CameraDevice camera); // Must implement

public void onClosed(@NonNull CameraDevice camera) {

// Default empty implementation

}

public abstract void onDisconnected(@NonNull CameraDevice camera); // Must implement

public abstract void onError(@NonNull CameraDevice camera,

@ErrorCode int error); // Must implement

}

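- onOpened callback:

- Triggered once the camera device has been opened. It confirms that the camera is in the opened state, so createCaptureSession can now be called to start capturing pictures or video.

- onOpened is triggered in setRemoteDevice(...). That function runs after connectDevice(...) has connected successfully, which means the camera device is connected and the opened callback can fire.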

public void setRemoteDevice(ICameraDeviceUser remoteDevice) throws CameraAccessException {

synchronized(mInterfaceLock) {

// TODO: Move from decorator to direct binder-mediated exceptions

// If setRemoteFailure already called, do nothing

if (mInError) return;

mRemoteDevice = new ICameraDeviceUserWrapper(remoteDevice);

IBinder remoteDeviceBinder = remoteDevice.asBinder();

// For legacy camera device, remoteDevice is in the same process, and

// asBinder returns NULL.

if (remoteDeviceBinder != null) {

try {

remoteDeviceBinder.linkToDeath(this, /*flag*/ 0);

} catch (RemoteException e) {

CameraDeviceImpl.this.mDeviceExecutor.execute(mCallOnDisconnected);

throw new CameraAccessException(CameraAccessException.CAMERA_DISCONNECTED,

"The camera device has encountered a serious error");

}

}

mDeviceExecutor.execute(mCallOnOpened);

mDeviceExecutor.execute(mCallOnUnconfigured);

}

}

private final Runnable mCallOnOpened = new Runnable() {

@Override

public void run() {

StateCallbackKK sessionCallback = null;

synchronized(mInterfaceLock) {

if (mRemoteDevice == null) return; // Camera already closed

sessionCallback = mSessionStateCallback;

}

if (sessionCallback != null) {

sessionCallback.onOpened(CameraDeviceImpl.this);

}

mDeviceCallback.onOpened(CameraDeviceImpl.this);

}

};

- onClosed callback:

- Triggered when the camera device has been closed. Typically the app developer closes the camera, which releases the camera device currently held; this is normal behavior.

public void close() {

synchronized (mInterfaceLock) {

if (mClosing.getAndSet(true)) {

return;

}

if (mRemoteDevice != null) {

mRemoteDevice.disconnect();

mRemoteDevice.unlinkToDeath(this, /*flags*/0);

}

if (mRemoteDevice != null || mInError) {

mDeviceExecutor.execute(mCallOnClosed);

}

mRemoteDevice = null;

}

}

private final Runnable mCallOnClosed = new Runnable() {

private boolean mClosedOnce = false;

@Override

public void run() {

if (mClosedOnce) {

throw new AssertionError("Don't post #onClosed more than once");

}

StateCallbackKK sessionCallback = null;

synchronized(mInterfaceLock) {

sessionCallback = mSessionStateCallback;

}

if (sessionCallback != null) {

sessionCallback.onClosed(CameraDeviceImpl.this);

}

mDeviceCallback.onClosed(CameraDeviceImpl.this);

mClosedOnce = true;

}

};
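The close path relies on an atomic flag so that onClosed is posted at most once even if close() is called repeatedly. Below is a minimal plain-Java sketch of that guard, with assumed names (CloseOnceSketch, a plain Object standing in for mRemoteDevice); it leaves out the disconnect and unlinkToDeath calls and the mInError case.

```java
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicBoolean;

final class CloseOnceSketch {
    private final Object interfaceLock = new Object();
    private final AtomicBoolean closing = new AtomicBoolean(false);
    private final Executor executor;
    private final Runnable onClosed;
    private Object remoteDevice = new Object(); // stand-in for mRemoteDevice

    CloseOnceSketch(Executor executor, Runnable onClosed) {
        this.executor = executor;
        this.onClosed = onClosed;
    }

    void close() {
        synchronized (interfaceLock) {
            // getAndSet returns the previous value, so only the first caller gets past here.
            if (closing.getAndSet(true)) {
                return;
            }
            if (remoteDevice != null) {
                executor.execute(onClosed);
            }
            remoteDevice = null;
        }
    }

    public static void main(String[] args) {
        final int[] count = {0};
        CloseOnceSketch device = new CloseOnceSketch(Runnable::run, () -> count[0]++);
        device.close();
        device.close(); // ignored: the flag is already set
        System.out.println(count[0]); // prints 1
    }
}
```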

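- onDisconnected callback:

- The camera device is no longer available, or opening it failed, usually because permissions or a security policy prevent the camera device from being opened. When connecting to the camera device hits ERROR_CAMERA_DISCONNECTED, this function is called back to indicate that the camera device is in the disconnected state.

- onError callback:

- A serious problem occurred while using the camera device, for example an exception raised while executing CameraService-->connectDevice: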

} catch (ServiceSpecificException e) {

if (e.errorCode == ICameraService.ERROR_DEPRECATED_HAL) {

throw new AssertionError("Should've gone down the shim path");

} else if (e.errorCode == ICameraService.ERROR_CAMERA_IN_USE ||

e.errorCode == ICameraService.ERROR_MAX_CAMERAS_IN_USE ||

e.errorCode == ICameraService.ERROR_DISABLED ||

e.errorCode == ICameraService.ERROR_DISCONNECTED ||

e.errorCode == ICameraService.ERROR_INVALID_OPERATION) {

// Received one of the known connection errors

// The remote camera device cannot be connected to, so

// set the local camera to the startup error state

deviceImpl.setRemoteFailure(e);

if (e.errorCode == ICameraService.ERROR_DISABLED ||

e.errorCode == ICameraService.ERROR_DISCONNECTED ||

e.errorCode == ICameraService.ERROR_CAMERA_IN_USE) {

// Per API docs, these failures call onError and throw

throwAsPublicException(e);

}

} else {

// Unexpected failure - rethrow

throwAsPublicException(e);

}

} catch (RemoteException e) {

// Camera service died - act as if it's a CAMERA_DISCONNECTED case

ServiceSpecificException sse = new ServiceSpecificException(

ICameraService.ERROR_DISCONNECTED,

"Camera service is currently unavailable");

deviceImpl.setRemoteFailure(sse);

throwAsPublicException(sse);

}

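- deviceImpl.setRemoteFailure(e); is the call that triggers the onError callback.

3.2.4 Summary

- There is a great deal to know about Camera. These two sections use openCamera as the entry point and walk through the whole framework from the top layer to the bottom, moving from the simple to the more involved. The next section covers what the camera does in the StateCallback-->onOpened callback once the connection has succeeded.

3.3 Android Camera internals: the createCaptureSession module

- After openCamera succeeds, the onOpened(CameraDevice cameraDevice) method of CameraDevice.StateCallback is called back, and CameraDeviceImpl-->createCaptureSession is executed from there. The CameraDevice parameter is the camera device that has just been opened.

- With the camera device in hand, the next step is to create a capture session. Once the session is established, the camera preview can be set up on top of it; the camera input stream is then rendered on screen as the camera moves, and operations such as taking photos and recording video become possible.

- The figure above shows the createCaptureSession flow. The code involved is complex, and only the core steps are shown. The following subsections go through it by code structure and function.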
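The catch block for ServiceSpecificException sorts error codes into four outcomes. The sketch below restates that classification as a pure function in plain Java. The names ConnectErrorSketch, ServiceError and Outcome are assumptions for illustration, and the enum deliberately carries no numeric values because the actual ICameraService constants are not shown in this document.

```java
final class ConnectErrorSketch {
    /** Symbolic stand-ins for the ICameraService.ERROR_* codes used in the catch block. */
    enum ServiceError {
        DEPRECATED_HAL, CAMERA_IN_USE, MAX_CAMERAS_IN_USE, DISABLED,
        DISCONNECTED, INVALID_OPERATION, OTHER
    }

    enum Outcome {
        ASSERTION_ERROR,          // should have gone down the shim path
        REMOTE_FAILURE_ONLY,      // setRemoteFailure is called, nothing is thrown
        REMOTE_FAILURE_AND_THROW, // setRemoteFailure is called and the exception is rethrown
        THROW_ONLY                // unexpected failure: just rethrown
    }

    static Outcome classify(ServiceError error) {
        switch (error) {
            case DEPRECATED_HAL:
                return Outcome.ASSERTION_ERROR;
            case DISABLED:
            case DISCONNECTED:
            case CAMERA_IN_USE:
                return Outcome.REMOTE_FAILURE_AND_THROW;
            case MAX_CAMERAS_IN_USE:
            case INVALID_OPERATION:
                return Outcome.REMOTE_FAILURE_ONLY;
            default:
                return Outcome.THROW_ONLY;
        }
    }

    public static void main(String[] args) {
        for (ServiceError error : ServiceError.values()) {
            System.out.println(error + " -> " + classify(error));
        }
    }
}
```

Reading the table this way makes one detail of the original code easier to see: ERROR_MAX_CAMERAS_IN_USE and ERROR_INVALID_OPERATION reach the application only through the callback, while the other known connection errors are also thrown to the caller of openCamera.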

3.3.1 CameraDeviceImpl->createCaptureSession

public void createCaptureSession(List<Surface> outputs,

CameraCaptureSession.StateCallback callback, Handler handler)

throws CameraAccessException {

List<OutputConfiguration> outConfigurations = new ArrayList<>(outputs.size());

for (Surface surface : outputs) {

outConfigurations.add(new OutputConfiguration(surface));

}

createCaptureSessionInternal(null, outConfigurations, callback,

checkAndWrapHandler(handler), /*operatingMode*/ICameraDeviceUser.NORMAL_MODE,

/*sessionParams*/ null);

}

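- Each Surface is converted into an OutputConfiguration. OutputConfiguration is a class describing camera output; it holds the Surface together with settings specific to the capture session. Its definition shows that it implements Parcelable, which means it is meant to be passed across processes.

- The Surface list passed to CameraDeviceImpl->createCaptureSession:

- Each Surface represents one output stream, and a list of Surfaces represents several. As many output streams are needed as there are display or capture targets.

- For still capture there are two output streams: one for preview and one for the photo.

- For video recording there are two output streams: one for preview and one for the recording.

- The code below runs when taking a photo. The first surface is for preview. The second, because this is still capture, comes from an ImageReader used to receive the captured image; ImageReader calls nativeGetSurface in its constructor to obtain a Surface, which serves as the capture Surface.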

mCameraDevice.createCaptureSession(Arrays.asList(surface, mImageReader.getSurface()),

new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {

}

@Override

public void onConfigureFailed(

@NonNull CameraCaptureSession cameraCaptureSession) {

}

}, null

);

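- Video recording works the same way. It uses MediaRecorder to obtain the video data, and MediaRecorder likewise supplies a Surface (available through MediaRecorder.getSurface()).

- ImageReader->OnImageAvailableListener callback: reads the image data from the Surface and converts it into data the app can work with, such as the image width and height and the timestamp. How this image data is collected is explained later; it is only mentioned in passing here.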

private class SurfaceImage extends android.media.Image {

public SurfaceImage(int format) {

mFormat = format;

}

@Override

public void close() {

ImageReader.this.releaseImage(this);

}

public ImageReader getReader() {

return ImageReader.this;

}

private class SurfacePlane extends android.media.Image.Plane {

private SurfacePlane(int rowStride, int pixelStride, ByteBuffer buffer) {

//......

}

final private int mPixelStride;

final private int mRowStride;

private ByteBuffer mBuffer;

}

private long mNativeBuffer;

private long mTimestamp;

private int mTransform;

private int mScalingMode;

private SurfacePlane[] mPlanes;

private int mFormat = ImageFormat.UNKNOWN;

// If this image is detached from the ImageReader.

private AtomicBoolean mIsDetached = new AtomicBoolean(false);

private synchronized native SurfacePlane[] nativeCreatePlanes(int numPlanes,

int readerFormat);

private synchronized native int nativeGetWidth();

private synchronized native int nativeGetHeight();

private synchronized native int nativeGetFormat(int readerFormat);

private synchronized native HardwareBuffer nativeGetHardwareBuffer();

}

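- Before taking a photo, the OnImageAvailableListener callback of the ImageReader is also set, by calling setOnImageAvailableListener(OnImageAvailableListener listener, Handler handler) to install the current OnImageAvailableListener object. The interface has a single method, onImageAvailable, signalling that the captured image is now available. The captured image is then processed inside the onImageAvailable callback.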

public interface OnImageAvailableListener {

void onImageAvailable(ImageReader reader);

}

3.3.2 CameraDeviceImpl->createCaptureSessionInternal

private void createCaptureSessionInternal(InputConfiguration inputConfig,

List<OutputConfiguration> outputConfigurations,

CameraCaptureSession.StateCallback callback, Executor executor,

int operatingMode, CaptureRequest sessionParams)

- Of the parameters passed in, inputConfig is null, so only outputConfigurations needs attention.

createCaptureSessionInternal contains a lot of code, but the important part is configuring the Surfaces:

configureSuccess = configureStreamsChecked(inputConfig, outputConfigurations,

operatingMode, sessionParams);

if (configureSuccess == true && inputConfig != null) {

input = mRemoteDevice.getInputSurface();

}

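- If inputConfig is non-null and configuring the surfaces succeeds, an input surface is obtained; this is the input stream set up locally by the user. The input object is later passed in when the CameraCaptureSessionImpl object is constructed; see 3.3.4 CameraCaptureSessionImpl constructor.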

3.3.3 CameraDeviceImpl->configureStreamsChecked

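- The figure below lists the main steps of the function that configures input and output streams. Since inputConfig is null here, the core of the work is the part in the pink box: creating the output streams.

The calls mRemoteDevice.beginConfigure(); and mRemoteDevice.endConfigure(operatingMode, null); bracket the configuration: they are IPC calls telling the service side that input and output streams are being processed. After mRemoteDevice.endConfigure(operatingMode, null); completes, success = true is returned; if the process is interrupted partway, success is not true.

3.3.3.1 Checking the input stream

- checkInputConfiguration(inputConfig);

inputConfig is null here, so this part is not executed.

3.3.3.2 Checking the output streams

- Check the cached list of output streams. If an output stream is already in the list, it does not need to be recreated; otherwise it must be created.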

// Streams to create

HashSet<OutputConfiguration> addSet = new HashSet<OutputConfiguration>(outputs);

// Streams to delete

List<Integer> deleteList = new ArrayList<Integer>();

for (int i = 0; i < mConfiguredOutputs.size(); ++i) {

int streamId = mConfiguredOutputs.keyAt(i);

OutputConfiguration outConfig = mConfiguredOutputs.valueAt(i);

if (!outputs.contains(outConfig) || outConfig.isDeferredConfiguration()) {

deleteList.add(streamId);

} else {

addSet.remove(outConfig); // Don't create a stream previously created

}

}

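private final SparseArray<OutputConfiguration> mConfiguredOutputs = new SparseArray<>();

- mConfiguredOutputs is the in-memory cache of output streams. Each time an output stream is created, its streamId and configuration are stored in this SparseArray.

- After this code has run:

addSet is the set of output streams about to be created, and deleteList is the list of streamIds about to be deleted. Together they keep the output streams in mConfiguredOutputs up to date.

- The stale output streams are deleted here: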

// Delete all streams first (to free up HW resources)

for (Integer streamId : deleteList) {

mRemoteDevice.deleteStream(streamId);

mConfiguredOutputs.delete(streamId);

}

- The new output streams are created here:

// Add all new streams

for (OutputConfiguration outConfig : outputs) {

if (addSet.contains(outConfig)) {

int streamId = mRemoteDevice.createStream(outConfig);

mConfiguredOutputs.put(streamId, outConfig);

}

}
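The add/delete bookkeeping above is a plain set difference between what is already configured and what is now requested. A minimal sketch in plain Java follows, under stated assumptions: StreamDiffSketch and Plan are hypothetical names, an output configuration is modelled as a String, a Map replaces the SparseArray, and the isDeferredConfiguration() case is left out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class StreamDiffSketch {
    /** Result of comparing the configured streams with the requested outputs. */
    static final class Plan {
        final List<Integer> deleteIds = new ArrayList<>();  // plays the role of deleteList
        final Set<String> toCreate = new LinkedHashSet<>(); // plays the role of addSet
    }

    static Plan plan(Map<Integer, String> configured, List<String> outputs) {
        Plan plan = new Plan();
        plan.toCreate.addAll(outputs);
        for (Map.Entry<Integer, String> entry : configured.entrySet()) {
            if (!outputs.contains(entry.getValue())) {
                plan.deleteIds.add(entry.getKey());     // no longer requested: delete the stream
            } else {
                plan.toCreate.remove(entry.getValue()); // already exists: do not create it again
            }
        }
        return plan;
    }

    public static void main(String[] args) {
        Map<Integer, String> configured = new LinkedHashMap<>();
        configured.put(0, "preview");
        configured.put(1, "jpeg");
        Plan plan = plan(configured, Arrays.asList("preview", "video"));
        System.out.println("delete ids: " + plan.deleteIds); // stream 1 (jpeg) is stale
        System.out.println("create: " + plan.toCreate);      // only video is new
    }
}
```

Deleting before creating, as the framework code does, frees hardware resources held by stale streams before new ones are requested.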

3.3.3.3 mRemoteDevice.createStream(outConfig)

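- This IPC call goes directly to the following method declared in CameraDeviceClient.h:

virtual binder::Status createStream( const hardware::camera2::params::OutputConfiguration &outputConfiguration, /*out*/ int32_t* newStreamId = NULL) override;

- The first parameter, outputConfiguration, describes the output surface. The second is an out parameter carrying the value returned once the IPC call completes. The core of the method is the following code.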

const std::vector<sp<IGraphicBufferProducer>>& bufferProducers =

outputConfiguration.getGraphicBufferProducers();

size_t numBufferProducers = bufferProducers.size();

//......

for (auto& bufferProducer : bufferProducers) {

//......

sp<Surface> surface;

res = createSurfaceFromGbp(streamInfo, isStreamInfoValid, surface, bufferProducer,

physicalCameraId);

//......

surfaces.push_back(surface);

}

//......

int streamId = camera3::CAMERA3_STREAM_ID_INVALID;

std::vector<int> surfaceIds;

err = mDevice->createStream(surfaces, deferredConsumer, streamInfo.width,

streamInfo.height, streamInfo.format, streamInfo.dataSpace,

static_cast<camera3_stream_rotation_t>(outputConfiguration.getRotation()),

&streamId, physicalCameraId, &surfaceIds, outputConfiguration.getSurfaceSetID(),

isShared);

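- The for loop uses the GraphicBufferProducers returned by outputConfiguration.getGraphicBufferProducers() to create the corresponding surfaces. Each surface is validated, and valid ones are added to the surfaces collection; mDevice->createStream is then called to continue creating the stream.

- Some background on the Android display system is needed here. Buffers that are finally drawn to the screen are allocated in display memory, while everything else is allocated in ordinary memory. Two modules manage buffers: framebuffer and gralloc. framebuffer displays rendered buffers on screen, and gralloc allocates buffers. The buffer rotation of the camera preview is no exception: the buffers it requests are ultimately allocated by gralloc. At the native layer each is described by a private_handle_t pointer, wrapped in several layers along the way. These buffers are all shared.

- However, at any moment in its lifetime a buffer belongs to exactly one owner, and the owner keeps changing. This is the classic producer-consumer cycle in Android: the producer is the BufferProducer and the consumer is the BufferConsumer. A buffer is locked at each transition in its lifetime, so once its owner changes no other object can modify it, which keeps buffer access synchronized.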
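The single-owner rule described above can be pictured as a small state machine per buffer slot. The following plain-Java sketch is an illustration only: BufferSlotSketch is a hypothetical class, the four state names follow the usual BufferQueue vocabulary (FREE, DEQUEUED, QUEUED, ACQUIRED), and real buffers additionally carry fences and graphics memory handles that are ignored here.

```java
final class BufferSlotSketch {
    enum State { FREE, DEQUEUED, QUEUED, ACQUIRED }

    private State state = State.FREE;

    State state() {
        return state;
    }

    /** Producer takes a free buffer to fill it. */
    void dequeue() { move(State.FREE, State.DEQUEUED); }

    /** Producer hands the filled buffer over to the queue. */
    void queue() { move(State.DEQUEUED, State.QUEUED); }

    /** Consumer takes the queued buffer to read it. */
    void acquire() { move(State.QUEUED, State.ACQUIRED); }

    /** Consumer is done; the buffer becomes free for the producer again. */
    void release() { move(State.ACQUIRED, State.FREE); }

    private void move(State expected, State next) {
        // Any out-of-order call means someone touched a buffer it does not own.
        if (state != expected) {
            throw new IllegalStateException("expected " + expected + " but was " + state);
        }
        state = next;
    }

    public static void main(String[] args) {
        BufferSlotSketch slot = new BufferSlotSketch();
        slot.dequeue();
        slot.queue();
        slot.acquire();
        slot.release();
        System.out.println(slot.state()); // prints FREE
    }
}
```

Because every transition checks the current state, a consumer cannot read a buffer the producer is still filling, which is the synchronization property the paragraph above describes.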

3.3.3.4 mDevice->createStream

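- First the surface passed in from the previous step, which is the future consumer, is added to a queue, and the overloaded createStream method is called to continue. The width parameter is the width of the surface being configured, height is its height, and format is its format, obtained by querying the ANativeWindow of the surface:

anw->query(anw, NATIVE_WINDOW_FORMAT, &format)

- dataSpace has type android_dataspace. It describes how the contents of the buffer are to be interpreted (for example the color space), rather than the size of the buffer.

- Next a local Camera3OutputStream variable is defined; this is the stream being configured. The if/else that follows creates a different stream object depending on intent. For a still-capture stream, for example, the format is HAL_PIXEL_FORMAT_BLOB, so the first if branch is taken and a Camera3OutputStream is created. After creation, *id = mNextStreamId++ assigns the id through the pointer; this is the id of the current stream, so ids increase monotonically.

- Normally mStatus is set to STATUS_UNCONFIGURED during initializeCommonLocked() through a call to internalUpdateStatusLocked, so the switch/case here enters case STATUS_UNCONFIGURED and breaks out immediately. The local variable wasActive is therefore false, and the method returns OK.

- That completes the createStream logic. Remember that createStream runs inside a for loop in the framework: each call configures only one surface. With several surfaces it runs several times, and the Camera3OutputStream log lines are printed once per stream, which helps when tracking down problems.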

3.3.3.5 mRemoteDevice.endConfigure

binder::Status CameraDeviceClient::endConfigure(int operatingMode,

const hardware::camera2::impl::CameraMetadataNative& sessionParams) {

//......

status_t err = mDevice->configureStreams(sessionParams, operatingMode);

//......

}

- The second argument passed here is usually null. The call chain is:

Camera3Device::configureStreams

--->Camera3Device::filterParamsAndConfigureLocked

--->Camera3Device::configureStreamsLocked

Camera3Device::configureStreamsLocked calls the stream configuration method of the HAL layer directly:

res = mInterface->configureStreams(sessionBuffer, &config, bufferSizes);

- Finishing configuration of the input stream:

if (mInputStream != NULL && mInputStream->isConfiguring()) {

res = mInputStream->finishConfiguration();

if (res != OK) {

CLOGE("Can't finish configuring input stream %d: %s (%d)",

mInputStream->getId(), strerror(-res), res);

cancelStreamsConfigurationLocked();

return BAD_VALUE;

}

}

- Finishing configuration of the output streams:

for (size_t i = 0; i < mOutputStreams.size(); i++) {

sp<Camera3OutputStreamInterface> outputStream =

mOutputStreams.editValueAt(i);

if (outputStream->isConfiguring() && !outputStream->isConsumerConfigurationDeferred()) {

res = outputStream->finishConfiguration();

if (res != OK) {

CLOGE("Can't finish configuring output stream %d: %s (%d)",

outputStream->getId(), strerror(-res), res);

cancelStreamsConfigurationLocked();

return BAD_VALUE;

}

}

}

- The call chain for finishing an output stream is:

outputStream->finishConfiguration()

--->Camera3Stream::finishConfiguration

--->Camera3OutputStream::configureQueueLocked

--->Camera3OutputStream::configureConsumerQueueLocked

- The core steps of Camera3OutputStream::configureConsumerQueueLocked are worth a look:

// Configure consumer-side ANativeWindow interface. The listener may be used

// to notify buffer manager (if it is used) of the returned buffers.

res = mConsumer->connect(NATIVE_WINDOW_API_CAMERA,

/*listener*/mBufferReleasedListener,

/*reportBufferRemoval*/true);

if (res != OK) {

ALOGE("%s: Unable to connect to native window for stream %d",

__FUNCTION__, mId);

return res;

}

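- mConsumer is the surface created during configuration. We connect to mConsumer and then allocate the space required. The code below then queries the underlying ANativeWindow, the native window abstraction used with OpenGL ES/EGL. On Android devices it generally corresponds to one of two things: Surface and SurfaceFlinger.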

int maxConsumerBuffers;

res = static_cast<ANativeWindow*>(mConsumer.get())->query(

mConsumer.get(),

NATIVE_WINDOW_MIN_UNDEQUEUED_BUFFERS, &maxConsumerBuffers);

if (res != OK) {

ALOGE("%s: Unable to query consumer undequeued"

" buffer count for stream %d", __FUNCTION__, mId);

return res;

}

- This completes the analysis of CameraDeviceImpl->configureStreamsChecked. Next, the CameraCaptureSession is created according to the result of stream configuration.

3.3.4 CameraCaptureSessionImpl constructor

try {

// configure streams and then block until IDLE

configureSuccess = configureStreamsChecked(inputConfig, outputConfigurations,

operatingMode, sessionParams);

if (configureSuccess == true && inputConfig != null) {

input = mRemoteDevice.getInputSurface();

}

} catch (CameraAccessException e) {

configureSuccess = false;

pendingException = e;

input = null;

if (DEBUG) {

Log.v(TAG, "createCaptureSession - failed with exception ", e);

}

}

- After stream configuration finishes, configureSuccess indicates whether the configuration succeeded. It is then used when creating the CameraCaptureSessionImpl:

CameraCaptureSessionCore newSession = null;

if (isConstrainedHighSpeed) {

ArrayList<Surface> surfaces = new ArrayList<>(outputConfigurations.size());

for (OutputConfiguration outConfig : outputConfigurations) {

surfaces.add(outConfig.getSurface());

}

StreamConfigurationMap config =

getCharacteristics().get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

SurfaceUtils.checkConstrainedHighSpeedSurfaces(surfaces, /*fpsRange*/null, config);

newSession = new CameraConstrainedHighSpeedCaptureSessionImpl(mNextSessionId++,

callback, executor, this, mDeviceExecutor, configureSuccess,

mCharacteristics);

} else {

newSession = new CameraCaptureSessionImpl(mNextSessionId++, input,

callback, executor, this, mDeviceExecutor, configureSuccess);

}

// TODO: wait until current session closes, then create the new session

mCurrentSession = newSession;

if (pendingException != null) {

throw pendingException;

}

mSessionStateCallback = mCurrentSession.getDeviceStateCallback();

- Normally the CameraCaptureSessionImpl constructor is the one executed.

CameraCaptureSessionImpl(int id, Surface input,

CameraCaptureSession.StateCallback callback, Executor stateExecutor,

android.hardware.camera2.impl.CameraDeviceImpl deviceImpl,

Executor deviceStateExecutor, boolean configureSuccess) {

if (callback == null) {

throw new IllegalArgumentException("callback must not be null");

}

mId = id;

mIdString = String.format("Session %d: ", mId);

mInput = input;

mStateExecutor = checkNotNull(stateExecutor, "stateExecutor must not be null");

mStateCallback = createUserStateCallbackProxy(mStateExecutor, callback);

mDeviceExecutor = checkNotNull(deviceStateExecutor,

"deviceStateExecutor must not be null");

mDeviceImpl = checkNotNull(deviceImpl, "deviceImpl must not be null");

mSequenceDrainer = new TaskDrainer<>(mDeviceExecutor, new SequenceDrainListener(),

/*name*/"seq");

mIdleDrainer = new TaskSingleDrainer(mDeviceExecutor, new IdleDrainListener(),

/*name*/"idle");

mAbortDrainer = new TaskSingleDrainer(mDeviceExecutor, new AbortDrainListener(),

/*name*/"abort");

if (configureSuccess) {

mStateCallback.onConfigured(this);

if (DEBUG) Log.v(TAG, mIdString + "Created session successfully");

mConfigureSuccess = true;

} else {

mStateCallback.onConfigureFailed(this);

mClosed = true; // do not fire any other callbacks, do not allow any other work

Log.e(TAG, mIdString + "Failed to create capture session; configuration failed");

mConfigureSuccess = false;

}

}

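- As the end of the constructor shows, if configureSuccess is true, mStateCallback.onConfigured(this) is executed; if configuration failed, the mStateCallback.onConfigureFailed(this) callback is executed.

3.3.5 Summary

- That completes the analysis of createCaptureSession. It is the most important precondition for camera preview: in general, if the session is created successfully the preview works, and if session creation fails the preview is black. When a black camera screen is reported, this is the first place to suspect. Once the session has been created, the framework calls back to the application through public abstract void onConfigured(@NonNull CameraCaptureSession session) of CameraCaptureSession.StateCallback to report that the session was created. The CameraCaptureSession parameter of that callback can then be used to call setRepeatingRequest and issue the preview request; once that runs, the camera preview starts.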

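3.4 Android Camera internals: the setRepeatingRequest and capture modules

- After createCaptureSession the camera session exists, and preview can begin. Once the preview callback onCaptureCompleted fires, a photo can be taken (reaching onCaptureCompleted means the complete frame data of the capture has been returned and can be used). Preview and still capture share much of their flow, and still capture is just one point in the preview process, so both are covered in this section.

3.4.1 Preview

- Preview is started by CameraCaptureSession-->setRepeatingRequest, and this section describes how the camera starts preview. CameraCaptureSession-->setRepeatingRequest is called from CameraCaptureSession.StateCallback.onConfigured(@NonNull CameraCaptureSession session), which runs after the output streams passed to createCaptureSession(List<Surface> outputs, CameraCaptureSession.StateCallback callback, Handler handler) have been configured successfully.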

mCameraDevice.createCaptureSession(Arrays.asList(surface, mImageReader.getSurface()),

new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {

// The camera is already closed

if (null == mCameraDevice) {

return;

}

// When the session is ready, we start displaying the preview.

mCaptureSession = cameraCaptureSession;

try {

// Auto focus should be continuous for camera preview.

mPreviewRequestBuilder.set(CaptureRequest.CONTROL_AF_MODE,

CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// Flash is automatically enabled when necessary.

setAutoFlash(mPreviewRequestBuilder);

// Finally, we start displaying the camera preview.

mPreviewRequest = mPreviewRequestBuilder.build();

mCaptureSession.setRepeatingRequest(mPreviewRequest,

mCaptureCallback, mBackgroundHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(

@NonNull CameraCaptureSession cameraCaptureSession) {

showToast("Failed");

}

}, null

);

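- In the end, the call

mCaptureSession.setRepeatingRequest(mPreviewRequest, mCaptureCallback, mBackgroundHandler);

starts the camera preview. Settings such as the autofocus mode can be applied in the onConfigured callback, as the sample does. onConfigured means that stream configuration has finished and the session is ready to accept requests, so preview can be started. onConfigureFailed means that stream configuration failed; in that case the preview stays black.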

public int setRepeatingRequest(CaptureRequest request, CaptureCallback callback,

Handler handler) throws CameraAccessException {

checkRepeatingRequest(request);

synchronized (mDeviceImpl.mInterfaceLock) {

checkNotClosed();

handler = checkHandler(handler, callback);

return addPendingSequence(mDeviceImpl.setRepeatingRequest(request,

createCaptureCallbackProxy(handler, callback), mDeviceExecutor));

}

}

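- The first parameter, CaptureRequest, describes the properties of the capture request, for example whether it targets one camera or several and whether it reuses an earlier request.

- The second parameter, CaptureCallback, is the capture callback; this is the callback that application developers work with directly.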

public interface CaptureCallback {

public static final int NO_FRAMES_CAPTURED = -1;

public void onCaptureStarted(CameraDevice camera,

CaptureRequest request, long timestamp, long frameNumber);

public void onCapturePartial(CameraDevice camera,

CaptureRequest request, CaptureResult result);

public void onCaptureProgressed(CameraDevice camera,

CaptureRequest request, CaptureResult partialResult);

public void onCaptureCompleted(CameraDevice camera,

CaptureRequest request, TotalCaptureResult result);

public void onCaptureFailed(CameraDevice camera,

CaptureRequest request, CaptureFailure failure);

public void onCaptureSequenceCompleted(CameraDevice camera,

int sequenceId, long frameNumber);

public void onCaptureSequenceAborted(CameraDevice camera,

int sequenceId);

public void onCaptureBufferLost(CameraDevice camera,

CaptureRequest request, Surface target, long frameNumber);

}

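- Developers implement these callbacks themselves. All of them are delivered to the upper layer through CameraDeviceCallbacks; how that works will be covered later in the CameraDeviceCallbacks module.

- Next, let us trace the call path from the point of view of how the camera is invoked:

mCaptureSession.setRepeatingRequest--->CameraDeviceImpl.setRepeatingRequest--->CameraDeviceImpl.submitCaptureRequest

CameraDeviceImpl.setRepeatingRequest passes true as the last argument (boolean repeating) of submitCaptureRequest. This is worth stressing because CameraDeviceImpl.capture also ends up in submitCaptureRequest, but passes false. When repeating is true, the capture is a continuous process in which camera frames keep arriving; when it is false, the capture is a single moment, that is, a still photo.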

public int setRepeatingRequest(CaptureRequest request, CaptureCallback callback,

Executor executor) throws CameraAccessException {

List<CaptureRequest> requestList = new ArrayList<CaptureRequest>();

requestList.add(request);

return submitCaptureRequest(requestList, callback, executor, /*streaming*/true);

}

private int submitCaptureRequest(List<CaptureRequest> requestList, CaptureCallback callback,

Executor executor, boolean repeating) {

//......

}

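- CameraDeviceImpl.submitCaptureRequest does three main things:

- Validate each request in the CaptureRequest list; the main check is that the Surfaces bound to the request exist.

- Send the request to the lower layer.

- Bind the request info returned by the lower layer to the CaptureCallback that was passed in, so that later callbacks reach the right listener.

- Of these three steps, the second is the core one.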
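The three steps, together with the repeating flag that distinguishes preview from still capture, can be sketched as a stand-alone model. This is plain Java with no Android dependency: RequestModel, DeviceModel and every method on them are hypothetical, the "remote device" is reduced to handing out a request id, and surfaces are represented by plain strings.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical request: only the names of the output surfaces it targets. */
class RequestModel {
    final Set<String> targets;

    RequestModel(String... targets) {
        this.targets = new HashSet<>(Arrays.asList(targets));
    }
}

/** Hypothetical model of the three steps in submitCaptureRequest. */
class DeviceModel {
    private final Set<String> configuredOutputs;
    private final Map<Integer, Runnable> callbackMap = new HashMap<>();
    private int nextRequestId = 0;
    private int repeatingRequestId = -1;

    DeviceModel(Set<String> configuredOutputs) {
        this.configuredOutputs = new HashSet<>(configuredOutputs);
    }

    /** One-shot submission, as used for a still capture. */
    int capture(List<RequestModel> requests, Runnable callback) {
        return submit(requests, callback, /*repeating*/ false);
    }

    /** Repeating submission, as used for preview. */
    int setRepeatingRequest(List<RequestModel> requests, Runnable callback) {
        return submit(requests, callback, /*repeating*/ true);
    }

    private int submit(List<RequestModel> requests, Runnable callback,
                       boolean repeating) {
        // Step 1: every target must be one of the configured outputs.
        for (RequestModel request : requests) {
            if (request.targets.isEmpty()) {
                throw new IllegalArgumentException("request has no targets");
            }
            for (String target : request.targets) {
                if (!configuredOutputs.contains(target)) {
                    throw new IllegalArgumentException(
                            "unconfigured surface: " + target);
                }
            }
        }
        // Step 2: "send" the request; the model just allocates an id.
        int requestId = nextRequestId++;
        if (repeating) {
            repeatingRequestId = requestId;
        }
        // Step 3: remember which callback belongs to this request id.
        if (callback != null) {
            callbackMap.put(requestId, callback);
        }
        return requestId;
    }

    boolean hasCallbackFor(int requestId) {
        return callbackMap.containsKey(requestId);
    }

    int getRepeatingRequestId() {
        return repeatingRequestId;
    }
}
```

Both public entry points share one private path and differ only in the boolean they pass, which is the same design the framework excerpt above shows for setRepeatingRequest.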

3.4.1.1 Sending the captureRequest to the lower layer

SubmitInfo requestInfo;

CaptureRequest[] requestArray = requestList.toArray(new CaptureRequest[requestList.size()]);

// Convert Surface to streamIdx and surfaceIdx

for (CaptureRequest request : requestArray) {

request.convertSurfaceToStreamId(mConfiguredOutputs);

}

requestInfo = mRemoteDevice.submitRequestList(requestArray, repeating);

if (DEBUG) {

Log.v(TAG, "last frame number " + requestInfo.getLastFrameNumber());

}

for (CaptureRequest request : requestArray) {

request.recoverStreamIdToSurface();

}
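The convert, submit, recover pattern in the snippet above can be pictured with a small model. The sketch is plain Java with no Android dependency; IndexedRequest and wireForm are hypothetical, surfaces are represented by plain strings, and a single index list stands in for the stream and surface indices that the real request records.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical request that can switch between a name form and an index form. */
class IndexedRequest {
    private final List<String> surfaces;     // stand-ins for Surface objects
    private List<Integer> streamIds = null;  // non-null only while converted

    IndexedRequest(List<String> surfaces) {
        this.surfaces = new ArrayList<>(surfaces);
    }

    /** Replaces each surface by its position in the configured outputs. */
    void convertSurfaceToStreamId(List<String> configuredOutputs) {
        List<Integer> ids = new ArrayList<>();
        for (String surface : surfaces) {
            int index = configuredOutputs.indexOf(surface);
            if (index < 0) {
                throw new IllegalStateException(
                        "surface not configured: " + surface);
            }
            ids.add(index);
        }
        streamIds = ids;
    }

    /** Drops the temporary indices so the request is back in surface form. */
    void recoverStreamIdToSurface() {
        streamIds = null;
    }

    /** What would cross the process boundary in the current state. */
    String wireForm() {
        return streamIds != null ? "ids" + streamIds : "surfaces" + surfaces;
    }
}

class IndexedRequestDemo {
    public static void main(String[] args) {
        List<String> configured = new ArrayList<>();
        configured.add("preview");
        configured.add("jpeg");

        List<String> targets = new ArrayList<>();
        targets.add("jpeg");
        IndexedRequest request = new IndexedRequest(targets);

        request.convertSurfaceToStreamId(configured);
        System.out.println(request.wireForm());  // index form while "in flight"
        request.recoverStreamIdToSurface();
        System.out.println(request.wireForm());  // surface form again
    }
}
```

The conversion is temporary by design: the application-side request object keeps its Surface targets, and only the copy that crosses the process boundary is expressed as indices.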

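- request.convertSurfaceToStreamId(mConfiguredOutputs); maps the Surfaces held by each request to the stream and surface indices of the already configured outputs (see the comment in the code above). The request that is sent over Binder to the camera service then carries these indices, so the service does not need to resolve the Surfaces again.

- requestInfo = mRemoteDevice.submitRequestList(requestArray, repeating); calls straight into the camera service. This call deserves a closer look.

- request.recoverStreamIdToSurface(); runs once the call has returned and discards the temporary index data, restoring the requests to their Surface form.

- CameraDeviceClient::submitRequest--->CameraDeviceClient::submitRequestList:

- This function is long, and much of its early part checks whether previously cached data can be reused. The core is the branch shown below. For preview, streaming is true and the first branch runs, calling err = mDevice->setStreamingRequestList(metadataRequestList, surfaceMapList, &(submitInfo->mLastFrameNumber)); for a still capture, the else branch runs.

- The submitInfo argument is the out parameter that is returned to the upper layer. During preview the frame data is updated continuously, so the latest frame number is refreshed on every call.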

if (streaming) {

err = mDevice->setStreamingRequestList(metadataRequestList, surfaceMapList,

&(submitInfo->mLastFrameNumber));

if (err != OK) {

String8 msg = String8::format(

"Camera %s: Got error %s (%d) after trying to set streaming request",

mCameraIdStr.string(), strerror(-err), err);

ALOGE("%s: %s", __FUNCTION__, msg.string());

res = STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION,

msg.string());

} else {

Mutex::Autolock idLock(mStreamingRequestIdLock);

mStreamingRequestId = submitInfo->mRequestId;

}

} else {

err = mDevice->captureList(metadataRequestList, surfaceMapList,

&(submitInfo->mLastFrameNumber));

if (err != OK) {

String8 msg = String8::format(

"Camera %s: Got error %s (%d) after trying to submit capture request",

mCameraIdStr.string(), strerror(-err), err);

ALOGE("%s: %s", __FUNCTION__, msg.string());

res = STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION,

msg.string());

}

ALOGV("%s: requestId = %d ", __FUNCTION__, submitInfo->mRequestId);

}

- Camera3Device::setStreamingRequestList--->Camera3Device::submitRequestsHelper:

status_t Camera3Device::submitRequestsHelper(

const List<const PhysicalCameraSettingsList> &requests,

const std::list<const SurfaceMap> &surfaceMaps,

bool repeating,

/*out*/

int64_t *lastFrameNumber) {

ATRACE_CALL();

Mutex::Autolock il(mInterfaceLock);

Mutex::Autolock l(mLock);

status_t res = checkStatusOkToCaptureLocked();

if (res != OK) {

// error logged by previous call

return res;

}

RequestList requestList;

res = convertMetadataListToRequestListLocked(requests, surfaceMaps,

repeating, /*out*/&requestList);

if (res != OK) {

// error logged by previous call

return res;

}

if (repeating) {

res = mRequestThread->setRepeatingRequests(requestList, lastFrameNumber);

} else {

res = mRequestThread->queueRequestList(requestList, lastFrameNumber);

}

//......

return res;

}

- For preview, mRequestThread->setRepeatingRequests(requestList, lastFrameNumber); is executed; for a still capture, mRequestThread->queueRequestList(requestList, lastFrameNumber); is executed.

- mRequestThread->setRepeatingRequests:

status_t Camera3Device::RequestThread::setRepeatingRequests(

const RequestList &requests,

/*out*/

int64_t *lastFrameNumber) {

ATRACE_CALL();

Mutex::Autolock l(mRequestLock);

if (lastFrameNumber != NULL) {

*lastFrameNumber = mRepeatingLastFrameNumber;

}

mRepeatingRequests.clear();

mRepeatingRequests.insert(mRepeatingRequests.begin(),

requests.begin(), requests.end());

unpauseForNewRequests();

mRepeatingLastFrameNumber = hardware::camera2::ICameraDeviceUser::NO_IN_FLIGHT_REPEATING_FRAMES;

return OK;

}

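- This function replaces the previous repeating request list with the newly submitted CaptureRequests and wakes the request thread through unpauseForNewRequests(). The HAL is then told that new requests are available, starts processing them, and keeps delivering results to the upper layer. All of this runs on the RequestThread defined in Camera3Device, which keeps capturing the stream for as long as preview is active, so the camera stays in the preview state.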
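How a repeating list and a one-shot queue can coexist on one request thread is easier to see in a single-threaded model. The sketch below is plain Java; RequestQueueModel is hypothetical, requests are plain strings, and the dequeue policy (serve queued one-shot requests first, refill from the repeating list when the queue is empty) is an assumption of this sketch, since the excerpt above does not show how the thread picks its next request.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

/** Hypothetical single-threaded model of the request thread's two lists. */
class RequestQueueModel {
    private final Deque<String> requestQueue = new ArrayDeque<>();
    private final List<String> repeatingRequests = new ArrayList<>();

    /** Preview path: replaces the repeating list, as the C++ code above does. */
    void setRepeatingRequests(List<String> requests) {
        repeatingRequests.clear();
        repeatingRequests.addAll(requests);
    }

    /** Still-capture path: appends one-shot requests to the queue. */
    void queueRequestList(List<String> requests) {
        requestQueue.addAll(requests);
    }

    /**
     * Picks the next request to hand to the HAL. Assumed policy: queued
     * one-shot requests win; an empty queue is refilled from the repeating
     * list. Returns null when there is nothing to do.
     */
    String nextRequest() {
        if (requestQueue.isEmpty()) {
            requestQueue.addAll(repeatingRequests);
        }
        return requestQueue.poll();
    }
}

class RequestQueueDemo {
    public static void main(String[] args) {
        RequestQueueModel thread = new RequestQueueModel();
        thread.setRepeatingRequests(Arrays.asList("preview"));
        System.out.println(thread.nextRequest());
        thread.queueRequestList(Arrays.asList("still"));
        System.out.println(thread.nextRequest());
        System.out.println(thread.nextRequest());
    }
}
```

Under this policy a still capture slots in between two preview frames and preview resumes by itself afterwards, which matches the description of a still capture as one point within the preview flow.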

3.4.1.2 Binding the returned request info to the CaptureCallback

if (callback != null) {

mCaptureCallbackMap.put(requestInfo.getRequestId(),

new CaptureCallbackHolder(