Siamese Neural Networks for One-shot Image Recognition

I. Background

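Besides regularly reviewing the fundamentals, I also want to study the algorithms behind a few common scenarios: text similarity, image similarity, precision marketing and survival analysis. This time the topic is one algorithm for computing image similarity, the Siamese Network. One advantage of a Siamese Network is that it can decide whether two samples belong to the same class even when that class never appeared during training.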

II. Model

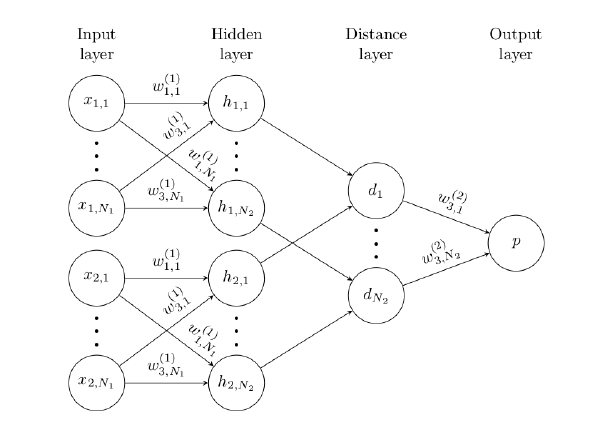

A simple siamese network with two hidden layers. The weights of every layer are shared between the two branches (twins).
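
Weight sharing means both branches are literally the same function. The minimal NumPy sketch below uses hypothetical sizes (16-d inputs, a 4-d ReLU layer) and an L1 distance as assumptions. It shows that exactly one set of weights exists, so the resulting distance is symmetric in its two inputs.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 16-d inputs, 4-d embeddings. Only ONE W and ONE b exist.
W = rng.normal(size=(16, 4))
b = rng.normal(size=4)


def embed(x):
    """Single ReLU hidden layer; both twins call this, so they share W and b."""
    return np.maximum(0.0, x @ W + b)


def siamese_distance(x1, x2):
    """L1 distance between the two twins' embeddings."""
    return np.abs(embed(x1) - embed(x2)).sum()


x1, x2 = rng.normal(size=16), rng.normal(size=16)
print(siamese_distance(x1, x2) == siamese_distance(x2, x1))  # True: order does not matter
print(siamese_distance(x1, x1))  # 0.0: identical inputs give identical embeddings
```

Because the twins cannot diverge, two very similar images cannot be mapped to very different embeddings just because they entered different branches.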

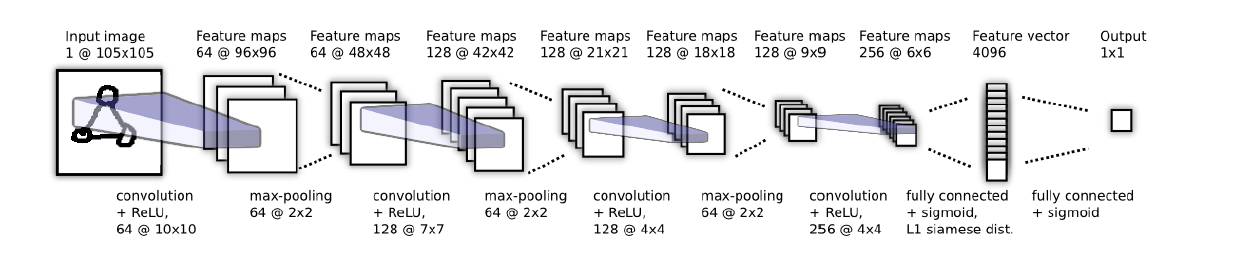

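The model starts with a stack of convolutional units that use ReLU activations to produce feature maps, optionally followed by max-pooling. In the formula, the 2 is the max-pooling stride and $\star$ denotes convolution.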

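1) $a_{1,m}^{(k)}=\text{maxpool}\left(\max\left(0,\ W_{l-1,l}^{(k)}\star h_{1,(l-1)}+b_{l}\right),\ 2\right)$

Here $k$ indexes the filter, $h_{1,(l-1)}$ is the output of layer $l-1$ of the first twin, and $b_l$ is the bias of layer $l$.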
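
The formula for one convolutional unit can be sketched in NumPy as a valid convolution, then a ReLU, then a 2×2 max-pool. This is a simplified sketch that assumes a single input channel and a single filter $k$; real layers also sum over input channels. Like CNN libraries, it implements "convolution" as cross-correlation. The helper names are hypothetical.

```python
import numpy as np


def conv2d_valid(h, W):
    """'Valid' 2-D cross-correlation of a single-channel map h with kernel W."""
    kh, kw = W.shape
    out_h, out_w = h.shape[0] - kh + 1, h.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(h[i:i + kh, j:j + kw] * W)
    return out


def max_pool(x, stride=2):
    """Non-overlapping max-pooling with a stride x stride window."""
    h, w = x.shape[0] // stride, x.shape[1] // stride
    x = x[:h * stride, :w * stride]  # drop any leftover border rows/cols
    return x.reshape(h, stride, w, stride).max(axis=(1, 3))


def conv_unit(h_prev, W, b):
    """a = maxpool(max(0, W * h_prev + b), 2)."""
    return max_pool(np.maximum(0.0, conv2d_valid(h_prev, W) + b), stride=2)


h = np.arange(36.0).reshape(6, 6)  # toy 6x6 input
a = conv_unit(h, np.ones((3, 3)), -100.0)
print(a)  # 6x6 -> conv 4x4 -> pool 2x2
```

Each step halves the spatial size after the convolution shrinks it, which is why the later code ends up with small feature maps before the dense layers.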

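The network architecture diagram does not draw the twin structure, but the two twins are joined immediately after the 4096-unit layer. The last convolutional layer feeds into this fully connected layer. An additional layer then computes the distance between the two twins' feature vectors, and a sigmoid turns that distance into a similarity score. The $\alpha_j$ are learned parameters that weight each component of the distance.

2) $p=\sigma\left(\sum_{j}\alpha_{j}\left|h_{1,L-1}^{(j)}-h_{2,L-1}^{(j)}\right|\right)$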
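
Formula 2) translates almost directly into NumPy. This is a sketch only. `h1` and `h2` stand for the two twins' last hidden vectors, and the `alpha` values below are made up rather than learned.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def similarity(h1, h2, alpha):
    """p = sigmoid(sum_j alpha_j * |h1_j - h2_j|) (weighted L1 distance + sigmoid)."""
    return sigmoid(np.sum(alpha * np.abs(h1 - h2)))


h1 = np.array([0.5, 1.0, 2.0])
h2 = np.array([0.5, 2.0, 0.0])
alpha = np.array([1.0, -1.0, -1.0])  # made-up weights; in the model they are learned

print(similarity(h1, h1, alpha))  # 0.5: zero distance gives sigmoid(0)
print(similarity(h1, h2, alpha))  # sigmoid(-3), about 0.047
```

A negative $\alpha_j$ lets a larger difference in component $j$ lower the similarity, so learning the signs and magnitudes of $\alpha$ decides which features matter.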

Loss function: cross-entropy is used as the loss.

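3) $L(x_{1}^{i},x_{2}^{i})=-\left[y(x_{1}^{i},x_{2}^{i})\log p(x_{1}^{i},x_{2}^{i})+\left(1-y(x_{1}^{i},x_{2}^{i})\right)\log\left(1-p(x_{1}^{i},x_{2}^{i})\right)\right]+\lambda^{T}|w|^{2}$

Here $y=1$ when the two images come from the same class and $y=0$ otherwise. $\lambda^{T}|w|^{2}$ is an L2 weight penalty. The leading minus sign turns the log-likelihood into a loss to be minimized.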
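
A minimal NumPy sketch of this loss, averaged over a batch of pairs, is shown below. It simplifies the regularizer to a single scalar `lam` times the sum of squared weights (the formula allows a per-layer vector $\lambda$). It also clips `p` to avoid `log(0)`. Both of these are assumptions of the sketch.

```python
import numpy as np


def siamese_bce_loss(y, p, weights, lam, eps=1e-7):
    """Mean binary cross-entropy over pairs plus an L2 penalty lam * sum(w^2).

    y: 1 for same-class pairs, 0 otherwise; p: predicted similarity in (0, 1).
    """
    p = np.clip(p, eps, 1 - eps)  # keep log() finite
    bce = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    return bce.mean() + lam * np.sum(weights ** 2)


y = np.array([1.0, 0.0])
p = np.array([0.5, 0.5])
print(siamese_bce_loss(y, p, np.zeros(3), lam=0.01))            # log(2), about 0.693
print(siamese_bce_loss(y, p, np.array([1.0, 2.0]), lam=0.1))    # log(2) + 0.5
```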

The rest of the paper covers parameter initialization, the optimization strategy and related computations.

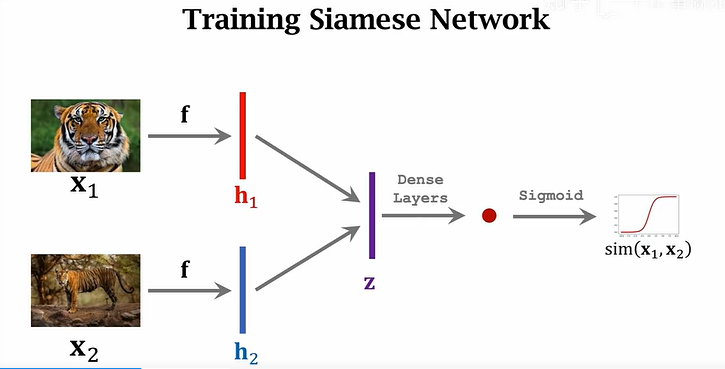

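Another source (Wang Shusen's few-shot learning lectures) builds the model as shown in the figure. There, f is one and the same convolutional network applied to both images. If the two images belong to the same class, the output is close to 1; otherwise it is close to 0.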

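The loss function is the Contrastive Loss, which works directly on distances. Here $d$ is the distance between the two embeddings, $y=1$ for a same-class pair and $y=0$ otherwise, and $margin$ is the minimum distance a different-class pair should keep.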

4) $L=\frac{1}{2N}\sum_{n=1}^{N}\left[y_{n}d_{n}^{2}+(1-y_{n})\max(margin-d_{n},0)^{2}\right]$
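
Formula 4) can be checked by hand with a small NumPy sketch. The distances below are made-up numbers. The Keras code in section IV averages without the extra factor 1/2, which only rescales the loss.

```python
import numpy as np


def contrastive_loss(y, d, margin=1.0):
    """L = 1/(2N) * sum[y*d^2 + (1-y)*max(margin - d, 0)^2]."""
    y = np.asarray(y, dtype=float)
    d = np.asarray(d, dtype=float)
    per_pair = y * d ** 2 + (1 - y) * np.maximum(margin - d, 0.0) ** 2
    return per_pair.sum() / (2 * len(d))


# same-class pair at d=0.2, different-class pairs at d=0.3 (too close) and d=1.5 (far enough)
print(contrastive_loss([1, 0, 0], [0.2, 0.3, 1.5]))  # (0.04 + 0.49 + 0) / 6
```

Different-class pairs already farther apart than the margin contribute nothing. The loss only pulls same-class pairs together and pushes close different-class pairs apart.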

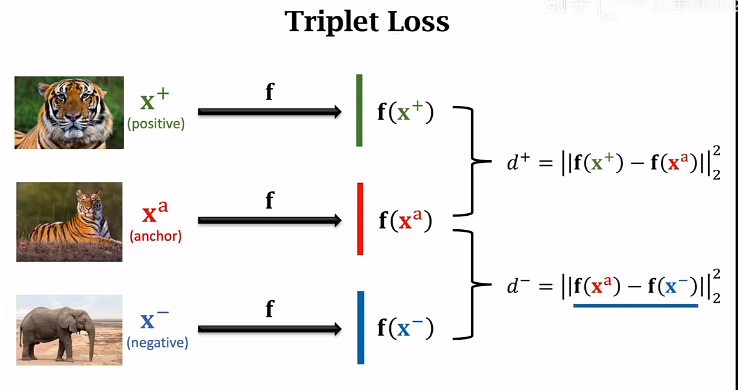

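Triplet Loss. First pick an image of some class as the anchor, then another image of the same class as the positive, and an image of a different class as the negative. Again f is the same (shared) convolutional network; after obtaining the three outputs, compute the distances. We want the learned model to make the anchor-positive distance $d^{+}$ small and the anchor-negative distance $d^{-}$ large. Define a margin $\alpha$. If $d^{-} \ge d^{+} + \alpha$, the two are already far enough apart, the triplet counts as correctly separated, and it contributes no loss. Otherwise the model cannot yet tell them apart, and the loss is $d^{+}+\alpha-d^{-}$. The Triplet Loss is therefore defined as: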

5) $L(x^{a},x^{+},x^{-})=\max(0,\ d^{+}+\alpha-d^{-})$
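
Here is a small NumPy sketch of formula 5) for a batch of triplets. The text does not fix the distance $d$, so this sketch assumes squared Euclidean distance between embeddings (the choice made in FaceNet). The embeddings and $\alpha = 0.2$ are made-up values.

```python
import numpy as np


def triplet_loss(f_a, f_p, f_n, alpha=0.2):
    """max(0, d+ + alpha - d-), with d = squared Euclidean distance (assumption)."""
    d_pos = np.sum((f_a - f_p) ** 2, axis=-1)  # anchor-positive distance d+
    d_neg = np.sum((f_a - f_n) ** 2, axis=-1)  # anchor-negative distance d-
    return np.maximum(0.0, d_pos + alpha - d_neg)


f_a = np.array([[0.0, 0.0], [0.0, 0.0]])  # anchors
f_p = np.array([[1.0, 0.0], [1.0, 0.0]])  # positives: d+ = 1 in both rows
f_n = np.array([[2.0, 0.0], [0.5, 0.5]])  # negatives: d- = 4 and d- = 0.5
print(triplet_loss(f_a, f_p, f_n))  # first triplet already separated, second is not
```

The first triplet satisfies $d^{-} \ge d^{+} + \alpha$ and gives 0. The second gives $1 + 0.2 - 0.5 = 0.7$.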

III. Example

I use a Kaggle notebook as the worked example: Plant Disease Using Siamese Network - Keras | Kaggle.

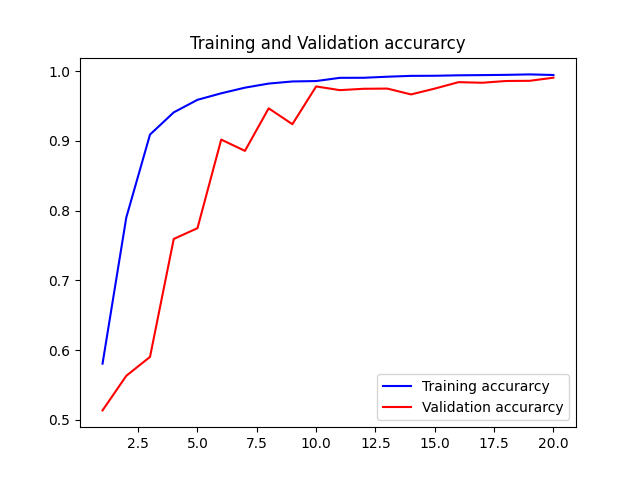

1) Training and validation accuracy

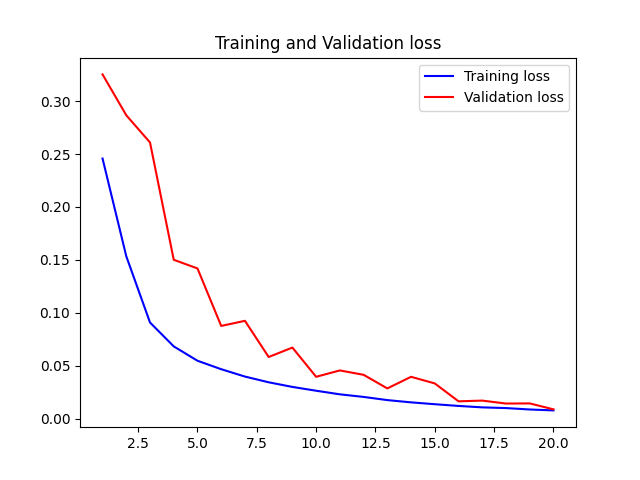

2) Training and validation loss

IV. Code

1) Versions

- tensorflow 2.11.0

- keras 2.11.0

- Computation on CPU only.

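2) configs

```python
selected_image_size = 224   # side length of the (pre-resized) source images
resize = True               # down-sample every image with img[::size, ::size]
total_sample_size = 10000   # pairs generated per label (genuine / impostor), 5k-50k
channel = 1                 # single-channel (grayscale) input
size = 2                    # down-sampling step: 224 -> 112
folder_count = 38           # number of class folders s1 ... s38
image_count = 20            # images used per folder (0-50)
epochs = 20
batch_size = 256 if resize else 64

# Dataset root: each class lives in a sub-folder s<k>/ containing 1.jpg, 2.jpg, ...
path = "C:/Users/nan.wu2/PycharmProjects/job/study/data/PlantVillage_resize_224/"
print(path)
```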
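
The configuration values determine the model's input size and how many pairs are generated. The arithmetic sketch below copies the values above. It shows why the network input is 112×112, and why, with 38 folders, `int(10000 / 38) * 38` yields only 9994 genuine pairs. That leaves 6 rows of the preallocated genuine-pair array all-zero unless they are trimmed.

```python
# Values copied from configs
selected_image_size = 224
size = 2
total_sample_size = 10000
folder_count = 38
image_count = 20

img_dim = len(range(0, selected_image_size, size))  # pixels kept by img[::size]
genuine = int(total_sample_size / folder_count) * folder_count   # pairs actually filled
impostor = int(total_sample_size / image_count) * image_count
print(img_dim, genuine, impostor)  # 112 9994 10000
```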

3) prepare_data

```python
import numpy as np
import matplotlib.image as mpimg

import configs


def read_image(folder, index, size):
    # Read <path>/s<folder>/<index>.jpg; images are assumed to be single-channel (grayscale)
    img = mpimg.imread(configs.path + 's' + str(folder) + '/' + str(index) + '.jpg')
    if configs.resize:
        img = img[::size, ::size]  # slicing with step `size` keeps every size-th pixel
    return img


def get_data(size, total_sample_size):
    image = read_image(1, 1, size)
    dim1, dim2 = image.shape[0], image.shape[1]

    # Genuine pairs: two different images from the same folder, label 1.
    # float32 halves the memory of the float64 default.
    x_genuine_pair = np.zeros([total_sample_size, 2, 1, dim1, dim2], dtype=np.float32)
    y_genuine = np.zeros([total_sample_size, 1], dtype=np.float32)
    count = 0
    for i in range(configs.folder_count):
        for _ in range(int(total_sample_size / configs.folder_count)):
            # Start with equal indices so the loop draws until they differ
            # (starting from 0 and 2 would skip the loop and always pick 1.jpg / 3.jpg)
            ind1 = ind2 = 0
            while ind1 == ind2:
                ind1 = np.random.randint(configs.image_count)
                ind2 = np.random.randint(configs.image_count)
            x_genuine_pair[count, 0, 0, :, :] = read_image(i + 1, ind1 + 1, size)
            x_genuine_pair[count, 1, 0, :, :] = read_image(i + 1, ind2 + 1, size)
            y_genuine[count] = 1
            count += 1
    # total_sample_size may not divide evenly by folder_count: drop unfilled rows
    x_genuine_pair, y_genuine = x_genuine_pair[:count], y_genuine[:count]

    # Impostor pairs: the same image index from two different folders, label 0.
    count = 0
    x_impossible_pair = np.zeros([total_sample_size, 2, 1, dim1, dim2], dtype=np.float32)
    y_impossible = np.zeros([total_sample_size, 1], dtype=np.float32)
    for _ in range(int(total_sample_size / configs.image_count)):
        for j in range(configs.image_count):
            ind1 = ind2 = 0
            while ind1 == ind2:
                ind1 = np.random.randint(configs.folder_count)
                ind2 = np.random.randint(configs.folder_count)
            x_impossible_pair[count, 0, 0, :, :] = read_image(ind1 + 1, j + 1, size)
            x_impossible_pair[count, 1, 0, :, :] = read_image(ind2 + 1, j + 1, size)
            y_impossible[count] = 0
            count += 1
    x_impossible_pair, y_impossible = x_impossible_pair[:count], y_impossible[:count]

    X = np.concatenate([x_genuine_pair, x_impossible_pair], axis=0) / 255.0  # scale to [0, 1]
    Y = np.concatenate([y_genuine, y_impossible], axis=0)
    return X, Y
```
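
To experiment with the pairing logic without the image folders, the next block is a hypothetical in-memory variant. It assumes the images are already loaded into an array of shape (classes, images per class, H, W); `make_pairs` and that layout are illustrative, not part of the notebook. It follows the same rules as `get_data`: genuine pairs take two distinct images of one class, and impostor pairs take the same image index from two distinct classes. Sampling without replacement replaces the `while` loops.

```python
import numpy as np


def make_pairs(images, n_pairs_each, rng):
    """Hypothetical helper: images has shape (n_classes, n_per_class, H, W)."""
    n_classes, n_per_class, h, w = images.shape
    x = np.zeros((2 * n_pairs_each, 2, h, w), dtype=np.float32)
    y = np.zeros(2 * n_pairs_each, dtype=np.float32)
    for n in range(n_pairs_each):
        # Genuine pair: cycle through classes, two *distinct* image indices, label 1
        c = n % n_classes
        i, j = rng.choice(n_per_class, size=2, replace=False)
        x[n, 0], x[n, 1] = images[c, i], images[c, j]
        y[n] = 1.0
        # Impostor pair: two *distinct* classes, same image index, label 0
        c1, c2 = rng.choice(n_classes, size=2, replace=False)
        k = n % n_per_class
        x[n_pairs_each + n, 0], x[n_pairs_each + n, 1] = images[c1, k], images[c2, k]
    return x, y


# Toy data: every pixel of image (c, i) holds the value 100*c + i
imgs = np.zeros((3, 4, 2, 2), dtype=np.float32)
for c in range(3):
    for i in range(4):
        imgs[c, i] = 100 * c + i
x, y = make_pairs(imgs, 6, np.random.default_rng(0))
print(x.shape, y)  # (12, 2, 2, 2), six 1s followed by six 0s
```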

4) siamese_network_funcs

```python
import warnings

import numpy as np
from keras import backend as K
from keras.layers import Activation, Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from keras.models import Sequential

warnings.filterwarnings('ignore')


def build_base_network(input_shape):
    """Shared twin: three conv blocks, then two dense layers producing a 50-d embedding."""
    seq = Sequential()
    nb_filter = [16, 32, 16]  # number of filters (output channels) per conv block
    # For an m x n input and an a x b kernel, padding 'valid' gives (m-a+1) x (n-b+1),
    # 'same' gives m x n.
    # data_format: 'channels_first' is 1 x 112 x 112, 'channels_last' is 112 x 112 x 1.
    # conv1
    seq.add(Conv2D(nb_filter[0], kernel_size=(3, 3), input_shape=input_shape,
                   padding='valid', data_format="channels_last"))
    seq.add(Activation('relu'))
    seq.add(MaxPooling2D(pool_size=(2, 2), data_format="channels_last"))
    seq.add(Dropout(.25))
    # conv2
    seq.add(Conv2D(nb_filter[1], kernel_size=(3, 3), padding='valid', data_format="channels_last"))
    seq.add(Activation('relu'))
    seq.add(MaxPooling2D(pool_size=(2, 2), data_format="channels_last"))
    seq.add(Dropout(.25))
    # conv3
    seq.add(Conv2D(nb_filter[2], kernel_size=(3, 3), padding='valid', data_format="channels_last"))
    seq.add(Activation('relu'))
    seq.add(MaxPooling2D(pool_size=(2, 2), data_format="channels_last"))
    seq.add(Dropout(.25))
    # flatten + dense
    seq.add(Flatten())
    seq.add(Dense(128, activation='relu'))
    seq.add(Dropout(0.1))
    seq.add(Dense(50, activation='relu'))
    return seq


def euclidean_distance(vects):
    x, y = vects
    sum_square = K.sum(K.square(x - y), axis=1, keepdims=True)
    # Clamp before sqrt: the gradient of sqrt is infinite at 0 and can produce NaNs
    return K.sqrt(K.maximum(sum_square, K.epsilon()))


def eucl_dist_output_shape(shapes):
    shape1, shape2 = shapes
    return (shape1[0], 1)


def contrastive_loss(y_true, y_pred):
    """Contrastive loss with margin 1, averaged over the batch; y_pred is the distance d."""
    margin = 1
    y_true = K.cast(y_true, y_pred.dtype)
    return K.mean(y_true * K.square(y_pred) + (1 - y_true) * K.square(K.maximum(margin - y_pred, 0)))


def compute_accuracy(predictions, labels):
    '''Classification accuracy with a fixed threshold on distances (same class if d < 0.5).'''
    return np.mean((predictions.ravel() < 0.5) == labels.ravel())


def accuracy(y_true, y_pred):
    '''Keras metric: classification accuracy with a fixed threshold on distances.'''
    return K.mean(K.equal(y_true, K.cast(y_pred < 0.5, y_true.dtype)))
```
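
The spatial size inside `build_base_network` can be traced by hand, which helps explain what `Flatten()` hands to `Dense(128)`. The helper names below are hypothetical; the arithmetic follows Keras defaults, under which `MaxPooling2D` uses `strides = pool_size` and `padding='valid'`.

```python
def conv_valid(n, k=3):
    """Output side length of a 'valid' k x k convolution."""
    return n - k + 1


def pool(n, p=2):
    """Output side length of a p x p max-pool with stride p, 'valid' padding."""
    return n // p


def flattened_size(input_size=112, filters=(16, 32, 16)):
    n = input_size
    for _ in filters:  # one conv + pool block per filter count
        n = pool(conv_valid(n))
    return n, n * n * filters[-1]


print(flattened_size())  # (12, 2304): 112 -> 110 -> 55 -> 53 -> 26 -> 24 -> 12
```

So the first dense layer sees a 2304-d vector. Without the 2× down-sampling (224×224 input) it would see 26·26·16 = 10816 values.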

5) Main script (training and evaluation)

```python
import warnings

import matplotlib.pyplot as plt
from keras.callbacks import EarlyStopping
from keras.layers import Input, Lambda
from keras.models import Model
from keras.optimizers import RMSprop
from sklearn.model_selection import train_test_split

import configs
import prepare_data
import siamese_network_funcs

warnings.filterwarnings('ignore')

X, Y = prepare_data.get_data(configs.size, configs.total_sample_size)
# (N, 2, 1, H, W) -> (N, 2, H, W, 1): channels_last layout (112 x 112 with the default configs)
dim1, dim2 = X.shape[3], X.shape[4]
X = X.reshape(X.shape[0], 2, dim1, dim2, 1)

# Optional: check the class balance
# import numpy as np
# import seaborn as sns
# plt.figure(figsize=(10, 5))
# sns.barplot(x=[0, 1], y=[np.sum(Y == 0), np.sum(Y == 1)])
# plt.show()

x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=.15)
print('x_train', x_train.shape)
print('x_test', x_test.shape)
print('y_train', y_train.shape)
print('y_test', y_test.shape)

# channels_first (1, 112, 112) is typical on GPU; channels_last (112, 112, 1) works on CPU
input_dim = (dim1, dim2, 1)
img_a = Input(shape=input_dim)
img_b = Input(shape=input_dim)
print("input_dim " + str(input_dim))

# The same base_network instance processes both inputs, so the twins share weights
base_network = siamese_network_funcs.build_base_network(input_shape=input_dim)
feat_vecs_a = base_network(img_a)
feat_vecs_b = base_network(img_b)
distance = Lambda(siamese_network_funcs.euclidean_distance,
                  output_shape=siamese_network_funcs.eucl_dist_output_shape)([feat_vecs_a, feat_vecs_b])

# Alternative optimizer: optimizers.Adam(learning_rate=0.0001)
rms = RMSprop()
earlyStopping = EarlyStopping(monitor='val_loss', min_delta=0, patience=3, verbose=1,
                              restore_best_weights=True)
callback_list = [earlyStopping]

model = Model(inputs=[img_a, img_b], outputs=distance)
model.compile(loss=siamese_network_funcs.contrastive_loss, optimizer=rms,
              metrics=[siamese_network_funcs.accuracy])
model.summary()

img_1 = x_train[:, 0]
img_2 = x_train[:, 1]
print('x_train shape' + str(img_1.shape))
print('y_train' + str(y_train.shape))
history = model.fit([img_1, img_2], y_train, validation_split=.20, batch_size=configs.batch_size,
                    verbose=1, epochs=configs.epochs, callbacks=callback_list)

# Option 1: save weights + architecture
model.save_weights('model_weights.h5')
with open('model_architecture.json', 'w') as f:
    f.write(model.to_json())
print('saved')

pred = model.predict([x_test[:, 0], x_test[:, 1]])
print('* Accuracy on test set: %0.2f%%' % (100 * siamese_network_funcs.compute_accuracy(pred, y_test)))
pred = model.predict([x_train[:, 0], x_train[:, 1]])
print('* Accuracy on training set: %0.2f%%' % (100 * siamese_network_funcs.compute_accuracy(pred, y_train)))

acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(1, len(acc) + 1)

# Training and validation accuracy
plt.plot(epochs, acc, 'b', label='Training accuracy')
plt.plot(epochs, val_acc, 'r', label='Validation accuracy')
plt.title('Training and Validation accuracy')
plt.legend()
plt.figure()
# Training and validation loss
plt.plot(epochs, loss, 'b', label='Training loss')
plt.plot(epochs, val_loss, 'r', label='Validation loss')
plt.title('Training and Validation loss')
plt.legend()
plt.show()
```
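
The script above only scores image pairs. The one-shot use case from the background section compares a query image against one support image per class and picks the class at the smallest distance. The sketch below works on embeddings that are assumed to be precomputed, for example with `base_network.predict(...)`. `one_shot_predict` and the toy vectors are hypothetical.

```python
import numpy as np


def one_shot_predict(query_emb, support_embs):
    """Return (index of the closest support class, all Euclidean distances)."""
    d = np.sqrt(np.sum((support_embs - query_emb) ** 2, axis=1))
    return int(np.argmin(d)), d


# Toy 2-d embeddings: one support example for each of 3 classes
support = np.array([[0.0, 0.0], [3.0, 4.0], [10.0, 0.0]])
query = np.array([2.5, 4.0])
idx, d = one_shot_predict(query, support)
print(idx, d)  # class 1 is closest (distance 0.5)
```

None of the three classes needs to appear in training; only the embedding function does, which is the property the Siamese setup relies on.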

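References:

1. *Siamese Neural Networks for One-shot Image Recognition*

2. Wang Shusen (王树森), Few-Shot Learning, lecture 2/3 (video)

3. Plant Disease Using Siamese Network - Keras (Kaggle notebook): https://www.kaggle.com/code/bulentsiyah/plant-disease-using-siamese-network-keras/notebook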