Socket与系统调用深度分析

一、系统调用

linux内核中设置了一组用于实现系统功能的子程序,称为系统调用。和普通库函数调用相似,只是系统调用由操作系统核心提供,运行于核心态,而普通的函数调用由函数库或用户自己提供,运行于用户态。

在Linux中,每个系统调用被赋予一个系统调用号。通过这个独一无二的号就可以关联系统调用。当用户空间的进程执行一个系统调用的时候,这个系统调用号就被用来指明到底是要执行哪个系统调用。系统调用号一旦分配就不能再有任何变更,否则编译好的应用程序就会崩溃。

内核记录了系统调用表中的所有已经注册过的系统调用列表,存储在system_call_table中。当应用程序由于int 0x80指令而陷入内核态的时候,中断处理函数system_call()开始发挥作用。system_call()函数会根据传入的系统调用号(在x86上系统调用号是通过eax寄存器传递给内核的)来调用对应的内核函数,从而完成相应的系统调用。

当我们进行编程时,可以利用系统封装好的API来间接进行调用系统调用。一个API里面可能对应一个系统调用,也可能一个也没有,封装例程会将系统调用封装好,一个例程往往对应一个系统调用,当用户程序在执行API的xyz()调用时,将会进入封装例程xyz()中,由封装例程里的中断汇编代码调用系统调用处理程序system_call(),然后系统调用处理程序调用系统调用服务程序sys_xyz()。

二、结合socket相关系统调用的内核处理函数深入分析

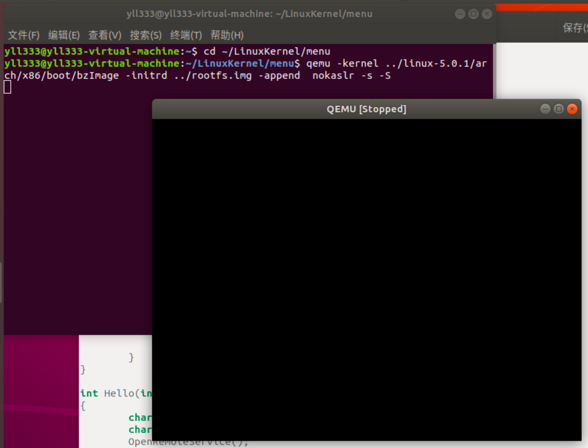

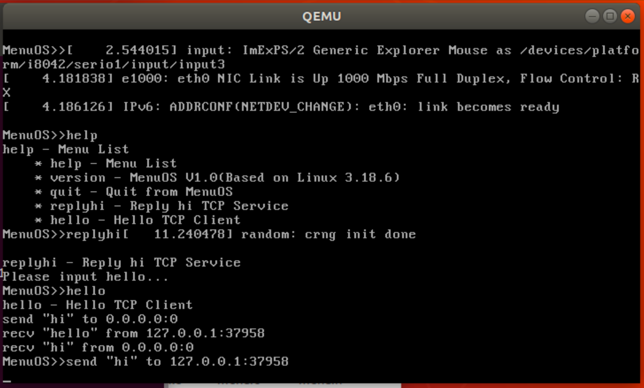

经过上一次实验,MenuOS上已经集成了tcp/ip的相关程序,即hello和replyhi程序,现在依据这个socket程序来进行系统调用的跟踪。

1.首先,启动gdb调试:

2.从用户态进入内核:

根据上课时老师的介绍,我们知道内核的初始化完成了以下的函数调用过程:

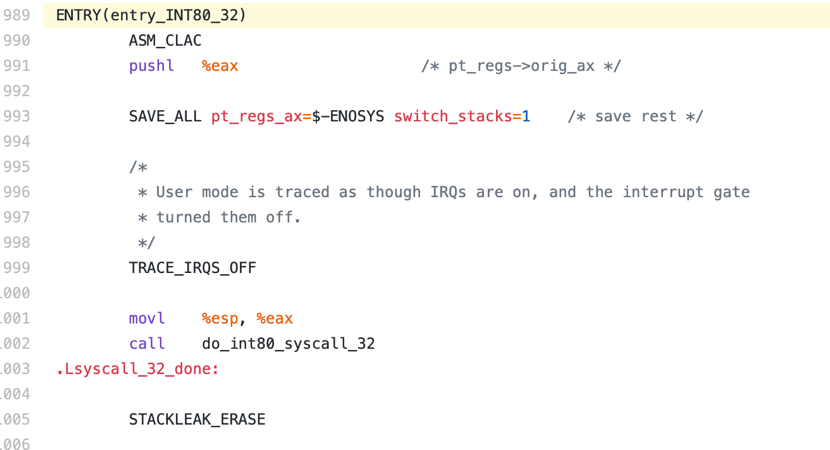

对于x86-32位系统是:start_kernel --> trap_init --> idt_setup_traps --> 0x80--entry_INT80_32,在5.0内核int0x80对应的中断服务例程是entry_INT80_32,而不是原来的名称system_call了。我们在设置断点的时候只能跟踪到前三个函数,因为entry_INT80_32是一段汇编代码,在内核初始化的时候,int0x80指令和entry_INT80_32会进行绑定,因此在用户态程序发起系统调用,执行到int0x80这条指令的时候,程序会直接trap进enrty_INT80_32这段代码中。

看一下系统调用的服务程序,是一段汇编代码:

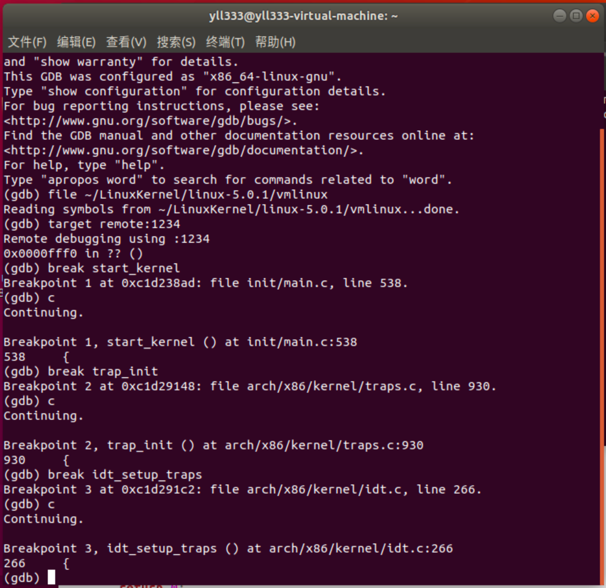

分别在start_kernel、trap_init和idt_setup_traps打上断点,验证一下:

根据断点信息提示,start_kernel()函数在init/main.c程序的第538行,看一下代码:

asmlinkage __visible void __init start_kernel(void) { char *command_line; char *after_dashes; set_task_stack_end_magic(&init_task); smp_setup_processor_id(); debug_objects_early_init(); cgroup_init_early(); local_irq_disable(); early_boot_irqs_disabled = true; /* * Interrupts are still disabled. Do necessary setups, then * enable them. */ boot_cpu_init(); page_address_init(); pr_notice("%s", linux_banner); setup_arch(&command_line); /* * Set up the the initial canary and entropy after arch * and after adding latent and command line entropy. */ add_latent_entropy(); add_device_randomness(command_line, strlen(command_line)); boot_init_stack_canary(); mm_init_cpumask(&init_mm); setup_command_line(command_line); setup_nr_cpu_ids(); setup_per_cpu_areas(); smp_prepare_boot_cpu(); /* arch-specific boot-cpu hooks */ boot_cpu_hotplug_init(); build_all_zonelists(NULL); page_alloc_init(); pr_notice("Kernel command line: %s\n", boot_command_line); parse_early_param(); after_dashes = parse_args("Booting kernel", static_command_line, __start___param, __stop___param - __start___param, -1, -1, NULL, &unknown_bootoption); if (!IS_ERR_OR_NULL(after_dashes)) parse_args("Setting init args", after_dashes, NULL, 0, -1, -1, NULL, set_init_arg); jump_label_init(); /* * These use large bootmem allocations and must precede * kmem_cache_init() */ setup_log_buf(0); vfs_caches_init_early(); sort_main_extable(); trap_init(); mm_init(); ftrace_init(); /* trace_printk can be enabled here */ early_trace_init(); /* * Set up the scheduler prior starting any interrupts (such as the * timer interrupt). Full topology setup happens at smp_init() * time - but meanwhile we still have a functioning scheduler. */ sched_init(); /* * Disable preemption - early bootup scheduling is extremely * fragile until we cpu_idle() for the first time. */ preempt_disable(); if (WARN(!irqs_disabled(), "Interrupts were enabled *very* early, fixing it\n")) local_irq_disable(); radix_tree_init(); /* * Set up housekeeping before setting up workqueues to allow the unbound * workqueue to take non-housekeeping into account. */ housekeeping_init(); /* * Allow workqueue creation and work item queueing/cancelling * early. Work item execution depends on kthreads and starts after * workqueue_init(). */ workqueue_init_early(); rcu_init(); /* Trace events are available after this */ trace_init(); if (initcall_debug) initcall_debug_enable(); context_tracking_init(); /* init some links before init_ISA_irqs() */ early_irq_init(); init_IRQ(); tick_init(); rcu_init_nohz(); init_timers(); hrtimers_init(); softirq_init(); timekeeping_init(); time_init(); printk_safe_init(); perf_event_init(); profile_init(); call_function_init(); WARN(!irqs_disabled(), "Interrupts were enabled early\n"); early_boot_irqs_disabled = false; local_irq_enable(); kmem_cache_init_late(); /* * HACK ALERT! This is early. We're enabling the console before * we've done PCI setups etc, and console_init() must be aware of * this. But we do want output early, in case something goes wrong. */ console_init(); if (panic_later) panic("Too many boot %s vars at `%s'", panic_later, panic_param); lockdep_init(); /* * Need to run this when irqs are enabled, because it wants * to self-test [hard/soft]-irqs on/off lock inversion bugs * too: */ locking_selftest(); /* * This needs to be called before any devices perform DMA * operations that might use the SWIOTLB bounce buffers. It will * mark the bounce buffers as decrypted so that their usage will * not cause "plain-text" data to be decrypted when accessed. */ mem_encrypt_init(); #ifdef CONFIG_BLK_DEV_INITRD if (initrd_start && !initrd_below_start_ok && page_to_pfn(virt_to_page((void *)initrd_start)) < min_low_pfn) { pr_crit("initrd overwritten (0x%08lx < 0x%08lx) - disabling it.\n", page_to_pfn(virt_to_page((void *)initrd_start)), min_low_pfn); initrd_start = 0; } #endif kmemleak_init(); setup_per_cpu_pageset(); numa_policy_init(); acpi_early_init(); if (late_time_init) late_time_init(); sched_clock_init(); calibrate_delay(); pid_idr_init(); anon_vma_init(); #ifdef CONFIG_X86 if (efi_enabled(EFI_RUNTIME_SERVICES)) efi_enter_virtual_mode(); #endif thread_stack_cache_init(); cred_init(); fork_init(); proc_caches_init(); uts_ns_init(); buffer_init(); key_init(); security_init(); dbg_late_init(); vfs_caches_init(); pagecache_init(); signals_init(); seq_file_init(); proc_root_init(); nsfs_init(); cpuset_init(); cgroup_init(); taskstats_init_early(); delayacct_init(); check_bugs(); acpi_subsystem_init(); arch_post_acpi_subsys_init(); sfi_init_late(); /* Do the rest non-__init'ed, we're now alive */ arch_call_rest_init(); }

至此,我们完成了内核的初始化过程。接下来,来看看socket如何启用系统调用,内核具体调用了哪些函数。

3.socket系统调用

所有的socket系统调用的总入口是sys_socketcall(),在include/linux/syscalls.h中定义:

/* ipc/shm.c */ asmlinkage long sys_shmget(key_t key, size_t size, int flag); asmlinkage long sys_shmctl(int shmid, int cmd, struct shmid_ds __user *buf); asmlinkage long sys_shmat(int shmid, char __user *shmaddr, int shmflg); asmlinkage long sys_shmdt(char __user *shmaddr); /* net/socket.c */ asmlinkage long sys_socket(int, int, int); asmlinkage long sys_socketpair(int, int, int, int __user *); asmlinkage long sys_bind(int, struct sockaddr __user *, int); asmlinkage long sys_listen(int, int); asmlinkage long sys_accept(int, struct sockaddr __user *, int __user *); asmlinkage long sys_connect(int, struct sockaddr __user *, int); asmlinkage long sys_getsockname(int, struct sockaddr __user *, int __user *); asmlinkage long sys_getpeername(int, struct sockaddr __user *, int __user *); asmlinkage long sys_sendto(int, void __user *, size_t, unsigned, struct sockaddr __user *, int); asmlinkage long sys_recvfrom(int, void __user *, size_t, unsigned, struct sockaddr __user *, int __user *); asmlinkage long sys_setsockopt(int fd, int level, int optname, char __user *optval, int optlen); asmlinkage long sys_getsockopt(int fd, int level, int optname, char __user *optval, int __user *optlen); asmlinkage long sys_shutdown(int, int); asmlinkage long sys_sendmsg(int fd, struct user_msghdr __user *msg, unsigned flags); asmlinkage long sys_recvmsg(int fd, struct user_msghdr __user *msg, unsigned flags);

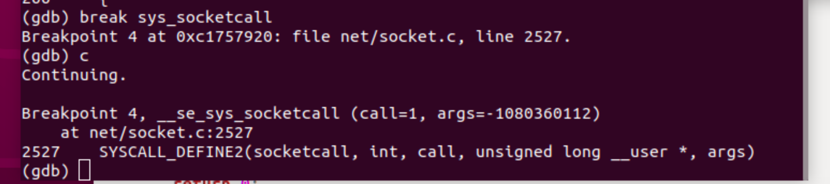

那么在sys_socketcall()处打个断点看看:

由断点处给出的信息可知,在net/socket.c中有一个函数SYSCALL_DEFINE2(socketcall, int, call, unsigned long __user *, args),SYSCALL_DEFINE2是一个宏,这个函数便是socket调用的入口。看看具体的代码:

SYSCALL_DEFINE2(socketcall, int, call, unsigned long __user *, args) { unsigned long a[AUDITSC_ARGS]; unsigned long a0, a1; int err; unsigned int len; if (call < 1 || call > SYS_SENDMMSG) return -EINVAL; call = array_index_nospec(call, SYS_SENDMMSG + 1); len = nargs[call]; if (len > sizeof(a)) return -EINVAL; /* copy_from_user should be SMP safe. */ if (copy_from_user(a, args, len)) return -EFAULT; err = audit_socketcall(nargs[call] / sizeof(unsigned long), a); if (err) return err; a0 = a[0]; a1 = a[1]; switch (call) { case SYS_SOCKET: err = __sys_socket(a0, a1, a[2]); break; case SYS_BIND: err = __sys_bind(a0, (struct sockaddr __user *)a1, a[2]); break; case SYS_CONNECT: err = __sys_connect(a0, (struct sockaddr __user *)a1, a[2]); break; case SYS_LISTEN: err = __sys_listen(a0, a1); break; case SYS_ACCEPT: err = __sys_accept4(a0, (struct sockaddr __user *)a1, (int __user *)a[2], 0); break; case SYS_GETSOCKNAME: err = __sys_getsockname(a0, (struct sockaddr __user *)a1, (int __user *)a[2]); break; case SYS_GETPEERNAME: err = __sys_getpeername(a0, (struct sockaddr __user *)a1, (int __user *)a[2]); break; case SYS_SOCKETPAIR: err = __sys_socketpair(a0, a1, a[2], (int __user *)a[3]); break; case SYS_SEND: err = __sys_sendto(a0, (void __user *)a1, a[2], a[3], NULL, 0); break; case SYS_SENDTO: err = __sys_sendto(a0, (void __user *)a1, a[2], a[3], (struct sockaddr __user *)a[4], a[5]); break; case SYS_RECV: err = __sys_recvfrom(a0, (void __user *)a1, a[2], a[3], NULL, NULL); break; case SYS_RECVFROM: err = __sys_recvfrom(a0, (void __user *)a1, a[2], a[3], (struct sockaddr __user *)a[4], (int __user *)a[5]); break; case SYS_SHUTDOWN: err = __sys_shutdown(a0, a1); break; case SYS_SETSOCKOPT: err = __sys_setsockopt(a0, a1, a[2], (char __user *)a[3], a[4]); break; case SYS_GETSOCKOPT: err = __sys_getsockopt(a0, a1, a[2], (char __user *)a[3], (int __user *)a[4]); break; case SYS_SENDMSG: err = __sys_sendmsg(a0, (struct user_msghdr __user *)a1, a[2], true); break; case SYS_SENDMMSG: err = __sys_sendmmsg(a0, (struct mmsghdr __user *)a1, a[2], a[3], true); break; case SYS_RECVMSG: err = __sys_recvmsg(a0, (struct user_msghdr __user *)a1, a[2], true); break; case SYS_RECVMMSG: if (IS_ENABLED(CONFIG_64BIT) || !IS_ENABLED(CONFIG_64BIT_TIME)) err = __sys_recvmmsg(a0, (struct mmsghdr __user *)a1, a[2], a[3], (struct __kernel_timespec __user *)a[4], NULL); else err = __sys_recvmmsg(a0, (struct mmsghdr __user *)a1, a[2], a[3], NULL, (struct old_timespec32 __user *)a[4]); break; case SYS_ACCEPT4: err = __sys_accept4(a0, (struct sockaddr __user *)a1, (int __user *)a[2], a[3]); break; default: err = -EINVAL; break; } return err; }

第一个判断是API序号鉴定,需要在socket接口调用范围内。

第二个判断是根据API序号取得该API的参数个数,nargs数组中定义。

第三个判断是将参数从用户态args拷到内核态a中。

第四个判断是selinux的一些鉴权过程,可以忽略。

之后的switch-case语句是根据对应的API序号进行调用,并将参数强制类型转换为对应API需要的参数类型。比如对应call参数传入的是SYS_BIND()函数,那么就会调用到内核处理程序__sys_bind。这是Socket相关系统调用的内核处理函数内部通过“多态机制”对不同的网络协议进行的封装方法。

4.在socket程序中系统调用的具体实现

对于我们使用到的socket程序中的replyhi和hello在建立通讯的过程中,使用到了哪些函数呢?可以看一下源码中的相关代码:

#include"syswrapper.h" #define MAX_CONNECT_QUEUE 1024 int Replyhi() { char szBuf[MAX_BUF_LEN] = "\0"; char szReplyMsg[MAX_BUF_LEN] = "hi\0"; InitializeService(); while (1) { ServiceStart(); RecvMsg(szBuf); SendMsg(szReplyMsg); ServiceStop(); } ShutdownService(); return 0; } int StartReplyhi(int argc, char *argv[]) { int pid; /* fork another process */ pid = fork(); if (pid < 0) { /* error occurred */ fprintf(stderr, "Fork Failed!"); exit(-1); } else if (pid == 0) { /* child process */ Replyhi(); printf("Reply hi TCP Service Started!\n"); } else { /* parent process */ printf("Please input hello...\n"); } } int Hello(int argc, char *argv[]) { char szBuf[MAX_BUF_LEN] = "\0"; char szMsg[MAX_BUF_LEN] = "hello\0"; OpenRemoteService(); SendMsg(szMsg); RecvMsg(szBuf); CloseRemoteService(); return 0; }

可以看出,对应replyhi指令的函数运行顺序是:

StartReplyhi()->Replyhi()

其中,在Replyhi()函数中,函数的调用顺序为:

InitializeService()->ServiceStart()->RecvMsg()-> SendMsg()->ServiceStop()

这些函数定义在头文件中:

/********************************************************************/ /* Copyright (C) SSE-USTC, 2012 */ /* */ /* FILE NAME : syswraper.h */ /* PRINCIPAL AUTHOR : Mengning */ /* SUBSYSTEM NAME : system */ /* MODULE NAME : syswraper */ /* LANGUAGE : C */ /* TARGET ENVIRONMENT : Linux */ /* DATE OF FIRST RELEASE : 2012/11/22 */ /* DESCRIPTION : the interface to Linux system(socket) */ /********************************************************************/ /* * Revision log: * * Created by Mengning,2012/11/22 * */ #ifndef _SYS_WRAPER_H_ #define _SYS_WRAPER_H_ #include<stdio.h> #include<arpa/inet.h> /* internet socket */ #include<string.h> //#define NDEBUG #include<assert.h> #define PORT 5001 #define IP_ADDR "127.0.0.1" #define MAX_BUF_LEN 1024 /* private macro */ #define PrepareSocket(addr,port) \ int sockfd = -1; \ struct sockaddr_in serveraddr; \ struct sockaddr_in clientaddr; \ socklen_t addr_len = sizeof(struct sockaddr); \ serveraddr.sin_family = AF_INET; \ serveraddr.sin_port = htons(port); \ serveraddr.sin_addr.s_addr = inet_addr(addr); \ memset(&serveraddr.sin_zero, 0, 8); \ sockfd = socket(PF_INET,SOCK_STREAM,0); #define InitServer() \ int ret = bind( sockfd, \ (struct sockaddr *)&serveraddr, \ sizeof(struct sockaddr)); \ if(ret == -1) \ { \ fprintf(stderr,"Bind Error,%s:%d\n", \ __FILE__,__LINE__); \ close(sockfd); \ return -1; \ } \ listen(sockfd,MAX_CONNECT_QUEUE); #define InitClient() \ int ret = connect(sockfd, \ (struct sockaddr *)&serveraddr, \ sizeof(struct sockaddr)); \ if(ret == -1) \ { \ fprintf(stderr,"Connect Error,%s:%d\n", \ __FILE__,__LINE__); \ return -1; \ } /* public macro */ #define InitializeService() \ PrepareSocket(IP_ADDR,PORT); \ InitServer(); #define ShutdownService() \ close(sockfd); #define OpenRemoteService() \ PrepareSocket(IP_ADDR,PORT); \ InitClient(); \ int newfd = sockfd; #define CloseRemoteService() \ close(sockfd); #define ServiceStart() \ int newfd = accept( sockfd, \ (struct sockaddr *)&clientaddr, \ &addr_len); \ if(newfd == -1) \ { \ fprintf(stderr,"Accept Error,%s:%d\n", \ __FILE__,__LINE__); \ } #define ServiceStop() \ close(newfd); #define RecvMsg(buf) \ ret = recv(newfd,buf,MAX_BUF_LEN,0); \ if(ret > 0) \ { \ printf("recv \"%s\" from %s:%d\n", \ buf, \ (char*)inet_ntoa(clientaddr.sin_addr), \ ntohs(clientaddr.sin_port)); \ } #define SendMsg(buf) \ ret = send(newfd,buf,strlen(buf),0); \ if(ret > 0) \ { \ printf("send \"hi\" to %s:%d\n", \ (char*)inet_ntoa(clientaddr.sin_addr), \ ntohs(clientaddr.sin_port)); \ } #endif /* _SYS_WRAPER_H_ */

首先在InitializeService()函数中,调用了PrepareSocket()函数,对数据结构进行了初始化,并初始化了系统调用函数socket (),之后调用了InitServer()函数,再去看InitServer()的定义,这里调用了系统调用函数,bind(),listen(),close()函数,接着分析ServiceStart()等函数,分别调用了accept(),recv(),send(),close()系统调用函数。

那么这些函数到底是如何对应到SYSCALL_DEFINE2(socketcall, int, call, unsigned long __user *, args)中的socketcall的呢?在arch/x86/entry/syscalls/syscall_32.tbl中,定义了这些系统调用的对应列表:

0 i386 restart_syscall sys_restart_syscall __ia32_sys_restart_syscall 1 i386 exit sys_exit __ia32_sys_exit 2 i386 fork sys_fork __ia32_sys_fork 3 i386 read sys_read __ia32_sys_read 4 i386 write sys_write __ia32_sys_write 5 i386 open sys_open __ia32_compat_sys_open 6 i386 close sys_close __ia32_sys_close

359 i386 socket sys_socket __ia32_sys_socket 360 i386 socketpair sys_socketpair __ia32_sys_socketpair 361 i386 bind sys_bind __ia32_sys_bind 362 i386 connect sys_connect __ia32_sys_connect 363 i386 listen sys_listen __ia32_sys_listen 364 i386 accept4 sys_accept4 __ia32_sys_accept4 365 i386 getsockopt sys_getsockopt __ia32_compat_sys_getsockopt 366 i386 setsockopt sys_setsockopt __ia32_compat_sys_setsockopt 367 i386 getsockname sys_getsockname __ia32_sys_getsockname 368 i386 getpeername sys_getpeername __ia32_sys_getpeername 369 i386 sendto sys_sendto __ia32_sys_sendto 370 i386 sendmsg sys_sendmsg __ia32_compat_sys_sendmsg 371 i386 recvfrom sys_recvfrom __ia32_compat_sys_recvfrom 372 i386 recvmsg sys_recvmsg __ia32_compat_sys_recvmsg 373 i386 shutdown sys_shutdown __ia32_sys_shutdown

系统调用号359-373对socket相关的系统调用进行了绑定。

对应net/socket.c文件中SYSCALL_DEFINE2(socketcall, int, call, unsigned long __user *, args)函数中的switch-case语句中的不同case,分别有下列的对应关系:

socket()->SYS_SOCKET->__sys_socket()

bind()->SYS_BIND->__sys_bind()

listen()->SYS_LISTEN->__sys_listen ()

accept()->SYS_ACCEPT->__sys_accept4()

recv()->SYS_RECV->__sys_recvfrom()

send()->SYS_SEND->__sys_sendto()

connect()->SYS_CONNECT->__sys_connect()

接下来分析hello指令用到了哪些函数,在hello指令中,函数的调用顺序为:

Hello()->OpenRemoteService()->SendMsg()->RecvMsg()->CloseRemoteService()

对照上面的代码来看,这些函数分别调用到的系统调用函数为:

connect(),send(),recv(),close()函数

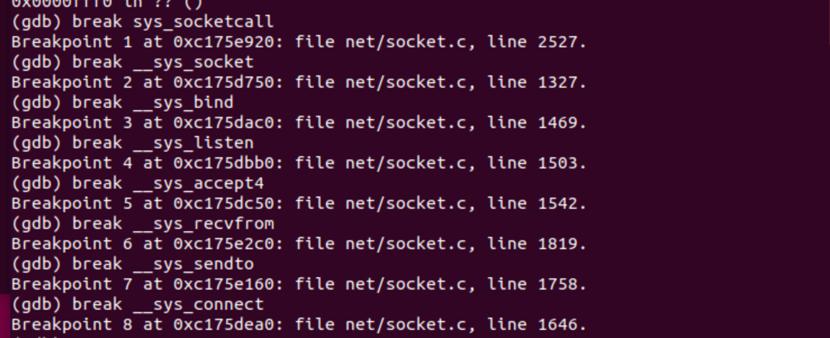

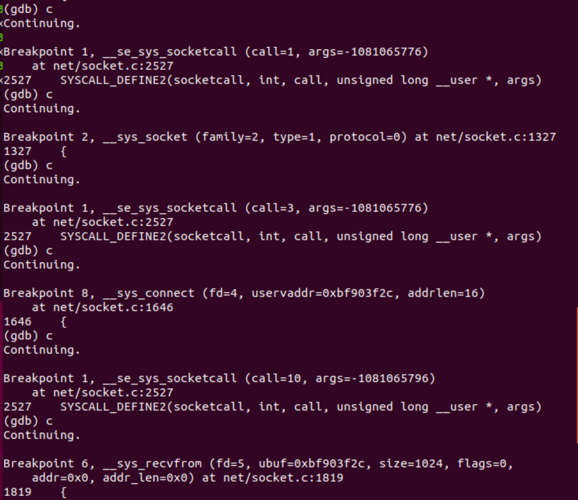

知道调用到的系统调用函数,我们来设置断点来验证一下,为以上的系统调用函数对应到的内核处理程序都设置一下断点:

breakpoint1对应sys_socketcall

breakpoint2对应__sys_socket()

breakpoint3对应__sys_bind()

breakpoint4对应__sys_listen ()

breakpoint5对应__sys_accept4()

breakpoint6对应__sys_recvfrom()

breakpoint7对应__sys_sendto()

breakpoint8对应__sys_connect()

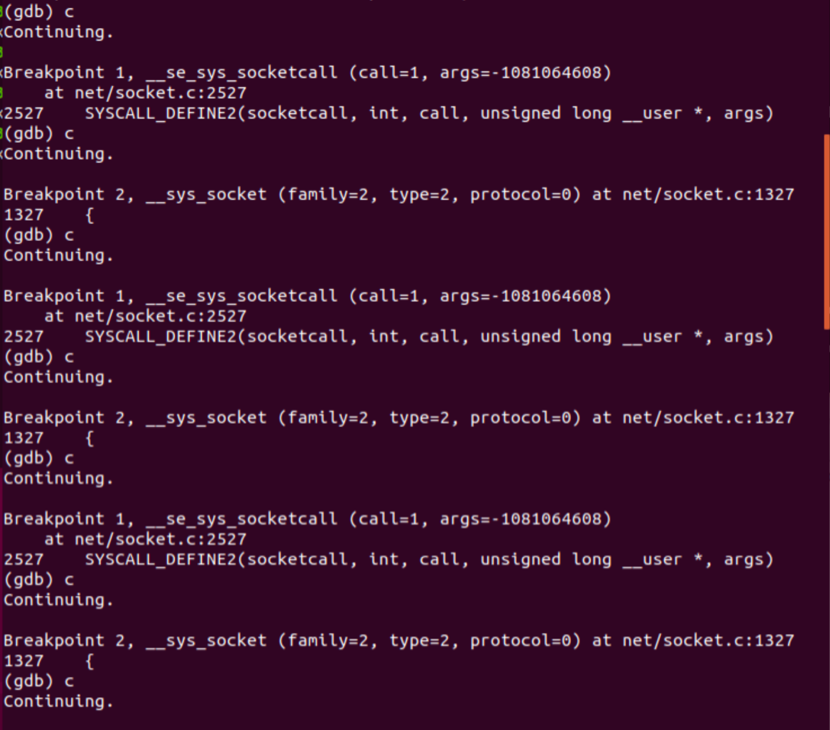

再按c继续执行,并根据系统提示输入replyhi指令,看看tcp在通信过程中是怎么进行调用内核处理程序的:

前6个断点处打印的信息有三个连续的breakpoint1和breakpoint2断点信息,这是在启动网卡:

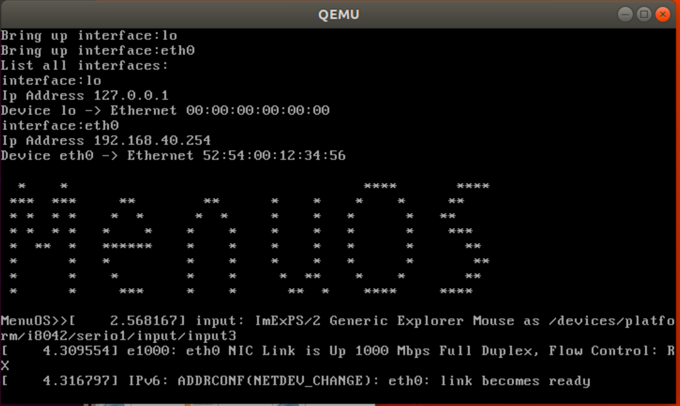

接着跟踪断点,直到输入hello前:

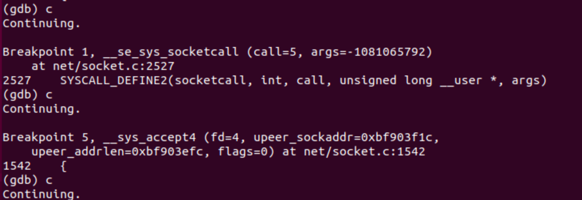

这八个断点信息其实可以看作四次调用内核处理程序,因为在sys_socketcall设置了断点,而每次调用相应的内核程序时,都会先进入这个函数,之后才调用到内核程序。

这四次内核程序调用对应到的断点号分别为breakpoint2,breakpoint3,breakpoint4,breakpoint5,它们分别代表调用的是内核程序__sys_socket(),__sys_bind(),__sys_listen ()和__sys_accept4(),刚好对应到系统调用程序:socket(),bind(),listen()和accept()。

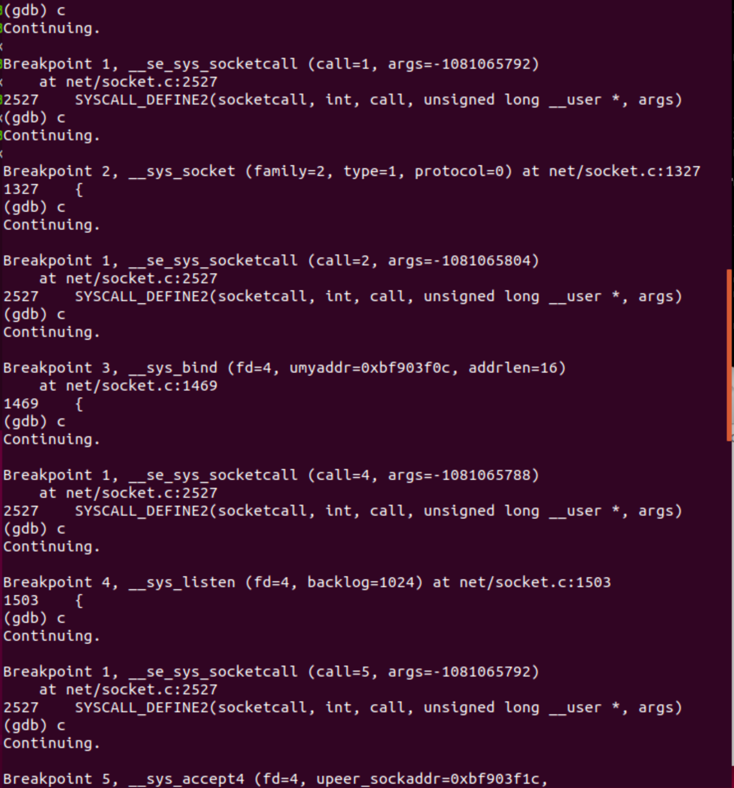

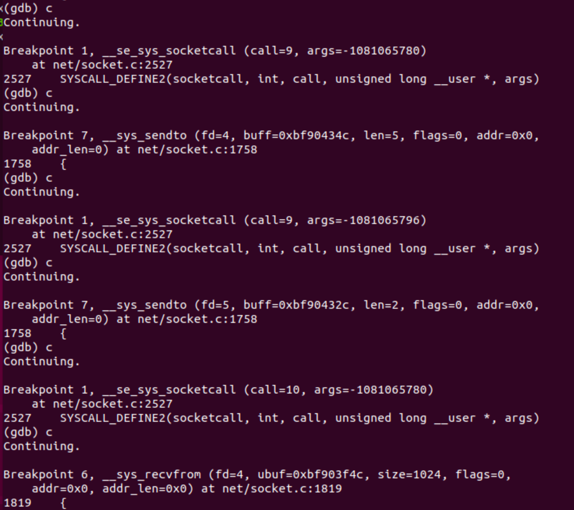

再接着执行,根据提示在MenuOS中输入hello指令,系统输出信息为:

断点输出信息为:

这14条断点信息代表调用了7次内核处理程序,这7次程序调用的断点号分别为breakpoint2,breakpoint8,breakpoint6,breakpoint7,breakpoint7,breakpoint6和breakpoint5,先后调用了__sys_socket(),__sys_connect(),__sys_recvfrom(),__sys_sendto(),__sys_sendto(),__sys_recvfrom()和__sys_accept4(),

前五个输出代表连接端先初始化一个socket,再连接到之前的replyhi请求端,并接受了从请求端发送过来的信息,包括地址信息,再将hello信息发送给对方。讲道理,Hello()函数运行到这里就结束了,那么后面两个输出是从何而来呢,我们仔细看上面socket程序源码便可以明白,在Replyhi()函数中,对Hello()发送过来的信息是不做判断的,Hello()每发送一条信息过来,Replyhi()都会直接接收,并向其再发送一条“hi”,因此便有了之后的两条程序执行信息,对应着__sys_accept4()和__sys_recvfrom()。

到这里,我们可以得出结论,socket相关程序在进行系统调用时,通过库函数提供的系统调用接口,这些接口经过系统调用列表与call参数进行了绑定,同时,每个call参数都与执行相应功能的内核处理程序形成了一一对应关系。这样,用户便能利用socket接口在用户态通过系统调用机制进入内核。

浙公网安备 33010602011771号

浙公网安备 33010602011771号