Setting up a Kafka cluster

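I had long heard that Kafka is similar to RabbitMQ and Redis but had never tried it, so today I set aside some time to learn how to set up a Kafka cluster and get a feel for it.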

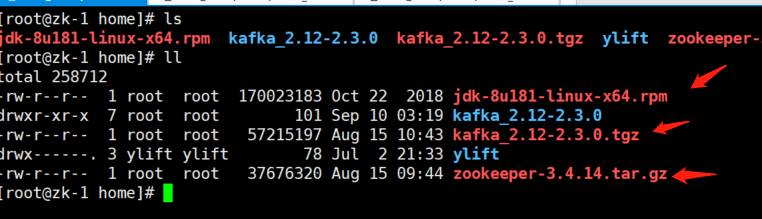

1. Environment preparation

Three virtual machines (1 CPU core, 4 GB RAM each)

192.168.77.31 zk-1

192.168.77.32 zk-2

192.168.77.33 zk-3
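The broker configuration later in this post refers to the nodes by hostname (zk-1, zk-2, zk-3), so every machine has to be able to resolve those names. The post does not say how this was done; the sketch below assumes /etc/hosts is used, and the function name cluster_hosts is made up for illustration.

```shell
#!/bin/sh
# Print one hosts entry per node: 192.168.77.31-33 map to zk-1..zk-3,
# exactly as in the list above.
cluster_hosts() {
    i=1
    while [ "$i" -le 3 ]; do
        printf '192.168.77.%d zk-%d\n' "$((30 + i))" "$i"
        i=$((i + 1))
    done
}

# Usage (as root, on every node), ASSUMING /etc/hosts handles resolution:
#   cluster_hosts >> /etc/hosts
cluster_hosts
```

Running the usage line more than once would append duplicate entries, so it is meant as a one-time step.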

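Download the installation packages from the official websites.

A Kafka cluster needs ZooKeeper to be installed first, so a ZooKeeper cluster is set up here as well.

2. Installing the ZooKeeper cluster

1. Install JDK 1.8 and set the environment variables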

echo 'export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64/' >>/etc/profile

echo 'export PATH=$JAVA_HOME/bin:$PATH' >>/etc/profile

echo 'export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar' >>/etc/profile

source /etc/profile

2. Install ZooKeeper

# Install ZooKeeper

tar -xf ./zookeeper-3.4.14.tar.gz -C /opt && ln -s /opt/zookeeper-3.4.14/ /usr/local/zookeeper

cd /usr/local/zookeeper/conf

mv ./zoo_sample.cfg zoo.cfg

Edit the configuration file zoo.cfg:

tickTime=2000

initLimit=10

syncLimit=5

dataLogDir=/opt/zookeeper/logs

dataDir=/opt/zookeeper/data

clientPort=2181

autopurge.snapRetainCount=500

autopurge.purgeInterval=24

# On each machine, set that machine's own entry to 0.0.0.0

server.1=192.168.77.31:2888:3888

server.2=192.168.77.32:2888:3888

server.3=192.168.77.33:2888:3888
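The comment about 0.0.0.0 means that each node's zoo.cfg differs in one line: the entry for the local machine. The following sketch prints the three server lines for a given node ID. zk_server_lines is a hypothetical helper, not part of ZooKeeper, and the IP scheme is the one used in this post.

```shell
#!/bin/sh
# Print the server.N lines for the node whose ID is $1 (1, 2 or 3).
# The local node's own entry uses 0.0.0.0; the others use their real IPs.
zk_server_lines() {
    self=$1
    n=1
    while [ "$n" -le 3 ]; do
        if [ "$n" -eq "$self" ]; then
            addr=0.0.0.0
        else
            addr=192.168.77.$((30 + n))
        fi
        printf 'server.%d=%s:2888:3888\n' "$n" "$addr"
        n=$((n + 1))
    done
}

# Example: the lines that would go into zoo.cfg on zk-2
zk_server_lines 2
```

The output can be appended to zoo.cfg in place of hand-edited server lines.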

# Create the directories; required on all three nodes

mkdir -p /opt/zookeeper/{logs,data}

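Create the server ID file. Every machine must have a different server ID, matching its server.N entry in zoo.cfg (1 on zk-1, 2 on zk-2, 3 on zk-3):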

echo "1" > /opt/zookeeper/data/myid
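Since the ID has to differ on every node, it can be derived from the hostname instead of being typed by hand. This is only a sketch: it assumes the hostnames follow the zk-N pattern used in this post, and myid_for_host is a hypothetical helper.

```shell
#!/bin/sh
# Derive the ZooKeeper server ID from a hostname such as zk-2 -> 2.
# ASSUMPTION: hostnames follow the zk-N pattern (N = 1..9).
myid_for_host() {
    case $1 in
        zk-[1-9]) printf '%s\n' "${1#zk-}" ;;
        *) echo "unexpected hostname: $1" >&2; return 1 ;;
    esac
}

# Usage on each node, after the data directory exists:
#   myid_for_host "$(hostname -s)" > /opt/zookeeper/data/myid
myid_for_host zk-2
```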

# Add ZooKeeper to PATH

sed -i '$a\export PATH=$PATH:/usr/local/zookeeper/bin' /etc/profile

# Reload the environment variables

source /etc/profile

zkServer.sh start

# Check the ZooKeeper cluster status

zkServer.sh status
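The status command reports each node's role on a line beginning with "Mode:". In a working three-node ensemble, one node should report leader and the other two follower. A small sketch for pulling out just the role; zk_mode is a hypothetical helper that reads the status output on stdin.

```shell
#!/bin/sh
# Print the role found on the "Mode:" line of `zkServer.sh status` output.
zk_mode() {
    sed -n 's/^Mode: //p'
}

# Usage on a node:
#   zkServer.sh status 2>/dev/null | zk_mode
# Demonstration with a single sample line:
printf 'Mode: follower\n' | zk_mode
```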

3. Installing the Kafka cluster

tar -xf kafka_2.12-2.3.0.tgz -C /usr/local/

cd /usr/local/kafka_2.12-2.3.0/config/

Edit the server.properties file

config/server.properties:

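# The ID must be unique; no two of the three brokers may share it.
# (Keep comments on their own line: a trailing comment becomes part of the value.)

broker.id=0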

listeners=PLAINTEXT://:9092

log.dirs=/tmp/kafka-logs

zookeeper.connect=zk-1:2181,zk-2:2181,zk-3:2181
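Of the settings above, only broker.id differs between the three brokers. The sketch below prints those four settings for a given broker ID. broker_props is a hypothetical helper, and using the IDs 0, 1 and 2 is an assumption; the post only requires that they be unique.

```shell
#!/bin/sh
# Print the server.properties settings shown above for broker ID $1.
broker_props() {
    cat <<EOF
broker.id=$1
listeners=PLAINTEXT://:9092
log.dirs=/tmp/kafka-logs
zookeeper.connect=zk-1:2181,zk-2:2181,zk-3:2181
EOF
}

# Example: settings for the second broker (ID 1 is an assumed choice)
broker_props 1
```

The output is meant to be compared against, or merged into, the existing server.properties rather than to replace the whole file, which contains many other defaults.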

echo 'export PATH=$PATH:/usr/local/kafka_2.12-2.3.0/bin/' >>/etc/profile

source /etc/profile

Start Kafka in the background

nohup kafka-server-start.sh /usr/local/kafka_2.12-2.3.0/config/server.properties &

After starting it, check that the process is up and running
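The post does not show the command used for this check. One possible approach, assuming the JDK's jps tool is on the PATH, is to look for the broker's main class name, Kafka, in the jps listing. kafka_listed is a hypothetical helper.

```shell
#!/bin/sh
# Succeeds if a jps-style listing ("<pid> <name>") read from stdin
# contains a process whose name is exactly Kafka.
kafka_listed() {
    awk '$2 == "Kafka" { found = 1 } END { exit !found }'
}

# Run the check only where jps is available (ASSUMPTION: JDK bin is on PATH).
if command -v jps >/dev/null 2>&1; then
    if jps | kafka_listed; then
        echo "Kafka is running"
    else
        echo "Kafka is not running"
    fi
fi
```

If the broker is not listed, the nohup.out file written by the start command above is the first place to look for the reason.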

Some commonly used Kafka commands

Create a topic

kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic test

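replication-factor: the replication factor (1 here), that is, how many copies of each partition are kept

partitions: the number of partitions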
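Because each replica of a partition lives on a different broker, the replication factor cannot exceed the number of brokers in the cluster. A sketch of a pre-check that can be run before creating a topic; check_replication is a hypothetical helper, and the broker count of 3 matches the cluster in this post.

```shell
#!/bin/sh
# Succeeds if replication factor $1 is between 1 and broker count $2.
check_replication() {
    rf=$1
    brokers=$2
    if [ "$rf" -ge 1 ] && [ "$rf" -le "$brokers" ]; then
        return 0
    fi
    echo "replication factor $rf is not valid for $brokers broker(s)" >&2
    return 1
}

# Example: a replication factor of 3 fits this post's 3-broker cluster
check_replication 3 3 && echo "ok"
```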

List topics

kafka-topics.sh --list --bootstrap-server localhost:9092

Query topic contents:

Create a topic and send messages to it

kafka-console-producer.sh --broker-list localhost:9092 --topic test66

Now create a new topic with a replication factor of three:

kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 3 --partitions 1 --topic my-replicated-topic

Okay, but now that we have a cluster, how can we know which broker is doing what? To see that, run the "describe topics" command:

kafka-topics.sh --describe --bootstrap-server localhost:9092 --topic my-replicated-topic

Consume messages

kafka-console-consumer.sh --bootstrap-server localhost:9092 --from-beginning --topic my-replicated-topic
